Running MapReduce (wordcount) on Hadoop 3.0.0: walkthrough and troubleshooting summary

1. Configure and start Hadoop

Download and install the JDK and hadoop-3.0.0-alpha2, then set the environment variables as follows:

export JAVA_HOME=/usr/lib/jvm/default-java

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib:$JRE_HOME/lib:$CLASSPATH

export PATH=$JAVA_HOME/bin:$JRE_HOME/bin:$PATH

export HADOOP_HOME=/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export HADOOP_CONF_DIR=$HADOOP_HOME

export HADOOP_PREFIX=$HADOOP_HOME

export HADOOP_LIBEXEC_DIR=$HADOOP_HOME/libexec

export JAVA_LIBRARY_PATH=$HADOOP_HOME/lib/native:$JAVA_LIBRARY_PATH

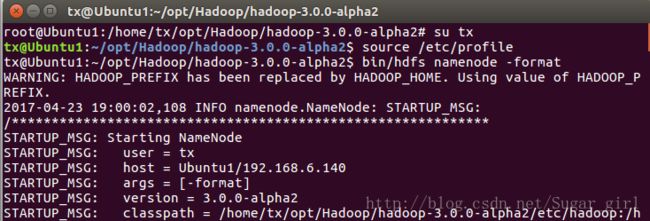

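export HADOOP_CONF_DIR=$HADOOP_PREFIX/etc/hadoop

Then source the configuration file and format the NameNode (the old hadoop command has been replaced by the hdfs command, i.e. hdfs namenode -format):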

At this prompt, enter an uppercase Y (it must be uppercase):

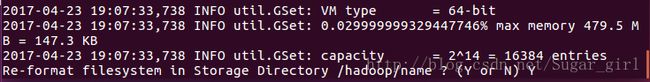

The format then completes successfully:

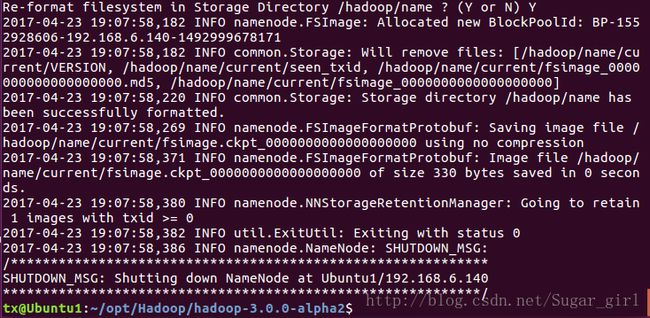

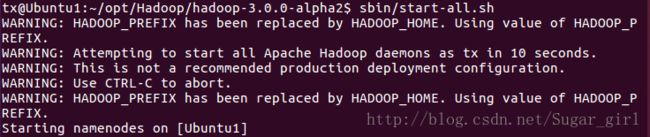

Start Hadoop:

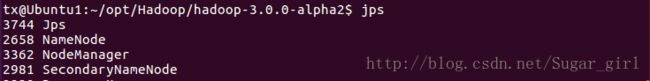

Running jps shows that the DataNode did not start:

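To fix this, change the clusterID in the VERSION file under your dfs.data.dir storage location to match the clusterID in the VERSION file under your dfs.name.dir storage location, then restart Hadoop.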
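The manual edit above can be sketched as a small shell function. This is only a sketch under assumptions: the function name sync_cluster_id is made up for illustration, and it assumes each storage directory keeps its VERSION file in a current subdirectory with a line of the form clusterID=... (check your own layout first). Stop Hadoop before running it.

```shell
# Hypothetical helper: copy the NameNode's clusterID into the DataNode's VERSION file.
# Assumption: VERSION lives at <storage dir>/current/VERSION on both sides.
sync_cluster_id() {
  local name_version="$1/current/VERSION"
  local data_version="$2/current/VERSION"
  local cluster_id

  # Read the value after "clusterID=" on the NameNode side.
  cluster_id=$(sed -n 's/^clusterID=//p' "$name_version")
  if [ -z "$cluster_id" ]; then
    echo "no clusterID found in $name_version" >&2
    return 1
  fi

  # Keep a backup, then rewrite only the clusterID line on the DataNode side.
  cp "$data_version" "$data_version.bak"
  sed "s/^clusterID=.*/clusterID=$cluster_id/" "$data_version.bak" > "$data_version"
}
```

It would be called with your dfs.name.dir location first and your dfs.data.dir location second, for example sync_cluster_id /path/to/name /path/to/data (both paths are placeholders).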

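To view jobs/applications in the web UI, you need to start the history server. Hadoop uses the JobHistoryServer to expose the run history of jobs over the web. It is not started along with the HDFS and YARN daemons, so it has to be started manually with the following (note: both commands are required):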

sbin/mr-jobhistory-daemon.sh start historyserver

sbin/yarn-daemon.sh start timelineserver

2. Run wordcount

First, create an input directory in HDFS:

hadoop fs -mkdir /input

Next, put any txt file into the input directory in HDFS. Here I use the LICENSE.txt found in the Hadoop directory.

hadoop fs -put LICENSE.txt /input

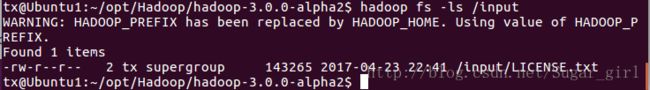

Then check that the file was uploaded to /input correctly:

hadoop fs -ls /input

(This warning keeps appearing. I searched online and found no answer, so I still don't know the cause, which is frustrating.)

Then run the job (an absolute path is used here; a relative path works too):

hadoop jar /home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.0.0-alpha2.jar wordcount /input /output

At this point you will be delighted to see the jobID and applicationID. List the output directory:

hadoop fs -ls /output

View the output:

hadoop fs -cat /output/part-r-00000

The result is a count of how many times each word appears in LICENSE.txt.
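To get a feel for what the job computes, the same kind of count can be approximated locally with standard Unix tools. This is a rough sketch, not the Hadoop implementation: the function name local_wordcount is made up for illustration, it assumes words are simply whitespace-separated tokens, and it prints one word and its count per line, separated by a tab and sorted by word.

```shell
# Hypothetical local stand-in for the wordcount example.
# Assumption: a "word" is any run of non-whitespace characters.
local_wordcount() {
  tr -s '[:space:]' '\n' |   # one token per line
    sed '/^$/d' |            # drop the empty line left by leading whitespace
    LC_ALL=C sort |          # group identical tokens together (byte order)
    uniq -c |                # count each group
    awk '{ printf "%s\t%s\n", $2, $1 }'   # print word, tab, count
}
```

For a small file, running local_wordcount < LICENSE.txt and comparing a few lines against /output/part-r-00000 is a quick sanity check of the job's result.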

3. Solving problems encountered when running wordcount

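If you see only a jobID and no applicationID, the job ran locally, and the web UI will show no record of the submitted job or its application. You need to configure the MapReduce runtime framework explicitly; otherwise jobs run locally by default: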

etc/hadoop/mapred-site.xml:

mapreduce.framework.name specifies the MapReduce runtime framework. The default is local; it needs to be set to yarn:

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

etc/hadoop/yarn-site.xml:

yarn.nodemanager.aux-services names the auxiliary services. To use MapReduce, set it to mapreduce_shuffle (for versions before Hadoop 2.2.0, set it to mapreduce.shuffle instead):

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

After making these changes, restart Hadoop and run wordcount. You will then hit the following error:

Failing this attempt.Diagnostics: Exception from container-launch.

Error: Could not find or load main class org.apache.hadoop.mapreduce.v2.app

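At this point I was in despair. But no, we must stay strong: all we need to do is add the application classpath to the configuration files (remember to change the paths to your own):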

etc/hadoop/mapred-site.xml:

<property>

<name>mapreduce.application.classpath</name>

<value>

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/etc/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/etc/hadoop/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/lib/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/common/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/common/lib/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/mapreduce/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/mapreduce/lib-examples/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/hdfs/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/hdfs/lib/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/yarn/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/yarn/lib/*

</value>

</property>

etc/hadoop/yarn-site.xml:

<property>

<name>yarn.application.classpath</name>

<value>

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/etc/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/etc/hadoop/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/lib/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/common/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/common/lib/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/mapreduce/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/mapreduce/lib-examples/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/hdfs/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/hdfs/lib/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/yarn/*,

/home/tx/opt/Hadoop/hadoop-3.0.0-alpha2/share/hadoop/yarn/lib/*

</value>

</property>
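Since both classpath values are the same list of subdirectories under the Hadoop install directory, typing them by hand is error-prone. As a convenience, the list can be generated for your own install path with a small shell function. This is only a sketch: the function name hadoop_classpath_value is made up for illustration, and the subdirectory list is simply copied from the values above.

```shell
# Hypothetical helper: print the comma-separated classpath value
# for a given Hadoop install directory (same subdirectories as listed above).
hadoop_classpath_value() {
  local home="$1"
  local result=""
  local sub
  for sub in etc etc/hadoop lib \
             share/hadoop/common share/hadoop/common/lib \
             share/hadoop/mapreduce share/hadoop/mapreduce/lib-examples \
             share/hadoop/hdfs share/hadoop/hdfs/lib \
             share/hadoop/yarn share/hadoop/yarn/lib; do
    # Quoting keeps the trailing /* literal instead of expanding it.
    result="${result:+$result,}$home/$sub/*"
  done
  printf '%s\n' "$result"
}
```

Running hadoop_classpath_value "$HADOOP_HOME" prints a single line that can be pasted as the value of both classpath properties.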

Now restart Hadoop once more and run wordcount, and it works (so happy I went off to eat a chicken drumstick).

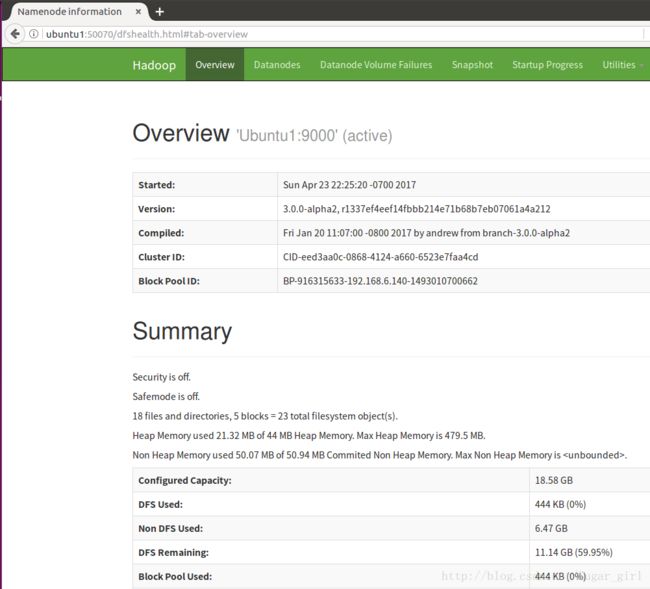

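In a browser, open host-address:50070 to check Hadoop's running status (50070 is the Hadoop 2.x default NameNode web port; Hadoop 3.x releases default to 9870 unless dfs.namenode.http-address is set, so try 9870 if 50070 does not respond):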

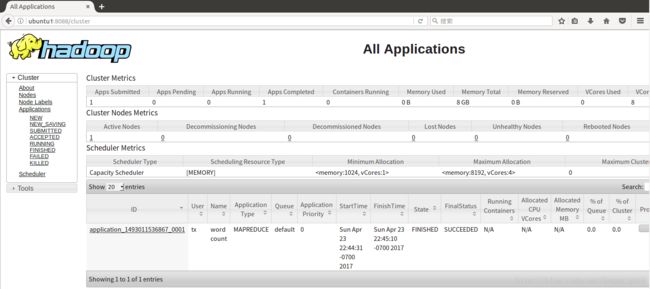

In a browser, open host-address:8088 to view applications:

In a browser, open host-address:19888 to view the job history: