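Neural Network Practice in Python

Build a feedforward neural network (FNN) model with TensorFlow, train it by gradient descent with the Adagrad optimizer, use dropout to reduce overfitting, and try saving the model so that it can be loaded again later.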

# This script uses the TensorFlow 1.x graph API (tf.placeholder, tf.Session)
import tensorflow as tf
import numpy as np
import pandas as pd
import matplotlib.pyplot as plt
from sklearn import metrics

# Read a CSV file and split it into the feature columns and the target column
def load_data(train_path=""):
    # 73 feature columns named feature1..feature73, followed by the target column "y"
    columns = ["feature%d" % i for i in range(1, 74)] + ["y"]
    # header=0 replaces the file's own header row with the names above
    train = pd.read_csv(train_path, names=columns, header=0)
    dict_label = {"y": train.pop("y")}
    train_x, train_y = train, pd.DataFrame(dict_label)
    return (train_x, train_y)

X_train, y_train = load_data("X8.csv")
X_test, y_test = load_data("X8_test.csv")
print(X_train, y_train)

# Placeholders for the input features and the true target values
xs = tf.placeholder(shape=[None, X_train.shape[1]], dtype=tf.float32, name="inputs")
ys = tf.placeholder(shape=[None, 1], dtype=tf.float32, name="y_true")

# Dropout keep probability. The default of 1.0 disables dropout, so predictions need no extra feed.
# The training loop feeds 0.8, an illustrative value that the original post does not specify.
keep_prob = tf.placeholder_with_default(1.0, shape=[], name="keep_prob")

# Wrap the creation of one fully connected layer in a helper function
def add_layer(inputs, input_size, output_size, activation_function=None):
    with tf.variable_scope("Weights"):
        Weights = tf.Variable(tf.random_normal(shape=[input_size, output_size]), name="weights")
    with tf.variable_scope("biases"):
        biases = tf.Variable(tf.zeros(shape=[1, output_size]) + 0.1, name="biases")
    with tf.name_scope("Wx_plus_b"):
        Wx_plus_b = tf.matmul(inputs, Weights) + biases
    # The linear output layer (no activation) is returned as-is, without dropout
    if activation_function is None:
        return Wx_plus_b
    # Dropout is a trick against overfitting: while the network is being trained,
    # each unit is temporarily dropped from the network with a given probability
    with tf.name_scope("dropout"):
        Wx_plus_b = tf.nn.dropout(Wx_plus_b, keep_prob=keep_prob)
    # Activation function
    with tf.name_scope("activation_function"):
        return activation_function(Wx_plus_b)

# Add the layers: 73 inputs -> 30 -> 10 -> 1 output
with tf.name_scope("layer_1"):
    l1 = add_layer(xs, 73, 30, activation_function=tf.nn.relu)
with tf.name_scope("layer_2"):
    l2 = add_layer(l1, 30, 10, activation_function=tf.nn.relu)
with tf.name_scope("y_pred"):
    pred = add_layer(l2, 10, 1)

# The loss function is the mean squared error
loss = tf.reduce_mean(tf.reduce_sum(tf.square(ys - pred), axis=1))  # mse

# Gradient descent with Adagrad
train_op = tf.train.AdagradOptimizer(learning_rate=0.05).minimize(loss)
init = tf.global_variables_initializer()

with tf.Session() as sess:
    saver = tf.train.Saver(tf.global_variables(), max_to_keep=1)
    sess.run(init)
    # Train on the first 99 rows; dropout is active only in this feed
    train_feed = {xs: X_train[0:99], ys: y_train[0:99], keep_prob: 0.8}
    for i in range(50000):
        _loss, _ = sess.run([loss, train_op], feed_dict=train_feed)
        if i % 100 == 0:
            print("epoch:%d\tloss:%.5f" % (i, _loss))
    # saver.save(sess=sess, save_path="NN/DNN.model", global_step=99999)  # save the model
    # builder = tf.saved_model.builder.SavedModelBuilder("NNmodel")
    # Saves the whole graph together with its variables. This approach can also store
    # several graphs, which is not covered here.
    # builder.add_meta_graph_and_variables(sess, [tf.saved_model.tag_constants.TRAINING])
    # builder.save()  # finish saving
    # Predictions rely on the default keep_prob of 1.0, so dropout is switched off
    y_pred = sess.run(pred, feed_dict={xs: X_train[0:99], ys: y_train[0:99]})
    pred_y = sess.run(pred, feed_dict={xs: X_test})
    # mse = tf.reduce_mean(tf.square(pred_y - y_test))
    print(pred_y)
    print("RMSE:", np.sqrt(metrics.mean_squared_error(y_test, pred_y)))
    # print("MSE: %.4f" % sess.run(mse))
    # print(y_pred)

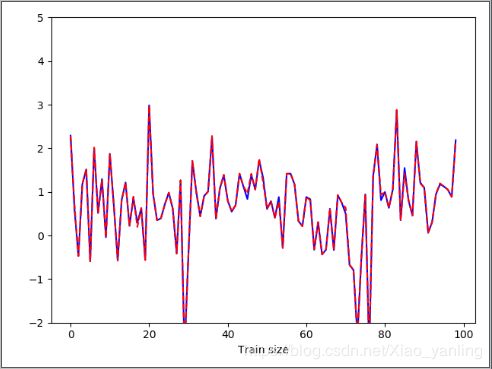
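
The dropout step inside add_layer can also be illustrated outside TensorFlow. Below is a minimal NumPy sketch of inverted dropout, the variant in which the surviving units are scaled by 1/keep_prob so that the expected activation stays the same, which is also how tf.nn.dropout scales its output. The function name inverted_dropout, the fixed seed and the toy array are assumptions made for illustration and are not part of the script above.

```python
import numpy as np


def inverted_dropout(activations, keep_prob, rng):
    """Zero each unit with probability 1 - keep_prob and rescale the survivors."""
    if not 0.0 < keep_prob <= 1.0:
        raise ValueError("keep_prob must be in (0, 1]")
    # Boolean mask: True where a unit is kept
    mask = rng.random(activations.shape) < keep_prob
    # Dividing by keep_prob keeps the expected activation unchanged
    return activations * mask / keep_prob


rng = np.random.default_rng(0)   # fixed seed, only for reproducibility
hidden = np.ones((4, 5))         # hypothetical hidden-layer activations

# Training: roughly 20% of the units are zeroed, the rest are scaled up
train_out = inverted_dropout(hidden, keep_prob=0.8, rng=rng)
# Evaluation: keep_prob=1.0 keeps every unit, so the input passes through unchanged
eval_out = inverted_dropout(hidden, keep_prob=1.0, rng=rng)

print(train_out)
print(eval_out)
```

With keep_prob equal to 1.0 the mask keeps every unit, which is why dropout should be switched off when predictions are made.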

# Plot the fitted values against the targets for the 99 training rows
fig = plt.figure()
ax = fig.add_subplot(1, 1, 1)
ax.plot(range(99), y_train[0:99], 'b')
ax.plot(range(99), y_pred[0:99], 'r--')
# plt.pause(1)
ax.set_ylim([-2, 5])
plt.xlabel("Train size")
plt.show()

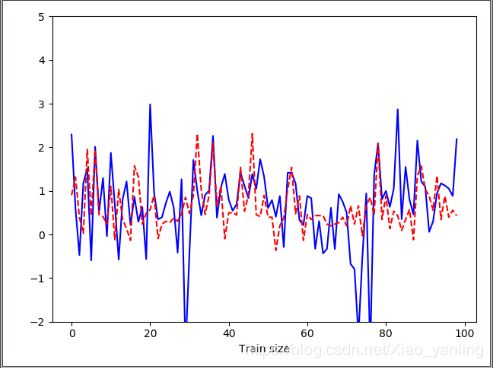

# Plot the predictions against the targets for the test set, in a figure of its own
plt.figure()
plt.plot(range(len(y_test)), y_test, 'b')
plt.plot(range(len(pred_y)), pred_y, 'r--')
plt.xlabel("Test size")
plt.show()
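
As a closing note, the idea behind the Adagrad optimizer used for train_op can be sketched in a few lines of NumPy. This is the textbook update rule, in which each parameter's step is divided by the square root of its accumulated squared gradients. It is not a copy of tf.train.AdagradOptimizer, which may differ in details such as how the accumulator is initialised. The helper adagrad_minimize, the toy quadratic objective and the learning rate of 0.5 are hypothetical choices for this example.

```python
import numpy as np


def adagrad_minimize(grad_fn, w0, learning_rate, steps, eps=1e-8):
    """Run the textbook Adagrad update and return the final parameters."""
    w = np.array(w0, dtype=float)   # np.array copies, so w0 is left untouched
    accum = np.zeros_like(w)        # running sum of squared gradients, one per parameter
    for _ in range(steps):
        g = grad_fn(w)
        accum += g * g
        # Parameters with large accumulated gradients take smaller steps
        w -= learning_rate * g / (np.sqrt(accum) + eps)
    return w


# Toy objective: f(w) = sum((w - target) ** 2), whose gradient is 2 * (w - target)
target = np.array([3.0, -2.0])


def grad(w):
    return 2.0 * (w - target)


w_final = adagrad_minimize(grad, w0=[0.0, 0.0], learning_rate=0.5, steps=500)
print(w_final)
```

Because the accumulator only grows, the effective step size shrinks over time, so a small learning rate can need many iterations to converge.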