Kylin Troubleshooting Notes

I. Clicking to load Hive tables throws the following exceptions:

java.lang.NoClassDefFoundError: org/apache/hadoop/hive/cli/CliSessionState

java.lang.NoClassDefFoundError: org/apache/hadoop/hive/ql/session/SessionState

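Fix: copy the jars from Hive's lib directory into Kylin's lib directory.

I looked at bin/find-hive-dependency.sh. It sets hive_dependency to include every jar under Hive's lib directory, but for some reason that had no effect, so I fell back on copying the jars.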

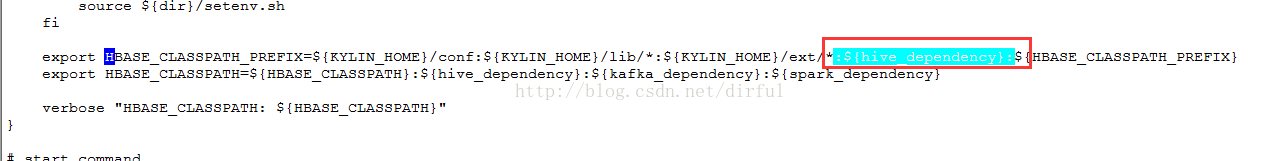
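If you take the copying route, the step can be scripted roughly as below. This is a minimal sketch, not something Kylin provides: copy_hive_jars is a hypothetical helper name, and skipping jars whose file name already exists in Kylin's lib directory is my own assumption, made so that Kylin's own jars are never overwritten.

```shell
# copy_hive_jars HIVE_LIB_DIR KYLIN_LIB_DIR (hypothetical helper)
# Copies every *.jar from Hive's lib directory into Kylin's lib directory,
# skipping any jar whose file name already exists there, so Kylin's own
# jars are never overwritten (an assumption, not a Kylin requirement).
copy_hive_jars() {
    local src="$1" dst="$2" jar name copied=0
    if [ ! -d "$src" ] || [ ! -d "$dst" ]; then
        echo "usage: copy_hive_jars HIVE_LIB_DIR KYLIN_LIB_DIR" >&2
        return 1
    fi
    for jar in "$src"/*.jar; do
        [ -e "$jar" ] || continue   # the glob matched nothing
        name=$(basename "$jar")
        if [ ! -e "$dst/$name" ]; then
            cp "$jar" "$dst/" || return 1
            copied=$((copied + 1))
        fi
    done
    echo "copied $copied jar(s) from $src to $dst"
}
```

Usage: `copy_hive_jars "$HIVE_HOME/lib" "$KYLIN_HOME/lib"`, then restart Kylin with `kylin.sh stop` and `kylin.sh start` so the new jars are picked up.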

Someone online suggested another approach: edit kylin.sh in the bin directory.

Add hive_dependency to HBASE_CLASSPATH_PREFIX. I tested it and it works.

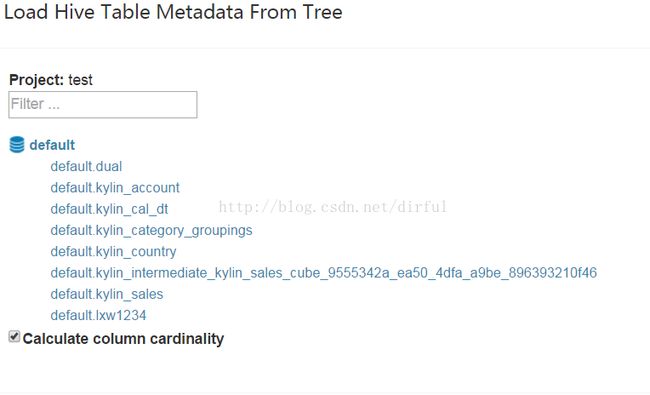
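The kylin.sh change amounts to appending $hive_dependency to HBASE_CLASSPATH_PREFIX. As far as I can tell, Kylin 2.x's kylin.sh starts Tomcat through the hbase command, which is why this variable affects Kylin's classpath. The exact line in your kylin.sh may differ from the one in the screenshot, so treat the function below as a sketch of the idea only: add_hive_to_hbase_prefix is a hypothetical name, and it assumes it runs after kylin.sh has sourced find-hive-dependency.sh.

```shell
# add_hive_to_hbase_prefix (hypothetical helper)
# Assumes $hive_dependency was already set by bin/find-hive-dependency.sh.
# Appends it to HBASE_CLASSPATH_PREFIX so entries already in the prefix
# keep their position ahead of the Hive jars.
add_hive_to_hbase_prefix() {
    if [ -z "${hive_dependency:-}" ]; then
        echo "hive_dependency is empty; source find-hive-dependency.sh first" >&2
        return 1
    fi
    if [ -n "${HBASE_CLASSPATH_PREFIX:-}" ]; then
        HBASE_CLASSPATH_PREFIX="${HBASE_CLASSPATH_PREFIX}:${hive_dependency}"
    else
        HBASE_CLASSPATH_PREFIX="${hive_dependency}"
    fi
    export HBASE_CLASSPATH_PREFIX
}
```

Appending rather than prepending is a design choice: it keeps whatever kylin.sh already put first (Tomcat's own jars, for example) ahead of the Hive jars.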

II. After fixing the jar problem, the Hive tables still would not load (clicking to load the table tree just kept spinning)

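Check the log, e.g.: tail -f -n200 /opt/app/apache-kylin-2.0.0-bin/logs/kylin.log

It showed no exception at all, which had me scratching my head. When I tried the reload table button, an error finally appeared saying it could not connect to the metastore, although the server-side log still showed nothing.

At some point the Hive metastore had gone down without my noticing. Running hive --service metastore & to start the metastore fixed it, and the Hive tables finally loaded.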
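A plain `hive --service metastore &` stays attached to the terminal that started it, and closing that session can send it SIGHUP. That is one possible reason for a metastore quietly dying, though I did not confirm it was the cause here. A minimal sketch that detaches it with nohup and keeps its output in a log file; start_metastore and the log path are hypothetical choices, not Hive conventions.

```shell
# start_metastore LOG_FILE (hypothetical helper)
# Starts "hive --service metastore" under nohup so it is not tied to the
# terminal, appending its output to LOG_FILE. The PID is kept in METASTORE_PID.
start_metastore() {
    local log="$1"
    if [ -z "$log" ]; then
        echo "usage: start_metastore LOG_FILE" >&2
        return 1
    fi
    nohup hive --service metastore >>"$log" 2>&1 &
    METASTORE_PID=$!
    echo "metastore started (pid $METASTORE_PID), logging to $log"
}
```

Usage: `start_metastore /tmp/hive-metastore.log`, then `tail -f /tmp/hive-metastore.log` to watch it come up.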

For reference, these are the lines I added to /etc/profile while configuring Kylin:

export JAVA_HOME=/opt/app/jdk1.8.0_131

PATH=$PATH:$JAVA_HOME/bin

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export JRE_HOME=$JAVA_HOME/jre

export HADOOP_HOME=/opt/app/hadoop-2.8.0

PATH=$PATH:$HADOOP_HOME/bin

export HIVE_HOME=/opt/app/apache-hive-2.1.1-bin

export HCAT_HOME=$HIVE_HOME/hcatalog

export HIVE_CONF=$HIVE_HOME/conf

PATH=$PATH:$HIVE_HOME/bin

export HBASE_HOME=/opt/app/hbase-1.3.1

PATH=$PATH:$HBASE_HOME/bin

#export HIVE_CONF=/opt/app/apache-hive-2.1.1-bin/conf

#PATH=$PATH:$HIVE_HOME/bin:$PATH

export KYLIN_HOME=/opt/app/apache-kylin-2.0.0-bin

PATH=$PATH:$KYLIN_HOME/bin

#export KYLIN_HOME=/opt/app/kylin/

# zookeeper env: I use the ZooKeeper bundled with HBase, so this part is not actually used

export ZOOKEEPER_HOME=/opt/app/zookeeper-3.4.6

export PATH=$ZOOKEEPER_HOME/bin:$PATH
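After editing /etc/profile and running `source /etc/profile`, a quick way to catch typos in these paths is to check that each *_HOME variable is set and points at a real directory. A minimal sketch; check_home_dirs is a hypothetical helper, not part of Kylin or Hadoop.

```shell
# check_home_dirs VAR... (hypothetical helper)
# For each variable name, report whether it is set and points at an existing
# directory. Returns 1 if any check fails, 0 otherwise.
check_home_dirs() {
    local status=0 name dir
    for name in "$@"; do
        case $name in
            ''|[0-9]*|*[!A-Za-z0-9_]*)
                echo "invalid variable name: $name" >&2
                status=1
                continue ;;
        esac
        eval "dir=\${$name:-}"   # indirect lookup of the variable's value
        if [ -z "$dir" ]; then
            echo "$name is not set" >&2
            status=1
        elif [ ! -d "$dir" ]; then
            echo "$name=$dir is not a directory" >&2
            status=1
        else
            echo "$name=$dir"
        fi
    done
    return "$status"
}
```

Usage: `check_home_dirs JAVA_HOME HADOOP_HOME HIVE_HOME HCAT_HOME HBASE_HOME KYLIN_HOME`.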

----------------------------------------------------------------------------------------------------------------------------------------------------------------------------

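Problem: after formatting HDFS and rebuilding the environment, this exception is thrown: org.apache.hadoop.hbase.TableExistsException: kylin_metadata_user

Fix: the format wiped HBase's data on HDFS, but ZooKeeper most likely still holds HBase's old state under the /hbase znode, so HBase believes kylin_metadata_user still exists. Delete that znode: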

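1. If you run a standalone ZooKeeper:

Run zkCli.sh from ZooKeeper's bin directory to open the shell.

Inside it, run rmr /hbase, then quit to exit.

2. If you use the ZooKeeper bundled with HBase:

Open the shell with hbase zkcli.

Inside it, run rmr /hbase, then quit to exit.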
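For a standalone ZooKeeper 3.4.x, zkCli.sh also accepts a single command after its options and exits once it has run, so the interactive steps above can be collapsed into one call. A sketch under that assumption: remove_hbase_znode is a hypothetical name, localhost:2181 is an assumed default address, and DRY_RUN=1 only prints the command. I have not checked whether hbase zkcli forwards trailing commands the same way, so for the HBase-bundled ZooKeeper the interactive route is the safe one. Note that this wipes all of HBase's state in ZooKeeper, which is what you want right after a format, and that ZooKeeper 3.5+ deprecates rmr in favor of deleteall.

```shell
# remove_hbase_znode [ZK_ADDRESS] (hypothetical helper)
# Non-interactive form of "zkCli.sh, rmr /hbase, quit" for a standalone
# ZooKeeper 3.4.x. ZK_ADDRESS defaults to localhost:2181 (an assumption).
# With DRY_RUN=1 the command is printed instead of executed.
remove_hbase_znode() {
    local zk="${1:-localhost:2181}"
    local cli="$ZOOKEEPER_HOME/bin/zkCli.sh"
    if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "$cli -server $zk rmr /hbase"
        return 0
    fi
    if [ ! -x "$cli" ]; then
        echo "zkCli.sh not found under \$ZOOKEEPER_HOME/bin" >&2
        return 1
    fi
    "$cli" -server "$zk" rmr /hbase
}
```

Running `DRY_RUN=1 remove_hbase_znode` first shows exactly what will be executed before anything is deleted.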

----------------------------------------------------------------------------------------------------------------------------------------------------------------------------------

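Problem: File does not exist: hdfs://localhost:9000/home/hadoop/data/mapred/staging/root1629090427/.staging/job_local1629090427_0006/libjars/hive-metastore-1.2.1.jar

This was caused by mapred-site.xml and yarn-site.xml not being configured correctly. The job_local prefix in the job ID shows the job was run by Hadoop's local job runner instead of being submitted to YARN; setting mapreduce.framework.name to yarn is what sends it to YARN. The settings I ended up with: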

mapred-site.xml

| Property | Value |
| --- | --- |
| mapreduce.reduce.java.opts | -Xms2000m -Xmx4600m |
| mapreduce.map.memory.mb | 5120 |
| mapreduce.reduce.input.buffer.percent | 0.5 |
| mapreduce.reduce.memory.mb | 2048 |
| mapred.tasktracker.reduce.tasks.maximum | 2 |
| mapreduce.framework.name | yarn |
| mapreduce.jobhistory.address | localhost:10020 |
| yarn.app.mapreduce.am.staging-dir | /home/hadoop/data/mapred/staging |
| mapreduce.jobhistory.intermediate-done-dir | ${yarn.app.mapreduce.am.staging-dir}/history/done_intermediate |
| mapreduce.jobhistory.done-dir | ${yarn.app.mapreduce.am.staging-dir}/history/done |

yarn-site.xml

| Property | Value |
| --- | --- |
| yarn.nodemanager.aux-services | mapreduce_shuffle |
| yarn.nodemanager.aux-services.mapreduce_shuffle.class | org.apache.hadoop.mapred.ShuffleHandler |
| mapreduce.jobtracker.staging.root.dir | /home/hadoop/data/mapred/staging |
| yarn.app.mapreduce.am.staging-dir | /home/hadoop/data/mapred/staging |
| yarn.resourcemanager.hostname | localhost |
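These name/value pairs map directly onto Hadoop's XML config format: one `<property>` element with a `<name>` and a `<value>` per setting, inside `<configuration>`. As a sketch, the shell functions below regenerate both files from the values listed above so they can be diffed against the live ones. hadoop_conf_xml, mapred_site_xml and yarn_site_xml are hypothetical names, and nothing here edits your real config files.

```shell
# hadoop_conf_xml NAME VALUE [NAME VALUE ...] (hypothetical helper)
# Prints a Hadoop <configuration> document with one <property> per pair.
# Values are written verbatim, so they must not contain XML special
# characters such as < or &.
hadoop_conf_xml() {
    if [ $(($# % 2)) -ne 0 ]; then
        echo "hadoop_conf_xml: expected NAME VALUE pairs" >&2
        return 1
    fi
    echo '<?xml version="1.0"?>'
    echo '<configuration>'
    while [ $# -gt 0 ]; do
        printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
        shift 2
    done
    echo '</configuration>'
}

# mapred-site.xml with the values listed above.
mapred_site_xml() {
    hadoop_conf_xml \
        mapreduce.reduce.java.opts '-Xms2000m -Xmx4600m' \
        mapreduce.map.memory.mb 5120 \
        mapreduce.reduce.input.buffer.percent 0.5 \
        mapreduce.reduce.memory.mb 2048 \
        mapred.tasktracker.reduce.tasks.maximum 2 \
        mapreduce.framework.name yarn \
        mapreduce.jobhistory.address localhost:10020 \
        yarn.app.mapreduce.am.staging-dir /home/hadoop/data/mapred/staging \
        mapreduce.jobhistory.intermediate-done-dir '${yarn.app.mapreduce.am.staging-dir}/history/done_intermediate' \
        mapreduce.jobhistory.done-dir '${yarn.app.mapreduce.am.staging-dir}/history/done'
}

# yarn-site.xml with the values listed above.
yarn_site_xml() {
    hadoop_conf_xml \
        yarn.nodemanager.aux-services mapreduce_shuffle \
        yarn.nodemanager.aux-services.mapreduce_shuffle.class org.apache.hadoop.mapred.ShuffleHandler \
        mapreduce.jobtracker.staging.root.dir /home/hadoop/data/mapred/staging \
        yarn.app.mapreduce.am.staging-dir /home/hadoop/data/mapred/staging \
        yarn.resourcemanager.hostname localhost
}
```

Usage: `mapred_site_xml > /tmp/mapred-site.xml`, then diff it against `$HADOOP_HOME/etc/hadoop/mapred-site.xml` before copying it into place. Restart YARN after changing yarn-site.xml so the NodeManager picks up the shuffle service.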

Remember to start the JobHistory server: $HADOOP_HOME/sbin/mr-jobhistory-daemon.sh start historyserver
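To avoid launching a second copy when the history server is already up, a small sketch can check jps first; jps lists each JVM by main class, which for the history server is JobHistoryServer. ensure_historyserver is a hypothetical helper, and it assumes HADOOP_HOME is set as in /etc/profile above and that jps from the JDK is on PATH.

```shell
# ensure_historyserver (hypothetical helper)
# Starts the MapReduce JobHistory server unless jps already lists a
# JobHistoryServer process. Assumes HADOOP_HOME is set and jps is on PATH.
ensure_historyserver() {
    if jps 2>/dev/null | grep -qw JobHistoryServer; then
        echo "JobHistoryServer already running"
        return 0
    fi
    "$HADOOP_HOME/sbin/mr-jobhistory-daemon.sh" start historyserver
}
```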

---------------------------------------------------------------------------

Problem: the cube build failed, with the following log:

Counters: 41

File System Counters: FILE: Number of bytes read=0, FILE: Number of bytes written=310413, FILE: Number of read operations=0, FILE: Number of large read operations=0, FILE: Number of write operations=0, HDFS: Number of bytes read=1700, HDFS: Number of bytes written=0, HDFS: Number of read operations=4, HDFS: Number of large read operations=0, HDFS: Number of write operations=0

Job Counters: Failed map tasks=1, Failed reduce tasks=6, Killed reduce tasks=3, Launched map tasks=2, Launched reduce tasks=9, Other local map tasks=1, Data-local map tasks=1, Total time spent by all maps in occupied slots (ms)=10602, Total time spent by all reduces in occupied slots (ms)=76818, Total time spent by all map tasks (ms)=10602, Total time spent by all reduce tasks (ms)=76818, Total vcore-seconds taken by all map tasks=10602, Total vcore-seconds taken by all reduce tasks=76818, Total megabyte-seconds taken by all map tasks=10856448, Total megabyte-seconds taken by all reduce tasks=78661632

Map-Reduce Framework: Map input records=0, Map output records=3, Map output bytes=42, Map output materialized bytes=104, Input split bytes=1570, Combine input records=3, Combine output records=3, Spilled Records=3, Failed Shuffles=0, Merged Map outputs=0, GC time elapsed (ms)=168, CPU time spent (ms)=3790, Physical memory (bytes) snapshot=356413440, Virtual memory (bytes) snapshot=2139308032, Total committed heap usage (bytes)=195035136

File Input Format Counters: Bytes Read=0

Fix: add the following to mapred-site.xml (the same memory settings as in the mapred-site.xml list above):


| Property | Value |
| --- | --- |
| mapreduce.reduce.java.opts | -Xms2000m -Xmx4600m |
| mapreduce.map.memory.mb | 5120 |
| mapreduce.reduce.input.buffer.percent | 0.5 |
| mapreduce.reduce.memory.mb | 2048 |
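One thing worth double-checking in these values: the reducer JVM is allowed a 4600 MB heap (-Xmx4600m), while its YARN container (mapreduce.reduce.memory.mb) is only 2048 MB. YARN's NodeManager can kill a container whose process grows beyond its memory allocation, so the heap normally needs to be smaller than the container, with headroom for non-heap JVM memory. Below is a small sketch for checking that arithmetic. xmx_mb and heap_fits are hypothetical helper names, and the strict "heap < container" test is a simplification; common advice is to leave more headroom than that.

```shell
# xmx_mb JAVA_OPTS: print the last -Xmx setting in JAVA_OPTS, in MB.
# Handles k/m/g suffixes only; a bare byte count is not recognised.
xmx_mb() {
    local v
    v=$(printf '%s\n' "$1" | tr ' ' '\n' \
        | sed -n 's/^-Xmx\([0-9][0-9]*\)\([kKmMgG]\)$/\1 \2/p' | tail -n 1)
    [ -n "$v" ] || return 1
    local num="${v% *}" unit="${v#* }"
    case $unit in
        g|G) echo $((num * 1024)) ;;
        m|M) echo "$num" ;;
        k|K) echo $((num / 1024)) ;;
    esac
}

# heap_fits JAVA_OPTS CONTAINER_MB (hypothetical helper)
# Returns 0 if the -Xmx heap is smaller than the container size,
# 1 if it is not, and 2 if JAVA_OPTS has no -Xmx.
heap_fits() {
    local heap
    heap=$(xmx_mb "$1") || { echo "no -Xmx in: $1"; return 2; }
    if [ "$heap" -lt "$2" ]; then
        echo "ok: heap ${heap}MB < container ${2}MB"
        return 0
    fi
    echo "too big: heap ${heap}MB >= container ${2}MB"
    return 1
}
```

With the values above, `heap_fits '-Xms2000m -Xmx4600m' 2048` reports "too big: heap 4600MB >= container 2048MB" and returns 1.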