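The ELK stack is a combination of three open-source projects: Elasticsearch, Logstash, and Kibana. All three are now maintained by Elastic (elastic.co).

ELK is a widely used open-source system for log monitoring and analysis. It consists of Elasticsearch, a distributed indexing and search service; Logstash, a tool for managing logs and events; and Kibana, a data visualization service.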

logstash-1.5.4: collects, processes, and stores logs

elasticsearch-1.7.2: searches and analyzes logs

kibana-4.1.2-linux-x64.tar.gz: visualizes logs

redis-3.0.2: serves as the data store and transport channel for logs

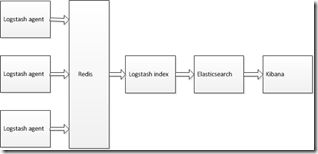

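The diagram above shows how these components relate to one another.

This article uses two servers as an example deployment.

Server A: 192.168.0.1, running java, elasticsearch, redis, kibana, and logstash (agent and indexer)

Server B: 192.168.0.2, running java and logstash (agent)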

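Start by installing the software on server A.

I. Install the basic tools

yum -y install curl wget lrzsz axel

II. Install and configure the Redis service

1. Install Tcl 8.6.1 (needed to run the Redis test suite)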

a) tar -xf tcl8.6.1-src.tar.gz

b) cd tcl8.6.1/unix

c) ./configure --prefix=/usr/local

d) make

e) make test

f) make install

g) make install-private-headers

h) ln -v -sf /usr/local/bin/tclsh8.6 /usr/bin/tclsh

i) chmod -v 755 /usr/local/lib/libtcl8.6.so (optional; if the file is not found, the error can be ignored)

2. Install redis-3.0.2

wget http://download.redis.io/releases/redis-3.0.2.tar.gz

tar xzf redis-3.0.2.tar.gz

cd redis-3.0.2

make MALLOC=libc

make test

make install

3. Configure Redis (run these commands from the redis-3.0.2 source directory)

a) mkdir /etc/redis

b) mkdir /var/redis

c) cp utils/redis_init_script /etc/init.d/redis

d) vim /etc/init.d/redis

Add the chkconfig header at the top of the script, directly below the shebang, which must remain the first line:

    #!/bin/bash
    # chkconfig: 345 60 60

e) mkdir /var/redis/6379

f) cp redis.conf /etc/redis/6379.conf

g) vim /etc/redis/6379.conf

    # set daemonize to yes
    # set pidfile to /var/run/redis_6379.pid
    # set loglevel as needed
    # set logfile to /var/log/redis_6379.log
    # set dir to /var/redis/6379

h) sysctl vm.overcommit_memory=1

i) chkconfig --add redis

j) chkconfig redis on
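The edits in step g) can also be applied without opening an editor. The following is a minimal sketch, not part of the original procedure: the function name `configure_redis_conf` is made up for illustration, and it assumes GNU sed (for `-i`) and a stock redis.conf in which each directive already appears uncommented at the start of a line. The loglevel directive is left untouched.

```shell
# Hypothetical helper: apply the step g) settings to a copy of redis.conf.
# Assumes GNU sed and that daemonize, pidfile, logfile, and dir each appear
# uncommented at the start of a line, as in the stock redis.conf.
configure_redis_conf() {
    redis_conf="$1"          # path to the config file, e.g. /etc/redis/6379.conf
    redis_port="${2:-6379}"  # port used in the pid, log, and data paths
    sed -i \
        -e 's|^daemonize .*|daemonize yes|' \
        -e "s|^pidfile .*|pidfile /var/run/redis_${redis_port}.pid|" \
        -e "s|^logfile .*|logfile /var/log/redis_${redis_port}.log|" \
        -e "s|^dir .*|dir /var/redis/${redis_port}|" \
        "$redis_conf"
}

# Example call (commented out so that sourcing this file changes nothing):
# configure_redis_conf /etc/redis/6379.conf 6379
```

If a directive is missing or commented out in the file, the corresponding substitution simply does nothing, so it is worth checking the result with grep afterwards.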

4. Start or stop the service

service redis start

service redis stop

5. Check the process and port

1) Check the process

ps -ef | grep redis

    root 31927 25099 0 18:26 pts/0 00:00:00 vi /etc/init.d/redis

2) Check the port

netstat -tupnl | grep redis

    tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 31966/redis-server
    tcp 0 0 :::6379 :::* LISTEN 31966/redis-server
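A listening port does not prove that Redis answers requests. As a rough sketch, the check below sends a PING with redis-cli (installed by make install) and succeeds only if the reply is PONG. The function name `redis_is_up` and the `REDIS_CLI` variable are assumptions introduced for this example.

```shell
# Path to the Redis client; overridable, defaults to redis-cli on the PATH.
REDIS_CLI="${REDIS_CLI:-redis-cli}"

# Hypothetical helper: return 0 if the server replies PONG to a PING.
redis_is_up() {
    redis_host="${1:-192.168.0.1}"
    redis_port="${2:-6379}"
    redis_reply="$("$REDIS_CLI" -h "$redis_host" -p "$redis_port" ping 2>/dev/null)"
    [ "$redis_reply" = "PONG" ]
}

# Example call (commented out so that sourcing this file contacts nothing):
# redis_is_up 192.168.0.1 6379 && echo "redis is answering"
```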

III. Install the Java environment

1. Install the package

yum -y list 'java*'

yum -y install java-1.7.0-openjdk

2. Check the version

java -version

    java version "1.7.0_91"
    OpenJDK Runtime Environment (rhel-2.6.2.2.el6_7-x86_64 u91-b00)
    OpenJDK 64-Bit Server VM (build 24.91-b01, mixed mode)

IV. Install Elasticsearch

1. Download Elasticsearch

wget https://download.elastic.co/elasticsearch/elasticsearch/elasticsearch-1.7.2.noarch.rpm

2. Install Elasticsearch

rpm -ivh elasticsearch-1.7.2.noarch.rpm

3. Configure

1) Back up the configuration

cp /etc/elasticsearch/elasticsearch.yml /etc/elasticsearch/elasticsearch.yml.bak

2) Modify the configuration

echo "network.bind_host: 192.168.0.1" >> /etc/elasticsearch/elasticsearch.yml
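Running the echo command twice leaves a duplicate key in elasticsearch.yml. One way to make the step repeatable is to append the line only when it is not already present. This is a small sketch; the helper name `ensure_line` is made up for this example and is not part of any package.

```shell
# Hypothetical helper: append a line to a file only if that exact line
# is not already there, so the step can be re-run safely.
ensure_line() {
    wanted_line="$1"
    target_file="$2"
    grep -qxF -- "$wanted_line" "$target_file" 2>/dev/null \
        || printf '%s\n' "$wanted_line" >> "$target_file"
}

# Example call (commented out so that sourcing this file changes nothing):
# ensure_line "network.bind_host: 192.168.0.1" /etc/elasticsearch/elasticsearch.yml
```

The match is on the whole line, so a different value for the same key would still be appended as a second entry; that case needs a manual edit.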

4. Start or stop the Elasticsearch service

/etc/init.d/elasticsearch start

/etc/init.d/elasticsearch stop

5. Check the process and port

1) Check the process

ps -ef | grep java

2) Check the port

netstat -tupnl | grep java

6. Test

curl -X GET http://192.168.0.1:9200

    {
      "status" : 200,
      "name" : "Miguel O'Hara",
      "cluster_name" : "elasticsearch",
      "version" : {
        "number" : "1.7.2",
        "build_hash" : "e43676b1385b8125d647f593f7202acbd816e8ec",
        "build_timestamp" : "2015-09-14T09:49:53Z",
        "build_snapshot" : false,
        "lucene_version" : "4.10.4"
      },
      "tagline" : "You Know, for Search"
    }
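To use this response in a script, the version number can be pulled out of the JSON with sed. This is a minimal sketch that relies on the response layout shown in this step; the function name `es_version` is an assumption, and a real JSON parser would be more robust.

```shell
# Hypothetical helper: read the Elasticsearch root response on stdin and
# print the value of the "number" field inside "version".
es_version() {
    sed -n 's/.*"number" *: *"\([^"]*\)".*/\1/p'
}

# Example call (commented out so that sourcing this file contacts nothing):
# curl -s http://192.168.0.1:9200 | es_version
```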

7. Enable start at boot

chkconfig --add elasticsearch

chkconfig elasticsearch on

V. Install Logstash

1. Download Logstash

wget https://download.elastic.co/logstash/logstash/packages/centos/logstash-1.5.4-1.noarch.rpm

2. Install Logstash

rpm -ivh logstash-1.5.4-1.noarch.rpm

3. Configure (this configuration file does not exist by default)

vim /etc/logstash/conf.d/logstash_indexer.conf

    input {
      redis {
        host => "192.168.0.1"
        data_type => "list"
        key => "logstash:redis"
        type => "redis-input"
        port => 6379
      }
    }
    output {
      elasticsearch {
        host => "192.168.0.1"
      }
    }
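Before starting the service, it can help to let Logstash parse the new file. Logstash 1.x accepts a --configtest flag for this. The sketch below assumes the RPM placed the launcher at /opt/logstash/bin/logstash; the `LOGSTASH_BIN` variable and the function name `check_logstash_conf` are introduced here for illustration only.

```shell
# Assumed install location of the Logstash launcher from the RPM; override if different.
LOGSTASH_BIN="${LOGSTASH_BIN:-/opt/logstash/bin/logstash}"

# Hypothetical helper: ask Logstash to validate a config file without running it.
check_logstash_conf() {
    "$LOGSTASH_BIN" --configtest -f "$1"
}

# Example call (commented out so that sourcing this file runs nothing):
# check_logstash_conf /etc/logstash/conf.d/logstash_indexer.conf
```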

4. Start the service

/etc/init.d/logstash start

5. Check the process with jps -mlv or ps -ef

ps -ef | grep logst

6. Enable start at boot

chkconfig --add logstash

chkconfig logstash on

VI. Install Kibana (web front end)

1. Download

wget https://download.elastic.co/kibana/kibana/kibana-4.1.2-linux-x64.tar.gz

2. Extract to the target directory

tar zxvf kibana-4.1.2-linux-x64.tar.gz -C /opt

3. Create the log directory

mkdir -p /opt/kibanalog

4. Configure

1) Back up the configuration

cp /opt/kibana-4.1.2-linux-x64/config/kibana.yml /opt/kibana-4.1.2-linux-x64/config/kibana.yml.bak

2) Modify the configuration

sed -i 's!^elasticsearch_url: .*!elasticsearch_url: "http://192.168.0.1:9200"!g' /opt/kibana-4.1.2-linux-x64/config/kibana.yml

sed -i 's!^host: .*!host: "192.168.0.1"!g' /opt/kibana-4.1.2-linux-x64/config/kibana.yml

5. Start the service

cd /opt/kibanalog && nohup /opt/kibana-4.1.2-linux-x64/bin/kibana &

6. Check the process and port

1) Check the process

ps aux | grep kibana

2) Check the port

netstat -tupnl | grep 5601

7. Open Kibana in a browser (for example, from a Windows machine)

http://192.168.0.1:5601

8. Enable start at boot

echo "cd /opt/kibanalog && nohup /opt/kibana-4.1.2-linux-x64/bin/kibana &" >> /etc/rc.local

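All software on server A is now installed.

Next, install the log collection agent on server B. There can be any number of such agent hosts.

Install and configure Logstash (agent) on server B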

1. Install the Java environment

yum -y list 'java*'

yum -y install java-1.7.0-openjdk

2. Download Logstash

wget https://download.elastic.co/logstash/logstash/packages/centos/logstash-1.5.4-1.noarch.rpm

3. Install Logstash

rpm -ivh logstash-1.5.4-1.noarch.rpm

4. Configure (this configuration file does not exist by default)

1) Configure logstash_agent

vim /etc/logstash/conf.d/logstash_agent.conf

    input {
      file {
        path => "/tmp/*.log"
        start_position => "beginning"
      }
    }
    output {
      redis {
        host => "192.168.0.1"
        data_type => "list"
        key => "logstash:redis"
      }
    }

5. Start the service

/etc/init.d/logstash start

    logstash started.

6. Check the process with jps -mlv or ps -ef

ps -ef | grep logst

7. Enable start at boot

chkconfig --add logstash

chkconfig logstash on
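Once the agent is running, appending a line to any file matching /tmp/*.log should produce an event that travels through Redis to Elasticsearch. The sketch below writes a recognizable test line; the function name `emit_test_line`, the default file name /tmp/elk-test.log, and the marker text elk-pipeline-test are all made up for this example.

```shell
# Hypothetical helper: append a timestamped marker line to a log file that the
# agent's file input is watching (any file matching /tmp/*.log).
emit_test_line() {
    test_log="${1:-/tmp/elk-test.log}"
    printf '%s elk-pipeline-test pid=%s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$$" >> "$test_log"
}

# Example call (commented out so that sourcing this file writes nothing):
# emit_test_line /tmp/elk-test.log
```

Searching for elk-pipeline-test in Kibana a few seconds later is a quick way to confirm that the whole chain works.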

Server B is now installed and configured. Repeat these steps on as many agent hosts as log collection requires.

How to view the logs:

1. View the Redis log

cat /var/log/redis_6379.log

2. View the Elasticsearch log

cat /var/log/elasticsearch/elasticsearch.log

tail -300f /var/log/elasticsearch/elasticsearch.log

3. View the Logstash log

cat /var/log/logstash/logstash.err

tail -30f /var/log/logstash/logstash.err

4. View the Kibana log

cat /opt/kibanalog/nohup.out

tail -30f /opt/kibanalog/nohup.out

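Troubleshooting

1) Unable to fetch mapping. Do you have indices matching the pattern?

Kibana reports this error when no data from Logstash has reached Elasticsearch. Check each hop of the data path: the agent, Redis, the indexer, and Elasticsearch.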
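Two numbers narrow down where the data stops: the length of the Redis list logstash:redis and the number of logstash-* indices in Elasticsearch (the default index name used by the Logstash elasticsearch output). The sketch below turns those two numbers into a first guess. The function name `pipeline_hint` and its messages are illustrative assumptions, and the hint is only a starting point, not a diagnosis.

```shell
# Hypothetical helper: suggest where to look first, given
#   $1 = length of the Redis list (redis-cli llen logstash:redis)
#   $2 = number of logstash-* indices in Elasticsearch
pipeline_hint() {
    queue_len="$1"
    index_count="$2"
    if [ "$index_count" -gt 0 ]; then
        echo "indices exist: check the index pattern configured in Kibana"
    elif [ "$queue_len" -gt 0 ]; then
        echo "events are waiting in Redis: check the indexer on server A"
    else
        echo "Redis list is empty: check the agent on server B first"
    fi
}

# Example of collecting the two numbers (commented out so that sourcing this
# file contacts nothing):
# queue_len=$(redis-cli -h 192.168.0.1 llen logstash:redis)
# index_count=$(curl -s 'http://192.168.0.1:9200/_cat/indices' | grep -c 'logstash-')
# pipeline_hint "$queue_len" "$index_count"
```

An empty list with no indices can also mean the indexer consumed the events but failed to write them, so /var/log/logstash/logstash.err on server A is worth checking as well.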