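Setting Up a Hadoop Cluster

I. Introduction to Hadoop

Hadoop implements a distributed file system, the Hadoop Distributed File System (HDFS). HDFS is highly fault-tolerant and is designed to be deployed on low-cost hardware. It provides high-throughput access to application data, which suits applications with very large data sets. HDFS relaxes some POSIX requirements so that data in the file system can be read as a stream (streaming access).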

II. Test environment

1. Environment and download

Red Hat 6.5, with iptables and SELinux turned off.

This guide uses hadoop-3.1.0.tar.gz. Download it from http://hadoop.apache.org/releases.html by clicking [binary].

Blog post on Hadoop installation errors: https://blog.csdn.net/dream_ya/article/details/80376002

Blog post on single-node Hadoop installation: https://blog.csdn.net/dream_ya/article/details/80391357

2. Official reference documentation:

http://hadoop.apache.org/docs/r3.1.0/hadoop-project-dist/hadoop-common/SingleCluster.html (single node)

http://hadoop.apache.org/docs/r3.1.0/hadoop-project-dist/hadoop-common/ClusterSetup.html (cluster)

3. Installation overview

server1 10.10.10.1 (master)

server2 10.10.10.2 (slave)

server3 10.10.10.3 (slave)

4. Host name resolution on all three machines

[root@server1 ~]# cat /etc/hosts

10.10.10.1 server1

10.10.10.2 server2

10.10.10.3 server3

III. Installing the Java environment

Hadoop is written in Java and needs a Java environment to run; this guide uses Java 8.

1. Install Java

[root@server1 ~]# tar xf jdk-8u171-linux-x64.tar.gz -C /usr/local

[root@server1 ~]# vim /etc/profile

export JAVA_HOME=/usr/local/jdk1.8.0_171

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

[root@server1 ~]# . /etc/profile

2. Test

[root@server1 ~]# java -version

java version "1.8.0_171"

Java(TM) SE Runtime Environment (build 1.8.0_171-b11)

Java HotSpot(TM) 64-Bit Server VM (build 25.171-b11, mixed mode)

IV. Passwordless SSH login between the nodes

1. Set it up with the ssh commands

[root@server1 ~]# ssh-keygen

[root@server1 .ssh]# ssh-copy-id -i id_rsa.pub server1

[root@server1 ~]# scp -r .ssh/ server2:

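[root@server1 ~]# scp -r .ssh/ server3:

2. Test

The expected result is that server1, server2, and server3 can all connect to one another without a password. It is best to connect from server1 to each of the other nodes once and answer "yes" to the first-connection host key prompt; otherwise later steps may report errors.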
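The test above can be scripted. The following is a minimal sketch, not part of the original procedure: the function names `accept_host_keys` and `check_passwordless` are made up for illustration, and it assumes the three host names from /etc/hosts and a private lab network where host keys fetched by `ssh-keyscan` can be trusted.

```shell
# Host names taken from the /etc/hosts table in this guide.
HOSTS="server1 server2 server3"

# Record each host's public key in known_hosts so that the first
# connection does not stop at the interactive yes/no prompt.
# Assumption: keys fetched over this private network are trustworthy.
accept_host_keys() {
    mkdir -p "$HOME/.ssh"
    for host in "$@"; do
        ssh-keyscan -H "$host" >> "$HOME/.ssh/known_hosts" 2>/dev/null
    done
}

# Try a key-only login to every host. BatchMode=yes makes ssh fail
# instead of asking for a password or a host key confirmation.
# Returns 0 only if every host accepted the login.
check_passwordless() {
    failed=0
    for host in "$@"; do
        if ssh -o BatchMode=yes -o ConnectTimeout=5 "$host" true; then
            echo "$host: ok"
        else
            echo "$host: FAILED"
            failed=1
        fi
    done
    return "$failed"
}
```

A possible way to use it on server1 is `accept_host_keys $HOSTS && check_passwordless $HOSTS`; any host reported as FAILED would still prompt for input when the Hadoop start scripts connect to it.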

V. Installing Hadoop

1. Unpack the tarball

[root@server1 ~]# tar xf hadoop-3.1.0.tar.gz -C /usr/local

2. Check that the Hadoop native library is 64-bit:

[root@server1 ~]# file /usr/local/hadoop-3.1.0/lib/native/libhadoop.so.1.0.0

/usr/local/hadoop-3.1.0/lib/native/libhadoop.so.1.0.0: ELF 64-bit LSB shared object, x86-64, version 1 (SYSV), dynamically linked, not stripped

3. Configuration files

Note: directories that do not exist yet are created automatically on startup.

(1) Configure core-site.xml

[root@server1 hadoop-3.1.0]# vim etc/hadoop/core-site.xml

<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://server1:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>file:/usr/local/hadoop-3.1.0/tmp</value>
  </property>
</configuration>

(2) Configure hdfs-site.xml

[root@server1 hadoop-3.1.0]# vim etc/hadoop/hdfs-site.xml

<configuration>
  <property>
    <name>dfs.replication</name>
    <value>2</value>
  </property>
  <property>
    <name>dfs.datanode.data.dir</name>
    <value>file:/usr/local/hadoop-3.1.0/data</value>
  </property>
  <property>
    <name>dfs.namenode.secondary.http-address</name>
    <value>server2:9001</value>
  </property>
  <property>
    <name>dfs.namenode.http-address</name>
    <value>server1:9002</value>
  </property>
  <property>
    <name>dfs.namenode.name.dir</name>
    <value>file:/usr/local/hadoop-3.1.0/name</value>
  </property>
</configuration>

(3) Configure mapred-site.xml

[root@server1 hadoop-3.1.0]# vim etc/hadoop/mapred-site.xml

<configuration>
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
</configuration>

(4) Configure yarn-site.xml

[root@server1 hadoop-3.1.0]# vim etc/hadoop/yarn-site.xml

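<configuration>
  <property>
    <name>yarn.resourcemanager.hostname</name>
    <value>server1</value>
  </property>
  <property>
    <name>yarn.nodemanager.aux-services</name>
    <value>mapreduce_shuffle</value>
  </property>
  <property>
    <!-- Caution: this property expects a comma-separated list of environment
         variable names (see the official SingleCluster guide linked above),
         so the value "true" is likely a mistake. -->
    <name>yarn.nodemanager.env-whitelist</name>
    <value>true</value>
  </property>
  <property>
    <name>yarn.log-aggregation.retain-seconds</name>
    <value>604800</value>
  </property>
</configuration>

(5) Configure masters and slaves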

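Note: the two files below do not exist by default; create them yourself. Hadoop 3.x reads its worker list from etc/hadoop/workers (slaves is the Hadoop 2.x file name), so on 3.1.0 the same host list probably also needs to go into etc/hadoop/workers.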

[root@server1 hadoop-3.1.0]# cat etc/hadoop/masters

server2

[root@server1 hadoop-3.1.0]# cat etc/hadoop/slaves

server2

server3

4. Set the environment variables

[root@server1 hadoop-3.1.0]# vim etc/hadoop/hadoop-env.sh

export JAVA_HOME=/usr/local/jdk1.8.0_171

export HDFS_NAMENODE_USER="root"

export HDFS_DATANODE_USER="root"

export HDFS_SECONDARYNAMENODE_USER="root"

export YARN_RESOURCEMANAGER_USER="root"

export YARN_NODEMANAGER_USER="root"

5. Copy the installation to server2 and server3 with scp

[root@server1 ~]# scp -r /usr/local/hadoop-3.1.0/ server2:/usr/local

[root@server1 ~]# scp -r /usr/local/hadoop-3.1.0/ server3:/usr/local

[root@server1 ~]# scp -r /usr/local/jdk1.8.0_171/ server2:/usr/local

[root@server1 ~]# scp -r /usr/local/jdk1.8.0_171/ server3:/usr/local

[root@server1 ~]# scp /etc/profile server2:/etc

[root@server1 ~]# scp /etc/profile server3:/etc

[root@server2 ~]# . /etc/profile

[root@server3 ~]# . /etc/profile

6. Start Hadoop

[root@server1 hadoop-3.1.0]# ./bin/hdfs namenode -format ### format the NameNode before the first start

[root@server1 hadoop-3.1.0]# ./sbin/start-dfs.sh ### start HDFS

Starting namenodes on [server1]

Starting datanodes

Starting secondary namenodes [server2]

2018-05-17 22:53:07,536 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[root@server1 hadoop-3.1.0]# ./sbin/start-yarn.sh ### start YARN

Starting resourcemanager

Starting nodemanagers

7. Test:

(1) Check the running services

[root@server1 hadoop-3.1.0]# jps

1713 ResourceManager

1362 DataNode

1832 NodeManager

2203 Jps

[root@server2 hadoop-3.1.0]# jps

1074 SecondaryNameNode

1380 DataNode

1931 NodeManager

2110 Jps

[root@server3 hadoop-3.1.0]# jps

1765 NodeManager

1305 DataNode

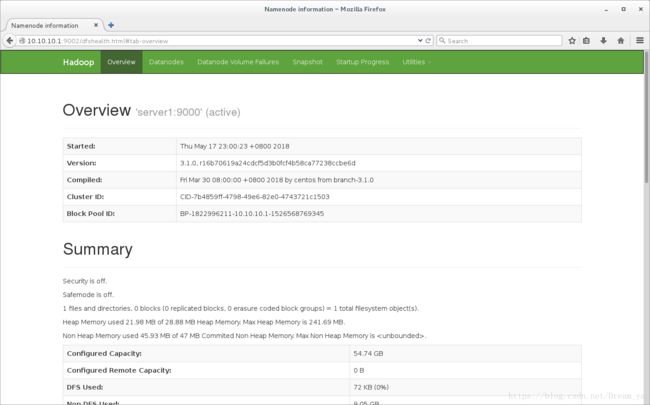

1962 Jps(2)浏览器

http://10.10.10.1:9002
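
The jps check in step (1) can also be scripted. The sketch below is an illustration rather than part of the original procedure: `missing_daemons` is a hypothetical helper that reads jps output on standard input and reports which of the expected daemon names are absent. The daemon names are the ones shown in the listings above.

```shell
# Print the expected daemons that do NOT appear in the jps output on stdin.
# Arguments: the daemon names expected on that node.
# Returns 0 if nothing is missing, 1 otherwise.
missing_daemons() {
    jps_output=$(cat)
    missing=""
    for daemon in "$@"; do
        # jps prints "<pid> <name>"; compare the second column exactly.
        if ! printf '%s\n' "$jps_output" |
            awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
            missing="$missing $daemon"
        fi
    done
    printf '%s\n' "${missing# }"
    [ -z "$missing" ]
}
```

For example, `ssh server2 /usr/local/jdk1.8.0_171/bin/jps | missing_daemons SecondaryNameNode DataNode NodeManager` would print an empty line and return 0 when server2 matches the listing above. The full path to jps is used because a non-interactive ssh session may not have sourced /etc/profile.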