Building a Hadoop Big Data Platform by Hand (Part 2): Hadoop

1. Download list (I use FileZilla to transfer files between Windows and Linux):

jdk-7u79-linux-x64.tar.gz

apache-maven-3.3.9-bin.tar.gz

hadoop-2.6.0-cdh5.8.0.tar.gz

hadoop-native-64-2.6.0.tar

hbase-1.2.0-cdh5.8.0.tar.gz

hive-1.1.0-cdh5.8.0.tar.gz

hue-3.9.0-cdh5.8.0.tar.gz

scala-2.10.4.gz

spark-1.6.0-cdh5.8.0.tar

sqoop-1.4.6-cdh5.8.0.tar.gz

2. Install the JDK (as root)

a. cd /usr/

mkdir java

tar -zxvf jdk-7u79-linux-x64.tar.gz -C /usr/java # unpack into /usr/java so the path matches JAVA_HOME below

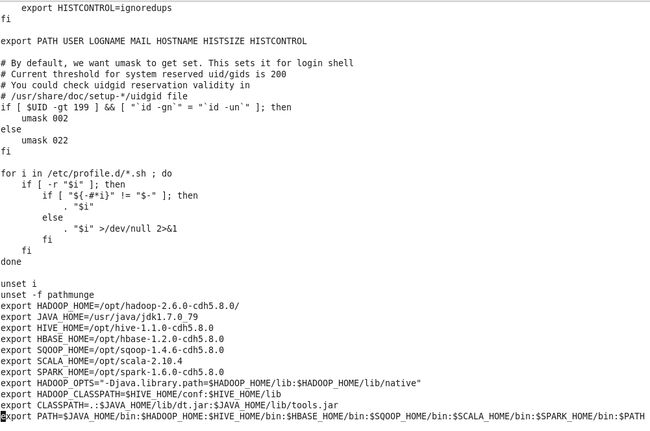

Configure the environment variables:

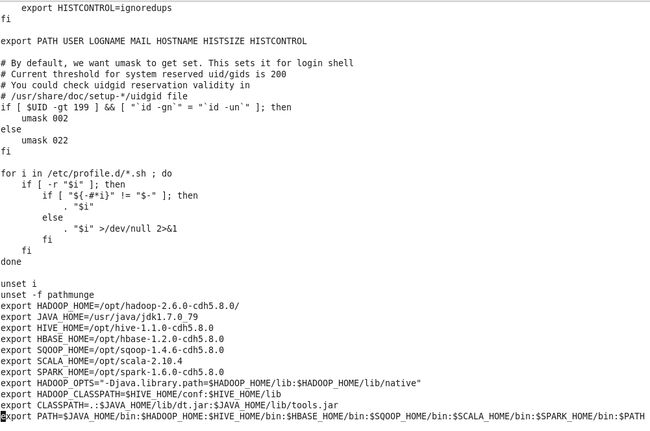

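Append the following to the end of /etc/profile (variables set in this file are loaded for every user at login):

export JAVA_HOME=/usr/java/jdk1.7.0_79

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

export PATH=$JAVA_HOME/bin:$PATH

Then run:

source /etc/profile # apply the new variables to the current shell immediately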

b. Verify the installation

java -version

c. The result should look like this.

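3. Install Hadoop. As root, give the hadoop user ownership of /opt:

chown -R hadoop /opt

Then, as the hadoop user, unpack the archive into /opt:

tar -zxvf hadoop-2.6.0-cdh5.8.0.tar.gz -C /opt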

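a. Edit /opt/hadoop-2.6.0-cdh5.8.0/etc/hadoop/hadoop-env.sh

Append the following at the end (set JAVA_HOME explicitly, because daemons started over SSH do not read /etc/profile):

export JAVA_HOME=/usr/java/jdk1.7.0_79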

b. Add the following properties to core-site.xml in /opt/hadoop-2.6.0-cdh5.8.0/etc/hadoop:

hadoop.tmp.dir /opt/hadoop-2.6.0-cdh5.8.0/tmp

fs.defaultFS hdfs://master:9000

hadoop.proxyuser.hadoop.hosts *

hadoop.proxyuser.hadoop.groups *
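The properties listed above are stored in core-site.xml as property elements, each with a name and a value, inside a single configuration element. The sketch below writes that file with this guide's values; the function name write_core_site is hypothetical, and it assumes the configuration directory is passed as the only argument and that overwriting an existing core-site.xml is acceptable.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write core-site.xml with the values used in this guide.
# Usage: write_core_site /opt/hadoop-2.6.0-cdh5.8.0/etc/hadoop
write_core_site() {
  local conf_dir="$1"
  # Quoted 'EOF' stops the shell from expanding anything inside the XML.
  cat > "$conf_dir/core-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/hadoop-2.6.0-cdh5.8.0/tmp</value>
  </property>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://master:9000</value>
  </property>
  <!-- Allow user "hadoop" to act on behalf of other users from any host and group -->
  <property>
    <name>hadoop.proxyuser.hadoop.hosts</name>
    <value>*</value>
  </property>
  <property>
    <name>hadoop.proxyuser.hadoop.groups</name>
    <value>*</value>
  </property>
</configuration>
EOF
}
```

The proxyuser entries are what allow the HttpFS server started later in this guide, which runs as the hadoop user, to access HDFS on behalf of the users making requests.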

c. Edit hdfs-site.xml

dfs.replication 2 (must not exceed the number of DataNodes; this cluster has two)

dfs.namenode.name.dir file:/opt/hdfs/name

dfs.namenode.edits.dir file:/opt/hdfs/nameedit

dfs.datanode.data.dir file:/opt/hdfs/data

dfs.namenode.rpc-address master:9000

dfs.http.address master:50070

dfs.namenode.secondary.http-address master:50090

dfs.webhdfs.enabled true

dfs.permissions false
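The replication factor is the number of DataNodes that must each hold a copy of every block, so it cannot usefully exceed the number of hosts in the slaves file (step f); with only slave1 and slave2, a value of 3 would leave every block under-replicated. A minimal check of that rule, assuming the replication value and the path of the slaves file are passed in (check_replication is a hypothetical helper, not a Hadoop command):

```bash
#!/usr/bin/env bash
# Hypothetical sanity check: dfs.replication must be <= number of DataNodes.
# Usage: check_replication <replication> <path-to-slaves-file>
check_replication() {
  local replication="$1" slaves_file="$2" nodes
  # Count lines that are neither blank nor comments; each is one DataNode host.
  nodes=$(grep -cEv '^[[:space:]]*(#|$)' "$slaves_file" || true)
  if [ "$replication" -gt "$nodes" ]; then
    echo "dfs.replication=$replication exceeds $nodes DataNode(s): blocks will stay under-replicated" >&2
    return 1
  fi
  echo "ok: replication $replication with $nodes DataNode(s)"
}
```

For example: check_replication 2 /opt/hadoop-2.6.0-cdh5.8.0/etc/hadoop/slaves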

d. Edit mapred-site.xml

mapreduce.framework.name yarn

mapreduce.jobhistory.address slave2:10020

mapreduce.jobhistory.webapp.address slave2:19888
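As with core-site.xml, these three settings go into mapred-site.xml as property elements. A sketch that writes the file, assuming the configuration directory is passed as the argument (write_mapred_site is a hypothetical name):

```bash
#!/usr/bin/env bash
# Hypothetical helper: write mapred-site.xml with the values used in this guide.
# Usage: write_mapred_site /opt/hadoop-2.6.0-cdh5.8.0/etc/hadoop
write_mapred_site() {
  local conf_dir="$1"
  cat > "$conf_dir/mapred-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- Run MapReduce jobs on YARN -->
  <property>
    <name>mapreduce.framework.name</name>
    <value>yarn</value>
  </property>
  <!-- JobHistory server on slave2: RPC on 10020, web UI on 19888 -->
  <property>
    <name>mapreduce.jobhistory.address</name>
    <value>slave2:10020</value>
  </property>
  <property>
    <name>mapreduce.jobhistory.webapp.address</name>
    <value>slave2:19888</value>
  </property>
</configuration>
EOF
}
```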

e. Edit yarn-site.xml

yarn.resourcemanager.address master:8080

yarn.resourcemanager.resource-tracker.address master:8082

yarn.nodemanager.aux-services mapreduce_shuffle

yarn.nodemanager.aux-services.mapreduce_shuffle.class org.apache.hadoop.mapred.ShuffleHandler (the key embeds the service name, so it uses mapreduce_shuffle with an underscore)

yarn.resourcemanager.scheduler.address master:8030

yarn.resourcemanager.admin.address master:8033

yarn.resourcemanager.webapp.address master:8088

yarn.log-aggregation-enable true

yarn.log-aggregation.retain-seconds 604800 (7 days)
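The same property layout applies to yarn-site.xml. In this sketch each property is kept on a single line to save space, which the XML parser does not mind. The helper name write_yarn_site is hypothetical, and the directory is assumed to be passed as the argument. Note that the shuffle class key has to repeat the service name exactly as it appears in yarn.nodemanager.aux-services.

```bash
#!/usr/bin/env bash
# Hypothetical helper: write yarn-site.xml with the values used in this guide.
# Usage: write_yarn_site /opt/hadoop-2.6.0-cdh5.8.0/etc/hadoop
write_yarn_site() {
  local conf_dir="$1"
  cat > "$conf_dir/yarn-site.xml" <<'EOF'
<?xml version="1.0"?>
<configuration>
  <!-- ResourceManager endpoints, all on master -->
  <property><name>yarn.resourcemanager.address</name><value>master:8080</value></property>
  <property><name>yarn.resourcemanager.resource-tracker.address</name><value>master:8082</value></property>
  <property><name>yarn.resourcemanager.scheduler.address</name><value>master:8030</value></property>
  <property><name>yarn.resourcemanager.admin.address</name><value>master:8033</value></property>
  <property><name>yarn.resourcemanager.webapp.address</name><value>master:8088</value></property>
  <!-- MapReduce shuffle service; the class key must contain the service name "mapreduce_shuffle" -->
  <property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property>
  <property><name>yarn.nodemanager.aux-services.mapreduce_shuffle.class</name><value>org.apache.hadoop.mapred.ShuffleHandler</value></property>
  <!-- Aggregate container logs and keep them for 7 days (7 * 24 * 3600 = 604800 s) -->
  <property><name>yarn.log-aggregation-enable</name><value>true</value></property>
  <property><name>yarn.log-aggregation.retain-seconds</name><value>604800</value></property>
</configuration>
EOF
}
```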

f. Edit the slaves file

The slaves file lists the machines that run a DataNode and a NodeManager.

I run them on the two worker nodes, so the file contains two hostnames:

slave1

slave2
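Every node needs the same Hadoop directory and configuration files, so after editing them on master the directory has to be copied to each host in the slaves file. A sketch of that copy, assuming passwordless SSH from master for the hadoop user and that /opt on the workers is already owned by hadoop (distribute_hadoop and its DRY_RUN switch are hypothetical):

```bash
#!/usr/bin/env bash
# Hypothetical helper: copy the Hadoop directory to every host in the slaves file.
# Usage: distribute_hadoop <slaves-file> <hadoop-dir>
# Set DRY_RUN=1 to print the scp commands instead of running them.
distribute_hadoop() {
  local slaves_file="$1" hadoop_dir="${2%/}" host target
  target="$(dirname "$hadoop_dir")/"   # e.g. /opt/
  # Read hosts on fd 3 so scp cannot consume the loop's input.
  while read -r host <&3 || [ -n "$host" ]; do
    case "$host" in ''|'#'*) continue ;; esac   # skip blank lines and comments
    if [ "${DRY_RUN:-0}" = 1 ]; then
      echo "scp -r $hadoop_dir $host:$target"
    else
      scp -r "$hadoop_dir" "$host:$target" || return 1
    fi
  done 3< "$slaves_file"
}
```

Running DRY_RUN=1 distribute_hadoop /opt/hadoop-2.6.0-cdh5.8.0/etc/hadoop/slaves /opt/hadoop-2.6.0-cdh5.8.0 first shows the commands without copying anything. The JDK and the /etc/profile changes also have to be present on every node.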

g. Configure the environment variables in /etc/profile:

export HADOOP_HOME=/opt/hadoop-2.6.0-cdh5.8.0/

export PATH=$PATH:/opt/hadoop-2.6.0-cdh5.8.0/bin

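h. Format HDFS before the first start (do this only once; reformatting discards the existing NameNode metadata):

/opt/hadoop-2.6.0-cdh5.8.0/bin/hadoop namenode -format

Enter Y when prompted.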

i. Start the cluster and verify

The jps command lists the running Java processes with their process IDs and names.

/opt/hadoop-2.6.0-cdh5.8.0/sbin/start-all.sh

[hadoop@master ~]$ /opt/hadoop-2.6.0-cdh5.8.0/sbin/start-all.sh

This script is Deprecated. Instead use start-dfs.sh and start-yarn.sh

Starting namenodes on [master]

master: starting namenode, logging to /opt/hadoop-2.6.0-cdh5.8.0/logs/hadoop-hadoop-namenode-master.out

slave2: starting datanode, logging to /opt/hadoop-2.6.0-cdh5.8.0/logs/hadoop-hadoop-datanode-slave2.out

slave1: starting datanode, logging to /opt/hadoop-2.6.0-cdh5.8.0/logs/hadoop-hadoop-datanode-slave1.out

Starting secondary namenodes [master]

master: starting secondarynamenode, logging to /opt/hadoop-2.6.0-cdh5.8.0/logs/hadoop-hadoop-secondarynamenode-master.out

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop-2.6.0-cdh5.8.0/logs/yarn-hadoop-resourcemanager-master.out

slave2: starting nodemanager, logging to /opt/hadoop-2.6.0-cdh5.8.0/logs/yarn-hadoop-nodemanager-slave2.out

slave1: starting nodemanager, logging to /opt/hadoop-2.6.0-cdh5.8.0/logs/yarn-hadoop-nodemanager-slave1.out

[hadoop@master ~]$ jps

3467 ResourceManager

3324 SecondaryNameNode

3173 NameNode

3723 Jps

[hadoop@master ~]$

On the master node, the three processes shown above (ResourceManager, SecondaryNameNode and NameNode) indicate a successful start.

Run jps on worker node slave1.

If NodeManager and DataNode are listed, the node started successfully.

[hadoop@slave1 ~]$ jps

2837 NodeManager

2771 DataNode

3187 Jps

[hadoop@slave1 ~]$

Run jps on worker node slave2:

[hadoop@slave2 ~]$ jps

2839 NodeManager

3221 Jps

2773 DataNode

[hadoop@slave2 ~]$
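The jps checks above can be scripted. has_daemons is a hypothetical helper that reads jps output on standard input and reports any expected daemon missing from the second column. Running it against remote hosts assumes passwordless SSH, and the sketch uses the full path to jps because a non-interactive SSH session may not load /etc/profile.

```bash
#!/usr/bin/env bash
# Hypothetical helper: check that jps output (on stdin) lists every daemon given as an argument.
# Returns 0 if all are present, 1 otherwise; missing names go to stderr.
has_daemons() {
  local output daemon missing=0
  output=$(cat)   # lines like "3173 NameNode"
  for daemon in "$@"; do
    # jps prints "<pid> <name>"; compare the second field exactly,
    # so "NameNode" does not match "SecondaryNameNode".
    if ! printf '%s\n' "$output" | awk -v d="$daemon" '$2 == d { found = 1 } END { exit !found }'; then
      echo "missing: $daemon" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Example usage on master:
# jps | has_daemons NameNode SecondaryNameNode ResourceManager
# for h in slave1 slave2; do
#   ssh "$h" /usr/java/jdk1.7.0_79/bin/jps | has_daemons DataNode NodeManager || echo "check $h"
# done
```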

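j. Troubleshooting errors during the Hadoop installation

Most problems can be diagnosed from the logs. They are written to the default location below, and the default log level is INFO:

/opt/hadoop-2.6.0-cdh5.8.0/logs

To get more detailed error messages, raise the log level to DEBUG.

Switching HDFS to DEBUG:

For HDFS, edit sbin/hadoop-daemon.sh and replace INFO with DEBUG: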

export HADOOP_ROOT_LOGGER=${HADOOP_ROOT_LOGGER:-"DEBUG,RFA"}

export HADOOP_SECURITY_LOGGER=${HADOOP_SECURITY_LOGGER:-"DEBUG,RFAS"}

export HDFS_AUDIT_LOGGER=${HDFS_AUDIT_LOGGER:-"DEBUG,NullAppender"}

To make YARN write DEBUG messages to its log files, edit its start script sbin/yarn-daemon.sh and change INFO to DEBUG:

export YARN_ROOT_LOGGER=${YARN_ROOT_LOGGER:-DEBUG,RFA}
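Instead of editing the two scripts by hand, the change can be made with sed. This sketch assumes GNU sed (for in-place editing with -i and no suffix) and rewrites only the ${VAR:-INFO,...} defaults shown above; enable_debug_logging is a hypothetical name. The daemons have to be restarted for the new level to take effect.

```bash
#!/usr/bin/env bash
# Hypothetical helper: switch the default log level in the daemon scripts from INFO to DEBUG.
# Usage: enable_debug_logging /opt/hadoop-2.6.0-cdh5.8.0/sbin
enable_debug_logging() {
  local sbin="$1" f
  for f in "$sbin/hadoop-daemon.sh" "$sbin/yarn-daemon.sh"; do
    [ -f "$f" ] || continue
    cp "$f" "$f.bak"   # keep a backup so the change is easy to revert
    # Rewrite only the ":-INFO," defaults, both quoted (HDFS) and unquoted (YARN)
    sed -i -e 's/:-"INFO,/:-"DEBUG,/' -e 's/:-INFO,/:-DEBUG,/' "$f"
  done
}
```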

-------------------------------------------------------------------------

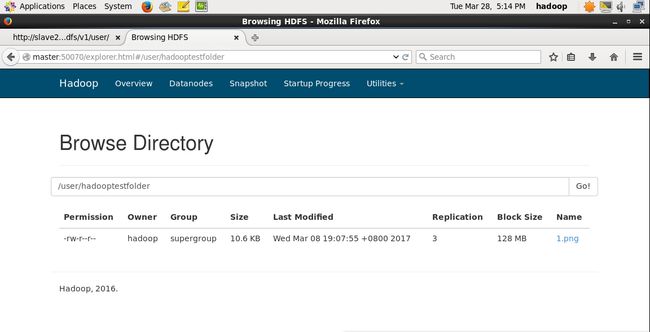

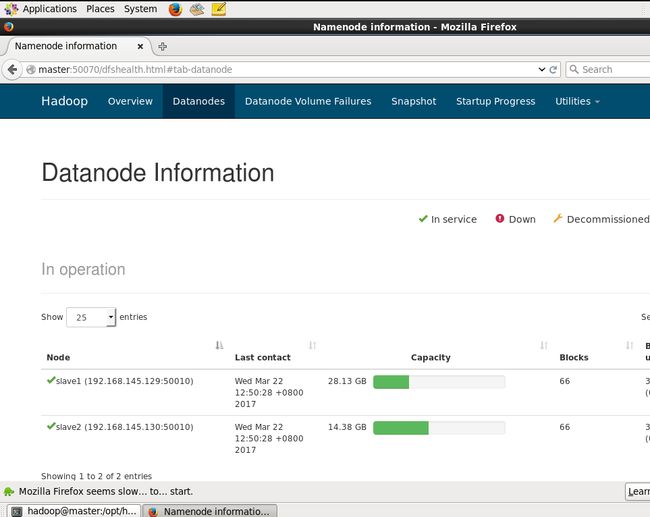

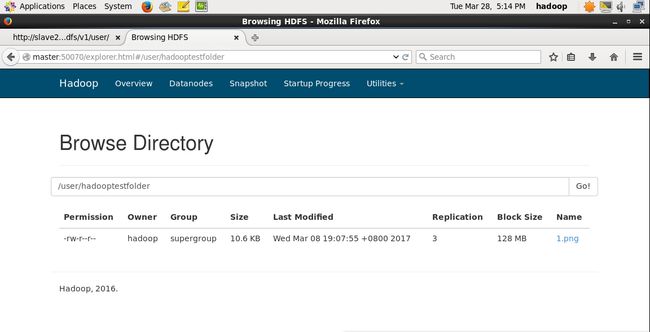

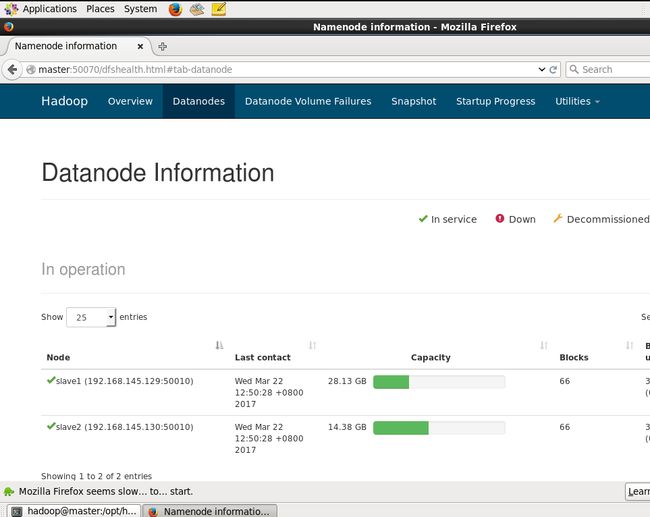

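Open the web UIs on the ports configured in the XML files (for example, the NameNode at master:50070 and the ResourceManager at master:8088). They look like this: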

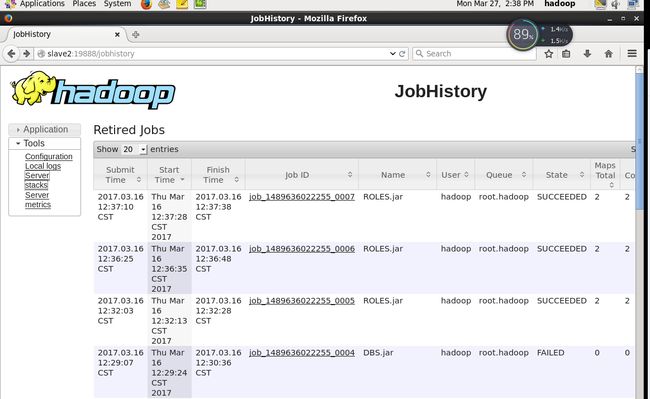

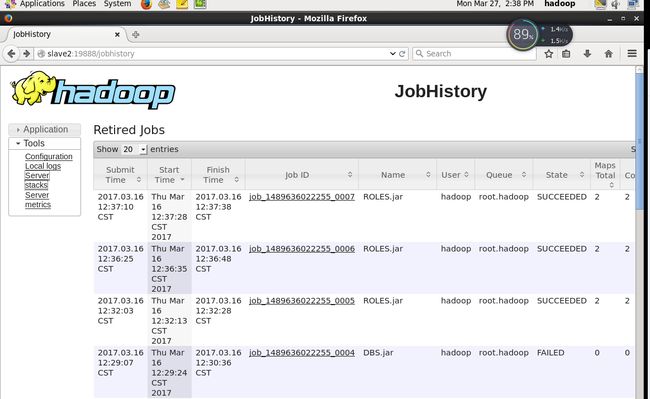

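k. Hadoop job history (the ports are configured in mapred-site.xml)

Start the history server (on slave2, the host set in mapreduce.jobhistory.address):

$HADOOP_HOME/sbin/mr-jobhistory-daemon.sh start historyserver

Stop the history server:

$HADOOP_HOME/sbin/mr-jobhistory-daemon.sh stop historyserver

Once the history server is running, its web UI is available in a browser at slave2:19888.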

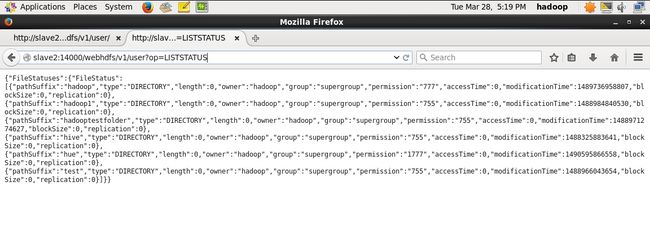

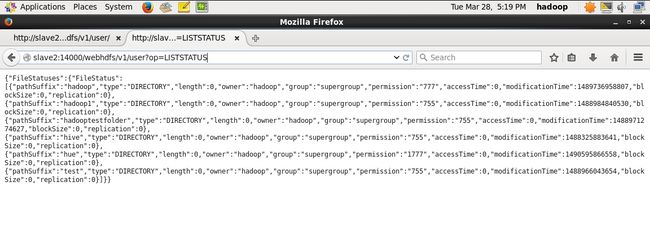

4. HttpFS (started from the sbin directory on slave2)

[hadoop@slave2 sbin]$ ./httpfs.sh start

Setting HTTPFS_HOME: /opt/hadoop-2.6.0-cdh5.8.0

Setting HTTPFS_CONFIG: /opt/hadoop-2.6.0-cdh5.8.0/etc/hadoop

Sourcing: /opt/hadoop-2.6.0-cdh5.8.0/etc/hadoop/httpfs-env.sh

Setting HTTPFS_LOG: /opt/hadoop-2.6.0-cdh5.8.0/logs

Setting HTTPFS_TEMP: /opt/hadoop-2.6.0-cdh5.8.0/temp

Setting HTTPFS_HTTP_PORT: 14000

Setting HTTPFS_ADMIN_PORT: 14001

Setting HTTPFS_HTTP_HOSTNAME: slave2

Setting HTTPFS_SSL_ENABLED: false

Setting HTTPFS_SSL_KEYSTORE_FILE: /home/hadoop/.keystore

Setting HTTPFS_SSL_KEYSTORE_PASS: password

Setting CATALINA_BASE: /opt/hadoop-2.6.0-cdh5.8.0/share/hadoop/httpfs/tomcat

Setting HTTPFS_CATALINA_HOME: /opt/hadoop-2.6.0-cdh5.8.0/share/hadoop/httpfs/tomcat

Setting CATALINA_OUT: /opt/hadoop-2.6.0-cdh5.8.0/logs/httpfs-catalina.out

Setting CATALINA_PID: /tmp/httpfs.pid

Using CATALINA_OPTS:

Adding to CATALINA_OPTS: -Dhttpfs.home.dir=/opt/hadoop-2.6.0-cdh5.8.0 -Dhttpfs.config.dir=/opt/hadoop-2.6.0-cdh5.8.0/etc/hadoop -Dhttpfs.log.dir=/opt/hadoop-2.6.0-cdh5.8.0/logs -Dhttpfs.temp.dir=/opt/hadoop-2.6.0-cdh5.8.0/temp -Dhttpfs.admin.port=14001 -Dhttpfs.http.port=14000 -Dhttpfs.http.hostname=slave2

Using CATALINA_BASE: /opt/hadoop-2.6.0-cdh5.8.0/share/hadoop/httpfs/tomcat

Using CATALINA_HOME: /opt/hadoop-2.6.0-cdh5.8.0/share/hadoop/httpfs/tomcat

Using CATALINA_TMPDIR: /opt/hadoop-2.6.0-cdh5.8.0/share/hadoop/httpfs/tomcat/temp

Using JRE_HOME: /usr/java/jdk1.7.0_79

Using CLASSPATH: /opt/hadoop-2.6.0-cdh5.8.0/share/hadoop/httpfs/tomcat/bin/bootstrap.jar

Using CATALINA_PID: /tmp/httpfs.pid

[hadoop@slave2 sbin]$ su -

Password:

[root@slave2 ~]# netstat -apn|grep 14000

tcp 0 0 :::14000 :::* LISTEN 4013/java

[root@slave2 ~]# netstat -apn|grep 14001

tcp 0 0 ::ffff:127.0.0.1:14001 :::* LISTEN 4013/java

[root@slave2 ~]#
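Once HttpFS is listening on port 14000, it serves the WebHDFS REST API under /webhdfs/v1, and with the default simple authentication the caller identifies itself through the user.name query parameter. The helper below only builds such a URL; the name httpfs_url and the use of curl as the client are illustrative choices.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build an HttpFS (WebHDFS-compatible) REST URL.
# Usage: httpfs_url <host> <absolute-hdfs-path> <operation> <user> [port]
httpfs_url() {
  local host="$1" path="$2" op="$3" user="$4" port="${5:-14000}"
  # The HDFS path is appended directly after /webhdfs/v1
  echo "http://$host:$port/webhdfs/v1${path}?op=${op}&user.name=${user}"
}
```

For example, curl -s "$(httpfs_url slave2 / LISTSTATUS hadoop)" should return a JSON listing of the HDFS root directory.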

5. WebHDFS
