Deploying MongoDB Replica Sets

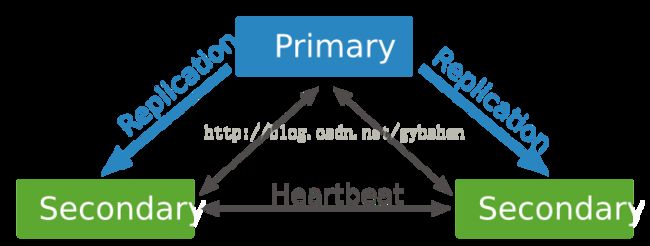

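A replica set is a primary/secondary (master-slave) cluster with automatic failover, which is what distinguishes it from plain master-slave replication. When the primary node fails and goes down, the remaining members hold an election and choose a new primary, so the cluster as a whole stays available.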

A replica set offers the following advantages:

(1) Automatic failover

(2) High availability

(3) Data safety

A replica set under normal conditions:

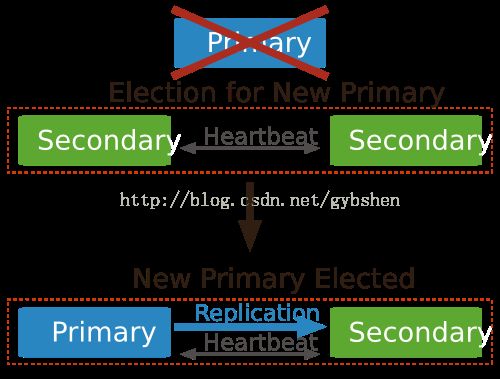

When the primary fails, a new primary is elected from among the secondaries.

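Deploying the replica set

Environment:

OS: Windows 10 Pro

Cluster size: three nodes, one primary and two secondaries. A replica set usually has an odd number of nodes, because electing a primary requires a majority of the votes.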
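The preference for an odd node count follows from simple majority arithmetic. The sketch below is plain JavaScript (it runs in Node.js); the function names `majority` and `faultTolerance` are illustrative and are not part of any MongoDB API.

```javascript
// Votes needed to elect a primary: a strict majority of the voting members.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// How many members can be down while a primary can still be elected.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [3, 4, 5]) {
  console.log(
    n + " members: majority = " + majority(n) +
    ", tolerated failures = " + faultTolerance(n)
  );
}
```

A four-node set tolerates one failure, the same as a three-node set, so the extra even node adds cost without adding fault tolerance.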

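Notes:

Because resources are limited, three mongod processes are started on a single host to simulate three nodes. In production, it is best to run one node per host, so that the nodes do not compete for resources and a single host failure does not take down the whole set.

To make testing easier, logs are written straight to the terminal. In production, write logs to a log file so they can be analyzed later.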

Conventions:

| Replica set rs1 | IP | Port | Dbpath | Logpath |
| --- | --- | --- | --- | --- |
| Node 1 | 127.0.0.1 | 27017 | C:\data\db | terminal |
| Node 2 | 127.0.0.1 | 27018 | D:\data\db | terminal |
| Node 3 | 127.0.0.1 | 27019 | E:\data\db | terminal |

Before starting the cluster, make sure each data directory exists and is empty.

Start the mongod processes, each in its own terminal:

mongod --dbpath C:\data\db --bind_ip 127.0.0.1 --port 27017 --replSet rs1

mongod --dbpath D:\data\db --bind_ip 127.0.0.1 --port 27018 --replSet rs1

mongod --dbpath E:\data\db --bind_ip 127.0.0.1 --port 27019 --replSet rs1

--replSet specifies the replica set name. The three nodes belong to the same replica set, so all of them are given the same name.
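To make it explicit which parts of the three commands vary and which are shared, here is a small sketch in plain JavaScript that rebuilds them from the convention table. The `nodes` array and the `mongodCommand` helper are hypothetical names introduced for illustration. Only the flags already used above (`--dbpath`, `--bind_ip`, `--port`, `--replSet`) appear.

```javascript
// Per-node settings taken from the convention table; only dbpath and port differ.
const nodes = [
  { dbpath: "C:\\data\\db", port: 27017 },
  { dbpath: "D:\\data\\db", port: 27018 },
  { dbpath: "E:\\data\\db", port: 27019 },
];

// Build one mongod command line. Every node shares the bind IP and the set name.
function mongodCommand(node, replSet, bindIp) {
  return [
    "mongod",
    "--dbpath", node.dbpath,
    "--bind_ip", bindIp,
    "--port", String(node.port),
    "--replSet", replSet,
  ].join(" ");
}

const commands = nodes.map((node) => mongodCommand(node, "rs1", "127.0.0.1"));
commands.forEach((command) => console.log(command));
```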

Log in to any one of the nodes to configure the replica set:

mongo 127.0.0.1:27017

Write the configuration object:

> config={

... _id:'rs1',

... members:[

... {_id:1,host:'127.0.0.1:27017'},

... {_id:2,host:'127.0.0.1:27018'},

... {_id:3,host:'127.0.0.1:27019'}

... ]

... }

{

"_id" : "rs1",

"members" : [

{

"_id" : 1,

"host" :"127.0.0.1:27017"

},

{

"_id" : 2,

"host" :"127.0.0.1:27018"

},

{

"_id" : 3,

"host" :"127.0.0.1:27019"

}

]

}
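For more than a handful of nodes, the same configuration object can be generated rather than typed by hand. This is a minimal sketch in plain JavaScript; `buildReplSetConfig` is a hypothetical helper, not a MongoDB function, and it assigns member `_id` values starting from 1 simply to match the object above.

```javascript
// Build a replica set configuration document from a set name and a host list.
function buildReplSetConfig(setName, hosts) {
  return {
    _id: setName, // must match the --replSet name given to every mongod
    members: hosts.map((host, index) => ({ _id: index + 1, host: host })),
  };
}

const config = buildReplSetConfig("rs1", [
  "127.0.0.1:27017",
  "127.0.0.1:27018",
  "127.0.0.1:27019",
]);

console.log(JSON.stringify(config, null, 2));
```

The resulting object has the same shape as the one entered interactively, so it could be passed to rs.initiate() in the same way.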

Load the configuration:

> rs.initiate(config)

{ "ok" : 1 }

rs1:OTHER>

rs1:PRIMARY> rs.isMaster()

{

"hosts" : [

"127.0.0.1:27017",

"127.0.0.1:27018",

"127.0.0.1:27019"

],

"setName" : "rs1",

"setVersion" : 1,

"ismaster" : true,

"secondary" : false,

"primary" : "127.0.0.1:27017",

"me" : "127.0.0.1:27017",

"electionId" : ObjectId("7fffffff0000000000000001"),

"lastWrite" : {

"opTime" : {

"ts" :Timestamp(1498831736, 1),

"t" :NumberLong(1)

},

"lastWriteDate" :ISODate("2017-06-30T14:08:56Z")

},

"maxBsonObjectSize" : 16777216,

"maxMessageSizeBytes" : 48000000,

"maxWriteBatchSize" : 1000,

"localTime" : ISODate("2017-06-30T14:09:05.756Z"),

"maxWireVersion" : 5,

"minWireVersion" : 0,

"readOnly" : false,

"ok" : 1

}

rs1:PRIMARY>

This node is the primary.
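The fields that matter in the reply above are `ismaster` and `secondary`. As a rough sketch, the following plain JavaScript reads a node's role from such a reply. `describeRole` is a hypothetical helper, the sample object is a trimmed copy of the output above, and the label "OTHER" is just shorthand here for any state that is neither primary nor secondary.

```javascript
// Derive a role label from an isMaster-style reply document.
function describeRole(reply) {
  if (reply.ismaster) return "PRIMARY";
  if (reply.secondary) return "SECONDARY";
  return "OTHER"; // e.g. still starting up or not yet initialized
}

// Trimmed copy of the reply shown above.
const reply = {
  setName: "rs1",
  ismaster: true,
  secondary: false,
  primary: "127.0.0.1:27017",
  me: "127.0.0.1:27017",
};

console.log(reply.me + " is " + describeRole(reply));
```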

Connect to another node:

>mongo 127.0.0.1:27018

MongoDB shell version v3.4.5

connecting to: 127.0.0.1:27018

MongoDB server version: 3.4.5

Server has startup warnings:

2017-06-30T21:48:39.401+0800 I CONTROL [initandlisten]

2017-06-30T21:48:39.404+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2017-06-30T21:48:39.407+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2017-06-30T21:48:39.410+0800 I CONTROL [initandlisten]

rs1:SECONDARY>

As you can see, this is a secondary.

Create a database and a collection on the primary and insert some data:

rs1:PRIMARY> show dbs

admin 0.000GB

local 0.000GB

rs1:PRIMARY> use test

switched to db test

rs1:PRIMARY> db.user.insert({name:'gyb'})

WriteResult({ "nInserted" : 1 })

rs1:PRIMARY> db.user.insert({name:'bobo'})

WriteResult({ "nInserted" : 1 })

View the data on the secondary (rs.slaveOk() first allows reads from a secondary on this connection):

rs1:SECONDARY> rs.slaveOk()

rs1:SECONDARY> show dbs

admin 0.000GB

local 0.000GB

test 0.000GB

rs1:SECONDARY> use test

switched to db test

rs1:SECONDARY> db.user.find()

{ "_id" :ObjectId("59566ffe6a3a31e1ea4abec7"), "name" :"gyb" }

{ "_id" :ObjectId("5956700e6a3a31e1ea4abec8"), "name" :"bobo" }

rs1:SECONDARY>

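As you can see, the data is in sync with the primary.

Note: a secondary accepts reads only and rejects writes. Attempting a write produces the following error: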

rs1:SECONDARY> db.user.insert({name:'1123'})

WriteResult({ "writeError" : {"code" : 10107, "errmsg" : "not master" } })

rs1:SECONDARY>
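A script that writes to the replica set may want to recognize this particular failure and retry against the current primary. The sketch below, in plain JavaScript, assumes the write result is available as a plain object shaped like the output above. `isNotMasterError` is a hypothetical helper, and the code 10107 is taken from that output.

```javascript
// Error code reported above when a write is sent to a secondary.
const NOT_MASTER = 10107;

// True when a write result carries the "not master" write error.
function isNotMasterError(result) {
  return Boolean(result.writeError) && result.writeError.code === NOT_MASTER;
}

// Sample results shaped like the shell output in this article.
const rejected = { writeError: { code: 10107, errmsg: "not master" } };
const accepted = { nInserted: 1 };

console.log(isNotMasterError(rejected)); // write must go to the primary instead
console.log(isNotMasterError(accepted));
```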

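Failover verification:

Now terminate the primary manually to simulate a crash.

Press Ctrl+C in the primary's terminal to stop the process,

then log in to 127.0.0.1:27019: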

>mongo 127.0.0.1:27019

MongoDB shell version v3.4.5

connecting to: 127.0.0.1:27019

MongoDB server version: 3.4.5

Server has startup warnings:

2017-06-30T21:51:02.535+0800 I CONTROL [initandlisten]

2017-06-30T21:51:02.538+0800 I CONTROL [initandlisten] ** WARNING: Access control is not enabled for the database.

2017-06-30T21:51:02.542+0800 I CONTROL [initandlisten] ** Read and write access to data and configuration is unrestricted.

2017-06-30T21:51:02.548+0800 I CONTROL [initandlisten]

rs1:PRIMARY>

rs1:PRIMARY>

This node has been promoted to primary.

Check the data:

rs1:PRIMARY> show dbs

admin 0.000GB

local 0.000GB

test 0.000GB

rs1:PRIMARY> use test

switched to db test

rs1:PRIMARY> db.user.find()

{ "_id" :ObjectId("59566ffe6a3a31e1ea4abec7"), "name" :"gyb" }

{ "_id" :ObjectId("5956700e6a3a31e1ea4abec8"), "name" :"bobo" }

rs1:PRIMARY>

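As you can see, the data is still there, which demonstrates the data safety and high availability of a replica set.

Check the cluster status: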

rs1:PRIMARY> rs.status()

{

"set" : "rs1",

"date" : ISODate("2017-06-30T15:48:41.835Z"),

"myState" : 1,

"term" : NumberLong(2),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime": {

"ts" :Timestamp(1498837720, 1),

"t" : NumberLong(2)

},

"appliedOpTime" : {

"ts" :Timestamp(1498837720, 1),

"t" :NumberLong(2)

},

"durableOpTime" : {

"ts" : Timestamp(1498837720,1),

"t" :NumberLong(2)

}

},

"members" : [

{

"_id" : 1,

"name" :"127.0.0.1:27017",

"health" : 0,

"state" : 8,

"stateStr" :"(not reachable/healthy)",

"uptime" : 0,

"optime" : {

"ts": Timestamp(0, 0),

"t" :NumberLong(-1)

},

"optimeDurable" : {

"ts": Timestamp(0, 0),

"t" :NumberLong(-1)

},

"optimeDate": ISODate("1970-01-01T00:00:00Z"),

"optimeDurableDate" :ISODate("1970-01-01T00:00:00Z"),

"lastHeartbeat" :ISODate("2017-06-30T15:48:37.579Z"),

"lastHeartbeatRecv" :ISODate("2017-06-30T15:43:41.461Z"),

"pingMs" :NumberLong(0),

"lastHeartbeatMessage" : "(localized Windows error text, garbled in the source; most likely a connection-refused message)",

"configVersion" : -1

},

{

"_id" : 2,

"name" :"127.0.0.1:27018",

"health" : 1,

"state" : 2,

"stateStr" :"SECONDARY",

"uptime" :216,

"optime" : {

"ts": Timestamp(1498837720, 1),

"t" :NumberLong(2)

},

"optimeDurable": {

"ts": Timestamp(1498837720, 1),

"t" :NumberLong(2)

},

"optimeDate": ISODate("2017-06-30T15:48:40Z"),

"optimeDurableDate": ISODate("2017-06-30T15:48:40Z"),

"lastHeartbeat" :ISODate("2017-06-30T15:48:41.094Z"),

"lastHeartbeatRecv" :ISODate("2017-06-30T15:48:40.046Z"),

"pingMs" :NumberLong(0),

"syncingTo" :"127.0.0.1:27019",

"configVersion" : 1

},

{

"_id" : 3,

"name" :"127.0.0.1:27019",

"health" : 1,

"state" : 1,

"stateStr" :"PRIMARY",

"uptime" :7061,

"optime" : {

"ts": Timestamp(1498837720, 1),

"t" : NumberLong(2)

},

"optimeDate": ISODate("2017-06-30T15:48:40Z"),

"electionTime" : Timestamp(1498837508, 1),

"electionDate" : ISODate("2017-06-30T15:45:08Z"),

"configVersion" : 1,

"self" : true

}

],

"ok" : 1

}

rs1:PRIMARY>

As you can see, the member 127.0.0.1:27017 is down.
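The full status document is long, and in practice only a few fields per member are needed to spot a failed node: `name`, `stateStr` and `health`. The following plain JavaScript sketch condenses a status document to those fields. `summarizeMembers` and `unreachableMembers` are hypothetical helpers, and the sample object is a trimmed copy of the output above.

```javascript
// Reduce each member entry to its name, state string and health flag.
function summarizeMembers(status) {
  return status.members.map((member) => ({
    name: member.name,
    state: member.stateStr,
    healthy: member.health === 1,
  }));
}

// Names of the members the reporting node cannot reach.
function unreachableMembers(status) {
  return status.members
    .filter((member) => member.health !== 1)
    .map((member) => member.name);
}

// Trimmed copy of the rs.status() output shown above.
const status = {
  set: "rs1",
  members: [
    { _id: 1, name: "127.0.0.1:27017", health: 0, state: 8, stateStr: "(not reachable/healthy)" },
    { _id: 2, name: "127.0.0.1:27018", health: 1, state: 2, stateStr: "SECONDARY" },
    { _id: 3, name: "127.0.0.1:27019", health: 1, state: 1, stateStr: "PRIMARY" },
  ],
};

console.log(summarizeMembers(status));
console.log("Unreachable:", unreachableMembers(status));
```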

Now restart the mongod service on 127.0.0.1:27017:

mongod --dbpath C:\data\db --bind_ip 127.0.0.1 --port 27017 --replSet rs1

Check the cluster status again:

rs1:PRIMARY> rs.status()

{

"set" : "rs1",

"date" : ISODate("2017-06-30T15:52:27.392Z"),

"myState" : 1,

"term" : NumberLong(2),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime": {

"ts" :Timestamp(1498837940, 1),

"t" :NumberLong(2)

},

"appliedOpTime" : {

"ts" :Timestamp(1498837940, 1),

"t" :NumberLong(2)

},

"durableOpTime" : {

"ts" :Timestamp(1498837940, 1),

"t" :NumberLong(2)

}

},

"members" : [

{

"_id" : 1,

"name" :"127.0.0.1:27017",

"health" : 1,

"state" : 2,

"stateStr" :"SECONDARY",

"uptime" : 11,

"optime" : {

"ts": Timestamp(1498837940, 1),

"t" :NumberLong(2)

},

"optimeDurable" : {

"ts": Timestamp(1498837940, 1),

"t" :NumberLong(2)

},

"optimeDate": ISODate("2017-06-30T15:52:20Z"),

"optimeDurableDate": ISODate("2017-06-30T15:52:20Z"),

"lastHeartbeat" :ISODate("2017-06-30T15:52:25.910Z"),

"lastHeartbeatRecv" :ISODate("2017-06-30T15:52:25.964Z"),

"pingMs" :NumberLong(1),

"syncingTo" :"127.0.0.1:27018",

"configVersion" : 1

},

{

"_id" : 2,

"name" :"127.0.0.1:27018",

"health" : 1,

"state" : 2,

"stateStr" :"SECONDARY",

"uptime" :441,

"optime" : {

"ts": Timestamp(1498837940, 1),

"t" :NumberLong(2)

},

"optimeDurable" : {

"ts": Timestamp(1498837940, 1),

"t" :NumberLong(2)

},

"optimeDate": ISODate("2017-06-30T15:52:20Z"),

"optimeDurableDate" :ISODate("2017-06-30T15:52:20Z"),

"lastHeartbeat" :ISODate("2017-06-30T15:52:27.254Z"),

"lastHeartbeatRecv": ISODate("2017-06-30T15:52:26.202Z"),

"pingMs" :NumberLong(0),

"syncingTo" :"127.0.0.1:27019",

"configVersion" : 1

},

{

"_id" : 3,

"name" :"127.0.0.1:27019",

"health" : 1,

"state" : 1,

"stateStr" :"PRIMARY",

"uptime" : 7287,

"optime" : {

"ts": Timestamp(1498837940, 1),

"t" :NumberLong(2)

},

"optimeDate": ISODate("2017-06-30T15:52:20Z"),

"electionTime" : Timestamp(1498837508, 1),

"electionDate" : ISODate("2017-06-30T15:45:08Z"),

"configVersion" : 1,

"self" : true

}

],

"ok" : 1

}

rs1:PRIMARY>

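As you can see, the member with _id 1 has recovered and rejoined as a secondary. This demonstrates the replica set's failure recovery and fault tolerance.

In addition, nodes can be added to and removed from a replica set dynamically, which is not demonstrated here.

To add a node, use rs.add('IP:port').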
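In the shell, rs.add('IP:port') adds a member and rs.remove('IP:port') removes one, both run on the primary. To illustrate what such a change means for the configuration document, the plain JavaScript sketch below applies it to a copy of the config object. `addMember` and `removeMember` are hypothetical helpers, the host 127.0.0.1:27020 is an assumed fourth node that does not appear in this article, and choosing the next `_id` as the current maximum plus one is an assumption made for the sketch.

```javascript
// Return a new config with one more member; the input config is left unchanged.
function addMember(config, host) {
  const maxId = config.members.reduce((max, m) => Math.max(max, m._id), 0);
  const members = config.members.concat([{ _id: maxId + 1, host: host }]);
  return Object.assign({}, config, { members: members });
}

// Return a new config without the member that has the given host.
function removeMember(config, host) {
  const members = config.members.filter((m) => m.host !== host);
  return Object.assign({}, config, { members: members });
}

const rsConfig = {
  _id: "rs1",
  members: [
    { _id: 1, host: "127.0.0.1:27017" },
    { _id: 2, host: "127.0.0.1:27018" },
    { _id: 3, host: "127.0.0.1:27019" },
  ],
};

const grown = addMember(rsConfig, "127.0.0.1:27020"); // hypothetical fourth node
const shrunk = removeMember(grown, "127.0.0.1:27018");
console.log(grown.members.length, shrunk.members.length);
```

The new node's mongod must already be running with the same --replSet name before it is added.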