机器学习之朴素贝叶斯(naive Bayes)模型

机器学习之朴素贝叶斯模型

- 1、朴素贝叶斯模型介绍

- 2、朴素贝叶斯数学原理

- 3、算法及Python实现

- 4、小结

1、朴素贝叶斯模型介绍

朴素贝叶斯(naive Bayes)法是基于贝叶斯定理与特征条件独立假设的分类方法,对于给定的训练数据集,首先基于特征条件独立假设学习输入/输出的联合概率分布;然后基于此模型,对给定的输入x,利用贝叶斯定理求出后验概率最大的输出y。

2、朴素贝叶斯数学原理

贝叶斯公式

朴素贝叶斯法将实例分到后验概率最大的类中,这等价于期望风险最小化,假设选择0-1损失函数:

L(Y,f(X))={1,Y≠f(X)0,Y=f(X) L ( Y , f ( X ) ) = { 1 , Y ≠ f ( X ) 0 , Y = f ( X )

式中f(X)是分类决策函数。这时,期望风险函数为

期望是对联合分布P(X,Y)取的。由此取条件期望

为了使期望风险最小化,只需对X=x逐个取极小化,由此得到:

f(x)=argminy∈Y∑k=1KL(ck,y)P(ck|X=x)=argminy∈Y∑k=1KP(y≠ck|X=x)=argminy∈Y(1−P(y=ck|X=x))=argmaxy∈YP(y=ck|X=x) f ( x ) = a r g m i n y ∈ Y ∑ k = 1 K L ( c k , y ) P ( c k | X = x ) = a r g m i n y ∈ Y ∑ k = 1 K P ( y ≠ c k | X = x ) = a r g m i n y ∈ Y ( 1 − P ( y = c k | X = x ) ) = a r g m a x y ∈ Y P ( y = c k | X = x )

这样一来,根据期望风险最小化准则就得到了后验概率最大化准则:

即朴素贝叶斯法所采用的原理。

3、算法及Python实现

朴素贝叶斯法的学习与分类算法

输入:训练数据T={( x1,y1),(x2,y2),⋯,(x1,y1 x 1 , y 1 ) , ( x 2 , y 2 ) , ⋯ , ( x 1 , y 1 )},其中 xi=(x(1)i,x(2)i,⋯,x(n)i,)T,x(j)i x i = ( x i ( 1 ) , x i ( 2 ) , ⋯ , x i ( n ) , ) T , x i ( j ) 是第i个样本的第j个特征, x(j)i∈aj1,aj2,⋯ajsj,ajl x i ( j ) ∈ a j 1 , a j 2 , ⋯ a j s j , a j l 是第j个特征值可能取的第l个值, j=1,2,⋯,n,l=1,2,⋯,Sj,yi∈{c1,c2,⋯,cK}:实例x; j = 1 , 2 , ⋯ , n , l = 1 , 2 , ⋯ , S j , y i ∈ { c 1 , c 2 , ⋯ , c K } : 实 例 x ;

输出:实例x的类。

(1)计算先验概率及条件概率

(2)对于给定的实例 x=(x(1),x(2),⋯,x(n))T x = ( x ( 1 ) , x ( 2 ) , ⋯ , x ( n ) ) T ,计算

(3)确定实例x的类

下面是Python实现代码(所用到的数据集 email.rar)

from numpy import *

def createVocabList(dataSet):

vocabSet = set([])

for document in dataSet:

vocabSet = vocabSet | set(document)

return list(vocabSet)

def setOfWords2Vec(vocabList,inputSet):

returnVec = [0] * len(vocabList)

for word in inputSet:

if word in vocabList:

returnVec[vocabList.index(word)] = 1

else:

print("The word: %s is not in my Vocabulary!"%word)

return returnVec

def bagOfWords2Vec(vocabList,inputSet):

returnVec = [0] * len(vocabList)

for word in inputSet:

if word in vocabList:

returnVec[vocabList.index(word)] += 1

return returnVec

def trainNB0(trainMatrix,trainCategory):

numTrainDocs = len(trainMatrix)

numWords = len(trainMatrix[0])

pAbusive = sum(trainCategory)/float(numTrainDocs)

p0Num = ones(numWords); p1Num = ones(numWords)

# p0Denom = 0.0; p1Denom = 0.0

p0Denom = 2.0; p1Denom = 2.0

for i in range(numTrainDocs):

if trainCategory[i] == 1:

p1Num += trainMatrix[i] #将每条内容对应的词汇向量值对应相加,得到每个词汇向量出现的次数

p1Denom += sum(trainMatrix[i]) #使用广播机制将不同类别每条内容加到词汇向量维度的计数向量上去

else:

p0Num += trainMatrix[i]

p0Denom += sum(trainMatrix[i])

p1Vect = log(p1Num/p1Denom)

p0Vect = log(p0Num/p0Denom)

return p0Vect,p1Vect,pAbusive

def classifyNB(vec2Classify,p0Vec,p1Vec,pClass1):

p1 = sum(vec2Classify * p1Vec) + log(pClass1)

p0 = sum(vec2Classify * p0Vec) + log(1.0 - pClass1)

if p1 > p0:

return 1 #代表是垃圾邮件

else:

return 0 #代表不是垃圾邮件

def testingNB():

listOPosts,listClasses = loadDataSet()

myVocabList = createVocabList(listOPosts)

trainMat = []

for postinDoc in listOPosts:

trainMat.append(setOfWords2Vec(myVocabList,postinDoc))

p0V,p1V,pAb = trainNB0(array(trainMat),array(listClasses))

testEntry = ['love','my','dalmation']

thisDoc = array(setOfWords2Vec(myVocabList,testEntry))

print(testEntry,'classified as:',classifyNB(thisDoc,p0V,p1V,pAb))

testEntry = ['stupid','garbage']

thisDoc = array(setOfWords2Vec(myVocabList,testEntry))

print(testEntry,'classified as:',classifyNB(thisDoc,p0V,p1V,pAb))

def textParse(bigString):

import re

listOfTokens = re.split(r'\W*',bigString)

return [tok.lower() for tok in listOfTokens if len(tok) > 2]

def spamTest():

docList = []; classList = []; fullText = []

for i in range(1,26):

wordList = textParse(open('./email/spam/%d.txt'%i).read())

docList.append(wordList)

fullText.extend(wordList)

classList.append(1)

wordList = textParse(open('./email/ham/%d.txt'%i).read())

docList.append(wordList)

fullText.extend(wordList)

classList.append(0)

vocabList = createVocabList(docList)

trainingSet = list(range(50)); testSet = []

for i in range(5):

randIndex = int(random.uniform(0,len(trainingSet)))

testSet.append(trainingSet[randIndex])

del(trainingSet[randIndex])

trainMat = []; trainClasses = []

for docIndex in trainingSet:

trainMat.append(setOfWords2Vec(vocabList,docList[docIndex]))

trainClasses.append(classList[docIndex])

p0V,p1V,pSpam = trainNB0(array(trainMat),array(trainClasses))

errorCount = 0

for docIndex in testSet:

wordVector = setOfWords2Vec(vocabList,docList[docIndex])

predClass = classifyNB(array(wordVector),p0V,p1V,pSpam)

labelClass = classList[docIndex]

print(docList[docIndex]),

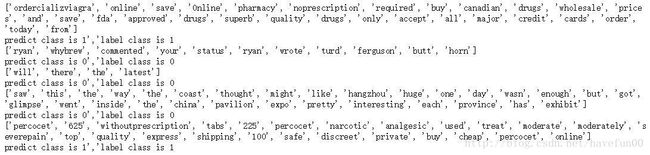

print("predict class is %d','label class is %d" %(predClass,labelClass))执行程序

spamTest()4、小结

对于分类而言,使用概率要比硬规则要更为有效,贝叶斯概率提供了利用已知值来估计未知概率的有效方法。在本算法中通过特征之间的条件独立性假设,降低了对数据量的要求,当然这个假设过于简单,因此本算法称为朴素贝叶斯。尽管条件独立性假设并不正确,但是朴素贝叶斯仍然是一种有效的分类器。