(防坑笔记)hadoop3.0 (三) MapReduce流程及序列化、偏移值(MapReduce)

防坑留言:

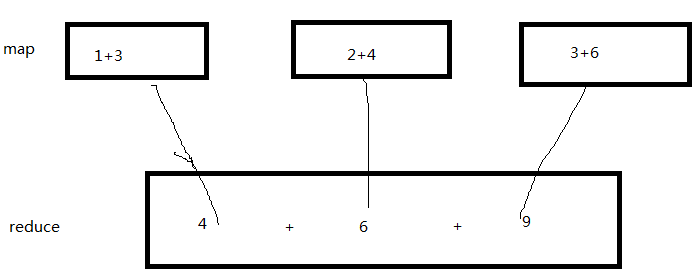

一种将数据量分成小块计算后再汇总的一种方式吧,

基本理解

一张图简单构建MapReduce的基本思路

map():相当于分解任务的集合吧

reduce(): 相当于对分解任务运算结果的汇总

以上的两种函数的形参都是K/V结构

Mapper的任务过程

(其中的mappe任务是一个java进程)

MapReduce运行的时候,通过Mapper运行的任务读取HDFS中的数据文件,然后调用自己的方法,处理数据,最后输出。Reducer任务会接收Mapper任务输出的数据,作为自己的输入数据,调用自己的方法,最后输出到HDFS的文件中。

一共是如下6阶段

1.第一阶段是把输入文件按照一定的标准分片(InputSplit),每个输入片的大小是固定的。默认情况下,输入片(InputSplit)的大小与数据块(Block)的大小是相同的。如果数据块(Block)的大小是默认值64MB,输入文件有两个,一个是32MB,一个是72MB,那么小的文件是一个输入片,大文件会分为两个数据块64MB和8MB,一共产生三个输入片。每一个输入片由一个Mapper进程处理。这里的三个输入片,会有三个Mapper进程处理。

2.第二阶段是对输入片中的记录按照一定的规则解析成键值对。有个默认规则是把每一行文本内容解析成键值对。“键”是每一行的起始位置(单位是字节),“值”是本行的文本内容。

3.第三阶段是调用Mapper类中的map方法。第二阶段中解析出来的每一个键值对,调用一次map方法。如果有1000个键值对,就会调用1000次map方法。每一次调用map方法会输出零个或者多个键值对。

4.第四阶段是按照一定的规则对第三阶段输出的键值对进行分区。比较是基于键进行的。比如我们的键表示省份(如北京、上海、山东等),那么就可以按照不同省份进行分区,同一个省份的键值对划分到一个区中。默认是只有一个区。分区的数量就是Reducer任务运行的数量。默认只有一个Reducer任务。

5.第五阶段是对每个分区中的键值对进行排序。首先,按照键进行排序,对于键相同的键值对,按照值进行排序。比如三个键值对<2,2>、<1,3>、<2,1>,键和值分别是整数。那么排序后的结果是<1,3>、<2,1>、<2,2>。如果有第六阶段,那么进入第六阶段;如果没有,直接输出到本地的linux文件中。

6.第六阶段是对数据进行归约处理,也就是reduce处理。键相等的键值对会调用一次reduce方法。经过这一阶段,数据量会减少。归约后的数据输出到本地的linux文件中。本阶段默认是没有的,需要用户自己增加这一阶段的代码。其实说白了,整个mapreduce的过程,就是将任务按需求的切割成一个个块(block),分别对其进行操作运算,之后再重新汇总的一种过程。

直接拉一个通用手机浏览分析例子

源数据 (3个手机号及其在指定网站的上下传流量)

1363157993044 18211575961 94-71-AC-CD-E6-18:CMCC-EASY 120.196.100.99 iface.qiyi.com 视频网站 15 12 1527 2106 200

1363157995033 15920133257 5C-0E-8B-C7-BA-20:CMCC 120.197.40.4 sug.so.360.cn 信息安全 20 20 3156 2936 200

1363157982040 13502468823 5C-0A-5B-6A-0B-D4:CMCC-EASY 120.196.100.99 y0.ifengimg.com 综合门户 57 102 7335 110349 200

通解:

在此之前,先对序列化做一个初步的认识,序列化后,可以将结构化的数据转为字节流,方便传输与永久性储存

而hadoop也有自己的一套序列化读写接口 Writable,内部仅有两个方法write()、readFields(),便于其集群时节点的通讯。

这里的Text,从源码中看出,它是针对于编码格式且转换为utf-8的,同时继承与实现中也同时实现了Comparable方法,代表可排序的,可以当做一个编码为utf-8的特殊String类型;

KpiWritable.java (类似一个pojo,但是实现了hadoop的序列化接口Writable)

package hadoop.Writable.Serialization;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import org.apache.hadoop.io.Writable;

/**

* @ClassName: KpiWritable

* @Description: 封装KpiWritable类型

* @author linge

* @date 2017年12月27日 下午5:12:18

*

*/

public class KpiWritable implements Writable{

private long upPackNum; // 上行数据包数,单位:个

private long downPackNum; // 下行数据包数,单位:个

private long upPayLoad; // 上行总流量,单位:byte

private long downPayLoad; // 下行总流量,单位:byte

//方便序列化

public KpiWritable() {

super();

}

public KpiWritable(long upPackNum, long downPackNum, long upPayLoad, long downPayLoad) {

super();

this.upPackNum = upPackNum;

this.downPackNum = downPackNum;

this.upPayLoad = upPayLoad;

this.downPayLoad = downPayLoad;

}

public KpiWritable(String upPack, String downPack, String upPay,

String downPay) {//这个构造函数主要是为了在分发处理数据的时候方便字符串插入

upPackNum = Long.parseLong(upPack);

downPackNum = Long.parseLong(downPack);

upPayLoad = Long.parseLong(upPay);

downPayLoad = Long.parseLong(downPay);

}

@Override

public String toString() {//这里是方便分区,分片使用

String result = upPackNum + "\t" + downPackNum + "\t" + upPayLoad

+ "\t" + downPayLoad;

return result;

}

@Override

public void write(DataOutput out) throws IOException {

out.writeLong(upPackNum);

out.writeLong(downPackNum);

out.writeLong(upPayLoad);

out.writeLong(downPayLoad);

}

@Override

public void readFields(DataInput in) throws IOException {

upPackNum = in.readLong();

downPackNum = in.readLong();

upPayLoad = in.readLong();

downPayLoad = in.readLong();

}

public long getUpPackNum() {

return upPackNum;

}

public void setUpPackNum(long upPackNum) {

this.upPackNum = upPackNum;

}

public long getDownPackNum() {

return downPackNum;

}

public void setDownPackNum(long downPackNum) {

this.downPackNum = downPackNum;

}

public long getUpPayLoad() {

return upPayLoad;

}

public void setUpPayLoad(long upPayLoad) {

this.upPayLoad = upPayLoad;

}

public long getDownPayLoad() {

return downPayLoad;

}

public void setDownPayLoad(long downPayLoad) {

this.downPayLoad = downPayLoad;

}

}

MyMapper.java 分散处理,也就是前面说的分区,分片

package hadoop.Writable.Serialization;

import java.io.IOException;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Mapper;

//LongWritable(偏移值) 是因为所有参数都是long类型,也可以用自定义的类,那样只需要让pojo类实现与LongWritable一样的WritableComparable接口就好,里面同样实现了writable接口

public class MyMapper extends Mapper{

@Override

protected void map(LongWritable key, Text value, Mapper.Context context)

throws IOException, InterruptedException {

String[] spilted = key.toString().split("\t");

String string = spilted[1];//手机号码

Text k2 = new Text(string);//手机号码作为主键

KpiWritable v2 = new KpiWritable(spilted[6], spilted[7],spilted[8], spilted[9]);

context.write(k2, v2);

}

}

MyReducer.java对数据进行归约处理

package hadoop.Writable.Serialization;

import java.io.IOException;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.mapreduce.Reducer;

public class MyReducer extends Reducer{

@Override

protected void reduce(Text k2, Iterable arg1,

Reducer.Context context) throws IOException, InterruptedException {

//用于统计的参数

long upPackNum = 0L;

long downPackNum = 0L;

long upPayLoad = 0L;

long downPayLoad = 0L;

for (KpiWritable kpiWritable : arg1) {

upPackNum+=kpiWritable.getUpPackNum();

downPackNum+=kpiWritable.getDownPackNum();

upPayLoad+=kpiWritable.getUpPayLoad();

downPayLoad+=kpiWritable.getDownPayLoad();

}

//k2这里的k2是mapper拆分的key,也就是手机号码

KpiWritable v3 = new KpiWritable(upPackNum, downPackNum, upPayLoad, downPayLoad);

context.write(k2, v3);

}

}

代码整合实现Tool接口,通过ToolRunner来运行应用程序

@Override

public int run(String[] args) throws Exception {

// 首先删除输出目录已生成的文件

FileSystem fs = FileSystem.get(new URI(INPUT_PATH), getConf());

Path outPath = new Path(OUTPUT_PATH);

if (fs.exists(outPath)) {

fs.delete(outPath, true);

}

// 定义一个作业

Job job = Job.getInstance(getConf(),"lingeJob");

// 设置输入目录

FileInputFormat.setInputPaths(job, new Path(INPUT_PATH));

// 设置自定义Mapper类

job.setMapperClass(MyMapper.class);

// 指定的类型

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(KpiWritable.class);

// 设置自定义Reducer类

job.setReducerClass(MyReducer.class);

// 指定的类型

job.setOutputKeyClass(Text.class);

job.setOutputKeyClass(KpiWritable.class);

// 设置输出目录

FileOutputFormat.setOutputPath(job, new Path(OUTPUT_PATH));

// 提交作业

Boolean res = job.waitForCompletion(true);

if(res){

System.out.println("Process success!");

System.exit(0);

}

else{

System.out.println("Process failed!");

System.exit(1);

}

return 0;

}

main方法调用注册

public static void main(String[] args) {

Configuration conf = new Configuration();

try {

int res = ToolRunner.run(conf, new MyKpiJob(), args);

System.exit(res);

} catch (Exception e) {

e.printStackTrace();

}

}完整代码

package hadoop.Writable.Deserialization;

import java.io.DataInput;

import java.io.DataOutput;

import java.io.IOException;

import java.net.URI;

import org.apache.hadoop.conf.Configuration;

import org.apache.hadoop.conf.Configured;

import org.apache.hadoop.fs.FileSystem;

import org.apache.hadoop.fs.Path;

import org.apache.hadoop.io.LongWritable;

import org.apache.hadoop.io.Text;

import org.apache.hadoop.io.Writable;

import org.apache.hadoop.io.WritableComparable;

import org.apache.hadoop.mapreduce.Job;

import org.apache.hadoop.mapreduce.Mapper;

import org.apache.hadoop.mapreduce.Reducer;

import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;

import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

import org.apache.hadoop.util.Tool;

import org.apache.hadoop.util.ToolRunner;

public class MyKpiJob extends Configured implements Tool {

/*

* 自定义数据类型KpiWritable

*/

public static class KpiWritable implements WritableComparable{

long upPackNum; // 上行数据包数,单位:个

long downPackNum; // 下行数据包数,单位:个

long upPayLoad; // 上行总流量,单位:byte

long downPayLoad; // 下行总流量,单位:byte

public KpiWritable() {

}

public KpiWritable(String upPack, String downPack, String upPay,

String downPay) {

upPackNum = Long.parseLong(upPack);

downPackNum = Long.parseLong(downPack);

upPayLoad = Long.parseLong(upPay);

downPayLoad = Long.parseLong(downPay);

}

@Override

public String toString() {

String result = upPackNum + "\t" + downPackNum + "\t" + upPayLoad

+ "\t" + downPayLoad;

return result;

}

@Override

public void write(DataOutput out) throws IOException {

out.writeLong(upPackNum);

out.writeLong(downPackNum);

out.writeLong(upPayLoad);

out.writeLong(downPayLoad);

}

@Override

public void readFields(DataInput in) throws IOException {

upPackNum = in.readLong();

downPackNum = in.readLong();

upPayLoad = in.readLong();

downPayLoad = in.readLong();

}

@Override

public int compareTo(KpiWritable o) {

// TODO Auto-generated method stub

return (int) (o.downPackNum-this.downPackNum);

}

}

/*

* 自定义Mapper类,重写了map方法

*/

public static class MyMapper extends

Mapper {

protected void map(

LongWritable k1,

Text v1,

org.apache.hadoop.mapreduce.Mapper.Context context)

throws IOException, InterruptedException {

String[] spilted = v1.toString().split("\\s+");

System.out.println(spilted.length);

if(spilted.length>1){

String msisdn = spilted[1]; // 获取手机号码

Text k2 = new Text(msisdn); // 转换为Hadoop数据类型并作为k2

KpiWritable v2 = new KpiWritable(spilted[6], spilted[7],

spilted[8], spilted[9]);

context.write(k2, v2);

}

};

}

/*

* 自定义Reducer类,重写了reduce方法

*/

public static class MyReducer extends

Reducer {

protected void reduce(

Text k2,

java.lang.Iterable v2s,

org.apache.hadoop.mapreduce.Reducer.Context context)

throws IOException, InterruptedException {

long upPackNum = 0L;

long downPackNum = 0L;

long upPayLoad = 0L;

long downPayLoad = 0L;

for (KpiWritable kpiWritable : v2s) {

upPackNum += kpiWritable.upPackNum;

downPackNum += kpiWritable.downPackNum;

upPayLoad += kpiWritable.upPayLoad;

downPayLoad += kpiWritable.downPayLoad;

}

KpiWritable v3 = new KpiWritable(upPackNum + "", downPackNum + "",

upPayLoad + "", downPayLoad + "");

System.out.println(k2+"\nupPackNum:"+upPackNum+"/ndownPackNum:"+downPackNum+"/n:upPayLoad:"+upPayLoad+"/ndownPayLoad:"+downPayLoad);

context.write(k2, v3);

};

}

// 输入文件目录

public static final String INPUT_PATH = "hdfs://192.168.88.129:9000/ha.txt";

// 输出文件目录

public static final String OUTPUT_PATH = "hdfs://192.168.88.129:9000/out";

@Override

public int run(String[] args) throws Exception {

// 首先删除输出目录已生成的文件

FileSystem fs = FileSystem.get(new URI(INPUT_PATH), getConf());

Path outPath = new Path(OUTPUT_PATH);

if (fs.exists(outPath)) {

fs.delete(outPath, true);

}

// 定义一个作业

Job job = Job.getInstance(getConf(),"lingeJob");

// 设置输入目录

FileInputFormat.setInputPaths(job, new Path(INPUT_PATH));

// 设置自定义Mapper类

job.setMapperClass(MyMapper.class);

// 指定的类型

job.setMapOutputKeyClass(Text.class);

job.setMapOutputValueClass(KpiWritable.class);

// 设置自定义Reducer类

job.setReducerClass(MyReducer.class);

// 指定的类型

job.setOutputKeyClass(Text.class);

job.setOutputKeyClass(KpiWritable.class);

// 设置输出目录

FileOutputFormat.setOutputPath(job, new Path(OUTPUT_PATH));

// 提交作业

Boolean res = job.waitForCompletion(true);

if(res){

System.out.println("Process success!");

System.exit(0);

}

else{

System.out.println("Process failed!");

System.exit(1);

}

return 0;

}

public static void main(String[] args) {

Configuration conf = new Configuration();

try {

int res = ToolRunner.run(conf, new MyKpiJob(), args);

System.exit(res);

} catch (Exception e) {

e.printStackTrace();

}

}

} 整个操作中,我们主要是对Mapper与Reduce类的操作比较多,也主要是这些部分构成我们的业务需求实现。