KINECT+opencv(1)将骨骼图像转化为深度图像后姿势识别

KINECT+opencv(1)将骨骼图像转化为深度图像后姿势识别

环境:kinect1.7+opencv2.4+vc2015

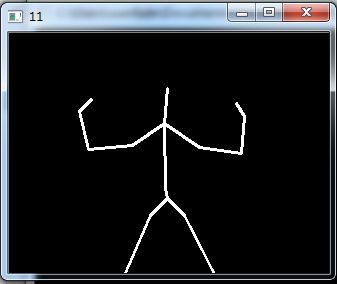

- 使用kinect获取三维空间内的骨骼图像并转换为深度图像坐标绘制

- 对二维的图像进行模式识别,与本地模板库匹配

目录

- KINECTopencv1将骨骼图像转化为深度图像后姿势识别

- 目录

- 写在前面

- 对当前帧处理并匹配

- kinect对帧的处理

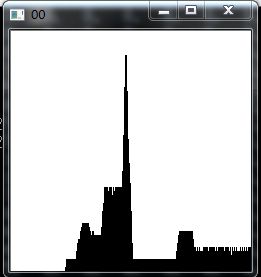

- 条形图变换

- 根据动态时间规划法匹配

- 记录并保存模板到本地

- 使用opencv的FileStorage类生成xml文件

- 写在后边

写在前面

- 接触kinect刚好一个月,自学的过程中从其他人的博客里学到了许多知识。回想2个月前自己还不会任何一门面向对象的语言,全凭着有啥不会就学啥的一股劲,终于到了现在编出来第一个综合性的程序。同时也在我写博客的过程中加深了对这个程序的理解,有不当之处还请各位斧正。

- 这个程序分为两个部分,对当前帧对象处理并匹配,和记录并保存模板到本地。

1.对当前帧处理并匹配

#include

#include

#include

#include

using namespace std;

using namespace cv;

//---参数--------------------------------------------

#define NUM_OF_MODEL 3 //模板的个数

#define NUM_OF_MATRIX 50 //dtw算法中矩阵元素的个数

void drawSkeleton(Mat &image, CvPoint pointSet[]);

string int2str(int _intval)//c++不像java一样重载了+运算符,在字符串与int量加法运算时执行的是地址运算,所以需要自己将int转换为string

{

string time_str;

char c[10];

sprintf_s(c, "%d", _intval);

time_str = c;

return c;

}

class Histogram1D {

private:

int histSize[1];

int dims;

int minmax[2];

public:

Histogram1D()

{

histSize[0] = 240;

int dims(240);

minmax[0] = 0;

minmax[1] = 240;

}

void limitline(cv::Mat image,int &min,int &max, int minVal = 1)//获得柱状图的左右边界

{

for (;minmax[0] < histSize[0];minmax[0]++)

{

if (*(image.data + minmax[0]) > minVal)

break;

}

min = minmax[0];

for (;minmax[1] >= 0;minmax[1]--)

{

if (*(image.data + minmax[1]) > minVal)

break;

}

max = minmax[1];

}

Mat getHistogram(const cv::Mat &image)//将传入的image的每一行的像素数量提取出来保存到一个1x240的矩阵里

{

cv::Mat num;

num.create(1, histSize[0], CV_8SC1);

for (int i = 0;i < histSize[0];i++)

{

cv::Mat row = image.rowRange(i, i + 1);

int x = countNonZero(row);

*(num.data + i) = x;

}

return num;

}

cv::Mat getHistogramImage(cv::Mat image)//如果将一个240x320的二值图像传入,返回这个二值图像按行处理,显示每一行像素数量的条形图

{

Mat _num=getHistogram(image);

double min(0), max(0);

cv::minMaxLoc(_num, &min, &max);

cv::Mat histImg(histSize[0], histSize[0], CV_8U, cv::Scalar(255));

int hpt = static_cast<int>(0.9*histSize[0]);

for (int r = 0;r < histSize[0];r++)

{

int hval = *(_num.data + r);

int intensity = static_cast<int>(hval*hpt / max);

cv::line(histImg, cv::Point(r, histSize[0]), cv::Point(r, histSize[0] - intensity), cv::Scalar::all(0));

}

return histImg;

}

};

class RtwCal: public Histogram1D{

private:

Vector4 points[20];//保存的骨骼关节三维坐标暂时没有用到

Mat skeleton;

Mat modelskeleton[NUM_OF_MODEL];//保存模板用以比较

public:

RtwCal(NUI_SKELETON_FRAME skeletonFrame,int n)//在RtwCal类初始化的时候就读入本地保存好的模板

{

int counter = 0;

FileStorage fds("idx_file.xml", FileStorage::READ);//打开idx_file.xml,模板图像保存名为mat_id

if (!fds.isOpened()) cout << "file is not exist" << endl;

for (int i = 0;i < NUM_OF_MODEL;i++)

{

Mat temp;

fds["mat_id" + int2str(counter)] >> temp;

modelskeleton[i]=getHistogram(temp);

counter++;

}

fds.release();

skeleton.create(240, 320, CV_8UC1);

for (int i = 1;i < 20;i++)

{

points[i].x = skeletonFrame.SkeletonData[n].SkeletonPositions[i].x;

points[i].y = skeletonFrame.SkeletonData[n].SkeletonPositions[i].y;

points[i].z = skeletonFrame.SkeletonData[n].SkeletonPositions[i].z;

}

}

void histCal()

{

imshow("hist",getHistogramImage(skeleton));

waitKey(500);

}

int distance(Mat target,Mat model)//动态时间规划法,将target转换为保存有各行像素数量的1x240的矩阵后,与模板里的矩阵求邻接矩阵

{

Mat compare;

compare.create(NUM_OF_MATRIX, NUM_OF_MATRIX, CV_16U);//邻接矩阵行、列数量为NUM_OF_MATRIX

target = getHistogram(target);

int size1[2];

int size2[2];

limitline(target, size1[0], size1[1]);//获取矩阵内非0数据块的上下边界

limitline(model, size2[0], size2[1]);

CvPoint points1[NUM_OF_MATRIX];

CvPoint points2[NUM_OF_MATRIX];

for (int i = 0;i < NUM_OF_MATRIX;i++)//根据取到的上下边界,从240个数据里均匀抽取出来NUM_OF_MATRIX个点(i,j),表示第i行上有j个像素

{

points1[i] = cvPoint((int)(size1[0] + ((size1[1] - size1[0]) / NUM_OF_MATRIX * i)), target.data[(int)(size1[0] + ((size1[1] - size1[0]) / NUM_OF_MATRIX * i))]);

points2[i] = cvPoint((int)(size2[0] + ((size2[1] - size2[0]) / NUM_OF_MATRIX * i)), model.data[(int)(size2[0] + ((size2[1] - size2[0]) / NUM_OF_MATRIX * i))]);

}

for (int i = 0;i < NUM_OF_MATRIX;i++)//计算出来点points1[i]和点points2[j]的欧氏距离,并放入邻接矩阵中(i,j)处

{

for (int j = 0;j < NUM_OF_MATRIX;j++)

(compare.data + i)[j] = ((points1[i].x - points2[j].x)*(points1[i].x - points2[j].x) + (points1[i].y - points2[j].y)*(points1[i].y - points2[j].y));

}

int dist = *compare.data;

int in = 0;

int jn = 0;

for (;in < NUM_OF_MATRIX && jn < NUM_OF_MATRIX;)//选择出邻接矩阵的最优路径,并求出路径长度

{

int datamin = 0;

if ((compare.data + in + 1)[jn] <= (compare.data + in + 1)[jn + 1] <= (compare.data + in)[jn + 1] || (compare.data + in + 1)[jn] <= (compare.data + in)[jn + 1] <= (compare.data + in + 1)[jn + 1])

{

datamin = (compare.data + in + 1)[jn];

in++;

}

else if ((compare.data + in + 1)[jn + 1] <= (compare.data + in + 1)[jn] <= (compare.data + in)[jn + 1] || (compare.data + in + 1)[jn + 1] <= (compare.data + in)[jn + 1] <= (compare.data + in + 1)[jn])

{

datamin = (compare.data + in + 1)[jn + 1];

in++;

jn++;

}

else

{

datamin = (compare.data + in)[jn + 1];

jn++;

}

dist = dist + datamin;

}

return dist;//返回路径长度,作为目标图像与模板图像的特征距离

}

int classify(Mat image)

{

skeleton = image;

int disval[NUM_OF_MODEL] = { 0 };

for (int i = 0;i < NUM_OF_MODEL;i++)

{

disval[i]=distance(skeleton, modelskeleton[i]);//计算当前帧和第i个模板的距离

}

int findflag = 0;

for (int i = 0;i < NUM_OF_MODEL-1;i++)

{

if (disval[i] > disval[i + 1])

findflag = i + 1;

}

return findflag;

}

};

int main()

{

bool flag = FALSE;//读取到一帧以后设置为true,若为false则一直读取当前图像

HRESULT hr = NuiInitialize(NUI_INITIALIZE_FLAG_USES_SKELETON);

HANDLE skeletonEvent = CreateEvent(NULL, TRUE, FALSE, NULL);

hr = NuiSkeletonTrackingEnable(skeletonEvent, 0);

while (!flag)//当接收到一帧骨骼信号以后,处理当前帧并与模板对比识别,然后结束循环

{

NUI_SKELETON_FRAME skeletonFrame = { 0 };

bool bFoundSkeleton = FALSE;

if (WaitForSingleObject(skeletonEvent, INFINITE) == 0)

{

hr = NuiSkeletonGetNextFrame(0, &skeletonFrame);

for (int i = 0;i //kinect支持最大6人的玩家识别,以及最大两人的玩家骨骼追踪

{

NUI_SKELETON_TRACKING_STATE trackingState = skeletonFrame.SkeletonData[i].eTrackingState;

if (trackingState == NUI_SKELETON_TRACKED)

{

bFoundSkeleton = TRUE;

}

}

if (!bFoundSkeleton)

continue;

for (int i = 0; i < NUI_SKELETON_COUNT; i++)

{

if (skeletonFrame.SkeletonData[i].eTrackingState == NUI_SKELETON_TRACKED &&

skeletonFrame.SkeletonData[i].eSkeletonPositionTrackingState[NUI_SKELETON_POSITION_SHOULDER_CENTER] != NUI_SKELETON_POSITION_NOT_TRACKED)

{

RtwCal rtwcal(skeletonFrame, i);//对帧image进行模板匹配的类,使用了动态时间规划RTW

Mat image(240, 320, CV_8UC1,Scalar(0));//接下来kinect读取到的骨骼数据转换为深度空间图像后保存在image中

Vector4 cpoint[20] = { 0 };

CvPoint cvp[20];

for (int j = 0;j < 20;j++)//kinect一共获取20个关节的空间坐标

{

cpoint[j].x = skeletonFrame.SkeletonData[i].SkeletonPositions[j].x;

cpoint[j].y = skeletonFrame.SkeletonData[i].SkeletonPositions[j].y;

cpoint[j].z = skeletonFrame.SkeletonData[i].SkeletonPositions[j].z;

}

float fx, fy;

for (int i = 0;i < 20;i++)

{

NuiTransformSkeletonToDepthImage(cpoint[i], &fx, &fy);

cvp[i].x = (int)fx;

cvp[i].y = (int)fy;

}

drawSkeleton(image, cvp);//使用函数drawSkeleton将关节的点连成线

imshow("skeleton", image);

cout<<"this pose is "<image)+1<//将image传入classify中,返回匹配到的模板标号

waitKey(4000);

flag = TRUE;//设置标志,kinect不再读取下一帧

break;

}

}

}

}

NuiShutdown();

return 0;

}

void drawSkeleton(Mat &image, CvPoint pointSet[])

{

CvScalar color;

color = cvScalar(255);

if ((pointSet[NUI_SKELETON_POSITION_HEAD].x != 0 || pointSet[NUI_SKELETON_POSITION_HEAD].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_HEAD], pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SPINE].x != 0 || pointSet[NUI_SKELETON_POSITION_SPINE].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], pointSet[NUI_SKELETON_POSITION_SPINE], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SPINE].x != 0 || pointSet[NUI_SKELETON_POSITION_SPINE].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HIP_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_CENTER].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_SPINE], pointSet[NUI_SKELETON_POSITION_HIP_CENTER], color, 2);

//左上肢

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_SHOULDER_LEFT], pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_ELBOW_LEFT], pointSet[NUI_SKELETON_POSITION_WRIST_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HAND_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_HAND_LEFT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_WRIST_LEFT], pointSet[NUI_SKELETON_POSITION_HAND_LEFT], color, 2);

//右上肢

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_SHOULDER_CENTER], pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_SHOULDER_RIGHT], pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_ELBOW_RIGHT], pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HAND_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_HAND_RIGHT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_WRIST_RIGHT], pointSet[NUI_SKELETON_POSITION_HAND_RIGHT], color, 2);

//左下肢

if ((pointSet[NUI_SKELETON_POSITION_HIP_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HIP_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_LEFT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_HIP_CENTER], pointSet[NUI_SKELETON_POSITION_HIP_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_HIP_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_HIP_LEFT], pointSet[NUI_SKELETON_POSITION_KNEE_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_KNEE_LEFT], pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_FOOT_LEFT].x != 0 || pointSet[NUI_SKELETON_POSITION_FOOT_LEFT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_ANKLE_LEFT], pointSet[NUI_SKELETON_POSITION_FOOT_LEFT], color, 2);

//右下肢

if ((pointSet[NUI_SKELETON_POSITION_HIP_CENTER].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_CENTER].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_HIP_CENTER], pointSet[NUI_SKELETON_POSITION_HIP_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_HIP_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_HIP_RIGHT], pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_KNEE_RIGHT], pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT], color, 2);

if ((pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT].y != 0) &&

(pointSet[NUI_SKELETON_POSITION_FOOT_RIGHT].x != 0 || pointSet[NUI_SKELETON_POSITION_FOOT_RIGHT].y != 0))

line(image, pointSet[NUI_SKELETON_POSITION_ANKLE_RIGHT], pointSet[NUI_SKELETON_POSITION_FOOT_RIGHT], color, 2);

} kinect对帧的处理

kinect重复的从骨骼摄像头读取数据,当判断出追踪到人时,kinect的追踪标记会变为NUI_SKELETON_TRACKED,表示当前玩家骨骼已计算出。将骨骼三维坐标用NuiTransformSkeletonToDepthImage函数转换后,可以绘制出这样的骨骼图形:

条形图变换

获得二维的骨骼图形后,把该图中各行像素数量的提取出来,得到条形图。

根据动态时间规划法匹配

计算两个这样的条形图的相似度,运用了动态时间规划法。同时因为条形图起始和结尾一般会有0数据,所以还需要对条形图进行缩放。

2. 记录并保存模板到本地

//@这个程序就是随便写的专门用来采集模板动作的程序

#include 使用opencv的FileStorage类生成xml文件

值得一提的是如果直接用WRITE方式连续写三个数据进去,比如:

fs<<”mat_”<< image1<< image2<< image3;

那么读取的时候出现打开了xml文件却读不到任何数据的情况,所以我在外部先把xml用write方式打开,初始化掉,在循环里用append的方式追加写入。

写在后边

进入研究室和日本老师相談、老师问我会什么,做过什么。

我说我会日语和一些计算机的基础知识。

老师问你不是情報工学的吗?

我说是啊,可是前三年学日语比较多。

老师:困るなあ

我:……

一个月后

我去和老师meeting,老师让我報告这一个月的收获。

我特别自信的拿出写的这个程序,但是还不敢表露出来,

说,我先试着把代码写出来了

老师:诶?代码都写出来了?そりゃちょっと困るなあ

我:……

老师于是听我讲完了程序

老师:为什么要把三维的信息转化为二维呢?好不容易用上了kinect,真是……

我:……

老师:为什么要用条形图来匹配呢?用空间坐标计算距离不行吗?为什么这里……?为什么……?为什么……?

这个程序的思路老师也没有反对,但是却给我提出了一堆问题让我自己去改进,这样的教学模式对我而言始终挑战,但是确实有一个老师能一眼看出来你的不足之处是让我十分感激的。

根据老师的指导,我下一步尝试用三维信息识别一个人侧坐在镜头前的吃饭动作和写字动作。这两个动作只有在手肘以下才可以分别,因此要处理一系列连续的帧,并考虑使用向量或者角度来作为识别的特征量,但是也许匹配的算法可以在RTW算法上进一步改进