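[Multimedia Codecs] Android Video Parsing: MediaExtractor

Foreword:

Steps for learning Android multimedia:

1. Handling audio PCM and video YUV data, and the principles of container encapsulation and format conversion

2. The multimedia playback frameworks: NuPlayer and Stagefright

3. Audio/video demuxing: MediaExtractor

4. Audio encoding and decoding (using AAC as the example)

5. Video encoding and decoding (using H.264 as the example)

6. Audio/video synchronization

Before studying this part, you should be familiar with:

1. The concept of an audio/video container. Reference post:

http://blog.csdn.net/leixiaohua1020/article/details/17934487

2. The different video container format standards (an MP4 file is analyzed here). Reference post:

http://blog.csdn.net/chenchong_219/article/details/44263691

3. The OpenMAX IL framework

https://www.khronos.org/openmaxil

4. Tools for inspecting video files:

UltraEdit, a text editor

Elecard Video Format Analyzer, a video format analyzer that shows the description, offset, size and other details of every element of every box in a video file. Specific boxes can be used to look up the format information of the video.

=============The main text starts below====================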

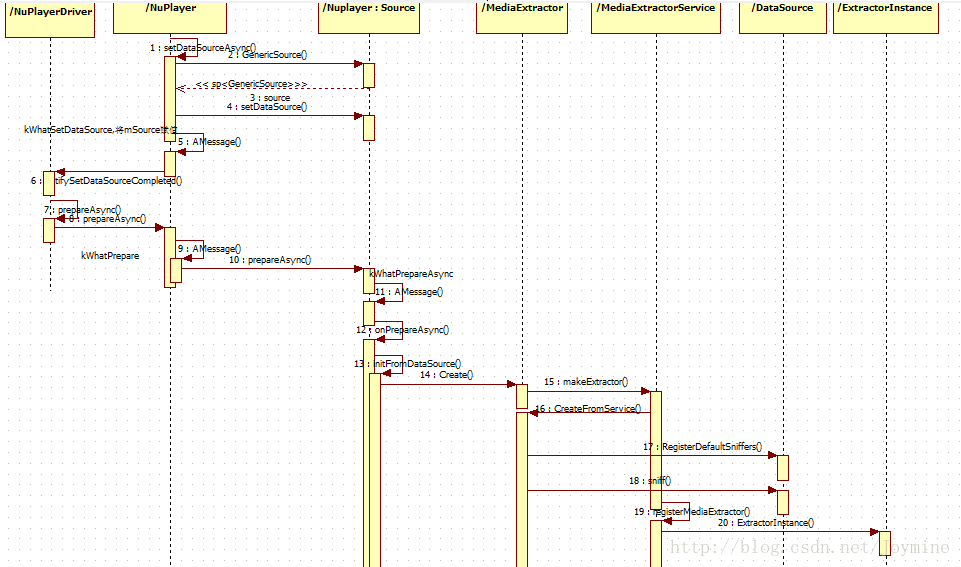

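Taking playback of a local video file as an example, the sequence diagram above shows the flow for creating a MediaExtractor. The numbered interactions below refer to that diagram: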

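Interaction 1: NuPlayer::setDataSourceAsync

Starting from MediaPlayer's setDataSource, the call that actually does the work is

setDataSourceAsync(int fd, int64_t offset, int64_t length); the parameters differ depending on the playback mode.

Its main work is:

Interactions 2~4: create a GenericSource and pass the received parameters to it through GenericSource::setDataSource.

Interaction 5: post the kWhatSetDataSource message to NuPlayer (an AHandler) for handling. The handler mainly

assigns the resulting NuPlayer::Source (the GenericSource) to NuPlayer::mSource, and

notifies NuPlayerDriver that setDataSource has completed, so the upper layer can issue the next command. See interaction 6: driver->notifySetDataSourceCompleted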

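Interaction 8: NuPlayer::prepareAsync

After being told that setting the data source has completed, the upper layer calls this function to issue the next command. Its main work is:

Interaction 9: post the kWhatPrepare message to NuPlayer (AHandler).

Interaction 10: on receiving the message, NuPlayer operates on mSource (which is also an AHandler). In this example that means calling NuPlayer::GenericSource::prepareAsync(), whose main work is:

Create an ALooper for the Source, used to loop over and dispatch incoming AMessages.

Post the kWhatPrepareAsync message to the Source (AHandler) to start asynchronous preparation.

- Interaction 12: on receiving the message, GenericSource::onPrepareAsync() is called. Its main work is:

Instantiate NuPlayer::GenericSource::mDataSource as a concrete DataSource subclass chosen by the conditions; in this example it is a FileSource.

From mDataSource, create a MediaExtractor in GenericSource::initFromDataSource. The detailed flow is interactions 13~20; it is tedious, but only 17, 18 and 20 need attention.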
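The "post a message, return immediately, do the work in the handler" pattern used by setDataSourceAsync and prepareAsync can be sketched as follows. This is only a single-threaded illustration: MiniPlayer, Message and loopOnce are hypothetical stand-ins for NuPlayer, AMessage and ALooper, not the real classes, and the real looper runs on its own thread.

```cpp
#include <cassert>
#include <deque>
#include <string>
#include <vector>

// Hypothetical message ids, loosely mirroring kWhatSetDataSource / kWhatPrepare.
enum What { kWhatSetDataSource, kWhatPrepare };

struct Message {
    What what;
    std::string payload;
};

class MiniPlayer {
public:
    // Like setDataSourceAsync(): only queues a message, then returns.
    void setDataSourceAsync(const std::string &path) {
        assert(!path.empty());
        mQueue.push_back({kWhatSetDataSource, path});
    }

    // Like prepareAsync(): again only queues a message.
    void prepareAsync() { mQueue.push_back({kWhatPrepare, ""}); }

    // One looper iteration: deliver the oldest pending message, if any.
    bool loopOnce() {
        if (mQueue.empty()) return false;
        Message msg = mQueue.front();
        mQueue.pop_front();
        onMessageReceived(msg);
        return true;
    }

    std::string source;               // plays the role of mSource
    std::vector<std::string> events;  // notifications sent up to the driver

private:
    void onMessageReceived(const Message &msg) {
        switch (msg.what) {
        case kWhatSetDataSource:
            source = msg.payload;
            events.push_back("setDataSourceCompleted");
            break;
        case kWhatPrepare:
            events.push_back(source.empty() ? "prepareFailed" : "prepared");
            break;
        }
    }

    std::deque<Message> mQueue;
};
```

The point of the sketch is the ordering: the caller only learns that a step has finished through the notification, which is why the upper layer waits for notifySetDataSourceCompleted before calling prepareAsync.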

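Interactions 13~17: this is the key part

Interaction 13: GenericSource::initFromDataSource

The other important work of this function is analyzed in detail later:

1. Create the matching MediaExtractor based on the sniff result; creation also reads the data, builds the metaData, and parses and separates the tracks

2. From the tracks, initialize mVideoTrack and mAudioTrack and add them to mSources

3. Read from the metaData:

kKeyDuration

kKeyBitRate

Interaction 16: sp<MediaExtractor> MediaExtractor::CreateFromService

Its main work is to go through all registered extractor sniffers, let each one read the file header, decide from the results which extractor to use, and initialize the MIME type. Details follow:

Interaction 17: DataSource::RegisterDefaultSniffers()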

// The sniffer can optionally fill in "meta" with an AMessage containing

// a dictionary of values that helps the corresponding extractor initialize

// its state without duplicating effort already exerted by the sniffer.

typedef bool (*SnifferFunc)(

const sp<DataSource> &source, String8 *mimeType,

float *confidence, sp<AMessage> *meta);

// static

void DataSource::RegisterSniffer_l(SnifferFunc func) {

for (List<SnifferFunc>::iterator it = gSniffers.begin();

it != gSniffers.end(); ++it) {

if (*it == func) {

return;

}

}

gSniffers.push_back(func);

}

// static

void DataSource::RegisterDefaultSniffers() {

Mutex::Autolock autoLock(gSnifferMutex);

if (gSniffersRegistered) {

return;

}

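/* In effect this registers the sniff functions of all extractors and stores them in DataSource::gSniffers (a List<SnifferFunc>).

So a custom MediaExtractor subclass must also provide such a sniff function; what that function does is analyzed below.

*/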

RegisterSniffer_l(SniffMPEG4);

RegisterSniffer_l(SniffMatroska);

RegisterSniffer_l(SniffOgg);

RegisterSniffer_l(SniffWAV);

RegisterSniffer_l(SniffFLAC);

RegisterSniffer_l(SniffAMR);

RegisterSniffer_l(SniffMPEG2TS);

RegisterSniffer_l(SniffMP3);

RegisterSniffer_l(SniffAAC);

RegisterSniffer_l(SniffMPEG2PS);

if (getuid() == AID_MEDIA) {

// WVM only in the media server process

RegisterSniffer_l(SniffWVM);

}

RegisterSniffer_l(SniffMidi);

//RegisterSniffer_l(AVUtils::get()->getExtendedSniffer());

char value[PROPERTY_VALUE_MAX];

if (property_get("drm.service.enabled", value, NULL)

&& (!strcmp(value, "1") || !strcasecmp(value, "true"))) {

RegisterSniffer_l(SniffDRM);

}

gSniffersRegistered = true;

}

- Interaction 18: DataSource::sniff

Its main job is to walk DataSource::gSniffers, run each extractor's SniffXXX function in order, and assign mimeType, confidence and meta.

bool DataSource::sniff(

String8 *mimeType, float *confidence, sp<AMessage> *meta) {

*mimeType = "";

*confidence = 0.0f;

meta->clear();

int count =0;

{

Mutex::Autolock autoLock(gSnifferMutex);

if (!gSniffersRegistered) {

return false;

}

}

for (List<SnifferFunc>::iterator it = gSniffers.begin();

it != gSniffers.end(); ++it) { // walk DataSource::gSniffers

String8 newMimeType;

float newConfidence;

sp<AMessage> newMeta;

if ((*it)(this, &newMimeType, &newConfidence, &newMeta)) {

// Run every registered SniffXXX function; among those that return true, keep the result with the highest confidence and return it

if (newConfidence > *confidence) {

*mimeType = newMimeType;

*confidence = newConfidence;

*meta = newMeta;

}

}

count++;

}

return *confidence > 0.0;

}
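The register-then-pick-the-highest-confidence logic above can be tried out in isolation with a small sketch. It assumes a simplified sniffer signature that takes the file header as a string (the real SnifferFunc takes a DataSource and also fills an AMessage); SnifferRegistry, sniffAnything and the confidence values are illustrative names and numbers, not AOSP code.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Simplified sniffer signature (assumption: header bytes passed as a string).
using Sniffer = bool (*)(const std::string &header, std::string *mime, float *confidence);

// Matches an ISO BMFF file whose first box is 'ftyp'.
bool sniffMp4(const std::string &h, std::string *mime, float *confidence) {
    if (h.size() < 8 || h.compare(4, 4, "ftyp") != 0) return false;
    *mime = "video/mp4";
    *confidence = 0.4f;
    return true;
}

// Hypothetical low-confidence fallback that accepts any non-trivial header.
bool sniffAnything(const std::string &h, std::string *mime, float *confidence) {
    if (h.size() < 4) return false;
    *mime = "application/octet-stream";
    *confidence = 0.1f;
    return true;
}

class SnifferRegistry {
public:
    // Like RegisterSniffer_l(): ignore a function that is already registered.
    void registerSniffer(Sniffer func) {
        assert(func != nullptr);
        for (Sniffer s : mSniffers) {
            if (s == func) return;
        }
        mSniffers.push_back(func);
    }

    // Like DataSource::sniff(): run every sniffer, keep the best match.
    bool sniff(const std::string &header, std::string *mime, float *confidence) const {
        mime->clear();
        *confidence = 0.0f;
        for (Sniffer s : mSniffers) {
            std::string newMime;
            float newConfidence = 0.0f;
            if (s(header, &newMime, &newConfidence) && newConfidence > *confidence) {
                *mime = newMime;
                *confidence = newConfidence;
            }
        }
        return *confidence > 0.0f;
    }

    size_t size() const { return mSniffers.size(); }

private:
    std::vector<Sniffer> mSniffers;
};
```

Because the comparison is strictly greater-than, when two sniffers report the same confidence the one registered first wins, so registration order matters for ties.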

What does a SniffXXX function actually do? Take SniffMPEG4 as an example; the work is done in BetterSniffMPEG4, which SniffMPEG4 calls:

// Attempt to actually parse the 'ftyp' atom and determine if a suitable

// compatible brand is present.

// Also try to identify where this file's metadata ends

// (end of the 'moov' atom) and report it to the caller as part of

// the metadata.

static bool BetterSniffMPEG4(

const sp<DataSource> &source, String8 *mimeType, float *confidence,

sp<AMessage> *meta) {

// We scan up to 128 bytes to identify this file as an MP4.

static const off64_t kMaxScanOffset = 128ll;

off64_t offset = 0ll;

bool foundGoodFileType = false;

off64_t moovAtomEndOffset = -1ll;

bool done = false;

ALOGE("%s:begin>>>>>>>>>>>>",__FUNCTION__);

while (!done && offset < kMaxScanOffset) {

uint32_t hdr[2];

if (source->readAt(offset, hdr, 8) < 8) {

return false;

}

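// size == 1 means a 64-bit largesize field follows the type (in practice this is mostly seen on the "mdat" box)

// size == 0 means the box extends to the end of the file, i.e. it is the last box

uint64_t chunkSize = ntohl(hdr[0]); // network (big-endian) byte order to host byte order

uint32_t chunkType = ntohl(hdr[1]); // box type

off64_t chunkDataOffset = offset + 8; // start offset of the box data

if (chunkSize == 1) { // size == 1: a 64-bit largesize follows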

if (source->readAt(offset + 8, &chunkSize, 8) < 8) {

return false;

}

chunkSize = ntoh64(chunkSize);

chunkDataOffset += 8; // skip the 8-byte largesize field

if (chunkSize < 16) {

// The smallest valid chunk is 16 bytes long in this case.

return false;

}

} else if (chunkSize < 8) {

// The smallest valid chunk is 8 bytes long.

return false;

}

// (data_offset - offset) is either 8 or 16

off64_t chunkDataSize = chunkSize - (chunkDataOffset - offset); // size of the box data

if (chunkDataSize < 0) {

ALOGE("b/23540914");

return false; // this function returns bool; ERROR_MALFORMED is non-zero and would convert to true

}

char chunkstring[5];

MakeFourCCString(chunkType, chunkstring);

ALOGV("saw chunk type %s, size %" PRIu64 " @ %lld", chunkstring, chunkSize, (long long)offset);

switch (chunkType) {

case FOURCC('f', 't', 'y', 'p'):

{

if (chunkDataSize < 8) { // too small to hold major_brand and minor_version (4 bytes each)

return false;

}

uint32_t numCompatibleBrands = (chunkDataSize - 8) / 4; // number of 4-byte compatible_brands entries

for (size_t i = 0; i < numCompatibleBrands + 2; ++i) {

if (i == 1) {

// Skip this index, it refers to the minorVersion,

// not a brand.

continue;

}

uint32_t brand;

if (source->readAt(

chunkDataOffset + 4 * i, &brand, 4) < 4) {

return false;

}

brand = ntohl(brand);

if (isCompatibleBrand(brand)) {

foundGoodFileType = true;

break;

}

}

if (!foundGoodFileType) {

return false;

}

break;

}

case FOURCC('m', 'o', 'o', 'v'):

{

moovAtomEndOffset = offset + chunkSize;

done = true;

break;

}

default:

break;

}

offset += chunkSize;

}

//ALOGE("%s:END<<<<<<<<<");

//

if (!foundGoodFileType) {

return false;

}

*mimeType = MEDIA_MIMETYPE_CONTAINER_MPEG4;

*confidence = 0.4f;

if (moovAtomEndOffset >= 0) {

*meta = new AMessage;

(*meta)->setInt64("meta-data-size", moovAtomEndOffset);

ALOGV("found metadata size: %lld", (long long)moovAtomEndOffset);

}

ALOGE("%s:END *mimeType(%s),*confidence(%.2f)<<<<<<<<<",__FUNCTION__,mimeType->string(),*confidence);

return true;

}
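To make the box-header arithmetic easier to follow, here is a minimal sketch that parses the same fields from an in-memory buffer instead of a DataSource. BoxHeader, readBigEndian, readBoxHeader and ftypBrands are names invented for this illustration; like the sniffer above, it rejects size 0 and treats size 1 as "a 64-bit largesize follows".

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

struct BoxHeader {
    uint64_t size = 0;      // total box size in bytes, header included
    std::string type;       // four-character code, e.g. "ftyp"
    size_t dataOffset = 0;  // absolute offset of the box payload
};

// Reads an n-byte big-endian unsigned integer starting at buf[off].
uint64_t readBigEndian(const std::vector<uint8_t> &buf, size_t off, size_t n) {
    assert(off + n <= buf.size());
    uint64_t value = 0;
    for (size_t i = 0; i < n; ++i) {
        value = (value << 8) | buf[off + i];
    }
    return value;
}

// Parses the box header at `offset`; returns false if truncated or malformed.
bool readBoxHeader(const std::vector<uint8_t> &buf, size_t offset, BoxHeader *out) {
    if (offset > buf.size() || buf.size() - offset < 8) return false;
    uint64_t size = readBigEndian(buf, offset, 4);
    size_t headerLen = 8;
    if (size == 1) {  // 64-bit largesize follows the type field
        if (buf.size() - offset < 16) return false;
        size = readBigEndian(buf, offset + 8, 8);
        headerLen = 16;
    }
    if (size < headerLen) return false;  // smaller than its own header
    out->size = size;
    out->type.assign(buf.begin() + offset + 4, buf.begin() + offset + 8);
    out->dataOffset = offset + headerLen;
    return true;
}

// Returns major_brand followed by the compatible brands of an 'ftyp' box
// located at offset 0, or an empty list if the buffer does not start with one.
std::vector<std::string> ftypBrands(const std::vector<uint8_t> &buf) {
    std::vector<std::string> brands;
    BoxHeader h;
    if (!readBoxHeader(buf, 0, &h) || h.type != "ftyp" || h.size > buf.size()) {
        return brands;
    }
    size_t dataSize = static_cast<size_t>(h.size) - h.dataOffset;
    if (dataSize < 8) return brands;  // no room for major_brand + minor_version
    for (size_t pos = 0; pos + 4 <= dataSize; pos += 4) {
        if (pos == 4) continue;  // bytes 4..7 are minor_version, not a brand
        auto first = buf.begin() + h.dataOffset + pos;
        brands.emplace_back(first, first + 4);
    }
    return brands;
}
```

A real sniffer would then check each returned brand against its list of supported brands, which is what isCompatibleBrand does in the code above.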

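The sniff functions of the other extractors do essentially the same thing: they check the file's boxes (or headers) against the extractor's own format. On a match, the function returns true and fills in its output parameters. DataSource::sniff uses these to pick the MIME type of the best-matching MediaExtractor, and CreateFromService uses that MIME type to decide which MediaExtractor to instantiate. In this example the result is MPEG4Extractor.

- Interaction 19: registerMediaExtractor

Registers the newly created MPEG4Extractor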

void registerMediaExtractor(

const sp<IMediaExtractor> &extractor,

const sp<DataSource> &source,

const char *mime) {

ExtractorInstance ex;

ex.mime = mime == NULL ? "NULL" : mime;

ex.name = extractor->name();

ex.sourceDescription = source->toString();

ex.owner = IPCThreadState::self()->getCallingPid();

ex.extractor = extractor;

{

Mutex::Autolock lock(sExtractorsLock);

if (sExtractors.size() > 10) {

sExtractors.resize(10);

}

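sExtractors.push_front(ex); // store the new MediaExtractor in static Vector<ExtractorInstance> sExtractors

ALOGE("ex.mime(%s),ex.sourceDescription(%s)",(ex.mime).string(),(ex.sourceDescription).string()); // a very useful log: ex.sourceDescription shows how the source is wrapped, which is important debugging information

}

}

The walkthrough of the sequence diagram above roughly covers how the MediaExtractor is created. So how does the separation of audio and video actually happen?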

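GenericSource::initFromDataSource

1. Create the matching MediaExtractor based on the sniff result; creation also reads the data, builds the metaData, and parses and separates the tracks

2. From the tracks, initialize mVideoTrack and mAudioTrack and add them to mSources

Creating the extractor has already been analyzed above. So how is the separation done?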

status_t NuPlayer::GenericSource::initFromDataSource() {

sp<IMediaExtractor> extractor;

String8 mimeType;

float confidence;

sp<AMessage> dummy;

bool isWidevineStreaming = false;

CHECK(mDataSource != NULL);

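// 1. Create the extractor

extractor = MediaExtractor::Create(mDataSource,

mimeType.isEmpty() ? NULL : mimeType.string(),

mIsStreaming ? 0 : AVNuUtils::get()->getFlags());

if (extractor == NULL) { // no extractor could be created for this data source

return UNKNOWN_ERROR;

}

// 2. Get the file-level metaData, mainly to check whether kKeyDuration has been set

mFileMeta = extractor->getMetaData();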

int32_t totalBitrate = 0;

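// 3. Count the tracks in the file. This really means reading the boxes in the file; the layout differs per container standard. For MPEG4Extractor, see how MPEG4Extractor::readMetaData() walks the file.

size_t numtracks = extractor->countTracks();

// 4. Walk the tracks in the file and fill in mVideoTrack and mAudioTrack

for (size_t i = 0; i < numtracks; ++i) {

// 4.1 Get the track by index. Prototype: sp<IMediaSource> MPEG4Extractor::getTrack(size_t index); it returns an MPEG4Source that wraps the track

sp<IMediaSource> track = extractor->getTrack(i);

// 4.2 Again by reading the file, the track's fields are filled in and returned wrapped in a MetaData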

sp<MetaData> meta = extractor->getTrackMetaData(i);

const char *mime;

CHECK(meta->findCString(kKeyMIMEType, &mime));

// Do the string compare immediately with "mime",

// we can't assume "mime" would stay valid after another

// extractor operation, some extractors might modify meta

// during getTrack() and make it invalid.

// 4.3 Check the track's MIME type to decide whether it is audio or video. Each track has its own MetaData, and the file as a whole has one MetaData

if (!strncasecmp(mime, "audio/", 6)) {

if (mAudioTrack.mSource == NULL) {

mAudioTrack.mIndex = i;

mAudioTrack.mSource = track;

mAudioTrack.mPackets =

new AnotherPacketSource(mAudioTrack.mSource->getFormat());

if (!strcasecmp(mime, MEDIA_MIMETYPE_AUDIO_VORBIS)) {

mAudioIsVorbis = true;

} else {

mAudioIsVorbis = false;

}

if (AVNuUtils::get()->isByteStreamModeEnabled(meta)) {

mIsByteMode = true;

}

}

} else if (!strncasecmp(mime, "video/", 6)) {

if (mVideoTrack.mSource == NULL) {

mVideoTrack.mIndex = i;

mVideoTrack.mSource = track;

mVideoTrack.mPackets =

new AnotherPacketSource(mVideoTrack.mSource->getFormat());

// check if the source requires secure buffers

int32_t secure;

if (meta->findInt32(kKeyRequiresSecureBuffers, &secure)

&& secure) {

mIsSecure = true;

if (mUIDValid) {

extractor->setUID(mUID);

}

}

}

}

// 4.4 Once initialized, add the track to mSources

mSources.push(track);

int64_t durationUs;

if (meta->findInt64(kKeyDuration, &durationUs)) {

if (durationUs > mDurationUs) {

mDurationUs = durationUs;

}

}

int32_t bitrate;

if (totalBitrate >= 0 && meta->findInt32(kKeyBitRate, &bitrate)) {

totalBitrate += bitrate;

} else {

totalBitrate = -1;

}

}

if (mSources.size() == 0) {

ALOGE("b/23705695");

return UNKNOWN_ERROR;

}

mBitrate = totalBitrate;

ALOGE("%s: END",__FUNCTION__);

return OK;

}

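How the separation is actually done depends on the container format handled by the concrete MediaExtractor. The overall flow is always the same; only the implementation differs, because it follows the parsing rules of the specific format standard.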
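The selection rules inside the loop of initFromDataSource (first audio track, first video track, longest duration, summed bitrate or -1 if any track lacks one) can be isolated into a small sketch. TrackInfo, Selection and selectTracks are hypothetical names used only here; a negative bitrate stands for "kKeyBitRate not present".

```cpp
#include <cassert>
#include <cctype>
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

struct TrackInfo {
    std::string mime;    // e.g. "video/avc"
    int64_t durationUs;  // track duration in microseconds
    int32_t bitrate;     // bits per second; negative means "unknown"
};

struct Selection {
    int audioIndex = -1;       // index of the first audio track, -1 if none
    int videoIndex = -1;       // index of the first video track, -1 if none
    int64_t durationUs = 0;    // longest duration over all tracks
    int32_t totalBitrate = 0;  // sum of bitrates, -1 if any is unknown
};

// Case-insensitive prefix test, playing the role of strncasecmp(mime, "audio/", 6).
bool hasPrefixNoCase(const std::string &s, const std::string &prefix) {
    if (s.size() < prefix.size()) return false;
    for (size_t i = 0; i < prefix.size(); ++i) {
        if (std::tolower(static_cast<unsigned char>(s[i])) !=
            std::tolower(static_cast<unsigned char>(prefix[i]))) {
            return false;
        }
    }
    return true;
}

Selection selectTracks(const std::vector<TrackInfo> &tracks) {
    Selection sel;
    for (size_t i = 0; i < tracks.size(); ++i) {
        const TrackInfo &t = tracks[i];
        if (hasPrefixNoCase(t.mime, "audio/")) {
            if (sel.audioIndex < 0) sel.audioIndex = static_cast<int>(i);
        } else if (hasPrefixNoCase(t.mime, "video/")) {
            if (sel.videoIndex < 0) sel.videoIndex = static_cast<int>(i);
        }
        if (t.durationUs > sel.durationUs) sel.durationUs = t.durationUs;
        // One missing bitrate makes the total unknown for good.
        if (sel.totalBitrate >= 0 && t.bitrate >= 0) {
            sel.totalBitrate += t.bitrate;
        } else {
            sel.totalBitrate = -1;
        }
    }
    assert(sel.audioIndex != sel.videoIndex || sel.audioIndex == -1);
    return sel;
}
```

Note that only the first track of each kind becomes the active one, while every track still ends up in mSources in the real code, which is what makes later track switching possible.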

Notes on the various Source classes involved in the analysis above

About DataSource and MediaSource

sp<IMediaExtractor> MediaExtractor::Create(

const sp<DataSource> &source, const char *mime,

const uint32_t flags)

|——sp<IMediaExtractor> MediaExtractorService::makeExtractor(

const sp<IDataSource> &remoteSource, const char *mime,

const uint32_t extFlags) // RemoteDataSource::wrap converts the DataSource into an IDataSource here

|——DataSource::CreateFromIDataSource(const sp<IDataSource> &source)

|——sp<MediaExtractor> MediaExtractor::CreateFromService(

const sp<DataSource> &source, const char *mime,

const uint32_t flags)

|——new MPEG4Extractor(source);

// static

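sp<IMediaExtractor> MediaExtractor::Create(

const sp<DataSource> &source, const char *mime,

const uint32_t flags) {

// remote extractor

// binder is the IBinder of the media extractor service; its lookup is omitted in this excerpt

sp<IMediaExtractorService> mediaExService(interface_cast<IMediaExtractorService>(binder));

sp<IMediaExtractor> ex = mediaExService->makeExtractor(RemoteDataSource::wrap(source), mime, flags); // the DataSource is wrapped as an IDataSource

return ex;

}

// Wrap a DataSource in a subclass of IDataSource

sp<IDataSource> RemoteDataSource::wrap(const sp<DataSource> &source) {

return new RemoteDataSource(source);

}

// Wrap an IDataSource back into a DataSource

sp<DataSource> DataSource::CreateFromIDataSource(const sp<IDataSource> &source) {

return new TinyCacheSource(new CallbackDataSource(source));

}

The source wrapping description printed from MediaExtractor:

ex.sourceDescription(TinyCacheSource(CallbackDataSource(RemoteDataSource(FileSource(Success.mp3)))))

From these layers of wrapping we can see that: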

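1. A concrete extractor such as MPEG4Extractor operates on a DataSource,

and that DataSource in turn calls into an IDataSource.

2. A DataSource can be understood as a description of the media file (for example FileSource).

An IDataSource can be understood as a description of the mapping between a DataSource and IMemory.

3. GenericSource (a subclass of NuPlayer::Source) operates on IMediaSource to read data from the file.

4. A subclass of IMediaSource corresponds to a wrapped track box ("trak") of the audio/video file. A concrete MediaExtractor has to implement an IMediaSource that wraps the tracks parsed out of the file, for example: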

class MPEG4Source : public MediaSource
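The nested description printed in ex.sourceDescription is a direct result of each wrapper delegating toString() to the source it wraps. A minimal sketch of that decorator chain, using hypothetical stand-in classes (Source, FileSource, Wrapper) rather than the real DataSource hierarchy, looks like this:

```cpp
#include <cassert>
#include <memory>
#include <string>
#include <utility>

// Hypothetical stand-in for the DataSource / IDataSource interfaces.
struct Source {
    virtual ~Source() = default;
    virtual std::string toString() const = 0;
};

// Innermost layer: describes the actual file.
struct FileSource : Source {
    explicit FileSource(std::string path) : mPath(std::move(path)) {}
    std::string toString() const override { return "FileSource(" + mPath + ")"; }
    std::string mPath;
};

// Generic decorator: prints its own name around the wrapped source.
struct Wrapper : Source {
    Wrapper(std::string name, std::shared_ptr<Source> inner)
        : mName(std::move(name)), mInner(std::move(inner)) {
        assert(mInner != nullptr);
    }
    std::string toString() const override {
        return mName + "(" + mInner->toString() + ")";
    }
    std::string mName;
    std::shared_ptr<Source> mInner;
};

// Builds the same wrapping order as the log line above and describes it.
std::string describeChain(const std::string &path) {
    std::shared_ptr<Source> s = std::make_shared<FileSource>(path);
    s = std::make_shared<Wrapper>("RemoteDataSource", s);    // crosses the binder boundary
    s = std::make_shared<Wrapper>("CallbackDataSource", s);  // IDataSource back to DataSource
    s = std::make_shared<Wrapper>("TinyCacheSource", s);     // small read cache on top
    return s->toString();
}
```

Reading the printed string from the inside out therefore gives the order in which the wrappers were applied.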

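To write a new MediaExtractor you need to:

1. Implement a MediaSource subclass that parses the file and describes every track of the specific container format; parsing starts as soon as it is instantiated.

2. Implement a DataSource subclass, such as MPEG4DataSource, which is essentially a wrapper and adapter around the DataSource that is passed in: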

// This custom data source wraps an existing one and satisfies requests

// falling entirely within a cached range from the cache while forwarding

// all remaining requests to the wrapped datasource.

// This is used to cache the full sampletable metadata for a single track,

// possibly wrapping multiple times to cover all tracks, i.e.

// Each MPEG4DataSource caches the sampletable metadata for a single track.

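3. Implement a sniffXXX function and register it with DataSource; it reads format-specific information from the file to decide whether the file can be handled by this custom MediaExtractor.

4. Implement a MediaExtractor subclass and its required functions, which provide NuPlayer with the metadata of the audio and video tracks.

5. Implement the box-parsing logic according to the container format's standard (one of the jobs of the MediaSource).

Related interface files

MediaSource.h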

namespace android {

class MediaBuffer;

class MetaData;

struct MediaSource : public BnMediaSource {

MediaSource();

// To be called before any other methods on this object, except

// getFormat().

virtual status_t start(MetaData *params = NULL) = 0;

// Any blocking read call returns immediately with a result of NO_INIT.

// It is an error to call any methods other than start after this call

// returns. Any buffers the object may be holding onto at the time of

// the stop() call are released.

// Also, it is imperative that any buffers output by this object and

// held onto by callers be released before a call to stop() !!!

virtual status_t stop() = 0;

// Returns the format of the data output by this media source.

virtual sp<MetaData> getFormat() = 0;

// Returns a new buffer of data. Call blocks until a

// buffer is available, an error is encountered or the end of the stream

// is reached.

// End of stream is signalled by a result of ERROR_END_OF_STREAM.

// A result of INFO_FORMAT_CHANGED indicates that the format of this

// MediaSource has changed mid-stream, the client can continue reading

// but should be prepared for buffers of the new configuration.

virtual status_t read(

MediaBuffer **buffer, const ReadOptions *options = NULL) = 0;

// Causes this source to suspend pulling data from its upstream source

// until a subsequent read-with-seek. This is currently not supported

// as such by any source. E.g. MediaCodecSource does not suspend its

// upstream source, and instead discard upstream data while paused.

virtual status_t pause() {

return ERROR_UNSUPPORTED;

}

// The consumer of this media source requests that the given buffers

// are to be returned exclusively in response to read calls.

// This will be called after a successful start() and before the

// first read() call.

// Callee assumes ownership of the buffers if no error is returned.

virtual status_t setBuffers(const Vector<MediaBuffer *> & /* buffers */) {

return ERROR_UNSUPPORTED;

}

protected:

virtual ~MediaSource();

private:

MediaSource(const MediaSource &);

MediaSource &operator=(const MediaSource &);

};

} // namespace android
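The calling contract described in the comments of MediaSource.h (start first, read until end of stream, then stop) can be sketched with a fake source. FakeTrackSource, Status and drain are hypothetical names for this illustration; the real interface returns status_t codes such as ERROR_END_OF_STREAM and hands out MediaBuffer objects instead of strings.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <utility>
#include <vector>

// Hypothetical status codes standing in for OK, ERROR_END_OF_STREAM and NO_INIT.
enum class Status { Ok, EndOfStream, NoInit };

class FakeTrackSource {
public:
    explicit FakeTrackSource(std::vector<std::string> samples)
        : mSamples(std::move(samples)) {}

    Status start() {
        mStarted = true;
        mNext = 0;
        return Status::Ok;
    }

    Status stop() {
        mStarted = false;
        return Status::Ok;
    }

    // Returns the next sample, or EndOfStream once all samples were delivered.
    Status read(std::string *buffer) {
        assert(buffer != nullptr);
        if (!mStarted) return Status::NoInit;
        if (mNext >= mSamples.size()) return Status::EndOfStream;
        *buffer = mSamples[mNext++];
        return Status::Ok;
    }

private:
    std::vector<std::string> mSamples;
    size_t mNext = 0;
    bool mStarted = false;
};

// Reads a track to the end, following the start / read / stop order.
std::vector<std::string> drain(FakeTrackSource &src) {
    std::vector<std::string> out;
    if (src.start() != Status::Ok) return out;
    std::string buf;
    while (src.read(&buf) == Status::Ok) {
        out.push_back(buf);
    }
    src.stop();
    return out;
}
```

In the real framework the consumer must also release every buffer it received before calling stop(), as the header comment insists; copying into strings sidesteps that here.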

MediaExtractor.h

namespace android {

class DataSource;

class MediaSource;

class MetaData;

class MediaExtractor : public BnMediaExtractor {

public:

static sp<IMediaExtractor> Create(

const sp<DataSource> &source, const char *mime = NULL,

const uint32_t flags = 0);

static sp<MediaExtractor> CreateFromService(

const sp<DataSource> &source, const char *mime = NULL,

const uint32_t flags = 0);

virtual size_t countTracks() = 0;

virtual sp<IMediaSource> getTrack(size_t index) = 0;

enum GetTrackMetaDataFlags {

kIncludeExtensiveMetaData = 1

};

virtual sp<MetaData> getTrackMetaData(

size_t index, uint32_t flags = 0) = 0;

// Return container specific meta-data. The default implementation

// returns an empty metadata object.

virtual sp<MetaData> getMetaData();

enum Flags {

CAN_SEEK_BACKWARD = 1, // the "seek 10secs back button"

CAN_SEEK_FORWARD = 2, // the "seek 10secs forward button"

CAN_PAUSE = 4,

CAN_SEEK = 8, // the "seek bar"

};

// If subclasses do _not_ override this, the default is

// CAN_SEEK_BACKWARD | CAN_SEEK_FORWARD | CAN_SEEK | CAN_PAUSE

virtual uint32_t flags() const;

// for DRM

void setDrmFlag(bool flag) {

mIsDrm = flag;

};

bool getDrmFlag() {

return mIsDrm;

}

virtual char* getDrmTrackInfo(size_t trackID, int *len) {

return NULL;

}

virtual void setUID(uid_t uid) {

}

virtual const char * name() { return "" ; }

virtual void setExtraFlags(uint32_t flags) {}

protected:

MediaExtractor();

virtual ~MediaExtractor() {}

private:

bool mIsDrm;

MediaExtractor(const MediaExtractor &);

MediaExtractor &operator=(const MediaExtractor &);

};

} // namespace android
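The Flags enum in MediaExtractor.h is a plain bitmask, so capabilities are combined with | and tested with &. A short sketch, in which kDefaultFlags, canSeek and liveStreamFlags are illustrative helpers rather than AOSP functions:

```cpp
#include <cassert>
#include <cstdint>

// Same values as MediaExtractor::Flags in the header above.
enum Flags : uint32_t {
    CAN_SEEK_BACKWARD = 1,
    CAN_SEEK_FORWARD = 2,
    CAN_PAUSE = 4,
    CAN_SEEK = 8,
};

// The default documented in the header comment for flags().
constexpr uint32_t kDefaultFlags =
    CAN_SEEK_BACKWARD | CAN_SEEK_FORWARD | CAN_SEEK | CAN_PAUSE;

// True when the seek bar may be used.
bool canSeek(uint32_t flags) {
    return (flags & CAN_SEEK) != 0;
}

// Hypothetical override for a source that can only be paused, never seeked.
uint32_t liveStreamFlags() {
    uint32_t flags = CAN_PAUSE;
    assert((flags & ~kDefaultFlags) == 0);  // only known bits are set
    return flags;
}
```

An extractor that cannot seek would override flags() to return a reduced mask like this, and the player can hide its seek controls accordingly.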