InnoDB Cluster

文章目录

- 简介

- 部署InnoDB Cluster

- 要求与限制

- 沙箱部署(单机多实例部署)

- 规划

- 安装MySQL

- 安装MySQL Shell

- 创建mysql实例

- 创建沙箱InnoDB Cluster集群

- 持久化配置

- 查看集群状态

- 部署MySQL Router

- 生产环境部署(多机部署)

- 规划

- 配置主机名

- 安装MySQL

- 安装MySQL Shell

- 部署InnoDB Cluster 集群

- 配置MySQL Router

- 组复制切InnoDB Cluster

- InnoDB Cluster日常管理

- 检查InnoDB Cluster 状态

- 查看InnoDB Cluster集群结构

- 沙箱实例启停

- 集群中移除/添加某实例

- 自定义集群

- 重新加入群集

- 重建仲裁

- 重新扫描集群

- 检查单个实例状态

- 切换主节点

- 切换单主/多主集群

- 参考文档

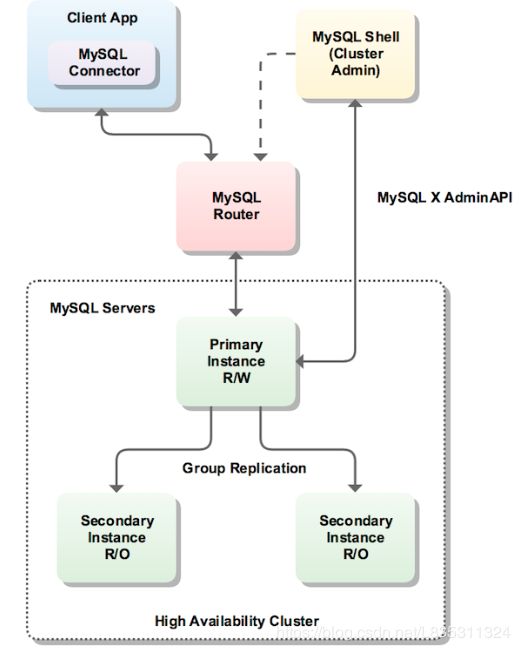

简介

InnoDB Cluster为MySQL提供了完整的高可用解决方案。MySQL Shell包含AdminAPI,可以轻松配置和管理一组至少三个MySQL服务器实例。每个MySQL服务器实例都运行MySQL Group Replication,它提供了在InnoDB Cluster内复制数据的机制,具有内置故障转移功能。AdminAPI无需在InnoDB Cluster中直接使用组复制。 myrouter可以基于已配置的集群自动进行配置,透明地将应用程序连接到服务器实例。如果MySQL Group Replication内的实例发生故障,myrouter会检测到异常并自动的重新配置。在默认的单主模式下,InnoDB Cluster只有一个primary节点可写,多个从节点实际上是主节点的副本,如果primary节点故障退出了,集群会自动将某一台从提升为主节点,myrouter会检测到这个行为,并转发应用程序请求到新的primary节点

部署InnoDB Cluster

部署的方式有2种,一种是单机部署,主要用于测试集群功能的,如果是生产环境肯定是使用多机部署的方式

要求与限制

- 集群内的MySQL节点必须要打开Performance库

- MySQL Shell需要使用Python 2.7版本

- 所有表必须要用InnoDB存储引擎

- 每个表必须要显式指定主键

- 必须开二进制日志,

--log-bin[=log_file_name] - 二进制日志必须行格式,

--binlog-format=row - 需要记录复制主节点的日志,

--log-slave-updates=ON - 二进制日志校验和关闭,

--binlog-checksum=NONE - 开GTID,

--gtid-mode=ON,enforce_gtid_consistency=ON - 复制信息存储到表中,

--master-info-repository=TABLE和--relay-log-info-repository=TABLE - 表名称小写

--lower-case-table-names=1

dba.checkInstanceConfiguration()可以调用管理API去检查配置

沙箱部署(单机多实例部署)

规划

| IP:PORT | 系统版本 | MySQL 版本 | MySQL Shell版本 | MySQL Router版本 |

|---|---|---|---|---|

| 192.168.240.81:3310 | CentOS7.6 | 8.0.17 | mysql-shell-8.0.17 | mysql-router-8.0.17 |

| 192.168.240.81:3320 | CentOS7.6 | 8.0.17 | mysql-shell-8.0.17 | mysql-router-8.0.17 |

| 192.168.240.81:3330 | CentOS7.6 | 8.0.17 | mysql-shell-8.0.17 | mysql-router-8.0.17 |

MySQL Shell ,Rpm包下载地址:https://dev.mysql.com/downloads/shell/

MySQL Router,Rpm包下载地址:https://dev.mysql.com/downloads/router/

安装MySQL

把MySQL二进制安装包上传到/usr/local/src目录下

cd /usr/local/src

tar xf mysql-8.0.17-linux-glibc2.12-x86_64.tar.xz

mv mysql-8.0.17-linux-glibc2.12-x86_64 /usr/local/mysql8.0

echo 'export PATH=/usr/local/mysql8.0/bin:$PATH' >> /etc/profile

. /etc/profile

安装MySQL Shell

上传MySQL Shell包到/usr/local/src目录下

cd /usr/local/src

yum install mysql-shell-8.0.17-1.el7.x86_64.rpm -y

创建mysql实例

登陆MySQL Shell

mysqlsh

创建沙箱实例

# 创建3310端口实例

MySQL JS > dba.deploySandboxInstance(3310)

A new MySQL sandbox instance will be created on this host in

/root/mysql-sandboxes/3310 # 数据目录为/root/mysql-sandboxes/3310

Warning: Sandbox instances are only suitable for deploying and

running on your local machine for testing purposes and are not

accessible from external networks. # 提示沙箱实例只适合本地部署,不能访问外部网络

Please enter a MySQL root password for the new instance: ****** # 为新的实例设置密码,此处为123456

Deploying new MySQL instance... # 显示正在部署新实例

Instance localhost:3310 successfully deployed and started. # 部署成功

# 可以使用shell.connect('root@localhost:3310')连接到新实例

Use shell.connect('root@localhost:3310'); to connect to the instance.

这里传递的参数是端口号,数据目录默认在~/mysql-sandboxes目录下,如果需要指定其他参数可以使用MySQL JS > \help dba.deploySandboxInstance查看更详细信息

同上,继续创建3320和3330端口对应的实例

MySQL JS > dba.deploySandboxInstance(3320)

MySQL JS > dba.deploySandboxInstance(3330)

创建沙箱InnoDB Cluster集群

连接到其中一个节点创建集群,这个节点必须要包含要复制到其他实例的数据,如果都是刚创建的,就连接任意节点即可,这里进入3310端口对应的实例

# 连接3310端口对应的实例

MySQL JS > \connect root@127.0.0.1:3310

Creating a session to '[email protected]:3310' # 正在建立会话连接

Please provide the password for '[email protected]:3310': ****** # 输入root密码

# 是否保存密码,如果设置保存,则下次连接就不需要密码

Save password for '[email protected]:3310'? [Y]es/[N]o/Ne[v]er (default No):

Fetching schema names for autocompletion... Press ^C to stop.

Your MySQL connection id is 9

Server version: 5.7.26-log MySQL Community Server (GPL)

No default schema selected; type \use <schema> to set one.

也可以使用

shell.connect('[email protected]:3310')连接

使用dba.createCluster()方法创建集群

MySQL 127.0.0.1:3310 ssl JS > var cluster = dba.createCluster('MyCluster')

A new InnoDB cluster will be created on instance '127.0.0.1:3310'.

Validating instance at 127.0.0.1:3310...

NOTE: Instance detected as a sandbox.

Please note that sandbox instances are only suitable for deploying test clusters for use within the same host.

This instance reports its own address as 127.0.0.1:3310

Instance configuration is suitable.

Creating InnoDB cluster 'MyCluster' on '127.0.0.1:3310'...

Adding Seed Instance...

Cluster successfully created. Use Cluster.addInstance() to add MySQL instances.

At least 3 instances are needed for the cluster to be able to withstand up to

one server failure.

添加实例进入集群

# 添加实例进入3320进入集群

MySQL 127.0.0.1:3310 ssl JS > cluster.addInstance('[email protected]:3320')

Please provide the password for '[email protected]:3320': ******

Save password for '[email protected]:3320'? [Y]es/[N]o/Ne[v]er (default No): y

# 3320节点没有GTID集合,没办法确认是否有数据在里面,无法决定是否使用增量的方式去恢复它,最安全,最方便的方法就是使用Clone方式,它将自动覆盖3320实例的状态(默认页推荐使用clone)

NOTE: The target instance '127.0.0.1:3320' has not been pre-provisioned (GTID set is

empty). The Shell is unable to decide whether incremental distributed state

recovery can correctly provision it.

The safest and most convenient way to provision a new instance is through

automatic clone provisioning, which will completely overwrite the state of

'127.0.0.1:3320' with a physical snapshot from an existing cluster member. To

use this method by default, set the 'recoveryMethod' option to 'clone'.

#

The incremental distributed state recovery may be safely used if you are sure

all updates ever executed in the cluster were done with GTIDs enabled, there

are no purged transactions and the new instance contains the same GTID set as

the cluster or a subset of it. To use this method by default, set the

'recoveryMethod' option to 'incremental'.

# 选择一个恢复的方式Clone/Incremental 默认Clone能保证数据一致

Please select a recovery method [C]lone/[I]ncremental recovery/[A]bort (default Clone): c

Validating instance at 127.0.0.1:3320...

NOTE: Instance detected as a sandbox.

Please note that sandbox instances are only suitable for deploying test clusters for use within the same host.

This instance reports its own address as 127.0.0.1:3320

Instance configuration is suitable.

A new instance will be added to the InnoDB cluster. Depending on the amount of

data on the cluster this might take from a few seconds to several hours.

Adding instance to the cluster...

Monitoring recovery process of the new cluster member. Press ^C to stop monitoring and let it continue in background.

Clone based state recovery is now in progress.

NOTE: A server restart is expected to happen as part of the clone process. If the

server does not support the RESTART command or does not come back after a

while, you may need to manually start it back.

* Waiting for clone to finish...

NOTE: 127.0.0.1:3320 is being cloned from 127.0.0.1:3310

** Stage DROP DATA: Completed

** Clone Transfer

FILE COPY ############################################################ 100% Completed

PAGE COPY ############################################################ 100% Completed

REDO COPY ############################################################ 100% Completed

** Stage RECOVERY: /

NOTE: 127.0.0.1:3320 is shutting down...

* Waiting for server restart... ready

* 127.0.0.1:3320 has restarted, waiting for clone to finish...

* Clone process has finished: 58.50 MB transferred in 4 sec (14.63 MB/s)

Incremental distributed state recovery is now in progress.

* Waiting for distributed recovery to finish...

NOTE: '127.0.0.1:3320' is being recovered from '127.0.0.1:3310'

* Distributed recovery has finished

The instance '127.0.0.1:3320' was successfully added to the cluster.

# 添加实例进入集群

MySQL 127.0.0.1:3310 ssl JS > cluster.addInstance('[email protected]:3330')

持久化配置

将沙箱实例添加到集群后,必须将InnoDB Cluster集群所需的配置保留到每个实例的选项文件中。连接到每个实例。

shell.connect('[email protected]:3310')

dba.configureLocalInstance('127.0.0.1:3310')

shell.connect('[email protected]:3320')

dba.configureLocalInstance('127.0.0.1:3320')

shell.connect('[email protected]:3330')

dba.configureLocalInstance('127.0.0.1:3330')

查看集群状态

MySQL JS > shell.connect('[email protected]:3310')

MySQL 127.0.0.1:3310 ssl JS > var cluster = dba.getCluster()

MySQL 127.0.0.1:3310 ssl JS > cluster.status()

{

"clusterName": "MyCluster",

"defaultReplicaSet": {

"name": "default",

"primary": "127.0.0.1:3310",

"ssl": "REQUIRED",

"status": "OK",

"statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

"topology": {

"127.0.0.1:3310": {

"address": "127.0.0.1:3310",

"mode": "R/W",

"readReplicas": {},

"role": "HA",

"status": "ONLINE"

},

"127.0.0.1:3320": {

"address": "127.0.0.1:3320",

"mode": "R/O",

"readReplicas": {},

"role": "HA",

"status": "ONLINE"

},

"127.0.0.1:3330": {

"address": "127.0.0.1:3330",

"mode": "R/O",

"readReplicas": {},

"role": "HA",

"status": "ONLINE"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "127.0.0.1:3310"

}

部署MySQL Router

上传rpm包到/usr/local/src下

cd /usr/local/src

yum install mysql-router-community-8.0.17-1.el7.x86_64.rpm -y

MySQL Router对接InnoDB Cluster

mysqlrouter --bootstrap [email protected]:3310 --user=mysqlrouter

启动MySQL Router

systemctl start mysqlrouter

会启动4个端口

6446- 对于传统MySQL协议读写会话,MySQL路由器将传入连接重定向到Primary服务器实例。6447- 对于传统MySQL协议只读会话,MySQL路由器将传入连接重定向到其中一个从服务器实例。64460- 对于X协议读写会话,MySQL路由器将传入连接重定向到Primary服务器实例。64470- 对于X协议只读会话,MySQL路由器将传入连接重定向到其中一个从服务器实例。

连接读写端口

mysql -uroot -p123456 -P6446 -h127.0.0.1

生产环境部署(多机部署)

规划

| IP:PORT | 主机名 | server-id | 系统版本 | MySQL版本 | MySQL Shell版本 | MySQL Router版本 |

|---|---|---|---|---|---|---|

| 192.168.240.81:3310 | innodb_cluster_01 | 81 | CentOS7.6 | 8.0.17 | mysql-shell-8.0.17 | mysql-router-8.0.17 |

| 192.168.240.82:3310 | innodb_cluster_02 | 82 | CentOS7.6 | 8.0.17 | - | - |

| 192.168.240.83:3310 | innodb_cluster_03 | 83 | CentOS7.6 | 8.0.17 | - | - |

MySQL Shell ,Rpm包下载地址:https://dev.mysql.com/downloads/shell/

MySQL Router,Rpm包下载地址:https://dev.mysql.com/downloads/router/

配置主机名

InnoDB Cluster集群内部节点主机名必须唯一,可以通过hostname命令设置

按照先前规划,填写/etc/hosts文件

[root@localhost ~]# cat /etc/hosts

192.168.240.81 innodb_cluster_01

192.168.240.82 innodb_cluster_02

192.168.240.83 innodb_cluster_03

修改本机主机名(每台机根据情况修改自己主机名)

hostnamectl set-hostname innodb_cluster_01

安装MySQL

把MySQL二进制安装包上传到/usr/local/src目录下

cd /usr/local/src

tar xf mysql-8.0.17-linux-glibc2.12-x86_64.tar.xz

mv mysql-8.0.17-linux-glibc2.12-x86_64 /usr/local/mysql8.0

echo 'export PATH=/usr/local/mysql8.0/bin:$PATH' >> /etc/profile

. /etc/profile

创建目录结构(所有节点)

useradd -M -s /sbin/nologin mysql

mkdir -pv /datadir/{temp,log,data}

touch /datadir/log/err.log

chown -R mysql:mysql /datadir

初始化数据库(所有节点)

mysqld --initialize-insecure --datadir=/datadir/data --user=mysql

chown -R mysql:mysql /datadir

修改配置文件(所有节点)

[mysqld]

basedir=/usr/local/mysql8.0

user=mysql

port=3310

datadir=/datadir/data

log-error=/datadir/log/err.log

pid-file=/datadir/temp/mysqld.pid

socket=/datadir/temp/mysqld.sock

symbolic-links=0

server_id=81

gtid-mode=on

enforce-gtid-consistency=true

log_slave_updates=ON

log_bin=/datadir/log/binlog

binlog_format=ROW

[client]

socket=/datadir/temp/mysqld.sock

只需要修改server_id这一项即可(保证所有MySQL服务器都不同)

启动数据库(所有节点)

mysqld_safe --defaults-file=/etc/my.cnf --daemonize

设置root密码

mysqladmin -uroot password 123456

创建ic用户

CREATE USER 'ic'@'%' IDENTIFIED BY 'Hal@123';

GRANT ALL ON *.* to 'ic'@'%' WITH GRANT OPTION;

安装MySQL Shell

上传MySQL Shell包到innodb_cluster_01的/usr/local/src目录下

cd /usr/local/src

yum install mysql-shell-8.0.17-1.el7.x86_64.rpm -y

部署InnoDB Cluster 集群

使用MySQL Shell 连接innodb_cluster_01节点进行配置

mysqlsh

配置innodb_cluster_01节点

# 设置日志信息debug级别,方便排查问题

MySQL JS > dba.verbose = 2

# 对innodb_cluster_01实例进行配置

MySQL JS > dba.configureInstance('[email protected]:3310',{ clusterAdmin:"'icadmin'@'%'",clusterAdminPassword: 'Ic@123'});

# 输入ic用户对应的密码

Please provide the password for '[email protected]:3310': *******

# 是否保存密码,默认保存

Save password for '[email protected]:3310'? [Y]es/[N]o/Ne[v]er (default No): y

Configuring local MySQL instance listening at port 3310 for use in an InnoDB cluster...

This instance reports its own address as innodb_cluster_01:3310

Clients and other cluster members will communicate with it through this address by default. If this is not correct, the report_host MySQL system variable should be changed.

# 有以下配置不符合要求

NOTE: Some configuration options need to be fixed:

+-----------------+---------------+----------------+----------------------------+

| Variable | Current Value | Required Value | Note |

+-----------------+---------------+----------------+----------------------------+

| binlog_checksum | CRC32 | NONE | Update the server variable |

+-----------------+---------------+----------------+----------------------------+

# 是否自动进行更改,这里输入y,会帮你将不符合条件的配置进行更改

Do you want to perform the required configuration changes? [y/n]: y

# 创建集群用户'icadmin'@'%'成功

Cluster admin user 'icadmin'@'%' created.

Configuring instance...

The instance '192.168.240.81:3310' was configured for InnoDB cluster usage.

再对innodb_cluster_02和innodb_cluster_03节点进行配置

MySQL JS > dba.configureInstance('[email protected]:3310',{ clusterAdmin:"'icadmin'@'%'",clusterAdminPassword: 'Ic@123'});

MySQL JS > dba.configureInstance('[email protected]:3310',{ clusterAdmin:"'icadmin'@'%'",clusterAdminPassword: 'Ic@123'});

连接到其中一个节点创建集群,这里连接192.168.240.81节点

# 使用icamin用户进行连接(配置节点那一步使用dba.configureInstance()创建的用户)

MySQL JS > shell.connect('[email protected]:3310')

# 创建集群

MySQL JS > var cluster = dba.createCluster('MyCluster')

添加innodb_cluster_03节点进入集群

# 添加innodb_cluster_03节点进入集群

MySQL JS > cluster.addInstance("[email protected]:3310")

# 输入icadmin用户密码

Please provide the password for '[email protected]:3310': ******

# 是否记住密码,默认不记住,这里为了方便记住

Save password for '[email protected]:3310'? [Y]es/[N]o/Ne[v]er (default No): y

# 提示信息,在192.168.240.83:3310实例上检测到了GTID集合,再加入集群之前必须要先这部分数据清掉

WARNING: A GTID set check of the MySQL instance at '192.168.240.83:3310' determined that

it contains transactions that do not originate from the cluster, which must be

discarded before it can join the cluster.

# 检测到192.168.240.83:3310的数据集不存在当前集群内

192.168.240.83:3310 has the following errant GTIDs that do not exist in the cluster:

b04e6d88-af6c-11e9-bc08-000c29112816:1-3

# 从集群内的某个成员中获取物理快照并覆盖掉192.168.240.83:3310的数据,可以设置'recoveryMethod'为'clone'

WARNING: Discarding these extra GTID events can either be done manually or by completely

overwriting the state of 192.168.240.83:3310 with a physical snapshot from an

existing cluster member. To use this method by default, set the

'recoveryMethod' option to 'clone'.

# 有额外的GTID events是不符合预期的,建议确保事务一致可以选择clone恢复方式

Having extra GTID events is not expected, and it is recommended to investigate

this further and ensure that the data can be removed prior to choosing the

clone recovery method.

# 选择恢复的方式,Clone表示物理快照方式覆盖掉原有数据,Abort表示放弃此次操作,这里选C

Please select a recovery method [C]lone/[A]bort (default Abort): c

Validating instance at 192.168.240.83:3310...

# 报告实例拥有的主机名innodb_cluster_03:3310

This instance reports its own address as innodb_cluster_03:3310

# 检测实例配置符合要求,正在被加入集群,根据集群中的数据量,加入的时长从几秒钟到几小时不等

Instance configuration is suitable.

A new instance will be added to the InnoDB cluster. Depending on the amount of

data on the cluster this might take from a few seconds to several hours.

# 正在添加实例进入集群

Adding instance to the cluster...

# 数据恢复过程,可以输入Ctrl+C停止监控,让它在后台运行

Monitoring recovery process of the new cluster member. Press ^C to stop monitoring and let it continue in background.

Clone based state recovery is now in progress.

# 服务器重启是克隆过程的一部分。如果服务器不支持RESTART命令,或者一段时间后不返回,可能需要手动重新启动它

NOTE: A server restart is expected to happen as part of the clone process. If the

server does not support the RESTART command or does not come back after a

while, you may need to manually start it back.

# 正在clone数据到实例

* Waiting for clone to finish...

NOTE: 192.168.240.83:3310 is being cloned from innodb_cluster_01:3310

** Stage DROP DATA: Completed

** Clone Transfer

FILE COPY ############################################################ 100% Completed

PAGE COPY ############################################################ 100% Completed

REDO COPY ############################################################ 100% Completed

** Stage RECOVERY: \

NOTE: 192.168.240.83:3310 is shutting down...

# 实例重启超时,需要手动启动一下实例

* Waiting for server restart... timeout

WARNING: Error while waiting for recovery of the added instance:

terminate called after throwing an instance of 'std::runtime_error'

what(): Not connected

Aborted

重启innodb_cluster_03实例

mysqld --defaults-file=/etc/my.cnf --daemonize

从MySQL Shell接口进入管理集群

mysqlsh

连接innodb_cluster_01实例

# 连接192.168.240.81:3310实例

MySQL JS > shell.connect('[email protected]:3310')

# 重新扫描集群

MySQL JS > var cluster = dba.getCluster()

MySQL JS > cluster.rescan()

Rescanning the cluster...

# 找到192.168.240.83节点

Result of the rescanning operation for the 'default' ReplicaSet:

{

"name": "default",

"newTopologyMode": null,

"newlyDiscoveredInstances": [

{

"host": "innodb_cluster_03:3310",

"member_id": "b04e6d88-af6c-11e9-bc08-000c29112816",

"name": null,

"version": "8.0.17"

}

],

"unavailableInstances": []

}

A new instance 'innodb_cluster_03:3310' was discovered in the ReplicaSet.

# 是否将它加入集群,y表示确认

Would you like to add it to the cluster metadata? [Y/n]: y

Adding instance to the cluster metadata...

# 已经成功添加'innodb_cluster_03:3310'

The instance 'innodb_cluster_03:3310' was successfully added to the cluster metadata.

按照加入192.168.240.83:3310的过程继续添加192.168.240.82:3310实例

MySQL JS > var cluster = dba.getCluster()

MySQL JS > cluster.addInstance("[email protected]:3310")

如果服务器不支持RESTART命令,或者一段时间后不返回,可能需要手动重新启动它

mysqld --defaults-file=/etc/my.cnf --daemonize

然后重新扫描集群加入即可

配置MySQL Router

上传MySQL Router RPM包192.168.240.81节点上的/usr/local/src目录下

cd /usr/local/src

yum install mysql-router-community-8.0.17-1.el7.x86_64.rpm -y

MySQL Router对接InnoDB Cluster

mysqlrouter --bootstrap [email protected]:3310 --user=mysqlrouter

启动MySQL Router

mysqlrouter -c /usr/local/mysql8.0/bin/.././mysqlrouter.conf

会启动4个端口

6446- 对于传统MySQL协议读写会话,MySQL路由器将传入连接重定向到Primary服务器实例。6447- 对于传统MySQL协议只读会话,MySQL路由器将传入连接重定向到其中一个从服务器实例。64460- 对于X协议读写会话,MySQL路由器将传入连接重定向到Primary服务器实例。64470- 对于X协议只读会话,MySQL路由器将传入连接重定向到其中一个从服务器实例。

连接读写端口,进行测试

mysql -uroot -p123456 -P6446 -h192.168.240.81

组复制切InnoDB Cluster

如果现在已经有一套组复制架构了,可以切换到InnoDB Cluster模式,使用MySQL Shell 连接到其中一个节点,使用dba.createCluster()传入 adoptFromGR选项创建集群就可以了

var cluster = dba.createCluster('prodCluster', {adoptFromGR: true});

InnoDB Cluster日常管理

检查InnoDB Cluster 状态

MySQL 127.0.0.1:3310 ssl JS > var cluster = dba.getCluster()

MySQL 127.0.0.1:3310 ssl JS > cluster.status()

{

# 集群名称

"clusterName": "MyCluster",

# 集群内包含的实例

"defaultReplicaSet": {

"name": "default",

# 此集群的主系欸但,如果是多主,这个字段不显示

"primary": "127.0.0.1:3310",

# 是否使用ssl安全连接

"ssl": "REQUIRED",

# 集群状态

"status": "OK",

# 集群状态信息:集群在线,可以容忍一个实例故障退出

"statusText": "Cluster is ONLINE and can tolerate up to ONE failure.",

# 已添加到集群的实例

"topology": {

# 实例的主机名

"127.0.0.1:3310": {

# IP地址

"address": "127.0.0.1:3310",

# "R/W"(可读写)或"R/O"(只读)

"mode": "R/W",

"readReplicas": {},

# 提供的功能,目前只有HA,具有高可用性

"role": "HA",

# 状态

# ONLINE:实例在线,正常

# OFFLINE:实例下线,已经失去和其他实例的连接

# RECOVERING:实例正在恢复,尝试与集群状态同步

# UNREACHABLE:实例已丢失与集群的通讯

# ERROT:实例在恢复阶段或应用事务的时候遇到错误

# (MISSING):作为已配置集群的一部分,但是实际上不可用的实例状态

"status": "ONLINE"

},

"127.0.0.1:3320": {

"address": "127.0.0.1:3320",

"mode": "R/O",

"readReplicas": {},

"role": "HA",

"status": "ONLINE"

},

"127.0.0.1:3330": {

"address": "127.0.0.1:3330",

"mode": "R/O",

"readReplicas": {},

"role": "HA",

"status": "ONLINE"

}

},

"topologyMode": "Single-Primary"

},

"groupInformationSourceMember": "127.0.0.1:3310"

}

查看InnoDB Cluster集群结构

MySQL 127.0.0.1:3310 ssl JS > var cluster = dba.getCluster()

MySQL 127.0.0.1:3310 ssl JS > cluster.describe();

{

"clusterName": "MyCluster",

"defaultReplicaSet": {

"name": "default",

"topology": [

{

"address": "127.0.0.1:3310",

"label": "127.0.0.1:3310",

"role": "HA"

},

{

"address": "127.0.0.1:3320",

"label": "127.0.0.1:3320",

"role": "HA"

},

{

"address": "127.0.0.1:3330",

"label": "127.0.0.1:3330",

"role": "HA"

}

],

"topologyMode": "Single-Primary"

}

}

沙箱实例启停

# 启动沙箱实例

dba.startSandboxInstance(3310)

# 停止沙箱实例

dba.stopSandboxInstance(3310)

# 模拟意外故障,停止沙箱实例

dba.killSandboxInstance(3310)

# 删除沙箱实例

dba.deleteSandboxInstance(3310)

集群中移除/添加某实例

cluster.removeInstance('[email protected]:3310')

cluster.addInstance('[email protected]:3310')

自定义集群

要自定义InnoDB集群创建的复制组的名称,请将该groupName选项传递给该dba.createCluster()命令。这将设置group_replication_group_name系统变量。该名称必须是有效的UUID。

要在实例加入群集时自定义用作种子的实例,请将groupSeeds选项传递给dba.createCluster()和 cluster.addInstance()命令。当新实例加入群集并用于向新实例提供数据时,将联系种子实例。地址被指定为以逗号分隔的列表,例如 host1:port1,host2:port2。配置 group_replication_group_seeds 系统变量。

重新加入群集

如果实例离开集群,重新加入集群可以使用cluster.rejoinInstance(),如果实例没有持久化操作,重新启动是不会自动加入集群的,解决方法是使用cluster.rejoinInstance()再次添加实例进入集群,然后连接实例持久化配置dba.configureLocalInstance()

重建仲裁

如果多个实例失败,集群不够法定票数选举出新主,可以使用cluster.force equorumusingpartitionof()方法重新建立仲裁

cluster = dba.getCluster("MyCluster")

cluster.forceQuorumUsingPartitionOf("127.0.0.1:3310")

重新扫描集群

cluster.rescan()

检查单个实例状态

cluster.checkInstanceState('[email protected]:3310')

##解散集群

cluster.dissolve({force:true})

切换主节点

cluster = dba.getCluster("MyCluster")

cluster.setPrimaryInstance("192.168.240.82:3310")

切换单主/多主集群

cluster = dba.getCluster("MyCluster")

cluster.switchToMultiPrimaryMode() # 单主-->多主

cluster.switchToMultiPrimaryMode() # 多主-->单主

参考文档

- https://dev.mysql.com/doc/refman/8.0/en/mysql-innodb-cluster-userguide.html