Hadoop series: fully distributed + HA cluster setup and verification

Prerequisites:

- A CentOS virtual machine template has already been prepared.

- Oracle JDK is installed in the template, with JAVA_HOME set to /usr/java/jdk1.8.0_171-amd64/jre.

For installing the CentOS template, see this blog post: https://blog.csdn.net/gobitan/article/details/80993354

Prepare three virtual machines

- A fully distributed Hadoop + HA cluster needs at least three servers. Assume their IP addresses are as follows:

192.168.159.200 hadoop01

192.168.159.201 hadoop02

192.168.159.202 hadoop03

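Hardware: each virtual machine should have at least 2 CPU cores and 4 GB of memory; if the host's memory is really limited, 2 cores and 3 GB will also work.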

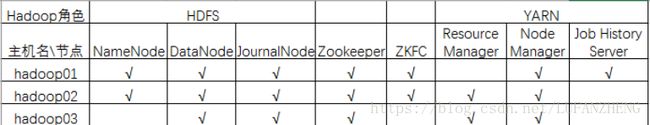

Deployment architecture

Configuration files

Hadoop's configuration files fall into three categories:

- Read-only default configuration files, including:

hadoop-2.7.3/share/doc/hadoop/hadoop-project-dist/hadoop-common/core-default.xml

hadoop-2.7.3/share/doc/hadoop/hadoop-project-dist/hadoop-hdfs/hdfs-default.xml

hadoop-2.7.3/share/doc/hadoop/hadoop-yarn/hadoop-yarn-common/yarn-default.xml

hadoop-2.7.3/share/doc/hadoop/hadoop-mapreduce-client/hadoop-mapreduce-client-core/mapred-default.xml

- Site-specific configuration files, including:

hadoop-2.7.3/etc/hadoop/core-site.xml

hadoop-2.7.3/etc/hadoop/hdfs-site.xml

hadoop-2.7.3/etc/hadoop/yarn-site.xml

hadoop-2.7.3/etc/hadoop/mapred-site.xml

- Control scripts: hadoop-2.7.3/etc/hadoop/*-env.sh

Note: the following steps are performed on hadoop01.

Step 1: Operating system configuration

Set the content of /etc/hostname to hadoop01

Set the content of /etc/hosts to the following (these entries let each machine reach the others by hostname as well as by IP address):

127.0.0.1 localhost

192.168.159.200 hadoop01

192.168.159.201 hadoop02

192.168.159.202 hadoop03

Reboot the operating system:

[root@centos7 ~]# init 6

Step 2: Download the Hadoop and ZooKeeper packages

Hadoop version: 2.7.3

Download from the official archive: https://archive.apache.org/dist/hadoop/core/hadoop-2.7.3/hadoop-2.7.3.tar.gz

ZooKeeper version: 3.4.6, used to coordinate the distributed services (NameNode and ResourceManager failover)

Download from the official archive: https://archive.apache.org/dist/zookeeper/zookeeper-3.4.6/zookeeper-3.4.6.tar.gz

Step 3: Extract the Hadoop and ZooKeeper packages

Upload hadoop-2.7.3.tar.gz and zookeeper-3.4.6.tar.gz to the /root directory.

[root@hadoop01 ~]# cd /opt/

[root@hadoop01 opt]# tar zxf ~/hadoop-2.7.3.tar.gz

[root@hadoop01 opt]# tar zxf ~/zookeeper-3.4.6.tar.gz

Create the directories Hadoop needs

[root@hadoop01 ~]# mkdir -p /opt/hadoop-2.7.3/data/namenode

[root@hadoop01 ~]# mkdir -p /opt/hadoop-2.7.3/data/datanode

Create the directory ZooKeeper needs

[root@hadoop01 ~]# mkdir -p /opt/zookeeper-3.4.6/data

Step 4: Configure Hadoop

Configure hadoop-env.sh

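Edit etc/hadoop/hadoop-env.sh and change the JAVA_HOME line below the comment "# The java implementation to use." as follows:

Note: this avoids errors when Hadoop cannot read $JAVA_HOME from the environment (for example, when daemons are started over SSH).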

export JAVA_HOME=/usr/java/jdk1.8.0_171-amd64/jre
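To script this change instead of editing by hand, here is a minimal sketch using GNU sed. It assumes hadoop-env.sh still contains the stock `export JAVA_HOME=${JAVA_HOME}` line; the function name set_hadoop_java_home is hypothetical.

```bash
# Hypothetical helper: set JAVA_HOME in hadoop-env.sh to a fixed JDK path.
# Assumes the stock line "export JAVA_HOME=${JAVA_HOME}" is still present.
set_hadoop_java_home() {
  local env_file="$1" java_home="$2"
  # Replace the uncommented "export JAVA_HOME=..." line in place (GNU sed -i).
  sed -i "s|^export JAVA_HOME=.*|export JAVA_HOME=${java_home}|" "$env_file"
  # Return non-zero if no such line existed, instead of silently doing nothing.
  grep -q "^export JAVA_HOME=${java_home}\$" "$env_file"
}
```

For example: `set_hadoop_java_home /opt/hadoop-2.7.3/etc/hadoop/hadoop-env.sh /usr/java/jdk1.8.0_171-amd64/jre`. The final grep makes the function fail when no JAVA_HOME line was found, rather than leaving the file unchanged without notice.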

Configure core-site.xml

Edit etc/hadoop/core-site.xml as follows:

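Note: hadoop.tmp.dir defaults to "/tmp/hadoop-${user.name}". Files under /tmp can be deleted on reboot or by the system's periodic temporary-file cleanup, which may lose data, so point it at a persistent directory instead.

fs.defaultFS: the default file system URI. In an HA cluster it names the logical nameservice (hdfs://[nameservice ID]) rather than a single NameNode host and port, so clients keep working after a failover.

ha.zookeeper.quorum: the list of host:port pairs running the ZooKeeper service, used for automatic failover.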
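The XML itself is not shown above. As a sketch only, the function below writes a core-site.xml containing the three properties just described. The values are assumptions: the nameservice ID ha-cluster (taken from the property name dfs.namenode.rpc-address.ha-cluster.nn1 used for hdfs-site.xml), ZooKeeper's default client port 2181, and hadoop.tmp.dir=/opt/hadoop-2.7.3/hadoop-tmp (matching the directory printed when the NameNode is formatted). write_core_site is a hypothetical name.

```bash
# Hypothetical helper: write core-site.xml into the given config directory.
# Assumed values: nameservice "ha-cluster", ZooKeeper client port 2181.
write_core_site() {
  local conf_dir="$1"
  cat > "${conf_dir}/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Clients address the logical nameservice, not one NameNode host:port -->
  <property><name>fs.defaultFS</name><value>hdfs://ha-cluster</value></property>
  <!-- Keep Hadoop's working files out of /tmp -->
  <property><name>hadoop.tmp.dir</name><value>/opt/hadoop-2.7.3/hadoop-tmp</value></property>
  <!-- ZooKeeper ensemble used by the ZKFC processes for automatic failover -->
  <property><name>ha.zookeeper.quorum</name><value>hadoop01:2181,hadoop02:2181,hadoop03:2181</value></property>
</configuration>
EOF
}
```

On hadoop01 this would be called as `write_core_site /opt/hadoop-2.7.3/etc/hadoop`; review the generated file before starting any daemon.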

Configure hdfs-site.xml

Edit etc/hadoop/hdfs-site.xml as follows:

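dfs.permissions.enabled: if "true", HDFS permission checking is enabled; if "false", permission checking is turned off.

dfs.nameservices: the logical name of the nameservice. With HDFS Federation, several nameservices can be listed, separated by commas.

dfs.ha.namenodes.[nameservice ID]: the unique IDs of all NameNodes in the nameservice, separated by commas. DataNodes use this list to find every NameNode in the cluster. Currently (Hadoop 2.x), at most two NameNodes can be configured per nameservice.

dfs.namenode.rpc-address.[nameservice ID].[name node ID] (for example dfs.namenode.rpc-address.ha-cluster.nn1): the RPC address each NameNode listens on.

dfs.namenode.http-address.[nameservice ID].[name node ID]: the HTTP address each NameNode listens on.

dfs.namenode.shared.edits.dir: the URI of the JournalNode group that the NameNodes write the edit log to and read it from. The format is "qjournal://host1:port1;host2:port2;host3:port3/journalId", where host1, host2 and host3 are the JournalNode addresses; use an odd number of JournalNodes, at least three. journalId uniquely identifies this nameservice, which lets one set of JournalNodes serve several federated nameservices, each with its own journalId; reusing the nameservice ID is a common choice.

dfs.client.failover.proxy.provider.[nameservice ID]: the Java class HDFS clients use to locate the active NameNode, here org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider.

dfs.ha.automatic-failover.enabled: whether automatic failover is enabled. Default: false.

dfs.ha.fencing.methods: the method(s) used to fence the previously active NameNode during a failover.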
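A sketch of an hdfs-site.xml consistent with the properties described above follows. Several values are assumptions, not taken from the original: nameservice ID ha-cluster with nn1 on hadoop01 and nn2 on hadoop02; the conventional Hadoop 2.x ports 8020 (NameNode RPC), 50070 (NameNode HTTP, the port of the web UI used later) and 8485 (JournalNode); the sshfence method with root's key /root/.ssh/id_rsa; and dfs.permissions.enabled=false. write_hdfs_site is a hypothetical name.

```bash
# Hypothetical helper: write hdfs-site.xml for nameservice "ha-cluster",
# nn1 on hadoop01 and nn2 on hadoop02. Ports are assumptions (see above).
write_hdfs_site() {
  local conf_dir="$1"
  cat > "${conf_dir}/hdfs-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property><name>dfs.nameservices</name><value>ha-cluster</value></property>
  <property><name>dfs.ha.namenodes.ha-cluster</name><value>nn1,nn2</value></property>
  <property><name>dfs.namenode.rpc-address.ha-cluster.nn1</name><value>hadoop01:8020</value></property>
  <property><name>dfs.namenode.rpc-address.ha-cluster.nn2</name><value>hadoop02:8020</value></property>
  <property><name>dfs.namenode.http-address.ha-cluster.nn1</name><value>hadoop01:50070</value></property>
  <property><name>dfs.namenode.http-address.ha-cluster.nn2</name><value>hadoop02:50070</value></property>
  <!-- Three JournalNodes; the trailing path segment is the journalId -->
  <property><name>dfs.namenode.shared.edits.dir</name><value>qjournal://hadoop01:8485;hadoop02:8485;hadoop03:8485/ha-cluster</value></property>
  <property><name>dfs.client.failover.proxy.provider.ha-cluster</name><value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value></property>
  <property><name>dfs.ha.automatic-failover.enabled</name><value>true</value></property>
  <!-- sshfence logs in to the old active NameNode and kills it with fuser -->
  <property><name>dfs.ha.fencing.methods</name><value>sshfence</value></property>
  <property><name>dfs.ha.fencing.ssh.private-key-files</name><value>/root/.ssh/id_rsa</value></property>
  <!-- Assumed value: permission checks off, convenient for a test cluster only -->
  <property><name>dfs.permissions.enabled</name><value>false</value></property>
</configuration>
EOF
}
```

The sshfence choice is why fuser (from the psmisc package) is installed on the NameNode hosts in Step 6.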

Configure mapred-site.xml

[root@hadoop01 hadoop-2.7.3]# cd etc/hadoop/

[root@hadoop01 hadoop]# mv mapred-site.xml.template mapred-site.xml

Edit etc/hadoop/mapred-site.xml as follows:
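Likewise, a minimal mapred-site.xml sketch. It assumes the JobHistory server runs on hadoop01 with ports 10020 (RPC) and 19888 (web UI, matching the Job History URL given in Step 18); write_mapred_site is a hypothetical name.

```bash
# Hypothetical helper: write mapred-site.xml into the given config directory.
write_mapred_site() {
  local conf_dir="$1"
  cat > "${conf_dir}/mapred-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <!-- Run MapReduce jobs on YARN instead of the default local runner -->
  <property><name>mapreduce.framework.name</name><value>yarn</value></property>
  <!-- JobHistory server on hadoop01 (assumed ports: RPC 10020, web UI 19888) -->
  <property><name>mapreduce.jobhistory.address</name><value>hadoop01:10020</value></property>
  <property><name>mapreduce.jobhistory.webapp.address</name><value>hadoop01:19888</value></property>
</configuration>
EOF
}
```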

Configure yarn-site.xml

Edit etc/hadoop/yarn-site.xml as follows:
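A yarn-site.xml sketch for ResourceManager HA follows. It assumes rm1 on hadoop02 and rm2 on hadoop03 (as in the later YARN steps), ZooKeeper on port 2181, and a made-up cluster ID yarn-ha-cluster; write_yarn_site is a hypothetical name.

```bash
# Hypothetical helper: write yarn-site.xml with ResourceManager HA enabled.
write_yarn_site() {
  local conf_dir="$1"
  cat > "${conf_dir}/yarn-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property><name>yarn.resourcemanager.ha.enabled</name><value>true</value></property>
  <!-- Hypothetical cluster ID; both ResourceManagers must use the same value -->
  <property><name>yarn.resourcemanager.cluster-id</name><value>yarn-ha-cluster</value></property>
  <property><name>yarn.resourcemanager.ha.rm-ids</name><value>rm1,rm2</value></property>
  <property><name>yarn.resourcemanager.hostname.rm1</name><value>hadoop02</value></property>
  <property><name>yarn.resourcemanager.hostname.rm2</name><value>hadoop03</value></property>
  <!-- ZooKeeper ensemble used for leader election between rm1 and rm2 -->
  <property><name>yarn.resourcemanager.zk-address</name><value>hadoop01:2181,hadoop02:2181,hadoop03:2181</value></property>
  <!-- Shuffle service that MapReduce reducers need on every NodeManager -->
  <property><name>yarn.nodemanager.aux-services</name><value>mapreduce_shuffle</value></property>
</configuration>
EOF
}
```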

Configure etc/hadoop/slaves

Edit etc/hadoop/slaves so that it contains:

hadoop01

hadoop02

hadoop03

- Configure the Hadoop environment variables

Edit /etc/profile and append the following at the end of the file:

export HADOOP_HOME=/opt/hadoop-2.7.3

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export YARN_HOME=$HADOOP_HOME

export HADOOP_CONF_DIR=$HADOOP_HOME/etc/hadoop

export YARN_CONF_DIR=$HADOOP_HOME/etc/hadoop

export ZOOKEEPER_HOME=/opt/zookeeper-3.4.6

export PATH=$PATH:$HADOOP_HOME/bin:$HADOOP_HOME/sbin:$ZOOKEEPER_HOME/bin

Save the file, then run ". /etc/profile" to apply it.

Step 5: Configure ZooKeeper

[root@hadoop01 ~]# cd /opt/zookeeper-3.4.6/conf

[root@hadoop01 conf]# mv zoo_sample.cfg zoo.cfg

Open zoo.cfg and make two changes:

Set dataDir to the directory created earlier:

dataDir=/opt/zookeeper-3.4.6/data

Append the following at the end of the file:

server.1=hadoop01:2888:3888

server.2=hadoop02:2888:3888

server.3=hadoop03:2888:3888
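The two zoo.cfg edits can also be scripted. This is a minimal sketch with a hypothetical function configure_zoo_cfg; it assumes zoo.cfg already contains a dataDir= line (zoo_sample.cfg does) and numbers the servers in the order the hosts are passed, which must match each host's myid.

```bash
# Hypothetical helper: apply the two zoo.cfg edits described above.
# Usage: configure_zoo_cfg CFG_FILE DATA_DIR HOST1 HOST2 HOST3 ...
configure_zoo_cfg() {
  local cfg="$1" data_dir="$2" host n=1
  shift 2
  # 1) Point dataDir at the persistent directory instead of /tmp/zookeeper.
  sed -i "s|^dataDir=.*|dataDir=${data_dir}|" "$cfg"
  # 2) Append one server.N line per host (2888: quorum port, 3888: leader election port).
  for host in "$@"; do
    echo "server.${n}=${host}:2888:3888" >> "$cfg"
    n=$((n + 1))
  done
}
```

Run it once on hadoop01: `configure_zoo_cfg /opt/zookeeper-3.4.6/conf/zoo.cfg /opt/zookeeper-3.4.6/data hadoop01 hadoop02 hadoop03`. It appends without checking, so running it twice duplicates the server lines.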

Note: the operations in Step 6 below must be performed on hadoop01, hadoop02 and hadoop03, in that order.

Step 6: Configure passwordless SSH login and synchronize the configuration

Operations on hadoop01

Generate a private/public key pair

[root@hadoop01 ~]# ssh-keygen -t rsa

Press Enter at every prompt (default key file, empty passphrase).

Passwordless login to the local machine

[root@hadoop01 ~]# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Verify passwordless SSH to the local machine with the following commands:

[root@hadoop01 ~]# ssh localhost

[root@hadoop01 ~]# ssh hadoop01

Passwordless login from hadoop01 to hadoop02

[root@hadoop01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop02

Verify passwordless SSH to hadoop02 with the following command:

[root@hadoop01 ~]# ssh hadoop02

Passwordless login from hadoop01 to hadoop03

[root@hadoop01 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop03

Verify passwordless SSH to hadoop03 with the following command:

[root@hadoop01 ~]# ssh hadoop03
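The key generation and copy steps above repeat per host, so they can be folded into a loop. A minimal sketch, assuming root's default key path ~/.ssh/id_rsa; distribute_key is a hypothetical name, and ssh-copy-id still asks for each remote password once.

```bash
# Hypothetical helper: create an RSA key pair if missing, then push the public
# key to every host given (include the local host to cover the local-login step).
distribute_key() {
  local host
  if [ ! -f ~/.ssh/id_rsa ]; then
    mkdir -p ~/.ssh && chmod 700 ~/.ssh
    # -N '' sets an empty passphrase, -q keeps ssh-keygen quiet
    ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa -q || return 1
  fi
  for host in "$@"; do
    # Asks once for the remote password, then appends the key to authorized_keys there
    ssh-copy-id -i ~/.ssh/id_rsa.pub "$host" || return 1
  done
}
```

On hadoop01 this would be `distribute_key hadoop01 hadoop02 hadoop03`; passing the local host too replaces the manual `cat ... >> ~/.ssh/authorized_keys` step.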

Sync /etc/hosts to hadoop02 and hadoop03

[root@hadoop01 ~]# scp /etc/hosts hadoop02:/etc/hosts

[root@hadoop01 ~]# scp /etc/hosts hadoop03:/etc/hosts

Sync /etc/profile to hadoop02 and hadoop03

[root@hadoop01 ~]# scp /etc/profile hadoop02:/etc/profile

[root@hadoop01 ~]# scp /etc/profile hadoop03:/etc/profile

Sync the Hadoop package and configuration to hadoop02 and hadoop03

[root@hadoop01 ~]# scp -r /opt/hadoop-2.7.3/ hadoop02:/opt/hadoop-2.7.3/

[root@hadoop01 ~]# scp -r /opt/hadoop-2.7.3/ hadoop03:/opt/hadoop-2.7.3/

Sync the ZooKeeper package and configuration to hadoop02 and hadoop03

[root@hadoop01 ~]# scp -r /opt/zookeeper-3.4.6/ hadoop02:/opt/zookeeper-3.4.6/

[root@hadoop01 ~]# scp -r /opt/zookeeper-3.4.6/ hadoop03:/opt/zookeeper-3.4.6/
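The copy commands above can be wrapped in a loop as well. This is a sketch with hypothetical helpers run_cmd and sync_to_peers; setting DRY_RUN=1 only prints the commands. Unlike the commands above, it copies each directory into /opt/ on the peer, which avoids ending up with a nested /opt/hadoop-2.7.3/hadoop-2.7.3 if the target directory already exists.

```bash
# Hypothetical helper: run a command, or only print it when DRY_RUN=1.
run_cmd() {
  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Hypothetical helper: copy the shared files from hadoop01 to each peer given.
sync_to_peers() {
  local host path
  for host in "$@"; do
    for path in /etc/hosts /etc/profile; do
      run_cmd scp "$path" "${host}:${path}" || return 1
    done
    # Copying into /opt/ creates /opt/hadoop-2.7.3 and /opt/zookeeper-3.4.6 on the peer
    for path in /opt/hadoop-2.7.3 /opt/zookeeper-3.4.6; do
      run_cmd scp -r "$path" "${host}:/opt/" || return 1
    done
  done
}
```

Preview with `DRY_RUN=1 sync_to_peers hadoop02 hadoop03`, then run `sync_to_peers hadoop02 hadoop03`.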

ZooKeeper configuration

[root@hadoop01 ~]# cd /opt/zookeeper-3.4.6/data

[root@hadoop01 data]# echo 1 > myid

Install fuser (provided by the psmisc package; the sshfence fencing method relies on it)

[root@hadoop01 ~]# yum install psmisc -y

Operations on hadoop02

- Set the content of /etc/hostname to hadoop02

- Reboot hadoop02: [root@centos7 ~]# init 6

- ZooKeeper configuration

[root@hadoop02 ~]# cd /opt/zookeeper-3.4.6/data

[root@hadoop02 data]# echo 2 > myid

Generate a private/public key pair

[root@hadoop02 ~]# ssh-keygen -t rsa

Press Enter at every prompt.

Passwordless login to the local machine

[root@hadoop02 ~]# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

Verify passwordless SSH to the local machine with the following commands:

[root@hadoop02 ~]# ssh localhost

[root@hadoop02 ~]# ssh hadoop02

Passwordless login from hadoop02 to hadoop01

[root@hadoop02 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop01

Verify with the following command:

[root@hadoop02 ~]# ssh hadoop01

Passwordless login from hadoop02 to hadoop03

[root@hadoop02 ~]# ssh-copy-id -i ~/.ssh/id_rsa.pub hadoop03

Verify passwordless SSH to hadoop03 with the following command:

[root@hadoop02 ~]# ssh hadoop03

Install fuser

[root@hadoop02 ~]# yum install psmisc -y

Operations on hadoop03

- Set the content of /etc/hostname to hadoop03

- Reboot hadoop03: [root@centos7 ~]# init 6

- ZooKeeper configuration

[root@hadoop03 ~]# cd /opt/zookeeper-3.4.6/data

[root@hadoop03 data]# echo 3 > myid
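Each node's myid must equal the N of its server.N line in zoo.cfg. A small sketch that derives it from the host name, so the same command works on all three nodes; zk_id_for_host and write_myid are hypothetical names, and the mapping mirrors the zoo.cfg entries from Step 5.

```bash
# Hypothetical helper: map a host name to its ZooKeeper id (the N in server.N).
zk_id_for_host() {
  case "$1" in
    hadoop01) echo 1 ;;
    hadoop02) echo 2 ;;
    hadoop03) echo 3 ;;
    *) echo "unknown host: $1" >&2; return 1 ;;
  esac
}

# write_myid DATA_DIR [HOST]: HOST defaults to the output of `hostname`.
write_myid() {
  local data_dir="$1" host="${2:-$(hostname)}" id
  # Stop before touching the file if the host is not in the mapping.
  id=$(zk_id_for_host "$host") || return 1
  echo "$id" > "${data_dir}/myid"
}
```

On any of the three nodes: `write_myid /opt/zookeeper-3.4.6/data`.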

Step 7: Start ZooKeeper on each of the three machines in turn

[root@hadoop01 ~]# zkServer.sh start

[root@hadoop02 ~]# zkServer.sh start

[root@hadoop03 ~]# zkServer.sh start

Note: after starting each node, use jps to check that a new process named QuorumPeerMain has appeared.

Step 8: Start the JournalNode on each of the three machines in turn

[root@hadoop01 ~]# hadoop-daemon.sh start journalnode

[root@hadoop02 ~]# hadoop-daemon.sh start journalnode

[root@hadoop03 ~]# hadoop-daemon.sh start journalnode

Notes:

- The JournalNodes must be running before the NameNode is formatted;

- After starting each node, use jps to check that a new process named JournalNode has appeared.
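The jps checks above can be made scriptable. This hypothetical check_daemons reads jps output on standard input and reports any expected process name that is missing.

```bash
# Hypothetical helper: read `jps` output on stdin and report missing daemons.
# Succeeds only if every process name given as an argument is present.
check_daemons() {
  local names name missing=0
  # jps prints "<pid> <MainClass>"; keep only the class names.
  names=$(awk '{print $2}')
  for name in "$@"; do
    if ! grep -qx "$name" <<<"$names"; then
      echo "missing: $name"
      missing=1
    fi
  done
  return "$missing"
}
```

For example, once ZooKeeper and the JournalNode are both up on a node: `jps | check_daemons QuorumPeerMain JournalNode`.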

Step 9: Format the HDFS file system

Note: perform this step on hadoop01.

[root@hadoop01 ~]# hdfs namenode -format

If it succeeds, the end of the log shows a success message like this:

INFO common.Storage: Storage directory /opt/hadoop-2.7.3/hadoop-tmp/dfs/name has been successfully formatted.

Step 10: Start the NameNode on hadoop01

[root@hadoop01 ~]# hadoop-daemon.sh start namenode

starting namenode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-namenode-hadoop01.out

Step 11: Sync and start the NameNode on hadoop02

Copy the HDFS metadata from the active NameNode to the standby NameNode:

[root@hadoop02 ~]# hdfs namenode -bootstrapStandby

Start the NameNode:

[root@hadoop02 ~]# hadoop-daemon.sh start namenode

starting namenode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-namenode-hadoop02.out

Step 12: Start the DataNode on each of the three machines in turn

Start the DataNode on hadoop01

[root@hadoop01 ~]# hadoop-daemon.sh start datanode

starting datanode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-datanode-hadoop01.out

Start the DataNode on hadoop02

[root@hadoop02 ~]# hadoop-daemon.sh start datanode

starting datanode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-datanode-hadoop02.out

Start the DataNode on hadoop03

[root@hadoop03 ~]# hadoop-daemon.sh start datanode

starting datanode, logging to /opt/hadoop-2.7.3/logs/hadoop-root-datanode-hadoop03.out

Step 13: Start ZKFC

Operations on hadoop01

First, format the HA state in ZooKeeper:

[root@hadoop01 ~]# hdfs zkfc -formatZK

Then start zkfc:

[root@hadoop01 ~]# hadoop-daemon.sh start zkfc

starting zkfc, logging to /opt/hadoop-2.7.3/logs/hadoop-root-zkfc-hadoop01.out

Operations on hadoop02

Start zkfc:

[root@hadoop02 ~]# hadoop-daemon.sh start zkfc

starting zkfc, logging to /opt/hadoop-2.7.3/logs/hadoop-root-zkfc-hadoop02.out

Step 14: Check the state of the HDFS cluster

[root@hadoop01 ~]# hdfs haadmin -getServiceState nn1

Output: active

[root@hadoop01 ~]# hdfs haadmin -getServiceState nn2

Output: standby

Check the ZooKeeper status

Run zkServer.sh status on each node: across the three nodes there should be one leader and two followers.
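Rather than reading the two NameNode states by eye, a small sketch can require that exactly one service ID reports active. require_one_active is a hypothetical helper; it only wraps the `-getServiceState` subcommand used above.

```bash
# Hypothetical helper: query each HA service ID and succeed only if exactly
# one reports "active". Everything before "--" is the admin command to run.
require_one_active() {
  local cmd=() id state active=0
  while [ "$#" -gt 0 ] && [ "$1" != "--" ]; do
    cmd+=("$1")
    shift
  done
  [ "$#" -gt 0 ] && shift   # drop the "--" separator
  for id in "$@"; do
    state=$("${cmd[@]}" -getServiceState "$id" 2>/dev/null)
    echo "${id}: ${state:-unreachable}"
    if [ "$state" = "active" ]; then
      active=$((active + 1))
    fi
  done
  [ "$active" -eq 1 ]
}
```

`require_one_active hdfs haadmin -- nn1 nn2` checks HDFS; since `yarn rmadmin` accepts the same `-getServiceState` option, `require_one_active yarn rmadmin -- rm1 rm2` should work for YARN too.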

Step 15: Start YARN

Start YARN on hadoop02:

[root@hadoop02 ~]# start-yarn.sh

starting yarn daemons

starting resourcemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-resourcemanager-hadoop02.out

hadoop02: starting nodemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-nodemanager-hadoop02.out

hadoop01: starting nodemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-nodemanager-hadoop01.out

hadoop03: starting nodemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-nodemanager-hadoop03.out

Start the second ResourceManager on hadoop03 (start-yarn.sh only starts the ResourceManager on the node where it runs):

[root@hadoop03 ~]# yarn-daemon.sh start resourcemanager

starting resourcemanager, logging to /opt/hadoop-2.7.3/logs/yarn-root-resourcemanager-hadoop03.out

Step 16: Check the state of the YARN cluster

[root@hadoop02 ~]# yarn rmadmin -getServiceState rm1

active

[root@hadoop02 ~]# yarn rmadmin -getServiceState rm2

standby

Step 17: Start the history server

[root@hadoop01 dfs]# mr-jobhistory-daemon.sh start historyserver

starting historyserver, logging to /opt/hadoop-2.7.3/logs/mapred-root-historyserver-hadoop01.out

Step 18: View the web UIs of the Hadoop cluster

NameNode web UI: http://192.168.159.200:50070

ResourceManager web UI: http://192.168.159.201:8088/

Job History Server web UI: http://192.168.159.200:19888/jobhistory

Step 19: Create an HDFS directory for running MapReduce jobs

[root@hadoop01 ~]# hdfs dfs -mkdir -p /user/root

Note: root here is the current user; if you run as another user, replace it with that user name.

Step 20: Copy the input files into HDFS

[root@hadoop01 ~]# cd /opt/hadoop-2.7.3/

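[root@hadoop01 hadoop-2.7.3]# hdfs dfs -put etc/hadoop input

This example copies the files under etc/hadoop into HDFS. The relative path input resolves to /user/root/input, under the home directory created in the previous step.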

Check the copied files:

[root@hadoop01 hadoop-2.7.3]# hdfs dfs -ls input

The equivalent command bin/hadoop fs -ls input also works.

Step 21: Run the grep example bundled with Hadoop

[root@hadoop01 ~]# cd /opt/hadoop-2.7.3/

[root@hadoop01 hadoop-2.7.3]# bin/hadoop jar share/hadoop/mapreduce/hadoop-mapreduce-examples-2.7.3.jar grep input output 'dfs[a-z.]+'

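Notes:

- This example counts how many times strings matching a regular expression occur across all files in a directory; here the expression is 'dfs[a-z.]+';

- The following warning may appear during the run and can be ignored: "18/05/14 00:03:54 WARN io.ReadaheadPool: Failed readahead on ifile EBADF: Bad file descriptor"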

Process changes on each node

New processes on hadoop01: YarnChild, MRAppMaster and RunJar

hadoop02 and hadoop03 each gain several new YarnChild processes.

Step 22: Copy the results from HDFS to the local file system

[root@hadoop01 hadoop-2.7.3]# hdfs dfs -get output output

[root@hadoop01 hadoop-2.7.3]# cat output/*

6 dfs.audit.logger

4 dfs.class

3 dfs.server.namenode.

2 dfs.period

2 dfs.audit.log.maxfilesize

2 dfs.audit.log.maxbackupindex

1 dfsmetrics.log

1 dfsadmin

1 dfs.servers

1 dfs.replication

1 dfs.file

Or view the results directly in HDFS:

[root@hadoop01 hadoop-2.7.3]# hdfs dfs -cat output/*

Step 23: Verify the results

Count the occurrences of "dfs.class" with a Linux command: the result is 4, which matches the MapReduce count.

[root@hadoop01 hadoop-2.7.3]# grep -r 'dfs.class' etc/hadoop/

etc/hadoop/hadoop-metrics.properties:dfs.class=org.apache.hadoop.metrics.spi.NullContext

etc/hadoop/hadoop-metrics.properties:#dfs.class=org.apache.hadoop.metrics.file.FileContext

etc/hadoop/hadoop-metrics.properties:# dfs.class=org.apache.hadoop.metrics.ganglia.GangliaContext

etc/hadoop/hadoop-metrics.properties:# dfs.class=org.apache.hadoop.metrics.ganglia.GangliaContext31
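The same cross-check can be run for every key at once. The MapReduce grep example counts each regex match, so `grep -o` (one output line per match) followed by `uniq -c` should give matching numbers. This is a sketch with a hypothetical local_grep_count; it assumes GNU grep, and counts can differ if etc/hadoop changed after it was copied into HDFS.

```bash
# Hypothetical helper: count every match of an extended regex under a local
# directory, printed as "<count><TAB><match>", most frequent first.
local_grep_count() {
  local dir="$1" regex="$2"
  # -r recurse, -h omit file names, -o print each match on its own line, -E extended regex
  grep -rhoE "$regex" "$dir" | sort | uniq -c | sort -k1,1nr -k2,2 | awk '{print $1 "\t" $2}'
}
```

Run `local_grep_count /opt/hadoop-2.7.3/etc/hadoop 'dfs[a-z.]+'` and compare it with `hdfs dfs -cat output/*`.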

Step 24: HDFS HA failover test

- First, find out which machine hosts the active NameNode:

[root@hadoop01 ~]# hdfs haadmin -getServiceState nn1

active

[root@hadoop01 ~]# hdfs haadmin -getServiceState nn2

standby

The output shows that the active NameNode is on hadoop01.

- Use jps to find the process ID of the active NameNode:

[root@hadoop01 ~]# jps

1963 NameNode

- Kill that process

[root@hadoop01 ~]# kill -9 1963

- Check the state of nn2

[root@hadoop01 ~]# hdfs haadmin -getServiceState nn2

active

- Start nn1 again and check both states

[root@hadoop01 ~]# hadoop-daemon.sh start namenode

[root@hadoop01 ~]# hdfs haadmin -getServiceState nn1

standby

[root@hadoop01 ~]# hdfs haadmin -getServiceState nn2

active
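Automatic failover takes a few seconds after the kill, so checking nn2 immediately may still show standby. A minimal polling sketch; wait_for_state is a hypothetical helper and the timeouts in the usage lines are guesses, not documented values.

```bash
# Hypothetical helper: poll a command until it prints the wanted state.
# Usage: wait_for_state STATE TIMEOUT_SECONDS COMMAND [ARGS...]
wait_for_state() {
  local want="$1" timeout="$2" waited=0 state
  shift 2
  while [ "$waited" -lt "$timeout" ]; do
    state=$("$@" 2>/dev/null)
    if [ "$state" = "$want" ]; then
      echo "reached ${want} after ${waited}s"
      return 0
    fi
    sleep 1
    waited=$((waited + 1))
  done
  echo "still '${state}' after ${timeout}s" >&2
  return 1
}
```

After the `kill -9`, run `wait_for_state active 30 hdfs haadmin -getServiceState nn2`; the YARN test can use `wait_for_state active 60 yarn rmadmin -getServiceState rm2` the same way.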

Step 25: YARN HA failover test

- First, find out which machine hosts the active ResourceManager:

[root@hadoop02 ~]# yarn rmadmin -getServiceState rm1

active

[root@hadoop02 ~]# yarn rmadmin -getServiceState rm2

standby

The output shows that the active ResourceManager is on hadoop02.

- Use jps to find the process ID of the active ResourceManager:

[root@hadoop02 ~]# jps

2829 ResourceManager

- Kill that process (on hadoop02, where it runs)

[root@hadoop02 ~]# kill -9 2829

- Check the state of rm2

[root@hadoop02 ~]# yarn rmadmin -getServiceState rm2

active

- Start rm1 again and check both states

[root@hadoop02 ~]# yarn-daemon.sh start resourcemanager

[root@hadoop02 ~]# yarn rmadmin -getServiceState rm1

standby

[root@hadoop02 ~]# yarn rmadmin -getServiceState rm2

active

Step 26: Stop the cluster

- Stop the history server

[root@hadoop01 ~]# mr-jobhistory-daemon.sh stop historyserver

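- Stop YARN (run on hadoop02: stop-yarn.sh stops the ResourceManager on the local node plus all NodeManagers, so the second ResourceManager on hadoop03 is stopped separately)

[root@hadoop02 hadoop-2.7.3]# stop-yarn.sh

[root@hadoop03 ~]# yarn-daemon.sh stop resourcemanager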

- Stop HDFS

[root@hadoop01 hadoop-2.7.3]# stop-dfs.sh

Step 27: How to start the HA cluster again from a fully stopped state

Start ZooKeeper on each of the three machines in turn:

[root@hadoop01 ~]# zkServer.sh start

[root@hadoop02 ~]# zkServer.sh start

[root@hadoop03 ~]# zkServer.sh start

[root@hadoop01 ~]# start-dfs.sh

start-dfs.sh starts, in order, both NameNodes, the three DataNodes, the three JournalNodes and both ZKFCs.

[root@hadoop01 ~]# mr-jobhistory-daemon.sh start historyserver

Note: run this on hadoop02.

[root@hadoop02 ~]# start-yarn.sh

start-yarn.sh starts one ResourceManager (on the local node) and three NodeManagers.

Note: run this on hadoop03.

[root@hadoop03 ~]# yarn-daemon.sh start resourcemanager
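The cold-start order above can be driven from one shell over SSH. This is a sketch with hypothetical run_on and start_cluster helpers. It assumes passwordless root SSH from the machine running it to all three nodes, and it sources /etc/profile remotely because a non-interactive SSH command usually does not read it, so the Hadoop and ZooKeeper scripts would not be on PATH.

```bash
# Hypothetical helper: run one command on a host over SSH.
# /etc/profile is sourced first because a non-interactive SSH command usually
# does not read it, so HADOOP_HOME and the PATH additions would be missing.
run_on() {
  ssh "$1" "source /etc/profile && $2"
}

# Cold-start order from the steps above; stops at the first failing command.
start_cluster() {
  local host
  for host in hadoop01 hadoop02 hadoop03; do
    run_on "$host" "zkServer.sh start" || return 1
  done
  run_on hadoop01 "start-dfs.sh" || return 1
  run_on hadoop01 "mr-jobhistory-daemon.sh start historyserver" || return 1
  run_on hadoop02 "start-yarn.sh" || return 1
  run_on hadoop03 "yarn-daemon.sh start resourcemanager"
}
```

Running `start_cluster` on hadoop01 reproduces the manual sequence. ZooKeeper may need a few seconds to elect a leader before ZKFC connects, so a short pause after the first loop is a reasonable precaution.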