[Image Segmentation Model Coding] Multi-class Segmentation with U-Net in Keras

1. Preface

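U-Net (paper link: unet) was originally used for medical image segmentation. However, the training set back then consisted of grayscale images and involved only a binary classification problem. I had been trying for a while to use U-Net for multi-class segmentation of three-channel RGB color images, and after some trial and error I finally got it working. [CPU implementation]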

2. Code implementation

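The dataset is CamVid. All images are 360*480, with 367 training images, 101 validation images and 233 test images, 701 images in total. The deep learning framework is TensorFlow + Keras. Data source: https://github.com/preddy5/segnet/tree/master/CamVid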
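The images are 360*480, yet the code resizes everything to 512×512 (target_size in datapre and IMAGE_SIZE in model.py). One reason the native size would not work as-is: the U-Net in section 2.3 halves the feature map four times with 2×2 max pooling and later doubles it four times before each concatenate, so height and width must be divisible by 2^4 = 16 for the skip connections to line up. A small check, with hypothetical helper names:

```python
def encoder_sizes(size: int, depth: int = 4):
    """Spatial size after each 2x2 max pool (Keras MaxPool2D floors odd sizes)."""
    sizes = [size]
    for _ in range(depth):
        size //= 2
        sizes.append(size)
    return sizes

def skips_align(size: int, depth: int = 4) -> bool:
    """True if doubling back up from the bottleneck reproduces every encoder size,
    i.e. every concatenate() between encoder and decoder sees matching shapes."""
    sizes = encoder_sizes(size, depth)
    return all(sizes[i + 1] * 2 == sizes[i] for i in range(depth))

print(encoder_sizes(360))                                    # [360, 180, 90, 45, 22]
print(skips_align(360), skips_align(480), skips_align(512))  # False True True
```

With 360 the fourth pooling floors 45 to 22, which upsamples back to 44 and cannot be concatenated with the 45-pixel encoder map; 480 and 512 both divide cleanly.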

Let's walk through the project's implementation step by step, starting from main2.py.

2.1 The main script

```python
# -*- coding:utf-8 -*-
# Author : Ray
# Date : 2019/7/25 2:15 PM
from datapre2 import *
from model import *
from keras.callbacks import ModelCheckpoint, TensorBoard
import warnings

warnings.filterwarnings('ignore')  # ignore warning messages
# os.environ['CUDA_VISIBLE_DEVICES'] = '0'  # select the GPU

# Test set 233 images, training set 367, validation set 101: 233+367+101=701 in total;
# image size 360*480, 13 semantic classes
aug_args = dict(  # ImageDataGenerator augmentation parameters
    rotation_range = 0.2,  # in degrees, i.e. rotations of at most ±0.2°
    width_shift_range = 0.05,
    height_shift_range = 0.05,
    shear_range = 0.05,
    zoom_range = 0.05,
    horizontal_flip = True,
    vertical_flip = True,
    fill_mode = 'nearest'
)

train_gene = trainGenerator(batch_size=2,aug_dict=aug_args,train_path='CamVid/',
                            image_folder='train',label_folder='trainannot',
                            image_color_mode='rgb',label_color_mode='rgb',
                            image_save_prefix='image',label_save_prefix='label',
                            flag_multi_class=True,save_to_dir=None
                            )
val_gene = valGenerator(batch_size=2,aug_dict=aug_args,val_path='CamVid/',
                        image_folder='val',label_folder='valannot',
                        image_color_mode='rgb',label_color_mode='rgb',
                        image_save_prefix='image',label_save_prefix='label',
                        flag_multi_class=True,save_to_dir=None
                        )

tensorboard = TensorBoard(log_dir='./log')  # training logs for TensorBoard
model = unet(num_class=13)
# save the model only when val_loss improves
model_checkpoint = ModelCheckpoint('camvid.hdf5',monitor='val_loss',verbose=1,save_best_only=True)
history = model.fit_generator(train_gene,
                              steps_per_epoch=100,
                              epochs=20,
                              verbose=1,
                              callbacks=[model_checkpoint,tensorboard],
                              validation_data=val_gene,
                              validation_steps=50  # number of validation images / batch_size
                              )

# model.load_weights('camvid.hdf5')
test_gene = testGenerator(test_path='CamVid/test')
results = model.predict_generator(test_gene,233,verbose=1)  # 233 test images, one per step
saveResult('CamVid/testpred/',results)
```

As the code above shows, the overall approach of the project is straightforward. In short:

- Data preparation

- Model training

- Prediction

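First, trainGenerator and valGenerator prepare the training and validation data; tensorboard = TensorBoard(log_dir='./log') writes log files so that the changes in loss and acc during training can be inspected; and ModelCheckpoint saves the model (with save_best_only=True, only when val_loss improves). Training feeds the data in through fit_generator. Finally, the test data is prepared before calling predict_generator, and saveResult saves the predictions.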
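As a rough check on the step counts passed to fit_generator: one full pass over a split takes ceil(n / batch_size) batches. A small sketch (the helper name steps_for is hypothetical):

```python
import math

def steps_for(num_images: int, batch_size: int) -> int:
    """Batches needed to see every image once (the last batch may be smaller)."""
    return math.ceil(num_images / batch_size)

# CamVid split sizes quoted in this post, batch_size=2 as in main2.py
print(steps_for(367, 2))  # 184 (training)
print(steps_for(101, 2))  # 51 (validation)
print(101 // 2)           # 50, the value used for validation_steps
```

So validation_steps=50 corresponds to 101 // 2 and evaluates roughly 100 of the 101 validation images per pass, while steps_per_epoch=100 draws about 200 of the 367 training images per Keras "epoch". Because flow_from_directory loops indefinitely, this mainly changes how often validation and checkpointing run.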

2.2 datapre.py

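Because of hardware limitations, the preprocessing turns the data into generators that load one batch at a time, which keeps the computer from running out of memory (MemoryError) and from other errors.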
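A back-of-the-envelope estimate shows why loading everything at once is risky. Assuming Keras's default float32 for the images and the float64 that np.zeros produces for the one-hot labels in adjustData, holding all 367 training images at 512×512 with 13 classes in memory would need:

```python
def array_bytes(*shape, itemsize):
    """Size in bytes of a dense array with the given shape and element size."""
    n = 1
    for dim in shape:
        n *= dim
    return n * itemsize

GiB = 1024 ** 3
N, H, W, C, K = 367, 512, 512, 3, 13  # training images, height, width, channels, classes (this post)

images = array_bytes(N, H, W, C, itemsize=4)        # float32 images
labels = array_bytes(N, H, W, K, itemsize=8)        # float64 one-hot labels
batch_labels = array_bytes(2, H, W, K, itemsize=8)  # one batch of 2, as in main2.py

print(f"images: {images / GiB:.2f} GiB")                         # images: 1.08 GiB
print(f"one-hot labels: {labels / GiB:.2f} GiB")                 # one-hot labels: 9.32 GiB
print(f"one batch of labels: {batch_labels / 1024**2:.0f} MiB")  # one batch of labels: 52 MiB
```

A generator only ever holds the current batch, a few tens of MiB instead of roughly 10 GiB.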

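This work is done in datapre.py, which has two jobs: first, data preparation, covering the training, validation and test sets; second, saving results, i.e. rendering the predictions as images.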

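Data processing relies mainly on Keras's image preprocessing class ImageDataGenerator (from keras.preprocessing.image import ImageDataGenerator) and its flow_from_directory method. The code passes many parameters. Some of them are not strictly necessary, but I kept them for completeness.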

For the meaning of each parameter, see the following link:

Keras Documentation

Readers who prefer Chinese can refer to the Chinese Keras documentation (keras中文文档).

Both trainGenerator and valGenerator yield batches of batch_size original images together with their annotation images.

- Data preparation

```python
# imports used by the datapre functions in this section
import os
import numpy as np
import skimage.io as io
import skimage.transform as trans
from keras.preprocessing.image import ImageDataGenerator

def trainGenerator(batch_size,aug_dict,train_path,image_folder,label_folder,image_color_mode='rgb',
                   label_color_mode='rgb',image_save_prefix='image',label_save_prefix='label',
                   flag_multi_class=True,num_class=13,save_to_dir=None,target_size=(512,512),seed=1):
    # Same augmentation settings and the same seed for images and labels,
    # so each image and its label receive the same random transform
    image_datagen = ImageDataGenerator(**aug_dict)
    label_datagen = ImageDataGenerator(**aug_dict)
    image_generator = image_datagen.flow_from_directory(
        train_path,
        classes=[image_folder],  # read only this sub-folder
        class_mode=None,         # yield images only, no classification labels
        color_mode=image_color_mode,
        target_size = target_size,
        batch_size = batch_size,
        save_to_dir = save_to_dir,
        save_prefix = image_save_prefix,
        seed = seed
    )
    label_generator = label_datagen.flow_from_directory(
        train_path,
        classes = [label_folder],
        class_mode = None,
        color_mode = label_color_mode,
        target_size = target_size,
        batch_size = batch_size,
        save_to_dir = save_to_dir,
        save_prefix = label_save_prefix,
        seed = seed
    )
    train_generator = zip(image_generator,label_generator)
    for img,label in train_generator:
        img,label = adjustData(img,label,flag_multi_class,num_class)
        # print('------2-------',img.shape,label.shape)#(2, 512, 512, 3) (2, 512, 0, 3, 13)
        yield img,label
```
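trainGenerator keeps each image aligned with its label by giving both flow_from_directory calls the same seed, so the two ImageDataGenerator instances draw the same random transforms in the same order (this presumably also relies on the image and label files sorting into the same order, e.g. by sharing file names). The idea can be illustrated with plain NumPy. random_flip_batch below is a hypothetical stand-in, not Keras's augmentation code:

```python
import numpy as np

def random_flip_batch(arrays, seed):
    """Hypothetical stand-in for a seeded augmenter: flip each array
    left-right with probability 0.5, drawing decisions from a seeded RNG."""
    rng = np.random.RandomState(seed)
    return [a[:, ::-1] if rng.rand() < 0.5 else a for a in arrays]

image = np.arange(12).reshape(3, 4)       # toy 3x4 "image"
mask = (image % 4 == 0).astype(np.uint8)  # toy "label": marks the pixels 0, 4, 8

images = random_flip_batch([image] * 6, seed=1)
masks = random_flip_batch([mask] * 6, seed=1)  # same seed as the images

# Same seed -> same flip decisions -> every mask still marks the same pixels as its image
aligned = all(np.array_equal(m, (im % 4 == 0).astype(np.uint8)) for im, m in zip(images, masks))
print(aligned)  # True
```

With different seeds the flip decisions would diverge and some masks would no longer match their images.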

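Note that there is another function, adjustData. It scales the image to [0, 1] and converts the label to shape (batch_size, height, width, num_class) by one-hot encoding the class index along the fourth axis. The code is below (the commented-out lines are from my debugging and can be ignored):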

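```python
def adjustData(img,label,flag_multi_class,num_class):
    if (flag_multi_class):
        img = img/255.
        # the annotation images are grayscale class-index maps; loaded as RGB,
        # every channel holds the same index, so keep only channel 0
        label = label[:,:,:,0] if (len(label.shape)==4) else label[:,:,0]
        # print('-----1-----', img.shape, label.shape)
        new_label = np.zeros(label.shape+(num_class,))
        # print('new_label.shape',new_label.shape)
        for i in range(num_class):
            new_label[label==i,i] = 1  # one-hot: channel i is 1 where the pixel is class i
        label = new_label
    elif (np.max(img)>1):
        # binary case: scale to [0, 1] and threshold the label at 0.5
        img = img/255.
        label = label/255.
        label[label>0.5] = 1
        label[label<=0.5] = 0
    return (img,label)
```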
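The loop over range(num_class) in adjustData can also be written as a single NumPy indexing operation: row i of an identity matrix is exactly the one-hot vector for class i. A sketch (one_hot_labels is a hypothetical name):

```python
import numpy as np

def one_hot_labels(label, num_class):
    """Vectorized alternative to the loop in adjustData:
    (batch, H, W) integer class map -> (batch, H, W, num_class) one-hot."""
    label = label.astype(np.int64)
    return np.eye(num_class, dtype=np.float32)[label]

# A 1x2x3 label map with class indices 0..2
label = np.array([[[0, 1, 2],
                   [2, 2, 0]]])
encoded = one_hot_labels(label, num_class=3)
print(encoded.shape)     # (1, 2, 3, 3)
print(encoded[0, 0, 1])  # [0. 1. 0.]
```

Unlike the loop, this raises IndexError for a label value >= num_class and truncates non-integer values instead of leaving an all-zero vector, so it assumes clean integer labels.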

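The test set is handled somewhat differently from trainGenerator and valGenerator. For the training and validation sets, Keras library functions did the work for us, resizing to target_size and combining image and label into a generator, so it is easy to lose sight of the fact that the model's input_size is (512,512,3). For the test set we have to do this ourselves: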

```python
def testGenerator(test_path,target_size=(512,512),flag_multi_class=True,as_gray=False):
    # sorted so that prediction i always corresponds to the same file
    filenames = sorted(os.listdir(test_path))
    for filename in filenames:
        img = io.imread(os.path.join(test_path,filename),as_gray=as_gray)
        # img = img/255.  # not needed: trans.resize already returns floats in [0, 1]
        img = trans.resize(img,target_size,mode = 'constant')
        img = np.reshape(img,img.shape+(1,)) if (not flag_multi_class) else img
        img = np.reshape(img,(1,)+img.shape)  # add the batch axis: (1, 512, 512, 3)
        yield img
```
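testGenerator yields one image per step with a leading batch axis of 1, which is why predict_generator is called with 233 steps. The commented-out img/255. can stay disabled: skimage.transform.resize, with its default preserve_range=False, already converts a uint8 image to floats in [0, 1], matching the img/255. scaling in adjustData. A NumPy-only sketch of the shape handling (random arrays stand in for resized test images):

```python
import numpy as np

# Stand-in for an RGB test image after resizing to 512x512 (values in [0, 1])
img = np.random.rand(512, 512, 3)

# Same as np.reshape(img, (1,) + img.shape) in testGenerator: add the batch axis
batch = np.expand_dims(img, axis=0)
print(batch.shape)  # (1, 512, 512, 3)

# Grayscale path (flag_multi_class=False): add a channel axis, then the batch axis
gray = np.random.rand(512, 512)
gray_batch = np.expand_dims(np.expand_dims(gray, axis=-1), axis=0)
print(gray_batch.shape)  # (1, 512, 512, 1)
```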

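The last part saves the predictions by turning them into images. Because the labels fed to the model were one-hot encoded along the fourth axis, the model outputs one probability per class at every pixel, so the result cannot be saved as an image directly: each pixel's class is recovered with argmax and mapped to a color. The code is as follows: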

```python
def saveResult(save_path,npyfile,flag_multi_class=True):
    for i,item in enumerate(npyfile):
        if flag_multi_class:
            img = item  # (512, 512, num_class) class probabilities
            img_out = np.zeros(img[:, :, 0].shape + (3,))
            for row in range(img.shape[0]):
                for col in range(img.shape[1]):
                    # most probable class at this pixel, mapped to its color;
                    # COLOR_DICT (per-class RGB colors) is not shown in this post
                    index_of_class = np.argmax(img[row, col])
                    img_out[row, col] = COLOR_DICT[index_of_class]
            img = img_out.astype(np.uint8)
            io.imsave(os.path.join(save_path, '%s_predict.png' % i), img)
        else:
            img = item[:, :, 0]
            img[img > 0.5] = 1
            img[img <= 0.5] = 0
            img = (img * 255).astype(np.uint8)  # 0/255 mask saved as an 8-bit image
            io.imsave(os.path.join(save_path, '%s_predict.png' % i), img)
```
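The double loop in saveResult visits 512×512 pixels in Python. The same argmax-then-color lookup can be done in one vectorized step. COLOR_DICT is not shown in the post, so this sketch assumes it can be treated as a NumPy array of shape (num_class, 3); the 3-class PALETTE and the colorize helper below are made up for illustration:

```python
import numpy as np

# Hypothetical palette: one RGB color per class (the post's COLOR_DICT is not shown)
PALETTE = np.array([[128, 128, 128],  # class 0
                    [128, 0, 0],      # class 1
                    [0, 128, 0]],     # class 2
                   dtype=np.uint8)

def colorize(pred, palette):
    """(H, W, num_class) softmax output -> (H, W, 3) uint8 color image."""
    class_map = np.argmax(pred, axis=-1)  # (H, W) most probable class per pixel
    return palette[class_map]             # fancy indexing picks one color per pixel

pred = np.array([[[0.7, 0.2, 0.1], [0.1, 0.8, 0.1]],
                 [[0.2, 0.2, 0.6], [0.5, 0.3, 0.2]]])
out = colorize(pred, PALETTE)
print(out.shape)           # (2, 2, 3)
print(out[1, 0].tolist())  # [0, 128, 0]
```

Under that assumption, img = colorize(item, np.asarray(COLOR_DICT)) would replace the nested loop inside saveResult.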

2.3 model.py

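This is the U-Net model itself; Keras makes building the model simple and intuitive. The U-Net implemented here handles both binary and multi-class segmentation. I also made a small tweak: every convolution is created with activation=None and is followed by a separate LeakyReLU(alpha=0.3) layer instead of the usual ReLU.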
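LeakyReLU with alpha=0.3 passes positive inputs unchanged and multiplies negative inputs by 0.3 instead of zeroing them as ReLU does, so units with negative pre-activations still receive a gradient. A NumPy sketch of the element-wise function (not Keras's own implementation):

```python
import numpy as np

def leaky_relu(x, alpha=0.3):
    """f(x) = x for x > 0, alpha * x otherwise (element-wise)."""
    return np.where(x > 0, x, alpha * x)

x = np.array([-2.0, -0.5, 0.0, 1.5])
print(leaky_relu(x))
```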

```python
# -*- coding:utf-8 -*-
# Author : Ray
# Date : 2019/7/23 4:53 PM
from keras.models import *
from keras.layers import *
from keras.optimizers import *

IMAGE_SIZE = 512

def unet(pretrained_weights = None, input_size = (IMAGE_SIZE,IMAGE_SIZE,3),num_class=2):
    inputs = Input(input_size)
    # Encoder: two 3x3 conv + LeakyReLU per level, then 2x2 max pooling
    conv1 = Conv2D(64,3,activation=None,padding='same',kernel_initializer='he_normal')(inputs)
    conv1 = LeakyReLU(alpha=0.3)(conv1)
    conv1 = Conv2D(64,3,activation=None,padding='same',kernel_initializer='he_normal')(conv1)
    conv1 = LeakyReLU(alpha=0.3)(conv1)
    pool1 = MaxPool2D(pool_size=(2,2))(conv1)
    conv2 = Conv2D(128,3,activation=None,padding='same',kernel_initializer='he_normal')(pool1)
    conv2 = LeakyReLU(alpha=0.3)(conv2)
    conv2 = Conv2D(128,3,activation=None,padding='same',kernel_initializer='he_normal')(conv2)
    conv2 = LeakyReLU(alpha=0.3)(conv2)
    pool2 = MaxPool2D(pool_size=(2,2))(conv2)
    conv3 = Conv2D(256,3,activation=None,padding='same',kernel_initializer='he_normal')(pool2)
    conv3 = LeakyReLU(alpha=0.3)(conv3)
    conv3 = Conv2D(256,3,activation=None,padding='same',kernel_initializer='he_normal')(conv3)
    conv3 = LeakyReLU(alpha=0.3)(conv3)
    pool3 = MaxPool2D(pool_size=(2,2))(conv3)
    conv4 = Conv2D(512,3,activation=None,padding='same',kernel_initializer='he_normal')(pool3)
    conv4 = LeakyReLU(alpha=0.3)(conv4)
    conv4 = Conv2D(512,3,activation=None,padding='same',kernel_initializer='he_normal')(conv4)
    conv4 = LeakyReLU(alpha=0.3)(conv4)
    drop4 = Dropout(0.5)(conv4)
    pool4 = MaxPool2D(pool_size=(2,2))(drop4)
    # Bottleneck
    conv5 = Conv2D(1024,3,activation=None,padding='same',kernel_initializer='he_normal')(pool4)
    conv5 = LeakyReLU(alpha=0.3)(conv5)
    conv5 = Conv2D(1024,3,activation=None,padding='same',kernel_initializer='he_normal')(conv5)
    conv5 = LeakyReLU(alpha=0.3)(conv5)
    drop5 = Dropout(0.5)(conv5)
    # Decoder: upsample x2, conv, concatenate with the matching encoder map (skip connection)
    up6 = Conv2D(512,2,activation=None,padding='same',kernel_initializer='he_normal')(UpSampling2D(size=(2,2))(drop5))
    up6 = LeakyReLU(alpha=0.3)(up6)
    merge6 = concatenate([drop4,up6],axis=3)
    conv6 = Conv2D(512,3,activation=None,padding='same',kernel_initializer='he_normal')(merge6)
    conv6 = LeakyReLU(alpha=0.3)(conv6)
    conv6 = Conv2D(512,3,activation=None,padding='same',kernel_initializer='he_normal')(conv6)
    conv6 = LeakyReLU(alpha=0.3)(conv6)
    up7 = Conv2D(256,3,activation=None,padding='same',kernel_initializer='he_normal')(UpSampling2D(size=(2,2))(conv6))
    up7 = LeakyReLU(alpha=0.3)(up7)
    merge7 = concatenate([conv3,up7],axis=3)
    conv7 = Conv2D(256,3,activation=None,padding='same',kernel_initializer='he_normal')(merge7)
    conv7 = LeakyReLU(alpha=0.3)(conv7)
    conv7 = Conv2D(256,3,activation=None,padding='same',kernel_initializer='he_normal')(conv7)
    conv7 = LeakyReLU(alpha=0.3)(conv7)
    up8 = Conv2D(128,3,activation=None,padding='same',kernel_initializer='he_normal')(UpSampling2D(size=(2,2))(conv7))
    up8 = LeakyReLU(alpha=0.3)(up8)
    merge8 = concatenate([conv2,up8],axis=3)
    conv8 = Conv2D(128,3,activation=None,padding='same',kernel_initializer='he_normal')(merge8)
    conv8 = LeakyReLU(alpha=0.3)(conv8)
    conv8 = Conv2D(128,3,activation=None,padding='same',kernel_initializer='he_normal')(conv8)
    conv8 = LeakyReLU(alpha=0.3)(conv8)
    up9 = Conv2D(64,3,activation=None,padding='same',kernel_initializer='he_normal')(UpSampling2D(size=(2,2))(conv8))
    up9 = LeakyReLU(alpha=0.3)(up9)
    merge9 = concatenate([conv1,up9],axis=3)
    conv9 = Conv2D(64,3,activation=None,padding='same',kernel_initializer='he_normal')(merge9)
    conv9 = LeakyReLU(alpha=0.3)(conv9)
    conv9 = Conv2D(64,3,activation=None,padding='same',kernel_initializer='he_normal')(conv9)
    conv9 = LeakyReLU(alpha=0.3)(conv9)
    # Binary or multi-class? Binary uses sigmoid, multi-class uses softmax,
    # and the loss function is chosen accordingly.
    if num_class == 2:
        conv10 = Conv2D(1,1,activation='sigmoid')(conv9)
        loss_function = 'binary_crossentropy'
    else:
        conv10 = Conv2D(num_class,1,activation='softmax')(conv9)
        loss_function = 'categorical_crossentropy'
    # conv10 = Conv2D(3,1,activation='softmax')(conv9)
    model = Model(inputs=inputs, outputs=conv10)  # Keras 2 keyword names (input/output are deprecated)
    model.compile(optimizer=Adam(lr = 1e-5),loss=loss_function,metrics=['accuracy'])
    model.summary()
    if pretrained_weights:
        model.load_weights(pretrained_weights)
    return model
```
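In the multi-class branch, the final 1×1 Conv2D with activation='softmax' turns the num_class scores at each pixel into probabilities that sum to 1, and categorical_crossentropy compares them with the one-hot labels from adjustData; Keras computes the loss per pixel and then averages it. The binary branch instead uses a single sigmoid channel with binary_crossentropy. A NumPy sketch of the multi-class computation (not Keras's own code; the clipping constant mirrors Keras's default epsilon of 1e-7):

```python
import numpy as np

def softmax(scores, axis=-1):
    """Softmax over the class axis, computed independently at every pixel."""
    z = scores - scores.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    """Mean over all pixels of -sum_c y_true[..., c] * log(y_pred[..., c])."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)               # avoid log(0)
    per_pixel = -np.sum(y_true * np.log(y_pred), axis=-1)  # shape (H, W)
    return per_pixel.mean()

scores = np.zeros((2, 2, 3))                    # toy (H, W, num_class) scores, all equal
probs = softmax(scores)                         # every pixel: [1/3, 1/3, 1/3]
y_true = np.eye(3)[np.array([[0, 1], [2, 0]])]  # one-hot ground truth, shape (2, 2, 3)
loss = categorical_crossentropy(y_true, probs)
print(round(loss, 4))  # 1.0986, i.e. ln(3)
```

With equal scores every class gets probability 1/3, so the loss is ln 3 regardless of which class is correct.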

3. Results

Note that main2.py passes callbacks=[model_checkpoint,tensorboard] to model.fit_generator(), so after training we can review how loss and acc evolved.

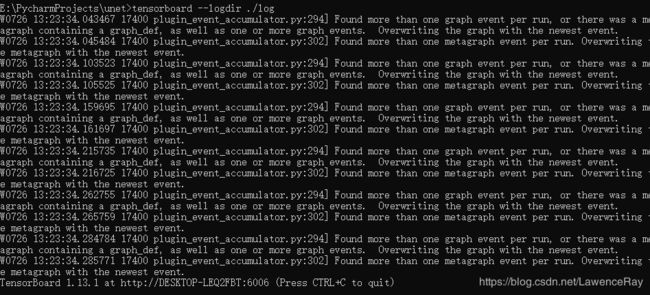

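Open a terminal, change to the project directory and run tensorboard --logdir ./log (use the log path set in your code). If output like the following appears, the path is correct.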

Then open http://localhost:6006 in a browser to view how loss and acc changed during training.

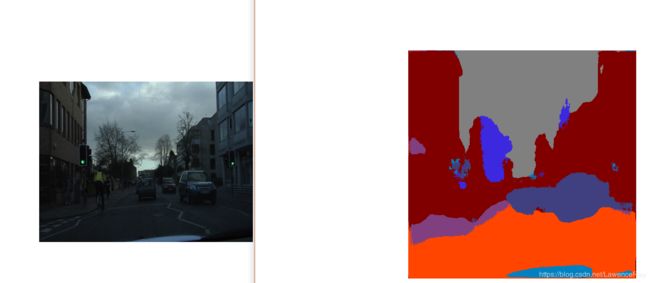

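Here is one of the final result images, picked at random. The results are not good yet and there is plenty of room for improvement; I'll keep working on it.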

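Some of the problems I ran into and some of the details are rather tedious, so I won't cover them here. If you have questions, leave a comment and I'll reply when I see it.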