高斯过程回归GPR(sklearn.gaussian_process+python实现)

1、高斯过程:

scikit-learn (sklearn) 官方文档

scikit-learn (sklearn) 官方文档中文版

scikit-learn (sklearn) 官方文档中文版(1.7. 高斯过程)

其他介绍:

A Visual Exploration of Gaussian Processes

看得见的高斯过程:这是一份直观的入门解读(上面中文翻译-机器之心)

Introduction to Gaussian Processes - Part I

从数学到实现,全面回顾高斯过程中的函数最优化(机器之心)

浅谈高斯过程回归

相关paper:

Gaussian Processes for Regression A Quick Introduction, M.Ebden, August 2008.

[RW2006] Carl Eduard Rasmussen and Christopher K.I. Williams, “Gaussian Processes for Machine Learning”, MIT Press 2006.

2、python实现:

(例1)

import numpy as np

import matplotlib.pyplot as plt

from sklearn.gaussian_process import GaussianProcessRegressor

from sklearn.gaussian_process.kernels import RBF, ConstantKernel as C

from mpl_toolkits.mplot3d import Axes3D

# 创建数据集

test = np.array([[2004, 98.31]])

data = np.array([

[2001, 100.83, 410], [2005, 90.9, 500], [2007, 130.03, 550], [2004, 78.88, 410], [2006, 74.22, 460],

[2005, 90.4, 497], [1983, 64.59, 370], [2000, 164.06, 610], [2003, 147.5, 560], [2003, 58.51, 408],

[1999, 95.11, 565], [2000, 85.57, 430], [1995, 66.44, 378], [2003, 94.27, 498], [2007, 125.1, 760],

[2006, 111.2, 730], [2008, 88.99, 430], [2005, 92.13, 506], [2008, 101.35, 405], [2000, 158.9, 615]])

# 核函数的取值

kernel = C(0.1, (0.001, 0.1)) * RBF(0.5, (1e-4, 10))

# 创建高斯过程回归,并训练

reg = GaussianProcessRegressor(kernel=kernel, n_restarts_optimizer=10, alpha=0.1)

reg.fit(data[:, :-1], data[:, -1])

# 创建一个作图用的网格的测试数据,数据位线性,x为【1982,2009】间隔位0.5;y为【57.5,165】间隔位0.5

x_min, x_max = data[:, 0].min() - 1, data[:, 0].max() + 1

y_min, y_max = data[:, 1].min() - 1, data[:, 1].max() + 1

xset, yset = np.meshgrid(np.arange(x_min, x_max, 0.5), np.arange(y_min, y_max, 0.5))

# 查看网格测试数据输出结果,并返回标准差。

output, err = reg.predict(np.c_[xset.ravel(), yset.ravel()], return_std=True)

output, err = output.reshape(xset.shape), err.reshape(xset.shape)

sigma = np.sum(reg.predict(data[:, :-1], return_std=True)[1])

up, down = output * (1 + 1.96 * err), output * (1 - 1.96 * err)

# 作图,并画出

fig = plt.figure(figsize=(10.5, 5))

ax1 = fig.add_subplot(121, projection='3d')

surf = ax1.plot_wireframe(xset, yset, output, rstride=10, cstride=2, antialiased=True)

surf_u = ax1.plot_wireframe(xset, yset, up, colors='lightgreen', linewidths=1,

rstride=10, cstride=2, antialiased=True)

surf_d = ax1.plot_wireframe(xset, yset, down, colors='lightgreen', linewidths=1,

rstride=10, cstride=2, antialiased=True)

ax1.scatter(data[:, 0], data[:, 1], data[:, 2], c='red')

ax1.set_title('House Price at (2004, 98.31): {0:.2f}$*10^4$ RMB'.format(reg.predict(test)[0]))

ax1.set_xlabel('Year')

ax1.set_ylabel('Area, $m^2$')

plt.show()

结果:

(例2)

import numpy as np

import matplotlib.pyplot as plt

from sklearn.gaussian_process.kernels import RBF, ConstantKernel as C

from sklearn.gaussian_process import GaussianProcessRegressor

# Build a model

kernel = C(1.0, (1e-3, 1e3)) * RBF(10, (0.5, 2))

gp = GaussianProcessRegressor(kernel=kernel, n_restarts_optimizer=9)

# Some data

xobs = np.array([[1], [1.5], [-3]])

yobs = np.array([3, 0, 1])

# Fit the model to the data (optimize hyper parameters)

gp.fit(xobs, yobs)

# Plot points and predictions

x_set = np.arange(-6, 6, 0.1)

x_set = np.array([[i] for i in x_set])

means, sigmas = gp.predict(x_set, return_std=True)

plt.figure(figsize=(8, 5))

plt.errorbar(x_set, means, yerr=sigmas, alpha=0.5)

plt.plot(x_set, means, 'g', linewidth=4)

colors = ['g', 'r', 'b', 'k']

for c in colors:

y_set = gp.sample_y(x_set, random_state=np.random.randint(1000))

plt.plot(x_set, y_set, c + '--', alpha=0.5)

plt.show()

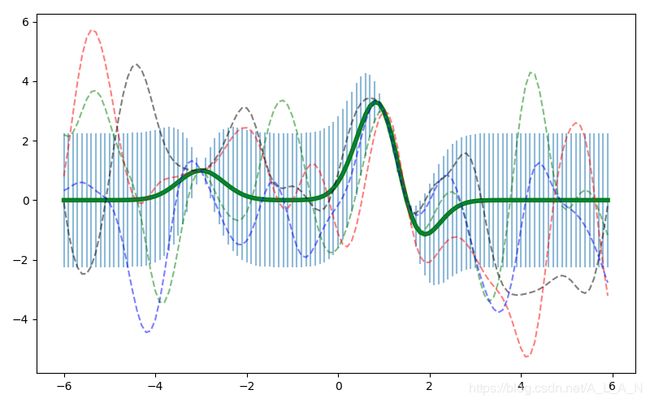

结果:

(例3)

import numpy as np

import matplotlib.pyplot as plt

from scipy.interpolate import spline

from scipy.linalg import cho_solve

from numpy.linalg import cholesky

from itertools import cycle

class SimpleGP():

""" One dimensional Gaussian Process class. Uses

squared exponential covariance form.

parameters

----------

width_scale : float, positive

Same as sigma in (4) of post

length_scale : float, positive

Same as l in (4) of post

noise : float

Added to diagonal of covariance, useful for

improving convergence

"""

def __init__(self, width_scale, length_scale, noise=10 ** (-6)):

self.width_scale = width_scale

self.length_scale = length_scale

self.noise = noise

def _exponential_cov(self, x1, x2):

"""

Return covariance matrix for two arrays,

with i-j element = cov(x_1i, x_2j).

parameters

----------

x1, x2: np.array

arrays containing x locations

"""

return (self.width_scale ** 2) * np.exp(

- np.subtract.outer(x1, x2) ** 2 / (2 * self.length_scale ** 2))

def fit(self, sample_x, sample_y, sample_s):

"""

Save for later use the Cholesky matrix

associated with the inverse that appears

in (5) of post. Also evaluate the weighted

y vector that appears in that equation.

parameters

----------

sample_x : np.array

locations where we have sampled

sample_y : np.array

y values observed at each sample location

sample_s : np.array

array of stds for each sample

"""

self.sample_x = np.array(sample_x)

S = self._exponential_cov(sample_x, sample_x)

d = np.diag(np.array(sample_s) ** 2 + self.noise)

self.lower_cholesky = cholesky(S + d)

self.weighted_sample_y = cho_solve(

(self.lower_cholesky, True), sample_y)

def interval(self, test_x):

"""

Obtain the one-sigam confidence interval

for a set of test points

parameters

----------

test_x : np.array

locations where we want to test

"""

test_x = np.array([test_x]).flatten()

means, stds = [], []

for row in test_x:

S0 = self._exponential_cov(row, self.sample_x)

v = cho_solve((self.lower_cholesky, True), S0)

means.append(np.dot(S0, self.weighted_sample_y))

stds.append(np.sqrt(self.width_scale ** 2 - np.dot(S0, v)))

return means, stds

def sample(self, test_x, samples=1):

"""

Obtain function samples from the posterior

parameters

----------

test_x : np.array

locations where we want to test

samples : int

Number of samples to take

"""

S0 = self._exponential_cov(test_x, self.sample_x)

# construct covariance matrix of sampled points.

m = []

for row in S0:

m.append(cho_solve((self.lower_cholesky, True), row))

cov = self._exponential_cov(test_x, test_x) - np.dot(S0, np.array(m).T)

mean = np.dot(S0, self.weighted_sample_y)

return np.random.multivariate_normal(mean, cov, samples)

# Insert data here.

sample_x = [0.5, 2, -2]

sample_y = [2, 1.5, -0.5]

sample_s = [0.01, 0.05, 0.125]

WIDTH_SCALE = 1

LENGTH_SCALE = 1

SAMPLES = 8

model = SimpleGP(WIDTH_SCALE, LENGTH_SCALE)

model.fit(sample_x, sample_y, sample_s)

test_x = np.arange(-5, 5, .1)

means, stds = model.interval(test_x)

samples = model.sample(test_x, SAMPLES)

# plots here.

fig, ax = plt.subplots(figsize=(12, 5))

ax.set_ylim([-3, 3])

ax.axis('off')

colors = cycle(['g', 'b', 'k', 'y', 'c', 'r', 'm'])

plt.errorbar(test_x, means, yerr=stds,

ecolor='g', linewidth=1.5,

elinewidth=0.5, alpha=0.75)

for sample, c in zip(samples, colors):

plt.plot(test_x, sample, c, linewidth=2. * np.random.rand(), alpha=0.5)

plt.show()

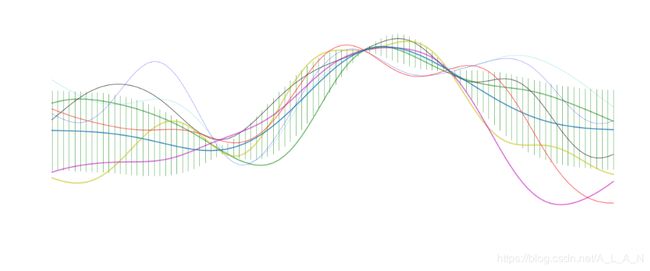

结果:

C++库:

github地址:https://github.com/resibots/limbo

Documentation:http://www.resibots.eu/limbo

参考:

python.sklearn.gaussian_process高斯过程回归的调用

https://github.com/EFavDB/gaussian_processes/blob/master/GP_example.ipynb