网站日志流量系统----【结果输出模块】

模块开发----结果导出

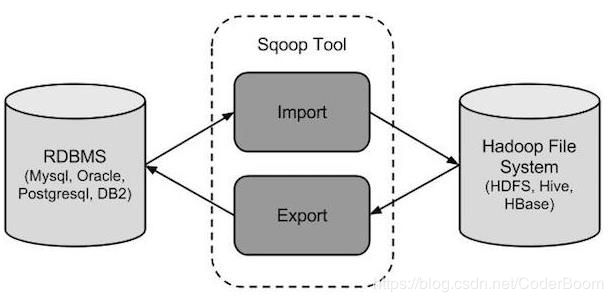

1. Apache Sqoop

Sqoop 是Hadoop 和关系数据库服务器之间传送数据的一种工具 .

Sqoop:“SQL 到 Hadoop 和 Hadoop 到 SQL

导入导出是站在Apache的角度来说的

Sqoop工作机制是将导入或导出命令翻译成mapreduce程序来实现。

在翻译出的 mapreduce 中主要是对 inputformat 和 outputformat 进行定制。

sqoop 安装

安装 sqoop 的前提是已经具备java 和 hadoop 的环境

最新稳定版: 1.4.6

上传安装包到 /export/servers

解压并改名 , 删除安装包即可

配置文件修改:mv sqoop-env-template.sh sqoop-env.sh

vi sqoop-env.sh

which hadoop查询hadoop安装路径

由于我们hadoop的HADOOP_COMMON_HOME和HADOOP_MAPRED_HOME就是同一个路径 , 因此路径相同

# Licensed to the Apache Software Foundation (ASF) under one or more

# contributor license agreements. See the NOTICE file distributed with

# this work for additional information regarding copyright ownership.

# The ASF licenses this file to You under the Apache License, Version 2.0

# (the "License"); you may not use this file except in compliance with

# the License. You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# included in all the hadoop scripts with source command

# should not be executable directly

# also should not be passed any arguments, since we need original $*

# Set Hadoop-specific environment variables here.

#Set path to where bin/hadoop is available

export HADOOP_COMMON_HOME=/export/servers/hadoop-2.7.4

#Set path to where hadoop-*-core.jar is available

export HADOOP_MAPRED_HOME=/export/servers/hadoop-2.7.4

#set the path to where bin/hbase is available

#export HBASE_HOME=

#Set the path to where bin/hive is available

export HIVE_HOME=/export/servers/hive

#Set the path for where zookeper config dir is

#export ZOOCFGDIR=

由于sqoop中没有mysql 的 jdbc 驱动包 , 加入到其中 , 导入mysql的jdbc的驱动包mysql-connector-java-5.1.28.jar到$SQOOP_HOME/lib/下

验证启动 :

bin/sqoop list-databases --connect jdbc:mysql://localhost:3306/ --username root --password 123

注 : sqoop命令要求在一行写完 , 如果一行写不完 , 在换行出添加\即可

本命令会列出所有 mysql 的数据库 , 到这里,整个 Sqoop 安装工作完成。

2. Sqoop导入

将数据导入 HDFS的语法$ sqoop import (generic-args) (import-args)

Sqoop 测试表数据

在 mysql 中创建数据库 userdb,然后执行参考资料中的 sql 脚本:创建三张表: emp emp_add emp_conn。

所有操作都是在sqoop的文件夹下

- 导入mysql表数据到HDFS

bin/sqoop import \

--connect jdbc:mysql://node-1:3306/userdb \

--username root \

--password 123 \

--target-dir /sqoopresult \

--table emp --m 1

# --m表示mapreduce的个数

查看

hdfs dfs -cat /sqoopresult/part-m-00000

tips: 导入mysql数据到hdfs,默认分隔符是

逗号

- 导入mysql表数据到hive , 需要两步:

- 复制mysql中表结构到hive中

- 复制表数据到hive表对应的hdfs路径下

导入到hive中,数据默认分隔符是'\001'

将关系型数据的表结构复制到hive中

bin/sqoop create-hive-table \

--connect jdbc:mysql://node-1:3306/userdb \

--table emp_add \

--username root \

--password 123 \

--hive-table test01.emp_add_sp

其中 --table emp_add为mysql中的数据库sqoopdb中的表

--hive-table emp_add_sp 为hive中新建的表名称

从关系数据库导入文件到hive中

bin/sqoop import \

--connect jdbc:mysql://node-1:3306/userdb \

--username root \

--password 123 \

--table emp_add \

--hive-table test01.emp_add_sp \

--hive-import \

--m 1

- 导入表数据子集

bin/sqoop import \

--connect jdbc:mysql://node-1:3306/userdb \

--username root \

--password 123 \

--where "city ='sec-bad'" \

--target-dir /wherequery \

--table emp_add --m 1

将表数据中city为'sec-bad'到hdfs下的'/wherequery' , 分隔符是逗号

bin/sqoop import \

--connect jdbc:mysql://node-1:3306/userdb \

--username root \

--password 123 \

--target-dir /wherequery12 \

--query 'select id,name,deg from emp WHERE id>1203 and $CONDITIONS' \

--split-by id \

--fields-terminated-by '\001' \

--m 1

导入到hdfs上并设置分隔符为'\001'

-

增量导入(对应的是全量) : 上次导出数据之后,本次导入数据之前中间新增的数据

sqoop中增量导入的判断是通过上次导入到某个列的某个值来标识 的,这个值由用户自己维护,一般企业中选择不重复且自增长的主键最多,自增长的时间也可以。

bin/sqoop import \

--connect jdbc:mysql://node-1:3306/sqoopdb \

--username root \

--password 123 \

--table emp --m 1 \

--incremental append \

--check-column id \

--last-value 1205

3. Sqoop 导出

将数据从 HDFS 导出到 RDBMS 数据库导出前,目标表必须存在于目标数据库中。

export 命令语法:$ sqoop export (generic-args) (export-args)

-

导出hdfs、hive数据到mysql

- 手动自己去mysql根据结构化数据类型创建相应的表

- 执行导出数据操作

-

sqoop 导出数据至mysql

/emp_data/

1201,gopal,manager,50000,TP

1202,manisha,preader,50000,TP

1203,kalil,php dev,30000,AC

1204,prasanth,php dev,30000,AC

1205,kranthi,admin,20000,TP

1206,satishp,grpdes,20000,GR

首先需要手动创建mysql中的目标表:

mysql> USE userdb;

mysql> CREATE TABLE employee (

id INT NOT NULL PRIMARY KEY,

name VARCHAR(20),

deg VARCHAR(20),

salary INT,

dept VARCHAR(10));

然后执行导出命令

bin/sqoop export \

--connect jdbc:mysql://node-1:3306/userdb \

--username root \

--password 123 \

--table employee \

--export-dir /emp_data/

1)列出mysql数据库中的所有数据库命令

bin/sqoop list-databases \

--connect jdbc:mysql://node-21:3306 \

--username root \

--password 123

2)连接mysql并列出数据库中的表命令

bin/sqoop list-tables \

--connect jdbc:mysql://node-1:3306/userdb \

--username root \

--password 123

- 项目中实际数据导出操作

--建表

create table dw_user_dstc_ip_h(

remote_addr varchar(30),

pvs bigint,

hour varchar(10)

)

bin/sqoop export \

--connect jdbc:mysql://node-1:3306/userdb \

--username root \

--password 123 \

--table dw_user_dstc_ip_h \

--export-dir /user/hive/warehouse/weblog.db/dw_user_dstc_ip_h/

报错 针对直接位于hdfs上的数据 sqoop默认分隔符是`,` 需要指定分隔符

Can't parse input data: '86.143.113.226150918 09'

正确如下:

bin/sqoop export \

--connect jdbc:mysql://node-1:3306/userdb \

--username root \

--password 123 \

--table dw_user_dstc_ip_h \

--export-dir /user/hive/warehouse/weblog.db/dw_user_dstc_ip_h/ \

--fields-terminated-by '\001'

tips : sqoop实际生产环境中 关于mysql地址 尽量不要使用;localhost 可用ip或者域名代替

模块开发----工作流调度

整个项目的数据按照处理过程,从数据采集到数据分析,再到结果数据的导出,一系列的任务可以分割成若干个 azkaban 的 job 单元,然后由工作流调度器调度执行。

调度脚本的编写难点在于 shell 脚本。但是一般都是有固定编写模式。大家可以参考资料中的脚本进行编写。大体框架如下:

#!/bin/bash#set java env#set hadoop env- #设置一些主类、目录等常量

- #获取时间信息

- #shell 主程序、结合流程控制(if…else)去分别执行 shell 命令。

- azkaban调度hive

可以采用执行sql文件脚本

bin/hive -f etl_every_hour.sql

bin/hive -e 'select....'

- 首先将编写好的mr程序依次打包

- 编写job脚本

# weblog_preprocess.job

type=command

command=/export/servers/hadoop-2.7.4/bin/hadoop jar weblog_preprocess.jar /test/input /test/output

----------------------------------

# weblog_click_pageviews.job

type=command

dependencies=weblog_preprocess

command=/export/servers/hadoop-2.7.4/bin/hadoop jar weblog_click_pageviews.jar

-----------------------------------

# weblog_click_visits.job

type=command

dependencies=weblog_click_pageviews

command=/export/servers/hadoop-2.7.4/bin/hadoop jar weblog_click_visits.jar

- 将jar包和job脚本打包成zip

上传至azkaban上即可

开启azkaban

首先进入webserver下执行bin/azkaban-web-start.sh

再进入到executor下执行bin/azkaban-executor-start.sh

- 导入数据到input下

- 执行azkaban即可

- 问题:azkaban上传大文件出错

Installation Failed. Error chunking

Caused by: java.sql.SQLException: Packet for query is too large (1625872 > 1048576). You can change this value on the server by sett

ing the max_allowed_packet' variable.

解决办法:https://blog.csdn.net/sunny05296/article/details/80446944

模块开发----数据可视化

1. Echarts 介绍

ECharts 是一款由百度前端技术部开发的,基于 Javascript 的数据可视化图表库,提供直观,生动,可交互,可个性化定制的数据可视化图表 .

2. Echarts使用

- 页面引入echarts的js库

- 创建一个dom容器(div)

- 根据容器初始化一个echarts实例

var myChart = echarts.init(document.getElementById('main'));

- 根据官网查找实例 确定自己需要图标的option选项

http://echarts.baidu.com/

- 把option设置到实例中

myChart.setOption(option);

实际使用中,最大工作量在于把数据按照前端需要的格式返回。

2.1 Echarts----简单入门

2.1.1 下载Echarts

开发环境建议下载源代码版本,包含了常见的错误提示和警告。

2.1.2 页面引入Echarts

Echarts3 只需要像普通的JavaScript库一样用script标签引入即可 .

<html>

<head>

<meta charset="utf-8">

<script src="echarts.min.js">script>

head>

html>

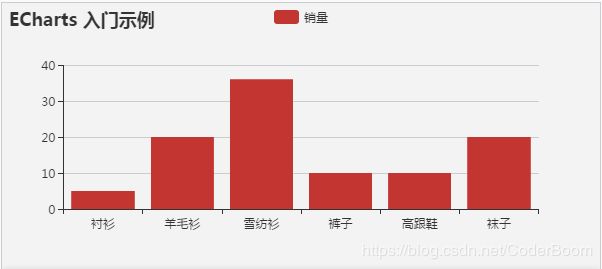

2.1.3 绘制一个简单的图表

在绘图前我们需要为 ECharts 准备一个具备高宽的 DOM 容器:

<body>

<div id="main" style="width: 600px;height:400px;">div>

body>

然后就可以通过 echarts.init 方法初始化一个 echarts 实例并通过 setOption 方法生成一个简单的柱状图,下面是完整代码。

<html>

<head>

<meta charset="utf-8">

<title>EChartstitle>

<script src="echarts.min.js">script>

head>

<body>

<div id="main" style="width: 600px;height:400px;">div>

<script type="text/javascript">

// 基于准备好的 dom,初始化 echarts 实例

var myChart = echarts.init(document.getElementById('main'));

// 指定图表的配置项和数据

var option = {

title: {

text: 'ECharts 入门示例'

},

tooltip: {},

legend: {

data:['销量']

},

xAxis: {

data: ["衬衫","羊毛衫","雪纺衫","裤子","高跟鞋","袜子"]

},

yAxis: {},

series: [{

name: '销量',

type: 'bar',

data: [5, 20, 36, 10, 10, 20]

}]

};

// 使用刚指定的配置项和数据显示图表。

myChart.setOption(option);

script>

body>

html>

可以看到如下图片

3. 数据可视化的展现

几个开发细节注意点:

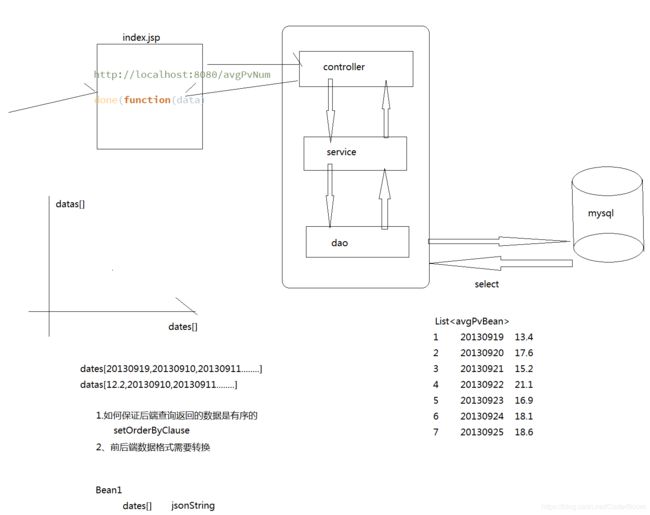

4.1 Mybatis example 排序问题

example.setOrderByClause("dateStr ASC");

查询结果便可以根据 dataStr 字段正序排列(从小到大)

4.2 Echarts 前端数据格式问题

注意,当异步加载数据的时候,前端一般需要的是数据格式是数组。一定要对应上。在这里我们可以使用 Java Bean 封装数据,然后转换成 json 扔到前端,对应上相应的字段即可。

ObjectMapper om = new ObjectMapper();

beanJson = om.writeValueAsString(bean);

4.3 Controller 返回的 json

@RequestMapping(value="/xxxx",produces="application/json;charset=UTF-8")

@ResponseBody

数据可视化展示详细步骤

- 首先创建一个web工程用于数据可视化展示

- 导入pom文件

<dependencies>

<dependency>

<groupId>org.springframeworkgroupId>

<artifactId>spring-contextartifactId>

<version>4.2.4.RELEASEversion>

dependency>

<dependency>

<groupId>org.springframeworkgroupId>

<artifactId>spring-beansartifactId>

<version>4.2.4.RELEASEversion>

dependency>

<dependency>

<groupId>org.springframeworkgroupId>

<artifactId>spring-webmvcartifactId>

<version>4.2.4.RELEASEversion>

dependency>

<dependency>

<groupId>org.springframeworkgroupId>

<artifactId>spring-jdbcartifactId>

<version>4.2.4.RELEASEversion>

dependency>

<dependency>

<groupId>org.springframeworkgroupId>

<artifactId>spring-aspectsartifactId>

<version>4.2.4.RELEASEversion>

dependency>

<dependency>

<groupId>org.springframeworkgroupId>

<artifactId>spring-jmsartifactId>

<version>4.2.4.RELEASEversion>

dependency>

<dependency>

<groupId>org.springframeworkgroupId>

<artifactId>spring-context-supportartifactId>

<version>4.2.4.RELEASEversion>

dependency>

<dependency>

<groupId>org.mybatisgroupId>

<artifactId>mybatisartifactId>

<version>3.2.8version>

dependency>

<dependency>

<groupId>org.mybatisgroupId>

<artifactId>mybatis-springartifactId>

<version>1.2.2version>

dependency>

<dependency>

<groupId>com.github.miemiedevgroupId>

<artifactId>mybatis-paginatorartifactId>

<version>1.2.15version>

dependency>

<dependency>

<groupId>mysqlgroupId>

<artifactId>mysql-connector-javaartifactId>

<version>5.1.32version>

dependency>

<dependency>

<groupId>com.alibabagroupId>

<artifactId>druidartifactId>

<version>1.0.9version>

dependency>

<dependency>

<groupId>jstlgroupId>

<artifactId>jstlartifactId>

<version>1.2version>

dependency>

<dependency>

<groupId>javax.servletgroupId>

<artifactId>servlet-apiartifactId>

<version>2.5version>

<scope>providedscope>

dependency>

<dependency>

<groupId>javax.servletgroupId>

<artifactId>jsp-apiartifactId>

<version>2.0version>

<scope>providedscope>

dependency>

<dependency>

<groupId>junitgroupId>

<artifactId>junitartifactId>

<version>4.12version>

dependency>

<dependency>

<groupId>com.fasterxml.jackson.coregroupId>

<artifactId>jackson-databindartifactId>

<version>2.4.2version>

dependency>

dependencies>

<build>

<finalName>${project.artifactId}finalName>

<resources>

<resource>

<directory>src/main/javadirectory>

<includes>

<include>**/*.propertiesinclude>

<include>**/*.xmlinclude>

includes>

<filtering>falsefiltering>

resource>

<resource>

<directory>src/main/resourcesdirectory>

<includes>

<include>**/*.propertiesinclude>

<include>**/*.xmlinclude>

includes>

<filtering>falsefiltering>

resource>

resources>

<plugins>

<plugin>

<groupId>org.apache.maven.pluginsgroupId>

<artifactId>maven-compiler-pluginartifactId>

<version>3.2version>

<configuration>

<source>1.7source>

<target>1.7target>

<encoding>UTF-8encoding>

configuration>

plugin>

<plugin>

<groupId>org.apache.tomcat.mavengroupId>

<artifactId>tomcat7-maven-pluginartifactId>

<version>2.2version>

<configuration>

<path>/path>

<port>8080port>

configuration>

plugin>

plugins>

build>

-

spring的配置文件等

- applicationContext-dao.xml

<beans xmlns="http://www.springframework.org/schema/beans" xmlns:context="http://www.springframework.org/schema/context" xmlns:p="http://www.springframework.org/schema/p" xmlns:aop="http://www.springframework.org/schema/aop" xmlns:tx="http://www.springframework.org/schema/tx" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-4.2.xsd http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-4.2.xsd http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop-4.2.xsd http://www.springframework.org/schema/tx http://www.springframework.org/schema/tx/spring-tx-4.2.xsd http://www.springframework.org/schema/util http://www.springframework.org/schema/util/spring-util-4.2.xsd"> <context:property-placeholder location="classpath:properties/db.properties" /> <bean id="dataSource" class="com.alibaba.druid.pool.DruidDataSource" destroy-method="close"> <property name="url" value="${jdbc.url}" /> <property name="username" value="${jdbc.username}" /> <property name="password" value="${jdbc.password}" /> <property name="driverClassName" value="${jdbc.driver}" /> <property name="maxActive" value="10" /> <property name="minIdle" value="5" /> bean> <bean id="sqlSessionFactory" class="org.mybatis.spring.SqlSessionFactoryBean"> <property name="dataSource" ref="dataSource" /> <property name="configLocation" value="classpath:mybatis/SqlMapConfig.xml" /> bean> <bean class="org.mybatis.spring.mapper.MapperScannerConfigurer"> <property name="basePackage" value="com.itck.mapper" /> bean> beans>- applicationContext-service.xml

<beans xmlns="http://www.springframework.org/schema/beans" xmlns:context="http://www.springframework.org/schema/context" xmlns:p="http://www.springframework.org/schema/p" xmlns:aop="http://www.springframework.org/schema/aop" xmlns:tx="http://www.springframework.org/schema/tx" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-4.2.xsd http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-4.2.xsd http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop-4.2.xsd http://www.springframework.org/schema/tx http://www.springframework.org/schema/tx/spring-tx-4.2.xsd http://www.springframework.org/schema/util http://www.springframework.org/schema/util/spring-util-4.2.xsd"> <context:component-scan base-package="com.itck.service"/> beans>- applicationContext-trans.xml

<beans xmlns="http://www.springframework.org/schema/beans" xmlns:context="http://www.springframework.org/schema/context" xmlns:p="http://www.springframework.org/schema/p" xmlns:aop="http://www.springframework.org/schema/aop" xmlns:tx="http://www.springframework.org/schema/tx" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-4.2.xsd http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-4.2.xsd http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop-4.2.xsd http://www.springframework.org/schema/tx http://www.springframework.org/schema/tx/spring-tx-4.2.xsd http://www.springframework.org/schema/util http://www.springframework.org/schema/util/spring-util-4.2.xsd"> <bean id="transactionManager" class="org.springframework.jdbc.datasource.DataSourceTransactionManager"> <property name="dataSource" ref="dataSource" /> bean> <tx:advice id="txAdvice" transaction-manager="transactionManager"> <tx:attributes> <tx:method name="save*" propagation="REQUIRED" /> <tx:method name="insert*" propagation="REQUIRED" /> <tx:method name="add*" propagation="REQUIRED" /> <tx:method name="create*" propagation="REQUIRED" /> <tx:method name="delete*" propagation="REQUIRED" /> <tx:method name="update*" propagation="REQUIRED" /> <tx:method name="find*" propagation="SUPPORTS" read-only="true" /> <tx:method name="select*" propagation="SUPPORTS" read-only="true" /> <tx:method name="get*" propagation="SUPPORTS" read-only="true" /> tx:attributes> tx:advice> <aop:config> <aop:advisor advice-ref="txAdvice" pointcut="execution(* com.itck.service..*.*(..))" /> aop:config> beans>- springmvc

<beans xmlns="http://www.springframework.org/schema/beans" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:p="http://www.springframework.org/schema/p" xmlns:context="http://www.springframework.org/schema/context" xmlns:mvc="http://www.springframework.org/schema/mvc" xsi:schemaLocation="http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-4.2.xsd http://www.springframework.org/schema/mvc http://www.springframework.org/schema/mvc/spring-mvc-4.2.xsd http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-4.2.xsd"> <context:component-scan base-package="com.itck.controller" /> <mvc:annotation-driven /> <bean class="org.springframework.web.servlet.view.InternalResourceViewResolver"> <property name="prefix" value="/WEB-INF/jsp/" /> <property name="suffix" value=".jsp" /> bean> <mvc:resources location="/css/" mapping="/css/**"/> <mvc:resources location="/js/" mapping="/js/**"/> <mvc:resources location="/echarts/" mapping="/echarts/**"/> <mvc:resources location="/assets/" mapping="/assets/**"/> <mvc:resources location="/img/" mapping="/img/**"/> beans> -

导入properties文件

- db.properties

jdbc.driver=com.mysql.jdbc.Driver jdbc.url=jdbc:mysql://node-1:3306/sqoopdb?characterEncoding=utf-8 jdbc.username=root jdbc.password=123 -

mybatis配置文件

- SqlMapConfig.xml

<configuration> configuration> -

web.xml

<web-app xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xmlns="http://java.sun.com/xml/ns/javaee"

xsi:schemaLocation="http://java.sun.com/xml/ns/javaee http://java.sun.com/xml/ns/javaee/web-app_2_5.xsd"

version="2.5">

<display-name>report_datadisplay-name>

<welcome-file-list>

<welcome-file>index.htmlwelcome-file>

welcome-file-list>

<context-param>

<param-name>contextConfigLocationparam-name>

<param-value>classpath:spring/applicationContext-*.xmlparam-value>

context-param>

<listener>

<listener-class>org.springframework.web.context.ContextLoaderListenerlistener-class>

listener>

<filter>

<filter-name>CharacterEncodingFilterfilter-name>

<filter-class>org.springframework.web.filter.CharacterEncodingFilterfilter-class>

<init-param>

<param-name>encodingparam-name>

<param-value>utf-8param-value>

init-param>

filter>

<filter-mapping>

<filter-name>CharacterEncodingFilterfilter-name>

<url-pattern>/*url-pattern>

filter-mapping>

<servlet>

<servlet-name>data-reportservlet-name>

<servlet-class>org.springframework.web.servlet.DispatcherServletservlet-class>

<init-param>

<param-name>contextConfigLocationparam-name>

<param-value>classpath:spring/springmvc.xmlparam-value>

init-param>

<load-on-startup>1load-on-startup>

servlet>

<servlet-mapping>

<servlet-name>data-reportservlet-name>

<url-pattern>/url-pattern>

servlet-mapping>

<error-page>

<error-code>404error-code>

<location>/WEB-INF/jsp/404.jsplocation>

error-page>

web-app>

根据配置文件建立相应的包 , 然后编写Controller层 , 查看首页

- IndexController.java

@Controller

public class IndexController {

@RequestMapping("/index")

public String showIndex(){

return "index";

}

}

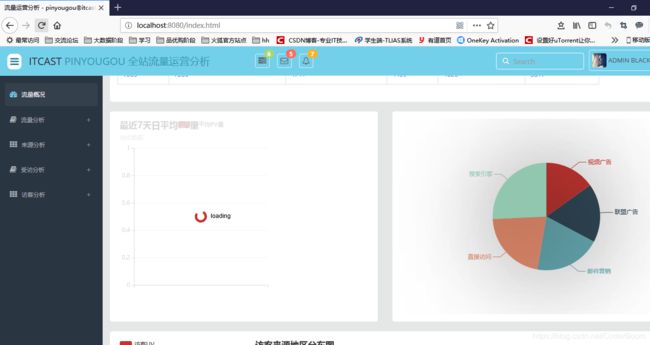

即可看到相应的页面 , 我们发现数据没有导入到页面

因此此时我们考虑如何将页面中需要的数据导入进来

动态加载数据思路

- 通过代码生成器生成所需要的代码导入到项目中

import java.io.File;

import java.io.IOException;

import java.util.ArrayList;

import java.util.List;

import org.mybatis.generator.api.MyBatisGenerator;

import org.mybatis.generator.config.Configuration;

import org.mybatis.generator.config.xml.ConfigurationParser;

import org.mybatis.generator.exception.XMLParserException;

import org.mybatis.generator.internal.DefaultShellCallback;

public class GeneratorSqlmap {

public void generator() throws Exception{

List<String> warnings = new ArrayList<String>();

boolean overwrite = true;

//指定 逆向工程配置文件

File configFile = new File("generatorConfig.xml");

ConfigurationParser cp = new ConfigurationParser(warnings);

Configuration config = cp.parseConfiguration(configFile);

DefaultShellCallback callback = new DefaultShellCallback(overwrite);

MyBatisGenerator myBatisGenerator = new MyBatisGenerator(config,

callback, warnings);

myBatisGenerator.generate(null);

}

public static void main(String[] args) throws Exception {

try {

GeneratorSqlmap generatorSqlmap = new GeneratorSqlmap();

generatorSqlmap.generator();

} catch (Exception e) {

e.printStackTrace();

}

}

}

<generatorConfiguration>

<context id="testTables" targetRuntime="MyBatis3">

<commentGenerator>

<property name="suppressAllComments" value="true" />

commentGenerator>

<jdbcConnection driverClass="com.mysql.jdbc.Driver"

connectionURL="jdbc:mysql://node-21:3306/sqoopdb" userId="root"

password="hadoop">

jdbcConnection>

<javaTypeResolver>

<property name="forceBigDecimals" value="false" />

javaTypeResolver>

<javaModelGenerator targetPackage="cn.itcast.pojo"

targetProject=".\src">

<property name="enableSubPackages" value="false" />

<property name="trimStrings" value="true" />

javaModelGenerator>

<sqlMapGenerator targetPackage="cn.itcast.mapper"

targetProject=".\src">

<property name="enableSubPackages" value="false" />

sqlMapGenerator>

<javaClientGenerator type="XMLMAPPER"

targetPackage="cn.itcast.mapper"

targetProject=".\src">

<property name="enableSubPackages" value="false" />

javaClientGenerator>

<table schema="" tableName="t_t1_fromHive">table>

context>

generatorConfiguration>

- 将pojo包中的复制到项目中 , mapper文件中的文件导入进来

- 编写service层的接口

public interface AvgService {

public String getAvgNumByDts(String startDt,String endDt);

}

- 编写接口实现类

import java.util.Iterator;

import java.util.List;

import org.springframework.beans.factory.annotation.Autowired;

import org.springframework.stereotype.Service;

import com.fasterxml.jackson.core.JsonProcessingException;

import com.fasterxml.jackson.databind.ObjectMapper;

import cn.itcast.mapper.TAvgpvNumMapper;

import cn.itcast.pojo.AvgToPageBean;

import cn.itcast.pojo.TAvgpvNum;

import cn.itcast.pojo.TAvgpvNumExample;

import cn.itcast.pojo.TAvgpvNumExample.Criteria;

import cn.itcast.service.AvgPvService;

@Service

public class AvgPvServiceImpl implements AvgPvService{

@Autowired

private TAvgpvNumMapper mapper;

@Override

public String getAvgPvNumByDates(String startDate, String endDate){

TAvgpvNumExample example = new TAvgpvNumExample();

Criteria criteria = example.createCriteria();

criteria.andDatestrBetween(startDate, endDate);

example.setOrderByClause("`dateStr` ASC");

List<TAvgpvNum> lists = mapper.selectByExample(example);

// 数组大小

int size = 7;

String[] dates = new String[size];

double[] datas = new double[size];

int i = 0;

for (TAvgpvNum tAvgpvNum : lists) {

dates[i]=tAvgpvNum.getDatestr();

datas[i]=tAvgpvNum.getAvgpvnum().doubleValue();

i++;

}

AvgToPageBean bean = new AvgToPageBean();

bean.setDates(dates);

bean.setData(datas);

ObjectMapper om = new ObjectMapper();

String beanJson= null;

try {

beanJson = om.writeValueAsString(bean);

} catch (JsonProcessingException e) {

e.printStackTrace();

}

return beanJson;

}

}

- 编写controller层

@Controller

public class IndexController {

@Autowired

private AvgPvService pvSeivice;

@RequestMapping("/")

public String showIndex2() {

return "index";

}

@RequestMapping("/index")

public String showIndex() {

return "index";

}

@RequestMapping(value="/avgPvNum",produces="application/json;charset=UTF-8")

@ResponseBody

public String getBarChart1() throws Exception{

System.out.println("获取平均pv数据...");

String s = pvSeivice.getAvgPvNumByDates("20130919", "20130925");

return s;

}

}

再次查看即可查看成功