I. What DRBD Is

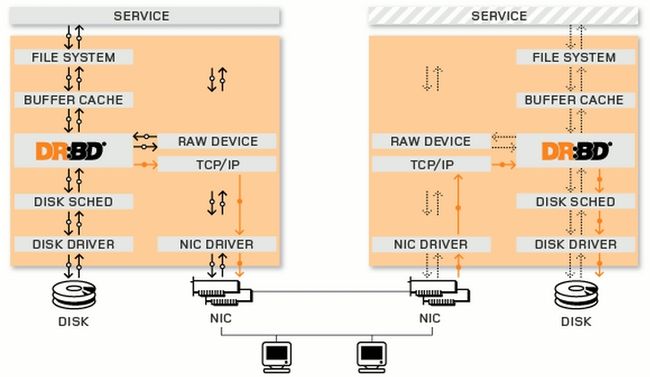

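DRBD stands for Distributed Replicated Block Device. It consists of a kernel module plus supporting userspace scripts and is used to build high-availability clusters. It works by mirroring an entire block device over the network, so you can keep a real-time mirror of a local block device on a remote machine. Combined with a heartbeat link, it can be thought of as a kind of network RAID. Because DRBD runs in kernel space rather than user space and replicates raw block data directly, it is fast.

A DRBD system consists of two or more nodes. As in an HA cluster, the nodes are divided into a primary and a secondary (standby). On the node that holds the primary device, applications and the operating system can run and access the DRBD device.

Data written on the primary node goes through the DRBD device to the primary's disk. At the same time, it is automatically sent to the corresponding DRBD device on the secondary node and finally written to the secondary's disk. On the secondary node, DRBD does nothing more than write the data arriving at the DRBD device to the local disk.

On its way through the buffer cache, data first passes through the DRBD layer. DRBD makes a copy, wraps it in TCP/IP, and sends it to the other node. The other node receives the copy over TCP/IP and applies it to the secondary's DRBD device.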

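How DRBD works inside the kernel:

DRBD is organized into resources. Each resource has:

resource name: any ASCII characters except whitespace.

drbd device: the access path of the DRBD device, i.e. the device file /dev/drbd#.

disk: the block device that each node contributes to this DRBD device.

network attributes: the network configuration the nodes use to mirror the disk across hosts.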

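DRBD replication modes:

| Protocol | A write is considered complete when |
| --- | --- |
| Protocol A (asynchronous) | the data has been written to the local disk and handed to the local TCP/IP stack. |
| Protocol B (semi-synchronous) | the peer has confirmed receipt, i.e. the data has reached the peer's TCP/IP stack (but not necessarily its disk). |
| Protocol C (synchronous) | the peer has finished writing the data to its disk and returned a confirmation. |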
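The three protocols differ only in which acknowledgement the primary waits for before it reports a write as complete. The following is a minimal Python sketch of that rule, not DRBD code; `WriteState` and `write_complete` are illustrative names introduced here.

```python
from dataclasses import dataclass


@dataclass
class WriteState:
    """Hypothetical progress flags for one replicated write."""
    local_disk_done: bool      # data written to the local backing disk
    in_local_tcp_buffer: bool  # replication packet handed to the local TCP/IP stack
    peer_received: bool        # peer confirmed that it received the packet
    peer_disk_done: bool       # peer confirmed that it wrote the data to disk


def write_complete(protocol: str, state: WriteState) -> bool:
    """Return True if the write counts as complete under the given protocol."""
    if protocol == "A":  # asynchronous
        return state.local_disk_done and state.in_local_tcp_buffer
    if protocol == "B":  # semi-synchronous
        return state.local_disk_done and state.peer_received
    if protocol == "C":  # synchronous
        return state.local_disk_done and state.peer_disk_done
    raise ValueError(f"unknown protocol: {protocol!r}")
```

With a write that is on the local disk and in the local send buffer but not yet acknowledged by the peer, only protocol A would report completion. That is why protocol C is the usual choice when no acknowledged write may be lost on failover.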

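Automatic split-brain recovery policies:

Discard the modifications made on the younger primary:

In this mode, when the network connection is re-established and split brain is detected, DRBD discards the modifications made on the node that switched to the primary role last.

Discard the modifications made on the older primary:

In this mode, DRBD discards the modifications made on the node that switched to the primary role first.

Discard the modifications on the primary with fewer changes:

In this mode, DRBD checks the data on both nodes and discards the modifications on the node that changed less.

Graceful recovery when one node has no changes:

In this mode, if one of the hosts made no modifications at all during the split brain, DRBD simply recovers and declares the split brain resolved.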
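To make the four policies concrete, here is a small Python sketch that picks the node whose changes would be thrown away. The policy strings mirror values of DRBD's `after-sb-0pri` option; the `Node` fields and the `pick_victim` function are hypothetical and exist only for illustration, since DRBD makes this decision inside the kernel module.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    became_primary_at: float  # hypothetical timestamp of the last promotion
    changed_blocks: int       # hypothetical count of blocks modified during split brain


def pick_victim(policy: str, a: Node, b: Node) -> Optional[Node]:
    """Return the node whose modifications are discarded, or None if
    the policy cannot resolve the split brain automatically."""
    if policy == "discard-younger-primary":
        return max(a, b, key=lambda n: n.became_primary_at)
    if policy == "discard-older-primary":
        return min(a, b, key=lambda n: n.became_primary_at)
    if policy == "discard-least-changes":
        return min(a, b, key=lambda n: n.changed_blocks)
    if policy == "discard-zero-changes":
        # Only resolvable if at least one side made no changes at all.
        for node in (a, b):
            if node.changed_blocks == 0:
                return node
        return None
    raise ValueError(f"unknown policy: {policy!r}")
```

Every policy except the last one always throws data away, so it is worth choosing it deliberately rather than by default.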

With the basic concepts covered, we can install and configure DRBD.

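II. Installation

DRBD has been part of the mainline Linux kernel since version 2.6.33. On earlier kernels there are two ways to install it:

1. Patch the kernel and compile the module

2. Install the rpm packages (they must match the running kernel exactly)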
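Which route applies depends on the kernel version reported by `uname -r`. As a rough sketch, the check can be written as below. The function name `kernel_has_mainline_drbd` is made up for this example, and it only compares the upstream version number, so it says nothing about whether a particular distribution kernel actually ships the module.

```python
import re


def kernel_has_mainline_drbd(release: str) -> bool:
    """Return True if the kernel release string is 2.6.33 or newer."""
    match = re.match(r"(\d+)\.(\d+)(?:\.(\d+))?", release)
    if match is None:
        raise ValueError(f"cannot parse kernel release: {release!r}")
    # A missing patch level (e.g. "4.4") is treated as 0.
    version = tuple(int(part or 0) for part in match.groups())
    return version >= (2, 6, 33)
```

For the test machines used here (`2.6.32-431.el6.x86_64`) the check returns `False`, which is why the separate `drbd-kmdl` package is installed below.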

Preparation:

# Download the kernel module rpm that matches the running kernel, plus the userspace tools package

[root@node1 drbd]# ls

drbd-8.4.3-33.el6.x86_64.rpm drbd-kmdl-2.6.32-431.el6-8.4.3-33.el6.x86_64.rpm

[root@node1 drbd]# uname -r

2.6.32-431.el6.x86_64

# The two test nodes

node1.soul.com 192.168.0.111

node2.soul.com 192.168.0.112

# Make sure the clocks are synchronized and the two nodes trust each other over SSH

[root@node1 ~]# ssh node2 'date';date

Wed Apr 23 18:13:30 CST 2014

Wed Apr 23 18:13:30 CST 2014

[root@node1 ~]#


Installation:

[root@node1 drbd]# rpm -ivh drbd-8.4.3-33.el6.x86_64.rpm drbd-kmdl-2.6.32-431.el6-8.4.3-33.el6.x86_64.rpm

warning: drbd-8.4.3-33.el6.x86_64.rpm: Header V4 DSA/SHA1 Signature, key ID 66534c2b: NOKEY

Preparing... ########################################### [100%]

1:drbd-kmdl-2.6.32-431.el########################################### [ 50%]

2:drbd ########################################### [100%]

[root@node1 drbd]# scp *.rpm node2:/root/drbd/

drbd-8.4.3-33.el6.x86_64.rpm 100% 283KB 283.3KB/s 00:00

drbd-kmdl-2.6.32-431.el6-8.4.3-33.el6.x86_64.rpm 100% 145KB 145.2KB/s 00:00

[root@node1 drbd]# ssh node2 "rpm -ivh /root/drbd/*.rpm"

warning: /root/drbd/drbd-8.4.3-33.el6.x86_64.rpm: Header V4 DSA/SHA1 Signature, key ID 66534c2b: NOKEY

Preparing... ##################################################

drbd-kmdl-2.6.32-431.el6 ##################################################

drbd ##################################################

[root@node1 drbd]#

# Both nodes get the same packages

Review the configuration file

[root@node1 ~]# cd /etc/drbd.d/

[root@node1 drbd.d]# ls

global_common.conf

[root@node1 drbd.d]#

[root@node1 drbd.d]# vim global_common.conf

global {

usage-count no; # when enabled on a node with Internet access, DRBD reports usage statistics

# minor-count dialog-refresh disable-ip-verification

}

common {

handlers { # handlers

# These are EXAMPLE handlers only.

# They may have severe implications,

# like hard resetting the node under certain circumstances.

# Be careful when chosing your poison.

# Enable (uncomment) the following three handlers:

pri-on-incon-degr "/usr/lib/drbd/notify-pri-on-incon-degr.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

pri-lost-after-sb "/usr/lib/drbd/notify-pri-lost-after-sb.sh; /usr/lib/drbd/notify-emergency-reboot.sh; echo b > /proc/sysrq-trigger ; reboot -f";

local-io-error "/usr/lib/drbd/notify-io-error.sh; /usr/lib/drbd/notify-emergency-shutdown.sh; echo o > /proc/sysrq-trigger ; halt -f";

# fence-peer "/usr/lib/drbd/crm-fence-peer.sh";

# split-brain "/usr/lib/drbd/notify-split-brain.sh root";

# out-of-sync "/usr/lib/drbd/notify-out-of-sync.sh root";

# after-resync-target /usr/lib/drbd/unsnapshot-resync-target-lvm.sh;

}

startup { # startup options, such as how long to wait for the peer

# wfc-timeout degr-wfc-timeout outdated-wfc-timeout wait-after-sb

}

options {

# cpu-mask on-no-data-accessible

}

disk { # options for the backing disk

# size max-bio-bvecs on-io-error fencing disk-barrier disk-flushes

# disk-drain md-flushes resync-rate resync-after al-extents

# c-plan-ahead c-delay-target c-fill-target c-max-rate

# c-min-rate disk-timeout

on-io-error detach; # on an I/O error, detach the backing disk

}

net {

# protocol timeout max-epoch-size max-buffers unplug-watermark

# connect-int ping-int sndbuf-size rcvbuf-size ko-count

# allow-two-primaries cram-hmac-alg shared-secret after-sb-0pri

# after-sb-1pri after-sb-2pri always-asbp rr-conflict

# ping-timeout data-integrity-alg tcp-cork on-congestion

# congestion-fill congestion-extents csums-alg verify-alg

# use-rle

cram-hmac-alg "sha1";

shared-secret "node.soul.com"; # a random string is recommended

protocol C; # replication protocol

}

syncer {

rate 1000M; # upper limit for the resynchronization rate

}

}

III. Configuration and Use

Provide a disk partition, or a dedicated disk, on each node. The two must be the same size.

[root@node1 ~]# fdisk -l /dev/sda

Disk /dev/sda: 128.8 GB, 128849018880 bytes

255 heads, 63 sectors/track, 15665 cylinders

Units = cylinders of 16065 * 512 = 8225280 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 512 bytes / 512 bytes

Disk identifier: 0x00076a20

Device Boot Start End Blocks Id System

/dev/sda1 * 1 26 204800 83 Linux

Partition 1 does not end on cylinder boundary.

/dev/sda2 26 7859 62914560 8e Linux LVM

/dev/sda3 7859 8512 5252256 83 Linux

# /dev/sda3 is the partition used as the DRBD backing device

# node2 is set up the same way

Define the resource

[root@node1 ~]# vim /etc/drbd.d/web.res

resource web {

on node1.soul.com {

device /dev/drbd0;

disk /dev/sda3;

address 192.168.0.111:7789;

meta-disk internal;

}

on node2.soul.com {

device /dev/drbd0;

disk /dev/sda3;

address 192.168.0.112:7789;

meta-disk internal;

}

}
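The two `on` sections above differ only in hostname and IP address. As an illustration of that structure, here is a small Python sketch that renders such a resource definition from a few parameters. `render_resource` is a hypothetical helper written for this example and is not part of the DRBD tools; the indentation in its output is cosmetic.

```python
from typing import Dict


def render_resource(name: str, device: str, disk: str, port: int,
                    nodes: Dict[str, str]) -> str:
    """Render a DRBD resource definition.

    `nodes` maps each hostname (as reported by `uname -n`) to its IP address.
    """
    lines = [f"resource {name} {{"]
    for host, ip in nodes.items():
        lines += [
            f"    on {host} {{",
            f"        device {device};",
            f"        disk {disk};",
            f"        address {ip}:{port};",
            "        meta-disk internal;",
            "    }",
        ]
    lines.append("}")
    return "\n".join(lines)


if __name__ == "__main__":
    print(render_resource(
        "web", "/dev/drbd0", "/dev/sda3", 7789,
        {"node1.soul.com": "192.168.0.111", "node2.soul.com": "192.168.0.112"},
    ))
```

Generating the file from one set of parameters is one way to keep both nodes' copies identical, which DRBD requires.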

# Copy the configuration to node2

[root@node1 ~]# scp /etc/drbd.d/web.res node2:/etc/drbd.d/

web.res 100% 247 0.2KB/s 00:00

[root@node1 ~]# scp /etc/drbd.d/global_common.conf node2:/etc/drbd.d/

global_common.conf 100% 1945 1.9KB/s 00:00

[root@node1 ~]#

Initialize the resource and start the service

[root@node1 ~]# drbdadm create-md web

Writing meta data...

initializing activity log

NOT initializing bitmap

New drbd meta data block successfully created.

# Run the same command on node2

[root@node1 ~]# service drbd start

Starting DRBD resources: [

create res: web

prepare disk: web

adjust disk: web

adjust net: web

]

..........

***************************************************************

DRBD's startup script waits for the peer node(s) to appear.

- In case this node was already a degraded cluster before the

reboot the timeout is 0 seconds. [degr-wfc-timeout]

- If the peer was available before the reboot the timeout will

expire after 0 seconds. [wfc-timeout]

(These values are for resource 'web'; 0 sec -> wait forever)

To abort waiting enter 'yes' [ 11]: # waiting for node2; the wait ends once drbd is started on node2

.

[root@node1 ~]#

# Check the status

[root@node1 ~]# drbd-overview

0:web/0 Connected Secondary/Secondary Inconsistent/Inconsistent C r-----

[root@node1 ~]#

Manually promote one of the nodes to primary

[root@node1 ~]# drbdadm primary --force web

[root@node1 ~]# cat /proc/drbd

version: 8.4.3 (api:1/proto:86-101)

GIT-hash: 89a294209144b68adb3ee85a73221f964d3ee515 build by gardner@, 2013-11-29 12:28:00

0: cs:SyncSource ro:Primary/Secondary ds:UpToDate/Inconsistent C r-----

ns:321400 nr:0 dw:0 dr:329376 al:0 bm:19 lo:0 pe:1 ua:8 ap:0 ep:1 wo:f oos:4931544

[>...................] sync'ed: 6.3% (4812/5128)M

finish: 0:02:33 speed: 32,048 (32,048) K/sec

# The output shows that synchronization is in progress

# In Primary/Secondary, the left side is the local node and the right side is the peer
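The status line in `/proc/drbd` packs the connection state (`cs`), the roles (`ro`), and the disk states (`ds`) into one row, each written as local/peer. The Python sketch below splits such a line into its parts and derives a rough time-to-finish from the out-of-sync amount (`oos`, in KiB) and the current speed (K/sec). The function names are invented for this example, and the estimate is simple division, not necessarily the exact formula DRBD uses.

```python
import re

STATUS_RE = re.compile(
    r"cs:(?P<cs>\S+)\s+"
    r"ro:(?P<ro_local>\w+)/(?P<ro_peer>\w+)\s+"
    r"ds:(?P<ds_local>\w+)/(?P<ds_peer>\w+)"
)


def parse_status(line: str) -> dict:
    """Split a /proc/drbd status line into connection state, roles, disk states."""
    match = STATUS_RE.search(line)
    if match is None:
        raise ValueError(f"not a DRBD status line: {line!r}")
    return match.groupdict()


def estimate_finish(oos_kib: int, speed_kib_per_s: int) -> str:
    """Estimate the remaining resync time as H:MM:SS."""
    seconds = oos_kib // speed_kib_per_s
    hours, rest = divmod(seconds, 3600)
    minutes, secs = divmod(rest, 60)
    return f"{hours}:{minutes:02d}:{secs:02d}"
```

Fed with the values from the output above (`oos:4931544` and a speed of 32,048 K/sec), `estimate_finish` gives `0:02:33`, the same figure as the `finish:` field.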

[root@node2 ~]# drbd-overview

0:web/0 Connected Secondary/Primary UpToDate/UpToDate C r-----

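# Output on node2 once the initial synchronization has finished

Manual primary/secondary switchover

# Only one node can be primary at a time, so the current primary must be demoted before the other node can be promoted

# Only the primary can format, mount, and use the device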

node1

[root@node1 ~]# drbdadm secondary web

[root@node1 ~]# drbd-overview

0:web/0 Connected Secondary/Secondary UpToDate/UpToDate C r-----

node2

[root@node2 ~]# drbdadm primary web

[root@node2 ~]# drbd-overview

0:web/0 Connected Primary/Secondary UpToDate/UpToDate C r-----

# Format and mount

[root@node2 ~]# mke2fs -t ext4 /dev/drbd0

This filesystem will be automatically checked every 35 mounts or

180 days, whichever comes first. Use tune2fs -c or -i to override.

[root@node2 ~]#

[root@node2 ~]# mount /dev/drbd0 /mnt/

[root@node2 ~]# ls /mnt/

lost+found

# Mounted successfully

# To make node1 the primary again, first unmount the filesystem and demote node2

IV. Automatic Role Switching with Pacemaker

[root@node1 ~]# drbdadm secondary web

[root@node1 ~]# drbd-overview

0:web/0 Connected Secondary/Secondary UpToDate/UpToDate C r-----

[root@node1 ~]# service drbd stop

Stopping all DRBD resources: .

[root@node1 ~]# ssh node2 'service drbd stop'

Stopping all DRBD resources: .

[root@node1 ~]#

[root@node1 ~]# chkconfig drbd off

[root@node1 ~]# ssh node2 'chkconfig drbd off'

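# Unmount, demote, stop the service, and disable start at boot, so that pacemaker alone manages DRBD. Do the same on both nodes

Configure pacemaker

# The basic cluster setup is not repeated here; it was covered in the previous article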

[root@node1 ~]# crm status

Last updated: Wed Apr 23 21:00:11 2014

Last change: Wed Apr 23 18:59:58 2014 via cibadmin on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node2.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

0 Resources configured

Online: [ node1.soul.com node2.soul.com ]

crm(live)configure# show

node node1.soul.com

node node2.soul.com

property $id="cib-bootstrap-options" \

dc-version="1.1.10-14.el6-368c726" \

cluster-infrastructure="classic openais (with plugin)" \

expected-quorum-votes="2" \

stonith-enabled="false" \

no-quorum-policy="ignore"

rsc_defaults $id="rsc-options" \

resource-stickiness="100"

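# With the cluster configured, view the current settings

Configure DRBD as a highly available resource

# The DRBD resource agent is provided by LINBIT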

crm(live)ra# info ocf:linbit:drbd

Parameters (* denotes required, [] the default):

drbd_resource* (string): drbd resource name

The name of the drbd resource from the drbd.conf file.

drbdconf (string, [/etc/drbd.conf]): Path to drbd.conf

Full path to the drbd.conf file.

....

# View the agent's details

# Define the resource

crm(live)configure# primitive webdrbd ocf:linbit:drbd params drbd_resource=web op monitor role=Master interval=40s timeout=30s op monitor role=Slave interval=60s timeout=30s op start timeout=240s op stop timeout=100s

crm(live)configure# verify

crm(live)configure# master MS_webdrbd webdrbd meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"

crm(live)configure# verify

crm(live)configure# show

node node1.soul.com

node node2.soul.com

primitive webdrbd ocf:linbit:drbd \

params drbd_resource="web" \

op monitor role="Master" interval="40s" timeout="30s" \

op monitor role="Slave" interval="60s" timeout="30s" \

op start timeout="240s" interval="0" \

op stop timeout="100s" interval="0"

ms MS_webdrbd webdrbd \

meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"

crm(live)configure# commit

Once the resource is defined, check the current status

crm(live)# status

Last updated: Wed Apr 23 21:39:49 2014

Last change: Wed Apr 23 21:39:32 2014 via cibadmin on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node1.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

2 Resources configured

Online: [ node1.soul.com node2.soul.com ]

Master/Slave Set: MS_webdrbd [webdrbd]

Masters: [ node1.soul.com ]

Slaves: [ node2.soul.com ]

# Put node1 into standby to test failover

crm(live)# node standby node1.soul.com

crm(live)# status

Last updated: Wed Apr 23 21:44:31 2014

Last change: Wed Apr 23 21:44:27 2014 via crm_attribute on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node1.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

2 Resources configured

Node node1.soul.com: standby

Online: [ node2.soul.com ]

Master/Slave Set: MS_webdrbd [webdrbd]

Masters: [ node2.soul.com ]

Stopped: [ node1.soul.com ]

# node2 has automatically become the master
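When a failover test like this is repeated often, it can help to extract the current master from the `crm status` text rather than reading it by eye. The sketch below is a minimal Python example that assumes the `Masters: [ ... ]` line format shown in the output above; `current_masters` is a name made up for this example.

```python
import re
from typing import List


def current_masters(status_text: str) -> List[str]:
    """Return the node names listed on the 'Masters: [ ... ]' line, if any."""
    match = re.search(r"Masters:\s*\[\s*(.*?)\s*\]", status_text)
    if match is None:
        return []
    return match.group(1).split()
```

An empty result means that no node currently holds the Master role, for example while a promotion is still in progress.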

V. Defining the webfs Filesystem Resource on Top of DRBD

crm(live)configure# primitive webfs ocf:heartbeat:Filesystem params device="/dev/drbd0" directory="/var/www/html" fstype="ext4" op monitor interval=30s timeout=40s on-fail=restart op start timeout=60s op stop timeout=60s

crm(live)configure# verify

crm(live)configure# colocation webfs_with_MS_webdrbd_M inf: webfs MS_webdrbd:Master

crm(live)configure# verify

crm(live)configure# order webfs_after_MS_webdrbd_M inf: MS_webdrbd:promote webfs:start

crm(live)configure# verify

crm(live)configure# commit

crm(live)# status

Last updated: Wed Apr 23 21:57:23 2014

Last change: Wed Apr 23 21:57:11 2014 via cibadmin on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node1.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

3 Resources configured

Online: [ node1.soul.com node2.soul.com ]

Master/Slave Set: MS_webdrbd [webdrbd]

Masters: [ node2.soul.com ]

Slaves: [ node1.soul.com ]

webfs (ocf::heartbeat:Filesystem): Started node2.soul.com

[root@node2 ~]# ls /var/www/html/

issue lost+found

# The filesystem is mounted on node2

# Test failover

crm(live)# node standby node2.soul.com

crm(live)# status

Last updated: Wed Apr 23 21:59:00 2014

Last change: Wed Apr 23 21:58:51 2014 via crm_attribute on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node1.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

3 Resources configured

Node node2.soul.com: standby

Online: [ node1.soul.com ]

Master/Slave Set: MS_webdrbd [webdrbd]

Masters: [ node1.soul.com ]

Stopped: [ node2.soul.com ]

webfs (ocf::heartbeat:Filesystem): Started node1.soul.com

[root@node1 ~]# ls /var/www/html/

issue lost+found

[root@node1 ~]#

# Failover succeeded

VI. Adding webip and webserver for a Highly Available Web Service

crm(live)# configure primitive webip ocf:heartbeat:IPaddr params ip="192.168.0.222" op monitor interval=30s on-fail=restart

crm(live)configure# primitive webserver lsb:httpd op monitor interval=30s timeout=30s on-fail=restart

crm(live)configure# group webcluster webip webfs webserver

INFO: resource references in colocation:webfs_with_MS_webdrbd_M updated

INFO: resource references in order:webfs_after_MS_webdrbd_M updated

crm(live)configure# show

group webcluster webip webfs webserver

ms MS_webdrbd webdrbd \

meta master-max="1" master-node-max="1" clone-max="2" clone-node-max="1" notify="true"

colocation webfs_with_MS_webdrbd_M inf: webcluster MS_webdrbd:Master

order webfs_after_MS_webdrbd_M inf: MS_webdrbd:promote webcluster:start

# The configuration is as intended

crm(live)# status

Last updated: Wed Apr 23 23:02:26 2014

Last change: Wed Apr 23 22:55:36 2014 via cibadmin on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node1.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

5 Resources configured

Node node2.soul.com: standby

Online: [ node1.soul.com ]

Master/Slave Set: MS_webdrbd [webdrbd]

Masters: [ node1.soul.com ]

Stopped: [ node2.soul.com ]

Resource Group: webcluster

webip (ocf::heartbeat:IPaddr): Started node1.soul.com

webfs (ocf::heartbeat:Filesystem): Started node1.soul.com

webserver (lsb:httpd): Started node1.soul.com

# Test failover

crm(live)# node standby node1.soul.com

crm(live)# status

Last updated: Wed Apr 23 23:03:18 2014

Last change: Wed Apr 23 23:03:15 2014 via crm_attribute on node1.soul.com

Stack: classic openais (with plugin)

Current DC: node1.soul.com - partition with quorum

Version: 1.1.10-14.el6-368c726

2 Nodes configured, 2 expected votes

5 Resources configured

Node node1.soul.com: standby

Online: [ node2.soul.com ]

Master/Slave Set: MS_webdrbd [webdrbd]

Masters: [ node2.soul.com ]

Stopped: [ node1.soul.com ]

Resource Group: webcluster

webip (ocf::heartbeat:IPaddr): Started node2.soul.com

webfs (ocf::heartbeat:Filesystem): Started node2.soul.com

webserver (lsb:httpd): Started node2.soul.com

That completes the DRBD basics and automatic failover with pacemaker.

If you spot any mistakes, corrections are welcome.