Scraping the Lyrics of Every Song by an Artist on NetEase Cloud Music

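Most pages on NetEase Cloud Music are rendered by JavaScript, lyrics included, so the lyrics cannot be scraped by parsing the page HTML directly. This article explains how to download the lyrics of every song by a given artist, using a lyric API provided by NetEase together with ordinary scraping techniques.

Fortunately, NetEase provides an endpoint for lyrics: http://music.163.com/api/song/lyric?id=song_id&lv=1&kv=1&tv=-1, where song_id stands for the id of the song.

The key to getting the lyrics is therefore finding the id of each song.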
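As a minimal sketch, the lyric URL for one song can be assembled with the standard library. The helper name build_lyric_url is hypothetical and the id 12345 is only a placeholder; the query parameters are copied from the endpoint quoted above.

```python
from urllib.parse import urlencode

LYRIC_API = "http://music.163.com/api/song/lyric"


def build_lyric_url(song_id):
    """Return the lyric API URL for one song id (hypothetical helper)."""
    # Parameter values are copied verbatim from the endpoint quoted in the article.
    params = {"id": song_id, "lv": 1, "kv": 1, "tv": -1}
    return LYRIC_API + "?" + urlencode(params)


if __name__ == "__main__":
    # 12345 is a placeholder, not a real song id.
    print(build_lyric_url(12345))
```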

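On the site, after you click through from the home page to the target artist, the artist's page shows only the 50 most popular songs. So how do we get the lyrics of all of the artist's songs? The task can be split into three steps:

- Get the artist id

- Get the ids of all of the artist's songs

- Get the lyrics of all of those songs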

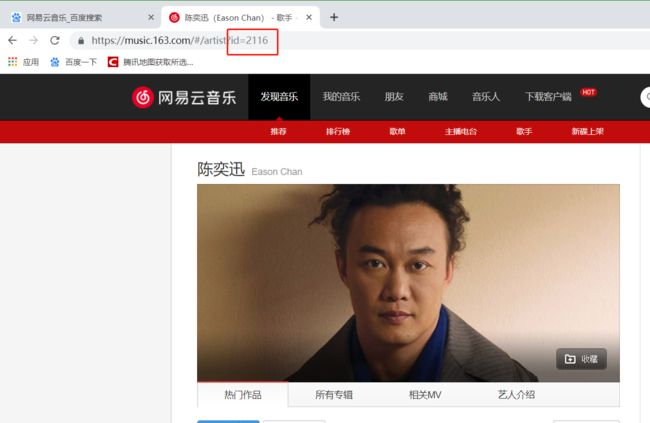

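Getting the artist id

NetEase Cloud Music assigns a numeric id to every artist. Click the artist section on the home page, find the artist you want and open their page; the id appears in the page URL.

For example, the id of Eason Chan (陈奕迅) on NetEase Cloud Music is 2116.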
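If you would rather not read the id off the address bar by hand, it can be pulled out of the URL programmatically. The sketch below uses only the standard library; the function name artist_id_from_url is an assumption made for illustration. It handles both the browser form of the URL (with '#') and the plain form.

```python
from urllib.parse import urlparse, parse_qs


def artist_id_from_url(url):
    """Extract the `id` query parameter from an artist page URL, or None."""
    parsed = urlparse(url)
    # In the browser URL the real path sits after '#', so the query string
    # ends up inside the fragment ("/artist?id=2116") rather than in .query.
    query = parsed.query
    if not query and "?" in parsed.fragment:
        query = parsed.fragment.split("?", 1)[1]
    ids = parse_qs(query).get("id")
    return ids[0] if ids else None


if __name__ == "__main__":
    print(artist_id_from_url("https://music.163.com/#/artist?id=2116"))
```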

Getting all song ids

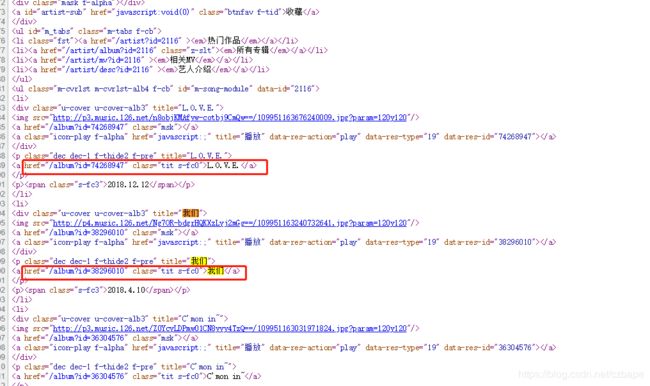

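The artist's home page lists the artist's popular songs, but only 50 of them. The URL shown in the browser's address bar is https://music.163.com/#/artist?id=2116, but that is not the URL from which the song names can actually be scraped. Remove the '#' and you get a page that can be fetched directly: https://music.163.com/artist?id=2116. Viewing the source of that page, you can find each song's name and its id at the position shown in the screenshot.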

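The next step is to extract that content with BeautifulSoup4 or lxml. The song name and id sit directly in a tags, but the page contains a great many a tags, so how do we pick out the right ones? We go through the parent element: find the element whose class attribute is 'f-hide' (a ul, as the XPath in the code uses) and take the a tags beneath it. Each a tag's href then gives the song_id and its text gives the song_name. This article uses the lxml module and locates tags with XPath; the same can be done with the methods BeautifulSoup4 provides.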
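To make the idea concrete without any network access, here is a rough sketch that extracts (song_id, song_name) pairs from a made-up HTML fragment. Note the assumptions: the sample markup is invented to mirror the structure described above, and the standard library's html.parser is swapped in for lxml so the snippet runs without third-party packages. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class HiddenSongListParser(HTMLParser):
    """Collect (song_id, song_name) pairs from <a> tags inside <ul class="f-hide">."""

    def __init__(self):
        super().__init__()
        self.in_list = False    # True while inside <ul class="f-hide">
        self.current_id = None  # id taken from the <a> that was just opened
        self.songs = []

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "ul" and "f-hide" in (attrs.get("class") or "").split():
            self.in_list = True
        elif tag == "a" and self.in_list:
            # href looks like "/song?id=111"; the id is the part after '='.
            self.current_id = (attrs.get("href") or "").split("=")[-1]

    def handle_data(self, data):
        if self.current_id is not None:
            self.songs.append((self.current_id, data.strip()))
            self.current_id = None

    def handle_endtag(self, tag):
        if tag == "ul":
            self.in_list = False
        elif tag == "a":
            self.current_id = None


def parse_songs(html_text):
    parser = HiddenSongListParser()
    parser.feed(html_text)
    return parser.songs


# Invented sample markup; the real page is much larger.
SAMPLE = '''
<div><a href="/other?id=9">ignore me</a>
<ul class="f-hide">
  <li><a href="/song?id=111">Song A</a></li>
  <li><a href="/song?id=222">Song B</a></li>
</ul></div>
'''

if __name__ == "__main__":
    print(parse_songs(SAMPLE))
```

The link outside the f-hide list is skipped, which is the whole point of anchoring the search on the parent element.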

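This approach only yields the top 50. How do we get the ids of all songs? The artist's page also lists all of the artist's albums, and opening an album shows every song on it. If we can collect the songs of every album, we have the ids of all of the artist's songs. So this part splits into two steps:

- Get all album ids

- Get all song ids under each album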

Getting all album ids

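The URL for the album list is: "https://music.163.com/artist/album?id="+str(singer_id)+"&limit=150&offset=0". It takes three parameters: id is the artist id, limit is the number of albums shown per page, and offset is the position at which the page starts. Anyone who has worked with databases will recognize the last two. If they are unfamiliar, simply set limit to a large value so that all albums appear on a single page.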
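For readers who do want to page through the albums instead of raising limit, the sketch below shows how limit and offset combine, in the same way as LIMIT/OFFSET in SQL. It only builds URLs and makes no requests. The function name album_page_urls and the total_albums argument are hypothetical; in practice you would have to know or discover the album count yourself.

```python
from urllib.parse import urlencode

ALBUM_LIST_URL = "https://music.163.com/artist/album"


def album_page_urls(singer_id, total_albums, limit=12):
    """Yield one album-list URL per page of `limit` albums."""
    for offset in range(0, total_albums, limit):
        query = urlencode({"id": singer_id, "limit": limit, "offset": offset})
        yield ALBUM_LIST_URL + "?" + query


if __name__ == "__main__":
    # 30 albums at 12 per page gives offsets 0, 12 and 24.
    for url in album_page_urls(2116, total_albums=30, limit=12):
        print(url)
```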

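For Eason Chan, setting limit to 150 is enough to cover all albums.

Viewing the source of this page, https://music.163.com/artist/album?id=2116&limit=150&offset=0, we can find every album and its id:

XPath or BeautifulSoup4 can then pull out the album names and album ids. As before, they are all in a tags.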

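    # Excerpt from the CrawlerLyric class; `headers` is the module-level dict
    # defined in the complete listing at the end of the article.
    album_url = "https://music.163.com/artist/album?id="+str(singer_id)+"&limit=150&offset=0"
    html_album = self.get_url_html(album_url)
    album_ids, album_names = self.get_album(html_album)

    def get_url_html(self, url):
        # Fetch the page and parse it into an lxml element tree.
        with requests.Session() as session:
            response = session.get(url, headers=headers)
            text = response.text
            html = etree.HTML(text)
        return html

    def get_album(self, html):
        # Album links sit under <ul id="m-song-module">.
        album_ids = html.xpath("//ul[@id='m-song-module']/li/p/a/@href")
        album_names = html.xpath("//ul[@id='m-song-module']/li/p/a/text()")
        # Each href ends in "id=<album_id>"; keep only the part after '='.
        album_ids = [ids.split('=')[-1] for ids in album_ids]
        return album_ids, album_names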

This step gives us the ids and names of all albums.

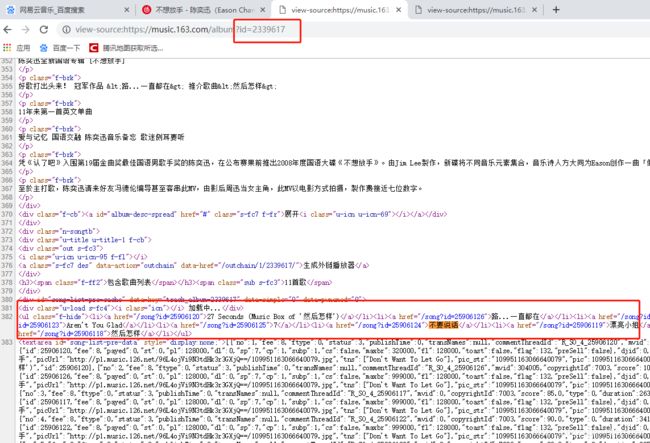

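Getting all song ids in an album

The previous step gave us all album ids. How do we get every song on an album from its id? The URL is:

"https://music.163.com/album?id="+str(album_id)

Take Eason Chan's album 《不想放手》 as an example; its id is 2339617. View the page source, as shown in the screenshot:

Scrape the relevant tags to get each song's id and name.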

    def get_all_song_id(self, album_ids):
        # Visit every album page and collect its song ids and names.
        # The result is one list per album; the caller flattens it later.
        with requests.Session() as session:
            all_song_ids, all_song_names = [], []
            for album_id in album_ids:
                one_album_url = "https://music.163.com/album?id="+str(album_id)
                response = session.get(one_album_url, headers=headers)
                text = response.text
                html = etree.HTML(text)
                album_song_ids = html.xpath("//ul[@class='f-hide']/li/a/@href")
                album_song_names = html.xpath("//ul[@class='f-hide']/li/a/text()")
                # Each href ends in "id=<song_id>"; keep only the part after '='.
                album_song_ids = [ids.split('=')[-1] for ids in album_song_ids]
                all_song_ids.append(album_song_ids)
                all_song_names.append(album_song_names)
        return all_song_ids, all_song_names

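This step gives us the names and ids of all of the artist's songs.

Getting the lyrics of all songs

The lyrics cannot be scraped from the web page directly, but we can get them through the API: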

http://music.163.com/api/song/lyric?id=song_id&lv=1&kv=1&tv=-1

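This URL returns JSON, so the result can be parsed with Python's json module. This article uses simplejson, which provides the same loads function and works equally well here.

Fetching the JSON result: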

    def get_url_json(self, url):
        # Fetch the URL and decode the response body as JSON.
        with requests.Session() as session:
            response = session.get(url, headers=headers)
            text = response.text
            text_json = simplejson.loads(text)
        return text_json
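The decoding step can be tried offline with the standard json module. In the sketch below the payload is invented: it only mirrors the two keys the article's code reads ('lrc' and then 'lyric') and should not be taken as the full shape of the real response. The function name lyric_from_response is hypothetical.

```python
import json

# Made-up payload containing only the fields the article's code reads.
SAMPLE_RESPONSE = '{"lrc": {"lyric": "[00:01.00]first line\\n[00:05.20]second line"}}'


def lyric_from_response(text):
    """Decode the JSON text and return the raw lyric string, or None if absent."""
    data = json.loads(text)
    # `or {}` covers both a missing 'lrc' key and an explicit null value.
    lrc = data.get("lrc") or {}
    return lrc.get("lyric")


if __name__ == "__main__":
    print(lyric_from_response(SAMPLE_RESPONSE))
```

Returning None instead of raising makes it easy for the caller to skip tracks that have no lyrics.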

Parsing the lyrics out of the JSON:

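    def parse_lyric(self, text_json):
        try:
            lyric = text_json.get('lrc').get('lyric')
            # Strip the bracketed tags such as timestamps from every line.
            regex = re.compile(r'\[.*\]')
            final_lyric = re.sub(regex, '', lyric).strip()
            return final_lyric
        except AttributeError as k:
            # No 'lrc' entry (for example an instrumental track): .get('lrc')
            # returns None, so the chained .get raises. The method then returns None.
            print(k)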
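The tag stripping can be checked on a small sample. The sketch below is a variation on the article's regex, not the same expression: it uses a non-greedy pattern so that only each bracketed tag is removed rather than everything between the first '[' and the last ']' on a line, and it also drops lines that end up empty. The sample text and the function name strip_time_tags are made up for illustration.

```python
import re

# Non-greedy: match one bracketed tag at a time.
TIME_TAG = re.compile(r"\[.*?\]")


def strip_time_tags(lrc_text):
    """Remove bracketed tags from every line and drop lines left empty."""
    lines = (TIME_TAG.sub("", line).strip() for line in lrc_text.splitlines())
    return "\n".join(line for line in lines if line)


if __name__ == "__main__":
    sample = "[00:01.00]first line\n[00:05.20] second line\n[00:09.00]"
    print(strip_time_tags(sample))
```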

The main flow for fetching all of an artist's lyrics is shown below; this time the scraped results are saved to files:

    def get_all_song_lyric(self, singer_id):
        album_url = "https://music.163.com/artist/album?id="+str(singer_id)+"&limit=150&offset=0"
        html_album = self.get_url_html(album_url)
        album_ids, album_names = self.get_album(html_album)
        all_song_ids, all_song_names = self.get_all_song_id(album_ids)
        # Flatten the per-album lists; the initial [] keeps reduce from
        # failing when no album was found.
        all_song_ids = reduce(operator.add, all_song_ids, [])
        all_song_names = reduce(operator.add, all_song_names, [])
        print(all_song_ids)
        print(all_song_names)
        for song_id, song_name in zip(all_song_ids, all_song_names):
            url_song = 'http://music.163.com/api/song/lyric?' + 'id=' + str(song_id) + '&lv=1&kv=1&tv=-1'
            json_text = self.get_url_json(url_song)
            print(song_name)
            try:
                # The output folder is hard-coded and must already exist.
                with open('D:/lyric/陈奕迅/'+str(song_name)+".txt", 'w+', encoding='utf-8') as f:
                    f.write(self.parse_lyric(json_text))
                    # print(song_name)
                    # print(self.parse_lyric(json_text))
                    # print('-' * 30)
            except Exception as e:
                # Skip songs without lyrics or whose names are not valid file names.
                pass
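Two details of the flow above are easy to get wrong: flattening the per-album lists, and writing files whose names come from song titles. The sketch below shows one possible way to handle both with the standard library. All three helper names are hypothetical, and the set of replaced characters is the usual list of characters Windows rejects in file names.

```python
import re
from itertools import chain
from pathlib import Path


def flatten(nested):
    """Flatten a list of lists into one list; an empty input gives []."""
    return list(chain.from_iterable(nested))


def safe_filename(name):
    """Replace characters that Windows does not allow in file names."""
    return re.sub(r'[\\/:*?"<>|]', "_", name).strip()


def save_lyric(directory, song_name, lyric):
    """Write one lyric to <directory>/<song_name>.txt as UTF-8 and return the path."""
    folder = Path(directory)
    folder.mkdir(parents=True, exist_ok=True)  # create the folder if it is missing
    path = folder / (safe_filename(song_name) + ".txt")
    path.write_text(lyric, encoding="utf-8")
    return path


if __name__ == "__main__":
    print(flatten([["1", "2"], [], ["3"]]))
    print(safe_filename("AC/DC: Live?"))
```

Creating the folder up front and sanitizing the name means a failed write is a real error worth reporting, rather than something to swallow silently.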

Tips:

- Some tracks are instrumentals with no lyrics, and parsing them raises an error, so the parsing has to be wrapped in a try block.

      def parse_lyric(self, text_json):
          try:
              lyric = text_json.get('lrc').get('lyric')
              regex = re.compile(r'\[.*\]')
              final_lyric = re.sub(regex, '', lyric).strip()
              return final_lyric
          except AttributeError as k:
              print(k)

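- This article uses the lxml module with XPath expressions to read tag content and attributes, so the paths must match the page structure exactly.

The complete code: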

    import requests
    from lxml import etree
    import simplejson
    import re
    import operator
    from functools import reduce

    ua = 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/63.0.3239.132 Safari/537.36'
    headers = {
        'User-agent': ua
    }


    class CrawlerLyric:
        def __init__(self):
            self.author_name = ""

        def get_url_html(self, url):
            # Fetch the page and parse it into an lxml element tree.
            with requests.Session() as session:
                response = session.get(url, headers=headers)
                text = response.text
                html = etree.HTML(text)
            return html

        def get_url_json(self, url):
            # Fetch the URL and decode the response body as JSON.
            with requests.Session() as session:
                response = session.get(url, headers=headers)
                text = response.text
                text_json = simplejson.loads(text)
            return text_json

        def parse_song_id(self, html):
            # Used for the top-50 list on the artist's home page.
            song_ids = html.xpath("//ul[@class='f-hide']//a/@href")
            song_names = html.xpath("//ul[@class='f-hide']//a/text()")
            self.author_name = html.xpath('//title/text()')
            # Each href looks like "/song?id=<song_id>"; drop the 9-character prefix.
            song_ids = [ids[9:len(ids)] for ids in song_ids]
            return self.author_name, song_ids, song_names

        def parse_lyric(self, text_json):
            try:
                lyric = text_json.get('lrc').get('lyric')
                # Strip the bracketed tags such as timestamps from every line.
                regex = re.compile(r'\[.*\]')
                final_lyric = re.sub(regex, '', lyric).strip()
                return final_lyric
            except AttributeError as k:
                # No 'lrc' entry (for example an instrumental track); returns None.
                print(k)

        def get_album(self, html):
            # Album links sit under <ul id="m-song-module">.
            album_ids = html.xpath("//ul[@id='m-song-module']/li/p/a/@href")
            album_names = html.xpath("//ul[@id='m-song-module']/li/p/a/text()")
            album_ids = [ids.split('=')[-1] for ids in album_ids]
            return album_ids, album_names

        def get_top50(self, sing_id):
            # Print the lyrics of the 50 popular songs on the artist's home page.
            url_singer = 'https://music.163.com/artist?id='+str(sing_id)
            html_50 = self.get_url_html(url_singer)
            author_name, song_ids, song_names = self.parse_song_id(html_50)
            # print(author_name, song_ids, song_names)
            for song_id, song_name in zip(song_ids, song_names):
                url_song = 'http://music.163.com/api/song/lyric?' + 'id=' + str(song_id) + '&lv=1&kv=1&tv=-1'
                json_text = self.get_url_json(url_song)
                print(song_name)
                print(self.parse_lyric(json_text))
                print('-' * 30)

        def get_all_song_id(self, album_ids):
            # Visit every album page and collect its song ids and names,
            # one list per album.
            with requests.Session() as session:
                all_song_ids, all_song_names = [], []
                for album_id in album_ids:
                    one_album_url = "https://music.163.com/album?id="+str(album_id)
                    response = session.get(one_album_url, headers=headers)
                    text = response.text
                    html = etree.HTML(text)
                    album_song_ids = html.xpath("//ul[@class='f-hide']/li/a/@href")
                    album_song_names = html.xpath("//ul[@class='f-hide']/li/a/text()")
                    album_song_ids = [ids.split('=')[-1] for ids in album_song_ids]
                    all_song_ids.append(album_song_ids)
                    all_song_names.append(album_song_names)
            return all_song_ids, all_song_names

        def get_all_song_lyric(self, singer_id):
            album_url = "https://music.163.com/artist/album?id="+str(singer_id)+"&limit=150&offset=0"
            html_album = self.get_url_html(album_url)
            album_ids, album_names = self.get_album(html_album)
            all_song_ids, all_song_names = self.get_all_song_id(album_ids)
            # Flatten the per-album lists; the initial [] keeps reduce from
            # failing when no album was found.
            all_song_ids = reduce(operator.add, all_song_ids, [])
            all_song_names = reduce(operator.add, all_song_names, [])
            print(all_song_ids)
            print(all_song_names)
            for song_id, song_name in zip(all_song_ids, all_song_names):
                url_song = 'http://music.163.com/api/song/lyric?' + 'id=' + str(song_id) + '&lv=1&kv=1&tv=-1'
                json_text = self.get_url_json(url_song)
                print(song_name)
                try:
                    # The output folder is hard-coded and must already exist.
                    with open('D:/lyric/陈奕迅/'+str(song_name)+".txt", 'w+', encoding='utf-8') as f:
                        f.write(self.parse_lyric(json_text))
                        # print(song_name)
                        # print(self.parse_lyric(json_text))
                        # print('-' * 30)
                except Exception as e:
                    # Skip songs without lyrics or whose names are not valid file names.
                    pass


    if __name__ == "__main__":
        sing_id = '2116'  # Eason Chan (陈奕迅)
        sing_id_chenli = '1007170'  # Chen Li (陈粒)
        c = CrawlerLyric()
        c.get_all_song_lyric(sing_id_chenli)