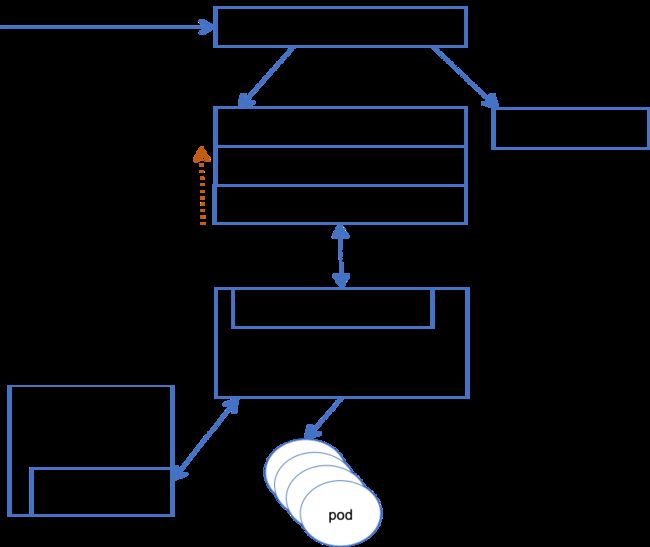

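Kubernetes' new-generation monitoring model consists of a core metrics pipeline and a third-party, non-core monitoring pipeline. The core metrics pipeline is made up of the kubelet, metrics-server, and the API exposed through the API server; it covers cumulative CPU usage, real-time memory usage, Pod resource usage, and container disk usage. The non-core monitoring pipeline collects all kinds of metrics from the OS and serves them to end users, storage systems, the HPA, and so on.

The monitoring system collects two kinds of metrics: resource metrics and custom metrics.

metrics-server implements the resource metrics API. It provides the core metrics, including cumulative CPU usage, real-time memory usage, Pod resource usage, and container disk usage. These metrics are supplied through the kubelet, metrics-server, and the API server.

Prometheus is the provider of custom metrics. The data it collects must still be converted by kube-state-metrics and then exposed by k8s-prometheus-adapter as a metrics API before the Kubernetes cluster can read it. Prometheus collects all kinds of metrics from the system and, after processing, serves them to end users, storage systems, and the HPA; this data includes both the core metrics and many non-core metrics.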

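The resource metrics API is responsible for collecting resource metrics, but it requires extending the API server. The aggregator can aggregate metrics-server with the API server to achieve this extension. The extended API server functionality (that is, the resource metrics API, supported since Kubernetes 1.8) can then be used to collect resource metrics. Components such as kubectl top and the HPA must rely on the resource metrics API (earlier versions relied on Heapster).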
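The aggregation step can be pictured with a small Python sketch. It is a simplified model, not kube-aggregator's code. A request under `/apis/<group>/<version>/...` is matched to an APIService named `<version>.<group>`, and that object names the Service that actually serves the request. The `API_SERVICES` dict is a hypothetical stand-in for the APIService objects in a cluster. Its one entry mirrors the `v1beta1.metrics.k8s.io` APIService in the metrics-server manifest below.

```python
from typing import Optional, Tuple

# Hypothetical stand-in for APIService objects:
# APIService name ("<version>.<group>") -> (namespace, service name).
API_SERVICES = {
    "v1beta1.metrics.k8s.io": ("kube-system", "metrics-server"),
}


def route(path: str) -> Optional[Tuple[str, str]]:
    """Return the (namespace, service) that would serve `path`,
    or None if the main API server handles it itself."""
    parts = path.strip("/").split("/")
    # Aggregated APIs live under /apis/<group>/<version>/...
    if len(parts) < 3 or parts[0] != "apis":
        return None
    group, version = parts[1], parts[2]
    return API_SERVICES.get(f"{version}.{group}")


print(route("/apis/metrics.k8s.io/v1beta1/nodes"))  # ('kube-system', 'metrics-server')
print(route("/api/v1/pods"))                        # None
```

The real aggregator also has "local" APIServices that the main API server handles directly. This sketch folds them into the `None` case.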

The HPA scales workloads out or in based on metrics such as CPU, memory, I/O, and network connections (the earlier Heapster could provide only CPU and memory metrics).
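The core of the HPA's decision is the rule from the Kubernetes HPA documentation: desiredReplicas = ceil(currentReplicas × currentMetricValue / targetMetricValue). The Python sketch below applies that rule, including the default 0.1 tolerance within which no scaling happens. It deliberately leaves out min/max replica bounds, stabilization windows, and Pods with missing metrics. Treat it as an illustration rather than the controller's exact behavior.

```python
import math


def desired_replicas(current_replicas: int, current_value: float,
                     target_value: float, tolerance: float = 0.1) -> int:
    """Basic HPA rule: ceil(current_replicas * current_value / target_value).

    No change is made when the ratio is within `tolerance` of 1.0.
    """
    ratio = current_value / target_value
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas  # close enough to the target: keep as is
    return math.ceil(current_replicas * ratio)


# 3 Pods averaging 90% CPU against a 60% target: scale out
print(desired_replicas(3, 90, 60))   # 5
# 4 Pods averaging 20% CPU against a 50% target: scale in
print(desired_replicas(4, 20, 50))   # 2
```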

1. metrics-server

metrics-server runs as a Pod hosted on the Kubernetes cluster, and kube-aggregator aggregates it with the original API server to extend the API. It is now a prerequisite for kubectl top and the HPA.

Deploy metrics-server as follows:

Reference: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/metrics-server

[root@k8s-master-dev metric-v0.3]# cat metrics-server.yaml
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: system:metrics-server
rules:
- apiGroups:
  - ""
  resources:
  - pods
  - nodes
  - nodes/stats
  - namespaces
  verbs:
  - get
  - list
  - watch
- apiGroups:
  - "extensions"
  resources:
  - deployments
  verbs:
  - get
  - list
  - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: system:metrics-server
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:metrics-server
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: metrics-server-auth-reader
  namespace: kube-system
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: extension-apiserver-authentication-reader
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: metrics-server:system:auth-delegator
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:auth-delegator
subjects:
- kind: ServiceAccount
  name: metrics-server
  namespace: kube-system
---
apiVersion: apiregistration.k8s.io/v1beta1
kind: APIService
metadata:
  name: v1beta1.metrics.k8s.io
spec:
  service:
    name: metrics-server
    namespace: kube-system
  group: metrics.k8s.io
  version: v1beta1
  insecureSkipTLSVerify: true
  groupPriorityMinimum: 100
  versionPriority: 100
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    k8s-app: metrics-server
spec:
  selector:
    matchLabels:
      k8s-app: metrics-server
  template:
    metadata:
      name: metrics-server
      labels:
        k8s-app: metrics-server
    spec:
      serviceAccountName: metrics-server
      volumes:
      # mount in tmp so we can safely use from-scratch images and/or read-only containers
      - name: tmp-dir
        emptyDir: {}
      containers:
      - name: metrics-server
        image: k8s.gcr.io/metrics-server-amd64:v0.3.0
        imagePullPolicy: IfNotPresent
        command:
        - /metrics-server
        - --kubelet-insecure-tls
        - --kubelet-preferred-address-types=InternalIP
        volumeMounts:
        - name: tmp-dir
          mountPath: /tmp
---
apiVersion: v1
kind: Service
metadata:
  name: metrics-server
  namespace: kube-system
  labels:
    kubernetes.io/name: "Metrics-server"
spec:
  selector:
    k8s-app: metrics-server
  ports:
  - port: 443
    protocol: TCP
    targetPort: 443

[root@k8s-master-dev metric-v0.3]# kubectl apply -f metrics-server.yaml
[root@k8s-master-dev metric-v0.3]# cd
[root@k8s-master-dev ~]# kubectl api-versions
admissionregistration.k8s.io/v1beta1
apiextensions.k8s.io/v1beta1
apiregistration.k8s.io/v1
apiregistration.k8s.io/v1beta1
apps/v1
apps/v1beta1
apps/v1beta2
authentication.k8s.io/v1
authentication.k8s.io/v1beta1
authorization.k8s.io/v1
authorization.k8s.io/v1beta1
autoscaling/v1
autoscaling/v2beta1
batch/v1
batch/v1beta1
certificates.k8s.io/v1beta1
custom.metrics.k8s.io/v1beta1
events.k8s.io/v1beta1
extensions/v1beta1
metrics.k8s.io/v1beta1
networking.k8s.io/v1
policy/v1beta1
rbac.authorization.k8s.io/v1
rbac.authorization.k8s.io/v1beta1
scheduling.k8s.io/v1beta1
storage.k8s.io/v1
storage.k8s.io/v1beta1
v1
[root@k8s-master-dev ~]#
[root@k8s-master-dev ~]# kubectl top nodes
NAME             CPU(cores)   CPU%   MEMORY(bytes)   MEMORY%
k8s-master-dev   299m         3%     1884Mi          11%
k8s-node1-dev    125m         1%     4181Mi          26%
k8s-node2-dev    66m          3%     2736Mi          17%
k8s-node3-dev    145m         1%     2686Mi          34%
[root@k8s-master-dev metric-v0.3]# kubectl top pods
NAME      CPU(cores)   MEMORY(bytes)
mongo-0   12m          275Mi
mongo-1   11m          251Mi
mongo-2   8m           271Mi

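[root@k8s-master-dev metric-v0.3]#

Once metrics-server is deployed, the metrics API (metrics.k8s.io/v1beta1) appears in the api-versions list, as shown above, and kubectl top can be used to view the resource usage of nodes or Pods.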
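kubectl top reads from the resource metrics API. The raw data can be fetched with `kubectl get --raw /apis/metrics.k8s.io/v1beta1/nodes`. The sketch below assumes a NodeMetricsList-shaped response and turns its CPU and memory quantities into numbers. The `sample` dict is hand-written for illustration, not captured API output. The quantity parsers only understand the n/u/m and Ki/Mi/Gi suffixes, which is a deliberate subset of the Kubernetes quantity format.

```python
# Binary suffixes used for memory quantities (subset of the Kubernetes format).
MEM_SUFFIXES = {"Ki": 1024, "Mi": 1024**2, "Gi": 1024**3}
# Decimal suffixes used for CPU quantities.
CPU_SUFFIXES = {"n": 1e-9, "u": 1e-6, "m": 1e-3}


def parse_cpu(q: str) -> float:
    """Convert a CPU quantity such as '299m' to cores."""
    if q[-1] in CPU_SUFFIXES:
        return float(q[:-1]) * CPU_SUFFIXES[q[-1]]
    return float(q)


def parse_memory(q: str) -> int:
    """Convert a memory quantity such as '1884Mi' to bytes."""
    if q[-2:] in MEM_SUFFIXES:
        return int(q[:-2]) * MEM_SUFFIXES[q[-2:]]
    return int(q)


def summarize(node_metrics_list: dict) -> dict:
    """Map node name -> (CPU cores, memory in MiB) from a NodeMetricsList."""
    out = {}
    for item in node_metrics_list["items"]:
        usage = item["usage"]
        out[item["metadata"]["name"]] = (
            parse_cpu(usage["cpu"]),
            parse_memory(usage["memory"]) // 1024**2,
        )
    return out


# Hand-written sample shaped like the API response (illustrative values).
sample = {
    "kind": "NodeMetricsList",
    "apiVersion": "metrics.k8s.io/v1beta1",
    "items": [
        {"metadata": {"name": "k8s-master-dev"},
         "usage": {"cpu": "299m", "memory": "1929216Ki"}},
    ],
}
print(summarize(sample))
```

The CPU% and MEMORY% columns of kubectl top are computed against each node's allocatable capacity, which this sketch does not fetch.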

To install the latest version instead, clone the repository and apply its manifests:

git clone https://github.com/kubernetes-incubator/metrics-server.git
cd metrics-server/deploy/1.8+/
kubectl apply -f ./

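Sometimes the metrics-server Pod starts normally, but kubectl top node reports that metrics-server is unavailable. If the Pod's logs also show errors (kubectl logs -n kube-system followed by the metrics-server-* Pod name), there are two likely causes:

- The ClusterRole rules in resource-reader.yaml lack the namespaces resource.
- The container in metrics-server-deployment.yaml lacks the lines below.

In those lines, --kubelet-insecure-tls skips verification of the kubelets' serving certificates. --kubelet-preferred-address-types=InternalIP makes metrics-server reach each kubelet through the node's InternalIP.

command:
- /metrics-server
- --kubelet-insecure-tls
- --kubelet-preferred-address-types=InternalIP

2. Prometheus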

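The architecture diagram is as follows:

Prometheus obtains information about each node through node_exporter. node_exporter only aggregates node-level information; to collect other metrics, a dedicated exporter has to be deployed.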

Prometheus scrapes data from each Pod through its metrics URL.
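Scraping is plain HTTP. node_exporter, for example, serves its metrics as text at `/metrics` on port 9100, the port of the prometheus-node-exporter Service deployed below. The sketch parses that text exposition format into (name, labels, value) tuples. It assumes simple lines: no escaped quotes or commas inside label values, and no trailing timestamps. The sample text is hand-written, not captured from this cluster.

```python
import re
from typing import Dict, List, Tuple

# name{label="value",...} value   (the label block is optional)
LINE_RE = re.compile(r'^([a-zA-Z_:][a-zA-Z0-9_:]*)(?:\{(.*)\})?\s+(\S+)$')
LABEL_RE = re.compile(r'([a-zA-Z_][a-zA-Z0-9_]*)="([^"]*)"')


def parse_exposition(text: str) -> List[Tuple[str, Dict[str, str], float]]:
    """Parse simple Prometheus text-format samples, skipping comments."""
    samples = []
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and HELP/TYPE comments carry no sample
        m = LINE_RE.match(line)
        if m is None:
            continue  # outside the simplified grammar handled here
        name, labels, value = m.groups()
        samples.append((name, dict(LABEL_RE.findall(labels or "")), float(value)))
    return samples


text = """
# HELP node_load1 1m load average.
# TYPE node_load1 gauge
node_load1 0.52
node_cpu_seconds_total{cpu="0",mode="idle"} 12345.67
"""
for sample in parse_exposition(text):
    print(sample)
```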

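Prometheus provides a RESTful PromQL interface through which users can enter query expressions. However, the Kubernetes API server cannot query these values, because by default the data formats differ. The data must first be processed and converted by kube-state-metrics, then read by k8s-prometheus-adapter and aggregated into the API, before the Kubernetes cluster's API server can recognize it.

So a node_exporter has to be deployed on every node. Prometheus then pulls information from each node's node_exporter, and all kinds of data can be queried with PromQL. kube-state-metrics converts the format of this data, and k8s-prometheus-adapter exposes the converted data as the Custom Metrics API and aggregates it into the API server for users.

The schematic diagram is as follows:

Deploy Prometheus as follows:

Reference: https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/prometheus

1) Define the namespace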

[root@k8s-master-dev prometheus]# cd k8s-prom/
[root@k8s-master-dev k8s-prom]#
[root@k8s-master-dev k8s-prom]# ls
k8s-prometheus-adapter  namespace.yaml  podinfo  README.md
kube-state-metrics      node_exporter   prometheus
[root@k8s-master-dev k8s-prom]# cat namespace.yaml
---
apiVersion: v1
kind: Namespace
metadata:
  name: prom
[root@k8s-master-dev k8s-prom]# kubectl apply -f namespace.yaml

namespace/prom created

2) Deploy node_exporter

[root@k8s-master-dev k8s-prom]# cd node_exporter/
[root@k8s-master-dev node_exporter]# ls
node-exporter-ds.yaml  node-exporter-svc.yaml
[root@k8s-master-dev node_exporter]# vim node-exporter-ds.yaml
[root@k8s-master-dev node_exporter]# kubectl apply -f ./
daemonset.apps/prometheus-node-exporter created
service/prometheus-node-exporter created
[root@k8s-master-dev node_exporter]# kubectl get pods -n prom
NAME                             READY   STATUS    RESTARTS   AGE
prometheus-node-exporter-7729r   1/1     Running   0          17s
prometheus-node-exporter-hhc7f   1/1     Running   0          17s
prometheus-node-exporter-jxjcq   1/1     Running   0          17s
prometheus-node-exporter-pswbb   1/1     Running   0          17s

[root@k8s-master-dev node_exporter]# cd ..

3) Deploy Prometheus

[root@k8s-master-dev k8s-prom]# cd prometheus/
[root@k8s-master-dev prometheus]# ls
prometheus-cfg.yaml  prometheus-deploy.yaml  prometheus-rbac.yaml  prometheus-svc.yaml
[root@k8s-master-dev prometheus]# kubectl apply -f ./
configmap/prometheus-config created
deployment.apps/prometheus-server created
clusterrole.rbac.authorization.k8s.io/prometheus created
serviceaccount/prometheus created
clusterrolebinding.rbac.authorization.k8s.io/prometheus created
service/prometheus created
[root@k8s-master-dev prometheus]# kubectl get all -n prom
NAME                                     READY   STATUS    RESTARTS   AGE
pod/prometheus-node-exporter-7729r       1/1     Running   0          1m
pod/prometheus-node-exporter-hhc7f       1/1     Running   0          1m
pod/prometheus-node-exporter-jxjcq       1/1     Running   0          1m
pod/prometheus-node-exporter-pswbb       1/1     Running   0          1m
pod/prometheus-server-65f5d59585-5fj6n   1/1     Running   0          33s

NAME                               TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)          AGE
service/prometheus                 NodePort    10.98.96.66   <none>        9090:30090/TCP   34s
service/prometheus-node-exporter   ClusterIP   None          <none>        9100/TCP         1m

NAME                                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/prometheus-node-exporter   4         4         4       4            4           <none>          1m

NAME                                DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/prometheus-server   1         1         1            1           34s

NAME                                           DESIRED   CURRENT   READY   AGE
replicaset.apps/prometheus-server-65f5d59585   1         1         1       34s

[root@k8s-master-dev prometheus]#

Data can now be queried with PromQL.
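Besides the web UI, the same PromQL queries can be sent to Prometheus' HTTP API at `/api/v1/query`. The sketch builds such a URL and extracts the values from an instant-vector response. The address `192.0.2.10` is a placeholder for any node IP, combined with the NodePort 30090 shown above. `instant_query` only works against a reachable Prometheus.

```python
import json
import urllib.parse
import urllib.request

# Placeholder address: any node IP plus the NodePort 30090 of the prometheus Service.
PROM_URL = "http://192.0.2.10:30090"


def query_url(expr: str, base: str = PROM_URL) -> str:
    """Build an instant-query URL for Prometheus' HTTP API."""
    return f"{base}/api/v1/query?" + urllib.parse.urlencode({"query": expr})


def vector_values(body: dict) -> list:
    """Extract (labels, float value) pairs from an instant-vector response."""
    if body.get("status") != "success":
        raise RuntimeError(body.get("error", "query failed"))
    return [(r["metric"], float(r["value"][1])) for r in body["data"]["result"]]


def instant_query(expr: str) -> list:
    """Run the query over HTTP (requires a reachable Prometheus)."""
    with urllib.request.urlopen(query_url(expr), timeout=10) as resp:
        return vector_values(json.load(resp))


print(query_url("up"))
```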

4) Deploy kube-state-metrics

[root@k8s-master-dev prometheus]# cd ..
[root@k8s-master-dev k8s-prom]# cd kube-state-metrics/
[root@k8s-master-dev kube-state-metrics]# ls
kube-state-metrics-deploy.yaml  kube-state-metrics-rbac.yaml  kube-state-metrics-svc.yaml
[root@k8s-master-dev kube-state-metrics]# kubectl apply -f ./
deployment.apps/kube-state-metrics created
serviceaccount/kube-state-metrics created
clusterrole.rbac.authorization.k8s.io/kube-state-metrics created
clusterrolebinding.rbac.authorization.k8s.io/kube-state-metrics created
service/kube-state-metrics created
[root@k8s-master-dev kube-state-metrics]#
[root@k8s-master-dev kube-state-metrics]# kubectl get all -n prom
NAME                                      READY   STATUS    RESTARTS   AGE
pod/kube-state-metrics-58dffdf67d-j4jdv   0/1     Running   0          34s
pod/prometheus-node-exporter-7729r        1/1     Running   0          3m
pod/prometheus-node-exporter-hhc7f        1/1     Running   0          3m
pod/prometheus-node-exporter-jxjcq        1/1     Running   0          3m
pod/prometheus-node-exporter-pswbb        1/1     Running   0          3m
pod/prometheus-server-65f5d59585-5fj6n    1/1     Running   0          2m

NAME                               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
service/kube-state-metrics         ClusterIP   10.108.165.171   <none>        8080/TCP         35s
service/prometheus                 NodePort    10.98.96.66      <none>        9090:30090/TCP   2m
service/prometheus-node-exporter   ClusterIP   None             <none>        9100/TCP         3m

NAME                                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/prometheus-node-exporter   4         4         4       4            4           <none>          3m

NAME                                 DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/kube-state-metrics   1         1         1            0           35s
deployment.apps/prometheus-server    1         1         1            1           2m

NAME                                            DESIRED   CURRENT   READY   AGE
replicaset.apps/kube-state-metrics-58dffdf67d   1         1         0       35s
replicaset.apps/prometheus-server-65f5d59585    1         1         1       2m

[root@k8s-master-dev kube-state-metrics]# cd ..

5) Deploy prometheus-adapter

Reference: https://github.com/DirectXMan12/k8s-prometheus-adapter/tree/master/deploy

[root@k8s-master-dev k8s-prom]# cd k8s-prometheus-adapter/
[root@k8s-master-dev k8s-prometheus-adapter]# ls
custom-metrics-apiserver-auth-delegator-cluster-role-binding.yaml   custom-metrics-apiserver-service.yaml
custom-metrics-apiserver-auth-reader-role-binding.yaml              custom-metrics-apiservice.yaml
custom-metrics-apiserver-deployment.yaml                            custom-metrics-cluster-role.yaml
custom-metrics-apiserver-deployment.yaml.bak                        custom-metrics-config-map.yaml
custom-metrics-apiserver-resource-reader-cluster-role-binding.yaml  custom-metrics-resource-reader-cluster-role.yaml
custom-metrics-apiserver-service-account.yaml                       hpa-custom-metrics-cluster-role-binding.yaml
[root@k8s-master-dev k8s-prometheus-adapter]# grep secretName custom-metrics-apiserver-deployment.yaml
secretName: cm-adapter-serving-certs

[root@k8s-master-dev k8s-prometheus-adapter]# cd /etc/kubernetes/pki/
[root@k8s-master-dev pki]# (umask 077; openssl genrsa -out serving.key 2048)
Generating RSA private key, 2048 bit long modulus
.....................+++
..........+++
e is 65537 (0x10001)
[root@k8s-master-dev pki]#
[root@k8s-master-dev pki]# openssl req -new -key serving.key -out serving.csr -subj "/CN=serving"
[root@k8s-master-dev pki]# openssl x509 -req -in serving.csr -CA ca.crt -CAkey ca.key -CAcreateserial -out serving.crt -days 3650
Signature ok
subject=/CN=serving
Getting CA Private Key
[root@k8s-master-dev pki]# kubectl create secret generic cm-adapter-serving-certs --from-file=serving.crt=./serving.crt --from-file=serving.key=./serving.key -n prom
secret/cm-adapter-serving-certs created
[root@k8s-master-dev pki]# kubectl get secret -n prom
NAME                             TYPE                                  DATA   AGE
cm-adapter-serving-certs         Opaque                                2      9s
default-token-w4f44              kubernetes.io/service-account-token   3      8m
kube-state-metrics-token-dfcmf   kubernetes.io/service-account-token   3      4m
prometheus-token-4lb78           kubernetes.io/service-account-token   3      6m
[root@k8s-master-dev pki]#
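kubectl create secret generic ... --from-file stores each file under its own key in the Secret's `data` field, base64-encoded. That is why kubectl get secret reports cm-adapter-serving-certs as Opaque with DATA 2. The sketch below builds an equivalent manifest by hand to show that structure. The byte strings are placeholders, not the real certificate and key.

```python
import base64
import json


def secret_from_files(name: str, namespace: str, files: dict) -> dict:
    """Build an Opaque Secret manifest; each file's bytes are base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": name, "namespace": namespace},
        "data": {key: base64.b64encode(content).decode("ascii")
                 for key, content in files.items()},
    }


# Placeholder bytes stand in for the real serving.crt and serving.key files.
manifest = secret_from_files(
    "cm-adapter-serving-certs", "prom",
    {"serving.crt": b"<contents of serving.crt>",
     "serving.key": b"<contents of serving.key>"},
)
print(json.dumps(manifest, indent=2))
```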

[root@k8s-master-dev pki]# cd -
/root/manifests/prometheus/k8s-prom/k8s-prometheus-adapter
[root@k8s-master-dev k8s-prometheus-adapter]# ls custom-metrics-config-map.yaml
custom-metrics-config-map.yaml
[root@k8s-master-dev k8s-prometheus-adapter]# cat custom-metrics-config-map.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: adapter-config
  namespace: prom
data:
  config.yaml: |
    rules:
    - seriesQuery: '{__name__=~"^container_.*",container_name!="POD",namespace!="",pod_name!=""}'
      seriesFilters: []
      resources:
        overrides:
          namespace:
            resource: namespace
          pod_name:
            resource: pod
      name:
        matches: ^container_(.*)_seconds_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>,container_name!="POD"}[5m]))
        by (<<.GroupBy>>)
    - seriesQuery: '{__name__=~"^container_.*",container_name!="POD",namespace!="",pod_name!=""}'
      seriesFilters:
      - isNot: ^container_.*_seconds_total$
      resources:
        overrides:
          namespace:
            resource: namespace
          pod_name:
            resource: pod
      name:
        matches: ^container_(.*)_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>,container_name!="POD"}[5m]))
        by (<<.GroupBy>>)
    - seriesQuery: '{__name__=~"^container_.*",container_name!="POD",namespace!="",pod_name!=""}'
      seriesFilters:
      - isNot: ^container_.*_total$
      resources:
        overrides:
          namespace:
            resource: namespace
          pod_name:
            resource: pod
      name:
        matches: ^container_(.*)$
        as: ""
      metricsQuery: sum(<<.Series>>{<<.LabelMatchers>>,container_name!="POD"}) by (<<.GroupBy>>)
    - seriesQuery: '{namespace!="",__name__!~"^container_.*"}'
      seriesFilters:
      - isNot: .*_total$
      resources:
        template: <<.Resource>>
      name:
        matches: ""
        as: ""
      metricsQuery: sum(<<.Series>>{<<.LabelMatchers>>}) by (<<.GroupBy>>)
    - seriesQuery: '{namespace!="",__name__!~"^container_.*"}'
      seriesFilters:
      - isNot: .*_seconds_total
      resources:
        template: <<.Resource>>
      name:
        matches: ^(.*)_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>}[5m])) by (<<.GroupBy>>)
    - seriesQuery: '{namespace!="",__name__!~"^container_.*"}'
      seriesFilters: []
      resources:
        template: <<.Resource>>
      name:
        matches: ^(.*)_seconds_total$
        as: ""
      metricsQuery: sum(rate(<<.Series>>{<<.LabelMatchers>>}[5m])) by (<<.GroupBy>>)
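The first three rules turn cAdvisor's `container_*` series into custom metric names. The sketch mimics only their naming step. The `isNot` filters make the three rules disjoint, so checking them in order is enough here. With `as: ""` and a single capture group, the exposed name is the captured text; prometheus-adapter documents an empty `as` as defaulting to `$1` in that case. The sketch does not evaluate `seriesQuery`, `resources`, or `metricsQuery`. Because the counter rules wrap the series in `rate(...[5m])`, `container_cpu_usage_seconds_total`, for instance, is served as a per-second rate named `cpu_usage`.

```python
import re
from typing import Optional

# The three container_* rules from adapter-config, in order:
# (name.matches pattern, seriesFilters isNot pattern or None)
CONTAINER_RULES = [
    (r"^container_(.*)_seconds_total$", None),
    (r"^container_(.*)_total$", r"^container_.*_seconds_total$"),
    (r"^container_(.*)$", r"^container_.*_total$"),
]


def custom_metric_name(series: str) -> Optional[str]:
    """Return the custom metric name a container_* series is exposed as."""
    for matches, is_not in CONTAINER_RULES:
        if is_not is not None and re.search(is_not, series):
            continue  # isNot filter: this rule ignores the series
        m = re.search(matches, series)
        if m:
            return m.group(1)  # as: "" with one capture group acts like $1
    return None  # not covered by these three rules


for s in ("container_cpu_usage_seconds_total",
          "container_network_receive_bytes_total",
          "container_memory_usage_bytes"):
    print(s, "->", custom_metric_name(s))
```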

[root@k8s-master-dev k8s-prometheus-adapter]# grep namespace custom-metrics-apiserver-deployment.yaml
namespace: prom
[root@k8s-master-dev k8s-prometheus-adapter]# kubectl apply -f ./
clusterrolebinding.rbac.authorization.k8s.io/custom-metrics:system:auth-delegator created
rolebinding.rbac.authorization.k8s.io/custom-metrics-auth-reader created
deployment.apps/custom-metrics-apiserver created
clusterrolebinding.rbac.authorization.k8s.io/custom-metrics-resource-reader created
serviceaccount/custom-metrics-apiserver created
service/custom-metrics-apiserver created
apiservice.apiregistration.k8s.io/v1beta1.custom.metrics.k8s.io created
clusterrole.rbac.authorization.k8s.io/custom-metrics-server-resources created
configmap/adapter-config created
clusterrole.rbac.authorization.k8s.io/custom-metrics-resource-reader created
clusterrolebinding.rbac.authorization.k8s.io/hpa-controller-custom-metrics created
[root@k8s-master-dev k8s-prometheus-adapter]# kubectl get cm -n prom
NAME                DATA   AGE
adapter-config      1      21s
prometheus-config   1      21m
[root@k8s-master-dev k8s-prometheus-adapter]# kubectl get all -n prom
NAME                                           READY   STATUS    RESTARTS   AGE
pod/custom-metrics-apiserver-65f545496-2hfvb   1/1     Running   0          40s
pod/kube-state-metrics-58dffdf67d-j4jdv        0/1     Running   0          20m
pod/prometheus-node-exporter-7729r             1/1     Running   0          23m
pod/prometheus-node-exporter-hhc7f             1/1     Running   0          23m
pod/prometheus-node-exporter-jxjcq             1/1     Running   0          23m
pod/prometheus-node-exporter-pswbb             1/1     Running   0          23m
pod/prometheus-server-65f5d59585-5fj6n         1/1     Running   0          22m

NAME                               TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
service/custom-metrics-apiserver   ClusterIP   10.100.7.28      <none>        443/TCP          41s
service/kube-state-metrics         ClusterIP   10.108.165.171   <none>        8080/TCP         20m
service/prometheus                 NodePort    10.98.96.66      <none>        9090:30090/TCP   22m
service/prometheus-node-exporter   ClusterIP   None             <none>        9100/TCP         23m

NAME                                      DESIRED   CURRENT   READY   UP-TO-DATE   AVAILABLE   NODE SELECTOR   AGE
daemonset.apps/prometheus-node-exporter   4         4         4       4            4           <none>          23m

NAME                                       DESIRED   CURRENT   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/custom-metrics-apiserver   1         1         1            1           42s
deployment.apps/kube-state-metrics         1         1         1            0           20m
deployment.apps/prometheus-server          1         1         1            1           22m

NAME                                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/custom-metrics-apiserver-65f545496   1         1         1       42s
replicaset.apps/kube-state-metrics-58dffdf67d        1         1         0       20m
replicaset.apps/prometheus-server-65f5d59585         1         1         1       22m
[root@k8s-master-dev k8s-prometheus-adapter]#
[root@k8s-master-dev k8s-prometheus-adapter]# kubectl api-versions | grep custom
custom.metrics.k8s.io/v1beta1
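With the adapter registered, custom metrics are read from paths such as `/apis/custom.metrics.k8s.io/v1beta1/namespaces/<namespace>/pods/*/<metric>`, for example via kubectl get --raw. The sketch builds that path and reads a MetricValueList-shaped response. The metric name `network_receive_bytes` assumes the second adapter rule above has renamed `container_network_receive_bytes_total`. The values in `sample` are made up for illustration.

```python
CM_PREFIX = "/apis/custom.metrics.k8s.io/v1beta1"


def pods_metric_path(namespace: str, metric: str, pod: str = "*") -> str:
    """Path for a Pod-scoped custom metric; '*' selects all Pods."""
    return f"{CM_PREFIX}/namespaces/{namespace}/pods/{pod}/{metric}"


def parse_quantity(q: str) -> float:
    """Tiny subset of the quantity format: plain numbers and the 'm' suffix."""
    return float(q[:-1]) / 1000 if q.endswith("m") else float(q)


def values_by_pod(metric_value_list: dict) -> dict:
    """Map Pod name -> value from a MetricValueList response."""
    return {item["describedObject"]["name"]: parse_quantity(item["value"])
            for item in metric_value_list["items"]}


# Hand-written sample shaped like a MetricValueList (made-up values).
sample = {
    "kind": "MetricValueList",
    "items": [
        {"describedObject": {"kind": "Pod", "namespace": "default", "name": "mongo-0"},
         "metricName": "network_receive_bytes", "value": "1500m"},
        {"describedObject": {"kind": "Pod", "namespace": "default", "name": "mongo-1"},
         "metricName": "network_receive_bytes", "value": "2"},
    ],
}
print(pods_metric_path("default", "network_receive_bytes"))
print(values_by_pod(sample))
```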

[root@k8s-master-dev k8s-prometheus-adapter]#

3. Grafana

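Grafana is a visualization platform with attractive charts and layouts and a full-featured metrics dashboard and graph editor. It supports Graphite, Zabbix, InfluxDB, Prometheus, OpenTSDB, Elasticsearch, and others as data sources. It is far more powerful and flexible than Prometheus' built-in graphing and has a rich set of plugins. (We have already used PromQL to query some data and displayed it in the Prometheus dashboard, but Prometheus' charting is noticeably weak, so a third-party tool such as Grafana is usually used to display the data.)

Deploy Grafana

Reference: https://github.com/kubernetes/heapster/tree/master/deploy/kube-config/influxdb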

[root@k8s-master-dev prometheus]# ls
grafana  k8s-prom
[root@k8s-master-dev prometheus]# cd grafana/
[root@k8s-master-dev grafana]# head -11 grafana.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: monitoring-grafana
  namespace: prom
spec:
  replicas: 1
  selector:
    matchLabels:
      task: monitoring
      k8s-app: grafana
[root@k8s-master-dev grafana]# tail -2 grafana.yaml
    k8s-app: grafana
  type: NodePort
[root@k8s-master-dev grafana]# kubectl apply -f grafana.yaml
deployment.apps/monitoring-grafana created
service/monitoring-grafana created
[root@k8s-master-dev grafana]# kubectl get pods -n prom
NAME                                       READY   STATUS    RESTARTS   AGE
custom-metrics-apiserver-65f545496-2hfvb   1/1     Running   0          13m
kube-state-metrics-58dffdf67d-j4jdv        1/1     Running   0          32m
monitoring-grafana-ffb4d59bd-w9lg9         0/1     Running   0          8s
prometheus-node-exporter-7729r             1/1     Running   0          35m
prometheus-node-exporter-hhc7f             1/1     Running   0          35m
prometheus-node-exporter-jxjcq             1/1     Running   0          35m
prometheus-node-exporter-pswbb             1/1     Running   0          35m
prometheus-server-65f5d59585-5fj6n         1/1     Running   0          34m
[root@k8s-master-dev grafana]# kubectl get svc -n prom
NAME                       TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)          AGE
custom-metrics-apiserver   ClusterIP   10.100.7.28      <none>        443/TCP          13m
kube-state-metrics         ClusterIP   10.108.165.171   <none>        8080/TCP         32m
monitoring-grafana         NodePort    10.100.131.108   <none>        80:42690/TCP     22s
prometheus                 NodePort    10.98.96.66      <none>        9090:30090/TCP   34m
prometheus-node-exporter   ClusterIP   None             <none>        9100/TCP         35m

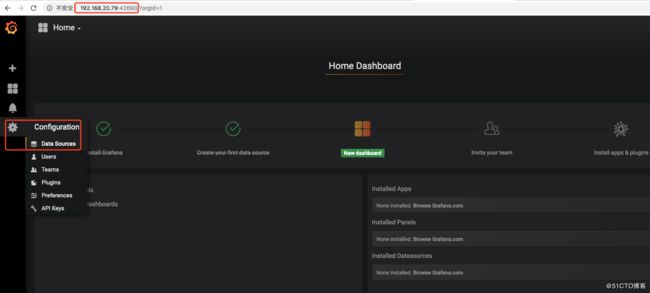

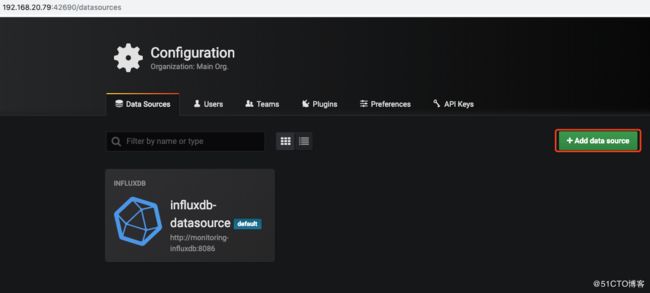

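To use Grafana, log in with the default username and password (both admin), and first add a data source. (If the Grafana web UI can be used without entering a username and password, GF_AUTH_ANONYMOUS_ENABLED is set to true in grafana.yaml, so anonymous users are logged in with the admin role. Set it to false and run kubectl apply -f grafana.yaml again to fix this.)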

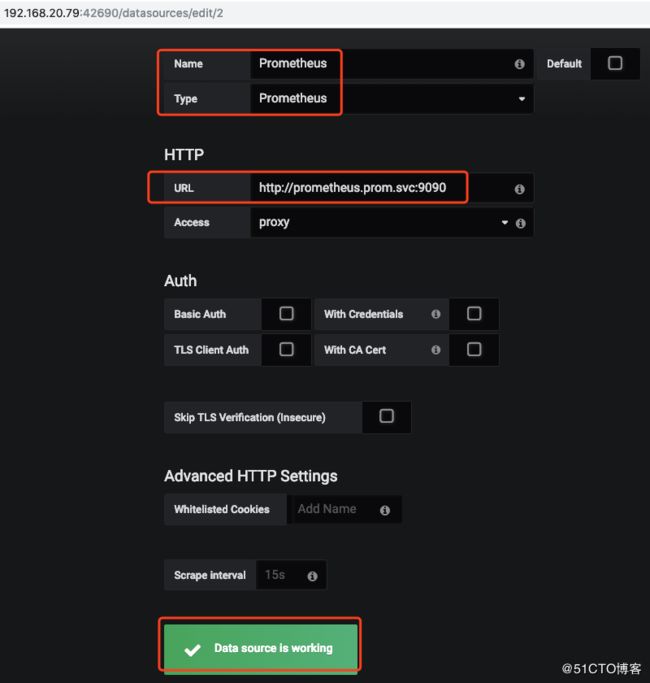

Specify the URL (the PromQL endpoint) from which the Prometheus data source fetches its data:

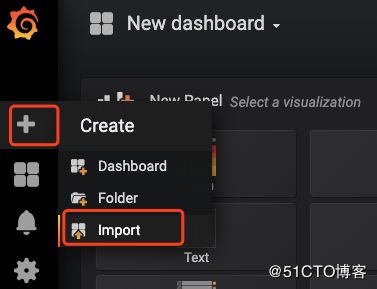
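Adding the data source can also be scripted through Grafana's HTTP API (`POST /api/datasources`). This sketch only builds the request. It rests on three assumptions:

- the default admin/admin credentials;
- a placeholder node IP with the NodePort 42690 from the Service listing above;
- the in-cluster URL `http://prometheus.prom.svc:9090` for the prometheus Service in the prom namespace.

Adjust all three to your environment.

```python
import base64
import json
import urllib.request

# Placeholder Grafana address: any node IP plus the monitoring-grafana NodePort.
GRAFANA_URL = "http://192.0.2.10:42690"


def datasource_request(user: str = "admin",
                       password: str = "admin") -> urllib.request.Request:
    """Build (but do not send) a request registering Prometheus as a data source."""
    body = {
        "name": "prometheus",
        "type": "prometheus",
        # Assumed in-cluster DNS name of the "prometheus" Service in namespace "prom".
        "url": "http://prometheus.prom.svc:9090",
        "access": "proxy",  # Grafana's backend proxies the queries
        "isDefault": True,
    }
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        f"{GRAFANA_URL}/api/datasources",
        data=json.dumps(body).encode(),
        headers={"Content-Type": "application/json",
                 "Authorization": f"Basic {token}"},
        method="POST",
    )


req = datasource_request()
# urllib.request.urlopen(req) would send it to a reachable Grafana.
print(req.get_method(), req.full_url)
```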

Then import a dashboard (dashboards can be downloaded from https://grafana.com/dashboards):

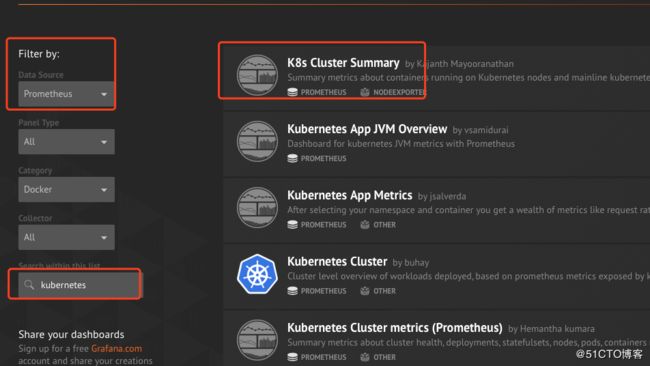

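(Addendum) I downloaded Kubernetes-related dashboards from the Grafana website, as shown below:

I then imported the downloaded k8s cluster summary dashboard into my own Grafana, with the result shown below:

If you are not satisfied with a dashboard, you can create or modify one yourself.

(Addendum) Using an Ingress to proxy Prometheus and Grafana: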

[root@k8s-master1-dev ~]# cat ingress-rule-monitor-svc.yaml
apiVersion: extensions/v1beta1
kind: Ingress
metadata:
  name: ingress-rule-monitor
  namespace: prom
  annotations:
    kubernetes.io/ingress.class: "nginx"
    nginx.ingress.kubernetes.io/whitelist-source-range: "10.0.0.0/8, 192.168.0.0/16"
spec:
  rules:
  - host: grafana-devel.domain.cn
    http:
      paths:
      - path:
        backend:
          serviceName: monitoring-grafana
          servicePort: 80
  - host: prometheus-devel.domain.cn
    http:
      paths:
      - path:
        backend:
          serviceName: prometheus
          servicePort: 9090

# kubectl apply -f ingress-rule-monitor-svc.yaml
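The whitelist-source-range annotation makes ingress-nginx reject requests (with 403 Forbidden) whose client address lies outside the listed CIDRs. The check itself amounts to CIDR membership, sketched below with Python's ipaddress module. This illustrates the rule and is not ingress-nginx's implementation. The address ingress-nginx sees is whatever reaches the controller, which can differ from the real client behind NAT or a load balancer.

```python
import ipaddress

# Same ranges as the whitelist-source-range annotation above.
WHITELIST = "10.0.0.0/8, 192.168.0.0/16"


def is_allowed(client_ip: str, whitelist: str = WHITELIST) -> bool:
    """True if client_ip falls inside any of the comma-separated CIDRs."""
    addr = ipaddress.ip_address(client_ip)
    return any(addr in ipaddress.ip_network(cidr.strip())
               for cidr in whitelist.split(","))


for ip in ("10.1.2.3", "192.168.100.7", "172.16.0.1"):
    print(ip, "allowed" if is_allowed(ip) else "denied (403)")
```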