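Calico supports IPIP and BGP routing (a layer-3 technique). It uses virtual routers in place of virtual switches, and each virtual router propagates reachability information (routes) to the rest of the data center over BGP.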

Reference: https://docs.projectcalico.org/v3.2/getting-started/kubernetes/installation/flannel

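Canal is the project that combines Calico with flannel. Its policies can be used to control isolation between Pods. Canal supports Kubernetes 1.10+ and requires the CNI plugin to be enabled: the kubelet must be configured to use CNI networking by passing --network-plugin=cni (this is the default with a kubeadm installation). kube-proxy must run in iptables mode; ipvs mode requires Kubernetes 1.9 or later. Also make sure that etcd is working in the environment and that Kubernetes is set with --cluster-cidr=10.244.0.0/16 and --allocate-node-cidrs=true (these are the defaults with a kubeadm installation; see /etc/kubernetes/manifests/kube-controller-manager.yaml).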
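For orientation, the two controller-manager flags would appear in the kubeadm static Pod manifest roughly as in the abridged sketch below. This is not a complete manifest: a real file carries an image, many more flags, and volume mounts, and the exact layout varies by version. kubeadm typically writes these flags when the cluster was initialized with --pod-network-cidr.

```yaml
# Abridged sketch of /etc/kubernetes/manifests/kube-controller-manager.yaml.
# Only the two flags discussed above are shown; all other fields are omitted.
apiVersion: v1
kind: Pod
metadata:
  name: kube-controller-manager
  namespace: kube-system
spec:
  containers:
  - name: kube-controller-manager
    command:
    - kube-controller-manager
    - --allocate-node-cidrs=true      # let the controller assign a Pod CIDR to each node
    - --cluster-cidr=10.244.0.0/16    # Pod network range expected by flannel/canal
```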

Deploying canal

1) Deploy RBAC

[root@docker79 manifests]# mkdir calico

[root@docker79 manifests]# cd calico/

[root@docker79 calico]# wget https://docs.projectcalico.org/v3.2/getting-started/kubernetes/installation/hosted/canal/rbac.yaml

--2018-09-11 17:51:19-- https://docs.projectcalico.org/v3.2/getting-started/kubernetes/installation/hosted/canal/rbac.yaml

Resolving docs.projectcalico.org (docs.projectcalico.org)... 35.189.132.21

Connecting to docs.projectcalico.org (docs.projectcalico.org)|35.189.132.21|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 2469 (2.4K) [application/x-yaml]

Saving to: ‘rbac.yaml’

100%[======================================>] 2,469 --.-K/s in 0s

2018-09-11 17:51:20 (83.1 MB/s) - ‘rbac.yaml’ saved [2469/2469]

[root@docker79 calico]# kubectl apply -f rbac.yaml

clusterrole.rbac.authorization.k8s.io/calico created

clusterrole.rbac.authorization.k8s.io/flannel configured

clusterrolebinding.rbac.authorization.k8s.io/canal-flannel created

clusterrolebinding.rbac.authorization.k8s.io/canal-calico created

[root@docker79 calico]#

2) Deploy canal

[root@docker79 calico]# wget https://docs.projectcalico.org/v3.2/getting-started/kubernetes/installation/hosted/canal/canal.yaml

--2018-09-11 17:51:57-- https://docs.projectcalico.org/v3.2/getting-started/kubernetes/installation/hosted/canal/canal.yaml

Resolving docs.projectcalico.org (docs.projectcalico.org)... 35.189.132.21

Connecting to docs.projectcalico.org (docs.projectcalico.org)|35.189.132.21|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 11237 (11K) [application/x-yaml]

Saving to: ‘canal.yaml’

100%[======================================>] 11,237 --.-K/s in 0.001s

2018-09-11 17:51:58 (18.2 MB/s) - ‘canal.yaml’ saved [11237/11237]

[root@docker79 calico]# kubectl apply -f canal.yaml

configmap/canal-config created

daemonset.extensions/canal created

serviceaccount/canal created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

[root@docker79 calico]#

[root@docker79 calico]# kubectl get pods -n kube-system

NAME READY STATUS RESTARTS AGE

canal-cbspc 3/3 Running 0 1m

canal-cq7kq 0/3 ContainerCreating 0 1m

canal-kzd46 3/3 Running 0 1m

coredns-78fcdf6894-pn4s2 1/1 Running 0 14d

coredns-78fcdf6894-sq7vg 1/1 Running 0 14d

etcd-docker79 1/1 Running 0 14d

kube-apiserver-docker79 1/1 Running 0 14d

kube-controller-manager-docker79 1/1 Running 0 14d

kube-flannel-ds-amd64-29j4r 1/1 Running 0 36m

kube-flannel-ds-amd64-f2fsk 1/1 Running 0 36m

kube-flannel-ds-amd64-g9wlf 1/1 Running 0 36m

kube-proxy-c78x5 1/1 Running 2 14d

kube-proxy-hhxrh 1/1 Running 0 14d

kube-proxy-k8hgk 1/1 Running 0 14d

kube-scheduler-docker79 1/1 Running 0 14d

kubernetes-dashboard-767dc7d4d-hpxbb 1/1 Running 0 7h

[root@docker79 calico]#

canal policies

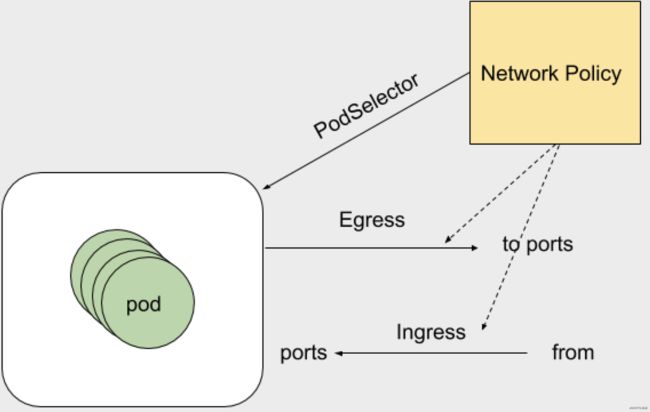

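- Egress is outbound traffic: the pod acts as a client accessing external services, and the pod address is the source address. A policy can specify destination addresses or destination ports.

- Ingress is inbound traffic: the pod and its service act as the server and accept external access, and the pod address is the destination address. A policy can specify source addresses and the pod's own ports.

- podSelector determines which pods the rules apply to. It can select a single pod or a group of pods, and a policy can cover a single direction only. An empty podSelector selects all Pods in the namespace.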

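Running kubectl explain networkpolicy.spec shows the egress, ingress, podSelector, and policyTypes fields. In detail:

- egress: outbound traffic rules, defined with ports and to. ports specifies the destination port and protocol. to specifies the destination, which can be an IP block, pods, or namespaces.

- ingress: inbound traffic rules, defined with ports and from. ports specifies the destination port (the pod's own port) and protocol. from specifies which sources are allowed in, which can be an IP block, pods, or namespaces.

- podSelector: defines the scope of the NetworkPolicy, that is, which pods the rules apply to. podSelector: {} (left empty) applies the policy to all pods in the current namespace. Once a pod is selected, any traffic that is not explicitly whitelisted is denied by default (deny all).

- policyTypes: specifies which rule types (Ingress, Egress) are enforced. If it is omitted, the defaults apply: Ingress is always set, and Egress is set only when the policy contains egress rules.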
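To show how the four fields fit together, here is a minimal sketch of a single NetworkPolicy that uses all of them. The policy name and every label (app: web, team: dev, role: client) are hypothetical and chosen only for illustration; the CIDR is the Pod network used in this article.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: example-policy            # hypothetical name
spec:
  podSelector:                    # which pods this policy applies to
    matchLabels:
      app: web                    # hypothetical pod label
  policyTypes:                    # which directions are enforced
  - Ingress
  - Egress
  ingress:
  - from:                         # a source matching any one entry is allowed
    - ipBlock:
        cidr: 10.244.0.0/16
    - namespaceSelector:
        matchLabels:
          team: dev               # hypothetical namespace label
    - podSelector:
        matchLabels:
          role: client            # hypothetical pod label
    ports:                        # ports on the selected pods
    - protocol: TCP
      port: 80
  egress:
  - to:                           # allowed destinations
    - ipBlock:
        cidr: 10.244.0.0/16
    ports:                        # destination ports
    - protocol: TCP
      port: 443
```

Within one rule, from (or to) and ports are combined: traffic must match one of the listed peers and one of the listed ports.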

Network policy examples

1) Create two namespaces, dev and prod:

[root@docker79 calico]# kubectl create namespace dev

namespace/dev created

[root@docker79 calico]# kubectl create namespace prod

namespace/prod created

2) Define the ingress-def policy and apply it to the dev namespace:

[root@docker79 calico]# vim ingress-def.yaml

[root@docker79 calico]# cat ingress-def.yaml

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-all-ingress
    spec:
      podSelector: {}
      policyTypes:
      - Ingress

[root@docker79 calico]#

[root@docker79 calico]# kubectl apply -f ingress-def.yaml -n dev

networkpolicy.networking.k8s.io/deny-all-ingress created

[root@docker79 calico]#

[root@docker79 calico]# kubectl get netpol -n dev

NAME POD-SELECTOR AGE

deny-all-ingress <none> 3m

[root@docker79 calico]#

3) Run a pod in the dev namespace and test access to it:

[root@docker79 calico]# vim pod-1.yaml

[root@docker79 calico]# cat pod-1.yaml

    apiVersion: v1
    kind: Pod
    metadata:
      name: pod1
    spec:
      containers:
      - name: myapp
        image: ikubernetes/myapp:v1

[root@docker79 calico]#

[root@docker79 calico]# kubectl apply -f pod-1.yaml -n dev

pod/pod1 created

[root@docker79 calico]# kubectl get pods -n dev -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

pod1 1/1 Running 0 4s 10.244.2.3 docker78 <none>

[root@docker79 calico]#

[root@docker79 calico]# curl 10.244.2.3     (no response)

^C

Because policyTypes in ingress-def.yaml lists only Ingress and no ingress rules are defined, all inbound connections are denied by default.

4) Run a pod in the prod namespace and test access to it:

[root@docker79 calico]# kubectl apply -f pod-1.yaml -n prod

pod/pod1 created

[root@docker79 calico]# kubectl get pods -n prod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE

pod1 1/1 Running 0 21s 10.244.2.4 docker78 <none>

[root@docker79 calico]# curl 10.244.2.4

Hello MyApp | Version: v1 | Pod Name

[root@docker79 calico]#

The prod namespace has no ingress policy applied, so the pod running there can be reached normally.

5) Modify the ingress policy in the dev namespace and test access again:

[root@docker79 calico]# vim ingress-def.yaml

[root@docker79 calico]# cat ingress-def.yaml

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-all-ingress
    spec:
      podSelector: {}
      ingress:
      - {}
      policyTypes:
      - Ingress

[root@docker79 calico]# kubectl apply -f ingress-def.yaml -n dev

networkpolicy.networking.k8s.io/deny-all-ingress configured

[root@docker79 calico]# curl 10.244.2.3

Hello MyApp | Version: v1 | Pod Name

[root@docker79 calico]#

Here policyTypes in ingress-def.yaml lists Ingress, and the ingress field contains a single empty rule, which matches all traffic. As a result, the pod in the dev namespace can be reached.
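Between the deny-all and allow-all policies shown above there is a common middle ground: allowing ingress only from pods in the same namespace. The following is a minimal sketch of that pattern; the policy name is hypothetical. An empty podSelector under from selects all pods in the policy's own namespace, so traffic from other namespaces would not match the rule.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-same-namespace    # hypothetical name
spec:
  podSelector: {}               # apply to every pod in the namespace
  ingress:
  - from:
    - podSelector: {}           # any pod in the same namespace as the policy
  policyTypes:
  - Ingress
```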

6) Restore the deny behavior of the ingress policy, then add a new ingress policy with specific rules to the dev namespace and test it:

[root@docker79 calico]# cat allow-netpol-demo.yaml

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-myapp-ingress
    spec:
      podSelector:
        matchLabels:
          app: myapp
      ingress:
      - from:
        - ipBlock:
            cidr: 10.244.0.0/16
            except:
            - 10.244.1.2/32
        ports:
        - protocol: TCP
          port: 80
        - protocol: TCP
          port: 443

[root@docker79 calico]# kubectl apply -f allow-netpol-demo.yaml -n dev

networkpolicy.networking.k8s.io/allow-myapp-ingress created

[root@docker79 calico]# curl 10.244.2.3

Hello MyApp | Version: v1 | Pod Name
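Note that allow-myapp-ingress selects only pods labeled app: myapp, while pod-1.yaml as listed earlier defines no labels. For the policy to select the pod, the pod would need to carry that label. A sketch of pod-1.yaml with the label added follows; the labels block is an assumption inferred from the policy's selector and is not part of the original file.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod1
  labels:
    app: myapp                  # assumed label, matching the policy's podSelector
spec:
  containers:
  - name: myapp
    image: ikubernetes/myapp:v1
```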

[root@docker79 calico]#

7) Add an egress policy in the prod namespace:

[root@docker79 calico]# vim egress-def.yaml

[root@docker79 calico]# cat egress-def.yaml

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-all-egress
    spec:
      podSelector: {}
      policyTypes:
      - Egress

[root@docker79 calico]# kubectl apply -f egress-def.yaml -n prod

networkpolicy.networking.k8s.io/deny-all-egress created

[root@docker79 calico]# kubectl get pods -n prod

NAME READY STATUS RESTARTS AGE

pod1 1/1 Running 0 23m

[root@docker79 calico]# kubectl exec pod1 -it -n prod -- /bin/sh

/ # ping 10.244.0.2

PING 10.244.0.2 (10.244.0.2): 56 data bytes

^C

--- 10.244.0.2 ping statistics ---

4 packets transmitted, 0 packets received, 100% packet loss

/ #

command terminated with exit code 1

[root@docker79 calico]#

Because no egress rules are defined, the default is deny all, so pods in the prod namespace cannot send outbound traffic.
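The ingress and egress defaults demonstrated so far can also be combined. As a minimal sketch, a single policy that lists both types and defines no rules would deny all traffic in both directions for every pod in the namespace it is applied to; the policy name is hypothetical.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: deny-all                # hypothetical name
spec:
  podSelector: {}               # every pod in the namespace
  policyTypes:                  # both directions enforced, no rules defined
  - Ingress
  - Egress
```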

8) Modify the egress policy in the prod namespace and add egress rules:

[root@docker79 calico]# vim egress-def.yaml

[root@docker79 calico]# cat egress-def.yaml

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: deny-all-egress
    spec:
      podSelector: {}
      egress:
      - {}
      policyTypes:
      - Egress

[root@docker79 calico]# kubectl apply -f egress-def.yaml -n prod

networkpolicy.networking.k8s.io/deny-all-egress configured

[root@docker79 calico]# kubectl exec pod1 -it -n prod -- /bin/sh

/ # ping 10.244.0.2

PING 10.244.0.2 (10.244.0.2): 56 data bytes

64 bytes from 10.244.0.2: seq=0 ttl=62 time=0.649 ms

64 bytes from 10.244.0.2: seq=1 ttl=62 time=0.507 ms

^C

--- 10.244.0.2 ping statistics ---

2 packets transmitted, 2 packets received, 0% packet loss

round-trip min/avg/max = 0.507/0.578/0.649 ms

/ #

[root@docker79 calico]#

Because the egress rule is empty, it matches everything, so all outbound traffic is allowed.
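An empty egress rule is the widest possible opening. In practice the rule is usually narrowed with to and ports. The sketch below, with a hypothetical policy name, would allow pods in the namespace to send only TCP traffic on port 80 to the Pod network used in this article. Traffic that matches no rule, such as the ICMP ping used above or DNS lookups on port 53, would be expected to be dropped.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-egress-http       # hypothetical name
spec:
  podSelector: {}               # every pod in the namespace
  egress:
  - to:
    - ipBlock:
        cidr: 10.244.0.0/16     # Pod network range from this article
    ports:
    - protocol: TCP
      port: 80
  policyTypes:
  - Egress
```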