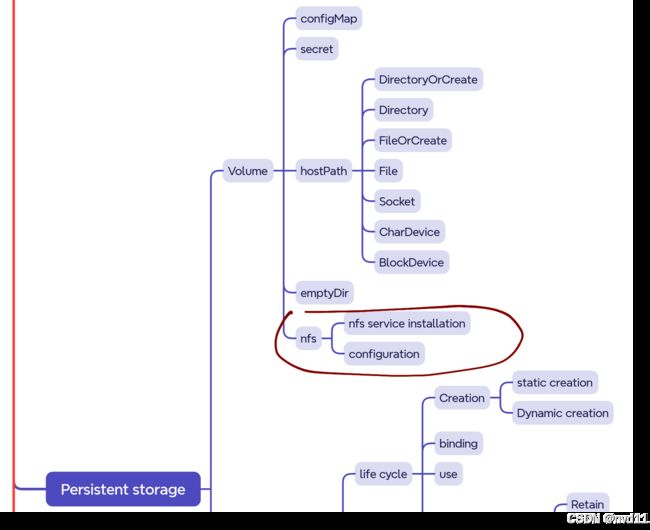

K8S - Volume - Introduction to and Use of NFS Volumes

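Earlier articles introduced two simple volume types in K8S, hostPath and emptyDir:

k8s - Introduction to Volumes and Using HostPath

K8S - EmptyDir - Replacing ELK: Building a Logging Sidecar with fluentd

Both types share the same limitation: they depend on the disk space of the k8s node.

If a service's Pods need a large volume, we have to consider network storage instead, and an NAS-type volume such as NFS is one of the most common and simplest choices.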

Install the NFS service on a VM

Pick a VM

First, we need a VM to act as the NAS (NFS) provider.

```
gateman@MoreFine-S500:~$ gcloud compute ssh tf-vpc0-subnet0-main-server
WARNING: This command is using service account impersonation. All API calls will be executed as [[email protected]].
WARNING: This command is using service account impersonation. All API calls will be executed as [[email protected]].
No zone specified. Using zone [europe-west2-c] for instance: [tf-vpc0-subnet0-main-server].
Linux tf-vpc0-subnet0-main-server 5.10.0-32-cloud-amd64 #1 SMP Debian 5.10.223-1 (2024-08-10) x86_64

The programs included with the Debian GNU/Linux system are free software;
the exact distribution terms for each program are described in the
individual files in /usr/share/doc/*/copyright.

Debian GNU/Linux comes with ABSOLUTELY NO WARRANTY, to the extent
permitted by applicable law.
Last login: Sat Sep 7 15:27:35 2024 from 112.94.250.239
gateman@tf-vpc0-subnet0-main-server:~$ df -h
Filesystem      Size  Used Avail Use% Mounted on
udev            7.9G     0  7.9G   0% /dev
tmpfs           1.6G  1.2M  1.6G   1% /run
/dev/sda1        59G   23G   34G  41% /
tmpfs           7.9G     0  7.9G   0% /dev/shm
tmpfs           5.0M     0  5.0M   0% /run/lock
/dev/sda15      124M   11M  114M   9% /boot/efi
tmpfs           1.6G     0  1.6G   0% /run/user/1000
gateman@tf-vpc0-subnet0-main-server:~$
```

About 34 GB is still free on /, which should be enough.

Create the shared directory

```
gateman@tf-vpc0-subnet0-main-server:~$ sudo su -
root@tf-vpc0-subnet0-main-server:~# mkdir -p /nfs/k8s-share
root@tf-vpc0-subnet0-main-server:~#
```

Install the NFS server

```
root@tf-vpc0-subnet0-main-server:~# apt-get install nfs-kernel-server
```

Edit the configuration (/etc/exports)

```
root@tf-vpc0-subnet0-main-server:~# cat /etc/exports
# /etc/exports: the access control list for filesystems which may be exported
#               to NFS clients.  See exports(5).
#
# Example for NFSv2 and NFSv3:
# /srv/homes       hostname1(rw,sync,no_subtree_check) hostname2(ro,sync,no_subtree_check)
#
# Example for NFSv4:
# /srv/nfs4        gss/krb5i(rw,sync,fsid=0,crossmnt,no_subtree_check)
# /srv/nfs4/homes  gss/krb5i(rw,sync,no_subtree_check)
#
/nfs/k8s-share 192.168.0.0/16(rw,sync,no_subtree_check,no_root_squash)
```

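Only VMs in the same private network (192.168.0.0/16) are allowed to access the share.

Note that there must be no spaces inside rw,sync,no_subtree_check,no_root_squash; otherwise you get a "bad option list" error.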
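Because a single stray space in the option list breaks the export, a quick check before restarting the service can save a round trip. This is a minimal bash sketch; `check_exports_line` is a hypothetical helper, and it only looks for whitespace inside the parentheses, not for every mistake exports(5) can reject.

```shell
#!/usr/bin/env bash
# Hypothetical helper: flag an /etc/exports entry whose option list,
# e.g. "(rw, sync)", contains whitespace, which leads to a bad option list error.
check_exports_line() {
  local line="$1"
  # Match a "(" ... ")" group that has a space or tab somewhere inside it
  if printf '%s\n' "$line" | grep -Eq '\([^)]*[[:space:]][^)]*\)'; then
    echo "BAD: whitespace inside option list: $line"
    return 1
  fi
  echo "OK: $line"
}
```

On the server you could run it over each non-comment line of /etc/exports, then apply the file with `exportfs -ra` and list the active exports and their options with `exportfs -v`.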

Restart the NFS service

```
root@tf-vpc0-subnet0-main-server:~# vi /etc/exports
root@tf-vpc0-subnet0-main-server:~# systemctl restart nfs-server
root@tf-vpc0-subnet0-main-server:~# systemctl status nfs-server
● nfs-server.service - NFS server and services
     Loaded: loaded (/lib/systemd/system/nfs-server.service; enabled; vendor preset: enabled)
     Active: active (exited) since Fri 2024-09-13 19:29:44 UTC; 2s ago
    Process: 158390 ExecStartPre=/usr/sbin/exportfs -r (code=exited, status=0/SUCCESS)
    Process: 158391 ExecStart=/usr/sbin/rpc.nfsd $RPCNFSDARGS (code=exited, status=0/SUCCESS)
   Main PID: 158391 (code=exited, status=0/SUCCESS)
        CPU: 17ms

Sep 13 19:29:43 tf-vpc0-subnet0-main-server systemd[1]: Starting NFS server and services...
Sep 13 19:29:44 tf-vpc0-subnet0-main-server systemd[1]: Finished NFS server and services.
```

Mount the NFS share from another VM as a test

Log in to the other VM. The NFS client (nfs-common) has to be installed first; otherwise the mount fails.

```
root@tf-vpc0-subnet0-mysql0:~# apt install nfs-common
```

Then use showmount to see which shared directories the server exports:

```
root@tf-vpc0-subnet0-mysql0:~# showmount -e 192.168.0.35
```

Create a mount point:

```
root@tf-vpc0-subnet0-mysql0:~# mkdir -p /mnt/nfs-test
```

Mount the server's NFS share on the local mount point:

```
root@tf-vpc0-subnet0-mysql0:~# mount -t nfs 192.168.0.35:/nfs/k8s-share /mnt/nfs-test
```
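A successful mount does not yet prove the client can write, which is what the Pods will need later. A minimal bash sketch of a write/read-back probe; `verify_rw_mount` is a hypothetical helper that creates a probe file in the given directory, reads it back, and deletes it.

```shell
#!/usr/bin/env bash
# Hypothetical helper: check that a (mounted) directory is readable and writable
# by writing a probe file, reading it back and removing it again.
verify_rw_mount() {
  local dir="$1"
  local probe="$dir/.nfs-probe-$$"   # $$ = PID, keeps the name unique per shell
  local expected="probe-$$"
  printf '%s\n' "$expected" > "$probe" || { echo "FAIL: cannot write to $dir"; return 1; }
  local got
  got="$(cat "$probe")"
  rm -f "$probe"
  if [ "$got" = "$expected" ]; then
    echo "OK: $dir is readable and writable"
  else
    echo "FAIL: read back '$got'"
    return 1
  fi
}
```

On the client this would be `verify_rw_mount /mnt/nfs-test`; `findmnt /mnt/nfs-test` shows which server export backs the directory, and `umount /mnt/nfs-test` removes the test mount afterwards.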

Test in K8S, case 1

Write the Deployment YAML

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  labels: # labels of this deployment
    app: cloud-order # custom defined
    author: nvd11
  name: deployment-cloud-order # name of this deployment
  namespace: default
spec:
  replicas: 3 # desired replica count. Note that the replica Pods of a Deployment are typically spread across multiple nodes.
  revisionHistoryLimit: 10 # number of old ReplicaSets to retain to allow rollback
  selector: # labels of the Pods that the Deployment manages; mandatory, without it we get this error:
    # error: error validating data: ValidationError(Deployment.spec.selector): missing required field "matchLabels" in io.k8s.apimachinery.pkg.apis.meta.v1.LabelSelector ..
    matchLabels:
      app: cloud-order
  strategy: # update strategy
    type: RollingUpdate # RollingUpdate or Recreate
    rollingUpdate:
      maxSurge: 25% # maximum number of Pods that can be created above the desired number during the update
      maxUnavailable: 25% # maximum number of Pods that can be unavailable during the update
  template: # Pod template
    metadata:
      labels:
        app: cloud-order # must match the selector, otherwise we get the error: Invalid value: map[string]string{"app":"bq-api-xxx"}: `selector` does not match template `labels`
    spec: # specification of the Pod
      containers:
        - image: europe-west2-docker.pkg.dev/jason-hsbc/my-docker-repo/cloud-order:1.1.0 # image of the container
          imagePullPolicy: Always
          name: container-cloud-order
          command: ["bash"]
          args:
            - "-c"
            - |
              java -jar -Dserver.port=8080 app.jar --spring.profiles.active=$APP_ENVIRONMENT --logging.file.name=/app/logs/app.log
          env: # set environment variables
            - name: APP_ENVIRONMENT # name of the environment variable
              value: prod # value of the environment variable
          volumeMounts: # write logs here
            - name: volume-nfs-log
              mountPath: /app/logs/
              readOnly: false # writable mount
          ports:
            - containerPort: 8080
              name: cloud-order
      volumes:
        - name: volume-nfs-log
          nfs:
            server: 192.168.0.35
            path: /nfs/k8s-share # nfs share path
            readOnly: false
      restartPolicy: Always # restart policy for all containers within the Pod
      terminationGracePeriodSeconds: 10 # time in seconds given to the Pod to terminate gracefully
```

Here I define an NFS volume:

```yaml
volumes:
  - name: volume-nfs-log
    nfs:
      server: 192.168.0.35
      path: /nfs/k8s-share # nfs share path
      readOnly: false
```

and mount it in the container at /app/logs/.
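Before rolling out the whole Deployment, a throwaway Pod that only touches the NFS volume can separate storage problems from application problems. This is a minimal sketch: `nfs_smoke_pod_manifest` is a hypothetical bash function that prints a Pod manifest using the same `nfs` volume fields as above; the Pod name `nfs-smoke-test` and the `busybox:1.36` image are assumptions, not part of the original setup.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print a one-shot Pod manifest that appends a line to a
# file on the NFS share and then prints the file back.
# Args: $1 = NFS server, $2 = exported path
nfs_smoke_pod_manifest() {
  local server="$1" path="$2"
  cat <<EOF
apiVersion: v1
kind: Pod
metadata:
  name: nfs-smoke-test
spec:
  restartPolicy: Never
  containers:
    - name: writer
      image: busybox:1.36
      command: ["sh", "-c", "date >> /mnt/nfs/smoke-test.txt && cat /mnt/nfs/smoke-test.txt"]
      volumeMounts:
        - name: nfs
          mountPath: /mnt/nfs
  volumes:
    - name: nfs
      nfs:
        server: ${server}
        path: ${path}
EOF
}
```

Usage would be `nfs_smoke_pod_manifest 192.168.0.35 /nfs/k8s-share | kubectl apply -f -`, then `kubectl logs nfs-smoke-test` and finally `kubectl delete pod nfs-smoke-test`. If the Pod stays in ContainerCreating, `kubectl describe pod nfs-smoke-test` shows the FailedMount events.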

Start the Deployment

At this point the Pods fail to start:

```
gateman@MoreFine-S500:nfs$ kubectl apply -f deployment-cloud-order-fluentd-nfs-case1.yaml
deployment.apps/deployment-cloud-order created
gateman@MoreFine-S500:nfs$ kubectl get pods
NAME READY STATUS RESTARTS AGE
deployment-bq-api-service-6f6ffc7866-58drw 1/1 Running 5 (5d1h ago) 10d
deployment-bq-api-service-6f6ffc7866-8djx9 1/1 Running 6 (5d1h ago) 30d
deployment-bq-api-service-6f6ffc7866-mxwcq 1/1 Running 16 (5d1h ago) 74d
deployment-bq-api-service-6f6ffc7866-x8pl6 1/1 Running 3 (5d1h ago) 10d
deployment-cloud-order-5f45f44fbc-8vzx5 0/1 Terminating 0 5m19s
deployment-cloud-order-5f45f44fbc-b2p5r 0/1 Terminating 0 5m19s
deployment-cloud-order-5f45f44fbc-xnnr7 0/1 Terminating 0 5m19s
deployment-cloud-order-5f46d97659-8nsln 0/1 ContainerCreating 0 8s
deployment-cloud-order-5f46d97659-9pvsb 0/1 ContainerCreating 0 8s
deployment-cloud-order-5f46d97659-jsmsm 0/1 ContainerCreating 0 8s
deployment-fluentd-test-56bd589c6-dptxl 1/1 Running 1 (5d1h ago) 5d1h
dns-test 0/1 Completed 0 28d
```

```
gateman@MoreFine-S500:nfs$ kubectl describe po deployment-cloud-order-5f46d97659-jsmsm | tail -n 20
    Path:      /nfs/k8s-share
    ReadOnly:  false
  kube-api-access-d54rh:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason       Age               From               Message
  ----     ------       ----              ----               -------
  Normal   Scheduled    30s               default-scheduler  Successfully assigned default/deployment-cloud-order-5f46d97659-jsmsm to k8s-node0
  Warning  FailedMount  14s (x6 over 29s) kubelet            MountVolume.SetUp failed for volume "volume-nfs-log" : mount failed: exit status 32
Mounting command: mount
Mounting arguments: -t nfs 192.168.0.35:/nfs/k8s-share /var/lib/kubelet/pods/00850c02-3766-4481-93bc-089f19c1547b/volumes/kubernetes.io~nfs/volume-nfs-log
Output: mount: /var/lib/kubelet/pods/00850c02-3766-4481-93bc-089f19c1547b/volumes/kubernetes.io~nfs/volume-nfs-log: bad option; for several filesystems (e.g. nfs, cifs) you might need a /sbin/mount.<type> helper program.
```


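Does this mean nfs-common has to be installed on the k8s nodes? The message points that way: the mount is performed by the kubelet on the node itself (note the /var/lib/kubelet/... target path), and mount needs the /sbin/mount.nfs helper, which the nfs-common package provides.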
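One way to check a node before scheduling NFS-backed Pods onto it is to look for the helper the error message mentions. A minimal bash sketch; `has_nfs_mount_helper` is a hypothetical name, and the optional root-directory argument exists only so the check can be exercised against a test directory.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report whether the NFS mount helper that mount(8)
# needs for "-t nfs" (shipped in nfs-common on Debian/Ubuntu) is present.
# Arg: $1 = optional root directory to inspect (defaults to "/")
has_nfs_mount_helper() {
  local root="${1:-/}"
  local helper="${root%/}/sbin/mount.nfs"   # strip a trailing "/" before joining
  if [ -x "$helper" ]; then
    echo "present: $helper"
  else
    echo "missing: $helper (install nfs-common)"
    return 1
  fi
}
```

Run on a node without arguments, it checks /sbin/mount.nfs directly.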

Test in K8S, case 2: install nfs-common only on one node, k8s-node0

```
gateman@MoreFine-S500:nfs$ kubectl get po -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
deployment-bq-api-service-6f6ffc7866-58drw 1/1 Running 5 (5d1h ago) 10d 10.244.2.235 k8s-node0 <none> <none>
deployment-bq-api-service-6f6ffc7866-8djx9 1/1 Running 6 (5d1h ago) 30d 10.244.1.149 k8s-node1 <none> <none>
deployment-bq-api-service-6f6ffc7866-mxwcq 1/1 Running 16 (5d1h ago) 74d 10.244.2.234 k8s-node0 <none> <none>
deployment-bq-api-service-6f6ffc7866-x8pl6 1/1 Running 3 (5d1h ago) 10d 10.244.1.150 k8s-node1 <none> <none>
deployment-cloud-order-5f46d97659-drpqk 0/1 ContainerCreating 0 43s <none> k8s-node3 <none> <none>
deployment-cloud-order-5f46d97659-g65zz 0/1 ContainerCreating 0 43s <none> k8s-node1 <none> <none>
deployment-cloud-order-5f46d97659-jmld7 1/1 Running 0 43s 10.244.2.237 k8s-node0 <none> <none>
deployment-fluentd-test-56bd589c6-dptxl 1/1 Running 1 (5d1h ago) 5d2h 10.244.3.169 k8s-node3 <none> <none>
dns-test 0/1 Completed 0 28d 10.244.1.92 k8s-node1 <none> <none>
```

```
gateman@MoreFine-S500:nfs$ kubectl describe po deployment-cloud-order-5f46d97659-g65zz | tail -n 20
    ReadOnly:  false
  kube-api-access-nq7d9:
    Type:                    Projected (a volume that contains injected data from multiple sources)
    TokenExpirationSeconds:  3607
    ConfigMapName:           kube-root-ca.crt
    ConfigMapOptional:       <nil>
    DownwardAPI:             true
QoS Class:                   BestEffort
Node-Selectors:              <none>
Tolerations:                 node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                             node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
Events:
  Type     Reason       Age                 From               Message
  ----     ------       ----                ----               -------
  Normal   Scheduled    2m12s               default-scheduler  Successfully assigned default/deployment-cloud-order-5f46d97659-g65zz to k8s-node1
  Warning  FailedMount  9s                  kubelet            Unable to attach or mount volumes: unmounted volumes=[volume-nfs-log], unattached volumes=[volume-nfs-log kube-api-access-nq7d9]: timed out waiting for the condition
  Warning  FailedMount  4s (x9 over 2m11s)  kubelet            MountVolume.SetUp failed for volume "volume-nfs-log" : mount failed: exit status 32
Mounting command: mount
Mounting arguments: -t nfs 192.168.0.35:/nfs/k8s-share /var/lib/kubelet/pods/020d15ee-8ee5-4846-9d66-afd658a9073b/volumes/kubernetes.io~nfs/volume-nfs-log
Output: mount: /var/lib/kubelet/pods/020d15ee-8ee5-4846-9d66-afd658a9073b/volumes/kubernetes.io~nfs/volume-nfs-log: bad option; for several filesystems (e.g. nfs, cifs) you might need a /sbin/mount.<type> helper program.
```

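As expected, only the Pod on k8s-node0 started successfully; the Pods on k8s-node1 and k8s-node3 are stuck in ContainerCreating with the same mount error.

Test in K8S, case 3: install nfs-common on the other nodes as well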

```
gateman@MoreFine-S500:nfs$ kubectl get po -o wide
NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES
deployment-bq-api-service-6f6ffc7866-58drw 1/1 Running 5 (5d1h ago) 10d 10.244.2.235 k8s-node0 <none> <none>
deployment-bq-api-service-6f6ffc7866-8djx9 1/1 Running 6 (5d1h ago) 30d 10.244.1.149 k8s-node1 <none> <none>
deployment-bq-api-service-6f6ffc7866-mxwcq 1/1 Running 16 (5d1h ago) 74d 10.244.2.234 k8s-node0 <none> <none>
deployment-bq-api-service-6f6ffc7866-x8pl6 1/1 Running 3 (5d1h ago) 10d 10.244.1.150 k8s-node1 <none> <none>
deployment-cloud-order-5f46d97659-2d7nk 1/1 Running 0 8s 10.244.3.170 k8s-node3 <none> <none>
deployment-cloud-order-5f46d97659-j7dj8 1/1 Running 0 8s 10.244.1.154 k8s-node1 <none> <none>
deployment-cloud-order-5f46d97659-w7xlf 1/1 Running 0 8s 10.244.2.238 k8s-node0 <none> <none>
```

This time all the Pods are Running.
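Installing nfs-common by hand on each node works, but it is easy to forget for nodes added later. A minimal bash sketch that loops over the nodes with `gcloud compute ssh ... --command`; it assumes each k8s node is a GCE VM whose instance name matches the node name (k8s-node0, k8s-node1, k8s-node3 here) and that the login user may run sudo there. `install_nfs_common_on_nodes` and the `DRY_RUN` switch are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install the NFS client on each named node over SSH.
# ASSUMPTION: node names are also GCE instance names reachable via gcloud.
# Set DRY_RUN=1 to print the commands instead of executing them.
install_nfs_common_on_nodes() {
  local run="${DRY_RUN:+echo}"   # "echo" when DRY_RUN is non-empty, else empty
  local node
  for node in "$@"; do
    # -y answers apt's confirmation prompt so the loop can run unattended
    $run gcloud compute ssh "$node" \
      --command="sudo apt-get update && sudo apt-get install -y nfs-common" || return 1
  done
}
```

Preview with `DRY_RUN=1 install_nfs_common_on_nodes k8s-node0 k8s-node1 k8s-node3`, then run it without `DRY_RUN`. Putting the same package install into the node image or startup script would also cover nodes created later.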

Check the log file on the NFS server

```
root@tf-vpc0-subnet0-main-server:/nfs/k8s-share# pwd
/nfs/k8s-share
root@tf-vpc0-subnet0-main-server:/nfs/k8s-share# ls -l
total 52
-rw-r--r-- 1 root root 49107 Sep 13 20:56 app.log
-rw-r--r-- 1 root root     5 Sep 13 20:01 test1.txt
```

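app.log has been created, and all three Pods presumably append their logs to this same file. Keep in mind that appends from several NFS clients to one file are not atomic (the open(2) man page warns that O_APPEND may corrupt files on NFS when more than one process appends at once), so lines from different Pods can get mixed up; writing one file per Pod avoids this.

Check the mount point on the node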

```
gateman@k8s-node3:~$ ls /app
ls: cannot access '/app': No such file or directory
gateman@k8s-node3:~$
```

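There is no /app directory on the node, because /app/logs/ is a path inside the container, not on the host.

The NFS share is still mounted on the node, though: the kubelet mounts it under /var/lib/kubelet/pods/<pod-uid>/volumes/kubernetes.io~nfs/volume-nfs-log (the target path shown in the mount errors above), and the container runtime then bind-mounts that directory into the container at /app/logs/.

So nothing appears in the node's own directory tree outside the kubelet's working directory, which is what we expected!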
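To see where the share actually lives on a node, one can filter the node's mount table for the kubelet's NFS volume directories. A minimal bash sketch; `list_kubelet_nfs_mounts` is a hypothetical helper, and it relies on the `kubernetes.io~nfs` directory name seen in the mount errors above and on the /proc/mounts field order (source, mount point, filesystem type, options, ...).

```shell
#!/usr/bin/env bash
# Hypothetical helper: read mount-table lines in /proc/mounts format on stdin
# and print "<source> -> <mount point>" for NFS volumes mounted by the kubelet.
list_kubelet_nfs_mounts() {
  # $1 = source, $2 = mount point, $3 = filesystem type ("nfs" or "nfs4")
  awk '$3 ~ /^nfs4?$/ && $2 ~ /kubernetes\.io~nfs/ { print $1 " -> " $2 }'
}
```

On k8s-node3 this would be `list_kubelet_nfs_mounts < /proc/mounts`; `findmnt -t nfs,nfs4` gives a similar, unfiltered view.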