一、boston房价预测

1. 读取数据集

from sklearn.datasets import load_boston boston = load_boston() boston.keys() print(boston.DESCR) boston.data.shape import pandas as pd pd.DataFrame(boston.data)

2. 训练集与测试集划分

from sklearn.model_selection import train_test_split x_train,x_test,y_train,y_test = train_test_split(data.data,data.target,test_size=0.3) print(x_train.shape,y_train.shape)

3. 线性回归模型:建立13个变量与房价之间的预测模型,并检测模型好坏。

from sklearn.linear_model import LinearRegression lineR = LinearRegression() lineR.fit(boston.data,y) lineR.coef_ lineR.intercept_ import matplotlib.pyplot as plt x= boston.data[:,12].reshape(-1,1) y= boston.target plt.figure(figsize=(10,6)) plt.scatter(x,y) from sklearn.linear_model import LinearRegression lineR = LinearRegression() lineR.fit(x,y) y_pred = lineR.predict(x) plt.plot(x,y_pred) print(lineR.coef_,lineR.intercept_) plt.show()

4. 多项式回归模型:建立13个变量与房价之间的预测模型,并检测模型好坏。

from sklearn.preprocessing import PolynomialFeatures poly = PolynomialFeatures(degree=2) poly.fit_transform(x) lrp = LinearRegression() lineR.fit(x,y) y_pred = lineR.predict(x) plt.plot(x,y_pred) from sklearn.preprocessing import PolynomialFeatures poly = PolynomialFeatures(degree=2) x_poly = poly.fit_transform(x) lrp = LinearRegression() lrp.fit(x_poly,y) y_pred = lineR.predict(x) plt.plot(x,y_pred) x_poly from sklearn.preprocessing import PolynomialFeatures poly = PolynomialFeatures(degree=2) x_poly = poly.fit_transform(x) lrp = LinearRegression() lrp.fit(x_poly,y) y_poly_pred = lrp.predict(x_poly) plt.scatter(x,y) plt.scatter(x,y_pred) plt.scatter(x,y_poly_pred) plt.show() from sklearn.preprocessing import PolynomialFeatures poly = PolynomialFeatures(degree=2) x_poly = poly.fit_transform(x) lp = LinearRegression() lp.fit(x_poly,y) y_poly_pred = lp.predict(x_poly) plt.scatter(x,y) plt.plot(x,y_pred,'r') plt.scatter(x,y_poly_pred,c='b') plt.show()

5. 比较线性模型与非线性模型的性能,并说明原因。

如果模型是参数的线性函数,并且存在线性分类面,那么就是线性分类器,否则不是。线性分类器可解释性好,

计算复杂度较低,不足之处是模型拟合效果相对弱些。非线性分类器拟合能力较强,不足之处是数据量不足容易过拟合,计算复杂度高,可解释性不好。线性分类器有三大类:感知器准则函数,SVM,Fisher准则,而贝叶斯分类器不是线性分类器。

二、中文文本分类

1.各种获取文件,写文件

2.除去噪声,如:格式转换,去掉符号,整体规范化

3.遍历每个个文件夹下的每个文本文件。

4.使用jieba分词将中文文本切割。

import os data = [] target = [] fileList = os.listdir(path) path = r'C:\Users\Administrator\Desktop\147\财经' for f in fileList: file = os.path.join(path,f) print(file)

import jieba with open(r'G:\杜云梅\stops.txt', encoding='utf-8') as f: stopwords = f.read().split('\n')

5.去掉停用词。

def processing(tokens): tokens = "".join([char for char in tokens if char.isalpha()]) tokens = [token for token in jieba.cut(tokens,cut_all=True) if len(token) >=2] tokens = " ".join([token for token in tokens if token not in stopwords]) return tokens

6.对处理之后的文本开始用TF-IDF算法进行单词权值的计算

7.贝叶斯预测种类

8.模型评价

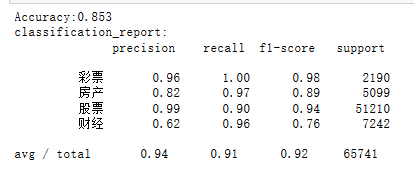

tokenList = [] targetList = [] for root,dirs,files in os.walk(path): for f in files: filePath = os.path.join(root,f) with open(filePath,encoding='utf-8') as f: content = f.read() target = filePath.split('\\')[-2] targetList.append(target) tokenList.append(processing(content)) from sklearn.feature_extraction.text import TfidfVectorizer from sklearn.model_selection import train_test_split from sklearn.naive_bayes import GaussianNB,MultinomialNB from sklearn.model_selection import cross_val_score from sklearn.metrics import classification_report x_train,x_test,y_train,y_test = train_test_split(tokenList,targetList,test_size=0.3,stratify=targetList) vectorizer = TfidfVectorizer() x_train = vectorizer.fit_transform(x_train) x_test = vectorizer.transform(x_test) from sklearn.naive_bayes import MultinomialNB mnb = MultinomialNB() module = mnb.fit(x_train,y_train) y_predict = module.predict(X_test) scores=cross_val_score(mnb,X_test,y_test,cv=5) print("Accuracy:%.3f"%scores.mean()) print("classification_report:\n",classification_report(y_predict,y_test))

9.新文本类别预测

targetList.append(target) print(targetList[0:10]) tokenList.append(processing(content)) tokenList[0:10]