Hadoop: Analyzing URLs in an Apache Access Log (URLLog)

This MapReduce job analyzes an Apache access log and computes:

1. How many times each URL is requested with GET

2. How many times each URL is requested with POST
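Before looking at the Hadoop code, the counting itself can be sketched in plain Java: each log line is mapped to a `METHOD /url` key with an implicit count of 1, identical keys are grouped (sorted, just as the MapReduce shuffle sorts keys), and the 1s are summed. This is a minimal sketch, not the author's code: `UrlCountSimulation`, `toKey` and `count` are hypothetical names, and `toKey` is a slightly stricter stand-in for the job's `handleLine` (it only matches `"GET ` or `"POST ` right after the opening quote of the request field, and accepts any `HTTP/` version).

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical in-memory sketch of the map -> shuffle -> reduce flow.
// No Hadoop classes are used.
final class UrlCountSimulation {

    // "Map" step: turn one log line into a "METHOD /url" key, or null to skip it.
    static String toKey(String line) {
        for (String method : new String[] {"GET", "POST"}) {
            int start = line.indexOf("\"" + method + " ");
            if (start < 0) {
                continue;
            }
            int end = line.indexOf(" HTTP/", start);
            if (end < 0) {
                return null; // request field without a protocol: skip the line
            }
            return line.substring(start + 1, end); // drop the leading quote
        }
        return null;
    }

    // "Shuffle" + "reduce": group identical keys in sorted order and sum a 1 per occurrence.
    static Map<String, Long> count(List<String> lines) {
        Map<String, Long> totals = new TreeMap<>();
        for (String line : lines) {
            String key = toKey(line);
            if (key != null) {
                totals.merge(key, 1L, Long::sum);
            }
        }
        return totals;
    }

    public static void main(String[] args) {
        List<String> sample = Arrays.asList(
            "127.0.0.1 - - [03/Jul/2015:23:36:38 +0800] \"GET /course/detail/3.htm HTTP/1.0\" 200 38435 0.038",
            "182.131.89.195 - - [03/Jul/2015:23:37:43 +0800] \"GET / HTTP/1.0\" 301 - 0.000",
            "127.0.0.1 - - [03/Jul/2015:23:38:27 +0800] \"POST /service/notes/addViewTimes_23.htm HTTP/1.0\" 200 2 0.003",
            "127.0.0.1 - - [03/Jul/2015:23:45:51 +0800] \"GET / HTTP/1.0\" 200 70044 0.060");
        // Prints one "key<TAB>count" line per key, like part-r-00000.
        count(sample).forEach((k, v) -> System.out.println(k + "\t" + v));
    }
}
```

Using a `TreeMap` mirrors the fact that reducer output appears sorted by key, which is why `GET /` is listed first in the job output.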

Input data file

```
[root@master IMFdatatest]# hadoop dfs -cat /library/URLLog.txt
DEPRECATED: Use of this script to execute hdfs command is deprecated.
Instead use the hdfs command for it.

16/02/16 07:23:53 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
127.0.0.1 - - [03/Jul/2015:23:36:38 +0800] "GET /course/detail/3.htm HTTP/1.0" 200 38435 0.038
182.131.89.195 - - [03/Jul/2015:23:37:43 +0800] "GET / HTTP/1.0" 301 - 0.000
127.0.0.1 - - [03/Jul/2015:23:38:27 +0800] "POST /service/notes/addViewTimes_23.htm HTTP/1.0" 200 2 0.003
127.0.0.1 - - [03/Jul/2015:23:39:03 +0800] "GET /html/notes/20140617/779.html HTTP/1.0" 200 69539 0.046
127.0.0.1 - - [03/Jul/2015:23:43:00 +0800] "GET /html/notes/20140318/24.html HTTP/1.0" 200 67171 0.049
127.0.0.1 - - [03/Jul/2015:23:43:59 +0800] "POST /service/notes/addViewTimes_779.htm HTTP/1.0" 200 1 0.003
127.0.0.1 - - [03/Jul/2015:23:45:51 +0800] "GET / HTTP/1.0" 200 70044 0.060
127.0.0.1 - - [03/Jul/2015:23:46:17 +0800] "GET /course/list/73.htm HTTP/1.0" 200 12125 0.010
127.0.0.1 - - [03/Jul/2015:23:46:58 +0800] "GET /html/notes/20140609/542.html HTTP/1.0" 200 94971 0.077
127.0.0.1 - - [03/Jul/2015:23:48:31 +0800] "POST /service/notes/addViewTimes_24.htm HTTP/1.0" 200 2 0.003
127.0.0.1 - - [03/Jul/2015:23:48:34 +0800] "POST /service/notes/addViewTimes_542.htm HTTP/1.0" 200 2 0.003
127.0.0.1 - - [03/Jul/2015:23:49:31 +0800] "GET /notes/index-top-3.htm HTTP/1.0" 200 53494 0.041
127.0.0.1 - - [03/Jul/2015:23:50:55 +0800] "GET /html/notes/20140609/544.html HTTP/1.0" 200 183694 0.076
127.0.0.1 - - [03/Jul/2015:23:53:32 +0800] "POST /service/notes/addViewTimes_544.htm HTTP/1.0" 200 2 0.004
127.0.0.1 - - [03/Jul/2015:23:54:53 +0800] "GET /html/notes/20140620/900.html HTTP/1.0" 200 151770 0.054
127.0.0.1 - - [03/Jul/2015:23:57:42 +0800] "GET /html/notes/20140620/872.html HTTP/1.0" 200 52373 0.034
127.0.0.1 - - [03/Jul/2015:23:58:17 +0800] "POST /service/notes/addViewTimes_900.htm HTTP/1.0" 200 2 0.003
127.0.0.1 - - [03/Jul/2015:23:58:51 +0800] "GET / HTTP/1.0" 200 70044 0.057
```

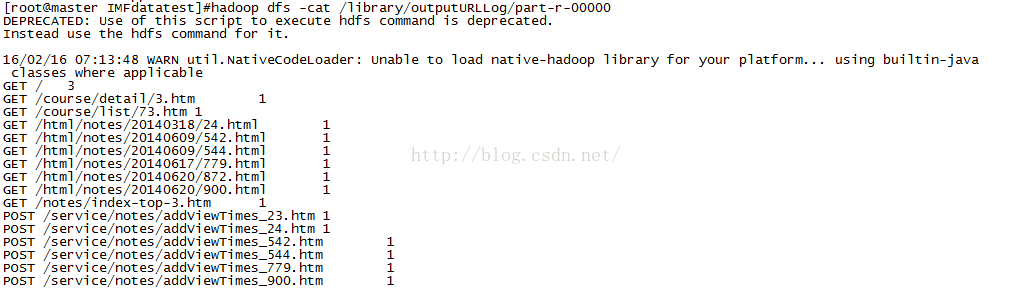

Output

```
[root@master IMFdatatest]# hadoop dfs -cat /library/outputURLLog/part-r-00000
DEPRECATED: Use of this script to execute hdfs command is deprecated.
Instead use the hdfs command for it.

16/02/16 07:13:48 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
GET /	3
GET /course/detail/3.htm	1
GET /course/list/73.htm	1
GET /html/notes/20140318/24.html	1
GET /html/notes/20140609/542.html	1
GET /html/notes/20140609/544.html	1
GET /html/notes/20140617/779.html	1
GET /html/notes/20140620/872.html	1
GET /html/notes/20140620/900.html	1
GET /notes/index-top-3.htm	1
POST /service/notes/addViewTimes_23.htm	1
POST /service/notes/addViewTimes_24.htm	1
POST /service/notes/addViewTimes_542.htm	1
POST /service/notes/addViewTimes_544.htm	1
POST /service/notes/addViewTimes_779.htm	1
POST /service/notes/addViewTimes_900.htm	1
```
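The output is keyed by method plus URL. If a single total per method is also wanted, it can be rolled up from `part-r-00000`. The sketch below assumes each line is the key, then whitespace, then the count (`TextOutputFormat` separates key and value with a tab by default); `MethodTotals` is a hypothetical helper, not part of the original project. Lines whose last token is not a number, such as the `DEPRECATED` and `WARN` console lines, are skipped.

```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical helper: sums the per-URL counts of the job output by HTTP method.
final class MethodTotals {

    static Map<String, Long> totalsByMethod(List<String> outputLines) {
        Map<String, Long> totals = new TreeMap<>();
        for (String raw : outputLines) {
            String line = raw.trim();
            int sep = lastWhitespace(line);
            if (sep < 0) {
                continue; // empty line or a single token: not "key count"
            }
            long count;
            try {
                count = Long.parseLong(line.substring(sep + 1));
            } catch (NumberFormatException e) {
                continue; // e.g. DEPRECATED / WARN console lines
            }
            // The key is "METHOD /url"; its first token is the method.
            String method = line.substring(0, sep).trim().split("\\s+", 2)[0];
            totals.merge(method, count, Long::sum);
        }
        return totals;
    }

    private static int lastWhitespace(String s) {
        for (int i = s.length() - 1; i >= 0; i--) {
            if (Character.isWhitespace(s.charAt(i))) {
                return i;
            }
        }
        return -1;
    }

    public static void main(String[] args) {
        List<String> output = Arrays.asList(
            "GET /\t3",
            "GET /course/detail/3.htm\t1",
            "GET /course/list/73.htm\t1",
            "GET /html/notes/20140318/24.html\t1",
            "GET /html/notes/20140609/542.html\t1",
            "GET /html/notes/20140609/544.html\t1",
            "GET /html/notes/20140617/779.html\t1",
            "GET /html/notes/20140620/872.html\t1",
            "GET /html/notes/20140620/900.html\t1",
            "GET /notes/index-top-3.htm\t1",
            "POST /service/notes/addViewTimes_23.htm\t1",
            "POST /service/notes/addViewTimes_24.htm\t1",
            "POST /service/notes/addViewTimes_542.htm\t1",
            "POST /service/notes/addViewTimes_544.htm\t1",
            "POST /service/notes/addViewTimes_779.htm\t1",
            "POST /service/notes/addViewTimes_900.htm\t1");
        System.out.println(totalsByMethod(output)); // {GET=12, POST=6}
    }
}
```

On the 16 output lines above it prints `{GET=12, POST=6}`, i.e. 18 requests, matching the 18 lines of the input file.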

Source code

```java
package com.dtspark.hadoop.hellomapreduce;

import java.io.IOException;

import org.apache.hadoop.conf.Configuration;
import org.apache.hadoop.fs.Path;
import org.apache.hadoop.io.LongWritable;
import org.apache.hadoop.io.Text;
import org.apache.hadoop.mapreduce.Job;
import org.apache.hadoop.mapreduce.Mapper;
import org.apache.hadoop.mapreduce.Reducer;
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;
import org.apache.hadoop.util.GenericOptionsParser;

public class URLLog {

    // Mapper: for each GET or POST log line, emit ("METHOD /url", 1).
    public static class DataMapper
            extends Mapper<LongWritable, Text, Text, LongWritable> {

        private final LongWritable resultValue = new LongWritable(1);
        private final Text text = new Text();

        @Override
        public void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Debug output; written to the task's stdout log for every record.
            System.out.println("Map Method Invoked!!!");
            String result = handleLine(value.toString());
            if (result != null && result.length() > 0) {
                text.set(result);
                context.write(text, resultValue);
            }
        }

        // Returns "GET /path" or "POST /path", or "" if the line has neither.
        private String handleLine(String line) {
            int start = line.indexOf("GET");
            if (start < 0) {
                start = line.indexOf("POST");
            }
            if (start < 0) {
                return "";
            }
            // Search for the protocol after the method. The original
            // substring(..., indexOf("HTTP/1.0")) threw an exception on lines
            // without "HTTP/1.0"; " HTTP/" also matches HTTP/1.1.
            int end = line.indexOf(" HTTP/", start);
            return end > start ? line.substring(start, end).trim() : "";
        }
    }

    // Reducer: sum the 1s emitted for each "METHOD /url" key.
    public static class DataReducer
            extends Reducer<Text, LongWritable, Text, LongWritable> {

        private final LongWritable totalresultValue = new LongWritable();

        @Override
        public void reduce(Text key, Iterable<LongWritable> values, Context context)
                throws IOException, InterruptedException {
            System.out.println("Reduce Method Invoked!!!");
            long total = 0; // long, to match LongWritable.get()
            for (LongWritable item : values) {
                total += item.get();
            }
            totalresultValue.set(total);
            context.write(key, totalresultValue);
        }
    }

    public static void main(String[] args) throws Exception {
        Configuration conf = new Configuration();
        String[] otherArgs = new GenericOptionsParser(conf, args).getRemainingArgs();
        if (otherArgs.length < 2) {
            System.err.println("Usage: URLLog <in> [<in>...] <out>");
            System.exit(2);
        }

        Job job = Job.getInstance(conf, "URLLog");
        job.setJarByClass(URLLog.class);
        job.setMapperClass(DataMapper.class);
        job.setReducerClass(DataReducer.class);
        // Used for both the map output and the final output: (Text, LongWritable).
        job.setOutputKeyClass(Text.class);
        job.setOutputValueClass(LongWritable.class);

        // Every argument except the last is an input path; the last is the output directory.
        for (int i = 0; i < otherArgs.length - 1; ++i) {
            FileInputFormat.addInputPath(job, new Path(otherArgs[i]));
        }
        FileOutputFormat.setOutputPath(job,
                new Path(otherArgs[otherArgs.length - 1]));

        System.exit(job.waitForCompletion(true) ? 0 : 1);
    }
}
```
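Because `DataReducer` only sums values and both its input and output types are `(Text, LongWritable)`, it could also be registered as a combiner with `job.setCombinerClass(DataReducer.class);`, which the `main` above does not do. The plain-Java sketch below (no Hadoop dependency; `CombinerSketch` and its methods are hypothetical names) illustrates why that is safe: pre-summing each map task's keys and then summing those partial counts gives the same totals as summing every 1 directly, since addition is associative and commutative.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Hypothetical sketch: a sum reducer reused as a combiner gives the same result.
final class CombinerSketch {

    // Combiner step: sum the implicit 1 emitted for each key by one map task.
    static Map<String, Long> combine(List<String> emittedKeys) {
        Map<String, Long> partial = new TreeMap<>();
        for (String key : emittedKeys) {
            partial.merge(key, 1L, Long::sum);
        }
        return partial;
    }

    // Reduce step: sum the partial counts arriving from every map task.
    static Map<String, Long> reduce(List<Map<String, Long>> partials) {
        Map<String, Long> totals = new TreeMap<>();
        for (Map<String, Long> partial : partials) {
            partial.forEach((k, v) -> totals.merge(k, v, Long::sum));
        }
        return totals;
    }

    public static void main(String[] args) {
        List<String> task1 = Arrays.asList("GET /", "GET /course/list/73.htm", "GET /");
        List<String> task2 = Arrays.asList("GET /", "POST /service/notes/addViewTimes_24.htm");

        Map<String, Long> withCombiner = reduce(Arrays.asList(combine(task1), combine(task2)));

        // Reference: count all emitted keys in one pass, with no combiner.
        List<String> all = new ArrayList<>(task1);
        all.addAll(task2);
        Map<String, Long> direct = combine(all);

        System.out.println(withCombiner);
        System.out.println(withCombiner.equals(direct)); // true
    }
}
```

With this little data a combiner changes nothing measurable; it only matters when map tasks emit many repeated keys, because it reduces the number of pairs shuffled to the reducers.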