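Pinpoint is a platform for analyzing large-scale distributed systems and provides a solution for handling large volumes of trace data.

Development of Pinpoint began in July 2012, and it was released as an open-source project in January 2015. It is an n-tier architecture tracing platform for large-scale distributed systems. Its features are as follows:

Distributed transaction tracing, to trace messages across distributed applications

Automatic detection of the application topology, which helps you understand the architecture of an application

Horizontal scalability to support large-scale server clusters

Code-level visibility to easily locate points of failure and bottlenecks

Bytecode instrumentation, which adds new functionality without modifying code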

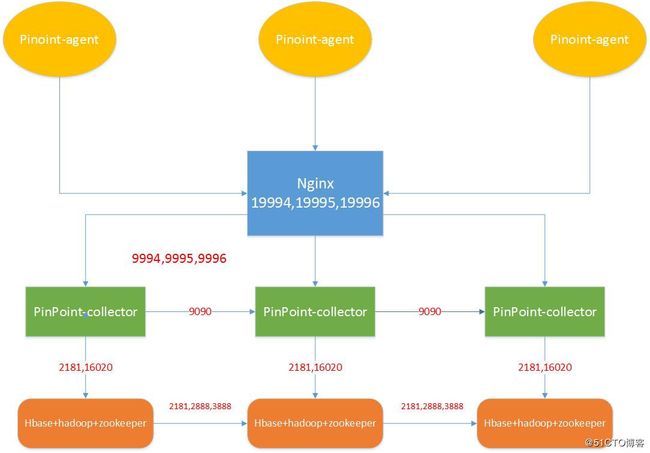

PinPoint cluster (the setup this article is based on)

I. Server planning and basic configuration

*PC = pinpoint-collector, PW = pinpoint-web, PA = pinpoint-agent

| IP | Hostname | Role |
| --- | --- | --- |
| 10.0.0.4 | Node4 | PC, PW |
| 10.0.0.5 | Node5 | PC, nginx |
| 10.0.0.6 | Node6 | PC, PA |

1. Passwordless SSH authentication

ssh-keygen

yum -y install openssh-clients

ssh-copy-id
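The `ssh-copy-id` step has to be repeated for every node in the plan. A minimal sketch of that loop follows. It only prints the commands (a dry run); the `root` login and the use of IP addresses instead of hostnames are assumptions, since the hosts file is only set up in the next step.

```shell
#!/bin/sh
# Node IPs come from the planning table; SSH_USER=root is an assumption.
NODES="10.0.0.4 10.0.0.5 10.0.0.6"
SSH_USER="root"

# Print one ssh-copy-id command per node without running anything.
print_copy_id_commands() {
    for node in $NODES; do
        echo "ssh-copy-id ${SSH_USER}@${node}"
    done
}

print_copy_id_commands
```

After reviewing the printed commands, they can be executed by piping the output to a shell, for example `print_copy_id_commands | sh`.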

2. Hostname resolution in the hosts file

vim /etc/hosts

10.0.0.4 Node4

10.0.0.5 Node5

10.0.0.6 Node6

10.0.0.1 Node1

10.0.0.2 Node2

10.0.0.3 Node3
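Editing the hosts file by hand on every node is easy to get wrong, so the entries above can also be appended by a small script. This is a sketch, not part of the original procedure: `add_host_entry` and `HOSTS_FILE` are hypothetical names, and the default target is a scratch file so the script is safe to try before pointing it at /etc/hosts.

```shell
#!/bin/sh
# Append "IP hostname" to a hosts file unless the hostname is already listed.
add_host_entry() {
    target_file="$1"; entry_ip="$2"; entry_name="$3"
    # Match the hostname as a whole word so Node4 does not match Node40.
    if ! grep -qE "[[:space:]]${entry_name}([[:space:]]|\$)" "$target_file" 2>/dev/null; then
        printf '%s %s\n' "$entry_ip" "$entry_name" >> "$target_file"
    fi
}

# HOSTS_FILE is a hypothetical variable; set HOSTS_FILE=/etc/hosts to apply for real.
HOSTS_FILE="${HOSTS_FILE:-/tmp/hosts.pinpoint-demo}"

while read -r ip name; do
    add_host_entry "$HOSTS_FILE" "$ip" "$name"
done <<'EOF'
10.0.0.4 Node4
10.0.0.5 Node5
10.0.0.6 Node6
10.0.0.1 Node1
10.0.0.2 Node2
10.0.0.3 Node3
EOF
```

Because existing hostnames are skipped, running the script a second time leaves the file unchanged.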

3. Java 8 installation

java 1.8.0_144

vim /etc/profile

export JAVA_HOME=/usr/local/jdk

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$JAVA_HOME/lib/tools.jar

4. Software versions (in testing, 1.8.2 did not support HBase 2.1 or later)

pinpoint-agent-1.8.2 pinpoint-web-1.8.2 pinpoint-collector-1.8.2 apache-tomcat-8.5.28 nginx-1.15.10

II. Initialize the PinPoint table schema

1. Download the latest table script

https://github.com/naver/pinpoint

https://github.com/naver/pinpoint/tree/master/hbase/scripts

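Download hbase-create.hbase

2. Create the tables with the hbase command

1. On 10.0.0.1, change to the /usr/local/hbase directory

2. ./bin/hbase shell ./scripts/hbase-create.hbase

3. http://10.0.0.1:60010/master-status # 15 tables are created in total

III. Building the PinPoint-Collector cluster

1. Tomcat configuration and WAR deployment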

1.mv /root/soft/apache-tomcat-8.5.28 /usr/local/

2.rm -rf /usr/local/apache-tomcat-8.5.28/webapps/ROOT/*

3.cd /usr/local/apache-tomcat-8.5.28/webapps/ROOT/

mv /root/soft/pinpoint-collector-1.8.2.war .

unzip pinpoint-collector-1.8.2.war

2. HBase configuration

vim WEB-INF/classes/hbase.properties

# IP addresses of the ZooKeeper cluster servers
hbase.client.host=10.0.0.1,10.0.0.2,10.0.0.3
# ZooKeeper port
hbase.client.port=2181
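The same two properties have to be set on every collector and on the web node, so it can help to script the change instead of editing with vim each time. The helper below is a sketch under assumptions: `set_prop` and `PROPS` are hypothetical names, and values containing `|` or `&` are not handled.

```shell
#!/bin/sh
# Set key=value in a properties file: rewrite the line if the key exists, otherwise append it.
set_prop() {
    prop_file="$1"; prop_key="$2"; prop_value="$3"
    # Escape dots so the key is matched literally by grep and sed.
    key_re=$(printf '%s' "$prop_key" | sed 's/\./\\./g')
    if grep -q "^${key_re}=" "$prop_file"; then
        sed "s|^${key_re}=.*|${prop_key}=${prop_value}|" "$prop_file" > "${prop_file}.tmp" \
            && mv "${prop_file}.tmp" "$prop_file"
    else
        printf '%s=%s\n' "$prop_key" "$prop_value" >> "$prop_file"
    fi
}

# PROPS is a hypothetical variable; nothing happens if the file is not present.
PROPS="${PROPS:-WEB-INF/classes/hbase.properties}"
if [ -f "$PROPS" ]; then
    set_prop "$PROPS" hbase.client.host 10.0.0.1,10.0.0.2,10.0.0.3
    set_prop "$PROPS" hbase.client.port 2181
fi
```

Run it from the webapps/ROOT directory of the Tomcat instance being configured, or set `PROPS` to the full path of the file.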

3. PinPoint-Collector configuration (on the other two nodes, only the IP needs to change)

vim WEB-INF/classes/pinpoint-collector.properties

# base data receiver config ---------------------------------------------------------------------
collector.receiver.base.ip=10.0.0.4
collector.receiver.base.port=9994
# number of tcp worker threads
collector.receiver.base.worker.threadSize=8
# capacity of tcp worker queue
collector.receiver.base.worker.queueSize=1024
# monitoring for tcp worker
collector.receiver.base.worker.monitor=true
collector.receiver.base.request.timeout=3000
collector.receiver.base.closewait.timeout=3000
# 5 min
collector.receiver.base.ping.interval=300000
# 30 min
collector.receiver.base.pingwait.timeout=1800000

# stat receiver config ---------------------------------------------------------------------
collector.receiver.stat.udp=true
collector.receiver.stat.udp.ip=10.0.0.4
collector.receiver.stat.udp.port=9995
collector.receiver.stat.udp.receiveBufferSize=212992
# Should keep in mind that TCP transport load balancing is per connection.(UDP transport loadbalancing is per packet)
collector.receiver.stat.tcp=false
collector.receiver.stat.tcp.ip=10.0.0.4
collector.receiver.stat.tcp.port=9995
collector.receiver.stat.tcp.request.timeout=3000
collector.receiver.stat.tcp.closewait.timeout=3000
# 5 min
collector.receiver.stat.tcp.ping.interval=300000
# 30 min
collector.receiver.stat.tcp.pingwait.timeout=1800000
# number of udp statworker threads
collector.receiver.stat.worker.threadSize=8
# capacity of udp statworker queue
collector.receiver.stat.worker.queueSize=64
# monitoring for udp stat worker
collector.receiver.stat.worker.monitor=true

# span receiver config ---------------------------------------------------------------------
collector.receiver.span.udp=true
collector.receiver.span.udp.ip=10.0.0.4
collector.receiver.span.udp.port=9996
collector.receiver.span.udp.receiveBufferSize=212992
# Should keep in mind that TCP transport load balancing is per connection.(UDP transport loadbalancing is per packet)
collector.receiver.span.tcp=false
collector.receiver.span.tcp.ip=10.0.0.4
collector.receiver.span.tcp.port=9996
collector.receiver.span.tcp.request.timeout=3000
collector.receiver.span.tcp.closewait.timeout=3000
# 30 min
collector.receiver.span.tcp.pingwait.timeout=1800000
# number of udp statworker threads
collector.receiver.span.worker.queueSize=256
# monitoring for udp stat worker
collector.receiver.span.worker.monitor=true

# change OS level read/write socket buffer size (for linux)
#sudo sysctl -w net.core.rmem_max=
#sudo sysctl -w net.core.wmem_max=
# check current values using:
#$ /sbin/sysctl -a | grep -e rmem -e wmem

# number of agent event worker threads
collector.agentEventWorker.threadSize=4
# capacity of agent event worker queue
collector.agentEventWorker.queueSize=1024

statistics.flushPeriod=1000

# -------------------------------------------------------------------------------------------------
# You may enable additional features using this option (Ex : RealTime Active Thread Chart).
# -------------------------------------------------------------------------------------------------
# Usage : Set the following options for collector/web components that reside in the same cluster in order to enable this feature.
# 1. cluster.enable (pinpoint-web.properties, pinpoint-collector.properties) - "true" to enable
# 2. cluster.zookeeper.address (pinpoint-web.properties, pinpoint-collector.properties) - address of the ZooKeeper instance that will be used to manage the cluster
# 3. cluster.web.tcp.port (pinpoint-web.properties) - any available port number (used to establish connection between web and collector)
# -------------------------------------------------------------------------------------------------
# Please be aware of the following:
#1. If the network between web, collector, and the agents are not stable, it is advisable not to use this feature.
#2. We recommend using the cluster.web.tcp.port option. However, in cases where the collector is unable to establish connection to the web, you may reverse this and make the web establish connection to the collector.
# In this case, you must set cluster.connect.address (pinpoint-web.properties); and cluster.listen.ip, cluster.listen.port (pinpoint-collector.properties) accordingly.
cluster.enable=true
cluster.zookeeper.address=10.0.0.1,10.0.0.2,10.0.0.3
cluster.zookeeper.sessiontimeout=30000
cluster.listen.ip=10.0.0.4
cluster.listen.port=9090

#collector.admin.password=
#collector.admin.api.rest.active=
#collector.admin.api.jmx.active=

collector.spanEvent.sequence.limit=10000

# Flink configuration
flink.cluster.enable=false
flink.cluster.zookeeper.address=localhost
flink.cluster.zookeeper.sessiontimeout=3000
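Since the other two collector nodes differ only in their own IP, the node-specific keys can be rewritten in one pass after copying the file. A minimal sketch, assuming the file was copied from Node4: `retarget_collector`, `COLLECTOR_PROPS`, and `NODE_IP` are hypothetical names, and the ZooKeeper address list is deliberately left untouched.

```shell
#!/bin/sh
# Point every node-local listen address in pinpoint-collector.properties at new_ip.
# Only the receiver *.ip keys and cluster.listen.ip are rewritten.
retarget_collector() {
    conf_file="$1"; new_ip="$2"
    sed -E "s/^((collector\.receiver\.(base|stat\.udp|stat\.tcp|span\.udp|span\.tcp)|cluster\.listen)\.ip)=.*/\1=${new_ip}/" \
        "$conf_file" > "${conf_file}.tmp" && mv "${conf_file}.tmp" "$conf_file"
}

# Hypothetical variables: path to the properties file and the IP of the node being configured.
COLLECTOR_PROPS="${COLLECTOR_PROPS:-WEB-INF/classes/pinpoint-collector.properties}"
NODE_IP="${NODE_IP:-10.0.0.5}"
if [ -f "$COLLECTOR_PROPS" ]; then
    retarget_collector "$COLLECTOR_PROPS" "$NODE_IP"
fi
```

On Node6 the same script would be run with `NODE_IP=10.0.0.6`.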

4. Start the service and test

1. cd /usr/local/apache-tomcat-8.5.28

2. ./bin/startup.sh

3. tail -f logs/catalina.out # check for errors
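Watching the log by eye works for one node; with three collectors a quick scripted check is less error-prone. The sketch below looks for Tomcat's "Server startup in" line and counts lines that look like failures. The function names and the `LOG` variable are hypothetical, and the error pattern is a rough heuristic rather than an exhaustive list.

```shell
#!/bin/sh
# Succeed if the log contains Tomcat's startup-complete message.
tomcat_started() {
    grep -q 'Server startup in' "$1"
}

# Print the number of log lines that look like failures.
count_log_errors() {
    grep -cE 'SEVERE|ERROR|Exception' "$1" || true
}

# LOG is a hypothetical variable; nothing happens if the file is not present.
LOG="${LOG:-logs/catalina.out}"
if [ -f "$LOG" ]; then
    if tomcat_started "$LOG"; then
        echo "Tomcat reported startup; suspicious lines: $(count_log_errors "$LOG")"
    else
        echo "No startup message found yet in $LOG"
    fi
fi
```

A non-zero count is only a prompt to read the log; some warnings at startup can be harmless.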

IV. Setting up the PinPoint-Web server

1. Tomcat configuration and WAR deployment

1.mv /root/soft/apache-tomcat-8.5.28 /usr/local/pinpoint-web

2.rm -rf /usr/local/pinpoint-web/webapps/ROOT/*

3.cd /usr/local/pinpoint-web/webapps/ROOT/

mv /root/soft/pinpoint-web-1.8.2.war .

unzip pinpoint-web-1.8.2.war

2. HBase configuration

1. vim WEB-INF/classes/hbase.properties

# IP addresses of the ZooKeeper cluster servers
hbase.client.host=10.0.0.1,10.0.0.2,10.0.0.3
# ZooKeeper port
hbase.client.port=2181

3. PinPoint-Web configuration

1. vim WEB-INF/classes/pinpoint-web.properties

cluster.enable=true
# TCP port of pinpoint web (default is 9997)
cluster.web.tcp.port=9997
cluster.zookeeper.address=10.0.0.1,10.0.0.2,10.0.0.3
cluster.zookeeper.sessiontimeout=30000
cluster.zookeeper.retry.interval=60000
# IP:port of each node in the pinpoint collector cluster
cluster.connect.address=10.0.0.4:9090,10.0.0.5:9090,10.0.0.6:9090

4. Start the service and test

1.cd /usr/local/pinpoint-web

2../bin/startup.sh

3. tail -f logs/catalina.out # check for errors

4. http://10.0.0.4:8080 # open the web UI to test

V. Configuring Nginx as a proxy for PinPoint-collector

1. Download and install Nginx

①http://nginx.org/en/download.html

②tar xvf /root/soft/nginx-1.15.10.tar.gz

③cd /root/soft/nginx-1.15.10

④useradd nginx

⑤yum -y install gcc gcc-c++ automake pcre pcre-devel zlib zlib-devel openssl openssl-devel

⑥./configure --prefix=/usr/local/nginx \
--sbin-path=/usr/local/nginx/sbin/nginx \
--conf-path=/usr/local/nginx/conf/nginx.conf \
--error-log-path=/var/log/nginx/error.log \
--http-log-path=/var/log/nginx/access.log \
--pid-path=/var/run/nginx/nginx.pid \
--lock-path=/var/lock/nginx.lock \
--user=nginx \
--group=nginx \
--with-http_ssl_module \
--with-http_stub_status_module \
--with-http_gzip_static_module \
--http-client-body-temp-path=/var/tmp/nginx/client/ \
--http-proxy-temp-path=/var/tmp/nginx/proxy/ \
--http-fastcgi-temp-path=/var/tmp/nginx/fcgi/ \
--http-uwsgi-temp-path=/var/tmp/nginx/uwsgi \
--http-scgi-temp-path=/var/tmp/nginx/scgi \
--with-pcre \
--with-stream \
--with-stream_ssl_module

⑦make && make install

2. Configure Nginx

1. cd /usr/local/nginx

2. vim conf/nginx.conf # use the stream module to forward TCP/UDP traffic; the stream block sits at the same level as the http block

stream {
    proxy_protocol_timeout 120s;

    log_format main '$remote_addr $remote_port - [$time_local] '
                    '$status $bytes_sent $server_addr $server_port '
                    '$proxy_protocol_addr $proxy_protocol_port';

    access_log /var/log/nginx/access.log main;

    upstream 9994_tcp_upstreams {
        server 10.0.0.4:9994;
        server 10.0.0.5:9994;
        server 10.0.0.6:9994;
    }

    upstream 9995_udp_upstreams {
        server 10.0.0.4:9995;
        server 10.0.0.5:9995;
        server 10.0.0.6:9995;
    }

    upstream 9996_udp_upstreams {
        server 10.0.0.4:9996;
        server 10.0.0.5:9996;
        server 10.0.0.6:9996;
    }

    server {
        listen 19994;
        proxy_pass 9994_tcp_upstreams;
        proxy_connect_timeout 5s;
    }

    server {
        listen 19995 udp;
        proxy_pass 9995_udp_upstreams;
        proxy_timeout 1s;
    }

    server {
        listen 19996 udp;
        proxy_pass 9996_udp_upstreams;
        proxy_timeout 1s;
    }
}

3. Start nginx

./sbin/nginx

netstat -anp|grep 19994
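`netstat` only shows that nginx is listening locally. To confirm that the TCP path is reachable from another machine, such as the agent host, a connection test can be scripted. This is a sketch under assumptions: it needs `bash` (for its `/dev/tcp` feature) and the coreutils `timeout` command, the function names are hypothetical, and it covers only the TCP ports because a plain connect test is not meaningful for the UDP ports 19995 and 19996.

```shell
#!/bin/sh
# Return 0 if a TCP connection to host:port can be opened within 2 seconds.
tcp_port_open() {
    timeout 2 bash -c "exec 3<>/dev/tcp/$1/$2" 2>/dev/null
}

# Report the nginx TCP listener and the three collector TCP ports behind it.
check_pinpoint_tcp() {
    for target in 10.0.0.5:19994 10.0.0.4:9994 10.0.0.5:9994 10.0.0.6:9994; do
        host=${target%:*}
        port=${target#*:}
        if tcp_port_open "$host" "$port"; then
            echo "$target open"
        else
            echo "$target unreachable"
        fi
    done
}
```

The functions are only defined here; call `check_pinpoint_tcp` from a host inside the 10.0.0.0 network to run the check.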

VI. Testing PinPoint-agent

1. Create the directory and extract the archive

①mkdir /pinpoint-agent

②mv /root/soft/pinpoint-agent-1.8.2.tar.gz /pinpoint-agent

③cd /pinpoint-agent

④tar xvf pinpoint-agent-1.8.2.tar.gz

2. Configure PinPoint-agent

vim pinpoint.config

# nginx is used as the proxy in this setup, so set this to the nginx IP address

profiler.collector.ip=10.0.0.5

# placeHolder support "${key}"

profiler.collector.span.ip=${profiler.collector.ip}

profiler.collector.span.port=19996

# placeHolder support "${key}"

profiler.collector.stat.ip=${profiler.collector.ip}

profiler.collector.stat.port=19995

# placeHolder support "${key}"

profiler.collector.tcp.ip=${profiler.collector.ip}

profiler.collector.tcp.port=19994

3. Configure a test Tomcat

1.mv /root/soft/apache-tomcat-8.5.28 /usr/local/apache-tomcat-test

2.cd /usr/local/apache-tomcat-test

3.vim bin/catalina.sh

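CATALINA_OPTS="$CATALINA_OPTS -javaagent:/pinpoint-agent/pinpoint-bootstrap-1.8.2.jar"
CATALINA_OPTS="$CATALINA_OPTS -Dpinpoint.applicationName=test-tomcat"
CATALINA_OPTS="$CATALINA_OPTS -Dpinpoint.agentId=tomcat-01"

The first option points to the location of the pinpoint-bootstrap jar (here the /pinpoint-agent directory created in step 1). pinpoint.applicationName is the name of the monitored application, and pinpoint.agentId is the ID of the monitored instance. pinpoint.applicationName does not have to be unique, but pinpoint.agentId must be. Instances that share a pinpoint.applicationName but have different pinpoint.agentId values are treated as a cluster of the same application.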
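Because every instance needs its own pinpoint.agentId, hard-coding `tomcat-01` stops working as soon as the same script is copied to a second machine. One way to avoid that is to derive the ID from the hostname. The sketch below assumes the agent was extracted to /pinpoint-agent as in step 1; `pinpoint_opts`, `default_agent_id`, and the `tomcat-` prefix are illustrative names, not Pinpoint requirements.

```shell
#!/bin/sh
# Build the three JVM options for one instance.
# Arguments: path to the bootstrap jar, application name, agent ID.
pinpoint_opts() {
    printf '%s\n' "-javaagent:$1 -Dpinpoint.applicationName=$2 -Dpinpoint.agentId=$3"
}

# Derive an agent ID from the short hostname so each cluster member gets a distinct value.
default_agent_id() {
    printf 'tomcat-%s\n' "$(uname -n | cut -d. -f1)"
}

CATALINA_OPTS="${CATALINA_OPTS:-} $(pinpoint_opts /pinpoint-agent/pinpoint-bootstrap-1.8.2.jar test-tomcat "$(default_agent_id)")"
echo "$CATALINA_OPTS"
```

Tomcat's catalina.sh sources bin/setenv.sh when that file exists, so these lines could live there (with an `export CATALINA_OPTS`) instead of being edited into catalina.sh itself.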

3.1. WebLogic configuration

①vim bin/startWebLogic.sh

JAVA_OPTIONS="$JAVA_OPTIONS -javaagent:/home/pinpoint-agent/pinpoint-bootstrap-1.8.2.jar"
JAVA_OPTIONS="$JAVA_OPTIONS -Dpinpoint.applicationName=test-weblogic"
JAVA_OPTIONS="$JAVA_OPTIONS -Dpinpoint.agentId=weblogic-01"

4. Start Tomcat

./bin/startup.sh

tail -f logs/catalina.out

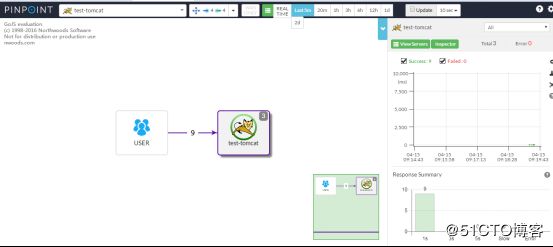

VII. Log in to the web UI and check the results

Final architecture diagram