Now let's look at YYImage.

First, the files:

YYImage

YYFrameImage

YYSpriteSheetImage

YYAnimatedImageView

YYImageCoder

YYImageCache

YYWebImageOperation

YYWebImageManager

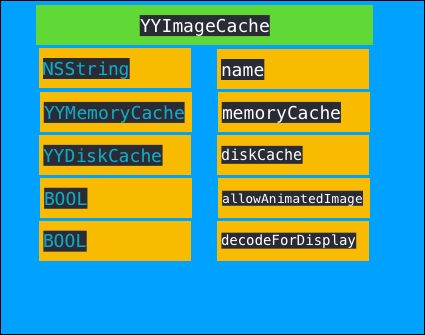

YYImageCache

Structure

The structure is simple: five properties.

Initialization

There are two initializers.

- (instancetype)initWithPath:(NSString *)path {

YYMemoryCache *memoryCache = [YYMemoryCache new];

memoryCache.shouldRemoveAllObjectsOnMemoryWarning = YES;

memoryCache.shouldRemoveAllObjectsWhenEnteringBackground = YES;

memoryCache.countLimit = NSUIntegerMax;

memoryCache.costLimit = NSUIntegerMax;

memoryCache.ageLimit = 12 * 60 * 60;

YYDiskCache *diskCache = [[YYDiskCache alloc] initWithPath:path];

diskCache.customArchiveBlock = ^(id object) { return (NSData *)object; };

diskCache.customUnarchiveBlock = ^(NSData *data) { return (id)data; };

if (!memoryCache || !diskCache) return nil;

self = [super init];

_memoryCache = memoryCache;

_diskCache = diskCache;

_allowAnimatedImage = YES;

_decodeForDisplay = YES;

return self;

}

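1. Initialize YYMemoryCache and configure it: remove all objects on a memory warning, remove all objects when the app enters the background, set no limit on count or cost, and keep objects for at most 12 hours.

2. Initialize YYDiskCache. The custom archive and unarchive blocks pass the NSData through unchanged.

3. Assign the instance variables (_memoryCache, _diskCache). Two more flags are set here, _allowAnimatedImage and _decodeForDisplay; as the names suggest, they allow animated images and decode images for display.

Public methods

Since this is a cache, it should support adding, removing, updating, and querying.

Add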

- (void)setImage:(UIImage *)image imageData:(NSData *)imageData forKey:(NSString *)key withType:(YYImageCacheType)type {

if (!key || (image == nil && imageData.length == 0)) return;

__weak typeof(self) _self = self;

if (type & YYImageCacheTypeMemory) { // add to memory cache

if (image) {

if (image.isDecodedForDisplay) {

[_memoryCache setObject:image forKey:key withCost:[_self imageCost:image]];

} else {

dispatch_async(YYImageCacheDecodeQueue(), ^{

__strong typeof(_self) self = _self;

if (!self) return;

[self.memoryCache setObject:[image imageByDecoded] forKey:key withCost:[self imageCost:image]];

});

}

} else if (imageData) {

dispatch_async(YYImageCacheDecodeQueue(), ^{

__strong typeof(_self) self = _self;

if (!self) return;

UIImage *newImage = [self imageFromData:imageData];

[self.memoryCache setObject:newImage forKey:key withCost:[self imageCost:newImage]];

});

}

}

if (type & YYImageCacheTypeDisk) { // add to disk cache

if (imageData) {

if (image) {

[YYDiskCache setExtendedData:[NSKeyedArchiver archivedDataWithRootObject:@(image.scale)] toObject:imageData];

}

[_diskCache setObject:imageData forKey:key];

} else if (image) {

dispatch_async(YYImageCacheIOQueue(), ^{

__strong typeof(_self) self = _self;

if (!self) return;

NSData *data = [image imageDataRepresentation];

[YYDiskCache setExtendedData:[NSKeyedArchiver archivedDataWithRootObject:@(image.scale)] toObject:data];

[self.diskCache setObject:data forKey:key];

});

}

}

}

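1. Validate the arguments: return if key is nil, or if both image and imageData are empty.

2. Check the type mask.

3. If type includes YYImageCacheTypeMemory, store the image in the memory cache:

《1》If image is not nil, check whether it has already been decoded. If so, store it directly; otherwise decode it asynchronously on the decode queue and then store it.

《2》If image is nil but imageData is not, convert imageData to an image asynchronously and store that in the memory cache.

4. If type includes YYImageCacheTypeDisk, store the data in the disk cache:

《1》If imageData is not nil, write it to disk; if image is also not nil, attach image.scale as extended data so that it is saved alongside. This branch runs synchronously on the calling thread.

《2》If imageData is nil but image is not, convert the image to data asynchronously on the IO queue, attach the scale, and write the data to disk.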

[YYDiskCache setExtendedData:[NSKeyedArchiver archivedDataWithRootObject:@(image.scale)] toObject:data];

This line attaches the scale to data as associated extended data; it is written to the extended_data column of the SQLite table.

- (void)setImage:(UIImage *)image forKey:(NSString *)key {

[self setImage:image imageData:nil forKey:key withType:YYImageCacheTypeAll];

}

This method just calls the one above with type YYImageCacheTypeAll, so the image is stored both in memory and on disk.
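The `type & YYImageCacheTypeMemory` checks work because the cache type is a bit mask. A minimal sketch in plain C, assuming hypothetical names (`CacheType`, `uses_memory`, `uses_disk`) and assumed bit values that are chosen for illustration and not copied from the library header:

```c
#include <stdbool.h>

/* Hypothetical stand-in for the cache type mask; the bit values are assumed. */
typedef enum {
    CacheTypeNone   = 0,
    CacheTypeMemory = 1 << 0,
    CacheTypeDisk   = 1 << 1,
    CacheTypeAll    = CacheTypeMemory | CacheTypeDisk
} CacheType;

/* True when the memory tier is selected by the mask. */
static bool uses_memory(CacheType type) {
    return (type & CacheTypeMemory) != 0;
}

/* True when the disk tier is selected by the mask. */
static bool uses_disk(CacheType type) {
    return (type & CacheTypeDisk) != 0;
}
```

With a mask like this, "all" is simply both bits set, so each tier can be tested independently.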

Remove

- (void)removeImageForKey:(NSString *)key {

[self removeImageForKey:key withType:YYImageCacheTypeAll];

}

- (void)removeImageForKey:(NSString *)key withType:(YYImageCacheType)type {

if (type & YYImageCacheTypeMemory) [_memoryCache removeObjectForKey:key];

if (type & YYImageCacheTypeDisk) [_diskCache removeObjectForKey:key];

}

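Removal is simple: delete the entry from the memory cache and then from the disk cache. Note that both deletions are synchronous.

Query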

- (BOOL)containsImageForKey:(NSString *)key {

return [self containsImageForKey:key withType:YYImageCacheTypeAll];

}

- (BOOL)containsImageForKey:(NSString *)key withType:(YYImageCacheType)type {

if (type & YYImageCacheTypeMemory) {

if ([_memoryCache containsObjectForKey:key]) return YES;

}

if (type & YYImageCacheTypeDisk) {

if ([_diskCache containsObjectForKey:key]) return YES;

}

return NO;

}

Check the memory cache and then the disk cache for the key, on the calling thread.

- (UIImage *)getImageForKey:(NSString *)key {

return [self getImageForKey:key withType:YYImageCacheTypeAll];

}

- (UIImage *)getImageForKey:(NSString *)key withType:(YYImageCacheType)type {

if (!key) return nil;

if (type & YYImageCacheTypeMemory) {

UIImage *image = [_memoryCache objectForKey:key];

if (image) return image;

}

if (type & YYImageCacheTypeDisk) {

NSData *data = (id)[_diskCache objectForKey:key];

UIImage *image = [self imageFromData:data];

if (image && (type & YYImageCacheTypeMemory)) {

[_memoryCache setObject:image forKey:key withCost:[self imageCost:image]];

}

return image;

}

return nil;

}

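Fetch the image for key: the memory cache is tried first, then the disk cache. Because the disk cache stores NSData, data read from disk has to be converted to a UIImage; on a disk hit the image is also written back to the memory cache when type includes YYImageCacheTypeMemory.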

- (void)getImageForKey:(NSString *)key withType:(YYImageCacheType)type withBlock:(void (^)(UIImage *image, YYImageCacheType type))block {

if (!block) return;

dispatch_async(dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{

UIImage *image = nil;

if (type & YYImageCacheTypeMemory) {

image = [_memoryCache objectForKey:key];

if (image) {

dispatch_async(dispatch_get_main_queue(), ^{

block(image, YYImageCacheTypeMemory);

});

return;

}

}

if (type & YYImageCacheTypeDisk) {

NSData *data = (id)[_diskCache objectForKey:key];

image = [self imageFromData:data];

if (image) {

[_memoryCache setObject:image forKey:key];

dispatch_async(dispatch_get_main_queue(), ^{

block(image, YYImageCacheTypeDisk);

});

return;

}

}

dispatch_async(dispatch_get_main_queue(), ^{

block(nil, YYImageCacheTypeNone);

});

});

}

This fetches the image asynchronously on a global queue, but the block is always called back on the main thread.

- (NSData *)getImageDataForKey:(NSString *)key {

return (id)[_diskCache objectForKey:key];

}

Returns the data stored on disk for key.

- (void)getImageDataForKey:(NSString *)key withBlock:(void (^)(NSData *imageData))block {

if (!block) return;

dispatch_async(dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_DEFAULT, 0), ^{

NSData *data = (id)[_diskCache objectForKey:key];

dispatch_async(dispatch_get_main_queue(), ^{

block(data);

});

});

}

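The asynchronous variant; the code is straightforward and needs no explanation.

Helper methods that do not affect the main logic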

- (NSUInteger)imageCost:(UIImage *)image {

CGImageRef cgImage = image.CGImage;

if (!cgImage) return 1;

CGFloat height = CGImageGetHeight(cgImage);

size_t bytesPerRow = CGImageGetBytesPerRow(cgImage);

NSUInteger cost = bytesPerRow * height;

if (cost == 0) cost = 1;

return cost;

}

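This returns the cost of an image. The caches track a cost for each stored object; in YYImageCache the cost is the size of the image's bitmap in bytes (bytesPerRow × height), with a minimum of 1.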
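The cost computation can be sketched in plain C as follows. The function name `image_cost` is hypothetical; the real method reads both values from the CGImage.

```c
#include <stddef.h>

/* Cost of a bitmap in bytes: bytes per row times the number of rows.
   A zero result is bumped to 1 so every cached object has a nonzero cost. */
static size_t image_cost(size_t bytes_per_row, size_t height) {
    size_t cost = bytes_per_row * height;
    return cost == 0 ? 1 : cost;
}
```

For instance, a 100 × 50 image with 4 bytes per pixel and no row padding has 400 bytes per row, so its cost is 400 × 50 = 20000.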

- (UIImage *)imageFromData:(NSData *)data {

NSData *scaleData = [YYDiskCache getExtendedDataFromObject:data];

CGFloat scale = 0;

if (scaleData) {

scale = ((NSNumber *)[NSKeyedUnarchiver unarchiveObjectWithData:scaleData]).doubleValue;

}

if (scale <= 0) scale = [UIScreen mainScreen].scale;

UIImage *image;

if (_allowAnimatedImage) {

image = [[YYImage alloc] initWithData:data scale:scale];

if (_decodeForDisplay) image = [image imageByDecoded];

} else {

YYImageDecoder *decoder = [YYImageDecoder decoderWithData:data scale:scale];

image = [decoder frameAtIndex:0 decodeForDisplay:_decodeForDisplay].image;

}

return image;

}

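This method converts data into an image. When _allowAnimatedImage is YES the result is a YYImage; otherwise it is the first frame produced by YYImageDecoder.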

YYImage

Inherits from UIImage.

Structure

Initialization

There are only four public creation methods.

+ (nullable YYImage *)imageNamed:(NSString *)name; // no cache!

+ (nullable YYImage *)imageWithContentsOfFile:(NSString *)path;

+ (nullable YYImage *)imageWithData:(NSData *)data;

+ (nullable YYImage *)imageWithData:(NSData *)data scale:(CGFloat)scale;

+ (YYImage *)imageNamed:(NSString *)name {

if (name.length == 0) return nil;

if ([name hasSuffix:@"/"]) return nil;

NSString *res = name.stringByDeletingPathExtension;

NSString *ext = name.pathExtension;

NSString *path = nil;

CGFloat scale = 1;

// If no extension, guess by system supported (same as UIImage).

NSArray *exts = ext.length > 0 ? @[ext] : @[@"", @"png", @"jpeg", @"jpg", @"gif", @"webp", @"apng"];

NSArray *scales = [NSBundle preferredScales];

for (int s = 0; s < scales.count; s++) {

scale = ((NSNumber *)scales[s]).floatValue;

NSString *scaledName = [res stringByAppendingNameScale:scale];

for (NSString *e in exts) {

path = [[NSBundle mainBundle] pathForResource:scaledName ofType:e];

if (path) break;

}

if (path) break;

}

if (path.length == 0) return nil;

NSData *data = [NSData dataWithContentsOfFile:path];

if (data.length == 0) return nil;

return [[self alloc] initWithData:data scale:scale];

}

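UIImage's imageNamed: keeps a copy of the image in an in-memory cache. YYImage's version does not cache.

1. Check that name is valid.

2. Find the image path in the main bundle:

《1》Take the name without its extension.

《2》Take the extension.

《3》If the extension is not empty, wrap it in an array; otherwise use an array of the supported extensions.

《4》For each preferred scale, append the scale suffix to the name (for example, a name of the form name@2x.png) and look up the path for each extension; stop at the first path that exists.

3. Load the data at path.

4. Create the image.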
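The lookup order in step 2 can be sketched in plain C. Everything here is hypothetical scaffolding: `scaled_file_name`, `find_image_name`, and the `exists` callback stand in for the bundle lookup, and the rule that scale 1 gets no suffix is an assumption about the naming helper.

```c
#include <stdio.h>
#include <string.h>

/* Hypothetical callback: returns nonzero when a file with this name exists. */
typedef int (*exists_fn)(const char *name);

/* Writes "<base>@<scale>x.<ext>" into out, or "<base>.<ext>" for scale 1
   (assumed behavior of the scale-suffix helper). */
static int scaled_file_name(char *out, size_t size, const char *base,
                            int scale, const char *ext) {
    if (scale == 1) return snprintf(out, size, "%s.%s", base, ext);
    return snprintf(out, size, "%s@%dx.%s", base, scale, ext);
}

/* Tries every scale in order and, for each scale, every extension.
   Returns the matching scale and leaves the name in out, or returns 0. */
static int find_image_name(char *out, size_t size, const char *base,
                           const int *scales, size_t nscales,
                           const char *const *exts, size_t nexts,
                           exists_fn exists) {
    for (size_t s = 0; s < nscales; s++) {
        for (size_t e = 0; e < nexts; e++) {
            scaled_file_name(out, size, base, scales[s], exts[e]);
            if (exists(out)) return scales[s];
        }
    }
    return 0;
}

/* Example stub: pretend only "icon@2x.png" is present. */
static int only_icon_2x_png(const char *name) {
    return strcmp(name, "icon@2x.png") == 0;
}
```

The outer loop over scales is what lets the method return the scale together with the path, which the initializer then needs.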

+ (YYImage *)imageWithContentsOfFile:(NSString *)path {

return [[self alloc] initWithContentsOfFile:path];

}

+ (YYImage *)imageWithData:(NSData *)data {

return [[self alloc] initWithData:data];

}

+ (YYImage *)imageWithData:(NSData *)data scale:(CGFloat)scale {

return [[self alloc] initWithData:data scale:scale];

}

- (instancetype)initWithContentsOfFile:(NSString *)path {

NSData *data = [NSData dataWithContentsOfFile:path];

return [self initWithData:data scale:path.pathScale];

}

- (instancetype)initWithData:(NSData *)data {

return [self initWithData:data scale:1];

}

All of the methods above end up calling this one:

- (instancetype)initWithData:(NSData *)data scale:(CGFloat)scale {

if (data.length == 0) return nil;

if (scale <= 0) scale = [UIScreen mainScreen].scale;

_preloadedLock = dispatch_semaphore_create(1);

@autoreleasepool {

YYImageDecoder *decoder = [YYImageDecoder decoderWithData:data scale:scale];

YYImageFrame *frame = [decoder frameAtIndex:0 decodeForDisplay:YES];

UIImage *image = frame.image;

if (!image) return nil;

self = [self initWithCGImage:image.CGImage scale:decoder.scale orientation:image.imageOrientation];

if (!self) return nil;

_animatedImageType = decoder.type;

if (decoder.frameCount > 1) {

_decoder = decoder;

_bytesPerFrame = CGImageGetBytesPerRow(image.CGImage) * CGImageGetHeight(image.CGImage);

_animatedImageMemorySize = _bytesPerFrame * decoder.frameCount;

}

self.isDecodedForDisplay = YES;

}

return self;

}

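This is the core method. Step by step:

1. Return nil if data is empty.

2. Resolve scale, falling back to the screen scale.

3. Create the semaphore that is used as a lock.

4. Image objects are large, so the work is wrapped in an @autoreleasepool to release temporaries as early as possible.

5. Create a decoder for the data.

6. Get the first frame (YYImageFrame) from the decoder.

7. Get the image from the frame.

8. Initialize self from the decoded bitmap (the CGImage).

9. If the decoder holds more than one frame, keep the decoder and record the memory size.

10. Mark the image as decoded.

Two classes appear in this method, YYImageDecoder and YYImageFrame.

YYImageDecoder represents the decoded frames of an image as a whole, much like an array of images.

YYImageFrame represents a single decoded frame, from which one image can be obtained.

Other methods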

- (NSData *)animatedImageData {

return _decoder.data;

}

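Returns the original data of the image (nil unless the decoder was kept, that is, unless the image has more than one frame).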

- (void)setPreloadAllAnimatedImageFrames:(BOOL)preloadAllAnimatedImageFrames {

if (_preloadAllAnimatedImageFrames != preloadAllAnimatedImageFrames) {

if (preloadAllAnimatedImageFrames && _decoder.frameCount > 0) {

NSMutableArray *frames = [NSMutableArray new];

for (NSUInteger i = 0, max = _decoder.frameCount; i < max; i++) {

UIImage *img = [self animatedImageFrameAtIndex:i];

if (img) {

[frames addObject:img];

} else {

[frames addObject:[NSNull null]];

}

}

dispatch_semaphore_wait(_preloadedLock, DISPATCH_TIME_FOREVER);

_preloadedFrames = frames;

dispatch_semaphore_signal(_preloadedLock);

} else {

dispatch_semaphore_wait(_preloadedLock, DISPATCH_TIME_FOREVER);

_preloadedFrames = nil;

dispatch_semaphore_signal(_preloadedLock);

}

}

}

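This is the overridden property setter. Judging from how the property is used in this file, when it is set to YES every frame is decoded up front and kept in an array, ready to use. A classic trade of space for time.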

- (instancetype)initWithCoder:(NSCoder *)aDecoder {

NSNumber *scale = [aDecoder decodeObjectForKey:@"YYImageScale"];

NSData *data = [aDecoder decodeObjectForKey:@"YYImageData"];

if (data.length) {

self = [self initWithData:data scale:scale.doubleValue];

} else {

self = [super initWithCoder:aDecoder];

}

return self;

}

- (void)encodeWithCoder:(NSCoder *)aCoder {

if (_decoder.data.length) {

[aCoder encodeObject:@(self.scale) forKey:@"YYImageScale"];

[aCoder encodeObject:_decoder.data forKey:@"YYImageData"];

} else {

[super encodeWithCoder:aCoder]; // Apple use UIImagePNGRepresentation() to encode UIImage.

}

}

+ (BOOL)supportsSecureCoding {

return YES;

}

A class that adopts NSSecureCoding, as UIImage does, must return YES here.

Next comes the implementation of the animated-image protocol.

- (NSUInteger)animatedImageFrameCount {

return _decoder.frameCount;

}

The number of frames.

- (NSUInteger)animatedImageLoopCount {

return _decoder.loopCount;

}

The loop count.

- (NSUInteger)animatedImageBytesPerFrame {

return _bytesPerFrame;

}

The size of each frame in bytes.

- (UIImage *)animatedImageFrameAtIndex:(NSUInteger)index {

if (index >= _decoder.frameCount) return nil;

dispatch_semaphore_wait(_preloadedLock, DISPATCH_TIME_FOREVER);

UIImage *image = _preloadedFrames[index];

dispatch_semaphore_signal(_preloadedLock);

if (image) return image == (id)[NSNull null] ? nil : image;

return [_decoder frameAtIndex:index decodeForDisplay:YES].image;

}

Returns the frame image at index.

UIImage *image = _preloadedFrames[index];

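_preloadedFrames is only assigned when preloadAllAnimatedImageFrames is set to YES, so it may be nil here. Indexing nil is just a message to nil, which returns nil, so image is nil and the code falls through to the decoder. I wondered whether this could crash; a test shows that it does not.

Test code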

NSArray * testCrash=nil;

UIImage * image = testCrash[4];

- (NSTimeInterval)animatedImageDurationAtIndex:(NSUInteger)index {

NSTimeInterval duration = [_decoder frameDurationAtIndex:index];

/*

http://opensource.apple.com/source/WebCore/WebCore-7600.1.25/platform/graphics/cg/ImageSourceCG.cpp

Many annoying ads specify a 0 duration to make an image flash as quickly as possible. We follow Safari and Firefox's behavior and use a duration of 100 ms for any frames that specify a duration of <= 10 ms.

See also: http://nullsleep.tumblr.com/post/16524517190/animated-gif-minimum-frame-delay-browser.

*/

if (duration < 0.011f) return 0.100f;

return duration;

}

The duration of the frame at index, that is, the interval before the next frame is shown.
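The clamping rule is easy to isolate. A minimal C sketch, with `clamp_frame_duration` as a hypothetical name and double constants in place of the float literals used in the method:

```c
/* Frames that ask for roughly 10 ms or less are shown for 100 ms instead,
   mirroring the browser behavior described in the comment above. */
static double clamp_frame_duration(double seconds) {
    if (seconds < 0.011) return 0.100;
    return seconds;
}
```

A frame that declares a duration of 0 therefore plays for 0.1 s, while a frame that declares 0.05 s is left alone.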

YYImageCoder

This is the hardest class to get through.

Structure

Initialization

Class method

+ (instancetype)decoderWithData:(NSData *)data scale:(CGFloat)scale {

if (!data) return nil;

YYImageDecoder *decoder = [[YYImageDecoder alloc] initWithScale:scale];

[decoder updateData:data final:YES];

if (decoder.frameCount == 0) return nil;

return decoder;

}

- (instancetype)init {

return [self initWithScale:[UIScreen mainScreen].scale];

}

- (instancetype)initWithScale:(CGFloat)scale {

self = [super init];

if (scale <= 0) scale = 1;

_scale = scale;

_framesLock = dispatch_semaphore_create(1);

pthread_mutex_init_recursive(&_lock, true);

return self;

}

This part is simple: it initializes the basic fields.

There is one C function here, though, which sets up a recursive lock.

static inline void pthread_mutex_init_recursive(pthread_mutex_t *mutex, bool recursive) {

#define YYMUTEX_ASSERT_ON_ERROR(x_) do { \

__unused volatile int res = (x_); \

assert(res == 0); \

} while (0)

assert(mutex != NULL);

if (!recursive) {

YYMUTEX_ASSERT_ON_ERROR(pthread_mutex_init(mutex, NULL));

} else {

pthread_mutexattr_t attr;

YYMUTEX_ASSERT_ON_ERROR(pthread_mutexattr_init (&attr));

YYMUTEX_ASSERT_ON_ERROR(pthread_mutexattr_settype (&attr, PTHREAD_MUTEX_RECURSIVE));

YYMUTEX_ASSERT_ON_ERROR(pthread_mutex_init (mutex, &attr));

YYMUTEX_ASSERT_ON_ERROR(pthread_mutexattr_destroy (&attr));

}

#undef YYMUTEX_ASSERT_ON_ERROR

}

A recursive lock. The return value of every pthread call is asserted to be 0.

Feeding in data

- (BOOL)updateData:(NSData *)data final:(BOOL)final {

BOOL result = NO;

pthread_mutex_lock(&_lock);

result = [self _updateData:data final:final];

pthread_mutex_unlock(&_lock);

return result;

}

Nothing much to see here; look at the method it calls.

- (BOOL)_updateData:(NSData *)data final:(BOOL)final {

if (_finalized) return NO;

if (data.length < _data.length) return NO;

_finalized = final;

_data = data;

YYImageType type = YYImageDetectType((__bridge CFDataRef)data);

if (_sourceTypeDetected) {

if (_type != type) {

return NO;

} else {

[self _updateSource];

}

} else {

if (_data.length > 16) {

_type = type;

_sourceTypeDetected = YES;

[self _updateSource];

}

}

return YES;

}

1. Assign the related variables (_finalized, _data).

2. Detect the image type from the data.

3. Update the source.
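The acceptance rules of this method form a small state machine. The following C sketch models only the bookkeeping; `decoder_state` and `update_data` are hypothetical names, and the detected type is passed in as a plain integer instead of being computed from the bytes.

```c
#include <stdbool.h>
#include <stddef.h>

/* Hypothetical model of the fields the update method consults. */
struct decoder_state {
    size_t length;        /* length of the data seen so far */
    bool   finalized;     /* no more data is accepted once set */
    bool   type_detected; /* the type is fixed after the first detection */
    int    type;
};

/* Mirrors the order of checks: reject after finalization, reject shrinking
   data, then either verify the type or detect it once enough bytes exist. */
static bool update_data(struct decoder_state *d, size_t new_length,
                        int detected_type, bool final) {
    if (d->finalized) return false;
    if (new_length < d->length) return false;
    d->finalized = final;
    d->length = new_length;
    if (d->type_detected) {
        if (d->type != detected_type) return false;
    } else if (new_length > 16) {
        d->type = detected_type;
        d->type_detected = true;
    }
    return true;
}
```

Note that, as in the method itself, the length is recorded before the type check, so a type mismatch is rejected but still leaves the new length in place.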

The image type is detected with this function:

YYImageType YYImageDetectType(CFDataRef data) {

if (!data) return YYImageTypeUnknown;

uint64_t length = CFDataGetLength(data);

if (length < 16) return YYImageTypeUnknown;

const char *bytes = (char *)CFDataGetBytePtr(data);

uint32_t magic4 = *((uint32_t *)bytes);

switch (magic4) {

case YY_FOUR_CC(0x4D, 0x4D, 0x00, 0x2A): { // big endian TIFF

return YYImageTypeTIFF;

} break;

case YY_FOUR_CC(0x49, 0x49, 0x2A, 0x00): { // little endian TIFF

return YYImageTypeTIFF;

} break;

case YY_FOUR_CC(0x00, 0x00, 0x01, 0x00): { // ICO

return YYImageTypeICO;

} break;

case YY_FOUR_CC(0x00, 0x00, 0x02, 0x00): { // CUR

return YYImageTypeICO;

} break;

case YY_FOUR_CC('i', 'c', 'n', 's'): { // ICNS

return YYImageTypeICNS;

} break;

case YY_FOUR_CC('G', 'I', 'F', '8'): { // GIF

return YYImageTypeGIF;

} break;

case YY_FOUR_CC(0x89, 'P', 'N', 'G'): { // PNG

uint32_t tmp = *((uint32_t *)(bytes + 4));

if (tmp == YY_FOUR_CC('\r', '\n', 0x1A, '\n')) {

return YYImageTypePNG;

}

} break;

case YY_FOUR_CC('R', 'I', 'F', 'F'): { // WebP

uint32_t tmp = *((uint32_t *)(bytes + 8));

if (tmp == YY_FOUR_CC('W', 'E', 'B', 'P')) {

return YYImageTypeWebP;

}

} break;

/*

case YY_FOUR_CC('B', 'P', 'G', 0xFB): { // BPG

return YYImageTypeBPG;

} break;

*/

}

uint16_t magic2 = *((uint16_t *)bytes);

switch (magic2) {

case YY_TWO_CC('B', 'A'):

case YY_TWO_CC('B', 'M'):

case YY_TWO_CC('I', 'C'):

case YY_TWO_CC('P', 'I'):

case YY_TWO_CC('C', 'I'):

case YY_TWO_CC('C', 'P'): { // BMP

return YYImageTypeBMP;

}

case YY_TWO_CC(0xFF, 0x4F): { // JPEG2000

return YYImageTypeJPEG2000;

}

}

// JPG FF D8 FF

if (memcmp(bytes,"\377\330\377",3) == 0) return YYImageTypeJPEG;

// JP2

if (memcmp(bytes + 4, "\152\120\040\040\015", 5) == 0) return YYImageTypeJPEG2000;

return YYImageTypeUnknown;

}

It identifies the format by checking the file header (the magic number).

#define YY_FOUR_CC(c1,c2,c3,c4) ((uint32_t)(((c4) << 24) | ((c3) << 16) | ((c2) << 8) | (c1)))

#define YY_TWO_CC(c1,c2) ((uint16_t)(((c2) << 8) | (c1)))

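The macros pack the bytes in reverse order because of byte order. A GIF file, for example, is laid out in memory as G I F 8 followed by the rest of the data; when those four bytes are read as a uint32_t on a little-endian CPU, the first byte becomes the least significant one, so the macro places c1 in the lowest bits.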
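The byte-order point can be checked with a few lines of C. This is a sketch that assumes a little-endian host; `FOUR_CC`, `load_u32`, and `looks_like_gif` are illustrative names, and the macro adds casts so that a byte such as 0x89 is never shifted as a signed int.

```c
#include <stdbool.h>
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Packs four bytes so that c1 ends up in the lowest 8 bits. */
#define FOUR_CC(c1, c2, c3, c4) \
    ((uint32_t)(((uint32_t)(c4) << 24) | ((uint32_t)(c3) << 16) | \
                ((uint32_t)(c2) << 8) | (uint32_t)(c1)))

/* Reads the first four bytes as a native uint32_t without alignment issues. */
static uint32_t load_u32(const unsigned char *bytes) {
    uint32_t value;
    memcpy(&value, bytes, sizeof value);
    return value;
}

/* On a little-endian host the bytes 'G','I','F','8' load as 0x38464947,
   which is exactly what FOUR_CC('G','I','F','8') evaluates to. */
static bool looks_like_gif(const unsigned char *bytes, size_t length) {
    if (length < 4) return false;
    return load_u32(bytes) == FOUR_CC('G', 'I', 'F', '8');
}
```

On a big-endian host the same load would produce 0x47494638, and the comparison would need a macro with the shifts reversed.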

There are many image formats; they are not covered here.

- (void)_updateSource {

switch (_type) {

case YYImageTypeWebP: {

[self _updateSourceWebP];

} break;

case YYImageTypePNG: {

[self _updateSourceAPNG];

} break;

default: {

[self _updateSourceImageIO];

} break;

}

}

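From this method we can see that WebP and PNG are parsed separately, and every other type goes through the default path.

First, the path for images that are neither WebP nor PNG, - (void)_updateSourceImageIO: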

- (void)_updateSourceImageIO {

_width = 0;

_height = 0;

_orientation = UIImageOrientationUp;

_loopCount = 0;

dispatch_semaphore_wait(_framesLock, DISPATCH_TIME_FOREVER);

_frames = nil;

dispatch_semaphore_signal(_framesLock);

if (!_source) {

if (_finalized) {

_source = CGImageSourceCreateWithData((__bridge CFDataRef)_data, NULL);

} else {

_source = CGImageSourceCreateIncremental(NULL);

if (_source) CGImageSourceUpdateData(_source, (__bridge CFDataRef)_data, false);

}

} else {

CGImageSourceUpdateData(_source, (__bridge CFDataRef)_data, _finalized);

}

if (!_source) return;

_frameCount = CGImageSourceGetCount(_source);

if (_frameCount == 0) return;

if (!_finalized) { // ignore multi-frame before finalized

_frameCount = 1;

} else {

if (_type == YYImageTypePNG) { // use custom apng decoder and ignore multi-frame

_frameCount = 1;

}

if (_type == YYImageTypeGIF) { // get gif loop count

CFDictionaryRef properties = CGImageSourceCopyProperties(_source, NULL);

if (properties) {

CFDictionaryRef gif = CFDictionaryGetValue(properties, kCGImagePropertyGIFDictionary);

if (gif) {

CFTypeRef loop = CFDictionaryGetValue(gif, kCGImagePropertyGIFLoopCount);

if (loop) CFNumberGetValue(loop, kCFNumberNSIntegerType, &_loopCount);

}

CFRelease(properties);

}

}

}

/*

ICO, GIF, APNG may contains multi-frame.

*/

NSMutableArray *frames = [NSMutableArray new];

for (NSUInteger i = 0; i < _frameCount; i++) {

_YYImageDecoderFrame *frame = [_YYImageDecoderFrame new];

frame.index = i;

frame.blendFromIndex = i;

frame.hasAlpha = YES;

frame.isFullSize = YES;

[frames addObject:frame];

CFDictionaryRef properties = CGImageSourceCopyPropertiesAtIndex(_source, i, NULL);

if (properties) {

NSTimeInterval duration = 0;

NSInteger orientationValue = 0, width = 0, height = 0;

CFTypeRef value = NULL;

value = CFDictionaryGetValue(properties, kCGImagePropertyPixelWidth);

if (value) CFNumberGetValue(value, kCFNumberNSIntegerType, &width);

value = CFDictionaryGetValue(properties, kCGImagePropertyPixelHeight);

if (value) CFNumberGetValue(value, kCFNumberNSIntegerType, &height);

if (_type == YYImageTypeGIF) {

CFDictionaryRef gif = CFDictionaryGetValue(properties, kCGImagePropertyGIFDictionary);

if (gif) {

// Use the unclamped frame delay if it exists.

value = CFDictionaryGetValue(gif, kCGImagePropertyGIFUnclampedDelayTime);

if (!value) {

// Fall back to the clamped frame delay if the unclamped frame delay does not exist.

value = CFDictionaryGetValue(gif, kCGImagePropertyGIFDelayTime);

}

if (value) CFNumberGetValue(value, kCFNumberDoubleType, &duration);

}

}

frame.width = width;

frame.height = height;

frame.duration = duration;

if (i == 0 && _width + _height == 0) { // init first frame

_width = width;

_height = height;

value = CFDictionaryGetValue(properties, kCGImagePropertyOrientation);

if (value) {

CFNumberGetValue(value, kCFNumberNSIntegerType, &orientationValue);

_orientation = YYUIImageOrientationFromEXIFValue(orientationValue);

}

}

CFRelease(properties);

}

}

dispatch_semaphore_wait(_framesLock, DISPATCH_TIME_FOREVER);

_frames = frames;

dispatch_semaphore_signal(_framesLock);

}

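Let's go through this method step by step.

1. Reset the state: _width = _height = 0, the orientation defaults to UIImageOrientationUp, _loopCount is 0, and _frames is nil. Access to _frames is guarded by a lock, which shows that the variable can be touched from several places and must be accessed by one at a time.

2. Check whether _source is nil.

3. If _source is nil, create it from _data.

What does CGImageSourceCreateIncremental do? From the documentation (Shift+Cmd+0):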

The function CGImageSourceCreateIncremental creates an empty image source container to which you can add data later by calling the functions CGImageSourceUpdateDataProvider or CGImageSourceUpdateData. You don’t provide data when you call this function.

An incremental image is an image that is created in chunks, similar to the way large images viewed over the web are loaded piece by piece.

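In other words, it only creates an empty source to which data can be added later.

4. If _source is not nil, update it with _data.

5. Store the number of frames in _source in _frameCount.

6. If _frameCount is 0, return.

7. _finalized indicates that all of the data has been supplied (it is set from the final argument of updateData:final:). Until then only one frame is reported.

8. GIF data gets special handling: for a GIF the loop count is read.

9. For every frame, create a _YYImageDecoderFrame holding its information, such as width and height, and store it in _frames.

10. If the format is GIF, also read the delay of each frame.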
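The frame-count rule from steps 5 to 7 can be condensed into one small C function. The name `effective_frame_count` and its boolean parameters are hypothetical; they model only the branching in this method.

```c
#include <stdbool.h>
#include <stddef.h>

/* How many frames the ImageIO path reports:
   - nothing decodable: 0
   - data still arriving: only the first frame
   - PNG: 1, because animation is left to the custom APNG decoder
   - otherwise: whatever the image source reports */
static size_t effective_frame_count(size_t source_count, bool finalized,
                                    bool is_png) {
    if (source_count == 0) return 0;
    if (!finalized) return 1;
    if (is_png) return 1;
    return source_count;
}
```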

- (void)_updateSourceAPNG {

/*

APNG extends PNG format to support animation, it was supported by ImageIO

since iOS 8.

We use a custom APNG decoder to make APNG available in old system, so we

ignore the ImageIO's APNG frame info. Typically the custom decoder is a bit

faster than ImageIO.

*/

yy_png_info_release(_apngSource);

_apngSource = nil;

[self _updateSourceImageIO]; // decode first frame

if (_frameCount == 0) return; // png decode failed

if (!_finalized) return; // ignore multi-frame before finalized

yy_png_info *apng = yy_png_info_create(_data.bytes, (uint32_t)_data.length);

if (!apng) return; // apng decode failed

if (apng->apng_frame_num == 0 ||

(apng->apng_frame_num == 1 && apng->apng_first_frame_is_cover)) {

yy_png_info_release(apng);

return; // no animation

}

if (_source) { // apng decode succeed, no longer need image souce

CFRelease(_source);

_source = NULL;

}

uint32_t canvasWidth = apng->header.width;

uint32_t canvasHeight = apng->header.height;

NSMutableArray *frames = [NSMutableArray new];

BOOL needBlend = NO;

uint32_t lastBlendIndex = 0;

for (uint32_t i = 0; i < apng->apng_frame_num; i++) {

_YYImageDecoderFrame *frame = [_YYImageDecoderFrame new];

[frames addObject:frame];

yy_png_frame_info *fi = apng->apng_frames + i;

frame.index = i;

frame.duration = yy_png_delay_to_seconds(fi->frame_control.delay_num, fi->frame_control.delay_den);

frame.hasAlpha = YES;

frame.width = fi->frame_control.width;

frame.height = fi->frame_control.height;

frame.offsetX = fi->frame_control.x_offset;

frame.offsetY = canvasHeight - fi->frame_control.y_offset - fi->frame_control.height;

BOOL sizeEqualsToCanvas = (frame.width == canvasWidth && frame.height == canvasHeight);

BOOL offsetIsZero = (fi->frame_control.x_offset == 0 && fi->frame_control.y_offset == 0);

frame.isFullSize = (sizeEqualsToCanvas && offsetIsZero);

switch (fi->frame_control.dispose_op) {

case YY_PNG_DISPOSE_OP_BACKGROUND: {

frame.dispose = YYImageDisposeBackground;

} break;

case YY_PNG_DISPOSE_OP_PREVIOUS: {

frame.dispose = YYImageDisposePrevious;

} break;

default: {

frame.dispose = YYImageDisposeNone;

} break;

}

switch (fi->frame_control.blend_op) {

case YY_PNG_BLEND_OP_OVER: {

frame.blend = YYImageBlendOver;

} break;

default: {

frame.blend = YYImageBlendNone;

} break;

}

if (frame.blend == YYImageBlendNone && frame.isFullSize) {

frame.blendFromIndex = i;

if (frame.dispose != YYImageDisposePrevious) lastBlendIndex = i;

} else {

if (frame.dispose == YYImageDisposeBackground && frame.isFullSize) {

frame.blendFromIndex = lastBlendIndex;

lastBlendIndex = i + 1;

} else {

frame.blendFromIndex = lastBlendIndex;

}

}

if (frame.index != frame.blendFromIndex) needBlend = YES;

}

_width = canvasWidth;

_height = canvasHeight;

_frameCount = frames.count;

_loopCount = apng->apng_loop_num;

_needBlend = needBlend;

_apngSource = apng;

dispatch_semaphore_wait(_framesLock, DISPATCH_TIME_FOREVER);

_frames = frames;

dispatch_semaphore_signal(_framesLock);

}

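This method handles APNG specifically and extracts its frames.

It parses the APNG data and wraps each frame's information in a _YYImageDecoderFrame.

The APNG parsing itself is not covered in detail here; it works on the chunks that APNG adds to PNG, which you can look up separately.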
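The least obvious part of the loop is how blendFromIndex is chosen. The sketch below reproduces just that bookkeeping in plain C with hypothetical type and function names; it is a restatement of the loop above under those names, not a separate algorithm.

```c
#include <stdbool.h>
#include <stdint.h>

enum dispose_op { DISPOSE_NONE, DISPOSE_BACKGROUND, DISPOSE_PREVIOUS };
enum blend_op   { BLEND_NONE, BLEND_OVER };

struct frame_info {
    enum dispose_op dispose;
    enum blend_op   blend;
    bool            full_size;   /* covers the whole canvas at offset 0,0 */
    uint32_t        blend_from;  /* first frame needed to rebuild this one */
};

/* Fills in blend_from for every frame and reports whether any frame
   depends on an earlier one. */
static bool assign_blend_from(struct frame_info *frames, uint32_t count) {
    bool need_blend = false;
    uint32_t last = 0;  /* most recent frame that starts from a clean canvas */
    for (uint32_t i = 0; i < count; i++) {
        struct frame_info *f = &frames[i];
        if (f->blend == BLEND_NONE && f->full_size) {
            /* Overwrites the entire canvas: it depends on nothing earlier. */
            f->blend_from = i;
            if (f->dispose != DISPOSE_PREVIOUS) last = i;
        } else {
            f->blend_from = last;
            /* A full-size frame disposed to background leaves an empty
               canvas, so the next frame starts a new chain. */
            if (f->dispose == DISPOSE_BACKGROUND && f->full_size) last = i + 1;
        }
        if (f->blend_from != i) need_blend = true;
    }
    return need_blend;
}
```

A frame whose blend_from equals its own index can be drawn on an empty canvas; any other frame needs the frames from blend_from onward to be replayed first.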

- (void)_updateSourceWebP

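This method parses WebP and is not covered here. It uses the API of WebP.framework.

From the analysis above, the class parses every image into one uniform representation.

_YYImageDecoderFrame represents one frame of an image, and _frames holds all of the frames.

That completes the parsing. Next, the APIs for fetching frames.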

- (YYImageFrame *)frameAtIndex:(NSUInteger)index decodeForDisplay:(BOOL)decodeForDisplay {

YYImageFrame *result = nil;

pthread_mutex_lock(&_lock);

result = [self _frameAtIndex:index decodeForDisplay:decodeForDisplay];

pthread_mutex_unlock(&_lock);

return result;

}

This method returns a YYImageFrame. That is the class exposed to callers, while _YYImageDecoderFrame is the internal parsing class; the two correspond to each other.

- (YYImageFrame *)_frameAtIndex:(NSUInteger)index decodeForDisplay:(BOOL)decodeForDisplay {

if (index >= _frames.count) return 0;

_YYImageDecoderFrame *frame = [(_YYImageDecoderFrame *)_frames[index] copy];

BOOL decoded = NO;

BOOL extendToCanvas = NO;

if (_type != YYImageTypeICO && decodeForDisplay) { // ICO contains multi-size frame and should not extend to canvas.

extendToCanvas = YES;

}

if (!_needBlend) {

CGImageRef imageRef = [self _newUnblendedImageAtIndex:index extendToCanvas:extendToCanvas decoded:&decoded];

if (!imageRef) return nil;

if (decodeForDisplay && !decoded) {

CGImageRef imageRefDecoded = YYCGImageCreateDecodedCopy(imageRef, YES);

if (imageRefDecoded) {

CFRelease(imageRef);

imageRef = imageRefDecoded;

decoded = YES;

}

}

UIImage *image = [UIImage imageWithCGImage:imageRef scale:_scale orientation:_orientation];

CFRelease(imageRef);

if (!image) return nil;

image.isDecodedForDisplay = decoded;

frame.image = image;

return frame;

}

// blend

if (![self _createBlendContextIfNeeded]) return nil;

CGImageRef imageRef = NULL;

if (_blendFrameIndex + 1 == frame.index) {

imageRef = [self _newBlendedImageWithFrame:frame];

_blendFrameIndex = index;

} else { // should draw canvas from previous frame

_blendFrameIndex = NSNotFound;

CGContextClearRect(_blendCanvas, CGRectMake(0, 0, _width, _height));

if (frame.blendFromIndex == frame.index) {

CGImageRef unblendedImage = [self _newUnblendedImageAtIndex:index extendToCanvas:NO decoded:NULL];

if (unblendedImage) {

CGContextDrawImage(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height), unblendedImage);

CFRelease(unblendedImage);

}

imageRef = CGBitmapContextCreateImage(_blendCanvas);

if (frame.dispose == YYImageDisposeBackground) {

CGContextClearRect(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height));

}

_blendFrameIndex = index;

} else { // canvas is not ready

for (uint32_t i = (uint32_t)frame.blendFromIndex; i <= (uint32_t)frame.index; i++) {

if (i == frame.index) {

if (!imageRef) imageRef = [self _newBlendedImageWithFrame:frame];

} else {

[self _blendImageWithFrame:_frames[i]];

}

}

_blendFrameIndex = index;

}

}

if (!imageRef) return nil;

UIImage *image = [UIImage imageWithCGImage:imageRef scale:_scale orientation:_orientation];

CFRelease(imageRef);

if (!image) return nil;

image.isDecodedForDisplay = YES;

frame.image = image;

if (extendToCanvas) {

frame.width = _width;

frame.height = _height;

frame.offsetX = 0;

frame.offsetY = 0;

frame.dispose = YYImageDisposeNone;

frame.blend = YYImageBlendNone;

}

return frame;

}

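This method returns the frame at the given index of the animation.

1. Copy the _YYImageDecoderFrame at index.

2. If _needBlend is NO, get the image, decode it if requested, and return the frame.

3. If _needBlend is YES, make sure the _blendCanvas bitmap context exists.

4. If _blendFrameIndex + 1 == frame.index, the canvas already holds the result of the preceding frame, so the frame is blended directly with - (CGImageRef)_newBlendedImageWithFrame:(_YYImageDecoderFrame *)frame.

5. Otherwise clear _blendCanvas.

6. If the frame's blendFromIndex equals index, draw the frame on the empty canvas and take the image directly.

7. Otherwise blend the frames from blendFromIndex up to index onto the canvas, one after another, and take the image for index.

8. Finally return the frame.

Step 7 may be puzzling at first: why blend several frames together? Here is the reason.

To save space, an animation frame often omits the parts that are identical to the previous frame and stores only what changed. To display such a frame, the frames have to be blended again, that is, drawn on top of each other in order.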
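The cost of random access follows directly from this. A rough C sketch, with the hypothetical names `draws_needed` and `NO_FRAME`, counts how many frames have to be drawn to produce a given frame, depending on what the canvas currently holds:

```c
#include <stddef.h>
#include <stdint.h>

/* Sentinel meaning "the canvas holds no usable frame". */
#define NO_FRAME SIZE_MAX

/* canvas_index: the frame whose result is currently on the canvas.
   blend_from:   the first frame needed to rebuild frame `index`.
   Sequential playback costs one draw; a jump costs a replay of the
   whole chain from blend_from up to index. */
static size_t draws_needed(size_t canvas_index, size_t blend_from,
                           size_t index) {
    if (canvas_index != NO_FRAME && canvas_index + 1 == index) return 1;
    return index - blend_from + 1;
}
```

Playing frames in order is therefore cheap, while seeking to an arbitrary frame of a delta-encoded animation can mean redrawing many earlier frames.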

A few new methods appear here.

- (CGImageRef)_newBlendedImageWithFrame:(_YYImageDecoderFrame *)frame CF_RETURNS_RETAINED{

CGImageRef imageRef = NULL;

if (frame.dispose == YYImageDisposePrevious) {

if (frame.blend == YYImageBlendOver) {

CGImageRef previousImage = CGBitmapContextCreateImage(_blendCanvas);

CGImageRef unblendImage = [self _newUnblendedImageAtIndex:frame.index extendToCanvas:NO decoded:NULL];

if (unblendImage) {

CGContextDrawImage(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height), unblendImage);

CFRelease(unblendImage);

}

imageRef = CGBitmapContextCreateImage(_blendCanvas);

CGContextClearRect(_blendCanvas, CGRectMake(0, 0, _width, _height));

if (previousImage) {

CGContextDrawImage(_blendCanvas, CGRectMake(0, 0, _width, _height), previousImage);

CFRelease(previousImage);

}

} else {

CGImageRef previousImage = CGBitmapContextCreateImage(_blendCanvas);

CGImageRef unblendImage = [self _newUnblendedImageAtIndex:frame.index extendToCanvas:NO decoded:NULL];

if (unblendImage) {

CGContextClearRect(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height));

CGContextDrawImage(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height), unblendImage);

CFRelease(unblendImage);

}

imageRef = CGBitmapContextCreateImage(_blendCanvas);

CGContextClearRect(_blendCanvas, CGRectMake(0, 0, _width, _height));

if (previousImage) {

CGContextDrawImage(_blendCanvas, CGRectMake(0, 0, _width, _height), previousImage);

CFRelease(previousImage);

}

}

} else if (frame.dispose == YYImageDisposeBackground) {

if (frame.blend == YYImageBlendOver) {

CGImageRef unblendImage = [self _newUnblendedImageAtIndex:frame.index extendToCanvas:NO decoded:NULL];

if (unblendImage) {

CGContextDrawImage(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height), unblendImage);

CFRelease(unblendImage);

}

imageRef = CGBitmapContextCreateImage(_blendCanvas);

CGContextClearRect(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height));

} else {

CGImageRef unblendImage = [self _newUnblendedImageAtIndex:frame.index extendToCanvas:NO decoded:NULL];

if (unblendImage) {

CGContextClearRect(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height));

CGContextDrawImage(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height), unblendImage);

CFRelease(unblendImage);

}

imageRef = CGBitmapContextCreateImage(_blendCanvas);

CGContextClearRect(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height));

}

} else { // no dispose

if (frame.blend == YYImageBlendOver) {

CGImageRef unblendImage = [self _newUnblendedImageAtIndex:frame.index extendToCanvas:NO decoded:NULL];

if (unblendImage) {

CGContextDrawImage(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height), unblendImage);

CFRelease(unblendImage);

}

imageRef = CGBitmapContextCreateImage(_blendCanvas);

} else {

CGImageRef unblendImage = [self _newUnblendedImageAtIndex:frame.index extendToCanvas:NO decoded:NULL];

if (unblendImage) {

CGContextClearRect(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height));

CGContextDrawImage(_blendCanvas, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height), unblendImage);

CFRelease(unblendImage);

}

imageRef = CGBitmapContextCreateImage(_blendCanvas);

}

}

return imageRef;

}

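1. When frame.dispose == YYImageDisposePrevious:

(1) If frame.blend == YYImageBlendOver, the current frame is composited on top of the existing _blendCanvas and the result is captured as the output image. The canvas is then restored to its earlier contents, previousImage.

(2) For any other blend value, the frame's region of _blendCanvas is cleared first, so the frame overwrites that region instead of blending into it, and the result is captured. The canvas is then restored to previousImage as well.

2. When frame.dispose == YYImageDisposeBackground:

(1) If frame.blend == YYImageBlendOver, the frame is composited onto the canvas and the result is captured. Afterwards the frame's region of _blendCanvas is cleared.

(2) For any other blend value, the frame's region of _blendCanvas is cleared, the frame is drawn, the result is captured, and the region is cleared again.

3. For any other frame.dispose value (no disposal):

(1) If frame.blend == YYImageBlendOver, the frame is composited onto the canvas and the result is captured.

(2) For any other blend value, the frame's region of _blendCanvas is cleared before the frame is drawn and captured.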

Two enums are involved here.

typedef NS_ENUM(NSUInteger, YYImageBlendOperation) {

/**

All color components of the frame, including alpha, overwrite the current

contents of the frame's canvas region.

*/

YYImageBlendNone = 0,

/**

The frame should be composited onto the output buffer based on its alpha.

*/

YYImageBlendOver,

};

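YYImageBlendOver means _blendCanvas is used as the canvas and its existing contents are not cleared before the frame is drawn, so the frame is alpha-composited over what is already there. With the default, YYImageBlendNone, the frame's region of _blendCanvas is cleared before the frame is drawn.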

typedef NS_ENUM(NSUInteger, YYImageDisposeMethod) {

/**

No disposal is done on this frame before rendering the next; the contents

of the canvas are left as is.

*/

YYImageDisposeNone = 0,

/**

The frame's region of the canvas is to be cleared to fully transparent black

before rendering the next frame.

*/

YYImageDisposeBackground,

/**

The frame's region of the canvas is to be reverted to the previous contents

before rendering the next frame.

*/

YYImageDisposePrevious,

};

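This enum describes the state _blendCanvas is left in after the frame has been drawn.

YYImageDisposeBackground: clear the frame's region of _blendCanvas.

YYImageDisposePrevious: restore _blendCanvas to the state it had before the frame was drawn.

YYImageDisposeNone: leave _blendCanvas untouched.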
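The interaction of the two enums can be sketched on a toy model. The following C code is only an illustration, not YYImage's implementation: the canvas is a hypothetical 1-D array of pixels where 0 means transparent, and the names `render_frame`, `frame_t`, `dispose_t` and `blend_t` are made up for this example.

```c
#include <assert.h>
#include <string.h>

#define CANVAS_LEN 8  /* hypothetical 1-D canvas; 0 means a transparent pixel */

typedef enum { DISPOSE_NONE, DISPOSE_BACKGROUND, DISPOSE_PREVIOUS } dispose_t;
typedef enum { BLEND_NONE, BLEND_OVER } blend_t;

typedef struct {
    int offset;         /* where the frame starts on the canvas */
    int width;          /* number of pixels the frame covers */
    const int *pixels;  /* frame pixels; 0 is transparent */
    dispose_t dispose;
    blend_t blend;
} frame_t;

/* Draw one frame. `out` receives what is shown for this frame;
   `canvas` is left in the state the next frame will start from. */
static void render_frame(int canvas[CANVAS_LEN], const frame_t *f, int out[CANVAS_LEN]) {
    int previous[CANVAS_LEN];
    assert(f->offset >= 0 && f->width >= 0 && f->offset + f->width <= CANVAS_LEN);
    memcpy(previous, canvas, sizeof previous);      /* snapshot for DISPOSE_PREVIOUS */

    for (int i = 0; i < f->width; i++) {
        int *dst = &canvas[f->offset + i];
        if (f->blend == BLEND_NONE) *dst = 0;        /* overwrite: clear the region first */
        if (f->pixels[i] != 0) *dst = f->pixels[i];  /* an opaque frame pixel wins */
    }
    memcpy(out, canvas, sizeof previous);            /* this is the displayed image */

    if (f->dispose == DISPOSE_BACKGROUND) {
        for (int i = 0; i < f->width; i++) canvas[f->offset + i] = 0;
    } else if (f->dispose == DISPOSE_PREVIOUS) {
        memcpy(canvas, previous, sizeof previous);
    }
}
```

Blend decides how the frame is put onto the canvas; dispose decides what the canvas looks like for the following frame. That is why the original method captures imageRef between the two steps.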

- (CGImageRef)_newUnblendedImageAtIndex:(NSUInteger)index

extendToCanvas:(BOOL)extendToCanvas

decoded:(BOOL *)decoded CF_RETURNS_RETAINED {

if (!_finalized && index > 0) return NULL;

if (_frames.count <= index) return NULL;

_YYImageDecoderFrame *frame = _frames[index];

if (_source) {

CGImageRef imageRef = CGImageSourceCreateImageAtIndex(_source, index, (CFDictionaryRef)@{(id)kCGImageSourceShouldCache:@(YES)});

if (imageRef && extendToCanvas) {

size_t width = CGImageGetWidth(imageRef);

size_t height = CGImageGetHeight(imageRef);

if (width == _width && height == _height) {

CGImageRef imageRefExtended = YYCGImageCreateDecodedCopy(imageRef, YES);

if (imageRefExtended) {

CFRelease(imageRef);

imageRef = imageRefExtended;

if (decoded) *decoded = YES;

}

} else {

CGContextRef context = CGBitmapContextCreate(NULL, _width, _height, 8, 0, YYCGColorSpaceGetDeviceRGB(), kCGBitmapByteOrder32Host | kCGImageAlphaPremultipliedFirst);

if (context) {

CGContextDrawImage(context, CGRectMake(0, _height - height, width, height), imageRef);

CGImageRef imageRefExtended = CGBitmapContextCreateImage(context);

CFRelease(context);

if (imageRefExtended) {

CFRelease(imageRef);

imageRef = imageRefExtended;

if (decoded) *decoded = YES;

}

}

}

}

return imageRef;

}

if (_apngSource) {

uint32_t size = 0;

uint8_t *bytes = yy_png_copy_frame_data_at_index(_data.bytes, _apngSource, (uint32_t)index, &size);

if (!bytes) return NULL;

CGDataProviderRef provider = CGDataProviderCreateWithData(bytes, bytes, size, YYCGDataProviderReleaseDataCallback);

if (!provider) {

free(bytes);

return NULL;

}

bytes = NULL; // hold by provider

CGImageSourceRef source = CGImageSourceCreateWithDataProvider(provider, NULL);

if (!source) {

CFRelease(provider);

return NULL;

}

CFRelease(provider);

if(CGImageSourceGetCount(source) < 1) {

CFRelease(source);

return NULL;

}

CGImageRef imageRef = CGImageSourceCreateImageAtIndex(source, 0, (CFDictionaryRef)@{(id)kCGImageSourceShouldCache:@(YES)});

CFRelease(source);

if (!imageRef) return NULL;

if (extendToCanvas) {

CGContextRef context = CGBitmapContextCreate(NULL, _width, _height, 8, 0, YYCGColorSpaceGetDeviceRGB(), kCGBitmapByteOrder32Host | kCGImageAlphaPremultipliedFirst); //bgrA

if (context) {

CGContextDrawImage(context, CGRectMake(frame.offsetX, frame.offsetY, frame.width, frame.height), imageRef);

CFRelease(imageRef);

imageRef = CGBitmapContextCreateImage(context);

CFRelease(context);

if (decoded) *decoded = YES;

}

}

return imageRef;

}

#if YYIMAGE_WEBP_ENABLED

if (_webpSource) {

WebPIterator iter;

if (!WebPDemuxGetFrame(_webpSource, (int)(index + 1), &iter)) return NULL; // demux webp frame data

// frame numbers are one-based in webp -----------^

int frameWidth = iter.width;

int frameHeight = iter.height;

if (frameWidth < 1 || frameHeight < 1) return NULL;

int width = extendToCanvas ? (int)_width : frameWidth;

int height = extendToCanvas ? (int)_height : frameHeight;

if (width > _width || height > _height) return NULL;

const uint8_t *payload = iter.fragment.bytes;

size_t payloadSize = iter.fragment.size;

WebPDecoderConfig config;

if (!WebPInitDecoderConfig(&config)) {

WebPDemuxReleaseIterator(&iter);

return NULL;

}

if (WebPGetFeatures(payload , payloadSize, &config.input) != VP8_STATUS_OK) {

WebPDemuxReleaseIterator(&iter);

return NULL;

}

size_t bitsPerComponent = 8;

size_t bitsPerPixel = 32;

size_t bytesPerRow = YYImageByteAlign(bitsPerPixel / 8 * width, 32);

size_t length = bytesPerRow * height;

CGBitmapInfo bitmapInfo = kCGBitmapByteOrder32Host | kCGImageAlphaPremultipliedFirst; //bgrA

void *pixels = calloc(1, length);

if (!pixels) {

WebPDemuxReleaseIterator(&iter);

return NULL;

}

config.output.colorspace = MODE_bgrA;

config.output.is_external_memory = 1;

config.output.u.RGBA.rgba = pixels;

config.output.u.RGBA.stride = (int)bytesPerRow;

config.output.u.RGBA.size = length;

VP8StatusCode result = WebPDecode(payload, payloadSize, &config); // decode

if ((result != VP8_STATUS_OK) && (result != VP8_STATUS_NOT_ENOUGH_DATA)) {

WebPDemuxReleaseIterator(&iter);

free(pixels);

return NULL;

}

WebPDemuxReleaseIterator(&iter);

if (extendToCanvas && (iter.x_offset != 0 || iter.y_offset != 0)) {

void *tmp = calloc(1, length);

if (tmp) {

vImage_Buffer src = {pixels, height, width, bytesPerRow};

vImage_Buffer dest = {tmp, height, width, bytesPerRow};

vImage_CGAffineTransform transform = {1, 0, 0, 1, iter.x_offset, -iter.y_offset};

uint8_t backColor[4] = {0};

vImage_Error error = vImageAffineWarpCG_ARGB8888(&src, &dest, NULL, &transform, backColor, kvImageBackgroundColorFill);

if (error == kvImageNoError) {

memcpy(pixels, tmp, length);

}

free(tmp);

}

}

CGDataProviderRef provider = CGDataProviderCreateWithData(pixels, pixels, length, YYCGDataProviderReleaseDataCallback);

if (!provider) {

free(pixels);

return NULL;

}

pixels = NULL; // hold by provider

CGImageRef image = CGImageCreate(width, height, bitsPerComponent, bitsPerPixel, bytesPerRow, YYCGColorSpaceGetDeviceRGB(), bitmapInfo, provider, NULL, false, kCGRenderingIntentDefault);

CFRelease(provider);

if (decoded) *decoded = YES;

return image;

}

#endif

return NULL;

}

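As the name suggests, this method returns a single frame that has not been blended with any other frame.

1. Fetch the _YYImageDecoderFrame for the index.

2. If _source is non-nil, create the image through ImageIO, with kCGImageSourceShouldCache set to YES.

(1) If extendToCanvas is YES, the image is redrawn into a canvas-sized bitmap.

3. If _apngSource is non-nil, build the image from the extracted frame data. This branch is specific to APNG.

4. If _webpSource is non-nil, decode the frame into a pixel buffer and wrap it in an image. This branch is specific to WebP.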
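In the WebP branch the pixel buffer is sized with `YYImageByteAlign(bitsPerPixel / 8 * width, 32)`. A minimal sketch of that calculation follows, assuming YYImageByteAlign rounds a size up to the next multiple of the alignment (its definition is not shown in this section). The helper names `align_up` and `bitmap_length` are hypothetical.

```c
#include <assert.h>
#include <stddef.h>

/* Round `size` up to the next multiple of `alignment` (alignment > 0). */
static size_t align_up(size_t size, size_t alignment) {
    assert(alignment > 0);
    return ((size + alignment - 1) / alignment) * alignment;
}

/* Bytes needed for a 32-bit-per-pixel bitmap whose rows are padded to 32 bytes. */
static size_t bitmap_length(size_t width, size_t height) {
    size_t bytes_per_row = align_up(4 * width, 32);  /* 4 bytes per pixel */
    return bytes_per_row * height;
}
```

For example, a 10-pixel-wide row needs 40 bytes of pixel data but occupies 64 bytes after padding, so the buffer length is computed from the padded row size rather than from width * 4.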

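That covers the main points. The low-level decoding details are not covered here; leave a comment if anything is unclear and we can work through it together. There is also YYImageEncoder, which performs the inverse operation, and it is not covered for now.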

A diagram helps to see how the pieces are organized. The structure looks roughly like this:

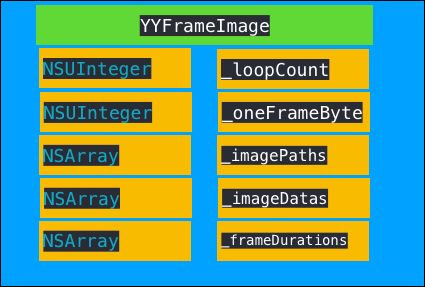

YYFrameImage

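This class holds the data needed to play a frame-by-frame animation and implements the YYAnimatedImage protocol.

Structure

Initialization

There are four initializers: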

- (nullable instancetype)initWithImagePaths:(NSArray*)paths oneFrameDuration:(NSTimeInterval)oneFrameDuration loopCount:(NSUInteger)loopCount;

- (nullable instancetype)initWithImagePaths:(NSArray*)paths frameDurations:(NSArray*)frameDurations loopCount:(NSUInteger)loopCount;

- (nullable instancetype)initWithImageDataArray:(NSArray*)dataArray oneFrameDuration:(NSTimeInterval)oneFrameDuration loopCount:(NSUInteger)loopCount;

- (nullable instancetype)initWithImageDataArray:(NSArray *)dataArray frameDurations:(NSArray *)frameDurations loopCount:(NSUInteger)loopCount;

Only the last initializer needs a close look; the others follow the same pattern.

- (instancetype)initWithImageDataArray:(NSArray *)dataArray frameDurations:(NSArray *)frameDurations loopCount:(NSUInteger)loopCount {

if (dataArray.count == 0) return nil;

if (dataArray.count != frameDurations.count) return nil;

NSData *firstData = dataArray[0];

CGFloat scale = [UIScreen mainScreen].scale;

UIImage *firstCG = [[[UIImage alloc] initWithData:firstData] imageByDecoded];

self = [self initWithCGImage:firstCG.CGImage scale:scale orientation:UIImageOrientationUp];

if (!self) return nil;

long frameByte = CGImageGetBytesPerRow(firstCG.CGImage) * CGImageGetHeight(firstCG.CGImage);

_oneFrameBytes = (NSUInteger)frameByte;

_imageDatas = dataArray.copy;

_frameDurations = frameDurations.copy;

_loopCount = loopCount;

return self;

}

It validates the arguments and assigns the instance variables.

Next, look at how the YYAnimatedImage protocol methods are implemented.

- (UIImage *)animatedImageFrameAtIndex:(NSUInteger)index {

if (_imagePaths) {

if (index >= _imagePaths.count) return nil;

NSString *path = _imagePaths[index];

CGFloat scale = [path pathScale];

NSData *data = [NSData dataWithContentsOfFile:path];

return [[UIImage imageWithData:data scale:scale] imageByDecoded];

} else if (_imageDatas) {

if (index >= _imageDatas.count) return nil;

NSData *data = _imageDatas[index];

return [[UIImage imageWithData:data scale:[UIScreen mainScreen].scale] imageByDecoded];

} else {

return index == 0 ? self : nil;

}

}

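The image returned here is created on demand for every call. If speed were the priority, one could trade space for time: convert the raw data into an array of images up front and return them directly (although creation would then be slower). My guess is that this method is called on a background thread. This class works together with YYAnimatedImageView, so let's see how YYAnimatedImageView is implemented.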

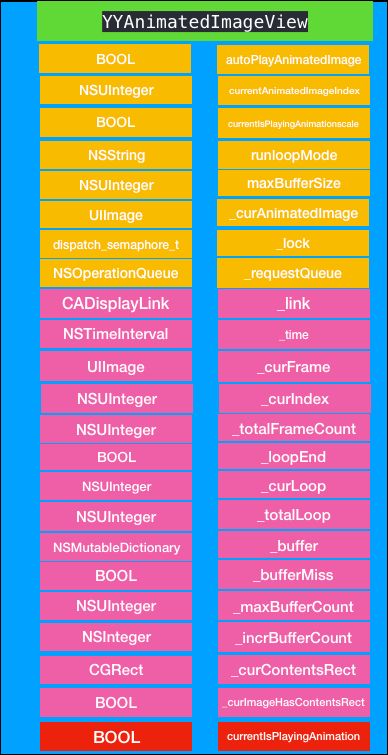

YYAnimatedImageView

This class is dedicated to playing animations.

Initialization

- (instancetype)init {

self = [super init];

_runloopMode = NSRunLoopCommonModes;

_autoPlayAnimatedImage = YES;

return self;

}

- (instancetype)initWithFrame:(CGRect)frame {

self = [super initWithFrame:frame];

_runloopMode = NSRunLoopCommonModes;

_autoPlayAnimatedImage = YES;

return self;

}

These are the plain initializers.

- (instancetype)initWithImage:(UIImage *)image {

self = [super init];

_runloopMode = NSRunLoopCommonModes;

_autoPlayAnimatedImage = YES;

self.frame = (CGRect) {CGPointZero, image.size };

self.image = image;

return self;

}

- (instancetype)initWithImage:(UIImage *)image highlightedImage:(UIImage *)highlightedImage {

self = [super init];

_runloopMode = NSRunLoopCommonModes;

_autoPlayAnimatedImage = YES;

CGSize size = image ? image.size : highlightedImage.size;

self.frame = (CGRect) {CGPointZero, size };

self.image = image;

self.highlightedImage = highlightedImage;

return self;

}

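These two initializers take an image.

Note that this class overrides quite a few methods.

self.image = image calls the overridden setImage: method.

The four overridden image setters: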

- (void)setImage:(UIImage *)image {

if (self.image == image) return;

[self setImage:image withType:YYAnimatedImageTypeImage];

}

- (void)setHighlightedImage:(UIImage *)highlightedImage {

if (self.highlightedImage == highlightedImage) return;

[self setImage:highlightedImage withType:YYAnimatedImageTypeHighlightedImage];

}

- (void)setAnimationImages:(NSArray *)animationImages {

if (self.animationImages == animationImages) return;

[self setImage:animationImages withType:YYAnimatedImageTypeImages];

}

- (void)setHighlightedAnimationImages:(NSArray *)highlightedAnimationImages {

if (self.highlightedAnimationImages == highlightedAnimationImages) return;

[self setImage:highlightedAnimationImages withType:YYAnimatedImageTypeHighlightedImages];

}

Setting the image is where the animation really begins.

- (void)setImage:(id)image withType:(YYAnimatedImageType)type {

[self stopAnimating];

if (_link) [self resetAnimated];

_curFrame = nil;

switch (type) {

case YYAnimatedImageTypeNone: break;

case YYAnimatedImageTypeImage: super.image = image; break;

case YYAnimatedImageTypeHighlightedImage: super.highlightedImage = image; break;

case YYAnimatedImageTypeImages: super.animationImages = image; break;

case YYAnimatedImageTypeHighlightedImages: super.highlightedAnimationImages = image; break;

}

[self imageChanged];

}

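The image is assigned to the matching super property according to type.

If _link exists, -resetAnimated is called. Setting _link aside for now, look at the following method.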

- (void)imageChanged {

YYAnimatedImageType newType = [self currentImageType];

id newVisibleImage = [self imageForType:newType];

NSUInteger newImageFrameCount = 0;

BOOL hasContentsRect = NO;

if ([newVisibleImage isKindOfClass:[UIImage class]] && [newVisibleImage conformsToProtocol:@protocol(YYAnimatedImage)]) {

newImageFrameCount = ((UIImage*) newVisibleImage).animatedImageFrameCount;

if (newImageFrameCount > 1) {

hasContentsRect = [((UIImage*) newVisibleImage) respondsToSelector:@selector(animatedImageContentsRectAtIndex:)];

}

}

if (!hasContentsRect && _curImageHasContentsRect) {

if (!CGRectEqualToRect(self.layer.contentsRect, CGRectMake(0, 0, 1, 1)) ) {

[CATransaction begin];

[CATransaction setDisableActions:YES];

self.layer.contentsRect = CGRectMake(0, 0, 1, 1);

[CATransaction commit];

}

}

_curImageHasContentsRect = hasContentsRect;

if (hasContentsRect) {

CGRect rect = [((UIImage *) newVisibleImage) animatedImageContentsRectAtIndex:0];

[self setContentsRect:rect forImage:newVisibleImage];

}

if (newImageFrameCount > 1) {

[self resetAnimated];

_curAnimatedImage = newVisibleImage;

_curFrame = newVisibleImage;

_totalLoop = _curAnimatedImage.animatedImageLoopCount;

_totalFrameCount = _curAnimatedImage.animatedImageFrameCount;

[self calcMaxBufferCount];

}

[self setNeedsDisplay];

[self didMoved];

}

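1. Get the current image type.

2. Get the image for that type.

3. If the image is a UIImage (not an array) and conforms to the YYAnimatedImage protocol, read its frame count. If the frame count is greater than 1, check whether it responds to animatedImageContentsRectAtIndex:.

4. If the new image does not implement animatedImageContentsRectAtIndex: but the previous image did, reset the layer's contentsRect to the full unit rect (0, 0, 1, 1) with implicit animations disabled. This removes the cropping left over from the previous image.

5. If the new image does implement it, call animatedImageContentsRectAtIndex: with index 0 to get the rect and apply it.

6. If the frame count is greater than 1, reset the animation state and record the new animated image.

7. Mark the view as needing display.

8. Start the animation (through didMoved).

This calls the [self resetAnimated] method.

Here is its implementation: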

- (void)resetAnimated {

if (!_link) {

_lock = dispatch_semaphore_create(1);

_buffer = [NSMutableDictionary new];

_requestQueue = [[NSOperationQueue alloc] init];

_requestQueue.maxConcurrentOperationCount = 1;

_link = [CADisplayLink displayLinkWithTarget:[YYWeakProxy proxyWithTarget:self] selector:@selector(step:)];

if (_runloopMode) {

[_link addToRunLoop:[NSRunLoop mainRunLoop] forMode:_runloopMode];

}

_link.paused = YES;

[[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(didReceiveMemoryWarning:) name:UIApplicationDidReceiveMemoryWarningNotification object:nil];

[[NSNotificationCenter defaultCenter] addObserver:self selector:@selector(didEnterBackground:) name:UIApplicationDidEnterBackgroundNotification object:nil];

}

[_requestQueue cancelAllOperations];

LOCK(

if (_buffer.count) {

NSMutableDictionary *holder = _buffer;

_buffer = [NSMutableDictionary new];

dispatch_async(dispatch_get_global_queue(DISPATCH_QUEUE_PRIORITY_LOW, 0), ^{

// Capture the dictionary to global queue,

// release these images in background to avoid blocking UI thread.

[holder class];

});

}

);

_link.paused = YES;

_time = 0;

if (_curIndex != 0) {

[self willChangeValueForKey:@"currentAnimatedImageIndex"];

_curIndex = 0;

[self didChangeValueForKey:@"currentAnimatedImageIndex"];

}

_curAnimatedImage = nil;

_curFrame = nil;

_curLoop = 0;

_totalLoop = 0;

_totalFrameCount = 1;

_loopEnd = NO;

_bufferMiss = NO;

_incrBufferCount = 0;

}

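Many variables are initialized here. The key ones are _requestQueue and _link. _link is a CADisplayLink. (Why this instead of a plain timer? A CADisplayLink fires in step with the screen refresh, which suits frame-by-frame playback.)

_buffer and _curIndex are reset here as well.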

- (void)didMoved {

if (self.autoPlayAnimatedImage) {

if(self.superview && self.window) {

[self startAnimating];

} else {

[self stopAnimating];

}

}

}

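This decides whether the animation should run: with autoPlayAnimatedImage enabled, it starts only when the view has both a superview and a window, and stops otherwise.

Now for the method that starts the animation: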

- (void)startAnimating {

YYAnimatedImageType type = [self currentImageType];

if (type == YYAnimatedImageTypeImages || type == YYAnimatedImageTypeHighlightedImages) {

NSArray *images = [self imageForType:type];

if (images.count > 0) {

[super startAnimating];

self.currentIsPlayingAnimation = YES;

}

} else {

if (_curAnimatedImage && _link.paused) {

_curLoop = 0;

_loopEnd = NO;

_link.paused = NO;

self.currentIsPlayingAnimation = YES;

}

}

}

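1. When the animation is an array of images, UIImageView's built-in animation is used.

2. Otherwise, if there is a current animated image and the display link is paused, the display link is resumed and the view is marked as playing.

Next, the method that the display link fires, -step: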

- (void)step:(CADisplayLink *)link {

UIImage *image = _curAnimatedImage;

NSMutableDictionary *buffer = _buffer;

UIImage *bufferedImage = nil;

NSUInteger nextIndex = (_curIndex + 1) % _totalFrameCount;

BOOL bufferIsFull = NO;

if (!image) return;

if (_loopEnd) { // view will keep in last frame

[self stopAnimating];

return;

}

NSTimeInterval delay = 0;

if (!_bufferMiss) {

_time += link.duration;

delay = [image animatedImageDurationAtIndex:_curIndex];

if (_time < delay) return;

_time -= delay;

if (nextIndex == 0) {

_curLoop++;

if (_curLoop >= _totalLoop && _totalLoop != 0) {

_loopEnd = YES;

[self stopAnimating];

[self.layer setNeedsDisplay]; // let system call `displayLayer:` before runloop sleep

return; // stop at last frame

}

}

delay = [image animatedImageDurationAtIndex:nextIndex];

if (_time > delay) _time = delay; // do not jump over frame

}

LOCK(

bufferedImage = buffer[@(nextIndex)];

if (bufferedImage) {

if ((int)_incrBufferCount < _totalFrameCount) {

[buffer removeObjectForKey:@(nextIndex)];

}

[self willChangeValueForKey:@"currentAnimatedImageIndex"];

_curIndex = nextIndex;

[self didChangeValueForKey:@"currentAnimatedImageIndex"];

_curFrame = bufferedImage == (id)[NSNull null] ? nil : bufferedImage;

if (_curImageHasContentsRect) {

_curContentsRect = [image animatedImageContentsRectAtIndex:_curIndex];

[self setContentsRect:_curContentsRect forImage:_curFrame];

}

nextIndex = (_curIndex + 1) % _totalFrameCount;

_bufferMiss = NO;

if (buffer.count == _totalFrameCount) {

bufferIsFull = YES;

}

} else {

_bufferMiss = YES;

}

)//LOCK

if (!_bufferMiss) {

[self.layer setNeedsDisplay]; // let system call `displayLayer:` before runloop sleep

}

if (!bufferIsFull && _requestQueue.operationCount == 0) { // if some work not finished, wait for next opportunity

_YYAnimatedImageViewFetchOperation *operation = [_YYAnimatedImageViewFetchOperation new];

operation.view = self;

operation.nextIndex = nextIndex;

operation.curImage = image;

[_requestQueue addOperation:operation];

}

}

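1. Get the current animated image.

2. Get the buffer.

3. Compute the index of the next frame.

4. If the loop has ended, stop the animation.

5. Accumulate _time until it reaches the current frame's delay.

6. If the next index is 0, one full loop has completed, so check whether the loop count has been reached. If it has, stop the animation and redraw the layer's contents.

7. Look up the next frame in the buffer. If it is missing, set _bufferMiss = YES; if it is there, advance to it and update the related state.

8. If the buffer is not full and no fetch is pending, enqueue an operation to load more frames.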
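The timing part of these steps (5 and 6) can be sketched in isolation. This is a simplified C model, not the view's actual code: it assumes the next frame is always available (no buffer miss), and `player_t` and `player_step` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

typedef struct {
    const double *delays;  /* per-frame display time, in seconds */
    size_t frame_count;
    size_t total_loops;    /* 0 means loop forever */
    size_t cur_index;
    size_t cur_loop;
    double time;           /* time accumulated on the current frame */
    int loop_end;
} player_t;

/* Advance by one display tick of `tick` seconds.
   Returns 1 if the player moved to the next frame, 0 otherwise. */
static int player_step(player_t *p, double tick) {
    assert(p->frame_count > 0);
    if (p->loop_end) return 0;
    size_t next = (p->cur_index + 1) % p->frame_count;

    p->time += tick;
    double delay = p->delays[p->cur_index];
    if (p->time < delay) return 0;         /* current frame is still showing */
    p->time -= delay;

    if (next == 0) {                       /* wrapped around: one loop finished */
        p->cur_loop++;
        if (p->total_loops != 0 && p->cur_loop >= p->total_loops) {
            p->loop_end = 1;               /* stay on the last frame */
            return 0;
        }
    }
    delay = p->delays[next];
    if (p->time > delay) p->time = delay;  /* never jump over a frame */
    p->cur_index = next;
    return 1;
}
```

Carrying the leftover time into the next frame keeps playback speed correct on average, while the clamp prevents a long stall from skipping frames.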

Let's see what the _YYAnimatedImageViewFetchOperation does on its queue.

- (void)main {

__strong YYAnimatedImageView *view = _view;

if (!view) return;

if ([self isCancelled]) return;

view->_incrBufferCount++;

if (view->_incrBufferCount == 0) [view calcMaxBufferCount];

if (view->_incrBufferCount > (NSInteger)view->_maxBufferCount) {

view->_incrBufferCount = view->_maxBufferCount;

}

NSUInteger idx = _nextIndex;

NSUInteger max = view->_incrBufferCount < 1 ? 1 : view->_incrBufferCount;

NSUInteger total = view->_totalFrameCount;

view = nil;

for (int i = 0; i < max; i++, idx++) {

@autoreleasepool {

if (idx >= total) idx = 0;

if ([self isCancelled]) break;

__strong YYAnimatedImageView *view = _view;

if (!view) break;

LOCK_VIEW(BOOL miss = (view->_buffer[@(idx)] == nil));

if (miss) {

UIImage *img = [_curImage animatedImageFrameAtIndex:idx];

img = img.imageByDecoded;

if ([self isCancelled]) break;

LOCK_VIEW(view->_buffer[@(idx)] = img ? img : [NSNull null]);

view = nil;

}

}

}

}

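This is the operation's main method. Its body runs synchronously, on the operation queue rather than the main thread.

1. Get the YYAnimatedImageView.

2. Increase the buffer count.

3. Clamp the buffer count to the maximum.

4. Decode the missing frames and store them in the buffer.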
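The fill loop in step 4 walks forward from the next index and wraps around at the end of the animation. Below is a minimal C sketch of that traversal. It leaves out cancellation and locking, uses a flag array in place of the real dictionary, and `prefetch` is a hypothetical name.

```c
#include <assert.h>
#include <stddef.h>

/* Fill up to `max` slots starting at `next_index`, wrapping at `total`.
   `buffered[i]` is nonzero when frame i is already in the buffer.
   Returns the number of frames that had to be decoded. */
static size_t prefetch(int *buffered, size_t total, size_t next_index, size_t max) {
    assert(total > 0);
    size_t decoded = 0;
    size_t idx = next_index;
    for (size_t i = 0; i < max; i++, idx++) {
        if (idx >= total) idx = 0;   /* wrap to the first frame */
        if (!buffered[idx]) {
            buffered[idx] = 1;       /* stands in for decode + store */
            decoded++;
        }
    }
    return decoded;
}
```

Frames that are already buffered still count against `max`, so one operation looks at a bounded window ahead of the playhead instead of scanning the whole animation.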

- (void)calcMaxBufferCount {

int64_t bytes = (int64_t)_curAnimatedImage.animatedImageBytesPerFrame;

if (bytes == 0) bytes = 1024;

int64_t total = [UIDevice currentDevice].memoryTotal;

int64_t free = [UIDevice currentDevice].memoryFree;

int64_t max = MIN(total * 0.2, free * 0.6);

max = MAX(max, BUFFER_SIZE);

if (_maxBufferSize) max = max > _maxBufferSize ? _maxBufferSize : max;

double maxBufferCount = (double)max / (double)bytes;

maxBufferCount = YY_CLAMP(maxBufferCount, 1, 512);

_maxBufferCount = maxBufferCount;

}

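This method computes the maximum number of frames the buffer may hold. The memory budget is the smaller of 20% of total memory and 60% of free memory, raised to at least BUFFER_SIZE and optionally capped by _maxBufferSize; dividing it by the bytes per frame and clamping to the range 1 to 512 gives the frame count. I am not sure what the advantage of these particular constants is.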
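The same arithmetic as a standalone C sketch, with the device memory figures passed in as parameters. Two assumptions are made loudly here: the floor `MIN_BUFFER_SIZE` is taken to be 10 MB (the value of BUFFER_SIZE is not shown in this section), and the fractions are computed with integer division, so results may differ by rounding from the floating-point original. `max_buffer_count` is a hypothetical name.

```c
#include <assert.h>
#include <stdint.h>

#define MIN_BUFFER_SIZE (10 * 1024 * 1024)  /* assumed floor, stands in for BUFFER_SIZE */

/* Number of frames the buffer may hold.
   `user_cap` <= 0 means no user-defined limit (like _maxBufferSize == 0). */
static int64_t max_buffer_count(int64_t bytes_per_frame, int64_t mem_total,
                                int64_t mem_free, int64_t user_cap) {
    assert(mem_total >= 0 && mem_free >= 0);
    if (bytes_per_frame <= 0) bytes_per_frame = 1024;
    int64_t by_total = mem_total / 5;     /* 20% of total memory */
    int64_t by_free = mem_free / 5 * 3;   /* 60% of free memory */
    int64_t budget = by_total < by_free ? by_total : by_free;
    if (budget < MIN_BUFFER_SIZE) budget = MIN_BUFFER_SIZE;
    if (user_cap > 0 && budget > user_cap) budget = user_cap;
    int64_t count = budget / bytes_per_frame;
    if (count < 1) count = 1;             /* always keep at least one frame */
    if (count > 512) count = 512;         /* hard upper bound on frames */
    return count;
}
```

With 1 GB of total memory, 500 MB free and 4 MB frames, the budget is about 205 MB and the buffer holds 51 frames, so a short animation ends up fully cached while a long one is streamed.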

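The logic is fairly tangled, so a diagram would help.

The design is clever: the view always takes images from the buffer, and all of the actual decoding happens on a background queue that loads frames into the buffer.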

YYSpriteSheetImage

This class, like YYFrameImage, is a UIImage subclass designed to work with YYAnimatedImageView.

- (instancetype)initWithSpriteSheetImage:(UIImage *)image

contentRects:(NSArray *)contentRects

frameDurations:(NSArray *)frameDurations

loopCount:(NSUInteger)loopCount {

if (!image.CGImage) return nil;

if (contentRects.count < 1 || frameDurations.count < 1) return nil;

if (contentRects.count != frameDurations.count) return nil;

self = [super initWithCGImage:image.CGImage scale:image.scale orientation:image.imageOrientation];

if (!self) return nil;

_contentRects = contentRects.copy;

_frameDurations = frameDurations.copy;

_loopCount = loopCount;

return self;

}

The _contentRects variable in this initializer records where each sub-image sits within the single sheet image.

The class also implements this method:

- (CGRect)animatedImageContentsRectAtIndex:(NSUInteger)index {

if (index >= _contentRects.count) return CGRectZero;

return ((NSValue *)_contentRects[index]).CGRectValue;

}

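One way to picture it, as in the figure:

The image passed in from outside is the whole sheet (the blue image).

_contentRects records the origin and size of each sub-image (the red tiles).

_frameDurations records how long each frame is displayed.

Playing the animation simply means showing the red tiles one frame at a time.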
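To make the rect bookkeeping concrete, here is a small C sketch. It assumes the tiles form a regular grid numbered left to right and top to bottom, which YYSpriteSheetImage itself does not require (it accepts arbitrary rects). It also shows the conversion to unit coordinates, since a CALayer's contentsRect is expressed in the 0 to 1 range. `rect_t`, `tile_rect` and `to_unit_rect` are hypothetical names.

```c
#include <assert.h>
#include <stddef.h>

typedef struct { double x, y, width, height; } rect_t;

/* Rect of tile `index` in a sheet laid out as a `cols`-wide grid of tiles. */
static rect_t tile_rect(size_t index, size_t cols, double tile_w, double tile_h) {
    assert(cols > 0);
    rect_t r = { (double)(index % cols) * tile_w,
                 (double)(index / cols) * tile_h,
                 tile_w, tile_h };
    return r;
}

/* Convert a rect in sheet coordinates to unit coordinates (0..1). */
static rect_t to_unit_rect(rect_t r, double sheet_w, double sheet_h) {
    assert(sheet_w > 0 && sheet_h > 0);
    rect_t u = { r.x / sheet_w, r.y / sheet_h,
                 r.width / sheet_w, r.height / sheet_h };
    return u;
}
```

For a 512 x 256 sheet of 128 x 128 tiles, tile 5 sits at (128, 128) and maps to the unit rect (0.25, 0.5, 0.25, 0.5). Only this rect changes from frame to frame, so the sheet is decoded once.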