ELK Environment Deployment

Base environment

OS: CentOS 7.3

Firewall and SELinux: disabled

Machines: at least two

192.168.1.182 elk-node1

192.168.1.183 elk-node2

Master-slave mode

Software prerequisites: JDK 1.8+, nginx or Apache
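The two address/hostname pairs listed above are already in /etc/hosts format. Below is a minimal sketch for registering them on each node, assuming name resolution goes through the hosts file. The helper name add_host_entry and the HOSTS_FILE variable are made up for illustration, and HOSTS_FILE defaults to a scratch file rather than the real /etc/hosts:

```shell
#!/bin/sh
# Append an "IP hostname" pair to a hosts file unless the hostname is already present.
add_host_entry() {
    hosts_file=$1
    ip=$2
    name=$3
    if ! grep -qw -- "$name" "$hosts_file" 2>/dev/null; then
        printf '%s %s\n' "$ip" "$name" >> "$hosts_file"
    fi
}

# HOSTS_FILE is an assumption for illustration; set it to /etc/hosts (as root) on real nodes.
HOSTS_FILE=${HOSTS_FILE:-${TMPDIR:-/tmp}/hosts.example}
add_host_entry "$HOSTS_FILE" 192.168.1.182 elk-node1
add_host_entry "$HOSTS_FILE" 192.168.1.183 elk-node2
```

Running it a second time adds nothing, because each hostname is checked before it is appended.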

Download and install the GPG key

rpm --import https://packages.elastic.co/GPG-KEY-elasticsearch

Add the yum repository

vi /etc/yum.repos.d/elasticsearch.repo

[elasticsearch-2.x]

name=Elasticsearch repository for 2.x packages

baseurl=http://packages.elastic.co/elasticsearch/2.x/centos

gpgcheck=1

gpgkey=http://packages.elastic.co/GPG-KEY-elasticsearch

enabled=1

Install Elasticsearch

yum install -y elasticsearch

Configuration and deployment (configure elk-node1 first)

1) Edit the configuration file

[root@elk-node1 ~]# mkdir -p /data/es-data

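[root@elk-node1 ~]# vi /etc/elasticsearch/elasticsearch.yml   # clear the existing contents and add the settings below

cluster.name: ceshi # cluster name (must be identical on every node of the same cluster)

node.name: elk-node1 # node name; using the hostname is recommended

path.data: /data/es-data # directory where data is stored

path.logs: /var/log/elasticsearch/ # directory where logs are stored

bootstrap.mlockall: true # lock the process memory so that it is not swapped out

network.host: 0.0.0.0 # listen on all network interfaces

http.port: 9200 # HTTP port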

2) Start the service and check its status

[root@elk-node1 ~]# chown -R elasticsearch:elasticsearch /data/

[root@elk-node1 ~]# systemctl start elasticsearch

[root@elk-node1 ~]# systemctl status elasticsearch

CGroup: /system.slice/elasticsearch.service

└─3005 /bin/java -Xms256m -Xmx1g -Djava.awt.headless=true -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSI...

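Note: the output above shows that Elasticsearch runs with a minimum heap of 256 MB and a maximum of 1 GB.

[root@elk-node1 src]# netstat -antlp |egrep "9200|9300"

tcp6 0 0 :::9200 :::* LISTEN 3005/java

tcp6 0 0 :::9300 :::* LISTEN 3005/java

Then open the following address in a browser (Google Chrome is recommended):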

http://192.168.1.182:9200/
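The same check can be done from the command line. This is a rough sketch that assumes curl is installed. _cluster/health and _nodes/process are standard Elasticsearch endpoints, while the helper names cluster_status and mlockall_enabled and the ES_URL variable are only illustrative:

```shell
#!/bin/sh
# Print the "status" value (green, yellow or red) from _cluster/health JSON on stdin.
cluster_status() {
    sed -n 's/.*"status"[[:space:]]*:[[:space:]]*"\([a-z]*\)".*/\1/p' | head -n 1
}

# Succeed if the _nodes/process JSON on stdin reports mlockall as true for at least one node.
mlockall_enabled() {
    grep -q '"mlockall"[[:space:]]*:[[:space:]]*true'
}

# ES_URL is an assumption; it matches the address used in this guide.
ES_URL=${ES_URL:-http://192.168.1.182:9200}

# Only query the node when the script is run with "check" as its first argument.
if [ "${1:-}" = "check" ]; then
    curl -s "$ES_URL/_cluster/health?pretty" | cluster_status
    if curl -s "$ES_URL/_nodes/process?pretty" | mlockall_enabled; then
        echo "mlockall: enabled"
    else
        echo "mlockall: NOT enabled"
    fi
fi
```

If mlockall is reported as not enabled even though bootstrap.mlockall is set to true, the memory-lock limit of the elasticsearch service is a likely place to look.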

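3) Next, install the plugins and use them to inspect the cluster (the plugins below must be installed on both elk-node1 and elk-node2)

3.1) Install the head plugin

a) Installation method 1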

[root@elk-node1 src]# /usr/share/elasticsearch/bin/plugin install mobz/elasticsearch-head

b) Installation method 2

First download the head plugin into /usr/local/src.

Download URL: https://github.com/mobz/elasticsearch-head

[root@elk-node1 src]# unzip elasticsearch-head-master.zip

[root@elk-node1 src]# ls

elasticsearch-head-master elasticsearch-head-master.zip

Create a head directory under /usr/share/elasticsearch/plugins,

then move all the files extracted from elasticsearch-head-master.zip into /usr/share/elasticsearch/plugins/head.

Finally, restart the elasticsearch service.

[root@elk-node1 plugins]# mkdir head

[root@elk-node1 plugins]# ls

head

[root@elk-node1 head]# pwd

/usr/share/elasticsearch/plugins/head

[root@elk-node1 head]# chown -R elasticsearch:elasticsearch /usr/share/elasticsearch/plugins

[root@elk-node1 head]# ll

total 40

-rw-r--r--. 1 elasticsearch elasticsearch 104 Sep 28 01:57 elasticsearch-head.sublime-project

-rw-r--r--. 1 elasticsearch elasticsearch 2171 Sep 28 01:57 Gruntfile.js

-rw-r--r--. 1 elasticsearch elasticsearch 3482 Sep 28 01:57 grunt_fileSets.js

-rw-r--r--. 1 elasticsearch elasticsearch 1085 Sep 28 01:57 index.html

-rw-r--r--. 1 elasticsearch elasticsearch 559 Sep 28 01:57 LICENCE

-rw-r--r--. 1 elasticsearch elasticsearch 795 Sep 28 01:57 package.json

-rw-r--r--. 1 elasticsearch elasticsearch 100 Sep 28 01:57 plugin-descriptor.properties

-rw-r--r--. 1 elasticsearch elasticsearch 5211 Sep 28 01:57 README.textile

drwxr-xr-x. 5 elasticsearch elasticsearch 4096 Sep 28 01:57 _site

drwxr-xr-x. 4 elasticsearch elasticsearch 29 Sep 28 01:57 src

drwxr-xr-x. 4 elasticsearch elasticsearch 66 Sep 28 01:57 test

[root@elk-node1 _site]# systemctl restart elasticsearch

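Access the plugin (it is best to finish configuring elk-node2 and installing its plugins first, and only then access the plugin and test data insertion)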

http://192.168.1.182:9200/_plugin/head/

Next, configure node elk-node2 (install the same plugins on elk-node2 as above)

Note: the installation and configuration of the two nodes are essentially the same.

[root@elk-node2 src]# mkdir -p /data/es-data

[root@elk-node2 ~]# cat /etc/elasticsearch/elasticsearch.yml

cluster.name: ceshi

node.name: elk-node2

path.data: /data/es-data

path.logs: /var/log/elasticsearch/

bootstrap.mlockall: true

network.host: 0.0.0.0

http.port: 9200

discovery.zen.ping.multicast.enabled: false

discovery.zen.ping.unicast.hosts: ["192.168.1.182", "192.168.1.183"]

Set ownership of the data directory

[root@elk-node2 src]# chown -R elasticsearch:elasticsearch /data/

Start the service

[root@elk-node2 src]# systemctl start elasticsearch

[root@elk-node2 src]# systemctl status elasticsearch

● elasticsearch.service - Elasticsearch

Loaded: loaded (/usr/lib/systemd/system/elasticsearch.service; enabled; vendor preset: disabled)

Active: active (running) since Wed 2018-08-28 16:49:41 CST; 1 weeks 3 days ago

Docs: http://www.elastic.co

Process: 17798 ExecStartPre=/usr/share/elasticsearch/bin/elasticsearch-systemd-pre-exec (code=exited, status=0/SUCCESS)

Main PID: 17800 (java)

CGroup: /system.slice/elasticsearch.service

└─17800 /bin/java -Xms256m -Xmx1g -Djava.awt.headless=true -XX:+UseParNewGC -XX:+UseConcMarkSweepGC -XX:CMSInitiatingOccupancyFra...

09 13:42:22 elk-node2 elasticsearch[17800]: [2016-10-09 13:42:22,295][WARN ][transport ] [elk-node2] Transport res...943817]

09 13:42:23 elk-node2 elasticsearch[17800]: [2016-10-09 13:42:23,111][WARN ][transport ] [elk-node2] Transport res...943846]

................

................

Check the ports

[root@elk-node2 src]# netstat -antlp|egrep "9200|9300"

tcp6 0 0 :::9200 :::* LISTEN 2928/java

tcp6 0 0 :::9300 :::* LISTEN 2928/java

tcp6 0 0 127.0.0.1:48200 127.0.0.1:9300 TIME_WAIT -

tcp6 0 0 ::1:41892 ::1:9300 TIME_WAIT -

Test:

Access the plugin:

http://192.168.1.182:9200/_plugin/head/
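Whether both nodes actually joined the ceshi cluster can also be checked without the head plugin. This is a small sketch that assumes curl is available. number_of_nodes is a field of the _cluster/health response, and node_count and ES_URL are illustrative names:

```shell
#!/bin/sh
# Print the number_of_nodes value from a _cluster/health JSON document on stdin.
node_count() {
    sed -n 's/.*"number_of_nodes"[[:space:]]*:[[:space:]]*\([0-9][0-9]*\).*/\1/p' | head -n 1
}

# ES_URL is an assumption; either node of this guide can be queried.
ES_URL=${ES_URL:-http://192.168.1.182:9200}

# Only contact the cluster when invoked with "check"; this setup expects 2 nodes.
if [ "${1:-}" = "check" ]; then
    found=$(curl -s "$ES_URL/_cluster/health" | node_count)
    if [ "${found:-0}" -eq 2 ]; then
        echo "both nodes joined"
    else
        echo "expected 2 nodes, found ${found:-0}"
    fi
fi
```

A count of 1 would suggest that elk-node2 could not reach elk-node1 through the addresses in discovery.zen.ping.unicast.hosts.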

Add the yum repository for Logstash

[root@elk-node1 ~]# vi /etc/yum.repos.d/logstash.repo

[logstash-2.1]

name=Logstash repository for 2.1.x packages

baseurl=http://packages.elastic.co/logstash/2.1/centos

gpgcheck=1

gpgkey=http://packages.elastic.co/GPG-KEY-elasticsearch

enabled=1

Install Logstash

[root@elk-node1 ~]# yum install -y logstash

Start Logstash

[root@elk-node1 ~]# systemctl start logstash

[root@elk-node1 ~]# systemctl status logstash

● logstash.service - LSB: Starts Logstash as a daemon.

Loaded: loaded (/etc/rc.d/init.d/logstash; bad; vendor preset: disabled)

Active: active (exited) since Mon 2018-09-03 16:36:07 CST; 19h ago

Docs: man:systemd-sysv-generator(8)

Process: 1699 ExecStart=/etc/rc.d/init.d/logstash start (code=exited, status=0/SUCCESS)

Sep 03 16:36:07 elk-node1 systemd[1]: Starting LSB: Starts Logstash as a daemon....

Sep 03 16:36:07 elk-node1 logstash[1699]: logstash started.

Sep 03 16:36:07 elk-node1 systemd[1]: Started LSB: Starts Logstash as a daemon..

Testing data flow

1) Basic input and output

[root@elk-node1 ~]# /opt/logstash/bin/logstash -e 'input { stdin{} } output { stdout{} }'

Settings: Default filter workers: 1

Logstash startup completed

hello # type this

2018-08-28T04:41:07.690Z elk-node1 hello # Logstash prints this

lihongwu # type this

2018-08-28T04:41:10.608Z elk-node1 lihongwu # Logstash prints this

Logstash configuration and writing configuration files

1) Logstash configuration

A simple configuration:

[root@elk-node1 ~]# vi /etc/logstash/conf.d/01-logstash.conf

input { stdin { } }

output {

elasticsearch { hosts => ["192.168.1.182:9200"]}

stdout { codec => rubydebug }

}

Run it:

[root@elk-node1 ~]# /opt/logstash/bin/logstash -f /etc/logstash/conf.d/01-logstash.conf

Settings: Default filter workers: 1

Logstash startup completed

beijing # typed input

{ # the following is printed

"message" => "beijing",

"@version" => "1",

"@timestamp" => "2018-08-28T04:41:48.401Z",

"host" => "elk-node1"

}
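Since this configuration also sends events to Elasticsearch, the event can be looked up there as well. This is a hedged sketch. When no index option is set, the elasticsearch output writes to a daily index named logstash-YYYY.MM.dd, dated in UTC. default_logstash_index and ES_URL are illustrative names, and curl is assumed to be installed:

```shell
#!/bin/sh
# Print today's default Logstash index name in the form logstash-YYYY.MM.dd (UTC date).
default_logstash_index() {
    date -u +'logstash-%Y.%m.%d'
}

# ES_URL is an assumption; it matches the hosts entry of the configuration above.
ES_URL=${ES_URL:-http://192.168.1.182:9200}

# Only query Elasticsearch when invoked with "check".
if [ "${1:-}" = "check" ]; then
    # Count the documents in today's index, then search for the test message.
    curl -s "$ES_URL/$(default_logstash_index)/_count?pretty"
    curl -s "$ES_URL/$(default_logstash_index)/_search?q=message:beijing&pretty"
fi
```

The index name is derived from the event timestamp, so events typed shortly after midnight UTC land in the next day's index even if the local date has not changed.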

(3) Kibana installation and configuration

1) Install Kibana:

[root@elk-node1 ~]# cd /usr/local/src

[root@elk-node1 src]# wget https://download.elastic.co/kibana/kibana/kibana-4.3.1-linux-x64.tar.gz

[root@elk-node1 src]# tar zxf kibana-4.3.1-linux-x64.tar.gz

[root@elk-node1 src]# mv kibana-4.3.1-linux-x64 /usr/local/

[root@elk-node1 src]# ln -s /usr/local/kibana-4.3.1-linux-x64/ /usr/local/kibana

2) Edit the configuration file:

[root@elk-node1 config]# pwd

/usr/local/kibana/config

[root@elk-node1 config]# cp kibana.yml kibana.yml.bak

[root@elk-node1 config]# vi kibana.yml

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: "http://192.168.1.182:9200"

kibana.index: ".kibana"

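Because Kibana runs in the foreground, either keep a separate terminal window open for it or run it inside screen.

Install screen and use it to start Kibana: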

[root@elk-node1 ~]# yum -y install screen

[root@elk-node1 ~]# screen # this opens a new terminal session

[root@elk-node1 ~]# /usr/local/kibana/bin/kibana

log [17:23:19.867] [info][status][plugin:kibana] Status changed from uninitialized to green - Ready

log [17:23:19.911] [info][status][plugin:elasticsearch] Status changed from uninitialized to yellow - Waiting for Elasticsearch

log [17:23:19.941] [info][status][plugin:kbn_vislib_vis_types] Status changed from uninitialized to green - Ready

log [17:23:19.953] [info][status][plugin:markdown_vis] Status changed from uninitialized to green - Ready

log [17:23:19.963] [info][status][plugin:metric_vis] Status changed from uninitialized to green - Ready

log [17:23:19.995] [info][status][plugin:spyModes] Status changed from uninitialized to green - Ready

log [17:23:20.004] [info][status][plugin:statusPage] Status changed from uninitialized to green - Ready

log [17:23:20.010] [info][status][plugin:table_vis] Status changed from uninitialized to green - Ready

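Then press Ctrl+A followed by D to detach from the screen session.

The Kibana service started in that screen session keeps running inside the detached session, even after the original terminal is closed.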
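If screen is not wanted, a plain background job is another option. This is a different technique from the screen approach above, not something this guide prescribes. The sketch below uses nohup, and start_detached and the log path are hypothetical names chosen for illustration:

```shell
#!/bin/sh
# Run a command in the background, immune to hangups, with all output sent to a log file.
# Prints the PID of the background process.
start_detached() {
    log_file=$1
    shift
    nohup "$@" >"$log_file" 2>&1 &
    echo $!
}

# Example (commented out so that nothing is started by accident):
# pid=$(start_detached /var/log/kibana.log /usr/local/kibana/bin/kibana)
# echo "kibana started with PID $pid"
```

Unlike a screen session, there is no terminal to reattach to. Follow the log file to see the status messages, and stop the service with kill and the printed PID.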

[root@elk-node1 ~]# screen -ls

There is a screen on:

15041.pts-0.elk-node1 (Detached)

1 Socket in /var/run/screen/S-root.

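Note: reattaching to a screen session

The example below shows two screen sessions in the detached state; reattach to one with screen -r followed by its session ID.

[root@tivf18 root]# screen -ls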

There are screens on:

8736.pts-1.tivf18 (Detached)

8462.pts-0.tivf18 (Detached)

2 Sockets in /root/.screen.

[root@tivf18 root]# screen -r 8736

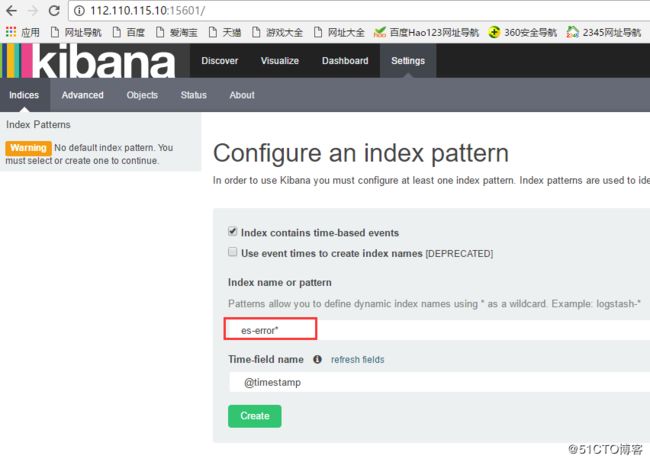

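3) Access Kibana: http://192.168.1.182:5601/

As shown below, when adding the Java log collection configured earlier, enter es-error in the field below; when adding the system log collection configured earlier, enter system, and so on for other log types (the collected log items can be seen in the Logstash interface).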