Flink Windowing Strategies

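Overview

Windows are at the heart of stream processing. A window splits the stream into finite-size "buckets" over which computations such as aggregations (ProcessWindowFunction, ReduceFunction, AggregateFunction or FoldFunction) can be applied. The general structure of a windowed Flink program is shown below: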

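Keyed windows

stream

.keyBy(...)

.window(...) <- required: "assigner", the window type

[.trigger(...)] <- optional: "trigger" (every assigner has a default trigger), decides when the window fires

[.evictor(...)] <- optional: "evictor" (default: none), removes elements from the window

[.allowedLateness(...)] <- optional: "lateness" (default: 0, i.e. late data is not accepted)

[.sideOutputLateData(...)] <- optional: "output tag", routes late data to the given side output

.reduce/aggregate/fold/apply() <- required: "function", the aggregation applied to the window contents

[.getSideOutput(...)] <- optional: "output tag", retrieves the side-output data, typically to process late records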

Non-keyed windows

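stream

.windowAll(...) <- required: "assigner", the window type

[.trigger(...)] <- optional: "trigger" (every assigner has a default trigger), decides when the window fires

[.evictor(...)] <- optional: "evictor" (default: none), removes elements from the window

[.allowedLateness(...)] <- optional: "lateness" (default: 0, i.e. late data is not accepted)

[.sideOutputLateData(...)] <- optional: "output tag", routes late data to the given side output

.reduce/aggregate/fold/apply() <- required: "function", the aggregation applied to the window contents

[.getSideOutput(...)] <- optional: "output tag", retrieves the side-output data, typically to process late records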

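Window Lifecycle

In short, a window is created as soon as the first element belonging to it arrives, and it is completely removed once the time (event time or processing time) passes its end timestamp plus the user-specified allowed lateness (see the section on late data). Flink guarantees removal only for time-based windows, not for other window types such as global windows (see Window Assigners).

In addition, every window has a Trigger (see Triggers) and a function (ProcessWindowFunction, ReduceFunction, AggregateFunction or FoldFunction) (see Window Functions) attached to it. The function contains the computation to apply to the window contents, while the trigger specifies the conditions under which the window is considered ready for the function to be applied.

Apart from the above, you can also specify an Evictor (see Evictors), which can remove elements from the window after the trigger fires and before and/or after the function is applied.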

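Window Assigners

A window assigner defines how elements are assigned to windows. You choose one by passing a WindowAssigner to the window(...) call (for keyed streams) or the windowAll() call (for non-keyed streams).

A WindowAssigner is responsible for assigning each incoming element to one or more windows. Flink ships with predefined assigners for the most common use cases: tumbling windows, sliding windows, session windows and global windows. You can also implement a custom assigner by extending the WindowAssigner class. All built-in assigners except global windows assign elements to windows based on time, which can be either processing time or event time.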
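To make the time-based assignment concrete, here is a minimal sketch in plain Scala (no Flink dependency) of the arithmetic a tumbling or sliding assigner can use to map a timestamp to window start times. The object and method names are hypothetical, and the sketch assumes non-negative millisecond timestamps; it illustrates the idea rather than reproducing Flink's implementation.

```scala
object WindowAssignSketch {
  // Start of the tumbling window that contains `ts` (all values in milliseconds).
  def tumblingStart(ts: Long, size: Long, offset: Long = 0L): Long =
    ts - (ts - offset + size) % size

  // Starts of every sliding window that contains `ts`, newest window first.
  def slidingStarts(ts: Long, size: Long, slide: Long, offset: Long = 0L): List[Long] = {
    val lastStart = ts - (ts - offset + slide) % slide
    Iterator.iterate(lastStart)(_ - slide).takeWhile(_ > ts - size).toList
  }

  def main(args: Array[String]): Unit = {
    println(tumblingStart(7000L, 5000L))        // 5000: the window [5000, 10000)
    println(slidingStarts(7000L, 4000L, 2000L)) // List(6000, 4000): two overlapping windows
  }
}
```

With a size of 4 s and a slide of 2 s, every element lands in two windows, which is why the sliding example below prints each word twice.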

Sliding Windows

package com.hw.demo08

import java.text.SimpleDateFormat

import org.apache.flink.streaming.api.scala.StreamExecutionEnvironment

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.scala.function.ProcessWindowFunction

import org.apache.flink.streaming.api.windowing.assigners.SlidingProcessingTimeWindows

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.api.windowing.windows.TimeWindow

import org.apache.flink.util.Collector

/**

* @author: fql

* @date 2019/10/21 9:06

* @type: sliding window

*/

object SlidingWindow {

def main(args: Array[String]): Unit = {

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.socketTextStream("CentOS",7788)

.flatMap(_.split("\\s+"))

.map((_,1))

.keyBy(_._1)

.window(SlidingProcessingTimeWindows.of(Time.seconds(4),Time.seconds(2)))

.process(new ProcessWindowFunction[(String,Int),String,String,TimeWindow] {

override def process(key: String, context: Context,

elements: Iterable[(String, Int)],

out: Collector[String]): Unit ={

val sdf = new SimpleDateFormat("HH:mm:ss")

val window = context.window

println(sdf.format(window.getStart)+"\t"+sdf.format(window.getEnd))

for(e<-elements){

print(e+"\t")

}

println()

}

})

fsEnv.execute("Sliding Window")

}

}

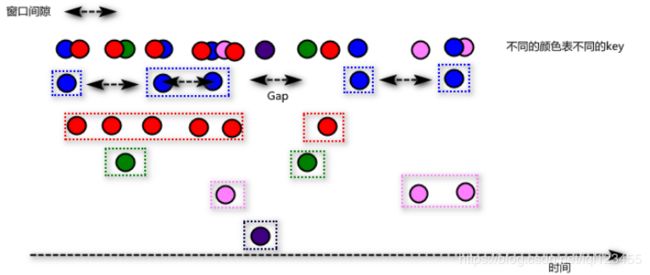

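Session Windows (merging windows)

Elements are grouped by the time gap between them: if the interval between two consecutive elements is smaller than the session gap, they are merged into the same window; once the interval exceeds the gap, the window closes and later elements belong to a new window. Under the hood this is implemented by merging windows.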

package com.hw.demo08

import java.text.SimpleDateFormat

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.scala.function.WindowFunction

import org.apache.flink.streaming.api.windowing.assigners.ProcessingTimeSessionWindows

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.api.windowing.windows.TimeWindow

import org.apache.flink.util.Collector

/**

* @author: fql

* @date 2019/10/21 9:06

* @type: session window

*/

object SessionWindow {

def main(args: Array[String]): Unit = {

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.socketTextStream("CentOS",7788)

.flatMap(_.split("\\s+"))

.map((_,1))

.keyBy(_._1)

.window(ProcessingTimeSessionWindows.withGap(Time.seconds(5)))

.apply(new WindowFunction[(String,Int),String,String,TimeWindow]{

override def apply(key: String, window: TimeWindow, input: Iterable[(String, Int)], out: Collector[String]): Unit = {

val sdf = new SimpleDateFormat("HH:mm:ss")

println(sdf.format(window.getStart)+"\t"+sdf.format(window.getEnd))

for(e<- input){

print(e+"\t")

}

println()

}

})

fsEnv.execute("Session Window")

}

}
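The merging behaviour of session windows can be sketched in plain Scala. In this illustrative model (hypothetical names, not Flink code) every element first gets its own window [ts, ts + gap), and windows are merged when the next element falls inside the current session. How an element that lands exactly on the session end is treated is an assumption of the sketch.

```scala
object SessionMergeSketch {
  // Groups timestamps (ms) into sessions represented as (start, end).
  def sessions(timestamps: Seq[Long], gap: Long): List[(Long, Long)] =
    timestamps.sorted.foldLeft(List.empty[(Long, Long)]) {
      // The element falls inside the current session: extend (merge) it.
      case ((start, end) :: rest, ts) if ts < end =>
        (start, math.max(end, ts + gap)) :: rest
      // Otherwise the previous session is closed and a new one starts.
      case (acc, ts) =>
        (ts, ts + gap) :: acc
    }.reverse

  def main(args: Array[String]): Unit =
    // Prints List((1000,8000), (9000,17000))
    println(sessions(Seq(1000L, 3000L, 9000L, 12000L), 5000L))
}
```

With a 5 s gap, the elements at 1 s and 3 s share one session, while the element at 9 s arrives after that session has ended and opens a new one.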

Global Windows

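A global window puts all elements with the same key into a single window. By default this window never closes and never fires, because its default trigger never fires, so you have to specify a custom Trigger.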

package com.hw.demo08

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.scala.function.WindowFunction

import org.apache.flink.streaming.api.windowing.assigners.GlobalWindows

import org.apache.flink.streaming.api.windowing.triggers.CountTrigger

import org.apache.flink.streaming.api.windowing.windows.GlobalWindow

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

import org.apache.flink.util.Collector

/**

* @author: fql

* @date 2019/10/21 19:09

* @type: global window

*/

object GlobalWindow {

def main(args: Array[String]): Unit = {

var env = StreamExecutionEnvironment.getExecutionEnvironment

val props = new Properties()

props.setProperty("bootstrap.servers", "CentOS:9092")

props.setProperty("group.id", "g1")

val lines=env.addSource(new FlinkKafkaConsumer("topic01",new SimpleStringSchema(),props))

.flatMap(_.split("\\s+"))

.map((_, 1))

.keyBy(_._1)

.window(GlobalWindows.create())

.trigger(CountTrigger.of[GlobalWindow](3))

.apply(new WindowFunction[(String, Int), String, String, GlobalWindow] {

override def apply(key: String, window: GlobalWindow, input: Iterable[(String, Int)], out: Collector[String]): Unit = {

println("=======window========")

for (e <- input) {

print(e + "\t")

}

println()

}

})

env.execute("window")

}

}

Window Functions

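Once the system decides that a window is ready, it invokes the window function to compute over the window's contents. Window functions come in the following forms: ReduceFunction, AggregateFunction, FoldFunction, or ProcessWindowFunction (WindowFunction is its legacy predecessor).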

ReduceFunction

package com.hw.demo09

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

/**

* @author: fql

* @date 2019/10/21 20:22

* @type:

*/

object ReduceFunction {

def main(args: Array[String]): Unit = {

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

val props = new Properties() //Kafka consumer configuration

props.setProperty("bootstrap.servers", "CentOS:9092")

props.setProperty("group.id", "g1")

val lines=fsEnv.addSource(new FlinkKafkaConsumer("topic01",new SimpleStringSchema(),props))

.flatMap(_.split("\\s+"))

.map((_,1))

.keyBy(0)

.window(TumblingProcessingTimeWindows.of(Time.seconds(5))) //tumbling window of 5 seconds

.reduce(new SumReduceFunction)

.print()

fsEnv.execute("ReduceFunction")

}

}

package com.hw.demo09

import org.apache.flink.api.common.functions.ReduceFunction

/**

* @author: fql

* @date 2019/10/21 20:19

* @type:

*/

class SumReduceFunction extends ReduceFunction[(String,Int)]{

override def reduce(value1: (String, Int), value2: (String, Int)): (String, Int) = {

(value1._1,value1._2+value2._2)

}

}

AggregateFunction

package com.hw.demo09

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

/**

* @author: fql

* @date 2019/10/21 20:37

* @type:

*/

object AggregateFunction {

def main(args: Array[String]): Unit = {

val props = new Properties()

props.setProperty("bootstrap.servers", "CentOS:9092")

props.setProperty("group.id", "g1")

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.addSource(new FlinkKafkaConsumer("topic01",new SimpleStringSchema(),props))

.flatMap(_.split("\\s+"))

.map((_,1))

.keyBy(0)

.window(TumblingProcessingTimeWindows.of(Time.seconds(5)))

.aggregate(new SumAggregateFunction)

.print()

fsEnv.execute("AggregateFunction")

}

}

package com.hw.demo09

import org.apache.flink.api.common.functions.AggregateFunction

/**

* @author: fql

* @date 2019/10/21 20:29

* @type:

*/

class SumAggregateFunction extends AggregateFunction[(String,Int),(String,Int),(String,Int)] {

//creates a new accumulator, corresponding to an empty aggregate

override def createAccumulator(): (String, Int) = {

("",0)

}

//merges two accumulators and returns the merged state

override def merge(a: (String, Int), b: (String, Int)): (String, Int) = {

(a._1,a._2+b._2)

}

/**

* Adds one input value to the accumulator.

* @param value The value to add

* @param accumulator The accumulator to add the value to

* @return The updated accumulator

*/

override def add(value: (String, Int), accumulator: (String, Int)): (String, Int) = {

(value._1,accumulator._2+value._2)

}

//returns the final aggregation result

override def getResult(accumulator: (String, Int)): (String, Int) = {

accumulator

}

}
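The accumulator in SumAggregateFunction has the same type as its input, which hides why AggregateFunction has three type parameters. The following plain-Scala sketch computes an average, where input, accumulator and output types all differ. SimpleAggregate is a hypothetical stand-in that only mirrors the four method names of Flink's AggregateFunction, so the example runs without Flink on the classpath.

```scala
object AverageSketch {
  // Hypothetical stand-in mirroring the shape of AggregateFunction[IN, ACC, OUT].
  trait SimpleAggregate[IN, ACC, OUT] {
    def createAccumulator(): ACC
    def add(value: IN, acc: ACC): ACC
    def getResult(acc: ACC): OUT
    def merge(a: ACC, b: ACC): ACC
  }

  // The accumulator is (sum, count); the result is the mean of the inputs.
  object Average extends SimpleAggregate[Int, (Long, Long), Double] {
    def createAccumulator(): (Long, Long) = (0L, 0L)
    def add(value: Int, acc: (Long, Long)): (Long, Long) = (acc._1 + value, acc._2 + 1)
    def getResult(acc: (Long, Long)): Double =
      if (acc._2 == 0) 0.0 else acc._1.toDouble / acc._2
    def merge(a: (Long, Long), b: (Long, Long)): (Long, Long) = (a._1 + b._1, a._2 + b._2)
  }

  // Feeds the elements one at a time, the way an incremental window aggregation does.
  def run(values: Seq[Int]): Double =
    Average.getResult(
      values.foldLeft(Average.createAccumulator())((acc, v) => Average.add(v, acc)))

  def main(args: Array[String]): Unit =
    println(run(Seq(1, 2, 3, 6))) // 3.0
}
```

Because only the small (sum, count) pair is kept per window, the elements themselves never need to be buffered, which is the main advantage of incremental aggregation over a ProcessWindowFunction.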

FoldFunction

package com.hw.demo09

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

/**

* @author: fql

* @date 2019/10/21 20:56

* @type:

*/

object FoldFunction {

def main(args: Array[String]): Unit = {

val props = new Properties()

props.setProperty("bootstrap.servers", "CentOS:9092")

props.setProperty("group.id", "g1")

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.addSource(new FlinkKafkaConsumer("topic01",new SimpleStringSchema(),props))

.flatMap(_.split("\\s+"))

.map((_,1))

.keyBy(0)

.window(TumblingProcessingTimeWindows.of(Time.seconds(3)))

.fold(("",0L),new SumFoldFunction)

.print()

fsEnv.execute("FoldFunction")

}

}

package com.hw.demo09

import org.apache.flink.api.common.functions.FoldFunction

/**

* @author: fql

* @date 2019/10/21 20:48

* @type:

*/

class SumFoldFunction extends FoldFunction[(String,Int),(String,Long)]{

/** @param accumulator The initial value, and accumulator.

* @param value The value from the group to "fold" into the accumulator.

* @return The accumulator that is at the end of the "folding" the group.

*/

override def fold(accumulator: (String, Long), value: (String, Int)): (String, Long) ={

(value._1,accumulator._2+value._2)

}

}

ProcessWindowFunction

package com.hw.demo09

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.scala.function.ProcessWindowFunction

import org.apache.flink.streaming.api.windowing.assigners.SlidingProcessingTimeWindows

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.api.windowing.windows.TimeWindow

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

import org.apache.flink.util.Collector

/**

* @author: fql

* @date 2019/10/21 21:02

* @type:

*/

object ProcessWindowFunction {

def main(args: Array[String]): Unit = {

var fsEnv=StreamExecutionEnvironment.getExecutionEnvironment

val props = new Properties()

props.setProperty("bootstrap.servers", "CentOS:9092")

props.setProperty("group.id", "g1")

fsEnv.addSource(new FlinkKafkaConsumer("topic01",new SimpleStringSchema(),props))

.flatMap(_.split("\\s+"))

.map((_,1))

.keyBy(_._1)

.window(SlidingProcessingTimeWindows.of(Time.seconds(4),Time.seconds(2)))

.process(new ProcessWindowFunction[(String,Int),(String,Int),String,TimeWindow]{

override def process(key: String, context: Context,

elements: Iterable[(String, Int)],

out: Collector[(String,Int)]): Unit = {

val results = elements.reduce((v1,v2)=>(v1._1,v1._2+v2._2))

out.collect(results)

}

}).print()

fsEnv.execute("window")

}

}

globalState() | windowState()

- globalState(), which allows access to keyed state that is not scoped to a window

- windowState(), which allows access to keyed state that is also scoped to the window

var env=StreamExecutionEnvironment.getExecutionEnvironment

val globalTag = new OutputTag[(String,Int)]("globalTag")

val countsStream = env.socketTextStream("centos", 7788)

.flatMap(_.split("\\s+"))

.map((_, 1))

.keyBy(_._1)

.window(TumblingProcessingTimeWindows.of(Time.seconds(4), Time.seconds(2)))

.process(new ProcessWindowFunction[(String, Int), (String, Int), String, TimeWindow] {

var wvds: ValueStateDescriptor[Int] = _

var gvds: ValueStateDescriptor[Int] = _

override def open(parameters: Configuration): Unit = {

wvds = new ValueStateDescriptor[Int]("window-value", createTypeInformation[Int])

gvds = new ValueStateDescriptor[Int]("global-value", createTypeInformation[Int])

}

override def process(key: String, context: Context,

elements: Iterable[(String, Int)],

out: Collector[(String, Int)]): Unit = {

val total = elements.map(_._2).sum

val ws = context.windowState.getState(wvds)

val gs=context.globalState.getState(gvds)

val historyWindowValue = ws.value()

val historyGlobalValue = gs.value()

out.collect((key, historyWindowValue + total))

context.output(globalTag, (key, historyGlobalValue + total))

ws.update(historyWindowValue + total)

gs.update(historyGlobalValue + total)

}

})

countsStream.print("window count")

countsStream.getSideOutput(globalTag).print("global output")

env.execute("window")

ReduceFunction+ProcessWindowFunction

var env=StreamExecutionEnvironment.getExecutionEnvironment

val globalTag = new OutputTag[(String,Int)]("globalTag")

val countsStream = env.socketTextStream("centos", 7788)

.flatMap(_.split("\\s+"))

.map((_, 1))

.keyBy(_._1)

.window(TumblingProcessingTimeWindows.of(Time.seconds(4), Time.seconds(2)))

.reduce(new SumReduceFunction,new ProcessWindowFunction[(String, Int), (String, Int), String, TimeWindow] {

override def process(key: String, context: Context,

elements: Iterable[(String, Int)],

out: Collector[(String, Int)]): Unit = {

val total = elements.map(_._2).sum

out.collect((key, total))

}

})

countsStream.print("window count")

countsStream.getSideOutput(globalTag).print("global output")

env.execute("window")

FoldFunction+ProcessWindowFunction

var env=StreamExecutionEnvironment.getExecutionEnvironment

val countsStream = env.socketTextStream("centos", 7788)

.flatMap(_.split("\\s+"))

.map((_, 1))

.keyBy(_._1)

.window(TumblingProcessingTimeWindows.of(Time.seconds(4), Time.seconds(2)))

.fold(("",0L),new SumFoldFunction,new ProcessWindowFunction[(String, Long), (String, Long), String, TimeWindow] {

override def process(key: String, context: Context,

elements: Iterable[(String, Long)],

out: Collector[(String, Long)]): Unit = {

val total = elements.map(_._2).sum

out.collect((key, total))

}

}).print()

env.execute("window")

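WindowFunction (rarely used)

A legacy interface; ProcessWindowFunction is generally used instead.

In some places where a ProcessWindowFunction can be used you can also use a WindowFunction. This is an older version of ProcessWindowFunction that provides less contextual information and lacks some advanced features, such as per-window keyed state. The interface will be deprecated at some point.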

env.socketTextStream("centos",7788)

.flatMap(_.split("\\s+"))

.map((_,1))

.keyBy(_._1) //keyBy by position (keyBy(0)) cannot be used here, because the function declares a String key

.window(TumblingProcessingTimeWindows.of(Time.seconds(1)))

.apply(new WindowFunction[(String,Int),(String,Int),String,TimeWindow] {

override def apply(key: String,

window: TimeWindow,

input: Iterable[(String, Int)],

out: Collector[(String, Int)]): Unit = {

out.collect((key,input.map(_._2).sum))

}

}).print()

env.execute("window")

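Triggers

A trigger determines when a window is ready to be processed by the window function. Every WindowAssigner comes with a default Trigger; if the default does not fit your needs, you can specify a custom one.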

| WindowAssigner | Default trigger |
|---|---|
| global window | NeverTrigger |
| event-time window | EventTimeTrigger |
| processing-time window | ProcessingTimeTrigger |

The Trigger interface has five methods that allow a trigger to react to different events:

- The onElement() method is called for each element that is added to a window.

- The onEventTime() method is called when a registered event-time timer fires.

- The onProcessingTime() method is called when a registered processing-time timer fires.

- The onMerge() method is relevant for stateful triggers and merges the states of two triggers when their corresponding windows merge, e.g. when using session windows.

- Finally, the clear() method performs any action needed upon removal of the corresponding window.
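As an illustration of how onElement() and clear() cooperate, the following plain-Scala sketch models a count-based trigger as a small state machine. The types Result, Continue and Fire and the class CountTrigger are hypothetical miniatures written for this example; they are not Flink's Trigger or TriggerResult classes, and resetting the counter after a firing is a choice made here for illustration.

```scala
object CountTriggerSketch {
  // Hypothetical, reduced set of trigger decisions.
  sealed trait Result
  case object Continue extends Result // keep collecting elements
  case object Fire extends Result     // evaluate the window function now

  // Fires on every `max`-th element and then starts counting again.
  final class CountTrigger(max: Int) {
    private var count = 0

    def onElement(): Result = {
      count += 1
      if (count >= max) { count = 0; Fire } else Continue
    }

    // Called when the window is removed: drop any trigger state.
    def clear(): Unit = count = 0
  }

  // Decisions taken for the first `n` elements of a window.
  def decisions(n: Int, max: Int): List[Result] = {
    val trigger = new CountTrigger(max)
    List.fill(n)(trigger.onElement())
  }

  def main(args: Array[String]): Unit =
    println(decisions(4, 3)) // List(Continue, Continue, Fire, Continue)
}
```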

DeltaTrigger

var env=StreamExecutionEnvironment.getExecutionEnvironment

val deltaTrigger = DeltaTrigger.of[(String,Double),GlobalWindow](2.0,new DeltaFunction[(String,Double)] {

override def getDelta(oldDataPoint: (String, Double), newDataPoint: (String, Double)): Double = {

newDataPoint._2-oldDataPoint._2

}

},createTypeInformation[(String,Double)].createSerializer(env.getConfig))

env.socketTextStream("centos",7788)

.map(_.split("\\s+"))

.map(ts=>(ts(0),ts(1).toDouble))

.keyBy(0)

.window(GlobalWindows.create())

.trigger(deltaTrigger)

.reduce((v1:(String,Double),v2:(String,Double))=>(v1._1,v1._2+v2._2))

.print()

env.execute("window")

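Evictors

An evictor can remove elements from a window after the trigger fires and before and/or after the window function is applied. To do so, the Evictor interface has two methods: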

public interface Evictor<T, W extends Window> extends Serializable {

void evictBefore(Iterable<TimestampedValue<T>> elements, int size, W window, EvictorContext evictorContext);

void evictAfter(Iterable<TimestampedValue<T>> elements, int size, W window, EvictorContext evictorContext);

}

ErrorEvitor

package com.hw.demo10

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.scala.function.AllWindowFunction

import org.apache.flink.streaming.api.windowing.assigners.TumblingProcessingTimeWindows

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.api.windowing.windows.TimeWindow

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

import org.apache.flink.util.Collector

/**

* @author: fql

* @date 2019/10/21 21:56

* @type:

*/

object ErrorEvitor {

def main(args: Array[String]): Unit = {

val props = new Properties()

props.setProperty("bootstrap.servers", "CentOS:9092")

props.setProperty("group.id", "g1")

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.addSource(new FlinkKafkaConsumer("topic01",new SimpleStringSchema(),props))

.windowAll(TumblingProcessingTimeWindows.of(Time.seconds(1)))

.evictor(new ErrorEvictor(true))

.apply(new AllWindowFunction[String,String,TimeWindow] {

override def apply(window: TimeWindow, input: Iterable[String], out: Collector[String]): Unit = {

for(e<-input){

out.collect(e)

}

println()

}

}).print()

fsEnv.execute("window")

}

}

package com.hw.demo10

import java.lang

import org.apache.flink.streaming.api.windowing.evictors.Evictor

import org.apache.flink.streaming.api.windowing.windows.TimeWindow

import org.apache.flink.streaming.runtime.operators.windowing.TimestampedValue

/**

* @author: fql

* @date 2019/10/21 21:47

* @type:

*/

class ErrorEvictor(isBefore:Boolean)extends Evictor[String,TimeWindow] {

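/**

* Called before the window function is applied.

* @param elements the elements currently in the pane

* @param size the number of elements currently in the pane

* @param window The {@link Window}

* @param evictorContext the evictor context

*/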

override def evictBefore(elements: lang.Iterable[TimestampedValue[String]],

size: Int,

window: TimeWindow,

evictorContext: Evictor.EvictorContext): Unit = {

if (isBefore) {

evictor(elements, size, window, evictorContext)

}

}

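/**

* Called after the window function has been applied.

* @param elements the elements currently in the pane

* @param size the number of elements currently in the pane

* @param window The {@link Window}

* @param evictorContext the evictor context

*/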

override def evictAfter(elements: lang.Iterable[TimestampedValue[String]], size: Int, window: TimeWindow, evictorContext: Evictor.EvictorContext): Unit = {

if (!isBefore) {

evictor(elements, size, window, evictorContext)

}

}

private def evictor(elements: lang.Iterable[TimestampedValue[String]], size: Int, window: TimeWindow, evictorContext: Evictor.EvictorContext): Unit = {

val iterator = elements.iterator()

while (iterator.hasNext) {

val it = iterator.next()

if (it.getValue.contains("error")) {

//remove records that contain "error"

iterator.remove()

}

}

}

}

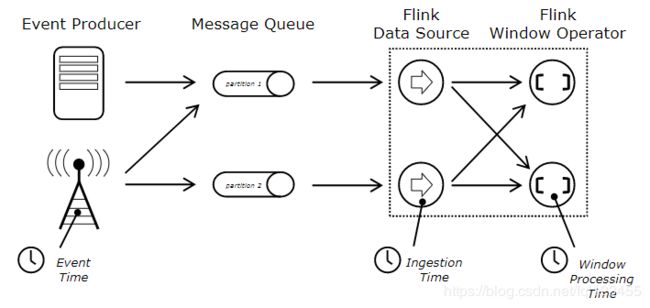

Event Time

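Flink supports windows with the following time semantics: processing time, event time and ingestion time.

Processing time: windows are computed using the clock of the node that processes the record.

Event time: windows are computed using the time at which the event was produced, which is the most accurate option.

Ingestion time: the time at which the record enters Flink, usually assigned by the SourceFunction.

Flink uses processing time by default, so to use event time or ingestion time you need to set the time characteristic explicitly: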

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

//window operations go here

fsEnv.execute("event time")

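Event-time processing requires a watermark strategy: for every stream the system needs a watermark time T, and a window fires only once its end time <= watermark(T). In the examples here the watermark computation is implemented by the user through an assigner class. Watermarks can be generated in two ways: (1) periodically, at a fixed interval (recommended); (2) per record (punctuated), where every incoming record can update the watermark immediately.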
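The relationship between observed timestamps, the watermark and window firing can be sketched without Flink. The snippet below follows the rule stated above (watermark = largest timestamp seen minus a fixed out-of-orderness bound, and a window fires once its end time <= watermark). The names are hypothetical, and the 2000 ms bound simply matches the assigner classes used in this article.

```scala
object WatermarkSketch {
  // Watermark after seeing `timestamps` (ms) in arrival order.
  def watermark(timestamps: Seq[Long], maxOutOfOrderness: Long): Long =
    timestamps.foldLeft(0L)((a, b) => math.max(a, b)) - maxOutOfOrderness

  // Rule from the text: a window may fire once its end time is <= the watermark.
  def canFire(windowEnd: Long, wm: Long): Boolean = windowEnd <= wm

  def main(args: Array[String]): Unit = {
    val bound = 2000L
    val seen = Seq(1000L, 5000L, 3000L) // 3000 arrives out of order
    val wm = watermark(seen, bound)
    println(wm)                         // 3000
    println(canFire(4000L, wm))         // false: the window [0, 4000) stays open
    println(canFire(4000L, watermark(seen :+ 6000L, bound))) // true: the watermark reached 4000
  }
}
```

The out-of-order record at 3000 does not move the watermark backwards, because only the maximum timestamp seen so far matters.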

Periodic

package com.hw.demo11

import org.apache.flink.streaming.api.functions.AssignerWithPeriodicWatermarks

import org.apache.flink.streaming.api.watermark.Watermark

/**

* @author: fql

* @date 2019/10/21 22:29

* @type: periodic watermarks

*/

class AccessLogAssignerWithPeriodicWatermarks extends AssignerWithPeriodicWatermarks[AccessLog] {

private var maxSeeTime:Long=0L

private var maxOrderness:Long=2000L

override def getCurrentWatermark: Watermark = {

return new Watermark(maxSeeTime-maxOrderness)

}

override def extractTimestamp(element: AccessLog, previousElementTimestamp: Long): Long = {

maxSeeTime=Math.max(maxSeeTime,element.timestamp)

element.timestamp

}

}

Punctuated (per record)

package com.hw.demo11

import org.apache.flink.streaming.api.functions.AssignerWithPunctuatedWatermarks

import org.apache.flink.streaming.api.watermark.Watermark

/**

* @author: fql

* @date 2019/10/21 22:36

* @type: punctuated (per-record) watermarks

*/

class AccessLogAssignerWithPunctuatedWatermarks extends AssignerWithPunctuatedWatermarks[AccessLog]{

private var maxSeeTime:Long=0L

private var maxOrderness:Long=2000L

override def checkAndGetNextWatermark(lastElement: AccessLog, extractedTimestamp: Long): Watermark = {

new Watermark(maxSeeTime-maxOrderness)

}

override def extractTimestamp(element: AccessLog, previousElementTimestamp: Long): Long = {

maxSeeTime=Math.max(maxSeeTime,element.timestamp)

element.timestamp

}

}

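Watermark

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

fsEnv.getConfig.setAutoWatermarkInterval(1000)//recompute the watermark periodically, once per second

fsEnv.setParallelism(1)

//input line format: <channel> <timestamp in ms>, parsed into AccessLog(channel: String, timestamp: Long)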

fsEnv.socketTextStream("CentOS",8888)

.map(line=> line.split("\\s+"))

.map(ts=>AccessLog(ts(0),ts(1).toLong))

.assignTimestampsAndWatermarks(new AccessLogAssignerWithPeriodicWatermarks)

.keyBy(accessLog=>accessLog.channel)

.window(TumblingEventTimeWindows.of(Time.seconds(4)))

.process(new ProcessWindowFunction[AccessLog,String,String,TimeWindow] {

override def process(key: String, context: Context, elements: Iterable[AccessLog], out: Collector[String]): Unit = {

val sdf = new SimpleDateFormat("HH:mm:ss")

val window = context.window

val currentWatermark = context.currentWatermark

println("window:"+sdf.format(window.getStart)+"\t"+sdf.format(window.getEnd)+" \t watermarker:"+sdf.format(currentWatermark))

for(e<-elements){

val AccessLog(channel:String,timestamp:Long)=e

out.collect(channel+"\t"+sdf.format(timestamp))

}

}

})

.print()

fsEnv.execute("event time")

Handling Late Data

Flink can handle late data: if watermark - window end < allowed lateness, a late record can still take part in the window computation; otherwise Flink discards it as too late.
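The rule above can be written as a small classification function. This is a plain-Scala sketch with hypothetical names that applies the condition exactly as stated in the text; Flink's internal check may differ in boundary details, so treat the edge cases as an assumption.

```scala
object LatenessSketch {
  sealed trait Fate
  case object OnTime extends Fate  // the window has not fired yet
  case object Late extends Fate    // the window fired, but is kept and can be updated
  case object TooLate extends Fate // the window is gone: drop or side-output the record

  // Fate of a record belonging to the window ending at `windowEnd` (all values in ms).
  def classify(windowEnd: Long, watermark: Long, allowedLateness: Long): Fate =
    if (watermark < windowEnd) OnTime
    else if (watermark - windowEnd < allowedLateness) Late
    else TooLate

  def main(args: Array[String]): Unit = {
    // Window [0, 4000) with 2 s of allowed lateness, as in the example that follows.
    println(classify(4000L, 3000L, 2000L)) // OnTime
    println(classify(4000L, 5000L, 2000L)) // Late
    println(classify(4000L, 6000L, 2000L)) // TooLate
  }
}
```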

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

fsEnv.getConfig.setAutoWatermarkInterval(1000)//recompute the watermark periodically, once per second

fsEnv.setParallelism(1)

//input line format: <channel> <timestamp in ms>

fsEnv.socketTextStream("CentOS",8888)

.map(line=> line.split("\\s+"))

.map(ts=>AccessLog(ts(0),ts(1).toLong))

.assignTimestampsAndWatermarks(new AccessLogAssignerWithPeriodicWatermarks)

.keyBy(accessLog=>accessLog.channel)

.window(TumblingEventTimeWindows.of(Time.seconds(4)))

.allowedLateness(Time.seconds(2))

.process(new ProcessWindowFunction[AccessLog,String,String,TimeWindow] {

override def process(key: String, context: Context, elements: Iterable[AccessLog], out: Collector[String]): Unit = {

val sdf = new SimpleDateFormat("HH:mm:ss")

val window = context.window

val currentWatermark = context.currentWatermark

println("window:"+sdf.format(window.getStart)+"\t"+sdf.format(window.getEnd)+" \t watermarker:"+sdf.format(currentWatermark))

for(e<-elements){

val AccessLog(channel:String,timestamp:Long)=e

out.collect(channel+"\t"+sdf.format(timestamp))

}

}

})

.print()

fsEnv.execute("event time")

By default Flink drops too-late data. To get hold of these records, route them to a side output:

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

fsEnv.getConfig.setAutoWatermarkInterval(1000)//recompute the watermark periodically, once per second

fsEnv.setParallelism(1)

val lateTag = new OutputTag[AccessLog]("latetag")

//input line format: <channel> <timestamp in ms>

val keyedWindowStream=fsEnv.socketTextStream("CentOS",8888)

.map(line=> line.split("\\s+"))

.map(ts=>AccessLog(ts(0),ts(1).toLong))

.assignTimestampsAndWatermarks(new AccessLogAssignerWithPeriodicWatermarks)

.keyBy(accessLog=>accessLog.channel)

.window(TumblingEventTimeWindows.of(Time.seconds(4)))

.allowedLateness(Time.seconds(2))

.sideOutputLateData(lateTag)

.process(new ProcessWindowFunction[AccessLog,String,String,TimeWindow] {

override def process(key: String, context: Context, elements: Iterable[AccessLog], out: Collector[String]): Unit = {

val sdf = new SimpleDateFormat("HH:mm:ss")

val window = context.window

val currentWatermark = context.currentWatermark

println("window:"+sdf.format(window.getStart)+"\t"+sdf.format(window.getEnd)+" \t watermarker:"+sdf.format(currentWatermark))

for(e<-elements){

val AccessLog(channel:String,timestamp:Long)=e

out.collect(channel+"\t"+sdf.format(timestamp))

}

}

})

keyedWindowStream.print("on time:")

keyedWindowStream.getSideOutput(lateTag).print("too late:")

fsEnv.execute("event time")

Note: when an operator receives several watermarks (for example from multiple parallel inputs), it uses the lowest one.
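A one-function sketch of this rule, in plain Scala with hypothetical names: the event-time clock of an operator is the minimum of the latest watermarks of its input channels, so a single slow input holds back all windows downstream.

```scala
object MinWatermarkSketch {
  // Event-time clock of an operator, given the latest watermark of each input channel.
  def operatorWatermark(channelWatermarks: Seq[Long]): Long =
    if (channelWatermarks.isEmpty) Long.MinValue else channelWatermarks.min

  def main(args: Array[String]): Unit =
    println(operatorWatermark(Seq(5000L, 3000L, 8000L))) // 3000
}
```

This is one reason the examples in this article set the parallelism to 1: with several parallel sources, an idle source would keep the minimum low and windows would not fire.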

Joining

Window Join

Basic syntax

stream.join(otherStream)

.where(<KeySelector>)

.equalTo(<KeySelector>)

.window(<WindowAssigner>)

.apply(<JoinFunction>)
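Conceptually, a window join pairs up elements that have the same key and fall into the same window, and emits nothing for elements without a partner. The plain-Scala sketch below models this for tumbling windows over in-memory lists. The case classes mirror the ones used in the examples that follow, while windowStart and tumblingJoin are hypothetical helpers written for this illustration.

```scala
object WindowJoinSketch {
  case class User(id: String, name: String, timestamp: Long)
  case class OrderItem(uid: String, name: String, price: Double, timestamp: Long)

  // Start of the tumbling window containing `ts` (non-negative ms, no offset).
  def windowStart(ts: Long, size: Long): Long = ts - ts % size

  // Inner join: a pair is emitted only when key and window both match.
  def tumblingJoin(users: Seq[User], orders: Seq[OrderItem], size: Long)
      : Seq[(String, String, String, Double)] =
    for {
      u <- users
      o <- orders
      if u.id == o.uid
      if windowStart(u.timestamp, size) == windowStart(o.timestamp, size)
    } yield (u.id, u.name, o.name, o.price)

  def main(args: Array[String]): Unit = {
    val users = Seq(User("001", "zhangsan", 1000L))
    val orders = Seq(
      OrderItem("001", "apple", 4.5, 3000L), // same 4 s window as the user record
      OrderItem("001", "pear", 2.0, 5000L))  // next window: no match
    println(tumblingJoin(users, orders, 4000L)) // List((001,zhangsan,apple,4.5))
  }
}
```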

Tumbling Window Join

package com.hw.demo12

import java.util.Properties

import org.apache.flink.api.common.serialization.SimpleStringSchema

import org.apache.flink.streaming.api.scala._

import org.apache.flink.streaming.api.windowing.assigners.TumblingEventTimeWindows

import org.apache.flink.streaming.api.windowing.time.Time

import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer

/**

* @author: fql

* @date 2019/10/22 8:47

* @type:

*/

object TumblingWindowJoin {

def main(args: Array[String]): Unit = {

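val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

//event-time windows rely on watermarks; where processing time is the default, switch to event time explicitly
fsEnv.setStreamTimeCharacteristic(org.apache.flink.streaming.api.TimeCharacteristic.EventTime)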

val props = new Properties()

props.setProperty("bootstrap.servers", "CentOS:9092")

props.setProperty("group.id", "g1")

fsEnv.setParallelism(1) //set the parallelism

val userStream = fsEnv.addSource(new FlinkKafkaConsumer("topic01", new SimpleStringSchema(), props))

.map(line => line.split("\\s+"))

.map(ts => User(ts(0), ts(1), ts(2).toLong))

.assignTimestampsAndWatermarks(new UserAssignerWithPeriodicWatermarks)

.setParallelism(1)

val prop = new Properties()

prop.setProperty("bootstrap.servers", "CentOS:9092")

prop.setProperty("group.id", "g1")

val orderStream = fsEnv.addSource(new FlinkKafkaConsumer("topic02", new SimpleStringSchema(), prop))

.map(line => line.split("\\s+"))

.map(ts => OrderItem(ts(0), ts(1), ts(2).toDouble, ts(3).toLong))

.assignTimestampsAndWatermarks(new OrderItemWithPeriodicWatermarks)

.setParallelism(1)

userStream.join(orderStream)

.where(user=>user.id)

.equalTo(orderStream=>orderStream.uid)

.window(TumblingEventTimeWindows.of(Time.seconds(4)))

.apply((u,o)=>{

(u.id,u.name,o.name,o.price,o.timestamp)

})

.print()

fsEnv.execute("FlinkStreamTumblingWindowJoin")

}

}

case class OrderItem(uid:String,name:String,price:Double,timestamp:Long)

case class User(id:String,name:String,timestamp:Long)

package com.hw.demo12

import java.text.SimpleDateFormat

import org.apache.flink.streaming.api.functions.AssignerWithPeriodicWatermarks

import org.apache.flink.streaming.api.watermark.Watermark

/**

* @author: fql

* @date 2019/10/22 9:12

* @type:

*/

class OrderItemWithPeriodicWatermarks extends AssignerWithPeriodicWatermarks[OrderItem] {

private var maxSeeTime:Long=0L

private var maxOrderness:Long=2000L

val sdf = new SimpleDateFormat("HH:mm:ss")

override def getCurrentWatermark: Watermark = {

return new Watermark(maxSeeTime-maxOrderness)

}

override def extractTimestamp(element: OrderItem, previousElementTimestamp: Long): Long = {

maxSeeTime=Math.max(maxSeeTime,element.timestamp)

element.timestamp

}

}

package com.hw.demo12

import java.text.SimpleDateFormat

import org.apache.flink.streaming.api.functions.AssignerWithPeriodicWatermarks

import org.apache.flink.streaming.api.watermark.Watermark

/**

* @author: fql

* @date 2019/10/22 9:02

* @type: watermark assigner

*/

class UserAssignerWithPeriodicWatermarks extends AssignerWithPeriodicWatermarks[User]{

private var maxSeeTime:Long=0L

private var maxOrderness:Long=2000L

val sdf = new SimpleDateFormat("HH:mm:ss")

//returns the current watermark

override def getCurrentWatermark: Watermark = {

return new Watermark(maxSeeTime-maxOrderness)

}

//extracts the event timestamp and tracks the largest timestamp seen so far

override def extractTimestamp(element: User, previousElementTimestamp: Long): Long = {

maxSeeTime=Math.max(maxSeeTime,element.timestamp)

element.timestamp

}

}

Sliding Window Join

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

fsEnv.getConfig.setAutoWatermarkInterval(1000)

fsEnv.setParallelism(1)

//001 zhangsan 1571627570000

val userStream = fsEnv.socketTextStream("CentOS",7788)

.map(line=>line.split("\\s+"))

.map(ts=>User(ts(0),ts(1),ts(2).toLong))

.assignTimestampsAndWatermarks(new UserAssignerWithPeriodicWatermarks)

.setParallelism(1)

//001 apple 4.5 1571627570000

val orderStream = fsEnv.socketTextStream("CentOS",8899)

.map(line=>line.split("\\s+"))

.map(ts=>OrderItem(ts(0),ts(1),ts(2).toDouble,ts(3).toLong))

.assignTimestampsAndWatermarks(new OrderItemWithPeriodicWatermarks)

.setParallelism(1)

userStream.join(orderStream)

.where(user=>user.id)

.equalTo(orderItem=> orderItem.uid)

.window(SlidingEventTimeWindows.of(Time.seconds(4),Time.seconds(2)))

.apply((u,o)=>{

(u.id,u.name,o.name,o.price,o.timestamp)

})

.print()

fsEnv.execute("FlinkStreamSlidingWindowJoin")

Session Window Join

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

fsEnv.getConfig.setAutoWatermarkInterval(1000)

fsEnv.setParallelism(1)

//001 zhangsan 1571627570000

val userStream = fsEnv.socketTextStream("CentOS",7788)

.map(line=>line.split("\\s+"))

.map(ts=>User(ts(0),ts(1),ts(2).toLong))

.assignTimestampsAndWatermarks(new UserAssignerWithPeriodicWatermarks)

.setParallelism(1)

//001 apple 4.5 1571627570000

val orderStream = fsEnv.socketTextStream("CentOS",8899)

.map(line=>line.split("\\s+"))

.map(ts=>OrderItem(ts(0),ts(1),ts(2).toDouble,ts(3).toLong))

.assignTimestampsAndWatermarks(new OrderItemWithPeriodicWatermarks)

.setParallelism(1)

userStream.join(orderStream)

.where(user=>user.id)

.equalTo(orderItem=> orderItem.uid)

.window(EventTimeSessionWindows.withGap(Time.seconds(5)))

.apply((u,o)=>{

(u.id,u.name,o.name,o.price,o.timestamp)

})

.print()

fsEnv.execute("FlinkStreamSessionWindowJoin")

Interval Join

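An interval join joins elements of two streams (call them A and B) that share a common key, where the timestamp of each element of B lies within a relative time interval around the timestamp of an element of A.

This can also be expressed more formally as b.timestamp ∈ [a.timestamp + lowerBound; a.timestamp + upperBound] or a.timestamp + lowerBound <= b.timestamp <= a.timestamp + upperBound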
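The condition can be checked directly in plain Scala. The sketch below (hypothetical names, timestamps in milliseconds, keys omitted for brevity) applies the inequality to every pair of timestamps, using bounds of -1 s and +1 s like the between(...) call in the example that follows.

```scala
object IntervalJoinSketch {
  // True when b lies in [a + lowerBound, a + upperBound], both bounds inclusive.
  def inInterval(a: Long, b: Long, lowerBound: Long, upperBound: Long): Boolean =
    a + lowerBound <= b && b <= a + upperBound

  // All (a, b) timestamp pairs that satisfy the interval condition.
  def join(as: Seq[Long], bs: Seq[Long], lowerBound: Long, upperBound: Long): Seq[(Long, Long)] =
    for {
      a <- as
      b <- bs
      if inInterval(a, b, lowerBound, upperBound)
    } yield (a, b)

  def main(args: Array[String]): Unit =
    // Prints List((5000,4000), (5000,6000)); 3500 and 6001 fall outside the interval.
    println(join(Seq(5000L), Seq(3500L, 4000L, 6000L, 6001L), -1000L, 1000L))
}
```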

val fsEnv = StreamExecutionEnvironment.getExecutionEnvironment

fsEnv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)

fsEnv.getConfig.setAutoWatermarkInterval(1000)

fsEnv.setParallelism(1)

//001 zhangsan 1571627570000

val userStream = fsEnv.socketTextStream("CentOS",7788)

.map(line=>line.split("\\s+"))

.map(ts=>User(ts(0),ts(1),ts(2).toLong))

.assignTimestampsAndWatermarks(new UserAssignerWithPeriodicWatermarks)

.setParallelism(1)

.keyBy(_.id)

//001 apple 4.5 1571627570000

val orderStream = fsEnv.socketTextStream("CentOS",8899)

.map(line=>line.split("\\s+"))

.map(ts=>OrderItem(ts(0),ts(1),ts(2).toDouble,ts(3).toLong))

.assignTimestampsAndWatermarks(new OrderItemWithPeriodicWatermarks)

.setParallelism(1)

.keyBy(_.uid)

userStream.intervalJoin(orderStream)

.between(Time.seconds(-1),Time.seconds(1))

.process(new ProcessJoinFunction[User,OrderItem,String]{

override def processElement(left: User, right: OrderItem, ctx: ProcessJoinFunction[User, OrderItem, String]#Context, out: Collector[String]): Unit = {

println(left+" \t"+right)

out.collect(left.id+" "+left.name+" "+right.name+" "+ right.price+" "+right.timestamp)

}

})

.print()

fsEnv.execute("FlinkStreamIntervalJoin")