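Kubernetes CNI Networking Explained

1. Introduction to CNI

Kubernetes has never had a dedicated module responsible for network configuration; it expects the network to already be configured on the hosts.

Kubernetes imposes the following requirements on the network:

- Containers can communicate with each other, whether they run on the same host or on different hosts.

- Containers and all nodes in the cluster can communicate with each other directly.

Kubernetes networking is moving toward integrating different network solutions through plugins, and CNI (Container Network Interface) is the result of that effort. CNI focuses solely on connecting containers to the network and on releasing the allocated resources when containers are destroyed. Because it provides only this narrow framework, CNI can support a large number of different network modes, and plugins are easy to implement.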

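2. Network Creation Steps

After Kubernetes creates a Pod, CNI provides networking for it in three main steps:

- The kubelet runtime creates a network namespace.

- The kubelet invokes the CNI plugin and specifies the network type (the network type determines which CNI plugin is used).

- The CNI plugin creates a veth pair, checks the IPAM type and data, invokes the IPAM plugin to obtain a free IP address, and assigns that address to the container's network interface.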
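The IPAM step in the last bullet amounts to picking an unused address from a pool and remembering who holds it. Here is a minimal Python sketch of that idea, assuming a single pool and in-memory bookkeeping. Real IPAM plugins such as calico-ipam persist allocations in a datastore (etcd in this article), and Calico hands out addresses in per-host blocks, which this sketch does not model. The class name `SimpleIPAM` is hypothetical.

```python
import ipaddress
from typing import Dict


class SimpleIPAM:
    """Toy IPAM: hands out the lowest free host address in one pool.

    Allocations live only in memory; a real IPAM plugin persists them.
    """

    def __init__(self, cidr: str) -> None:
        self.pool = ipaddress.ip_network(cidr)
        # Allocated address -> ID of the container that holds it.
        self.allocated: Dict[ipaddress.IPv4Address, str] = {}

    def allocate(self, container_id: str) -> ipaddress.IPv4Address:
        """CNI ADD path: reserve the first unused address for the container."""
        for ip in self.pool.hosts():
            if ip not in self.allocated:
                self.allocated[ip] = container_id
                return ip
        raise RuntimeError(f"pool {self.pool} is exhausted")

    def release(self, container_id: str) -> None:
        """CNI DEL path: free every address held by the container."""
        for ip in [ip for ip, cid in self.allocated.items() if cid == container_id]:
            del self.allocated[ip]


if __name__ == "__main__":
    ipam = SimpleIPAM("192.168.0.0/29")
    print(ipam.allocate("pod-a"))  # 192.168.0.1
    print(ipam.allocate("pod-b"))  # 192.168.0.2
```

Releasing on DEL matters as much as allocating on ADD: without it, the pool would leak one address per deleted Pod.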

2.1 The kubelet runtime creates the network namespace

The kubelet first creates the pause container, creates a network namespace for it, and then associates that network namespace with the pause container.

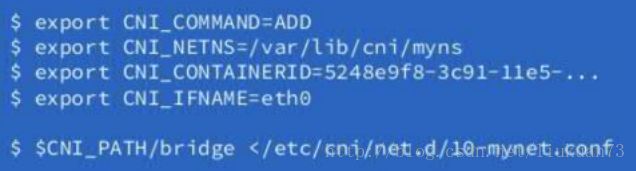

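2.2 The kubelet invokes the CNI plugin

When invoking the CNI plugin, the kubelet passes the CNI network configuration to the plugin on its standard input.

Executing a CNI plugin requires the following environment variables: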

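```shell
# Operation: ADD attaches the network interface, DEL removes it
CNI_COMMAND=ADD

# Container ID
CNI_CONTAINERID=xxxxxxxxxxxxxxxxxxx

# Host path of the container's network namespace
CNI_NETNS=/proc/4390/ns/net

# CNI arguments separated by semicolons, mainly Pod-related information
CNI_ARGS=IgnoreUnknown=1;K8S_POD_NAMESPACE=default;K8S_POD_NAME=22-my-nginx-2523304718-7stgs;K8S_POD_INFRA_CONTAINER_ID=xxxxxxxxxxxxxxxx

# Name of the network interface inside the container
CNI_IFNAME=eth0

# Path to the CNI binaries
CNI_PATH=/opt/cni/bin
```

2.3 The kubelet invokes the corresponding CNI plugin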
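Putting these variables together, a runtime invokes a CNI plugin roughly as in the following Python sketch. This follows the exec protocol described in the CNI specification:

- the environment variables above are set;
- the network configuration (such as the 10-calico.conf shown in section 4.1) is written to the plugin's stdin as JSON;
- the binary is the one named by the config's "type" field, looked up in CNI_PATH;
- the result comes back as JSON on stdout.

It is an illustration only. The kubelet itself is written in Go and uses the libcni library, and the function names here are hypothetical.

```python
import json
import os
import subprocess
from typing import Dict


def parse_cni_args(cni_args: str) -> Dict[str, str]:
    """Split CNI_ARGS ("K1=V1;K2=V2;...") into a dict."""
    result: Dict[str, str] = {}
    for item in filter(None, cni_args.split(";")):
        key, sep, value = item.partition("=")
        if not sep:
            raise ValueError(f"malformed CNI_ARGS item: {item!r}")
        result[key] = value
    return result


def build_cni_env(command: str, container_id: str, netns: str,
                  ifname: str, cni_args: str, cni_path: str) -> Dict[str, str]:
    """Collect the environment variables listed above."""
    return {
        "CNI_COMMAND": command,  # "ADD" or "DEL"
        "CNI_CONTAINERID": container_id,
        "CNI_NETNS": netns,
        "CNI_ARGS": cni_args,
        "CNI_IFNAME": ifname,
        "CNI_PATH": cni_path,
    }


def find_plugin(plugin_type: str, cni_path: str) -> str:
    """Look for an executable named after the config's "type" in each CNI_PATH dir."""
    for directory in cni_path.split(os.pathsep):
        candidate = os.path.join(directory, plugin_type)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    raise FileNotFoundError(f"plugin {plugin_type!r} not found in {cni_path}")


def invoke_plugin(env: Dict[str, str], net_conf: dict) -> dict:
    """Run the plugin: network config as JSON on stdin, result JSON on stdout."""
    binary = find_plugin(net_conf["type"], env["CNI_PATH"])
    proc = subprocess.run(
        [binary],
        input=json.dumps(net_conf).encode(),
        env={**os.environ, **env},
        stdout=subprocess.PIPE,
        stderr=subprocess.PIPE,
        check=True,  # a non-zero exit status means the plugin reported an error
    )
    # ADD prints the resulting interfaces and IPs; DEL usually prints nothing.
    return json.loads(proc.stdout) if proc.stdout.strip() else {}


if __name__ == "__main__":
    args = "IgnoreUnknown=1;K8S_POD_NAMESPACE=default;K8S_POD_NAME=22-my-nginx-2523304718-7stgs"
    print(parse_cni_args(args)["K8S_POD_NAME"])
```

Only `invoke_plugin` touches the system; it needs a real plugin binary (for example /opt/cni/bin/calico) and a real network namespace, so it is not called in the example above.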

3. CNI Network Plugins

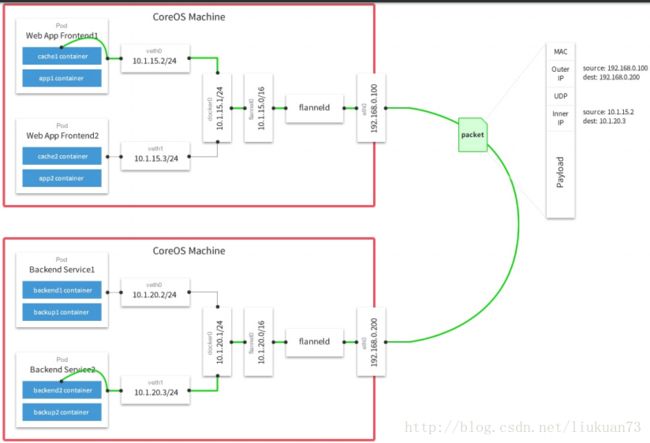

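3.1 flannel

Flannel is not the focus of this article, so its principle is only illustrated in the figure and not covered in detail.

3.2 Calico

Calico plays an important role in both the CNM and the CNI camps. It offers good performance, strong isolation, and solid ACL control.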

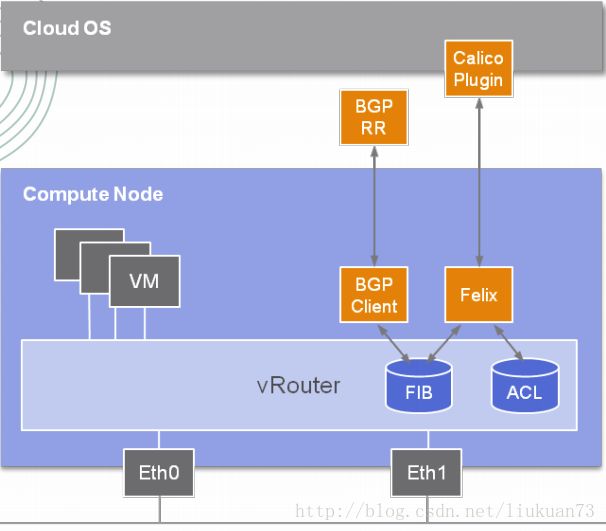

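Calico is a layer-3 data-center networking solution. It is based on L3 routing and needs no layer-2 bridge. It also integrates easily with IaaS cloud platforms such as OpenStack, providing efficient and controllable communication among VMs, containers, and bare-metal hosts.

In BGP mode, Calico uses the Linux kernel on each compute node to implement an efficient vRouter that forwards data. Each vRouter uses BGP to propagate the routes of the workloads running on it throughout the Calico network. Small deployments can peer directly with each other, while large deployments can use designated BGP route reflectors. All traffic between workloads is carried by plain IP routing.

Calico nodes can be networked directly over the existing data-center fabric (L2 or L3) without extra NAT, port mapping, tunnels, or VXLAN overlay networks, so both scalability and performance are good. Compared with other container networking solutions, Calico has one more major advantage: rich and flexible network policy. By dynamically defining ACL rules on each node to control the packets entering and leaving containers, users can implement multi-tenant isolation of workloads, security groups, and other reachability restrictions to meet business requirements.

As shown in the figure above, this keeps the solution simple and controllable. Because packets are never encapsulated or decapsulated, it saves CPU resources and improves overall network performance.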

3.2.1 Calico Architecture

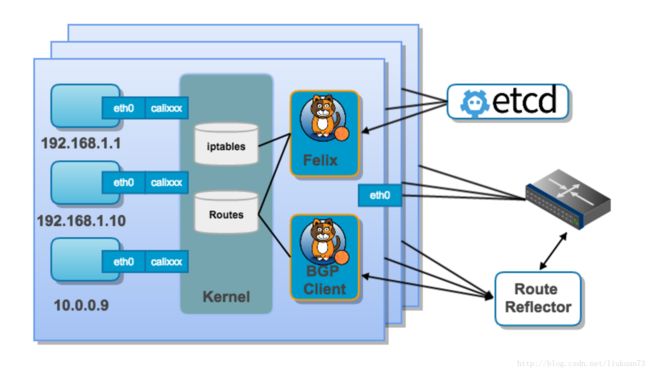

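With the figure above in mind, let's walk through Calico's core components in BGP mode:

- Felix (Calico agent): a daemon that runs on every node that hosts workloads. It is mainly responsible for configuring routes, ACLs, and related settings to keep endpoints connected. Depending on the orchestrator it integrates with, Felix provides the following functions:

  - Interface management: passes interface information to the kernel so that the kernel handles the interface's link correctly. In special cases it also responds to ARP requests, enables IP forwarding, and so on.

  - Route management: programs routes to the endpoints on the node into the Linux kernel FIB (forwarding information base), ensuring that packets reach the node's endpoints correctly. My understanding is that endpoints are the virtual network interfaces on the node.

  - ACL management: sets up access control lists in the kernel so that only legitimate packets are sent over the link, ensuring security.

  - State reporting: writes the node's network state into etcd.

- The orchestrator plugin: integrates Calico more closely with the orchestration system (Kubernetes, Mesos, OpenStack, etc.). (The purpose of these plugins is to bind Calico more tightly into the orchestrator, allowing users to manage the Calico network just as they'd manage network tools that were built into the orchestrator.) Its work includes:

  - API translation: orchestrators such as Kubernetes and OpenStack have their own APIs; the plugin translates them into Calico's data model and stores the result in Calico's datastore.

  - Feedback: reports network state information back to the orchestration system above it.

- etcd: a distributed key-value store, mainly responsible for keeping network metadata consistent and ensuring that Calico's network state is accurate. It is used for:

  - Data storage

  - Communication between components

- BGP client (BIRD): distributes the routes that Felix writes into the kernel to the rest of the Calico network, ensuring that workloads can communicate with each other. BIRD actively reads the routes Felix has set on the host and advertises them via BGP.

- BGP route reflector (BIRD): used in large deployments to replace the full-mesh mode. In full-mesh mode every BGP client peers with every other client, so the number of connections grows as N^2. With a reflector, clients peer only with the reflector and do not need to connect pairwise; one or more route reflectors perform centralized route distribution. Multiple reflectors can be deployed for redundancy. Route reflectors are control-plane only: data traffic between endpoints never passes through them.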
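The N^2 growth of a full mesh, compared with a route-reflector topology, can be quantified with a few lines of Python. This is back-of-the-envelope arithmetic, not Calico code. The sketch assumes the reflectors run on dedicated hosts, that every node peers with every reflector, and that the reflectors form a full mesh among themselves.

```python
def full_mesh_sessions(nodes: int) -> int:
    """Full mesh: every pair of BGP speakers peers directly, n*(n-1)/2 sessions."""
    return nodes * (nodes - 1) // 2


def route_reflector_sessions(nodes: int, reflectors: int) -> int:
    """Each node peers with every reflector; the reflectors also mesh among themselves."""
    return nodes * reflectors + full_mesh_sessions(reflectors)


if __name__ == "__main__":
    for n in (10, 100, 500):
        print(n, full_mesh_sessions(n), route_reflector_sessions(n, reflectors=2))
```

At 100 nodes the full mesh needs 4950 BGP sessions, while two redundant reflectors need 201. The reflector count grows linearly with the number of nodes.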

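birdc is BIRD's client (the calico/node image ships the lightweight variant, birdcl) and can be used to inspect BIRD's state, for example:

- Show the configured protocols: birdcl -s /var/run/calico/bird.ctl show protocols

- Show all routes: birdcl -s /var/run/calico/bird.ctl show route

See birdc's help for more commands.

For architecture details, see the official documentation.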

3.2.2 Calico Modes

3.2.2.1 BGP Mode

Traffic analysis in BGP mode

<1> Traffic from the container to the host

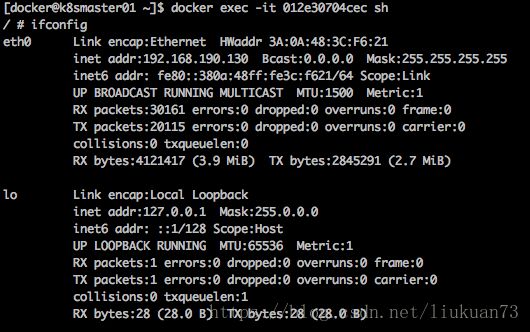

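The CNI plugin creates a veth pair in the specified network namespace. The veth end inside the container is assigned an IP address. Inside the container you can see that this address comes from the --cluster-cidr=192.168.0.0/16 range specified when kube-proxy was started: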

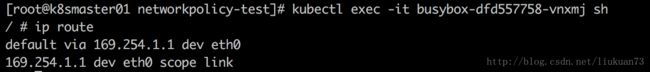

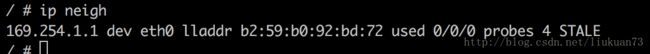

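The plugin also sets 169.254.1.1 as the default gateway, which can be seen inside the container.

Because 169.254.1.1 is not a real address (no interface owns it), the CNI plugin also adds a static ARP entry inside the container: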

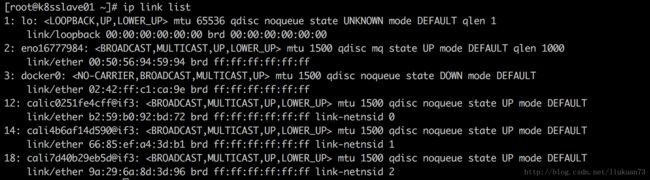

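Looking at the network interfaces on the host, you can find a calixxx interface whose MAC address matches the one above.

This shows that the MAC address for 169.254.1.1 is set to the MAC of the host-side end of the veth pair, so every packet leaving the container is sent to the host-side veth.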
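To make the container-side state concrete, the following Python sketch builds the iproute2 commands that would reproduce the default route and static ARP entry described above. It only builds and prints the commands. It is not the Calico CNI plugin's code, which configures the namespace programmatically rather than by running `ip`. The function name is hypothetical, and the MAC address in the example is a placeholder for the host-side calixxx MAC.

```python
from typing import List

GATEWAY = "169.254.1.1"  # next hop Calico configures inside the container


def container_route_commands(ifname: str, host_veth_mac: str) -> List[str]:
    """Return iproute2 commands equivalent to the state observed in the container."""
    return [
        # 169.254.1.1 is not in any subnet on the interface, so first declare
        # it directly reachable on the link...
        f"ip route add {GATEWAY} dev {ifname} scope link",
        # ...then use it as the default gateway.
        f"ip route add default via {GATEWAY} dev {ifname}",
        # Static ARP entry: resolve the gateway to the host-side veth's MAC,
        # so every frame leaving the container lands on the calixxx interface.
        f"ip neigh add {GATEWAY} lladdr {host_veth_mac} dev {ifname} nud permanent",
    ]


if __name__ == "__main__":
    for cmd in container_route_commands("eth0", "ee:ee:ee:ee:ee:ee"):
        print(cmd)
```

The first route is needed because Linux refuses a gateway that is not reachable on the link. Declaring 169.254.1.1 as link-scoped makes the default route valid even though the container's own address has no subnet in common with it.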

<2> Traffic from the host to other pods

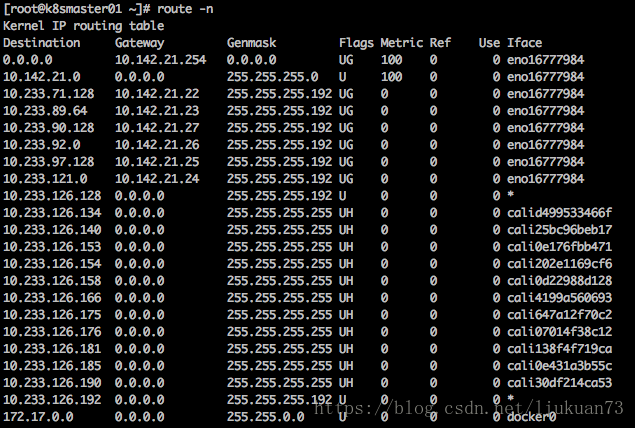

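Looking at the host routing table, you can see that traffic to the pod network 10.233.64.0/18 goes through two kinds of interfaces: calixxxxx and eno16777984 (the host NIC). Traffic through calixxxx is destined for pods on the same host. Traffic through eno16777984 is destined for pods on other hosts, and its gateway is simply the IP of the host where the target pod runs.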
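The forwarding decision described above is an ordinary longest-prefix match over the host routing table. The following Python sketch models it with a small hypothetical table. The pod addresses, the /26 block for the remote host, the calixxx interface name, and the gateway addresses are illustrative assumptions, not output captured from the author's cluster.

```python
import ipaddress
from typing import List, NamedTuple, Optional


class Route(NamedTuple):
    dest: ipaddress.IPv4Network
    iface: str
    gateway: Optional[str]  # None means the destination is directly on the link


def lookup(table: List[Route], dst: str) -> Route:
    """Return the most specific route matching dst (longest-prefix match)."""
    addr = ipaddress.ip_address(dst)
    matches = [r for r in table if addr in r.dest]
    if not matches:
        raise LookupError(f"no route to {dst}")
    return max(matches, key=lambda r: r.dest.prefixlen)


# Hypothetical routing table of one Calico host in BGP mode.
TABLE = [
    Route(ipaddress.ip_network("0.0.0.0/0"), "eno16777984", "10.142.21.1"),
    # Local pod: a /32 host route straight to its calixxx veth.
    Route(ipaddress.ip_network("10.233.64.5/32"), "cali1a2b3c4d5e6", None),
    # Pod block on another host, learned via BGP: the next hop is that host.
    Route(ipaddress.ip_network("10.233.65.0/26"), "eno16777984", "10.142.21.22"),
]

if __name__ == "__main__":
    for dst in ("10.233.64.5", "10.233.65.10", "8.8.8.8"):
        r = lookup(TABLE, dst)
        print(dst, "->", r.iface, r.gateway or "(direct)")
```

In IPIP mode (section 3.2.2.2), the same table structure applies; only the interface of the remote-pod routes becomes tunl0.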

<3> Comparison with Docker bridge mode

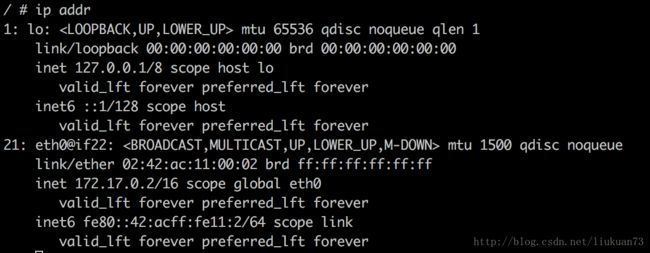

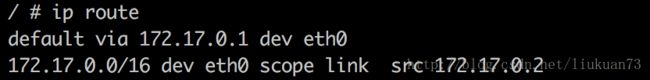

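When containers are started with plain Docker bridge mode, the container's internal IP address belongs to the docker0 bridge's subnet.

A veth pair is created here as well. The container-side veth is assigned an IP address, and the docker0 bridge address 172.17.0.1 is set as the default gateway, which can be seen inside the container.

Because 172.17.0.1 is a valid IP that belongs to the docker0 bridge, there is no need to add a static ARP entry as in Calico mode.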

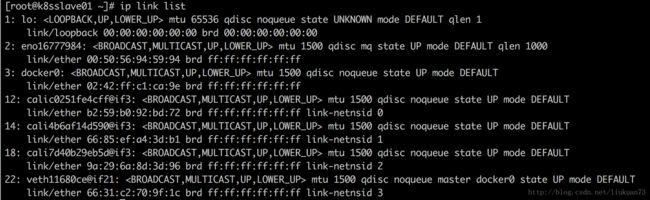

Looking at the network interfaces on the host, you can find the corresponding vethxxx interface:

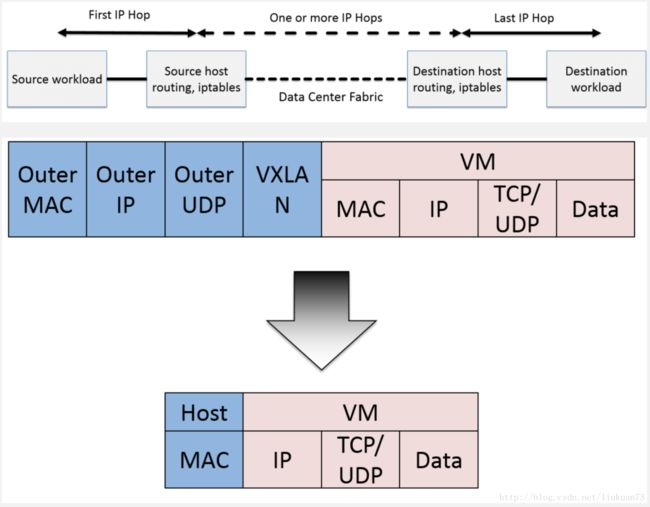

3.2.2.2 IP-in-IP Mode

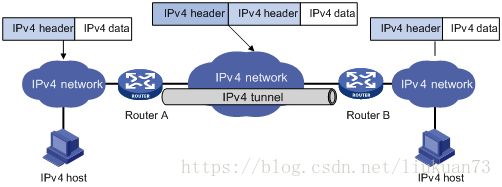

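When hosts are in different subnets, Calico's BGP mode works only if the gateway routers between them also support BGP. When the data-center environment cannot meet this requirement, consider using IPIP mode instead.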

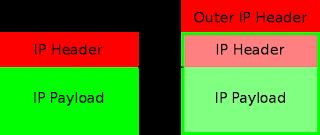

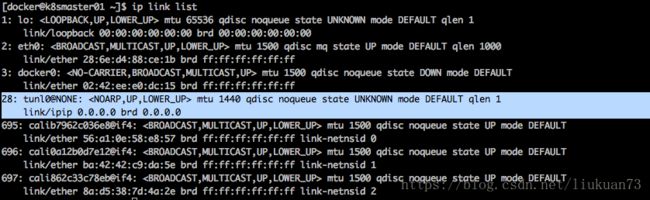

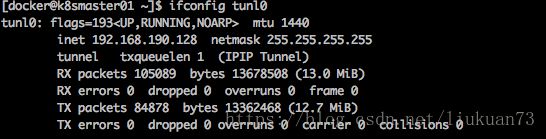

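IPIP, as the name suggests, wraps one IP packet inside another IP packet: it is a tunnel that encapsulates the IP layer within the IP layer. That may look wasteful, but it is not. It essentially acts as a bridge at the IP layer. An ordinary bridge works at the MAC layer and does not need IP at all. IPIP instead builds a tunnel between the routers at its two ends, connecting two otherwise disconnected networks point to point. When IPIP mode is enabled, Calico creates a virtual network interface named "tunl0" on each node. The IPIP source code can be found in the kernel at net/ipv4/ipip.c.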
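To make the encapsulation concrete, the following Python sketch prepends the 20-byte outer IPv4 header, with protocol number 4 (IP-in-IP), to an inner packet. It is a user-space illustration, not the kernel's net/ipv4/ipip.c code. The node addresses in the test are hypothetical, and fields such as the identification and DF flag are simply left at zero.

```python
import socket
import struct


def ipv4_checksum(header: bytes) -> int:
    """One's-complement sum of the header's 16-bit words (RFC 791)."""
    if len(header) % 2:
        header += b"\x00"
    total = sum(struct.unpack(f"!{len(header) // 2}H", header))
    while total >> 16:  # fold carries back into the low 16 bits
        total = (total & 0xFFFF) + (total >> 16)
    return ~total & 0xFFFF


def ipip_encapsulate(inner: bytes, outer_src: str, outer_dst: str, ttl: int = 64) -> bytes:
    """Prepend a 20-byte outer IPv4 header whose protocol field is 4 (IP-in-IP)."""
    header = struct.pack(
        "!BBHHHBBH4s4s",
        (4 << 4) | 5,     # version 4, IHL = 5 words (20 bytes, no options)
        0,                # DSCP/ECN
        20 + len(inner),  # total length: outer header + whole inner packet
        0,                # identification
        0,                # flags / fragment offset
        ttl,
        4,                # protocol 4 = IPv4 encapsulated in IPv4
        0,                # checksum placeholder, filled in below
        socket.inet_aton(outer_src),  # sending node's IP
        socket.inet_aton(outer_dst),  # IP of the node hosting the target pod
    )
    checksum = ipv4_checksum(header)
    return header[:10] + struct.pack("!H", checksum) + header[12:] + inner
```

The inner packet, carrying the pod-to-pod addresses, travels unchanged as the payload. The extra 20 bytes per packet are why the tunnel device's MTU must be smaller than the host NIC's; the calico-node manifest later in this article sets FELIX_IPINIPMTU to 1440 against a 1500-byte host MTU.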

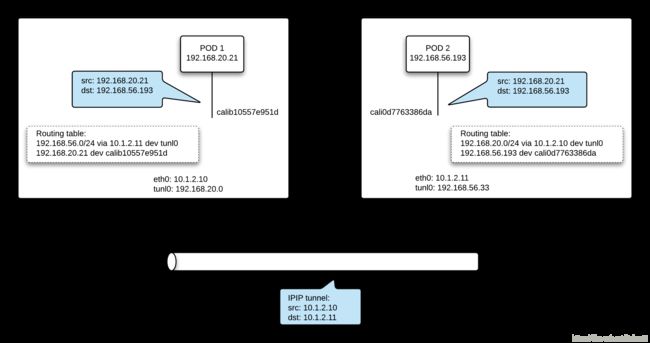

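Traffic analysis in IPIP mode

<1> Traffic from the container to the host

Same as in BGP mode.

<2> Traffic from the host to other pods

When IPIP mode is enabled, Calico creates a virtual interface named tunl0 on each node for tunneling, as shown below: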

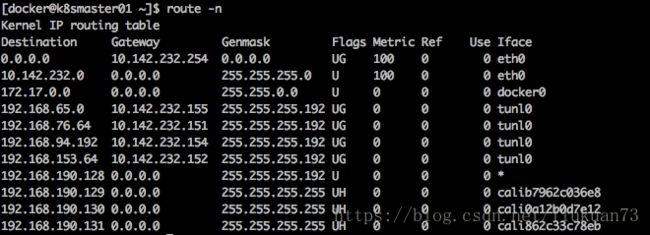

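Looking at the host routing table, you can see that traffic to the 192.168.x.x pod network goes through two kinds of interfaces: calixxxxx and tunl0. Traffic through calixxxx is destined for pods on the same host. Traffic through tunl0 is destined for pods on other hosts, and its gateway is the IP of the host where the target pod runs.

For configuration details, see the official documentation.

3.2.2.3 Cross-Subnet Mode

Machines in the same subnet communicate in BGP mode, while machines in different subnets use IPIP mode. To use cross-subnet mode, configure the following two items:

- Configure the ipPool; for details, see the "Configuring cross-subnet IP-in-IP" section of the official documentation.

- Configure calico-node; see the official documentation for details. In particular, you may need to correct the node's subnet mask by hand. Calico automatically detects and sets the host IP and subnet mask, but the detected mask is often /32.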
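The cross-subnet rule above, and why a misdetected /32 mask matters, can be modeled in a few lines of Python. This is a simplified sketch of the decision, not Calico's code. The function name and node addresses are hypothetical.

```python
import ipaddress


def needs_ipip(local_interface: str, peer_ip: str) -> bool:
    """Cross-subnet rule: encapsulate only if the peer is outside our own subnet.

    local_interface is this node's address *with* its detected mask,
    e.g. "10.142.21.21/24".
    """
    local_net = ipaddress.ip_interface(local_interface).network
    return ipaddress.ip_address(peer_ip) not in local_net


if __name__ == "__main__":
    print(needs_ipip("10.142.21.21/24", "10.142.21.22"))  # False: same subnet, plain BGP route
    print(needs_ipip("10.142.21.21/24", "10.142.22.5"))   # True: other subnet, IPIP
    print(needs_ipip("10.142.21.21/32", "10.142.21.22"))  # True: a /32 mask makes every peer "remote"
```

With a /32 mask, every peer looks as if it sits on a different subnet. All inter-node traffic would then be encapsulated, which defeats the purpose of cross-subnet mode.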

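4. Deploying Calico

4.1 Prerequisites

According to the official documentation, an existing Kubernetes cluster must be configured as follows before deploying Calico:

- Add the following startup flags to the kubelet:

```shell
--network-plugin=cni            # network plugin type
--cni-conf-dir=/etc/cni/net.d   # directory of CNI configuration files
--cni-bin-dir=/opt/cni/bin      # directory of CNI executables
```

- kube-proxy must use the iptables proxy mode:

```shell
--proxy-mode=iptables   # the default mode since Kubernetes 1.2
```

- kube-proxy must not be started with --masquerade-all, because that conflicts with Calico policy.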

Notes:

1. The directories given by --cni-conf-dir and --cni-bin-dir are also mounted into the install-cni container when the calico-node pod starts, so they must match the values set here.

2. /etc/cni/net.d contains the following:

```shell
[root@k8smaster01 net.d]# ll
total 12
-rw-rw-r--. 1 root root 1490 Dec  5 21:47 10-calico.conf
-rw-r--r--. 1 root root  273 Dec  5 21:47 calico-kubeconfig
drwxr-xr-x. 2 root root 4096 Dec  5 21:47 calico-tls
```

Here, 10-calico.conf holds Calico's basic configuration items, as follows:

```json
{
    "name": "k8s-pod-network",
    "cniVersion": "0.1.0",
    "type": "calico",
    "etcd_endpoints": "https://10.142.21.21:2379",
    "etcd_key_file": "/etc/cni/net.d/calico-tls/etcd-key",
    "etcd_cert_file": "/etc/cni/net.d/calico-tls/etcd-cert",
    "etcd_ca_cert_file": "/etc/cni/net.d/calico-tls/etcd-ca",
    "log_level": "info",
    "mtu": 1500,
    "ipam": {
        "type": "calico-ipam"
    },
    "policy": {
        "type": "k8s",
        "k8s_api_root": "https://10.233.0.1:443",
        "k8s_auth_token": "eyJhbGciOiJSUzI1NiIsInR5cCI6IkpXVCJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJjYWxpY28tbm9kZS10b2tlbi1qNHg0bSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50Lm5hbWUiOiJjYWxpY28tbm9kZSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VydmljZS1hY2NvdW50LnVpZCI6ImE4N2ZiZjY1LWQzNTctMTFlNy1hZTVkLTAwNTA1Njk0ZWI2YSIsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlLXN5c3RlbTpjYWxpY28tbm9kZSJ9.om9TzY_4uYSx2b0kVooTmlA_xrHlL0vpGd4m6Pxq4s--CwQHQb5yvOy3qtMqpRlQrSliDRSLUi1O5QQXfjWNrbyk206B4F0k9NsZi0s-1ZzNVhwI6hsatXl8NZ0qb4LQ2rulm5uM9ykKwXnGpQyCghtwlcBGqQmY63VrBdZHxD3NeyCJ9HqM8BfhclpfzepN-ADADhNn59m96cSaXPJbogVOoMdntXn9x9kn_VaQn5A-XIBNQDMGrUhz1dWCOFfG3nVlxAbo24UcxurvtX4gWyxlUTrkc195i_cnuTbYlIMfBCgKsnuf9QMryn5EWULC5IMB8hPW9Bm1e8fUIHeMvQ"
    },
    "kubernetes": {
        "kubeconfig": "/etc/cni/net.d/calico-kubeconfig"
    }
}
```

3. I deployed Calico v2.6.2; for the versions of the components in that release, see: https://docs.projectcalico.org/v2.6/releases/#v2.6.2.

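Calico relies on etcd to share and exchange information between hosts and to store the Calico network state. Every host in a Calico network must run the Calico components, which provide container interface management, dynamic routing, dynamic ACLs, status reporting, and other functions.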

4.2 Deploying calicoctl

4.2.1 Installing calicoctl

Download and install calicoctl from the link below. For Kubernetes 1.9 and earlier, download calicoctl 1.6.3; for Kubernetes 1.10 and later, download calicoctl 3.1.1: https://console.bluemix.net/docs/containers/cs_network_policy.html#cli_install.

```shell
sudo wget -O /usr/local/bin/calicoctl https://github.com/projectcalico/calicoctl/releases/download/v1.6.3/calicoctl
sudo chmod a+x /usr/local/bin/calicoctl
```

4.2.2 Configuring calicoctl

By default, calicoctl reads its configuration from /etc/calico/calicoctl.cfg (a different file can be specified with the --config option). The configuration specifies the address of the etcd cluster; see the official documentation for the file format.

Example:

```yaml
apiVersion: v1
kind: calicoApiConfig
metadata:
spec:
  datastoreType: "etcdv2"
  etcdEndpoints: "https://10.142.232.21:2379,https://10.142.232.22:2379,https://10.142.232.23:2379"
  etcdKeyFile: "/etc/calico/certs/key.pem"
  etcdCertFile: "/etc/calico/certs/cert.crt"
  etcdCACertFile: "/etc/calico/certs/ca_cert.crt"
```

4.2.3 Common calicoctl commands

View the IP pools:

```shell
calicoctl get ipPool
```

View node status:

```shell
calicoctl node status
```

Create an IP pool:

```shell
calicoctl apply -f - << EOF
apiVersion: v1
kind: ipPool
metadata:
  cidr: 192.168.0.0/16
spec:
  ipip:
    enabled: true
  nat-outgoing: true
EOF
```

Change the Calico network mode from BGP to IPIP:

```shell
calicoctl apply -f - << EOF
apiVersion: v1
kind: ipPool
metadata:
  cidr: 192.168.0.0/16
spec:
  ipip:
    enabled: true
    mode: always
  nat-outgoing: true
EOF
```

4.3 Deploying the core Calico components

Three main components are involved:

- calico/node:v2.6.2

- calico/cni:v1.11.0

- calico/kube-controllers:v1.0.0

They are deployed with Kubernetes; the YAML file used is at: https://github.com/projectcalico/calico/blob/v2.6.2/master/getting-started/kubernetes/installation/hosted/calico.yaml

The following explanation uses my actual files as the example:

4.3.1 calico-config (ConfigMap)

```yaml
kind: ConfigMap
apiVersion: v1
metadata:
  name: calico-config
  namespace: kube-system
data:
  # Configure this with the location of your etcd cluster.
  etcd_endpoints: "https://10.142.21.21:2379"

  # Configure the Calico backend to use.
  calico_backend: "bird"

  # The CNI network configuration to install on each node.
  cni_network_config: |-
    {
        "name": "k8s-pod-network",
        "cniVersion": "0.1.0",
        "type": "calico",
        "etcd_endpoints": "__ETCD_ENDPOINTS__",
        "etcd_key_file": "__ETCD_KEY_FILE__",
        "etcd_cert_file": "__ETCD_CERT_FILE__",
        "etcd_ca_cert_file": "__ETCD_CA_CERT_FILE__",
        "log_level": "info",
        "mtu": 1500,
        "ipam": {
            "type": "calico-ipam"
        },
        "policy": {
            "type": "k8s",
            "k8s_api_root": "https://__KUBERNETES_SERVICE_HOST__:__KUBERNETES_SERVICE_PORT__",
            "k8s_auth_token": "__SERVICEACCOUNT_TOKEN__"
        },
        "kubernetes": {
            "kubeconfig": "__KUBECONFIG_FILEPATH__"
        }
    }

  # If you're using TLS enabled etcd uncomment the following.
  # You must also populate the Secret below with these files.
  etcd_ca: "/calico-secrets/etcd-ca"
  etcd_cert: "/calico-secrets/etcd-cert"
  etcd_key: "/calico-secrets/etcd-key"
```

Notes:

1. This ConfigMap supplies environment variables to calico-node.

2. Set data.etcd_endpoints in calico-config to the real etcd endpoints of your environment.
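The __ETCD_ENDPOINTS__-style placeholders in cni_network_config are filled in on each node by the install-cni container (install-cni.sh), which writes the result into /etc/cni/net.d; the 10-calico.conf shown in section 4.1 is such a rendered file. The following Python sketch mimics only that substitution step. It is not install-cni.sh, and the function name, the shortened template, and the sample values are hypothetical.

```python
import json
import re
from typing import Dict

PLACEHOLDER = re.compile(r"__[A-Z0-9_]+__")

# Shortened version of the cni_network_config template above.
TEMPLATE = """{
    "name": "k8s-pod-network",
    "type": "calico",
    "etcd_endpoints": "__ETCD_ENDPOINTS__",
    "policy": {
        "type": "k8s",
        "k8s_api_root": "https://__KUBERNETES_SERVICE_HOST__:__KUBERNETES_SERVICE_PORT__"
    }
}"""


def render_cni_config(template: str, values: Dict[str, str]) -> dict:
    """Fill __NAME__ placeholders from values, then parse the result as JSON."""
    rendered = template
    for name, value in values.items():
        rendered = rendered.replace(f"__{name}__", value)
    leftover = PLACEHOLDER.findall(rendered)
    if leftover:
        raise ValueError(f"unfilled placeholders: {leftover}")
    # Parsing catches a template that is no longer valid JSON after substitution.
    return json.loads(rendered)


if __name__ == "__main__":
    conf = render_cni_config(TEMPLATE, {
        "ETCD_ENDPOINTS": "https://10.142.21.21:2379",
        "KUBERNETES_SERVICE_HOST": "10.233.0.1",
        "KUBERNETES_SERVICE_PORT": "443",
    })
    print(conf["policy"]["k8s_api_root"])
```

Failing loudly on a leftover placeholder is the key design choice. A config with an unfilled `__ETCD_ENDPOINTS__` would otherwise only surface later, as a CNI error when the first Pod is created.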

4.3.2 calico-etcd-secrets (Secret)

```yaml
# The following contains k8s Secrets for use with a TLS enabled etcd cluster.
# For information on populating Secrets, see http://kubernetes.io/docs/user-guide/secrets/
apiVersion: v1
kind: Secret
type: Opaque
metadata:
  name: calico-etcd-secrets
  namespace: kube-system
data:
  # Populate the following files with etcd TLS configuration if desired, but leave blank if
  # not using TLS for etcd.
  # This self-hosted install expects three files with the following names. The values
  # should be base64 encoded strings of the entire contents of each file.
  etcd-key: <too long, omitted>
  etcd-cert: <too long, omitted>
  etcd-ca: <too long, omitted>
```

Notes:

1. This Secret is used by calico-node and kube-controllers to connect to etcd.

2. For etcd-key, etcd-cert, and etcd-ca in calico-etcd-secrets, use the base64 encodings of node-k8smaster01-key.pem, node-k8smaster01.pem, and ca.pem under /etc/ssl/etcd/ssl/, respectively (fill in the certificates of your own environment). To generate the encoding: base64 /etc/ssl/etcd/ssl/node-k8smaster01-key.pem. With GNU coreutils, add -w 0 so the output is not wrapped across lines.
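As an alternative to running base64 by hand, the following Python sketch builds the Secret manifest from the three files mapped in note 2. The paths are the author's and must exist on the machine where it runs; the function names are hypothetical. base64.b64encode never inserts line breaks, so each value stays on a single line in the YAML.

```python
import base64
from pathlib import Path
from typing import Dict

# Secret key -> certificate file, following the mapping in note 2 (adjust paths).
CERT_FILES = {
    "etcd-key": "/etc/ssl/etcd/ssl/node-k8smaster01-key.pem",
    "etcd-cert": "/etc/ssl/etcd/ssl/node-k8smaster01.pem",
    "etcd-ca": "/etc/ssl/etcd/ssl/ca.pem",
}


def secret_data(files: Dict[str, str]) -> Dict[str, str]:
    """Base64-encode each file's entire contents as one unwrapped line."""
    return {
        key: base64.b64encode(Path(path).read_bytes()).decode("ascii")
        for key, path in files.items()
    }


def render_secret(data: Dict[str, str]) -> str:
    """Produce the calico-etcd-secrets manifest shown above with real values."""
    lines = [
        "apiVersion: v1",
        "kind: Secret",
        "type: Opaque",
        "metadata:",
        "  name: calico-etcd-secrets",
        "  namespace: kube-system",
        "data:",
    ]
    lines += [f"  {key}: {value}" for key, value in data.items()]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    print(render_secret(secret_data(CERT_FILES)), end="")
```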

4.3.3 calico-node (DaemonSet)

```yaml
# This manifest installs the calico/node container, as well
# as the Calico CNI plugins and network config on
# each master and worker node in a Kubernetes cluster.
kind: DaemonSet
apiVersion: extensions/v1beta1
metadata:
  name: calico-node
  namespace: kube-system
  labels:
    k8s-app: calico-node
spec:
  selector:
    matchLabels:
      k8s-app: calico-node
  template:
    metadata:
      labels:
        k8s-app: calico-node
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: |
          [{"key": "dedicated", "value": "master", "effect": "NoSchedule" },
           {"key":"CriticalAddonsOnly", "operator":"Exists"}]
    spec:
      hostNetwork: true
      serviceAccountName: calico-node
      # Minimize downtime during a rolling upgrade or deletion; tell Kubernetes to do a "force
      # deletion": https://kubernetes.io/docs/concepts/workloads/pods/pod/#termination-of-pods.
      terminationGracePeriodSeconds: 0
      containers:
        # Runs calico/node container on each Kubernetes node. This
        # container programs network policy and routes on each
        # host.
        - name: calico-node
          image: quay.io/calico/node:v2.6.2
          env:
            # The location of the Calico etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # Choose the backend to use.
            - name: CALICO_NETWORKING_BACKEND
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: calico_backend
            # Cluster type to identify the deployment type
            - name: CLUSTER_TYPE
              value: "k8s,bgp"
            # Disable file logging so `kubectl logs` works.
            - name: CALICO_DISABLE_FILE_LOGGING
              value: "true"
            # Set Felix endpoint to host default action to ACCEPT.
            - name: FELIX_DEFAULTENDPOINTTOHOSTACTION
              value: "ACCEPT"
            # Disable IPv6 on Kubernetes.
            - name: FELIX_IPV6SUPPORT
              value: "false"
            # Set Felix logging to "info"
            - name: FELIX_LOGSEVERITYSCREEN
              value: "info"
            # Set MTU for tunnel device used if ipip is enabled
            - name: FELIX_IPINIPMTU
              value: "1440"
            # Location of the CA certificate for etcd.
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            # Location of the client key for etcd.
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            # Location of the client certificate for etcd.
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
            # Auto-detect the BGP IP address.
            - name: IP
              value: ""
            - name: FELIX_HEALTHENABLED
              value: "true"
          securityContext:
            privileged: true
          resources:
            requests:
              cpu: 250m
          livenessProbe:
            httpGet:
              path: /liveness
              port: 9099
            periodSeconds: 10
            initialDelaySeconds: 10
            failureThreshold: 6
          readinessProbe:
            httpGet:
              path: /readiness
              port: 9099
            periodSeconds: 10
          volumeMounts:
            - mountPath: /lib/modules
              name: lib-modules
              readOnly: true
            - mountPath: /var/run/calico
              name: var-run-calico
              readOnly: false
            - mountPath: /calico-secrets
              name: etcd-certs
        # This container installs the Calico CNI binaries
        # and CNI network config file on each node.
        - name: install-cni
          image: quay.io/calico/cni:v1.11.0
          command: ["/install-cni.sh"]
          env:
            # The location of the Calico etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # The CNI network config to install on each node.
            - name: CNI_NETWORK_CONFIG
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: cni_network_config
          volumeMounts:
            - mountPath: /host/opt/cni/bin
              name: cni-bin-dir
            - mountPath: /host/etc/cni/net.d
              name: cni-net-dir
            - mountPath: /calico-secrets
              name: etcd-certs
      volumes:
        # Used by calico/node.
        - name: lib-modules
          hostPath:
            path: /lib/modules
        - name: var-run-calico
          hostPath:
            path: /var/run/calico
        # Used to install CNI.
        - name: cni-bin-dir
          hostPath:
            path: /opt/cni/bin
        - name: cni-net-dir
          hostPath:
            path: /etc/cni/net.d
        # Mount in the etcd TLS secrets.
        - name: etcd-certs
          secret:
            secretName: calico-etcd-secrets
```

Notes:

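1. The IP pool CIDR is set by calico-node's CALICO_IPV4POOL_CIDR environment variable (not present in the manifest above); change it to match your environment.

2. Besides pause, each calico-node pod has two containers: calico-node and install-cni. The calico-node container runs several processes: calico-felix, bird, bird6, and confd (confd generates and updates the BIRD configuration from the state in etcd and local templates).

3. Based on the taints of all nodes in the environment, add tolerations to the calico-node DaemonSet (in the pod template's spec) so that it can be deployed on every node, for example:

```yaml
spec:
  tolerations:
    - key: "nginx-ingress-controller"
      operator: "Equal"
      value: "true"
      effect: "NoSchedule"
  hostNetwork: true
```

This allows calico-node to be deployed on tainted nodes; key and value are those of the taint set on the node.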
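The matching rule behind note 3 can be sketched in Python as follows. This is a simplified model of Kubernetes taint/toleration matching written for illustration; the class and function names are hypothetical. It ignores tolerationSeconds and treats PreferNoSchedule taints as soft, so they never block scheduling.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Taint:
    key: str
    value: str
    effect: str  # "NoSchedule", "PreferNoSchedule", or "NoExecute"


@dataclass
class Toleration:
    key: str = ""            # empty key (with Exists) matches every taint key
    operator: str = "Equal"  # "Equal" or "Exists"
    value: str = ""
    effect: str = ""         # empty effect matches every effect


def tolerates(tol: Toleration, taint: Taint) -> bool:
    """True if this single toleration matches this single taint."""
    if tol.effect and tol.effect != taint.effect:
        return False
    if tol.key and tol.key != taint.key:
        return False
    if tol.operator == "Exists":
        return True
    return tol.value == taint.value


def schedulable(taints: List[Taint], tolerations: List[Toleration]) -> bool:
    """A pod fits only if every hard (NoSchedule/NoExecute) taint is tolerated."""
    return all(
        any(tolerates(t, taint) for t in tolerations)
        for taint in taints
        if taint.effect in ("NoSchedule", "NoExecute")
    )


if __name__ == "__main__":
    node_taints = [Taint("nginx-ingress-controller", "true", "NoSchedule")]
    tol = Toleration("nginx-ingress-controller", "Equal", "true", "NoSchedule")
    print(schedulable(node_taints, []))     # False: calico-node would skip this node
    print(schedulable(node_taints, [tol]))  # True: the toleration from note 3 fixes it
```

A single `Toleration(operator="Exists")` with an empty key tolerates every taint. That is a common shortcut for DaemonSets like calico-node, which must run on all nodes.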

4.3.4 calico-kube-controllers (Deployment)

```yaml
# This manifest deploys the Calico Kubernetes controllers.
# See https://github.com/projectcalico/kube-controllers
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: calico-kube-controllers
  namespace: kube-system
  labels:
    k8s-app: calico-kube-controllers
  annotations:
    scheduler.alpha.kubernetes.io/critical-pod: ''
    scheduler.alpha.kubernetes.io/tolerations: |
      [{"key": "dedicated", "value": "master", "effect": "NoSchedule" },
       {"key":"CriticalAddonsOnly", "operator":"Exists"}]
spec:
  # The controllers can only have a single active instance.
  replicas: 1
  strategy:
    type: Recreate
  template:
    metadata:
      name: calico-kube-controllers
      namespace: kube-system
      labels:
        k8s-app: calico-kube-controllers
    spec:
      # The controllers must run in the host network namespace so that
      # it isn't governed by policy that would prevent it from working.
      hostNetwork: true
      serviceAccountName: calico-kube-controllers
      containers:
        - name: calico-kube-controllers
          image: quay.io/calico/kube-controllers:v1.0.0
          env:
            # The location of the Calico etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
            # Location of the CA certificate for etcd.
            - name: ETCD_CA_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_ca
            # Location of the client key for etcd.
            - name: ETCD_KEY_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_key
            # Location of the client certificate for etcd.
            - name: ETCD_CERT_FILE
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_cert
          volumeMounts:
            # Mount in the etcd TLS secrets.
            - mountPath: /calico-secrets
              name: etcd-certs
      volumes:
        # Mount in the etcd TLS secrets.
        - name: etcd-certs
          secret:
            secretName: calico-etcd-secrets
```

4.3.5 calico-policy-controller (Deployment)

```yaml
# This deployment turns off the old "policy-controller". It should remain at 0 replicas, and then
# be removed entirely once the new kube-controllers deployment has been deployed above.
apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: calico-policy-controller
  namespace: kube-system
  labels:
    k8s-app: calico-policy
spec:
  # Turn this deployment off in favor of the kube-controllers deployment above.
  replicas: 0
  strategy:
    type: Recreate
  template:
    metadata:
      name: calico-policy-controller
      namespace: kube-system
      labels:
        k8s-app: calico-policy
    spec:
      hostNetwork: true
      serviceAccountName: calico-kube-controllers
      containers:
        - name: calico-policy-controller
          image: quay.io/calico/kube-controllers:v1.0.0
          env:
            # The location of the Calico etcd cluster.
            - name: ETCD_ENDPOINTS
              valueFrom:
                configMapKeyRef:
                  name: calico-config
                  key: etcd_endpoints
```

4.3.6 calico-kube-controllers (ServiceAccount)

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-kube-controllers
  namespace: kube-system
```

4.3.7 calico-node (ServiceAccount)

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: calico-node
  namespace: kube-system
```

For how traffic flows end to end, see the following (I will organize this when I have time):

http://blog.csdn.net/liukuan73/article/details/78635711

http://blog.csdn.net/liukuan73/article/details/78837424

http://cizixs.com/2017/03/30/kubernetes-introduction-service-and-kube-proxy?utm_source=tuicool&utm_medium=referral

http://www.lijiaocn.com/%E9%A1%B9%E7%9B%AE/2017/03/27/Kubernetes-kube-proxy.html
