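Deploying the EFK Logging Stack on Kubernetes

EFK overview

Kubernetes provides an Elasticsearch add-on for cluster-level log management. It is a combination of Elasticsearch, Fluentd, and Kibana.

- Elasticsearch is a search engine that stores the logs and exposes a query interface;

- Fluentd collects logs from Kubernetes: the Fluentd instance on each node watches and collects the logs on that node, processes them, and sends them to Elasticsearch;

- Kibana provides a web GUI for browsing and searching the logs stored in Elasticsearch.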

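Logs are collected by running Fluentd on every node as a DaemonSet. The Fluentd Pod mounts the Docker log directory /var/lib/docker/containers and the /var/log directory from the host. On each node, the kubelet creates one directory per Pod under /var/log/pods, which keeps the log output of different containers apart; each log file in that directory is a symlink to the container's log output under /var/lib/docker/containers.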
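The symlink relationship explains why both directories have to be mounted: a link under /var/log/pods is only readable if its target under /var/lib/docker/containers is visible too. The sketch below rebuilds the layout inside a temporary directory; the container ID, Pod directory name, and log line are made up for illustration and do not come from a real node.

```shell
#!/usr/bin/env bash
set -euo pipefail

# Throwaway root that stands in for the node's filesystem.
root=$(cd "$(mktemp -d)" && pwd -P)
container_id="0123abcd"            # hypothetical container ID
pod_dir="demo-pod-uid/demo-app"    # hypothetical Pod UID and container name

mkdir -p "$root/var/lib/docker/containers/$container_id" \
         "$root/var/log/pods/$pod_dir"

# The real log file lives in the Docker directory.
docker_log="$root/var/lib/docker/containers/$container_id/$container_id-json.log"
echo '{"log":"hello\n","stream":"stdout"}' > "$docker_log"

# The per-Pod log file is only a symlink to it.
ln -s "$docker_log" "$root/var/log/pods/$pod_dir/0.log"

# Resolve the link the way a log collector has to.
resolved=$(readlink -f "$root/var/log/pods/$pod_dir/0.log")
echo "0.log -> ${resolved#"$root"}"
```

If only /var/log were mounted into the Fluentd Pod, the link would dangle and there would be nothing to tail.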

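Preparing the deployment environment

Software versions:

Elasticsearch: elasticsearch-oss:6.5.4

Kibana: kibana-oss:6.5.4

Fluentd: fluentd-elasticsearch:v2.3.2

Kubernetes: v1.13.0

Cluster layout:

CNI network plugin: flannel

Cluster nodes: 1 master node, 2 worker nodes

For building the cluster with kubeadm, see: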

https://blog.csdn.net/networken/article/details/84991940

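Deploying EFK

Downloading the YAML files

The Elasticsearch add-on itself runs as a Kubernetes application inside the cluster. Its YAML manifests are available from the official Kubernetes GitHub repository: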

https://github.com/kubernetes/kubernetes/tree/master/cluster/addons/fluentd-elasticsearch

Download these YAML files into a local directory, for example efk:

# mkdir efk && cd efk

wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/es-statefulset.yaml

wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/es-service.yaml

wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/fluentd-es-configmap.yaml

wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/fluentd-es-ds.yaml

wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/kibana-service.yaml

wget https://raw.githubusercontent.com/kubernetes/kubernetes/master/cluster/addons/fluentd-elasticsearch/kibana-deployment.yaml
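The six downloads differ only in the file name, so they can be driven from a list. The following is a minimal sketch, not the article's original procedure. It assumes that pinning to the v1.13.0 tag (matching the cluster version used here) is preferable to master, whose contents change over time; the REF variable and the function names are illustrative.

```shell
#!/usr/bin/env bash
# Assumption: pin to a release tag instead of master; override REF if needed.
REF="${REF:-v1.13.0}"
BASE="https://raw.githubusercontent.com/kubernetes/kubernetes/${REF}/cluster/addons/fluentd-elasticsearch"
FILES="es-statefulset.yaml es-service.yaml fluentd-es-configmap.yaml fluentd-es-ds.yaml kibana-service.yaml kibana-deployment.yaml"

# Build the raw download URL for one manifest.
manifest_url() {
  echo "${BASE}/$1"
}

# Download every manifest into the current directory, stopping at the first failure.
download_manifests() {
  local f
  for f in $FILES; do
    wget -q -O "$f" "$(manifest_url "$f")" || { echo "download failed: $f" >&2; return 1; }
  done
}

# Usage, from inside the efk directory:
#   download_manifests
```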

List the downloaded YAML files:

[centos@k8s-master efk]$ ll

total 36

-rw-rw-r-- 1 centos centos 382 Dec 26 14:50 es-service.yaml

-rw-rw-r-- 1 centos centos 2871 Dec 26 14:55 es-statefulset.yaml

-rw-rw-r-- 1 centos centos 15528 Dec 26 18:12 fluentd-es-configmap.yaml

-rw-rw-r-- 1 centos centos 2833 Dec 26 18:40 fluentd-es-ds.yaml

-rw-rw-r-- 1 centos centos 1052 Dec 26 14:50 kibana-deployment.yaml

-rw-rw-r-- 1 centos centos 354 Dec 26 14:50 kibana-service.yaml

[centos@k8s-master elk]$

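Fixing image pull problems

Official Elastic image registry: https://www.docker.elastic.co

Open the following three YAML files in vim and type /image followed by Enter to locate the image path of each of the three containers: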

#es-statefulset.yaml

k8s.gcr.io/elasticsearch:v6.3.0

#fluentd-es-ds.yaml

k8s.gcr.io/fluentd-elasticsearch:v2.2.0

#kibana-deployment.yaml

docker.elastic.co/kibana/kibana-oss:6.3.2

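The first two images are hosted on k8s.gcr.io, which cannot be reached from mainland China. Here the elasticsearch image is replaced with the one from Elastic's official registry, and the fluentd-elasticsearch image is rebuilt from Google Container Registry into a personal Docker Hub repository. The kibana image already points at the official registry, so only its version is changed. The final image paths and versions are: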

#es-statefulset.yaml

docker.elastic.co/elasticsearch/elasticsearch-oss:6.5.4

#fluentd-es-ds.yaml

willdockerhub/fluentd-elasticsearch:v2.3.2

#kibana-deployment.yaml

docker.elastic.co/kibana/kibana-oss:6.5.4

These images can also be found by searching Docker Hub or the Alibaba Cloud image registry. For how to obtain and build the images, see:

https://blog.csdn.net/networken/article/details/84571373

The image paths in the YAML files after replacement:

[centos@k8s-master elk]$ vim es-statefulset.yaml

......

#- image: k8s.gcr.io/elasticsearch:v6.3.0

- image: docker.elastic.co/elasticsearch/elasticsearch-oss:6.5.4

......

[centos@k8s-master elk]$ vim fluentd-es-ds.yaml

......

#image: k8s.gcr.io/fluentd-elasticsearch:v2.2.0

image: willdockerhub/fluentd-elasticsearch:v2.3.2

......

[centos@k8s-master efk]$ vim kibana-deployment.yaml

......

#image: docker.elastic.co/kibana/kibana-oss:6.3.2

image: docker.elastic.co/kibana/kibana-oss:6.5.4

......
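The same replacement can be scripted instead of edited by hand in vim. The sketch below is one possible approach, assuming each manifest contains the image on a line ending in "image: <path>". The set_image helper is hypothetical, and the demo runs against a two-line stand-in file rather than the real fluentd-es-ds.yaml. Unlike the vim edits above, it does not keep the old image as a comment.

```shell
#!/usr/bin/env bash
set -euo pipefail

# set_image FILE OLD_IMAGE NEW_IMAGE: rewrite "image: OLD" to "image: NEW" in FILE.
set_image() {
  local file=$1 old=$2 new=$3 tmp
  tmp=$(mktemp)
  # '|' is the sed delimiter because image paths contain '/';
  # dots in OLD are escaped so they only match literal dots.
  sed "s|image: ${old//./\\.}\$|image: ${new}|" "$file" > "$tmp" && mv "$tmp" "$file"
}

# Demo on a stand-in file (not the real manifest).
demo=$(mktemp)
printf '      - name: fluentd-es\n        image: k8s.gcr.io/fluentd-elasticsearch:v2.2.0\n' > "$demo"

set_image "$demo" 'k8s.gcr.io/fluentd-elasticsearch:v2.2.0' \
                  'willdockerhub/fluentd-elasticsearch:v2.3.2'
cat "$demo"
```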

Because the versions changed, remember to update the version values that the YAML files set in their default fields:

[centos@k8s-master efk]$ sed -i 's/v6.3.0/v6.5.4/g' es-statefulset.yaml

[centos@k8s-master efk]$ sed -i 's/v2.2.0/v2.3.2/g' fluentd-es-ds.yaml

[centos@k8s-master efk]$ sed -i 's/6.3.2/6.5.4/g' kibana-deployment.yaml
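One detail about these sed patterns is worth knowing: an unescaped dot matches any character, not only a literal dot. That is harmless for these manifests as long as no other string happens to fit the pattern, but escaping the dots removes the risk. The input strings below are invented purely to show the difference.

```shell
#!/usr/bin/env bash
# Unescaped: '.' matches any character, so an unrelated string is rewritten too.
loose=$(echo 'build-v6x3y0' | sed 's/v6.3.0/v6.5.4/g')

# Escaped: only the literal version string matches, so this input is left alone.
strict=$(echo 'build-v6x3y0' | sed 's/v6\.3\.0/v6.5.4/g')

# The escaped pattern still rewrites a real version field.
real=$(echo 'version: v6.3.0' | sed 's/v6\.3\.0/v6.5.4/g')

echo "$loose"
echo "$strict"
echo "$real"
```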

Fixing the elasticsearch Pod that fails to start

The elasticsearch-logging Pod keeps restarting and never comes up properly:

[centos@k8s-master elk]$ kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

......

elasticsearch-logging-0 0/1 ImagePullBackOff 0 87m 10.244.2.32 k8s-node2 <none> <none>

[centos@k8s-master ~]$ kubectl describe pod elasticsearch-logging-0 -n kube-system

......

Events:

Type Reason Age From Message

---- ------ ---- ---- -------

Normal Scheduled 8m50s default-scheduler Successfully assigned kube-system/elasticsearch-logging-0 to k8s-node1

Normal Pulled 8m49s kubelet, k8s-node1 Container image "alpine:3.6" already present on machine

Normal Created 8m49s kubelet, k8s-node1 Created container

Normal Started 8m49s kubelet, k8s-node1 Started container

Normal Pulling 8m48s kubelet, k8s-node1 pulling image "willdockerhub/elasticsearch:v6.3.0"

Normal Pulled 7m32s kubelet, k8s-node1 Successfully pulled image "willdockerhub/elasticsearch:v6.3.0"

Normal Created 3m32s (x5 over 7m32s) kubelet, k8s-node1 Created container

Normal Pulled 3m32s (x4 over 5m45s) kubelet, k8s-node1 Container image "willdockerhub/elasticsearch:v6.3.0" already present on machine

Normal Started 3m31s (x5 over 7m32s) kubelet, k8s-node1 Started container

Warning BackOff 3m8s (x7 over 5m30s) kubelet, k8s-node1 Back-off restarting failed container

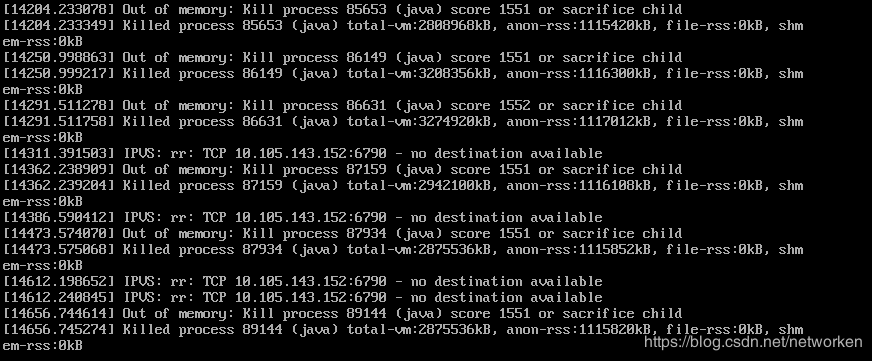

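The console in VMware Workstation shows that the failure is caused by a Java OOM (out of memory) error.

The worker nodes in my Kubernetes cluster were configured with only 2 GB of memory each, so insufficient memory was the likely cause. After increasing the memory of every node to 4 GB, the Pod ran normally.

Reference: http://blog.51cto.com/ylw6006/2071943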
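Before resizing virtual machines, it can help to check how much memory each node actually offers to Pods. The helper below is a sketch; it assumes the kubelet reports allocatable memory in Ki (the usual form), and the function name is made up for this example.

```shell
#!/usr/bin/env bash
# Print each node's allocatable memory, converted from Ki to Mi.
node_memory_mib() {
  kubectl get nodes \
    -o jsonpath='{range .items[*]}{.metadata.name}{" "}{.status.allocatable.memory}{"\n"}{end}' |
  while read -r name mem; do
    # Assumption: the quantity has the form "<number>Ki".
    echo "$name $(( ${mem%Ki} / 1024 ))Mi"
  done
}

# Usage:
#   node_memory_mib
```

A node that reports well under 2048Mi leaves little room for Elasticsearch's JVM heap next to the other system Pods.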

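Labeling the nodes

Fluentd runs in the Kubernetes cluster as a DaemonSet, which ensures that one Fluentd Pod is started on every node. We create the Fluentd resources from the master node, and the Pods end up running on the individual nodes. Inspect the resources defined in fluentd-es-ds.yaml: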

[centos@k8s-master efk]$ kubectl get -f fluentd-es-ds.yaml

NAME SECRETS AGE

serviceaccount/fluentd-es 1 70m

NAME AGE

clusterrole.rbac.authorization.k8s.io/fluentd-es 70m

NAME AGE

clusterrolebinding.rbac.authorization.k8s.io/fluentd-es 70m

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

daemonset.apps/fluentd-es-v2.2.1 0 0 0 0 0 beta.kubernetes.io/fluentd-ds-ready=true 70m

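The output shows that NODE SELECTOR is beta.kubernetes.io/fluentd-ds-ready=true. This means Fluentd is scheduled only onto nodes that carry the label beta.kubernetes.io/fluentd-ds-ready=true; without it, no Fluentd Pod is started (note that DESIRED is 0 in the output above).

Check whether the nodes in the cluster have this label: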

[centos@k8s-master efk]$ kubectl describe nodes k8s-node1

Name: k8s-node1

Roles: <none>

Labels: beta.kubernetes.io/arch=amd64

beta.kubernetes.io/os=linux

kubernetes.io/hostname=k8s-node1

......

The label is missing, so add it to every node whose logs should be collected:

[centos@k8s-master elk]$ kubectl label node k8s-node1 beta.kubernetes.io/fluentd-ds-ready=true

node/k8s-node1 labeled

[centos@k8s-master elk]$ kubectl label node k8s-node2 beta.kubernetes.io/fluentd-ds-ready=true

node/k8s-node2 labeled
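With more than a couple of nodes, labeling can be wrapped in a loop and followed by a check that the selector now matches. This is a minimal sketch; the function name is illustrative, and the node names in the usage line are the ones from this cluster.

```shell
#!/usr/bin/env bash
LABEL_KEY='beta.kubernetes.io/fluentd-ds-ready'

# Label every node passed as an argument, then list the nodes that match.
label_fluentd_nodes() {
  local node
  for node in "$@"; do
    # --overwrite makes the command safe to re-run on an already labeled node.
    kubectl label node "$node" "${LABEL_KEY}=true" --overwrite
  done
  # Show the nodes that now satisfy the DaemonSet's node selector.
  kubectl get nodes -l "${LABEL_KEY}=true"
}

# Usage:
#   label_fluentd_nodes k8s-node1 k8s-node2
```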

Applying the YAML files

With all the preparation done, deploy everything directly with kubectl apply -f .:

[centos@k8s-master elk]$ kubectl apply -f .

service/elasticsearch-logging created

serviceaccount/elasticsearch-logging created

clusterrole.rbac.authorization.k8s.io/elasticsearch-logging created

clusterrolebinding.rbac.authorization.k8s.io/elasticsearch-logging created

statefulset.apps/elasticsearch-logging created

configmap/fluentd-es-config-v0.1.6 created

serviceaccount/fluentd-es created

clusterrole.rbac.authorization.k8s.io/fluentd-es created

clusterrolebinding.rbac.authorization.k8s.io/fluentd-es created

daemonset.apps/fluentd-es-v2.2.1 created

deployment.apps/kibana-logging created

service/kibana-logging created

[centos@k8s-master elk]$

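All resources are deployed into the kube-system namespace. Pulling the images may take a while; if a pull fails, you can log in to the affected node and pull the image manually.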
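Rather than polling the Pod list by hand while the images are pulled, kubectl wait can block until the Pods report Ready. The sketch below assumes the add-on's Pods carry the k8s-app labels elasticsearch-logging, fluentd-es, and kibana-logging; confirm them with kubectl get pods -n kube-system --show-labels before relying on this. The function name and default timeout are illustrative.

```shell
#!/usr/bin/env bash
# Assumed k8s-app label values of the three EFK components.
EFK_APPS="elasticsearch-logging fluentd-es kibana-logging"

# Wait until the Pods of every component are Ready; the optional first
# argument is the per-component timeout (default 300s).
wait_for_efk() {
  local app
  for app in $EFK_APPS; do
    kubectl wait pod -n kube-system -l "k8s-app=${app}" \
      --for=condition=Ready --timeout="${1:-300s}" || return 1
  done
}

# Usage:
#   wait_for_efk 600s
```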

Check the Pod status:

[centos@k8s-master efk]$ kubectl get pod -n kube-system -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

coredns-78d4cf999f-7hv9m 1/1 Running 6 15d 10.244.0.15 k8s-master <none> <none>

coredns-78d4cf999f-lj5rg 1/1 Running 6 15d 10.244.0.14 k8s-master <none> <none>

elasticsearch-logging-0 1/1 Running 0 97m 10.244.1.105 k8s-node1 <none> <none>

elasticsearch-logging-1 1/1 Running 0 96m 10.244.2.95 k8s-node2 <none> <none>

etcd-k8s-master 1/1 Running 6 15d 192.168.92.56 k8s-master <none> <none>

fluentd-es-v2.3.2-jkkgp 1/1 Running 0 97m 10.244.1.104 k8s-node1 <none> <none>

fluentd-es-v2.3.2-kj4f7 1/1 Running 0 97m 10.244.2.93 k8s-node2 <none> <none>

kibana-logging-7b59799486-wg97l 1/1 Running 0 97m 10.244.2.94 k8s-node2 <none> <none>

kube-apiserver-k8s-master 1/1 Running 6 15d 192.168.92.56 k8s-master <none> <none>

kube-controller-manager-k8s-master 1/1 Running 7 15d 192.168.92.56 k8s-master <none> <none>

kube-flannel-ds-amd64-ccjrp 1/1 Running 6 15d 192.168.92.56 k8s-master <none> <none>

kube-flannel-ds-amd64-lzx5v 1/1 Running 9 15d 192.168.92.58 k8s-node2 <none> <none>

kube-flannel-ds-amd64-qtnx6 1/1 Running 7 15d 192.168.92.57 k8s-node1 <none> <none>

kube-proxy-89d96 1/1 Running 0 7h32m 192.168.92.57 k8s-node1 <none> <none>

kube-proxy-t2vfx 1/1 Running 0 7h32m 192.168.92.56 k8s-master <none> <none>

kube-proxy-w6pl4 1/1 Running 0 7h32m 192.168.92.58 k8s-node2 <none> <none>

kube-scheduler-k8s-master 1/1 Running 7 15d 192.168.92.56 k8s-master <none> <none>

kubernetes-dashboard-847f8cb7b8-wrm4l 1/1 Running 8 15d 10.244.2.80 k8s-node2 <none> <none>

tiller-deploy-7bf99c9559-8p7w4 1/1 Running 5 6d21h 10.244.1.90 k8s-node1 <none> <none>

[centos@k8s-master efk]$

Check the other resources:

[centos@k8s-master efk]$ kubectl get daemonset -n kube-system

NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE

fluentd-es-v2.2.1 2 2 2 2 2 beta.kubernetes.io/fluentd-ds-ready=true 164m

......

[centos@k8s-master efk]$ kubectl get statefulset -n kube-system

NAME READY AGE

elasticsearch-logging 2/2 6m32s

[centos@k8s-master efk]$ kubectl get service -n kube-system

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

elasticsearch-logging ClusterIP 10.107.236.158 <none> 9200/TCP 6m51s

kibana-logging ClusterIP 10.97.214.200 <none> 5601/TCP 6m51s

......

[centos@k8s-master efk]$

View the Pod logs:

[centos@k8s-master efk]$ kubectl logs -f elasticsearch-logging-0 -n kube-system

[centos@k8s-master efk]$ kubectl logs -f fluentd-es-v2.3.2-jkkgp -n kube-system

[centos@k8s-master efk]$ kubectl logs -f kibana-logging-7b59799486-wg97l -n kube-system
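Pod names include generated suffixes that are easy to mistype, so selecting by label can be more convenient. This sketch again assumes the k8s-app label values used by the add-on; the efk_logs helper is hypothetical.

```shell
#!/usr/bin/env bash
# Print the most recent log lines of every Pod of one EFK component.
# $1: k8s-app label value (assumed), $2: number of lines (default 20).
efk_logs() {
  kubectl logs -n kube-system -l "k8s-app=$1" --tail="${2:-20}"
}

# Usage:
#   efk_logs kibana-logging
#   efk_logs elasticsearch-logging 50
```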

Accessing Kibana

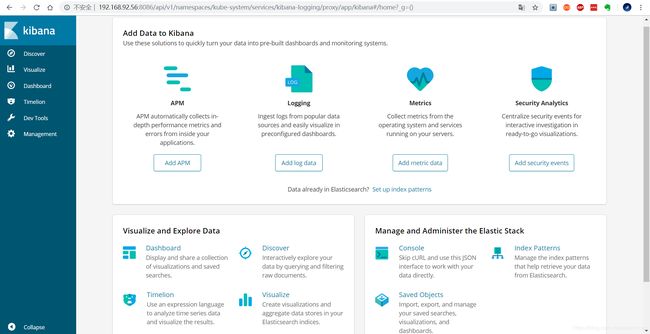

Expose the Kibana service through kubectl proxy:

[centos@k8s-master efk]$ kubectl proxy --address='192.168.92.56' --port=8086 --accept-hosts='^*$'

Starting to serve on 192.168.92.56:8086

Access Kibana in the browser again, with the port changed to 8086:

http://192.168.92.56:8086/api/v1/namespaces/kube-system/services/kibana-logging/proxy
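The URL follows the API server's service proxy pattern, so it can be assembled for any service and probed from the command line before opening a browser. The helper names below are illustrative, and the sketch assumes kubectl proxy is already listening on the given address.

```shell
#!/usr/bin/env bash
# Build the API-server proxy URL for a service.
# $1: host:port of kubectl proxy, $2: namespace, $3: service name.
service_proxy_url() {
  local host_port=$1 namespace=$2 service=$3
  echo "http://${host_port}/api/v1/namespaces/${namespace}/services/${service}/proxy"
}

# Print the HTTP status code returned when requesting Kibana through the proxy.
kibana_http_code() {
  curl -s -o /dev/null -w '%{http_code}' \
    "$(service_proxy_url "$1" kube-system kibana-logging)/"
}

# Usage:
#   kibana_http_code 192.168.92.56:8086
```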

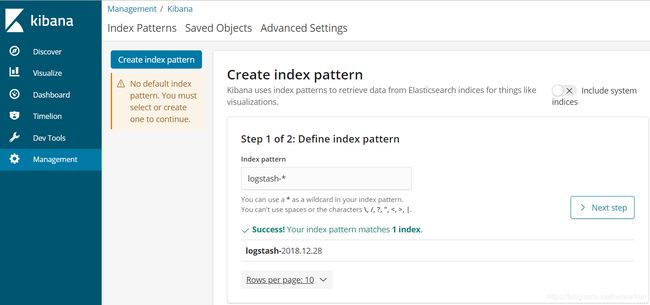

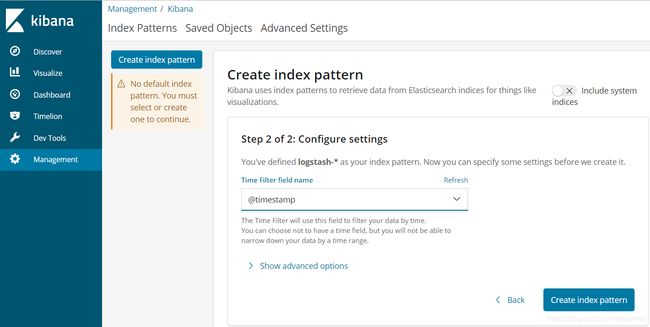

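We need to create an index pattern. Click "Management" -> "Index pattern" in the navigation bar and keep the default index pattern logstash-*:

For "Time-field name", keep the default @timestamp, then click "Create" to finish creating the index pattern.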
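The logstash-* pattern only matches something once Fluentd has written indices to Elasticsearch. One way to check is Elasticsearch's _cat/indices API, reached through the same kubectl proxy. This sketch assumes the elasticsearch-logging service is reachable through the proxy in the same way as kibana-logging; the function name is illustrative.

```shell
#!/usr/bin/env bash
# List the logstash-* indices with their document counts.
# $1: host:port of kubectl proxy.
list_logstash_indices() {
  local host_port=$1
  curl -s "http://${host_port}/api/v1/namespaces/kube-system/services/elasticsearch-logging/proxy/_cat/indices/logstash-*?h=index,docs.count"
}

# Usage:
#   list_logstash_indices 192.168.92.56:8086
```

An empty result suggests that Fluentd has not shipped any logs yet, in which case the Fluentd Pod logs are the first place to look.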

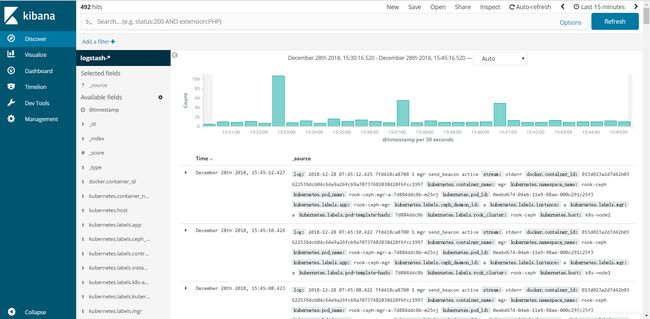

Wait a moment, then open "Discover" in the sidebar. If you see log entries like those in the screenshot below, Kibana is successfully reading data from Elasticsearch: