Sentiment Analysis with a Convolutional Neural Network (CNN)

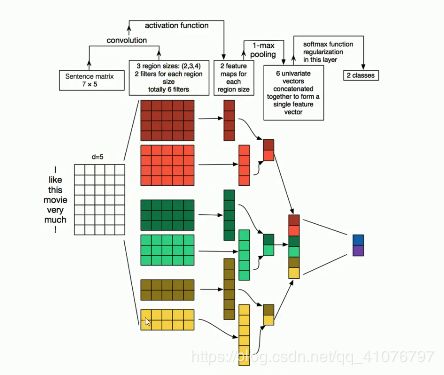

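First, look at the figure above. It outlines how a CNN processes natural language:

1. Each word corresponds to one row of the input matrix. d=5 means each word vector has 5 dimensions. In practice 128 or 300 dimensions are typical, but d=5 keeps the figure readable. With 7 words, the matrix is therefore 7*5.

2. The first step is convolution. The figure uses two filters with 4 rows, two with 3 rows and two with 2 rows, each 5 columns wide, and slides each of them down the 7*5 matrix. A 4*5 filter fits in 4 positions (7 - 4 + 1 = 4), so it produces a 4*1 matrix (a feature map). A 3*5 filter fits in 5 positions and produces a 5*1 matrix, and likewise a 2*5 filter produces a 6*1 matrix.

3. The second step is pooling. The figure uses max pooling: each of the two 4*1 feature maps is reduced to its largest element, and the two values form a 2*1 matrix. The 5*1 and 6*1 feature maps are pooled the same way, so the 6 feature maps yield 3 pooled matrices.

4. The 3 pooled matrices are then concatenated into a 6*1 feature vector.

5. Finally comes a fully connected layer. For two classes, it maps these 6 neurons to 2 output neurons.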
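To make the shape bookkeeping above concrete, here is a small pure-Python sketch of the figure's pipeline. It assumes random word vectors and random filter weights (the figure gives no values) and skips the bias and activation. It only checks that a 7*5 sentence matrix yields feature maps of length 4, 5 and 6, a 6-element pooled vector, and 2 outputs.

```python
import random

def conv_valid(sentence, filt):
    """Slide a full-width filter down the sentence matrix (stride 1, VALID padding).
    An n-row sentence and an h-row filter give n - h + 1 outputs, one per position."""
    n, h = len(sentence), len(filt)
    out = []
    for i in range(n - h + 1):
        total = 0.0
        for r in range(h):
            for c in range(len(filt[r])):
                total += sentence[i + r][c] * filt[r][c]
        out.append(total)
    return out

rng = random.Random(0)
d = 5                                                                  # word-vector dimension, as in the figure
sentence = [[rng.uniform(-1, 1) for _ in range(d)] for _ in range(7)]  # 7 words -> 7*5 matrix
filter_heights = [4, 4, 3, 3, 2, 2]                                    # two filters of each height
filters = [[[rng.uniform(-1, 1) for _ in range(d)] for _ in range(h)] for h in filter_heights]

feature_maps = [conv_valid(sentence, f) for f in filters]  # step 2: convolution
feature_vector = [max(fm) for fm in feature_maps]          # steps 3-4: max pooling, kept in group order = concatenation
W_fc = [[rng.uniform(-1, 1) for _ in range(2)] for _ in range(6)]
logits = [sum(feature_vector[i] * W_fc[i][k] for i in range(6)) for k in range(2)]  # step 5: 6 -> 2

print([len(fm) for fm in feature_maps])  # [4, 4, 5, 5, 6, 6]
print(len(feature_vector), len(logits))  # 6 2
```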

The code follows.

Step 1: set the hyperparameters.

```python
import tensorflow as tf
import numpy as np
import os
import time
import datetime
import data_helpers
from text_cnn import TextCNN
from tensorflow.contrib import learn
import pickle

# Parameters
# ==================================================

# Data loading params
# Fraction of the data held out for validation: 0.1, i.e. 10%
tf.flags.DEFINE_float("dev_sample_percentage", .1, "Percentage of the training data to use for validation")
# Positive samples: positive movie reviews
tf.flags.DEFINE_string("positive_data_file", "./data/rt-polaritydata/rt-polarity.pos", "Data source for the positive data.")
# Negative samples: negative movie reviews
tf.flags.DEFINE_string("negative_data_file", "./data/rt-polaritydata/rt-polarity.neg", "Data source for the negative data.")

# Model Hyperparameters
# Word-vector length
tf.flags.DEFINE_integer("embedding_dim", 128, "Dimensionality of character embedding (default: 128)")
# Filter heights: filters spanning 3, 4 and 5 rows; the width always equals embedding_dim (128 columns)
tf.flags.DEFINE_string("filter_sizes", "3,4,5", "Comma-separated filter sizes (default: '3,4,5')")
# Number of filters for each filter size
tf.flags.DEFINE_integer("num_filters", 64, "Number of filters per filter size (default: 64)")
# Dropout keep probability
tf.flags.DEFINE_float("dropout_keep_prob", 0.5, "Dropout keep probability (default: 0.5)")
# L2 regularization coefficient
tf.flags.DEFINE_float("l2_reg_lambda", 0.0005, "L2 regularization lambda (default: 0.0005)")

# Training parameters
# Batch size: how many rows (sentences) go into each batch
tf.flags.DEFINE_integer("batch_size", 64, "Batch Size (default: 64)")
# Number of training epochs
tf.flags.DEFINE_integer("num_epochs", 6, "Number of training epochs (default: 6)")
# Evaluate on the dev set every this many steps
tf.flags.DEFINE_integer("evaluate_every", 100, "Evaluate model on dev set after this many steps (default: 100)")
# Save a checkpoint every this many steps
tf.flags.DEFINE_integer("checkpoint_every", 100, "Save model after this many steps (default: 100)")
# Keep at most 5 checkpoints; once full, each new one replaces the oldest, so only the latest 5 remain
tf.flags.DEFINE_integer("num_checkpoints", 5, "Number of checkpoints to store (default: 5)")

# Misc Parameters
# Let TensorFlow fall back to an existing, supported device if the requested one is unavailable
tf.flags.DEFINE_boolean("allow_soft_placement", True, "Allow device soft device placement")
# Log which device each operation and tensor is assigned to
tf.flags.DEFINE_boolean("log_device_placement", False, "Log placement of ops on devices")

# Parse the flags
FLAGS = tf.flags.FLAGS
# FLAGS._parse_flags() and FLAGS.__flags only exist in TF < 1.5;
# in later TF 1.x versions flag_values_dict() parses the flags and returns them as a dict
flag_values = FLAGS.flag_values_dict()

# Print all parameters
print("\nParameters:")
for attr, value in sorted(flag_values.items()):
    print("{}={}".format(attr.upper(), value))
print("")
```

The comments explain everything, so I won't go over it further.

Step 2: prepare the data.

```python
# Data Preparation
# ==================================================

# Load data
print("Loading data...")
# Pass in the positive and negative samples
x_text, y = data_helpers.load_data_and_labels(FLAGS.positive_data_file, FLAGS.negative_data_file)

# Build vocabulary
# Maximum number of words in any sentence; every sentence must be brought to this same length
max_document_length = max([len(x.split(" ")) for x in x_text])
# Vocabulary
vocab_processor = learn.preprocessing.VocabularyProcessor(max_document_length)
# The constructor VocabularyProcessor(max_document_length, min_frequency=0, vocabulary=None, tokenizer_fn=None) takes 4 parameters:
# max_document_length: maximum document length. Longer texts are truncated; shorter ones are padded with 0
# min_frequency: minimum word frequency; only words occurring more than min_frequency times enter the vocabulary
# vocabulary: a CategoricalVocabulary object (I am not sure how to use it)
# tokenizer_fn: tokenizer function that splits a sentence or text into tokens, i.e. a word-segmentation function
# Map every word of every sentence to its integer id
x = np.array(list(vocab_processor.fit_transform(x_text)))
# Sentences shorter than max_document_length (56 here) are padded with 0
# x: [
#     [4719 59 182 34 190 804 0 0 0 0
#      0 0 0 0 0 ...]
#     [129 ...]
# ]
print("x_shape:", x.shape)
print("y_shape:", y.shape)

# Randomly shuffle data
np.random.seed(10)
shuffle_indices = np.random.permutation(np.arange(len(y)))
x_shuffled = x[shuffle_indices]
y_shuffled = y[shuffle_indices]

# Split train/test set
# TODO: This is very crude, should use cross-validation
# Split the data into two parts: 90% training, 10% validation
dev_sample_index = -1 * int(FLAGS.dev_sample_percentage * float(len(y)))
x_train, x_dev = x_shuffled[:dev_sample_index], x_shuffled[dev_sample_index:]
y_train, y_dev = y_shuffled[:dev_sample_index], y_shuffled[dev_sample_index:]
print("Vocabulary Size: {:d}".format(len(vocab_processor.vocabulary_)))
print("Train/Dev split: {:d}/{:d}".format(len(y_train), len(y_dev)))
print("x:", x_train[0:5])
print("y:", y_train[0:5])
```

This calls a helper function from data_helpers.py to load the positive and negative samples. Let's see how that function is written:

```python
import numpy as np

def load_data_and_labels(positive_data_file, negative_data_file):
    """
    Loads MR polarity data from files, splits the data into words and generates labels.
    Returns split sentences and labels.
    """
    # Load data from files
    with open(positive_data_file, "r", encoding='utf-8') as f:
        positive_examples = f.readlines()
    # Strip leading/trailing whitespace; shape: ['I like english', 'how are you', ...]
    positive_examples = [s.strip() for s in positive_examples]
    with open(negative_data_file, "r", encoding='utf-8') as f:
        negative_examples = f.readlines()
    negative_examples = [s.strip() for s in negative_examples]
    # Split by words
    # Merge positives and negatives
    x_text = positive_examples + negative_examples
    # Preprocess each sentence (clean_str lives elsewhere in data_helpers.py and is not shown in this post)
    # ['sentence', 'sentence', ...]
    x_text = [clean_str(sent) for sent in x_text]
    # Generate labels
    # Only two classes: positive reviews and negative reviews
    positive_labels = [[0, 1] for _ in positive_examples]  # shape [[0,1],[0,1],...]
    negative_labels = [[1, 0] for _ in negative_examples]  # shape [[1,0],[1,0],...]
    y = np.concatenate([positive_labels, negative_labels], 0)  # [[0,1],[0,1],...,[1,0],[1,0]]
    return [x_text, y]
```

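These are all basic operations that you can read through on your own, and the comments cover them. The goal is simply to get the sentences and their labels.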

The function returns the processed data in the format `[['sentence', ...], [[0,1], [0,1], ..., [1,0], [1,0], ...]]`.
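A minimal sketch of the labeling convention used by load_data_and_labels, using plain lists instead of NumPy. The three review sentences are made up for illustration:

```python
def make_labels(num_pos, num_neg):
    """One-hot labels in the same layout as load_data_and_labels:
    positive -> [0, 1], negative -> [1, 0], positives first."""
    return [[0, 1] for _ in range(num_pos)] + [[1, 0] for _ in range(num_neg)]

def to_class_index(one_hot):
    # Position of the 1 in a one-hot row (what argmax computes): 1 = positive, 0 = negative
    return one_hot.index(max(one_hot))

sentences = ["a gripping , funny film", "i loved it", "dull and far too long"]  # 2 positive, 1 negative
y = make_labels(2, 1)
print(list(zip(sentences, y)))
print([to_class_index(row) for row in y])  # [1, 1, 0]
```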

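Next, the script finds the largest number of words in any sentence, which the padding step needs. It then builds the vocabulary and pads with zeros using learn.preprocessing.VocabularyProcessor, which handles both in a single call.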
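The sketch below imitates what VocabularyProcessor does here in plain Python, so the output format is easy to see. It is not the library's implementation: reserving id 0 for both padding and unknown words, and numbering words in order of first appearance, are assumptions of this sketch.

```python
def build_vocab(texts, min_frequency=0):
    """Map each token to an integer id (starting at 1) in order of first appearance.
    Only tokens occurring more than min_frequency times are kept; id 0 is reserved here."""
    counts = {}
    for text in texts:
        for tok in text.split(" "):
            counts[tok] = counts.get(tok, 0) + 1
    vocab = {}
    for text in texts:
        for tok in text.split(" "):
            if tok not in vocab and counts[tok] > min_frequency:
                vocab[tok] = len(vocab) + 1
    return vocab

def transform(texts, vocab, max_document_length):
    """Convert each text to a fixed-length id list: truncate long texts, pad short ones with 0.
    Unknown words also map to 0 in this sketch."""
    rows = []
    for text in texts:
        ids = [vocab.get(tok, 0) for tok in text.split(" ")][:max_document_length]
        rows.append(ids + [0] * (max_document_length - len(ids)))
    return rows

x_text = ["i like this movie", "how bad", "i like it"]
max_document_length = max(len(t.split(" ")) for t in x_text)
vocab = build_vocab(x_text)
x = transform(x_text, vocab, max_document_length)
print(max_document_length)  # 4
print(x)                    # [[1, 2, 3, 4], [5, 6, 0, 0], [1, 2, 7, 0]]
```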

The dataset is then shuffled and split 9:1 into training and validation sets.
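The shuffle-and-split step can be sketched without NumPy as follows. It uses Python's random module instead of np.random, so the resulting order differs from the script's even with the same seed:

```python
import random

def shuffle_and_split(x, y, dev_fraction=0.1, seed=10):
    """Shuffle x and y with the same permutation, then hold out the last dev_fraction as the dev set.
    Mirrors the script's negative-index slicing: dev_sample_index = -int(dev_fraction * len(y))."""
    indices = list(range(len(y)))
    random.Random(seed).shuffle(indices)
    x_shuffled = [x[i] for i in indices]
    y_shuffled = [y[i] for i in indices]
    dev_sample_index = -1 * int(dev_fraction * float(len(y)))
    return (x_shuffled[:dev_sample_index], x_shuffled[dev_sample_index:],
            y_shuffled[:dev_sample_index], y_shuffled[dev_sample_index:])

x = list(range(100))
y = [i % 2 for i in range(100)]   # toy labels tied to x so alignment can be checked
x_train, x_dev, y_train, y_dev = shuffle_and_split(x, y)
print(len(x_train), len(x_dev))   # 90 10
```

One edge case of the negative-index trick: if dev_fraction * len(y) rounds down to 0, the slice `[:-0]` is `[:0]`, so the training set would be empty.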

Finally, training:

```python
# Training
# ==================================================

with tf.Graph().as_default():
    session_conf = tf.ConfigProto(
        allow_soft_placement=FLAGS.allow_soft_placement,
        log_device_placement=FLAGS.log_device_placement)
    sess = tf.Session(config=session_conf)
    with sess.as_default():
        cnn = TextCNN(
            sequence_length=x_train.shape[1],  # sequence_length: maximum number of words per sentence
            num_classes=y_train.shape[1],  # num_classes: number of classes
            vocab_size=len(vocab_processor.vocabulary_),  # vocab_size: total vocabulary size
            embedding_size=FLAGS.embedding_dim,  # embedding_size: word-vector length
            filter_sizes=list(map(int, FLAGS.filter_sizes.split(","))),  # filter_sizes: filter heights 3, 4, 5
            num_filters=FLAGS.num_filters,  # num_filters: number of filters per size
            l2_reg_lambda=FLAGS.l2_reg_lambda)  # l2_reg_lambda: L2 regularization coefficient

        # Define Training procedure
        # Counts the training steps taken so far
        global_step = tf.Variable(0, name="global_step", trainable=False)
        # Optimizer
        optimizer = tf.train.AdamOptimizer(1e-3)
        # Compute the gradients: the first half of minimize()
        grads_and_vars = optimizer.compute_gradients(cnn.loss)
        # Apply the computed gradients to the variables: the second half of minimize().
        # Returns an operation that applies the gradients and increments global_step.
        # Together, the compute_gradients/apply_gradients pair is equivalent to
        # train_op = optimizer.minimize(cnn.loss, global_step=global_step)
        train_op = optimizer.apply_gradients(grads_and_vars, global_step=global_step)

        # Keep track of gradient values and sparsity (optional)
        grad_summaries = []
        for g, v in grads_and_vars:  # g is the gradient, v the variable
            if g is not None:
                grad_hist_summary = tf.summary.histogram("{}/grad/hist".format(v.name), g)
                sparsity_summary = tf.summary.scalar("{}/grad/sparsity".format(v.name), tf.nn.zero_fraction(g))
                grad_summaries.append(grad_hist_summary)
                grad_summaries.append(sparsity_summary)
        grad_summaries_merged = tf.summary.merge(grad_summaries)

        # Output directory for models and summaries
        timestamp = str(int(time.time()))
        out_dir = os.path.abspath(os.path.join(os.path.curdir, "runs", timestamp))
        print("Writing to {}\n".format(out_dir))

        # Summaries for loss and accuracy
        loss_summary = tf.summary.scalar("loss", cnn.loss)
        acc_summary = tf.summary.scalar("accuracy", cnn.accuracy)

        # Train summaries: merge them, define the path, and create the writer
        train_summary_op = tf.summary.merge([loss_summary, acc_summary, grad_summaries_merged])
        train_summary_dir = os.path.join(out_dir, "summaries", "train")
        train_summary_writer = tf.summary.FileWriter(train_summary_dir, sess.graph)

        # Dev summaries
        dev_summary_op = tf.summary.merge([loss_summary, acc_summary])
        dev_summary_dir = os.path.join(out_dir, "summaries", "dev")
        dev_summary_writer = tf.summary.FileWriter(dev_summary_dir, sess.graph)

        # Checkpoint directory. Tensorflow assumes this directory already exists so we need to create it
        checkpoint_dir = os.path.abspath(os.path.join(out_dir, "checkpoints"))
        checkpoint_prefix = os.path.join(checkpoint_dir, "model")
        if not os.path.exists(checkpoint_dir):
            os.makedirs(checkpoint_dir)
        # Saver that keeps at most num_checkpoints (5) checkpoints
        saver = tf.train.Saver(tf.global_variables(), max_to_keep=FLAGS.num_checkpoints)

        # Write vocabulary
        vocab_processor.save(os.path.join(out_dir, "vocab"))

        # Initialize all variables
        sess.run(tf.global_variables_initializer())

        def train_step(x_batch, y_batch):
            """
            A single training step
            """
            feed_dict = {
                cnn.input_x: x_batch,
                cnn.input_y: y_batch,
                cnn.dropout_keep_prob: FLAGS.dropout_keep_prob
            }
            _, step, summaries, loss, accuracy = sess.run(
                [train_op, global_step, train_summary_op, cnn.loss, cnn.accuracy],
                feed_dict)
            time_str = datetime.datetime.now().isoformat()
            print("{}: step {}, loss {:g}, acc {:g}".format(time_str, step, loss, accuracy))
            train_summary_writer.add_summary(summaries, step)

        def dev_step(x_batch, y_batch, writer=None):
            """
            Evaluates model on a dev set
            """
            feed_dict = {
                cnn.input_x: x_batch,
                cnn.input_y: y_batch,
                cnn.dropout_keep_prob: 1.0  # no dropout at evaluation time
            }
            step, summaries, loss, accuracy = sess.run(
                [global_step, dev_summary_op, cnn.loss, cnn.accuracy],
                feed_dict)
            time_str = datetime.datetime.now().isoformat()
            print("{}: step {}, loss {:g}, acc {:g}".format(time_str, step, loss, accuracy))
            if writer:
                writer.add_summary(summaries, step)

        # Generate batches
        batches = data_helpers.batch_iter(
            list(zip(x_train, y_train)), FLAGS.batch_size, FLAGS.num_epochs)
        # Training loop. For each batch...
        for batch in batches:
            x_batch, y_batch = zip(*batch)
            train_step(x_batch, y_batch)
            current_step = tf.train.global_step(sess, global_step)
            # Evaluate on the dev set
            if current_step % FLAGS.evaluate_every == 0:
                print("\nEvaluation:")
                dev_step(x_dev, y_dev, writer=dev_summary_writer)
                print("")
            # Save the model
            if current_step % FLAGS.checkpoint_every == 0:
                path = saver.save(sess, checkpoint_prefix, global_step=current_step)
                print("Saved model checkpoint to {}\n".format(path))
```

The script creates a session and builds the model. Now let's see what TextCNN does.

text_cnn.py

```python
import tensorflow as tf
import numpy as np


class TextCNN(object):
    """
    A CNN for text classification.
    Uses an embedding layer, followed by a convolutional, max-pooling and softmax layer.
    """
    # sequence_length: maximum number of words per sentence
    # num_classes: number of classes
    # vocab_size: total vocabulary size
    # embedding_size: word-vector length
    # filter_sizes: filter heights 3, 4, 5
    # num_filters: number of filters per size
    # l2_reg_lambda: L2 regularization coefficient
    def __init__(
            self, sequence_length, num_classes, vocab_size,
            embedding_size, filter_sizes, num_filters, l2_reg_lambda=0.0):

        # Placeholders for input, output and dropout
        self.input_x = tf.placeholder(tf.int32, [None, sequence_length], name="input_x")
        self.input_y = tf.placeholder(tf.float32, [None, num_classes], name="input_y")
        # Dropout keep probability
        self.dropout_keep_prob = tf.placeholder(tf.float32, name="dropout_keep_prob")

        # Keeping track of l2 regularization loss (optional)
        l2_loss = tf.constant(0.0)

        # Embedding layer
        with tf.device('/cpu:0'), tf.name_scope("embedding"):
            # vocab_size: vocabulary size
            self.W = tf.Variable(
                tf.random_uniform([vocab_size, embedding_size], -1.0, 1.0),
                name="W")
            # [batch_size, sequence_length, embedding_size]
            self.embedded_chars = tf.nn.embedding_lookup(self.W, self.input_x)
            # Add a channel dimension: [batch_size, sequence_length, embedding_size, 1], much like an image
            self.embedded_chars_expanded = tf.expand_dims(self.embedded_chars, -1)

        # Create a convolution + maxpool layer for each filter size
        pooled_outputs = []
        # Three different filter sizes, hence the loop
        for i, filter_size in enumerate(filter_sizes):
            with tf.name_scope("conv-maxpool-%s" % filter_size):
                # Convolution Layer
                filter_shape = [filter_size, embedding_size, 1, num_filters]
                # Convolution parameters
                W = tf.Variable(tf.truncated_normal(filter_shape, stddev=0.1), name="W")
                b = tf.Variable(tf.constant(0.1, shape=[num_filters]), name="b")
                # Convolution
                conv = tf.nn.conv2d(
                    self.embedded_chars_expanded,  # the matrix to convolve
                    W,  # weights
                    strides=[1, 1, 1, 1],  # strides
                    padding="VALID",
                    name="conv")
                # Apply nonlinearity (ReLU activation)
                h = tf.nn.relu(tf.nn.bias_add(conv, b), name="relu")
                # Maxpooling over the outputs
                pooled = tf.nn.max_pool(
                    h,
                    ksize=[1, sequence_length - filter_size + 1, 1, 1],
                    strides=[1, 1, 1, 1],
                    padding='VALID',
                    name="pool")
                pooled_outputs.append(pooled)

        # Combine all the pooled features
        # number of filters * number of filter sizes
        num_filters_total = num_filters * len(filter_sizes)
        # Concatenate the three pooled outputs
        self.h_pool = tf.concat(pooled_outputs, 3)
        # Flatten into one vector per example
        self.h_pool_flat = tf.reshape(self.h_pool, [-1, num_filters_total])

        # Add dropout
        with tf.name_scope("dropout"):
            self.h_drop = tf.nn.dropout(self.h_pool_flat, self.dropout_keep_prob)

        # Final (unnormalized) scores and predictions
        # Fully connected layer
        with tf.name_scope("output"):
            W = tf.get_variable(
                "W",
                shape=[num_filters_total, num_classes],  # [64*3, 2]
                initializer=tf.contrib.layers.xavier_initializer())
            b = tf.Variable(tf.constant(0.1, shape=[num_classes]), name="b")
            # L2 regularization
            l2_loss += tf.nn.l2_loss(W)
            l2_loss += tf.nn.l2_loss(b)
            # Keep the raw logits: softmax_cross_entropy_with_logits applies softmax itself,
            # so wrapping this in tf.nn.softmax would apply softmax twice
            self.scores = tf.nn.xw_plus_b(self.h_drop, W, b, name="scores")
            # Final prediction (argmax is the same with or without softmax)
            self.predictions = tf.argmax(self.scores, 1, name="predictions")

        # Calculate mean cross-entropy loss
        with tf.name_scope("loss"):
            losses = tf.nn.softmax_cross_entropy_with_logits(logits=self.scores, labels=self.input_y)
            self.loss = tf.reduce_mean(losses) + l2_reg_lambda * l2_loss

        # Accuracy
        with tf.name_scope("accuracy"):
            correct_predictions = tf.equal(self.predictions, tf.argmax(self.input_y, 1))
            self.accuracy = tf.reduce_mean(tf.cast(correct_predictions, "float"), name="accuracy")
```

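When an object of this class is initialized, we pass in several hyperparameters: the maximum number of words per sentence, the number of classes, the vocabulary size, the word-vector length, the filter sizes, the number of filters and the L2 regularization coefficient.

The constructor then sets up a few placeholders. Next comes a key part, the embedding layer: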

```python
with tf.device('/cpu:0'), tf.name_scope("embedding"):
    # vocab_size: vocabulary size
    self.W = tf.Variable(
        tf.random_uniform([vocab_size, embedding_size], -1.0, 1.0),
        name="W")
    # [batch_size, sequence_length, embedding_size]
    self.embedded_chars = tf.nn.embedding_lookup(self.W, self.input_x)
    # Add a dimension: [batch_size, sequence_length, embedding_size, 1], much like an image
    self.embedded_chars_expanded = tf.expand_dims(self.embedded_chars, -1)
```

W holds the word vectors of every word in the vocabulary, and embedded_chars is the word-vector matrix of the input sentences, with shape [batch_size, sequence_length, embedding_size]. An extra dimension is then added manually.
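A plain-Python sketch of what embedding_lookup and expand_dims do to the shapes. The vocabulary size of 10, the 4-dimensional embeddings and the two padded sentences are toy values chosen for illustration:

```python
import random

def embedding_lookup(W, ids_batch):
    """Replace every word id with its row of the embedding matrix W.
    [batch_size][sequence_length] -> [batch_size][sequence_length][embedding_size]."""
    return [[W[i] for i in ids] for ids in ids_batch]

def expand_last_dim(t):
    """Wrap every scalar in a list, i.e. add a trailing axis of size 1 (like tf.expand_dims(x, -1))."""
    if isinstance(t, list):
        return [expand_last_dim(v) for v in t]
    return [t]

def shape(t):
    # Shape of a regular nested list
    dims = []
    while isinstance(t, list):
        dims.append(len(t))
        t = t[0]
    return dims

vocab_size, embedding_size = 10, 4
rng = random.Random(0)
W = [[rng.uniform(-1.0, 1.0) for _ in range(embedding_size)] for _ in range(vocab_size)]
input_x = [[4, 7, 1, 0, 0], [2, 3, 0, 0, 0]]  # 2 sentences padded to length 5
embedded = embedding_lookup(W, input_x)
expanded = expand_last_dim(embedded)
print(shape(embedded))  # [2, 5, 4]
print(shape(expanded))  # [2, 5, 4, 1]
```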

Next is another key part: the convolution and pooling layer.

First, let's look at the tf.nn.conv2d function:

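```python
import tensorflow as tf

def conv2d(x, W):
    # "2d" means two-dimensional
    # x is a tensor of shape [batch, in_height, in_width, in_channels] (NHWC): batch size (batch_size=100 in that post's example),
    #   image height, image width, and number of channels (1 for grayscale, 3 for color)
    # W is the filter, a tensor of shape [filter_height, filter_width, in_channels, out_channels]
    # strides: strides[0] = strides[3] = 1; strides[1] is the stride along the height, strides[2] the stride along the width
    # padding: either 'SAME' or 'VALID'. 'SAME' pads with zeros; 'VALID' keeps the filter entirely inside the input
    # (the quoted excerpt shows only the comments; this return line is an assumed body so the function runs)
    return tf.nn.conv2d(x, W, strides=[1, 1, 1, 1], padding='SAME')
```

Copyright notice: this is an original article by CSDN blogger "CtrlZ1", licensed under CC 4.0 BY-SA. When reposting, include a link to the original source and this notice.

Original link: https://blog.csdn.net/qq_41076797/article/details/99212303

Now look at how tf.nn.conv2d performs the convolution here. The input to convolve, self.embedded_chars_expanded, must have the format [batch_size, in_height, in_width, in_channels], which is why the extra dimension was added, and why the analogy with images holds. in_height and in_width deserve a closer look: in_height is the maximum sentence length and in_width is the word-vector dimension, so, viewed as a matrix, they are exactly its height and width. W is a [filter_size, embedding_size, 1, num_filters] tensor, the strides are all 1, and the padding scheme is VALID, i.e. no zero padding, so the output is smaller than the input. From W, the convolution window is filter_size * embedding_size. Because the input is also exactly embedding_size wide, the window can only slide downward, so the convolution output has the format [batch_size, in_height - filter_size + 1, 1, num_filters]; for filter size 3 that is [64, max sentence length - 2, 1, 64]. Each filter produces one feature map along the last dimension, so there are as many feature maps as filters.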
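The output-shape arithmetic for a VALID convolution can be checked with a few lines of Python. This sketch assumes batch_size=64, embedding_size=128, num_filters=64 and a sequence_length of 56 (the padded length mentioned earlier):

```python
def conv_output_height(in_height, filter_height, stride=1):
    """Output size of a VALID convolution along one axis: (in - filter) // stride + 1."""
    return (in_height - filter_height) // stride + 1

def conv_output_shape(batch_size, sequence_length, embedding_size, filter_size, num_filters):
    # The filter is exactly as wide as the embedding, so the width collapses to 1
    return [batch_size,
            conv_output_height(sequence_length, filter_size),
            conv_output_height(embedding_size, embedding_size),
            num_filters]

for fs in (3, 4, 5):
    print(fs, conv_output_shape(64, 56, 128, fs, 64))
# 3 [64, 54, 1, 64]
# 4 [64, 53, 1, 64]
# 5 [64, 52, 1, 64]
```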

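Next, the ReLU activation is applied.

This needs little explanation. The output is still [batch_size, in_height - filter_size + 1, 1, num_filters] = [batch_size, sequence_length - (3, 4 or 5) + 1, 1, 64], i.e. [64, max sentence length - (2, 3 or 4), 1, 64].

Then comes pooling. The pooling window is (sequence_length - filter_size + 1) * 1, exactly the size of the feature map, so after pooling the output is [batch_size, 1, 1, 64].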
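Because the pooling window covers the whole feature map, max pooling here simply takes the maximum over all positions for each filter. A plain-Python sketch with toy feature maps for one sentence (sequence_length = 5, num_filters = 2, values made up):

```python
def max_over_time(feature_map):
    """feature_map is [height][num_filters]; return the max of each column (one value per filter).
    Same result as max_pool with ksize height = sequence_length - filter_size + 1 and VALID padding."""
    num_filters = len(feature_map[0])
    return [max(row[k] for row in feature_map) for k in range(num_filters)]

def concat_pooled(pooled_per_size):
    # Concatenate the pooled vectors of all filter sizes -> length num_filters * len(filter_sizes)
    out = []
    for p in pooled_per_size:
        out.extend(p)
    return out

# Post-ReLU feature maps for filter sizes 3, 4, 5 on a 5-word sentence: heights 3, 2, 1
fm3 = [[0.1, 0.0], [0.7, 0.2], [0.3, 0.9]]
fm4 = [[0.5, 0.4], [0.2, 0.6]]
fm5 = [[0.8, 0.3]]
h_pool_flat = concat_pooled([max_over_time(fm) for fm in (fm3, fm4, fm5)])
print(h_pool_flat)  # [0.7, 0.9, 0.5, 0.6, 0.8, 0.3]
```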

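The three pooled outputs are then concatenated and flattened into a vector of shape [batch_size, 64*3], followed by dropout. Then comes another important layer, the fully connected layer: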

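```python
# Fully connected layer
with tf.name_scope("output"):
    W = tf.get_variable(
        "W",
        shape=[num_filters_total, num_classes],  # [64*3, 2]
        initializer=tf.contrib.layers.xavier_initializer())
    b = tf.Variable(tf.constant(0.1, shape=[num_classes]), name="b")
    # L2 regularization
    l2_loss += tf.nn.l2_loss(W)
    l2_loss += tf.nn.l2_loss(b)
    # Raw logits; softmax_cross_entropy_with_logits applies softmax internally
    self.scores = tf.nn.xw_plus_b(self.h_drop, W, b, name="scores")
    # Final prediction
    self.predictions = tf.argmax(self.scores, 1, name="predictions")

# Calculate mean cross-entropy loss
with tf.name_scope("loss"):
    losses = tf.nn.softmax_cross_entropy_with_logits(logits=self.scores, labels=self.input_y)
    self.loss = tf.reduce_mean(losses) + l2_reg_lambda * l2_loss

# Accuracy
with tf.name_scope("accuracy"):
    correct_predictions = tf.equal(self.predictions, tf.argmax(self.input_y, 1))
    self.accuracy = tf.reduce_mean(tf.cast(correct_predictions, "float"), name="accuracy")
```

The scores returned by the fully connected layer form a [batch_size, 2] matrix of unnormalized logits. softmax_cross_entropy_with_logits applies softmax to them and compares the result with the true labels input_y to get the per-example losses; from these the mean loss (plus the L2 term) and the accuracy are computed. The logits must not be passed through tf.nn.softmax first: the loss function already applies softmax, and applying it twice distorts the loss and its gradients. That completes the model.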
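Why feeding softmax output into softmax_cross_entropy_with_logits is a problem can be shown with a short plain-Python sketch. The function below reimplements the same formula for a single example (it is not TensorFlow's code), and the logits are made-up values:

```python
import math

def softmax(z):
    m = max(z)                          # subtract the max for numerical stability
    e = [math.exp(v - m) for v in z]
    s = sum(e)
    return [v / s for v in e]

def softmax_cross_entropy_with_logits(logits, labels):
    """Same formula as tf.nn.softmax_cross_entropy_with_logits for one example:
    -sum(labels * log(softmax(logits)))."""
    p = softmax(logits)
    return -sum(l * math.log(q) for l, q in zip(labels, p))

logits = [-2.0, 3.0]  # raw xw_plus_b output, strongly favouring class 1
label = [0, 1]
correct = softmax_cross_entropy_with_logits(logits, label)
double = softmax_cross_entropy_with_logits(softmax(logits), label)  # the double-softmax bug
print(round(correct, 4), round(double, 4))
```

With two classes, softmax outputs lie in [0, 1], so the second softmax sees inputs that differ by less than 1 and the loss can never fall below ln(1 + e^-1) ≈ 0.313, however confident the model is. The argmax, and therefore the accuracy, is unaffected, which is why the bug is easy to miss.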

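Looking back at the training script, the rest is straightforward: the optimizer, the gradient computation, model saving and so on are all routine operations.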
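The comment in the training script says that compute_gradients followed by apply_gradients amounts to minimize(). The toy class below illustrates that split in plain Python. It is hypothetical, uses plain gradient descent rather than Adam, and minimizes a simple quadratic instead of the CNN loss:

```python
class ToySGD:
    """Hypothetical toy optimizer showing the two halves of minimize().
    Plain gradient descent, not Adam, and no TensorFlow involved."""
    def __init__(self, learning_rate):
        self.learning_rate = learning_rate

    def compute_gradients(self, grad_fn, params):
        # Return (gradient, variable-name) pairs, like grads_and_vars in the script
        return [(g, name) for name, g in grad_fn(params).items()]

    def apply_gradients(self, grads_and_vars, params, global_step):
        # Update each variable, then increment the step counter once per call
        for g, name in grads_and_vars:
            params[name] -= self.learning_rate * g
        return global_step + 1

    def minimize(self, grad_fn, params, global_step):
        return self.apply_gradients(self.compute_gradients(grad_fn, params), params, global_step)

# loss(w, b) = (w - 3)^2 + (b + 1)^2, minimum at w = 3, b = -1
def grad_fn(p):
    return {"w": 2 * (p["w"] - 3), "b": 2 * (p["b"] + 1)}

params = {"w": 0.0, "b": 0.0}
opt = ToySGD(0.1)
global_step = 0
for _ in range(100):
    global_step = opt.minimize(grad_fn, params, global_step)
print(global_step, round(params["w"], 3), round(params["b"], 3))  # 100 3.0 -1.0
```

Splitting minimize() into two calls is what lets the script inspect grads_and_vars (the gradient histograms and sparsity summaries) before the update is applied.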