Apache Flink: Reading Key Data Formats and SQL Support

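Event merging currently takes two forms: real-time merging, which merges data and generates events from the data in Kafka; and periodic merging, which merges data and generates events from the data in Hive.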

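The input/output data sources that our SQL-based merging currently uses with Spark Streaming, and Flink's support for each of them, are listed below:

| Data source | Flink support |
|---|---|
| Kafka | A schema must be defined |
| HDFS (parquet/csv/textfile) | Parquet must be read with AvroParquetInputFormat; csv/textfile can be read with readCsvFile and a Hadoop text input format, respectively |
| Hive | 1. The Hive metastore service must be running to expose the Thrift metastore interface; 2. Reading depends on flink-hcatalog |
| JDBC (PostgreSQL) | JDBCInputFormat |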

Below, we test whether Apache Flink supports each of these formats.

1.Kafka

First, define a POJO class to represent the contents of the DataStream read from Kafka:

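```java
package com.flinklearn.models;

/**
 * Created by dell on 2018/10/23.
 * Flink POJO: public class, public no-arg constructor and a getter/setter for each
 * private field, so the Table API can reference the field by name ('msg).
 */
public class TamAlert {

    private String msg;

    public TamAlert() {}

    public String getMsg() {
        return msg;
    }

    public void setMsg(String msg) {
        this.msg = msg;
    }
}
```

Next, running SQL on a Flink DataStream works differently from Spark. In Spark you simply keep applying transform and registerTempTable to the stream, whereas in Flink the DataStream must first be converted into a Table before SQL can run on it, and applying further transformations requires converting the Table back into a DataStream.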
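Registering the stream with the field expression `'msg` relies on TamAlert being a Flink POJO: a public (standalone or static nested) class with a public no-argument constructor, whose non-public fields all have public getters and setters. The plain-Java sketch below checks those rules with reflection. It is a rough, hypothetical approximation written for illustration (the class names `PojoRulesCheck` and `BrokenAlert` are made up), not Flink's own type-extraction logic, and it ignores inherited fields and whether each field type is serializable.

```java
import java.lang.reflect.Constructor;
import java.lang.reflect.Field;
import java.lang.reflect.Modifier;
import java.util.ArrayList;
import java.util.List;

public class PojoRulesCheck {

    // Same shape as TamAlert: public, public no-arg constructor, getter and setter for msg.
    public static class TamAlert {
        private String msg;
        public TamAlert() {}
        public String getMsg() { return msg; }
        public void setMsg(String msg) { this.msg = msg; }
    }

    // Hypothetical counter-example: no no-arg constructor and no setter for msg.
    public static class BrokenAlert {
        private String msg;
        public BrokenAlert(String msg) { this.msg = msg; }
        public String getMsg() { return msg; }
    }

    // Returns the rule violations found; an empty list means the class passes this rough check.
    static List<String> violations(Class<?> clazz) {
        List<String> problems = new ArrayList<>();
        int classMods = clazz.getModifiers();
        if (!Modifier.isPublic(classMods)) {
            problems.add("class is not public");
        }
        if (clazz.isMemberClass() && !Modifier.isStatic(classMods)) {
            problems.add("non-static inner class");
        }
        try {
            Constructor<?> ctor = clazz.getDeclaredConstructor();
            if (!Modifier.isPublic(ctor.getModifiers())) {
                problems.add("no-arg constructor is not public");
            }
        } catch (NoSuchMethodException e) {
            problems.add("missing public no-arg constructor");
        }
        for (Field f : clazz.getDeclaredFields()) {
            int mods = f.getModifiers();
            // Static, transient, synthetic and public fields need no accessors.
            if (Modifier.isStatic(mods) || Modifier.isTransient(mods)
                    || f.isSynthetic() || Modifier.isPublic(mods)) {
                continue;
            }
            String name = f.getName();
            String suffix = Character.toUpperCase(name.charAt(0)) + name.substring(1);
            if (!hasPublicMethod(clazz, "get" + suffix) && !hasPublicMethod(clazz, "is" + suffix)) {
                problems.add("field '" + name + "' has no public getter");
            }
            if (!hasPublicMethod(clazz, "set" + suffix, f.getType())) {
                problems.add("field '" + name + "' has no public setter");
            }
        }
        return problems;
    }

    // getMethod only finds public methods (including inherited ones).
    private static boolean hasPublicMethod(Class<?> clazz, String name, Class<?>... params) {
        try {
            clazz.getMethod(name, params);
            return true;
        } catch (NoSuchMethodException e) {
            return false;
        }
    }

    public static void main(String[] args) {
        System.out.println("TamAlert: " + violations(TamAlert.class));
        System.out.println("BrokenAlert: " + violations(BrokenAlert.class));
    }
}
```

Running `main` prints an empty list for TamAlert and two violations for BrokenAlert (the missing no-arg constructor and the missing setter), which is the kind of class Flink would not treat as a POJO.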

The code is as follows:

```scala
package com.flinklearn.main

import java.util.Properties

import com.alibaba.fastjson.JSON
import com.flinklearn.models.TamAlert
import org.apache.flink.api.common.serialization.SimpleStringSchema
import org.apache.flink.streaming.api.scala._
import org.apache.flink.streaming.connectors.kafka.FlinkKafkaConsumer010
import org.apache.flink.table.api.TableEnvironment
import org.apache.flink.table.api.scala.StreamTableEnvironment
import org.apache.flink.table.api.scala._

/**
 * Created by dell on 2018/10/22.
 */
class Main {

  def startApp(): Unit = {
    val properties = new Properties()
    properties.setProperty("bootstrap.servers", "brokerserver")
    properties.setProperty("group.id", "com.flinklearn.main.Main")

    val env = StreamExecutionEnvironment.getExecutionEnvironment

    // Read JSON strings from Kafka and map payload.msg into a TamAlert;
    // records that fail to parse are logged and dropped (flatMap emits nothing for them)
    val stream: DataStream[TamAlert] = env
      .addSource(new FlinkKafkaConsumer010[String]("mytopic", new SimpleStringSchema(), properties))
      .flatMap { line =>
        try {
          val payload = JSON.parseObject(line).getJSONObject("payload")
          val alert = new TamAlert()
          alert.setMsg(payload.getString("msg"))
          Seq(alert)
        } catch {
          case ex: Exception =>
            ex.printStackTrace()
            Seq.empty[TamAlert]
        }
      }

    // Register the stream as table temp_alert and print the msg column
    val tableEnv: StreamTableEnvironment = TableEnvironment.getTableEnvironment(env)
    tableEnv.registerDataStream("temp_alert", stream, 'msg)
    val httpTable = tableEnv.sqlQuery("select msg from temp_alert")

    // The query returns a single String column, so convert it back to a DataStream[String]
    val httpStream = tableEnv.toAppendStream[String](httpTable)
    httpStream.print()

    env.execute("Kafka sql test.")
  }
}

object Main {
  def main(args: Array[String]): Unit = {
    new Main().startApp()
  }
}
```

2.HDFS

2.1 Parquet

For Parquet data on HDFS, Apache Flink has no convenient read.parquet()-style interface like Spark; the files have to be read through AvroParquetInputFormat. The steps are as follows:

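1. Add the corresponding dependencies to pom.xml:

```xml
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-hadoop-compatibility_2.11</artifactId>
    <version>1.6.1</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-avro</artifactId>
    <version>1.6.1</version>
</dependency>
<dependency>
    <groupId>org.apache.parquet</groupId>
    <artifactId>parquet-avro</artifactId>
    <version>1.10.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-mapreduce-client-core</artifactId>
    <version>3.1.1</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-hdfs</artifactId>
    <version>3.1.1</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-core</artifactId>
    <version>1.2.1</version>
</dependency>
```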

2. Define the schema in an .avsc file:

```json
{"namespace": "com.flinklearn.models",
 "type": "record",
 "name": "AvroTamAlert",
 "fields": [
     {"name": "msg", "type": ["string","null"]}
 ]
}
```

3. Use avro-tools to generate the corresponding Java class and copy the Java file into the project (AvroTamAlert.java in this example).

4. Read the Parquet files with AvroParquetInputFormat:

```scala
package com.flinklearn.main

import com.flinklearn.models.AvroTamAlert
import org.apache.flink.api.java.hadoop.mapreduce.HadoopInputFormat
import org.apache.flink.api.scala._
import org.apache.flink.table.api.TableEnvironment
import org.apache.flink.table.api.scala._
import org.apache.hadoop.fs.Path
import org.apache.hadoop.mapreduce.Job
import org.apache.hadoop.mapreduce.lib.input.FileInputFormat
import org.apache.parquet.avro.AvroParquetInputFormat

/**
 * Created by dell on 2018/10/23.
 */
class Main {

  def startApp(): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment

    // Configure the Hadoop job: input directory and Avro read schema
    val job = Job.getInstance()
    FileInputFormat.addInputPath(job, new Path("/user/hive/warehouse/xx.db/yy"))
    AvroParquetInputFormat.setAvroReadSchema(job, AvroTamAlert.getClassSchema)

    // Wrap the Hadoop AvroParquetInputFormat; each record is a Tuple2<Void, AvroTamAlert>
    val dIf = new HadoopInputFormat[Void, AvroTamAlert](
      new AvroParquetInputFormat[AvroTamAlert](), classOf[Void], classOf[AvroTamAlert], job)

    // The schema only defines msg, declared as ["string","null"], so it may be null
    val dataset: DataSet[String] = env.createInput(dIf)
      .map(record => Option(record.f1.getMsg).map(_.toString).orNull)

    val tableEnv = TableEnvironment.getTableEnvironment(env)
    tableEnv.registerDataSet("tmp_table", dataset, 'msg)
    val table = tableEnv.sqlQuery("select msg from tmp_table")

    // print() triggers execution in the DataSet API, so env.execute() is not called afterwards
    tableEnv.toDataSet[String](table).print()
  }
}

object Main {
  def main(args: Array[String]): Unit = {
    new Main().startApp()
  }
}
```

2.2 CSV

The parameters that need to be added are described in Section 2.3.
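The CSV job in this section and the TextFile job in 2.3 both expect every line of /mytest to be a `name,num` pair. The per-line rule used in 2.3 (split on commas, keep only lines with exactly two fields, parse the second field as an integer) is sketched below in plain Java so it can be tried without a cluster. The class `NameNumParser` and the sample lines are hypothetical; this sketch drops lines whose second field is not an integer instead of throwing.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

public class NameNumParser {

    // Hypothetical holder for one (name, num) row, mirroring the (String, Int) tuple in the Scala jobs.
    static final class NameNum {
        final String name;
        final int num;

        NameNum(String name, int num) {
            this.name = name;
            this.num = num;
        }

        @Override
        public String toString() {
            return "(" + name + "," + num + ")";
        }
    }

    // Parses one line; returns empty for lines without exactly two fields
    // or with a non-integer second field, instead of returning null or throwing.
    static Optional<NameNum> parse(String line) {
        String[] fields = line.split(",");
        if (fields.length != 2) {
            return Optional.empty();
        }
        try {
            return Optional.of(new NameNum(fields[0], Integer.parseInt(fields[1].trim())));
        } catch (NumberFormatException e) {
            return Optional.empty();
        }
    }

    // Keeps only well-formed rows, the plain-Java counterpart of a flatMap over parse results.
    static List<NameNum> parseAll(List<String> lines) {
        List<NameNum> out = new ArrayList<>();
        for (String line : lines) {
            parse(line).ifPresent(out::add);
        }
        return out;
    }

    public static void main(String[] args) {
        List<String> lines = List.of("alice,3", "bob,x", "carol", "dave,7,extra", "erin, 5");
        System.out.println(parseAll(lines));
    }
}
```

For the sample input in `main` it prints `[(alice,3), (erin,5)]`: the non-numeric, one-field and three-field lines are skipped rather than failing the whole job.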

```scala
package com.flinklearn.main

import org.apache.flink.api.scala._
import org.apache.flink.table.api.TableEnvironment
import org.apache.flink.table.api.scala._

/**
 * Created by dell on 2018/10/25.
 */
object Main {

  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment

    // Each line is parsed by position into a (name, num) tuple
    val dataset: DataSet[(String, Int)] = env.readCsvFile[(String, Int)]("hdfs://ip:8020/mytest")

    val tableEnv = TableEnvironment.getTableEnvironment(env)
    tableEnv.registerDataSet("tmp_table", dataset, 'name, 'num)
    val table = tableEnv.sqlQuery("select name,num from tmp_table")

    // print() triggers execution in the DataSet API; calling env.execute() afterwards
    // would fail because no new data sinks have been defined
    val rtnDataset = tableEnv.toDataSet[(String, Int)](table)
    rtnDataset.print()
  }
}
```

2.3 TextFile

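A few key parameters must be added to flink-conf.yaml:

The first parameter specifies the location of the Hadoop configuration files.

The second parameter switches Flink to legacy mode. Flink 1.6.1 is built with Scala 2.11, and using the Scala API otherwise runs into problems (job graph generation fails; the exact cause is still unclear).

The third parameter sets the class-loading order (without it, reading from HDFS fails with an unreadable-block error).

In addition, flink-hadoop-compatibility_2.11-1.6.1.jar must be placed in the flink/lib directory; packaging it into the job jar through the pom does not work.

Once all of the above is done, files on HDFS can be read correctly: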

```scala
package com.flinklearn.main

import org.apache.flink.api.scala._
import org.apache.flink.hadoopcompatibility.scala.HadoopInputs
import org.apache.flink.table.api.TableEnvironment
import org.apache.flink.table.api.scala._
import org.apache.hadoop.io.{LongWritable, Text}
import org.apache.hadoop.mapreduce.lib.input.CombineTextInputFormat

import scala.util.Try

/**
 * Created by dell on 2018/10/25.
 */
object Main {

  def main(args: Array[String]): Unit = {
    val env = ExecutionEnvironment.getExecutionEnvironment

    // Read the file through Hadoop's CombineTextInputFormat as (byte offset, line) pairs
    val dataset: DataSet[(LongWritable, Text)] = env.createInput(
      HadoopInputs.readHadoopFile[LongWritable, Text](
        new CombineTextInputFormat, classOf[LongWritable], classOf[Text], "/mytest"))

    // Keep lines of the form "name,num"; lines with the wrong field count or a
    // non-numeric num are dropped instead of failing the job
    val transDataset: DataSet[(String, Int)] = dataset.flatMap { line =>
      line._2.toString.split(",") match {
        case Array(name, num) => Try(num.trim.toInt).toOption.map(n => (name, n)).toList
        case _ => Seq.empty[(String, Int)]
      }
    }

    // count() also triggers a job execution
    println(transDataset.count())

    val tableEnv = TableEnvironment.getTableEnvironment(env)
    tableEnv.registerDataSet("tmp_table", transDataset, 'name, 'num)
    val table = tableEnv.sqlQuery("select name,num from tmp_table")

    // print() executes the plan, so env.execute() is not called afterwards
    val rtnDataset = tableEnv.toDataSet[(String, Int)](table)
    rtnDataset.print()
  }
}
```

3.Hive

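1. Download the flink-hcatalog source code and add it to your own project (don't use its jar, because the Hive libraries it depends on are too old). Download link: http://central.maven.org/maven2/org/apache/flink/flink-hcatalog/1.6.1/flink-hcatalog-1.6.1-sources.jar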

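2. Add the dependencies to the pom file:

```xml
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-common</artifactId>
    <version>2.7.3</version>
</dependency>
<dependency>
    <groupId>org.apache.flink</groupId>
    <artifactId>flink-hadoop-fs</artifactId>
    <version>1.6.1</version>
</dependency>
<dependency>
    <groupId>com.jolbox</groupId>
    <artifactId>bonecp</artifactId>
    <version>0.8.0.RELEASE</version>
</dependency>
<dependency>
    <groupId>com.twitter</groupId>
    <artifactId>parquet-hive-bundle</artifactId>
    <version>1.6.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-exec</artifactId>
    <version>1.2.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-metastore</artifactId>
    <version>1.2.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-cli</artifactId>
    <version>1.2.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-common</artifactId>
    <version>1.2.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-service</artifactId>
    <version>1.2.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hive</groupId>
    <artifactId>hive-shims</artifactId>
    <version>1.2.0</version>
</dependency>
<dependency>
    <groupId>org.apache.hive.hcatalog</groupId>
    <artifactId>hive-hcatalog-core</artifactId>
    <version>1.2.2</version>
</dependency>
<dependency>
    <groupId>org.apache.thrift</groupId>
    <artifactId>libfb303</artifactId>
    <version>0.9.3</version>
    <type>pom</type>
</dependency>
```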

3. Add all of the following jars to Flink's lib directory:

```
accumulo-core-1.6.0.jar derby-10.11.1.1.jar hive-serde-1.2.0.jar mail-1.4.1.jar
accumulo-fate-1.6.0.jar derbyclient-10.14.2.0.jar hive-service-1.2.0.jar maven-scm-api-1.4.jar
accumulo-start-1.6.0.jar eigenbase-properties-1.1.5.jar hive-shims-0.20S-1.2.0.jar maven-scm-provider-svn-commons-1.4.jar
accumulo-trace-1.6.0.jar flink-dist_2.11-1.6.1.jar hive-shims-0.23-1.2.0.jar maven-scm-provider-svnexe-1.4.jar
activation-1.1.jar flink-hadoop-compatibility_2.11-1.6.1.jar hive-shims-1.2.0.jar netty-3.7.0.Final.jar
ant-1.9.1.jar flink-python_2.11-1.6.1.jar hive-shims-common-1.2.0.jar opencsv-2.3.jar
ant-launcher-1.9.1.jar flink-shaded-hadoop2-uber-1.6.1.jar hive-shims-scheduler-1.2.0.jar oro-2.0.8.jar
antlr-2.7.7.jar geronimo-annotation_1.0_spec-1.1.1.jar hive-testutils-1.2.0.jar paranamer-2.3.jar
antlr-runtime-3.4.jar geronimo-jaspic_1.0_spec-1.0.jar httpclient-4.4.jar parquet-hadoop-bundle-1.6.0.jar
apache-curator-2.6.0.pom geronimo-jta_1.1_spec-1.1.1.jar httpcore-4.4.jar parquet-hive-bundle-1.6.0.jar
apache-log4j-extras-1.2.17.jar groovy-all-2.1.6.jar ivy-2.4.0.jar pentaho-aggdesigner-algorithm-5.1.5-jhyde.jar
asm-commons-3.1.jar guava-14.0.1.jar janino-2.7.6.jar php
asm-tree-3.1.jar guava-15.0.jar jcommander-1.32.jar plexus-utils-1.5.6.jar
avro-1.7.5.jar hamcrest-core-1.1.jar jdo-api-3.0.1.jar postgresql-42.0.0.jar
bonecp-0.8.0.RELEASE.jar hive-accumulo-handler-1.2.0.jar jetty-all-7.6.0.v20120127.jar py
calcite-avatica-1.2.0-incubating.jar hive-ant-1.2.0.jar jetty-all-server-7.6.0.v20120127.jar regexp-1.3.jar
calcite-core-1.2.0-incubating.jar hive-beeline-1.2.0.jar jline-2.12.jar servlet-api-2.5.jar
calcite-linq4j-1.2.0-incubating.jar hive-cli-1.2.0.jar joda-time-2.5.jar slf4j-log4j12-1.7.7.jar
curator-client-2.6.0.jar hive-common-1.2.0.jar jpam-1.1.jar snappy-java-1.0.5.jar
curator-framework-2.6.0.jar hive-contrib-1.2.0.jar json-20090211.jar ST4-4.0.4.jar
curator-recipes-2.6.0.jar hive-exec-1.2.0.jar jsr305-3.0.0.jar stax-api-1.0.1.jar
datanucleus-api-jdo-3.2.1.jar hive-hbase-handler-1.2.0.jar jta-1.1.jar stringtemplate-3.2.1.jar
datanucleus-api-jdo-3.2.6.jar hive-hcatalog-core-1.2.2.jar junit-4.11.jar super-csv-2.2.0.jar
datanucleus-core-3.2.10.jar hive-hwi-1.2.0.jar libfb303-0.9.2.jar tempus-fugit-1.1.jar
datanucleus-core-3.2.2.jar hive-jdbc-1.2.0.jar libthrift-0.9.2.jar velocity-1.5.jar
datanucleus-rdbms-3.2.1.jar hive-jdbc-1.2.0-standalone.jar log4j-1.2.16.jar xz-1.0.jar
datanucleus-rdbms-3.2.9.jar hive-metastore-1.2.0.jar log4j-1.2.17.jar zookeeper-3.4.6.jar
```

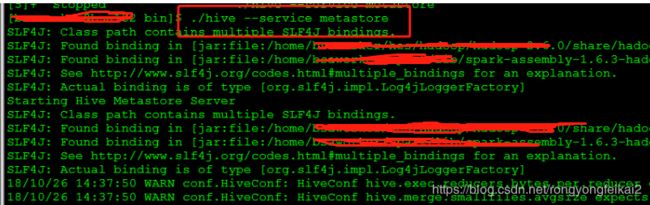

4. Download Hive 1.2.0 (or whichever version you need), copy hive-site.xml into the hive/conf directory, and start the Hive Thrift metastore.

5. The Hive table can now be read:

```scala
package com.flinklearn.main

import com.flinklearn.models.Alert
import org.apache.flink.api.scala._
// Scala HCatInputFormat from the flink-hcatalog sources copied into the project in step 1
import org.apache.flink.hcatalog.scala.HCatInputFormat
import org.apache.hadoop.conf.Configuration

/**
 * Created by dell on 2018/10/25.
 */
object Main {

  def main(args: Array[String]): Unit = {
    // Point HCatalog at the remote Thrift metastore started in step 4
    val conf = new Configuration()
    conf.set("hive.metastore.local", "false")
    conf.set("hive.metastore.uris", "thrift://ip:9083")

    val env = ExecutionEnvironment.getExecutionEnvironment
    val dataset = env.createInput(new HCatInputFormat[Alert]("db", "tb", conf))

    // print() triggers execution, so env.execute() is not called afterwards
    dataset.first(10).print()
  }
}
```

4.JDBC

```java
package com.flinklearn.main;

import org.apache.flink.api.common.typeinfo.TypeInformation;
import org.apache.flink.api.java.ExecutionEnvironment;
import org.apache.flink.api.java.io.jdbc.JDBCInputFormat;
import org.apache.flink.api.java.operators.DataSource;
import org.apache.flink.api.java.typeutils.RowTypeInfo;
import org.apache.flink.types.Row;

/**
 * Created by dell on 2018/10/29.
 */
public class Main {

    public static void main(String[] args) {
        try {
            ExecutionEnvironment env = ExecutionEnvironment.getExecutionEnvironment();

            // The RowTypeInfo lists one type per selected column, in query order (xx, yy)
            JDBCInputFormat inputFormat = JDBCInputFormat.buildJDBCInputFormat()
                    .setDrivername("org.postgresql.Driver")
                    .setDBUrl("jdbc:postgresql://ip:port/nsc")
                    .setUsername("username")
                    .setPassword("password")
                    .setQuery("select xx,yy from zz")
                    .setRowTypeInfo(new RowTypeInfo(TypeInformation.of(String.class), TypeInformation.of(String.class)))
                    .finish();

            DataSource<Row> source = env.createInput(inputFormat);

            // print() triggers execution in the DataSet API, so env.execute() is not called afterwards
            source.print();
        } catch (Exception e) {
            e.printStackTrace();
        }
    }
}
```