Linux Kernel Study: An Analysis of the Watchdog Implementation

/kernel/watchdog.c

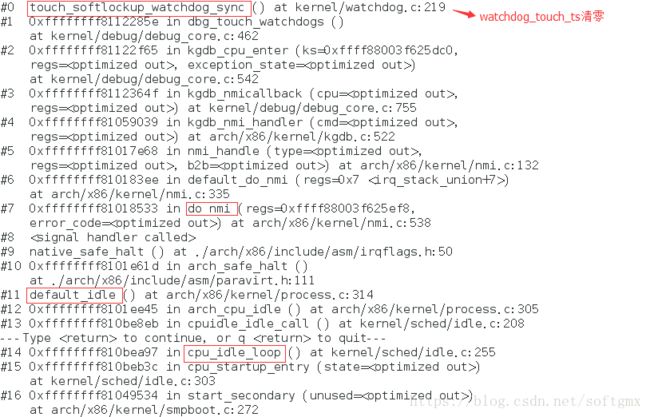

static DEFINE_PER_CPU(unsigned long, watchdog_touch_ts); // timestamp record, updated mainly by the watchdog thread

static DEFINE_PER_CPU(struct task_struct *, softlockup_watchdog);

static DEFINE_PER_CPU(struct hrtimer, watchdog_hrtimer);

static DEFINE_PER_CPU(bool, softlockup_touch_sync);

static DEFINE_PER_CPU(bool, soft_watchdog_warn);

static DEFINE_PER_CPU(unsigned long, hrtimer_interrupts); // updated in the hrtimer handler

static DEFINE_PER_CPU(unsigned long, soft_lockup_hrtimer_cnt);

static DEFINE_PER_CPU(struct task_struct *, softlockup_task_ptr_saved);

#ifdef CONFIG_HARDLOCKUP_DETECTOR

static DEFINE_PER_CPU(bool, hard_watchdog_warn);

static DEFINE_PER_CPU(bool, watchdog_nmi_touch);

static DEFINE_PER_CPU(unsigned long, hrtimer_interrupts_saved); // updated in is_hardlockup() to the current value of hrtimer_interrupts

static DEFINE_PER_CPU(struct perf_event *, watchdog_ev);

#endif

/* Commands for resetting the watchdog */

static void __touch_watchdog(void)

{

__this_cpu_write(watchdog_touch_ts, get_timestamp());

}

static void watchdog(unsigned int cpu)

{

__this_cpu_write(soft_lockup_hrtimer_cnt,

__this_cpu_read(hrtimer_interrupts));

__touch_watchdog();

}

static struct smp_hotplug_thread watchdog_threads = {

.store = &softlockup_watchdog,

.thread_should_run = watchdog_should_run,

.thread_fn = watchdog, // the thread function simply updates the current CPU's watchdog_touch_ts

.thread_comm = "watchdog/%u",

.setup = watchdog_enable,

.cleanup = watchdog_cleanup,

.park = watchdog_disable,

.unpark = watchdog_enable,

};

// Enables the NMI (via a perf event) that checks for hard lockups

// Starts a high-resolution timer whose handler, watchdog_timer_fn, checks whether a soft lockup has occurred

static void watchdog_enable(unsigned int cpu)

{

struct hrtimer *hrtimer = raw_cpu_ptr(&watchdog_hrtimer);

/* kick off the timer for the hardlockup detector */

hrtimer_init(hrtimer, CLOCK_MONOTONIC, HRTIMER_MODE_REL);

hrtimer->function = watchdog_timer_fn;

/* Enable the perf event */

watchdog_nmi_enable(cpu);

/* done here because hrtimer_start can only pin to smp_processor_id() */

hrtimer_start(hrtimer, ns_to_ktime(sample_period),

HRTIMER_MODE_REL_PINNED);

/* initialize timestamp */

watchdog_set_prio(SCHED_FIFO, MAX_RT_PRIO - 1);

__touch_watchdog();

}
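
The excerpt uses `sample_period` and `get_softlockup_thresh()` without showing their definitions. As a rough sketch, assuming the relations used by mainline kernels of this era (soft-lockup threshold = 2 × `watchdog_thresh`, and the hrtimer firing five times per threshold), the timing works out as below. The `model_*` names are hypothetical and exist only for this illustration.

```c
#include <stdint.h>

#define NSEC_PER_SEC 1000000000ULL

/* Assumption: the soft-lockup threshold (seconds) is twice watchdog_thresh. */
static unsigned int model_softlockup_thresh(unsigned int watchdog_thresh)
{
    return watchdog_thresh * 2;
}

/* Assumption: the hrtimer period is one fifth of the soft-lockup threshold. */
static uint64_t model_sample_period_ns(unsigned int watchdog_thresh)
{
    return model_softlockup_thresh(watchdog_thresh) * (NSEC_PER_SEC / 5);
}
```

Under these assumptions, a `watchdog_thresh` of 10 seconds gives a soft-lockup threshold of 20 seconds and a timer period of 4 seconds, so the watchdog thread gets several chances to run before a warning fires.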

/* watchdog kicker functions */

static enum hrtimer_restart watchdog_timer_fn(struct hrtimer *hrtimer)

{

unsigned long touch_ts = __this_cpu_read(watchdog_touch_ts);

struct pt_regs *regs = get_irq_regs();

int duration;

int softlockup_all_cpu_backtrace = sysctl_softlockup_all_cpu_backtrace;

/* kick the hardlockup detector */

watchdog_interrupt_count();

/* kick the softlockup detector */

wake_up_process(__this_cpu_read(softlockup_watchdog));

/* .. and repeat */

hrtimer_forward_now(hrtimer, ns_to_ktime(sample_period));

if (touch_ts == 0) {

if (unlikely(__this_cpu_read(softlockup_touch_sync))) {

/*

* If the time stamp was touched atomically

* make sure the scheduler tick is up to date.

*/

__this_cpu_write(softlockup_touch_sync, false);

sched_clock_tick();

}

/* Clear the guest paused flag on watchdog reset */

kvm_check_and_clear_guest_paused();

__touch_watchdog();

return HRTIMER_RESTART;

}

/* check for a softlockup

* This is done by making sure a high priority task is

* being scheduled. The task touches the watchdog to

* indicate it is getting cpu time. If it hasn't then

* this is a good indication some task is hogging the cpu

*/

duration = is_softlockup(touch_ts);

if (unlikely(duration)) {

/*

* If a virtual machine is stopped by the host it can look to

* the watchdog like a soft lockup, check to see if the host

* stopped the vm before we issue the warning

*/

if (kvm_check_and_clear_guest_paused())

return HRTIMER_RESTART;

/* only warn once */

if (__this_cpu_read(soft_watchdog_warn) == true) {

/*

* When multiple processes are causing softlockups the

* softlockup detector only warns on the first one

* because the code relies on a full quiet cycle to

* re-arm. The second process prevents the quiet cycle

* and never gets reported. Use task pointers to detect

* this.

*/

if (__this_cpu_read(softlockup_task_ptr_saved) !=

current) {

__this_cpu_write(soft_watchdog_warn, false);

__touch_watchdog();

}

return HRTIMER_RESTART;

}

if (softlockup_all_cpu_backtrace) {

/* Prevent multiple soft-lockup reports if one cpu is already

* engaged in dumping cpu back traces

*/

if (test_and_set_bit(0, &soft_lockup_nmi_warn)) {

/* Someone else will report us. Let's give up */

__this_cpu_write(soft_watchdog_warn, true);

return HRTIMER_RESTART;

}

}

pr_emerg("BUG: soft lockup - CPU#%d stuck for %us! [%s:%d]\n",

smp_processor_id(), duration,

current->comm, task_pid_nr(current));

__this_cpu_write(softlockup_task_ptr_saved, current);

print_modules();

print_irqtrace_events(current);

if (regs)

show_regs(regs);

else

dump_stack();

if (softlockup_all_cpu_backtrace) {

/* Avoid generating two back traces for current

* given that one is already made above

*/

trigger_allbutself_cpu_backtrace();

clear_bit(0, &soft_lockup_nmi_warn);

/* Barrier to sync with other cpus */

smp_mb__after_atomic();

}

add_taint(TAINT_SOFTLOCKUP, LOCKDEP_STILL_OK);

if (softlockup_panic)

panic("softlockup: hung tasks");

__this_cpu_write(soft_watchdog_warn, true);

} else

__this_cpu_write(soft_watchdog_warn, false);

return HRTIMER_RESTART;

}
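
The warn-once and re-arm behaviour of `watchdog_timer_fn` is easier to follow in a small user-space model. The sketch below is a simplification, not the kernel code: it drops the `touch_ts == 0` path, the KVM check, and the all-CPU backtrace, uses a plain integer in place of the task pointer, and compares times without wraparound handling. All `model_*` names and `struct cpu_model` are hypothetical.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct cpu_model {
    unsigned long touch_ts; /* stands in for watchdog_touch_ts (seconds) */
    bool warned;            /* stands in for soft_watchdog_warn */
    int last_task;          /* stands in for softlockup_task_ptr_saved */
};

/* What the watchdog thread does whenever it gets CPU time. */
static void model_touch(struct cpu_model *c, unsigned long now)
{
    assert(c != NULL);
    c->touch_ts = now;
}

/*
 * One timer tick. Returns the stall duration if a warning would be
 * printed on this tick, otherwise 0.
 */
static unsigned long model_timer_tick(struct cpu_model *c, unsigned long now,
                                      unsigned long thresh, int current_task)
{
    unsigned long duration = 0;

    assert(c != NULL);
    if (now > c->touch_ts + thresh) /* simplified: no wraparound handling */
        duration = now - c->touch_ts;

    if (!duration) {
        c->warned = false;          /* a quiet tick re-arms the warning */
        return 0;
    }
    if (c->warned) {
        if (c->last_task != current_task) {
            /* a different task is hogging the CPU: re-arm, restart the clock */
            c->warned = false;
            c->touch_ts = now;
        }
        return 0;
    }
    c->last_task = current_task;
    c->warned = true;
    return duration;
}
```

In this model, a task that stalls the CPU is reported once; later ticks stay silent until either the watchdog thread runs again (`model_touch`) or a different task is found on the CPU, in which case the timestamp is reset so the new task can be reported after another full threshold.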

// Enables the NMI that checks for hard lockups

static int watchdog_nmi_enable(unsigned int cpu)

{

struct perf_event_attr *wd_attr;

struct perf_event *event = per_cpu(watchdog_ev, cpu);

/*

* Some kernels need to default hard lockup detection to

* 'disabled', for example a guest on a hypervisor.

*/

if (!watchdog_hardlockup_detector_is_enabled()) {

event = ERR_PTR(-ENOENT);

goto handle_err;

}

/* is it already setup and enabled? */

if (event && event->state > PERF_EVENT_STATE_OFF)

goto out;

/* it is setup but not enabled */

if (event != NULL)

goto out_enable;

wd_attr = &wd_hw_attr;

wd_attr->sample_period = hw_nmi_get_sample_period(watchdog_thresh);

/* Try to register using hardware perf events */

event = perf_event_create_kernel_counter(wd_attr, cpu, NULL, watchdog_overflow_callback, NULL);

handle_err:

/* save cpu0 error for future comparison */

if (cpu == 0 && IS_ERR(event))

cpu0_err = PTR_ERR(event);

if (!IS_ERR(event)) {

/* only print for cpu0 or different than cpu0 */

if (cpu == 0 || cpu0_err)

pr_info("enabled on all CPUs, permanently consumes one hw-PMU counter.\n");

goto out_save;

}

/* skip displaying the same error again */

if (cpu > 0 && (PTR_ERR(event) == cpu0_err))

return PTR_ERR(event);

/* vary the KERN level based on the returned errno */

if (PTR_ERR(event) == -EOPNOTSUPP)

pr_info("disabled (cpu%i): not supported (no LAPIC?)\n", cpu);

else if (PTR_ERR(event) == -ENOENT)

pr_warn("disabled (cpu%i): hardware events not enabled\n",

cpu);

else

pr_err("disabled (cpu%i): unable to create perf event: %ld\n",

cpu, PTR_ERR(event));

return PTR_ERR(event);

/* success path */

out_save:

per_cpu(watchdog_ev, cpu) = event;

out_enable:

perf_event_enable(per_cpu(watchdog_ev, cpu));

out:

return 0;

}

/* Callback function for perf event subsystem */

static void watchdog_overflow_callback(struct perf_event *event,

struct perf_sample_data *data,

struct pt_regs *regs)

{

/* Ensure the watchdog never gets throttled */

event->hw.interrupts = 0;

if (__this_cpu_read(watchdog_nmi_touch) == true) {

__this_cpu_write(watchdog_nmi_touch, false);

return;

}

/* check for a hardlockup

* This is done by making sure our timer interrupt

* is incrementing. The timer interrupt should have

* fired multiple times before we overflow'd. If it hasn't

* then this is a good indication the cpu is stuck

*/

if (is_hardlockup()) {

int this_cpu = smp_processor_id();

/* only print hardlockups once */

if (__this_cpu_read(hard_watchdog_warn) == true)

return;

if (hardlockup_panic)

panic("Watchdog detected hard LOCKUP on cpu %d",

this_cpu);

else

WARN(1, "Watchdog detected hard LOCKUP on cpu %d",

this_cpu);

__this_cpu_write(hard_watchdog_warn, true);

return;

}

__this_cpu_write(hard_watchdog_warn, false);

return;

}

#ifdef CONFIG_HARDLOCKUP_DETECTOR

// Hard lockup check logic

static int is_hardlockup(void)

{

unsigned long hrint = __this_cpu_read(hrtimer_interrupts);

if (__this_cpu_read(hrtimer_interrupts_saved) == hrint)

return 1;

__this_cpu_write(hrtimer_interrupts_saved, hrint);

return 0;

}

#endif
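
The hard lockup check boils down to comparing two counters: one advanced by the timer interrupt, and a snapshot taken each time the NMI fires. A minimal user-space sketch follows, assuming that `watchdog_interrupt_count()` (not shown in the excerpt) simply increments `hrtimer_interrupts`. `struct hard_model` and the `model_*` functions are hypothetical names.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

struct hard_model {
    unsigned long hrtimer_interrupts;       /* bumped by the timer handler */
    unsigned long hrtimer_interrupts_saved; /* snapshot taken by the NMI check */
};

/* Assumed effect of watchdog_interrupt_count(): one more timer interrupt seen. */
static void model_timer_interrupt(struct hard_model *m)
{
    assert(m != NULL);
    m->hrtimer_interrupts++;
}

/* Mirrors is_hardlockup(): no progress since the last check means a lockup. */
static bool model_is_hardlockup(struct hard_model *m)
{
    unsigned long hrint;

    assert(m != NULL);
    hrint = m->hrtimer_interrupts;
    if (m->hrtimer_interrupts_saved == hrint)
        return true;
    m->hrtimer_interrupts_saved = hrint;
    return false;
}
```

If at least one timer interrupt lands between two NMI checks, the counters differ and the snapshot is refreshed. If interrupts are blocked for a whole NMI period, the counter stands still and the next check reports a hard lockup.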

// Soft lockup check logic

static int is_softlockup(unsigned long touch_ts)

{

unsigned long now = get_timestamp();

/* Warn about unreasonable delays: */

if (time_after(now, touch_ts + get_softlockup_thresh()))

return now - touch_ts;

return 0;

}
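
`is_softlockup()` uses `time_after()` rather than a plain `>` so that the comparison stays correct when the timestamp counter wraps around. The sketch below reproduces that idea in user space, assuming the usual signed-difference form of `time_after()`; the `model_*` names are hypothetical, and the unsigned-to-signed cast relies on two's-complement behaviour, which is implementation-defined in C but holds on common platforms.

```c
#include <limits.h>
#include <stdbool.h>

/* Wraparound-safe "a is later than b", modeled on the kernel's time_after(). */
static bool model_time_after(unsigned long a, unsigned long b)
{
    return (long)(b - a) < 0;
}

/* Returns the stall duration if the threshold was exceeded, otherwise 0. */
static unsigned long model_is_softlockup(unsigned long now,
                                         unsigned long touch_ts,
                                         unsigned long thresh)
{
    if (model_time_after(now, touch_ts + thresh))
        return now - touch_ts;
    return 0;
}
```

For example, with `touch_ts = ULONG_MAX - 30`, a threshold of 20, and `now = 5` (the counter has wrapped), a naive `now > touch_ts + thresh` comparison would miss the stall, while the signed-difference form detects it and the unsigned subtraction yields the elapsed 36 units.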