CentOS 7 k8s 1.5.2 (Kubernetes): etcd, flannel, DNS, dashboard, nginx, tomcat, SLB, haproxy, keepalived

1. Deploying etcd

Install etcd with yum:

[root@3a9e34429b75 /]# yum install etcd -y

Enable it at boot:

[root@3a9e34429b75 /]# systemctl enable etcd

Created symlink /etc/systemd/system/multi-user.target.wants/etcd.service, pointing to /usr/lib/systemd/system/etcd.service.

Check which ports are currently listening:

[root@3a9e34429b75 /]# netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

Start etcd:

[root@3a9e34429b75 /]# systemctl start etcd

[root@94805217bd96 /]# netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 127.0.0.1:2379 0.0.0.0:* LISTEN -

tcp 0 0 127.0.0.1:2380 0.0.0.0:* LISTEN -

Verify etcd:

[root@94805217bd96 /]# etcdctl set name mayun

mayun

[root@94805217bd96 /]# etcdctl get name

mayun

[root@94805217bd96 /]# etcdctl -C http://127.0.0.1:2379 cluster-health

member 8e9e05c52164694d is healthy: got healthy result from http://localhost:2379

cluster is healthy

Configure etcd in /etc/etcd/etcd.conf:

# [member]

ETCD_NAME=master

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

#ETCD_WAL_DIR=""

#ETCD_SNAPSHOT_COUNT="10000"

#ETCD_HEARTBEAT_INTERVAL="100"

#ETCD_ELECTION_TIMEOUT="1000"

#ETCD_LISTEN_PEER_URLS="http://localhost:2380"

ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"

#ETCD_MAX_SNAPSHOTS="5"

#ETCD_MAX_WALS="5"

#ETCD_CORS=""

#

#[cluster]

#ETCD_INITIAL_ADVERTISE_PEER_URLS="http://localhost:2380"

# if you use different ETCD_NAME (e.g. test), set ETCD_INITIAL_CLUSTER value for this name, i.e. "test=http://..."

#ETCD_INITIAL_CLUSTER="default=http://localhost:2380"

#ETCD_INITIAL_CLUSTER_STATE="new"

#ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_ADVERTISE_CLIENT_URLS="http://0.0.0.0:2379"

#ETCD_DISCOVERY=""

#ETCD_DISCOVERY_SRV=""

#ETCD_DISCOVERY_FALLBACK="proxy"

#ETCD_DISCOVERY_PROXY=""

#ETCD_STRICT_RECONFIG_CHECK="false"

#ETCD_AUTO_COMPACTION_RETENTION="0"

#

#[proxy]

#ETCD_PROXY="off"

#ETCD_PROXY_FAILURE_WAIT="5000"

#ETCD_PROXY_REFRESH_INTERVAL="30000"

#ETCD_PROXY_DIAL_TIMEOUT="1000"

#ETCD_PROXY_WRITE_TIMEOUT="5000"

#ETCD_PROXY_READ_TIMEOUT="0"

#

#[security]

#ETCD_CERT_FILE=""

#ETCD_KEY_FILE=""

#ETCD_CLIENT_CERT_AUTH="false"

#ETCD_TRUSTED_CA_FILE=""

#ETCD_AUTO_TLS="false"

#ETCD_PEER_CERT_FILE=""

#ETCD_PEER_KEY_FILE=""

#ETCD_PEER_CLIENT_CERT_AUTH="false"

#ETCD_PEER_TRUSTED_CA_FILE=""

#ETCD_PEER_AUTO_TLS="false"

#

#[logging]

#ETCD_DEBUG="false"

# examples for -log-package-levels etcdserver=WARNING,security=DEBUG

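#ETCD_LOG_PACKAGE_LEVELS=""

Save the file and exit when the configuration is done, then restart etcd (systemctl restart etcd) so that the new settings take effect.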
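Only a handful of lines in the file above are uncommented, and they are easy to lose among the defaults. The following is a minimal Python sketch, not part of the original setup, that lists the active settings of such an environment-style file and flags an advertised client URL that uses a wildcard address. The function names and the embedded sample are illustrative assumptions. 0.0.0.0 is fine as a listen address, but the advertise URL is what clients are told to connect to, so a routable address such as the master's IP is usually the safer choice.

```python
from urllib.parse import urlparse


def active_settings(conf_text):
    """Return the uncommented KEY=value pairs of an environment-style file."""
    settings = {}
    for raw in conf_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)
        settings[key.strip()] = value.strip().strip('"')
    return settings


def unroutable_advertise_urls(settings):
    """List advertised client URLs whose host is a wildcard address."""
    urls = settings.get("ETCD_ADVERTISE_CLIENT_URLS", "")
    return [u for u in urls.split(",")
            if u and urlparse(u).hostname in ("0.0.0.0", "::")]


# Sample: the active lines of the etcd.conf shown above, plus one comment.
sample = '''
# [member]
ETCD_NAME=master
ETCD_DATA_DIR="/var/lib/etcd/default.etcd"
#ETCD_LISTEN_PEER_URLS="http://localhost:2380"
ETCD_LISTEN_CLIENT_URLS="http://0.0.0.0:2379"
ETCD_ADVERTISE_CLIENT_URLS="http://0.0.0.0:2379"
'''

settings = active_settings(sample)
flagged = unroutable_advertise_urls(settings)
print(sorted(settings))
print("wildcard advertise URLs:", flagged)
```

On a real host the text would come from open("/etc/etcd/etcd.conf").read() instead of the embedded sample.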

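2. Generating certificates

This article creates its own certificates with the make-ca-cert script from the Kubernetes GitHub repository, as follows.

First clone the Kubernetes source from GitHub onto the machine that will be the master.

# git clone https://github.com/kubernetes/kubernetes

Edit make-ca-cert.sh and change the group on line 30 to kube (the services run under the kube group):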

# update the below line with the group that exists on Kubernetes Master.

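# Use the user group with which you are planning to run kubernetes services

cert_group=${CERT_GROUP:-kube}

Run make-ca-cert.sh:

# cd kubernetes/cluster/saltbase/salt/generate-cert/

# ./make-ca-cert.sh 10.20.0.1

Here 10.20.0.1 is the IP the master is addressed by: in this setup it is the cluster IP of the kubernetes service, the first address of the 10.20.0.0/16 service range configured in the next section. After the script finishes, the keys and certificates have been generated under /srv/kubernetes; copy them to the same directory on every minion node.

The certificates generated here are the ones referenced in the k8s master configuration.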
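Before moving on it can help to confirm that the files the master configuration refers to are actually present. The sketch below is a hedged Python helper, not something from the original article. It checks only the three file names that the apiserver and controller-manager flags in the next section reference (ca.crt, server.cert, server.key). The helper name and the temporary demo directory are assumptions for illustration.

```python
import os
import tempfile

# File names taken from the --client-ca-file, --tls-cert-file and
# --tls-private-key-file flags used in the master configuration.
REQUIRED = ("ca.crt", "server.cert", "server.key")


def missing_cert_files(directory, names=REQUIRED):
    """Return the required file names that do not exist in directory."""
    return [n for n in names
            if not os.path.isfile(os.path.join(directory, n))]


# Demo against a throwaway directory that deliberately lacks server.cert.
with tempfile.TemporaryDirectory() as demo_dir:
    for name in ("ca.crt", "server.key"):
        open(os.path.join(demo_dir, name), "w").close()
    demo_missing = missing_cert_files(demo_dir)

print("missing in demo directory:", demo_missing)
```

On the master and on each minion the call would be missing_cert_files("/srv/kubernetes"), and an empty list means all three files were copied.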

3. Deploying the k8s master

Install Kubernetes:

[root@44dd34165e5e /]# yum install kubernetes -y

Configure the master. The following components need to run on the Kubernetes master:

Kubernetes API Server

Kubernetes Controller Manager

Kubernetes Scheduler

Configure /etc/kubernetes/apiserver:

###

# kubernetes system config

#

# The following values are used to configure the kube-apiserver

#

# The address on the local server to listen to.

KUBE_API_ADDRESS="--insecure-bind-address=0.0.0.0"

# The port on the local server to listen on.

# KUBE_API_PORT="--port=8080"

# Port minions listen on

# KUBELET_PORT="--kubelet-port=10250"

# Comma separated list of nodes in the etcd cluster

KUBE_ETCD_SERVERS="--etcd-servers=http://10.3.14.8:2379"

# Address range to use for services

KUBE_SERVICE_ADDRESSES="--service-cluster-ip-range=10.20.0.0/16"

# default admission control policies

KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ServiceAccount,ResourceQuota"

#KUBE_ADMISSION_CONTROL="--admission-control=NamespaceLifecycle,NamespaceExists,LimitRanger,SecurityContextDeny,ResourceQuota"

# Add your own!

KUBE_API_ARGS="--secure-port=443 --client-ca-file=/srv/kubernetes/ca.crt --tls-cert-file=/srv/kubernetes/server.cert --tls-private-key-file=/srv/kubernetes/server.key"

#KUBE_API_ARGS=""

Configure /etc/kubernetes/config:

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://10.3.14.8:8080"

Configure /etc/kubernetes/controller-manager:

###

# The following values are used to configure the kubernetes controller-manager

# defaults from config and apiserver should be adequate

# Add your own!

KUBE_CONTROLLER_MANAGER_ARGS="--root-ca-file=/srv/kubernetes/ca.crt --service-account-private-key-file=/srv/kubernetes/server.key"

#KUBE_CONTROLLER_MANAGER_ARGS=""

Enable the services at boot and start them:

[root@44dd34165e5e /]# systemctl enable kube-apiserver

[root@44dd34165e5e /]# systemctl start kube-apiserver

[root@44dd34165e5e /]# systemctl enable kube-controller-manager

[root@44dd34165e5e /]# systemctl start kube-controller-manager

[root@44dd34165e5e /]# systemctl enable kube-scheduler

[root@44dd34165e5e /]# systemctl start kube-scheduler
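Once the services are started, a quick way to confirm that they are reachable from another machine is a plain TCP connect, which complements the netstat checks used elsewhere in this article. The following Python sketch is only an illustration. The helper name port_open is an assumption, and the self-check opens a temporary local listener so that the example runs without a cluster.

```python
import socket


def port_open(host, port, timeout=1.0):
    """Return True if a TCP connection to host:port succeeds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


# Self-check against a temporary listener on the loopback interface.
listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
listener.bind(("127.0.0.1", 0))       # port 0 lets the OS pick a free port
listener.listen(1)
demo_port = listener.getsockname()[1]
demo_open = port_open("127.0.0.1", demo_port)
listener.close()
demo_closed = port_open("127.0.0.1", demo_port)

print("while listening:", demo_open)
print("after close:", demo_closed)
```

Against this setup the calls would be port_open("10.3.14.8", 2379) for etcd and port_open("10.3.14.8", 8080) for the insecure API port, using the addresses from the configuration above.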

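4. Installing the nodes

The install command is the same as for the master:

yum install kubernetes

In fact the master and the nodes can each install only the packages they need; to save effort I installed everything and then configure and start only what each machine requires.

A node runs two main components: kubelet and kube-proxy.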

vi /etc/kubernetes/config

###

# kubernetes system config

#

# The following values are used to configure various aspects of all

# kubernetes services, including

#

# kube-apiserver.service

# kube-controller-manager.service

# kube-scheduler.service

# kubelet.service

# kube-proxy.service

# logging to stderr means we get it in the systemd journal

KUBE_LOGTOSTDERR="--logtostderr=true"

# journal message level, 0 is debug

KUBE_LOG_LEVEL="--v=0"

# Should this cluster be allowed to run privileged docker containers

KUBE_ALLOW_PRIV="--allow-privileged=false"

# How the controller-manager, scheduler, and proxy find the apiserver

KUBE_MASTER="--master=http://10.3.14.8:8080"

vi /etc/kubernetes/kubelet

###

# kubernetes kubelet (minion) config

# The address for the info server to serve on (set to 0.0.0.0 or "" for all interfaces)

KUBELET_ADDRESS="--address=0.0.0.0"

# The port for the info server to serve on

# KUBELET_PORT="--port=10250"

# You may leave this blank to use the actual hostname

KUBELET_HOSTNAME="--hostname-override=10.3.14.10"

# location of the api-server

KUBELET_API_SERVER="--api-servers=http://10.3.14.8:8080"

# pod infrastructure container

KUBELET_POD_INFRA_CONTAINER="--pod-infra-container-image=registry.access.redhat.com/rhel7/pod-infrastructure:latest"

# Add your own!

KUBELET_ARGS="--cluster_dns=10.20.10.10 --cluster_domain=cluster.local"

Enable the services at boot and start them:

systemctl enable kubelet

systemctl start kubelet

systemctl enable kube-proxy

systemctl start kube-proxy

Check that they are running:

[root@iz2ze0fq2isg8vphkpos5sz ~]# netstat -lnpt

Active Internet connections (only servers)

Proto Recv-Q Send-Q Local Address Foreign Address State PID/Program name

tcp 0 0 0.0.0.0:22 0.0.0.0:* LISTEN 2021/sshd

tcp 0 0 127.0.0.1:10248 0.0.0.0:* LISTEN 25448/kubelet

tcp 0 0 127.0.0.1:10249 0.0.0.0:* LISTEN 25813/kube-proxy

tcp6 0 0 :::10250 :::* LISTEN 25448/kubelet

tcp6 0 0 :::10255 :::* LISTEN 25448/kubelet

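tcp6 0 0 :::4194 :::* LISTEN 25448/kubelet

Note: because of server resource constraints, the master and etcd that originally ran on 10.3.14.8 were moved to 172.20.4.132. Readers will notice that some IPs later in the article do not match the earlier ones; this is the reason ^_^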

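5. Installing flannel

flannel is mainly used to partition the pod network within the cluster and to let pods communicate with each other.

Install command:

yum install flannel

Configure it with vi /etc/sysconfig/flanneld: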

# Flanneld configuration options

# etcd url location. Point this to the server where etcd runs

FLANNEL_ETCD_ENDPOINTS="http://10.3.14.8:2379"

# etcd config key. This is the configuration key that flannel queries

# For address range assignment

FLANNEL_ETCD_PREFIX="/k8s.com/network"

# Any additional options that you want to pass

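#FLANNEL_OPTIONS=""

The matching key therefore also has to be created in etcd: flanneld reads its network configuration from the config key under the FLANNEL_ETCD_PREFIX path.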

[root@iz2ze0fq2isg8vphkpos5rz /]# etcdctl set /k8s.com/network/config '{ "Network": "172.17.0.0/16" }'

{ "Network": "172.17.0.0/16" }

[root@iz2ze0fq2isg8vphkpos5rz /]# etcdctl get /k8s.com/network/config

{ "Network": "172.17.0.0/16" }

The node network configuration also needs to be uploaded.

First write config.json:

[root@iz2ze0fq2isg8vphkpos5sz k8s]# vi config.json

{
  "Network": "172.17.0.0/16",
  "SubnetLen": 24,
  "Backend": {
    "Type": "vxlan",
    "VNI": 7890
  }
}
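To see what these values imply, the arithmetic can be checked with Python's ipaddress module. This is a small sketch under the assumption that flannel hands each node one block of length SubnetLen out of Network; the function name is illustrative, and flannel itself may reserve part of the range, so the count is an upper bound rather than an exact number of usable leases.

```python
import ipaddress
import json


def flannel_subnets(config_json):
    """Split the flannel Network into per-node blocks of length SubnetLen."""
    cfg = json.loads(config_json)
    network = ipaddress.ip_network(cfg["Network"])
    subnet_len = cfg.get("SubnetLen", 24)
    if subnet_len <= network.prefixlen:
        raise ValueError("SubnetLen must be longer than the network prefix")
    return list(network.subnets(new_prefix=subnet_len))


config = '''{
  "Network": "172.17.0.0/16",
  "SubnetLen": 24,
  "Backend": {"Type": "vxlan", "VNI": 7890}
}'''

subnets = flannel_subnets(config)
print("per-node blocks available (upper bound):", len(subnets))
print("example block:", subnets[8])
```

The example block, 172.17.8.0/24, is the one that shows up on docker0 and flannel.7890 in the ifconfig output later in this section.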

Upload command:

[root@iz2ze0fq2isg8vphkpos5sz k8s]# curl -L http://172.20.4.132:2379/v2/keys/k8s.com/network/config -XPUT --data-urlencode value@config.json

{"action":"set","node":{"key":"/k8s.com/network/config","value":"{\n\"Network\": \"172.17.0.0/16\",\n\"SubnetLen\": 24,\n\"Backend\": {\n

\"Type\": \"vxlan\",\n \"VNI\": 7890\n }\n }\n","modifiedIndex":69677,"createdIndex":69677},"prevNode":{"key":"/k8s.com/network/config"

,"value":"{\"Network\":\"172.17.0.0/16\"}","modifiedIndex":68840,"createdIndex":68840}}

Enable flanneld at boot and start it:

systemctl enable flanneld

systemctl start flanneld
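For reference, the curl upload used earlier in this step is an ordinary etcd v2 keys API call: a PUT to /v2/keys/ followed by the key, with the content sent as a form field named value. The Python sketch below builds the equivalent request with the standard library. It only constructs the request and does not send it, and the helper name build_etcd_v2_put is an assumption for illustration.

```python
import urllib.parse
import urllib.request


def build_etcd_v2_put(endpoint, key, value):
    """Build (but do not send) a PUT request for the etcd v2 keys API."""
    url = endpoint.rstrip("/") + "/v2/keys/" + key.lstrip("/")
    body = urllib.parse.urlencode({"value": value}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        method="PUT",
        headers={"Content-Type": "application/x-www-form-urlencoded"},
    )


request = build_etcd_v2_put(
    "http://172.20.4.132:2379",
    "/k8s.com/network/config",
    '{"Network": "172.17.0.0/16", "SubnetLen": 24, '
    '"Backend": {"Type": "vxlan", "VNI": 7890}}',
)
print(request.get_method(), request.full_url)
# Sending it needs a reachable etcd: urllib.request.urlopen(request)
```

Keeping the build step separate from the send makes it easy to inspect the URL and body before touching the live etcd.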

Check the local IP configuration; the flannel network now appears:

[root@iz2ze0fq2isg8vphkpos5uz ~]# ifconfig

docker0: flags=4099 mtu 1450

inet 172.17.8.1 netmask 255.255.255.0 broadcast 0.0.0.0

ether 02:42:37:12:ef:68 txqueuelen 0 (Ethernet)

RX packets 2125904 bytes 331673249 (316.3 MiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 4013911 bytes 464273110 (442.7 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

eth0: flags=4163 mtu 1500

inet 10.3.14.10 netmask 255.255.255.0 broadcast 10.3.14.255

ether 00:16:3e:06:1d:eb txqueuelen 1000 (Ethernet)

RX packets 5319293 bytes 1706527445 (1.5 GiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 3124713 bytes 472762966 (450.8 MiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

flannel.7890: flags=4163 mtu 1450

inet 172.17.8.0 netmask 255.255.255.255 broadcast 0.0.0.0

ether d2:a2:e8:fd:f2:00 txqueuelen 0 (Ethernet)

RX packets 2776 bytes 282277 (275.6 KiB)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 2551 bytes 376099 (367.2 KiB)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

lo: flags=73 mtu 65536

inet 127.0.0.1 netmask 255.0.0.0

loop txqueuelen 1 (Local Loopback)

RX packets 18 bytes 976 (976.0 B)

RX errors 0 dropped 0 overruns 0 frame 0

TX packets 18 bytes 976 (976.0 B)

TX errors 0 dropped 0 overruns 0 carrier 0 collisions 0

The nodes under management can be listed from the k8s master:

[root@iz2ze0fq2isg8vphkpos5rz dashboard]# kubectl get node -s 10.3.14.8:8080

NAME STATUS AGE

10.3.14.10 Ready 14s

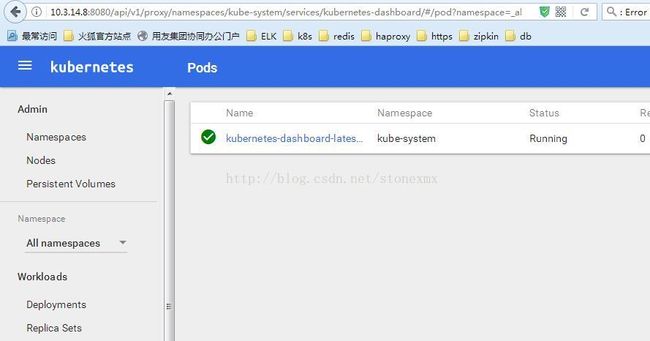

10.3.14.11 Ready 2m6. 安装dashboard

查看当前deployment、svc

[root@iz2ze0fq2isg8vphkpos5rz dashboard]# kubectl get deployment -s 10.3.14.8:8080 --all-namespaces

No resources found.

[root@iz2ze0fq2isg8vphkpos5rz dashboard]# kubectl get svc -s 10.3.14.8:8080 --all-namespaces

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

kubernetes 10.20.0.1 443/TCP 17h

The dashboard deployment (dashboard.yaml):

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  # Keep the name in sync with image version and
  # gce/coreos/kube-manifests/addons/dashboard counterparts
  name: kubernetes-dashboard-latest
  namespace: kube-system
spec:
  replicas: 1
  template:
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
        version: latest
        kubernetes.io/cluster-service: "true"
    spec:
      containers:
      - name: kubernetes-dashboard
        image: registry.cn-hangzhou.aliyuncs.com/google-containers/kubernetes-dashboard-amd64:v1.4.2
        resources:
          # keep request = limit to keep this container in guaranteed class
          limits:
            cpu: 100m
            memory: 50Mi
          requests:
            cpu: 100m
            memory: 50Mi
        ports:
        - containerPort: 9090
        args:
        - --apiserver-host=http://10.3.14.8:8080
        livenessProbe:
          httpGet:
            path: /
            port: 9090
          initialDelaySeconds: 30
          timeoutSeconds: 30

The svc (dashboardsvc.yaml):

apiVersion: v1
kind: Service
metadata:
  name: kubernetes-dashboard
  namespace: kube-system
  labels:
    k8s-app: kubernetes-dashboard
    kubernetes.io/cluster-service: "true"
spec:
  selector:
    k8s-app: kubernetes-dashboard
  ports:
  - port: 80
    targetPort: 9090

Create the deployment and pod:

[root@iz2ze0fq2isg8vphkpos5rz dashboard]# kubectl get svc --all-namespaces

NAMESPACE NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes 10.20.0.1 443/TCP 5m

kube-system kubernetes-dashboard 10.20.87.235 80/TCP 2m

[root@iz2ze0fq2isg8vphkpos5rz dashboard]# kubectl create -f dashboard.yaml

deployment "kubernetes-dashboard-latest" created

[root@iz2ze0fq2isg8vphkpos5rz dashboard]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

kube-system kubernetes-dashboard-latest-2600502794-n48m3 1/1 Running 0 15s

Create the svc:

[root@iz2ze0fq2isg8vphkpos5rz dashboard]# kubectl create -f dashboardsvc.yaml

service "kubernetes-dashboard" created

[root@iz2ze0fq2isg8vphkpos5rz dashboard]# kubectl get svc --all-namespaces

NAMESPACE NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes 10.20.0.1 443/TCP 2m

kube-system kubernetes-dashboard 10.20.87.235 80/TCP 9s

Then visit http://10.3.14.8:8080/ui
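The service only routes traffic to the dashboard pod because its selector matches the labels in the deployment's pod template. As a hedged illustration of that rule (every key/value pair in the selector must be present on the pod), here is a minimal Python sketch. The function name is an assumption, and the dictionaries are copied from the two manifests above.

```python
def selector_matches(selector, labels):
    """True if every key/value pair in selector is present in labels."""
    return all(labels.get(key) == value for key, value in selector.items())


# Labels from the pod template in the dashboard deployment.
pod_labels = {
    "k8s-app": "kubernetes-dashboard",
    "version": "latest",
    "kubernetes.io/cluster-service": "true",
}

# Selector from the dashboard service.
service_selector = {"k8s-app": "kubernetes-dashboard"}

dashboard_match = selector_matches(service_selector, pod_labels)
other_match = selector_matches({"k8s-app": "kube-dns"}, pod_labels)
print(dashboard_match, other_match)
```

If the /ui page does not load even though the pod is Running, a mismatch between these two sets of labels is one of the first things worth checking.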

7. Installing DNS

DNS ConfigMap configuration, kubedns-cm.yaml:

# Copyright 2016 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

apiVersion: v1
kind: ConfigMap
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    addonmanager.kubernetes.io/mode: EnsureExists

DNS ServiceAccount configuration, kubedns-sa.yaml:

apiVersion: v1
kind: ServiceAccount
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile

DNS pod configuration, kubedns-deployment.yaml:

# Copyright 2016 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# TODO - At some point, we need to rename all skydns-*.yaml.* files to kubedns-*.yaml.*

# Should keep target in cluster/addons/dns-horizontal-autoscaler/dns-horizontal-autoscaler.yaml

# in sync with this file.

# __MACHINE_GENERATED_WARNING__

apiVersion: extensions/v1beta1
kind: Deployment
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
spec:
  replicas: 1
  # replicas: not specified here:
  # 1. In order to make Addon Manager do not reconcile this replicas parameter.
  # 2. Default is 1.
  # 3. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    rollingUpdate:
      maxSurge: 10%
      maxUnavailable: 0
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
        scheduler.alpha.kubernetes.io/tolerations: '[{"key":"CriticalAddonsOnly", "operator":"Exists"}]'
    spec:
      containers:
      - name: kubedns
        image: myhub.fdccloud.com/library/kubedns-amd64:1.9
        volumeMounts:
        - name: tz-config
          mountPath: /etc/localtime
        resources:
          # TODO: Set memory limits when we've profiled the container for large
          # clusters, then set request = limit to keep this container in
          # guaranteed class. Currently, this container falls into the
          # "burstable" category so the kubelet doesn't backoff from restarting it.
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        livenessProbe:
          httpGet:
            path: /healthz-kubedns
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /readiness
            port: 8081
            scheme: HTTP
          # we poll on pod startup for the Kubernetes master service and
          # only setup the /readiness HTTP server once that's available.
          initialDelaySeconds: 3
          timeoutSeconds: 5
        args:
        - --domain=cluster.local.
        - --dns-port=10053
        - --config-map=kube-dns
        - --kube-master-url=http://172.20.4.132:8080
        # This should be set to v=2 only after the new image (cut from 1.5) has
        # been released, otherwise we will flood the logs.
        - --v=0
        #__PILLAR__FEDERATIONS__DOMAIN__MAP__
        env:
        - name: PROMETHEUS_PORT
          value: "10055"
        ports:
        - containerPort: 10053
          name: dns-local
          protocol: UDP
        - containerPort: 10053
          name: dns-tcp-local
          protocol: TCP
        - containerPort: 10055
          name: metrics
          protocol: TCP
      - name: dnsmasq
        image: myhub.fdccloud.com/library/kube-dnsmasq-amd64:1.4
        volumeMounts:
        - name: tz-config
          mountPath: /etc/localtime
        livenessProbe:
          httpGet:
            path: /healthz-dnsmasq
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --cache-size=1000
        - --no-resolv
        - --server=127.0.0.1#10053
        #- --log-facility=- # this line is commented out
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        # see: https://github.com/kubernetes/kubernetes/issues/29055 for details
        resources:
          requests:
            cpu: 150m
            memory: 10Mi
      - name: dnsmasq-metrics
        image: myhub.fdccloud.com/library/dnsmasq-metrics-amd64:1.0
        volumeMounts:
        - name: tz-config
          mountPath: /etc/localtime
        livenessProbe:
          httpGet:
            path: /metrics
            port: 10054
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        args:
        - --v=2
        - --logtostderr
        ports:
        - containerPort: 10054
          name: metrics
          protocol: TCP
        resources:
          requests:
            memory: 10Mi
      - name: healthz
        image: myhub.fdccloud.com/library/exechealthz-amd64:1.2
        volumeMounts:
        - name: tz-config
          mountPath: /etc/localtime
        resources:
          limits:
            memory: 50Mi
          requests:
            cpu: 10m
            # Note that this container shouldn't really need 50Mi of memory. The
            # limits are set higher than expected pending investigation on #29688.
            # The extra memory was stolen from the kubedns container to keep the
            # net memory requested by the pod constant.
            memory: 50Mi
        args:
        - --cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1 >/dev/null
        - --url=/healthz-dnsmasq
        - --cmd=nslookup kubernetes.default.svc.cluster.local 127.0.0.1:10053 >/dev/null
        - --url=/healthz-kubedns
        - --port=8080
        - --quiet
        ports:
        - containerPort: 8080
          protocol: TCP
      dnsPolicy: Default # Don't use cluster DNS.
      volumes:
      - name: tz-config
        hostPath:
          path: /usr/share/zoneinfo/Asia/Shanghai

DNS svc configuration, kubedns-svc.yaml:

# Copyright 2016 The Kubernetes Authors.

#

# Licensed under the Apache License, Version 2.0 (the "License");

# you may not use this file except in compliance with the License.

# You may obtain a copy of the License at

#

# http://www.apache.org/licenses/LICENSE-2.0

#

# Unless required by applicable law or agreed to in writing, software

# distributed under the License is distributed on an "AS IS" BASIS,

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

# See the License for the specific language governing permissions and

# limitations under the License.

# TODO - At some point, we need to rename all skydns-*.yaml.* files to kubedns-*.yaml.*

# __MACHINE_GENERATED_WARNING__

apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "KubeDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.20.10.10
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP

Create the resources:

kubectl create -f kubedns-cm.yaml

kubectl create -f kubedns-sa.yaml

kubectl create -f kubedns-deployment.yaml

kubectl create -f kubedns-svc.yaml
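Three addresses in this walkthrough have to agree for cluster DNS to work: the clusterIP pinned in kubedns-svc.yaml, the --cluster_dns value given to the kubelet, and the apiserver's --service-cluster-ip-range that must contain them. The following Python sketch checks that consistency with the values used in this article; the function name and the message strings are illustrative assumptions.

```python
import ipaddress

SERVICE_CIDR = "10.20.0.0/16"        # --service-cluster-ip-range (apiserver)
KUBELET_CLUSTER_DNS = "10.20.10.10"  # --cluster_dns (kubelet)
KUBE_DNS_CLUSTER_IP = "10.20.10.10"  # clusterIP in kubedns-svc.yaml


def dns_ip_problems(service_cidr, kubelet_dns, service_ip):
    """Return a list of human-readable inconsistencies, empty if none."""
    problems = []
    network = ipaddress.ip_network(service_cidr)
    if ipaddress.ip_address(service_ip) not in network:
        problems.append("kube-dns clusterIP is outside the service range")
    if kubelet_dns != service_ip:
        problems.append("kubelet --cluster_dns differs from the kube-dns clusterIP")
    return problems


problems = dns_ip_problems(SERVICE_CIDR, KUBELET_CLUSTER_DNS, KUBE_DNS_CLUSTER_IP)
print(problems or "DNS addresses are consistent")
```

If the service range is ever changed, rerunning the check with the new values shows at once whether the DNS address has to move too.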

Check the deployment, pod, and svc:

[root@yzb-centos72-3 dns]# kubectl get deployment --all-namespaces

NAMESPACE NAME DESIRED CURRENT UP-TO-DATE AVAILABLE AGE

kube-system kube-dns 1 1 1 1 19m

kube-system kubernetes-dashboard-latest 1 1 1 1 19h

nginx-ns nginx-depl 1 1 1 1 32m

[root@yzb-centos72-3 dns]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

default busybox 1/1 Running 1 1h

kube-system kube-dns-4025732692-4rs8d 4/4 Running 0 19m

kube-system kubernetes-dashboard-latest-3685189279-5j204 1/1 Running 2 19h

nginx-ns nginx-depl-3484537133-4g31s 1/1 Running 0 33m

[root@yzb-centos72-3 dns]# kubectl get svc --all-namespaces

NAMESPACE NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE

default kubernetes 10.20.0.1 443/TCP 19h

kube-system kube-dns 10.20.10.10 53/UDP,53/TCP 1h

kube-system kubernetes-dashboard 10.20.64.165 80/TCP 19h

nginx-ns nginx-svc 10.20.202.116 6612/TCP 59m

Test pod, busybox.yaml:

apiVersion: v1
kind: Pod
metadata:
  labels:
    name: busybox
    role: master
  name: busybox
spec:
  containers:
  - name: busybox
    image: myhub.fdccloud.com/library/busybox
    command:
    - sleep
    - "3600"

kubectl create -f busybox.yaml

Check the pods:

[root@yzb-centos72-3 dns]# kubectl get pod --all-namespaces

NAMESPACE NAME READY STATUS RESTARTS AGE

default busybox 1/1 Running 1 1h

kube-system kube-dns-4025732692-4rs8d 4/4 Running 0 23m

kube-system kubernetes-dashboard-latest-3685189279-5j204 1/1 Running 2 19h

nginx-ns nginx-depl-3484537133-4g31s 1/1 Running 0 36m

Test:

[root@yzb-centos72-3 dns]# kubectl exec -it busybox -- nslookup kubernetes

Server: 10.20.10.10

Address 1: 10.20.10.10 kube-dns.kube-system.svc.cluster.local

Name: kubernetes

Address 1: 10.20.0.1 kubernetes.default.svc.cluster.local

Related YAML downloads:

http://download.csdn.net/detail/stonexmx/9880990
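The nslookup test resolves the short name kubernetes to kubernetes.default.svc.cluster.local because the kubelet gives each pod a DNS search list derived from its namespace and the --cluster_domain value. The Python sketch below illustrates that naming scheme; the function names are assumptions, and a real pod's resolv.conf may also carry the host's own search domains.

```python
def service_fqdn(service, namespace="default", cluster_domain="cluster.local"):
    """Fully qualified DNS name of a service."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


def search_domains(pod_namespace, cluster_domain="cluster.local"):
    """Cluster search domains for a pod in pod_namespace, in lookup order."""
    return [
        f"{pod_namespace}.svc.{cluster_domain}",
        f"svc.{cluster_domain}",
        cluster_domain,
    ]


def lookup_candidates(name, pod_namespace, cluster_domain="cluster.local"):
    """Names tried, in order, when a pod looks up a short name."""
    return [f"{name}.{domain}"
            for domain in search_domains(pod_namespace, cluster_domain)]


candidates = lookup_candidates("kubernetes", "default")
dns_name = service_fqdn("kube-dns", "kube-system")
print(candidates[0])
print(dns_name)
```

The two printed names are the ones that appear in the nslookup output above. A service in another namespace, such as nginx-svc in nginx-ns, has to be addressed from the busybox pod as nginx-svc.nginx-ns or by its full name.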