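Building a Sharded High-Availability MongoDB Cluster

High availability (HA) means improving the availability of systems and applications by keeping downtime as short as possible, whether it is caused by routine maintenance (planned) or by sudden system crashes (unplanned).

I. High-Availability Cluster Solutions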

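High availability takes different forms at different layers of a computer system:

(1) Network high availability

As networked storage has developed rapidly, network redundancy techniques have kept improving, and network high availability has become key to the availability of an IT system as a whole. Network high availability is not the same as network high reliability: it is achieved by deploying redundant network devices, such as redundant switches and redundant routers.

(2) Server high availability

Server high availability is mainly achieved with server clustering software or dedicated high-availability software.

(3) Storage high availability

Storage high availability uses software or hardware techniques to keep storage highly available. Its main technical capabilities are storage failover, data replication, and data snapshots. When one storage device fails, a standby device can take over quickly so that storage stays online.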

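II. High-Availability Cluster Configurations for MongoDB

A high-availability cluster is usually abbreviated as HA cluster.

A cluster is a group of computers that together provide a set of network resources to users as a single whole.

Each individual computer system is a node of the cluster.

Building a high-availability cluster requires sensible decisions about the roles of the machines, data recovery, consistency, and so on. The main approaches are:

(1) Master-slave mode (asymmetric)

The primary host does the work while the standby host monitors it and stays ready. When the primary host goes down, the standby takes over all of its work. Once the primary host is healthy again, service is switched back to it automatically or manually, depending on how the user has configured it. Data consistency is handled by a shared storage system.

(2) Dual-active mode (mutual backup)

Two hosts each run their own services and monitor each other. When either host goes down, the other immediately takes over all of its work so that service continues without interruption. The critical data of the application services is kept on a shared storage system.

(3) Cluster mode (multi-server mutual backup)

Several hosts work together, each running one or more services, and one or more standby hosts are defined for each service. When a host fails, the services running on it can be taken over by other hosts.

MongoDB cluster configurations follow these same approaches in practice. The main options are the master-slave structure, replica sets, and sharding.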
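The cluster mode in (3) can be pictured with a small simulation. This is only a sketch: the service and host names are hypothetical, and real HA software also performs health checks and fencing, which are left out here.

```javascript
// Hypothetical service-to-host assignments: the first host in each list is
// the preferred one, the rest are standbys in priority order.
const services = {
  web: ["hostA", "hostB"],
  db: ["hostB", "hostC"],
  cache: ["hostC", "hostA"],
};

// For every service, pick the first candidate host that is still alive
// (or null if all of its candidates are down).
function placeServices(services, downHosts) {
  const placement = {};
  for (const [name, candidates] of Object.entries(services)) {
    const alive = candidates.find((h) => !downHosts.has(h));
    placement[name] = alive === undefined ? null : alive;
  }
  return placement;
}

const normal = placeServices(services, new Set());
const afterFailure = placeServices(services, new Set(["hostB"]));
console.log(normal);
console.log(afterFailure);
```

When hostB is marked as down, the db service moves to its standby hostC while the other services stay where they were.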

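III. Master-Slave Structure

A master-slave architecture is generally used for backup or for read/write separation. Common designs are one master with one slave and one master with several slaves.

It consists of two roles:

(1) Master

Readable and writable. When data is modified, the master synchronizes its oplog to all connected slaves.

(2) Slave

Read-only. It automatically synchronizes data from the master.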
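The two roles can be illustrated with a toy model of oplog-based synchronization. This is a sketch under simplifying assumptions: the `Master` and `Slave` classes and the entry format are invented for illustration and are not MongoDB's real oplog format or API.

```javascript
// Toy model of oplog-based replication; not MongoDB's real oplog format.
class Master {
  constructor() {
    this.data = new Map();
    this.oplog = []; // ordered list of write operations
  }
  write(key, value) {
    this.data.set(key, value);
    this.oplog.push({ op: "set", key, value });
  }
}

class Slave {
  constructor() {
    this.data = new Map();
    this.applied = 0; // number of oplog entries already applied
  }
  // Apply every oplog entry this slave has not seen yet, in order.
  syncFrom(master) {
    for (const entry of master.oplog.slice(this.applied)) {
      this.data.set(entry.key, entry.value);
      this.applied += 1;
    }
  }
}

const master = new Master();
const slave = new Slave();
master.write("a", 1);
master.write("b", 2);
slave.syncFrom(master);
master.write("a", 3);
slave.syncFrom(master);
console.log(slave.data.get("a"), slave.data.get("b"), slave.applied);
```

Because the slave remembers how many entries it has applied, each sync only replays the writes it is missing.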

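For MongoDB in particular, the master-slave architecture is not recommended, because when the master goes down there is no automatic recovery. Replica sets, introduced below, are recommended instead. Master-slave is only needed if the number of replica nodes exceeds 50, and a normal deployment would never use that many nodes.

One more point: MongoDB's master-slave mode does not support chained replication, so a slave can only connect directly to the master. Redis master-slave replication does support chaining: a slave can connect to another slave and become a slave of a slave.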

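IV. Replica Sets

A MongoDB replica set serves two main purposes. The first is data redundancy for failure recovery: when a hardware fault or some other problem brings a node down, a replica can be used to recover.

The second is read/write separation: read requests are directed to the replicas, which reduces the read load on the primary.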
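On the client side, read/write separation is typically requested through the connection string. The sketch below builds such a URI; `replicaSet` and `readPreference` are standard MongoDB connection string options, while the host names and the set name `rs0` are hypothetical placeholders.

```javascript
// Build a MongoDB connection URI for a replica set.
// `replicaSet` and `readPreference` are standard connection string options;
// the hosts and set name used below are made up for this example.
function replicaSetUri(hosts, setName, readPreference) {
  const params = new URLSearchParams({ replicaSet: setName, readPreference });
  return `mongodb://${hosts.join(",")}/?${params.toString()}`;
}

const uri = replicaSetUri(
  ["db1.example.net:27017", "db2.example.net:27017", "db3.example.net:27017"],
  "rs0",
  "secondaryPreferred"
);
console.log(uri);
```

With `secondaryPreferred`, a driver sends reads to a secondary when one is available and falls back to the primary otherwise. Writes always go to the primary.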

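1. A replica set built from a primary and secondaries

A replica set is a group of mongod instances that hold the same data. It includes three kinds of roles:

(1) Primary

Receives all write requests and then replicates the changes to all secondaries. A replica set can have only one primary. When the primary goes down, the remaining secondary and arbiter nodes elect a new primary. By default, read requests are also sent to the primary; to have them served by secondaries, the client has to change its connection settings.

(2) Secondary

Holds the same data set as the primary. When the primary goes down, secondaries take part in the election and can be elected primary.

(3) Arbiter

Holds no data and cannot become primary; it only votes in elections. Using an arbiter reduces the hardware needed for data storage, since a running arbiter needs very few hardware resources. One important point, though: in production it should not be deployed on the same machine as the data-bearing nodes.

Note that a replica set with automatic failover should have an odd number of voting nodes, so that a clear majority exists when votes are cast to elect a primary.

(4) Election process

If a secondary goes down, the set is unaffected. If the primary goes down, a new primary is elected.

2. A replica set built with an arbiter

A replica set can also be made up of an even number of data-bearing nodes plus one arbiter.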
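The reason for preferring an odd number of voters comes down to simple arithmetic, sketched below. The function names are made up for this example.

```javascript
// Votes needed to elect a primary among `votingMembers` voters.
function majority(votingMembers) {
  return Math.floor(votingMembers / 2) + 1;
}

// How many voters can be lost while an election can still succeed.
function faultTolerance(votingMembers) {
  return votingMembers - majority(votingMembers);
}

for (const n of [2, 3, 4, 5]) {
  console.log(`${n} voters: majority ${majority(n)}, tolerates ${faultTolerance(n)} failure(s)`);
}
```

Two voters tolerate no failures, while two data-bearing nodes plus one arbiter (three voters) tolerate one. Going from three voters to four adds nothing, which is why an arbiter is added to an even number of data-bearing nodes.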

>>Sharding分片技术

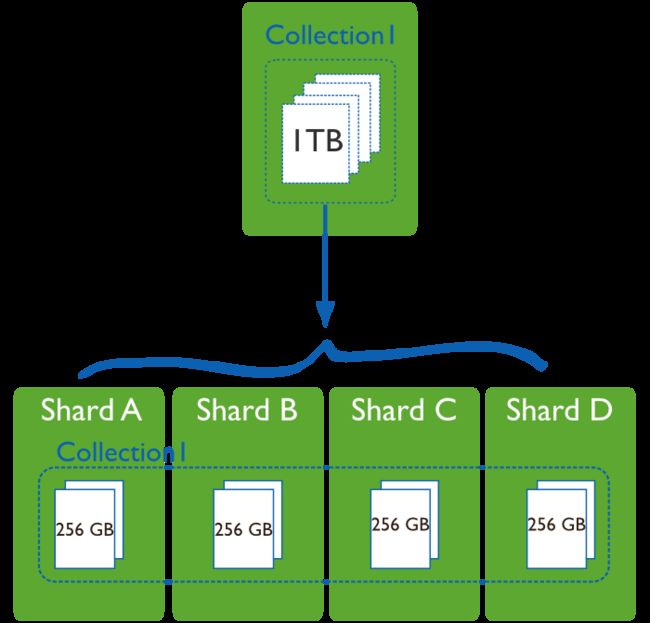

当数据量比较大的时候,我们需要把数据分片运行在不同的机器中,以降低CPU、内存和IO的压力,Sharding就是数据库分片技术。

MongoDB分片技术类似MySQL的水平切分和垂直切分,数据库主要由两种方式做Sharding:垂直扩展和横向切分。

垂直扩展的方式就是进行集群扩展,添加更多的CPU,内存,磁盘空间等。

横向切分则是通过数据分片的方式,通过集群统一提供服务:

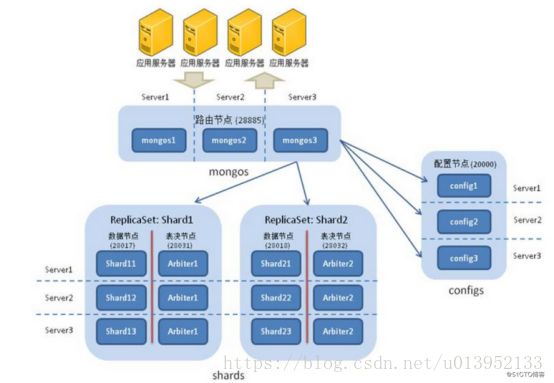

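(1) MongoDB sharding architecture

(2) Roles in the MongoDB sharding architecture

A. Shards

Shards store the data and provide its high availability and consistency. A shard can be a single mongod instance or a replica set.

In production a shard is usually a replica set, which prevents that shard from being a single point of failure. Among the shards there is a primary shard, which holds the collections that have not been sharded.

B. Query routers

A router is a mongos instance. Clients connect directly to mongos, and mongos routes read and write requests to the appropriate shard.

A sharded cluster can have a single mongos, or several mongos instances to spread the load of client requests.

C. Config servers

Config servers store the cluster's metadata, including the routing rules for each shard.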
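How the three roles cooperate can be sketched as a lookup: the router consults chunk metadata (as held by the config servers) to decide which shard owns a key. The chunk boundaries below are invented for illustration, and `routeToShard` is a hypothetical helper, not part of mongos.

```javascript
// Hypothetical metadata as a config server might hold it: each chunk covers
// the key range [min, max) and lives on one shard.
const chunks = [
  { min: -Infinity, max: 1000, shard: "shard1" },
  { min: 1000, max: 5000, shard: "shard2" },
  { min: 5000, max: Infinity, shard: "shard3" },
];

// What a router does conceptually: find the chunk whose range contains the
// shard key value and send the request to that chunk's shard.
function routeToShard(chunks, key) {
  const chunk = chunks.find((c) => key >= c.min && key < c.max);
  if (!chunk) throw new Error(`no chunk covers key ${key}`);
  return chunk.shard;
}

console.log(routeToShard(chunks, 42));
console.log(routeToShard(chunks, 1000));
console.log(routeToShard(chunks, 999999));
```

Because the ranges are half-open, the boundary value 1000 belongs to the second chunk and is routed to shard2.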

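V. Building the Sharded High-Availability MongoDB Cluster

Architecture diagram:

Cluster layout:

Three mongos instances, three config servers, and three shards. Each server runs members of different shards in different roles (so that data is spread evenly across the shards later on, the three shards take different roles on each server), and each shard uses a replica set for high availability. The hosts and ports are as follows:

| Host | IP address | mongos | config server | shard |
|---|---|---|---|---|
| Mongo1 | 192.168.189.129 | port 20000 | port 21000 | primary: 22001; secondary: 22002; arbiter: 22003 |
| Mongo2 | 192.168.189.130 | port 20000 | port 21000 | arbiter: 22001; primary: 22002; secondary: 22003 |
| Mongo3 | 192.168.189.131 | port 20000 | port 21000 | secondary: 22001; arbiter: 22002; primary: 22003 |

The roles in the table are the planned layout. The replica set configurations used in step (4) place the arbiters on Mongo3 (shard1), Mongo2 (shard2), and Mongo1 (shard3), and the primary of each shard is decided by election.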

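Cluster deployment:

(1) Download the software

Download: the MongoDB download page

Copy the installation package to the virtual machines with the Xshell tool.

Extract the package: tar -zxvf xxxxxxxxxx.tar

Move it to the installation directory: mv mongodb-linux-x86_64-xxx /usr/local/mongodb

Add the environment variable: echo "export PATH=\$PATH:/usr/local/mongodb/bin" > /etc/profile.d/mongodb.sh (Be careful here: after extracting and moving, the files sit in a directory of their own, so make sure the path matches the actual location of bin.)

Apply the environment variable: source /etc/profile.d/mongodb.sh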

(2) Create directories

On each of mongodb-1, mongodb-2, and mongodb-3, create the directories and log files:

mkdir -p /data/mongodb/mongos/{log,conf}

mkdir -p /data/mongodb/mongoconf/{data,log,conf}

mkdir -p /data/mongodb/shard1/{data,log,conf}

mkdir -p /data/mongodb/shard2/{data,log,conf}

mkdir -p /data/mongodb/shard3/{data,log,conf}

touch /data/mongodb/mongos/log/mongos.log

touch /data/mongodb/mongoconf/log/mongoconf.log

touch /data/mongodb/shard1/log/shard1.log

touch /data/mongodb/shard2/log/shard2.log

touch /data/mongodb/shard3/log/shard3.log

(3) Configure the config server replica set

On all three servers, create the config server replica set configuration file mongoconf.conf and start the service.

dbpath=/data/mongodb/mongoconf/data

logpath=/data/mongodb/mongoconf/log/mongoconf.log

logappend=true

bind_ip=xx.xx.xx.xx

port=21000

journal=true

fork=true

syncdelay=60

oplogSize=1000

configsvr=true

replSet=replconf #name of the config server replica set

Start the config server:

mongod -f /data/mongodb/mongoconf/conf/mongoconf.conf

Log in to one of the servers and initialize the config server replica set:

mongo 192.168.189.129:21000

use admin

config = {_id:"replconf",members:[

{_id:0,host:"192.168.189.129:21000"},

{_id:1,host:"192.168.189.130:21000"},

{_id:2,host:"192.168.189.131:21000"},]

}

rs.initiate(config);

Check the replica set status:

replconf:PRIMARY> rs.status()

{

"set" : "replconf",

"date" : ISODate("2018-05-25T07:45:50.186Z"),

"myState" : 1,

"term" : NumberLong(1),

"configsvr" : true,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1527234345, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1527234345, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1527234345, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1527234345, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.189.129:21000",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 384,

"optime" : {

"ts" : Timestamp(1527234345, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-05-25T07:45:45Z"),

"electionTime" : Timestamp(1527234143, 1),

"electionDate" : ISODate("2018-05-25T07:42:23Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 1,

"name" : "192.168.189.130:21000",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 217,

"optime" : {

"ts" : Timestamp(1527234345, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1527234345, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-05-25T07:45:45Z"),

"optimeDurableDate" : ISODate("2018-05-25T07:45:45Z"),

"lastHeartbeat" : ISODate("2018-05-25T07:45:49.824Z"),

"lastHeartbeatRecv" : ISODate("2018-05-25T07:45:48.815Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.189.129:21000",

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.189.131:21000",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 217,

"optime" : {

"ts" : Timestamp(1527234345, 1),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1527234345, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-05-25T07:45:45Z"),

"optimeDurableDate" : ISODate("2018-05-25T07:45:45Z"),

"lastHeartbeat" : ISODate("2018-05-25T07:45:49.823Z"),

"lastHeartbeatRecv" : ISODate("2018-05-25T07:45:48.814Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.189.129:21000",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1527234345, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(1527234132, 1),

"electionId" : ObjectId("7fffffff0000000000000001")

},

"$clusterTime" : {

"clusterTime" : Timestamp(1527234345, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

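}

The config server replica set is now configured: mongodb1 is the primary, and mongodb2 and mongodb3 are secondaries. If mongodb1 fails (you can simulate this by stopping its mongod with db.shutdownServer() run against the admin database), one of the secondaries automatically takes over as primary as soon as the primary is gone. The status after such a failover, with mongodb1 back up as a secondary: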

replconf:PRIMARY> rs.status()

{

"set" : "replconf",

"date" : ISODate("2018-05-25T07:50:18.122Z"),

"myState" : 1,

"term" : NumberLong(2),

"configsvr" : true,

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1527234608, 1),

"t" : NumberLong(2)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1527234608, 1),

"t" : NumberLong(2)

},

"appliedOpTime" : {

"ts" : Timestamp(1527234608, 1),

"t" : NumberLong(2)

},

"durableOpTime" : {

"ts" : Timestamp(1527234608, 1),

"t" : NumberLong(2)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.189.129:21000",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 34,

"optime" : {

"ts" : Timestamp(1527234608, 1),

"t" : NumberLong(2)

},

"optimeDurable" : {

"ts" : Timestamp(1527234608, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2018-05-25T07:50:08Z"),

"optimeDurableDate" : ISODate("2018-05-25T07:50:08Z"),

"lastHeartbeat" : ISODate("2018-05-25T07:50:17.635Z"),

"lastHeartbeatRecv" : ISODate("2018-05-25T07:50:18.072Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.189.131:21000",

"configVersion" : 1

},

{

"_id" : 1,

"name" : "192.168.189.130:21000",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 581,

"optime" : {

"ts" : Timestamp(1527234608, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2018-05-25T07:50:08Z"),

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1527234525, 1),

"electionDate" : ISODate("2018-05-25T07:48:45Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 2,

"name" : "192.168.189.131:21000",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 483,

"optime" : {

"ts" : Timestamp(1527234608, 1),

"t" : NumberLong(2)

},

"optimeDurable" : {

"ts" : Timestamp(1527234608, 1),

"t" : NumberLong(2)

},

"optimeDate" : ISODate("2018-05-25T07:50:08Z"),

"optimeDurableDate" : ISODate("2018-05-25T07:50:08Z"),

"lastHeartbeat" : ISODate("2018-05-25T07:50:17.627Z"),

"lastHeartbeatRecv" : ISODate("2018-05-25T07:50:18.013Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.189.130:21000",

"configVersion" : 1

}

],

"ok" : 1,

"operationTime" : Timestamp(1527234608, 1),

"$gleStats" : {

"lastOpTime" : Timestamp(0, 0),

"electionId" : ObjectId("7fffffff0000000000000002")

},

"$clusterTime" : {

"clusterTime" : Timestamp(1527234608, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

}

}

Problems encountered and how to solve them:

If an error occurs at this step, stop the firewall and then set bind_ip in the configuration file.

(4) Configure the shard replica sets

Configure the shards on all three servers. In the conf directory of shard1, create the file shard.conf:

dbpath=/data/mongodb/shard1/data

logpath=/data/mongodb/shard1/log/shard1.log

bind_ip=xx.xx.xx.xx

port=22001

logappend=true

#nohttpinterface=true

fork=true

oplogSize=4096

journal=true

#engine=wiredTiger

#cacheSizeGB=38G

smallfiles=true

shardsvr=true

replSet=shard1

Start the shard service:

mongod -f /data/mongodb/shard1/conf/shard.conf

Once the service has started and port 22001 for shard1 is listening, log in to the mongodb2 server and initialize the shard1 replica set.

> use admin;

switched to db admin

> config={_id:"shard1",members:[

... {_id:0,host:"192.168.189.129:22001"},

... {_id:1,host:"192.168.189.130:22001",},

... {_id:2,host:"192.168.189.131:22001",arbiterOnly:true},]

... }

{

"_id" : "shard1",

"members" : [

{

"_id" : 0,

"host" : "192.168.189.129:22001"

},

{

"_id" : 1,

"host" : "192.168.189.130:22001"

},

{

"_id" : 2,

"host" : "192.168.189.131:22001",

"arbiterOnly" : true

}

]

}

> rs.initiate(config);

{ "ok" : 1 }

Check the replica set status:

shard1:SECONDARY> rs.status();

{

"set" : "shard1",

"date" : ISODate("2018-05-25T08:10:42.103Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1527235841, 1),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1527235841, 1),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1527235841, 1),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1527235841, 1),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.189.129:22001",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 22,

"optime" : {

"ts" : Timestamp(1527235831, 2),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1527235831, 2),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-05-25T08:10:31Z"),

"optimeDurableDate" : ISODate("2018-05-25T08:10:31Z"),

"lastHeartbeat" : ISODate("2018-05-25T08:10:40.411Z"),

"lastHeartbeatRecv" : ISODate("2018-05-25T08:10:40.945Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.189.130:22001",

"configVersion" : 1

},

{

"_id" : 1,

"name" : "192.168.189.130:22001",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 256,

"optime" : {

"ts" : Timestamp(1527235841, 1),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-05-25T08:10:41Z"),

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1527235830, 1),

"electionDate" : ISODate("2018-05-25T08:10:30Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 2,

"name" : "192.168.189.131:22001",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 22,

"lastHeartbeat" : ISODate("2018-05-25T08:10:40.413Z"),

"lastHeartbeatRecv" : ISODate("2018-05-25T08:10:41.847Z"),

"pingMs" : NumberLong(0),

"configVersion" : 1

}

],

"ok" : 1

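}

The shard1 replica set is now configured: mongo2 is the primary, mongo3 is the arbiter, and mongo1 is a secondary.

Configure shard2 and shard3 the same way: initialize the shard2 replica set on mongo1, and initialize the shard3 replica set on mongo3.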
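Since the three shard configurations differ only in set name, port, and which host is the arbiter, the documents passed to rs.initiate() can be generated rather than typed by hand. The helper below is a hypothetical convenience, not part of the mongo shell. It runs as plain JavaScript and produces the same structure as the shard2 and shard3 configurations used in this walkthrough.

```javascript
// Build a replica set configuration document like the ones passed to
// rs.initiate() in this walkthrough. `arbiterHost` marks the arbiter member.
// buildShardConfig is a hypothetical helper written for this example.
function buildShardConfig(setName, port, hosts, arbiterHost) {
  return {
    _id: setName,
    members: hosts.map((host, i) => {
      const member = { _id: i, host: `${host}:${port}` };
      if (host === arbiterHost) member.arbiterOnly = true;
      return member;
    }),
  };
}

const hosts = ["192.168.189.129", "192.168.189.130", "192.168.189.131"];
const shard2 = buildShardConfig("shard2", 22002, hosts, "192.168.189.130");
const shard3 = buildShardConfig("shard3", 22003, hosts, "192.168.189.129");
console.log(JSON.stringify(shard2, null, 2));
console.log(JSON.stringify(shard3, null, 2));
```

Generating the documents from one host list makes it harder to mistype a port or to mark the wrong member as arbiter.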

shard2 configuration file:

dbpath=/data/mongodb/shard2/data

logpath=/data/mongodb/shard2/log/shard2.log

bind_ip=xx.xx.xx.xx

port=22002

logappend=true

#nohttpinterface = true

fork=true

oplogSize=4096

journal=true

#engine=wiredTiger

#cacheSizeGB=38G

smallfiles=true

shardsvr=true

replSet=shard2

Start the service:

mongod -f /data/mongodb/shard2/conf/shard.conf

shard3 configuration file:

dbpath=/data/mongodb/shard3/data

logpath=/data/mongodb/shard3/log/shard3.log

bind_ip=192.168.189.129

port=22003

logappend=true

#nohttpinterface = true

fork=true

oplogSize=4096

journal=true

#engine=wiredTiger

#cacheSizeGB=38G

smallfiles=true

shardsvr=true

replSet=shard3

Start the service:

mongod -f /data/mongodb/shard3/conf/shard.conf

Initialize the shard2 replica set on mongo1:

> use admin;

switched to db admin

> config={_id:"shard2",members:[

... {_id:0,host:"192.168.189.129:22002"},

... {_id:1,host:"192.168.189.130:22002",arbiterOnly:true},

... {_id:2,host:"192.168.189.131:22002"},]

... }

{

"_id" : "shard2",

"members" : [

{

"_id" : 0,

"host" : "192.168.189.129:22002"

},

{

"_id" : 1,

"host" : "192.168.189.130:22002",

"arbiterOnly" : true

},

{

"_id" : 2,

"host" : "192.168.189.131:22002"

}

]

}

> rs.initiate(config);

{ "ok" : 1 }

Check the replica set status:

shard2:SECONDARY> rs.status();

{

"set" : "shard2",

"date" : ISODate("2018-05-25T08:32:01.225Z"),

"myState" : 1,

"term" : NumberLong(1),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(1527237115, 2),

"t" : NumberLong(1)

},

"readConcernMajorityOpTime" : {

"ts" : Timestamp(1527237115, 2),

"t" : NumberLong(1)

},

"appliedOpTime" : {

"ts" : Timestamp(1527237115, 2),

"t" : NumberLong(1)

},

"durableOpTime" : {

"ts" : Timestamp(1527237115, 2),

"t" : NumberLong(1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.189.129:22002",

"health" : 1,

"state" : 1,

"stateStr" : "PRIMARY",

"uptime" : 718,

"optime" : {

"ts" : Timestamp(1527237115, 2),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-05-25T08:31:55Z"),

"infoMessage" : "could not find member to sync from",

"electionTime" : Timestamp(1527237114, 1),

"electionDate" : ISODate("2018-05-25T08:31:54Z"),

"configVersion" : 1,

"self" : true

},

{

"_id" : 1,

"name" : "192.168.189.130:22002",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 17,

"lastHeartbeat" : ISODate("2018-05-25T08:32:00.203Z"),

"lastHeartbeatRecv" : ISODate("2018-05-25T08:31:59.675Z"),

"pingMs" : NumberLong(0),

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.189.131:22002",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 17,

"optime" : {

"ts" : Timestamp(1527237115, 2),

"t" : NumberLong(1)

},

"optimeDurable" : {

"ts" : Timestamp(1527237115, 2),

"t" : NumberLong(1)

},

"optimeDate" : ISODate("2018-05-25T08:31:55Z"),

"optimeDurableDate" : ISODate("2018-05-25T08:31:55Z"),

"lastHeartbeat" : ISODate("2018-05-25T08:32:00.203Z"),

"lastHeartbeatRecv" : ISODate("2018-05-25T08:32:00.845Z"),

"pingMs" : NumberLong(0),

"syncingTo" : "192.168.189.129:22002",

"configVersion" : 1

}

],

"ok" : 1

}

Initialize the shard3 replica set on mongo3:

> use admin;

switched to db admin

>

> config={_id:"shard3",members:[

... {_id:0,host:"192.168.189.129:22003",arbiterOnly:true},

... {_id:1,host:"192.168.189.130:22003"},

... {_id:2,host:"192.168.189.131:22003"},]

... }

{

"_id" : "shard3",

"members" : [

{

"_id" : 0,

"host" : "192.168.189.129:22003",

"arbiterOnly" : true

},

{

"_id" : 1,

"host" : "192.168.189.130:22003"

},

{

"_id" : 2,

"host" : "192.168.189.131:22003"

}

]

}

> rs.initiate(config);

{ "ok" : 1 }

Check the replica set status:

shard3:SECONDARY> rs.status();

{

"set" : "shard3",

"date" : ISODate("2018-05-25T08:34:43.641Z"),

"myState" : 2,

"term" : NumberLong(0),

"heartbeatIntervalMillis" : NumberLong(2000),

"optimes" : {

"lastCommittedOpTime" : {

"ts" : Timestamp(0, 0),

"t" : NumberLong(-1)

},

"appliedOpTime" : {

"ts" : Timestamp(1527237276, 1),

"t" : NumberLong(-1)

},

"durableOpTime" : {

"ts" : Timestamp(1527237276, 1),

"t" : NumberLong(-1)

}

},

"members" : [

{

"_id" : 0,

"name" : "192.168.189.129:22003",

"health" : 1,

"state" : 7,

"stateStr" : "ARBITER",

"uptime" : 6,

"lastHeartbeat" : ISODate("2018-05-25T08:34:43.324Z"),

"lastHeartbeatRecv" : ISODate("2018-05-25T08:34:42.773Z"),

"pingMs" : NumberLong(0),

"configVersion" : 1

},

{

"_id" : 1,

"name" : "192.168.189.130:22003",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 6,

"optime" : {

"ts" : Timestamp(1527237276, 1),

"t" : NumberLong(-1)

},

"optimeDurable" : {

"ts" : Timestamp(1527237276, 1),

"t" : NumberLong(-1)

},

"optimeDate" : ISODate("2018-05-25T08:34:36Z"),

"optimeDurableDate" : ISODate("2018-05-25T08:34:36Z"),

"lastHeartbeat" : ISODate("2018-05-25T08:34:43.329Z"),

"lastHeartbeatRecv" : ISODate("2018-05-25T08:34:43.434Z"),

"pingMs" : NumberLong(0),

"configVersion" : 1

},

{

"_id" : 2,

"name" : "192.168.189.131:22003",

"health" : 1,

"state" : 2,

"stateStr" : "SECONDARY",

"uptime" : 445,

"optime" : {

"ts" : Timestamp(1527237276, 1),

"t" : NumberLong(-1)

},

"optimeDate" : ISODate("2018-05-25T08:34:36Z"),

"infoMessage" : "could not find member to sync from",

"configVersion" : 1,

"self" : true

}

],

"ok" : 1

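}

All the shard replica sets are now configured.

(5) Configure the mongos routers

The config servers and shard servers on all three machines are now running. Next, configure mongos on the three servers. A mongos loads its configuration into memory and so has no data directory of its own; configdb points it at the config server replica set.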

logpath=/data/mongodb/mongos/log/mongos.log

logappend=true

bind_ip=192.168.189.129

port=20000

maxConns=1000

configdb=replconf/192.168.189.129:21000,192.168.189.130:21000,192.168.189.131:21000

fork=true

Start the mongos service:

mongos -f /data/mongodb/mongos/conf/mongos.conf

Log in to any one of the mongos instances and add the shards:

mongos> use admin;

switched to db admin

mongos> db.runCommand({addshard:"shard1/192.168.189.129:22001,192.168.189.130:22001,192.168.189.131:22001"})

{

"shardAdded" : "shard1",

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1527238521, 6),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1527238521, 6)

}

mongos> db.runCommand({addshard:"shard2/192.168.189.129:22002,192.168.189.130:22002,192.168.189.131:22002"})

{

"shardAdded" : "shard2",

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1527238579, 5),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1527238579, 5)

}

mongos> db.runCommand({addshard:"shard3/192.168.189.129:22003,192.168.189.130:22003,192.168.189.131:22003"})

{

"shardAdded" : "shard3",

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1527238639, 6),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1527238639, 6)

}

Check the cluster:

mongos> sh.status();

--- Sharding Status ---

sharding version: {

"_id" : 1,

"minCompatibleVersion" : 5,

"currentVersion" : 6,

"clusterId" : ObjectId("5b07be61af6c40924aaed879")

}

shards:

{ "_id" : "shard1", "host" : "shard1/192.168.189.129:22001,192.168.189.130:22001", "state" : 1 }

{ "_id" : "shard2", "host" : "shard2/192.168.189.129:22002,192.168.189.131:22002", "state" : 1 }

{ "_id" : "shard3", "host" : "shard3/192.168.189.130:22003,192.168.189.131:22003", "state" : 1 }

active mongoses:

"3.6.5" : 1

autosplit:

Currently enabled: yes

balancer:

Currently enabled: yes

Currently running: no

Failed balancer rounds in last 5 attempts: 0

Migration Results for the last 24 hours:

No recent migrations

databases:

{ "_id" : "config", "primary" : "config", "partitioned" : true }

config.system.sessions

shard key: { "_id" : 1 }

unique: false

balancing: true

chunks:

shard1 1

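{ "_id" : { "$minKey" : 1 } } -->> { "_id" : { "$maxKey" : 1 } } on : shard1 Timestamp(1, 0)

(6) Testing

The config servers, routers, shards, and replica sets are now all wired together, so inserted data can be sharded automatically. Connect to mongos and enable sharding for the chosen database and collection. Note: sharding must be set up from the admin database.

use admin

db.runCommand( { enablesharding :"testdb"}); // enable sharding for the database

db.runCommand( { shardcollection : "testdb.table1",key : {_id:"hashed"} } ) // specify the collection to shard and its shard key

This marks the table1 collection in testdb for sharding; its documents are distributed automatically across shard1, shard2, and shard3 by the hash of _id.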
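Why a hashed shard key spreads sequential _id values evenly can be seen in a small simulation. This is a sketch under assumptions: `hash32` is a stand-in 32-bit hash (the murmur3 finalizer), not the hash MongoDB uses for hashed indexes, and the hash space is simply cut into one equal range per shard.

```javascript
// Stand-in 32-bit integer hash (the murmur3 finalizer). MongoDB's hashed
// indexes use a different, 64-bit hash; this is only for illustration.
function hash32(n) {
  let h = n | 0;
  h ^= h >>> 16;
  h = Math.imul(h, 0x85ebca6b);
  h ^= h >>> 13;
  h = Math.imul(h, 0xc2b2ae35);
  h ^= h >>> 16;
  return h >>> 0; // unsigned 32-bit result
}

// Split the hash space into equal ranges, one per shard, and count how many
// of the ids 1..total fall into each range.
function distribute(total, shardNames) {
  const rangeSize = Math.ceil(2 ** 32 / shardNames.length);
  const counts = Object.fromEntries(shardNames.map((s) => [s, 0]));
  for (let id = 1; id <= total; id++) {
    const idx = Math.floor(hash32(id) / rangeSize);
    counts[shardNames[idx]] += 1;
  }
  return counts;
}

const counts = distribute(100000, ["shard1", "shard2", "shard3"]);
console.log(counts);
```

Consecutive ids land in unrelated parts of the hash space, so each shard receives roughly a third of the documents. With a ranged key on a monotonically increasing _id, new inserts would instead all fall into the last chunk.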

View the shard information:

mongos> db.runCommand({listshards:1})

{

"shards" : [

{

"_id" : "shard1",

"host" : "shard1/192.168.189.129:22001,192.168.189.130:22001",

"state" : 1

},

{

"_id" : "shard2",

"host" : "shard2/192.168.189.129:22002,192.168.189.131:22002",

"state" : 1

},

{

"_id" : "shard3",

"host" : "shard3/192.168.189.130:22003,192.168.189.131:22003",

"state" : 1

}

],

"ok" : 1,

"$clusterTime" : {

"clusterTime" : Timestamp(1527238886, 1),

"signature" : {

"hash" : BinData(0,"AAAAAAAAAAAAAAAAAAAAAAAAAAA="),

"keyId" : NumberLong(0)

}

},

"operationTime" : Timestamp(1527238886, 1)

}

Insert test data:

use testdb;

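for (var i = 1; i <= 100000; i++) db.table1.save({_id:i,"test1":"testval1"});

Check how the data is distributed across the shards:

db.table1.stats()

The mongos routers, config servers, and shard replica sets in this architecture are now all deployed. In a real production environment, the front-end mongos layer also needs to be made highly available to improve the availability of the whole system.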
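One common way to avoid depending on a single mongos is to list all of them in the client's connection string. This is a sketch of that option only, not necessarily the approach the deployment above would use in production; a load balancer or a virtual IP in front of the routers is another. The `mongosUri` helper is hypothetical.

```javascript
// Connection URI that lists every mongos from this deployment, so a client
// can still connect if one router is down. mongosUri is a hypothetical helper.
function mongosUri(routers, database) {
  return `mongodb://${routers.join(",")}/${database}`;
}

const routers = [
  "192.168.189.129:20000",
  "192.168.189.130:20000",
  "192.168.189.131:20000",
];
console.log(mongosUri(routers, "testdb"));
```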
