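[Original] A Comprehensive Analysis of the JDK 8 Thread Pool Source Code

Contents

- Basic concepts of thread pools
- Creating a thread pool
  - Core and maximum pool sizes and workQueue
  - Keep-alive times
  - threadFactory
  - handler
- The thread pool's workQueue
  - Queue operation methods
  - ArrayBlockingQueue
    - The notEmpty and notFull conditions
    - take and put methods
    - dequeue and enqueue methods
    - offer and poll methods
  - LinkedBlockingQueue
    - take and put methods
    - dequeue and enqueue methods
    - offer and poll methods
  - PriorityBlockingQueue
    - offer and poll methods
    - take and put methods
  - SynchronousQueue
    - take and put methods
    - Non-fair policy: TransferStack
    - Fair policy: TransferQueue
  - DelayQueue
    - take and put methods
- Thread pool rejection policies
- Creation and reclamation of threads in the pool
  - Submitting tasks
  - Executing tasks
  - Reclaiming threads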

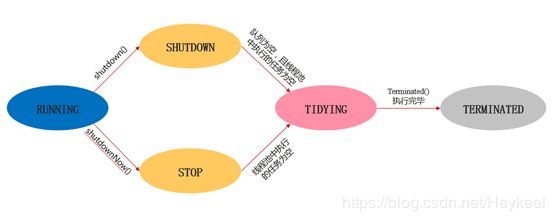

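- Thread pool states
  - RUNNING
  - SHUTDOWN
  - STOP
  - TIDYING
  - TERMINATED
- Common thread pools
  - Fixed-size thread pool (FixedThreadPool)
    - Characteristics
    - Use cases
  - Cached thread pool (CachedThreadPool)
    - Characteristics
    - Use cases
  - Single-thread executor (SingleThreadExecutor)
    - Characteristics
    - Use cases
  - Scheduled and periodic thread pool (ScheduledThreadPool)
    - Characteristics
    - Use cases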

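Basic concepts of thread pools

A thread pool is a pooling technique. Its core idea is to keep a valuable resource in a pool: each user takes one from the pool and returns it when done, so that other tasks can use it. Using a thread pool has the following advantages:

- Avoids the cost of repeatedly creating and destroying threads

A Java thread is more than an ordinary object. Each started Thread is backed by a native operating-system thread with its own stack, so creating and destroying one involves system calls and memory allocation on top of the usual object allocation and garbage collection.

- Improves response time

When a task arrives, creating a new thread to run it is much slower than taking an existing thread from the pool.

- Enables reuse

When a thread finishes a task, it goes back to the pool and can be reused, which saves resources.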

Creating a thread pool

In the JDK, thread pools are created with ThreadPoolExecutor. Its most complete constructor is:

public ThreadPoolExecutor(int corePoolSize,
                          int maximumPoolSize,
                          long keepAliveTime,
                          TimeUnit unit,
                          BlockingQueue<Runnable> workQueue,
                          ThreadFactory threadFactory,
                          RejectedExecutionHandler handler)

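In summary:

- corePoolSize: the number of core threads in the pool
- maximumPoolSize: the maximum number of threads the pool may hold
- keepAliveTime: how long an idle non-core thread is kept alive
- unit: the time unit of keepAliveTime
- workQueue: the blocking queue that holds submitted tasks
- threadFactory: the factory used to create threads, for example to give them meaningful names
- handler: the pool's saturation (rejection) policy; four built-in types are provided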
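The seven parameters map directly onto the constructor. The sketch below wires all of them together. It uses a ThreadFactory that numbers its threads and an explicit AbortPolicy. The class name `NamedPoolDemo` and the `order-worker-` prefix are illustrative choices, not part of the JDK.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.Future;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;

class NamedPoolDemo {
    // Creates a pool using all seven constructor parameters
    static ThreadPoolExecutor newPool() {
        AtomicInteger seq = new AtomicInteger(1);
        // threadFactory: give each thread a meaningful, numbered name
        ThreadFactory factory = r -> new Thread(r, "order-worker-" + seq.getAndIncrement());
        return new ThreadPoolExecutor(
                2,                                      // corePoolSize
                4,                                      // maximumPoolSize
                30L, TimeUnit.SECONDS,                  // keepAliveTime and unit for idle non-core threads
                new ArrayBlockingQueue<>(100),          // workQueue: bounded, FIFO
                factory,                                // threadFactory
                new ThreadPoolExecutor.AbortPolicy());  // handler (also the default)
    }

    // Runs one task and returns the name of the thread that executed it
    static String runOnce() throws Exception {
        ThreadPoolExecutor pool = newPool();
        try {
            Future<String> f = pool.submit(() -> Thread.currentThread().getName());
            return f.get();
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        System.out.println(runOnce()); // order-worker-1
    }
}
```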

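Core and maximum pool sizes and workQueue

A pool created by ThreadPoolExecutor automatically adjusts its size according to corePoolSize and maximumPoolSize. workQueue transfers or holds the tasks submitted to the pool, and it interacts with the pool size as follows:

- If fewer than corePoolSize threads are running, the Executor always creates a new thread to run the task rather than queuing it.
- If corePoolSize or more threads are running, the Executor always prefers to put the task into workQueue rather than add a new thread.
- If the task cannot be added to workQueue, a new thread is created to run it unless this would exceed maximumPoolSize, in which case the task is rejected.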
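The three rules can be observed directly. This minimal sketch uses a hypothetical class `PoolSizingDemo` and assumes a pool with corePoolSize 1, maximumPoolSize 2 and an ArrayBlockingQueue of capacity 1. Every task blocks on a latch, so no worker drains the queue while we inspect it.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class PoolSizingDemo {
    static List<String> run() throws InterruptedException {
        List<String> log = new ArrayList<>();
        CountDownLatch release = new CountDownLatch(1);
        // corePoolSize=1, maximumPoolSize=2, queue capacity=1, default AbortPolicy
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 2, 30L, TimeUnit.SECONDS, new ArrayBlockingQueue<>(1));
        // Each task blocks until released, so no worker polls the queue early
        Runnable blocker = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        for (int i = 1; i <= 4; i++) {
            try {
                pool.execute(blocker);
                log.add(i + ": pool=" + pool.getPoolSize() + " queue=" + pool.getQueue().size());
            } catch (RejectedExecutionException e) {
                log.add(i + ": rejected");
            }
        }
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return log;
    }

    public static void main(String[] args) throws InterruptedException {
        run().forEach(System.out::println);
    }
}
```

Each task takes a different path:

- The first task gets a core thread.
- The second is queued.
- The third finds the queue full and gets a non-core thread.
- The fourth is handed to the default AbortPolicy.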

Keep-alive times

If the pool currently has more than corePoolSize threads, the excess threads are terminated once they have been idle for longer than keepAliveTime.
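The following sketch shows keep-alive in action. It assumes a pool with 1 core thread, up to 3 threads and a 100 ms keepAliveTime; the class name and values are illustrative. A SynchronousQueue forces each extra blocking task onto a new thread. Once the tasks finish, the threads above corePoolSize time out.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class KeepAliveDemo {
    // Returns {peak pool size, pool size after idle threads were reclaimed}
    static int[] run() throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        // 1 core thread, up to 3 threads, idle non-core threads live for 100 ms
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 3, 100L, TimeUnit.MILLISECONDS, new SynchronousQueue<>());
        Runnable blocker = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        // SynchronousQueue has no capacity, so tasks 2 and 3 each force a new thread
        for (int i = 0; i < 3; i++) {
            pool.execute(blocker);
        }
        int peak = pool.getPoolSize();
        release.countDown();
        // Wait (up to 5 s) for idle threads above corePoolSize to time out
        long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
        while (pool.getPoolSize() > 1 && System.nanoTime() < deadline) {
            Thread.sleep(20);
        }
        int after = pool.getPoolSize();
        pool.shutdown();
        return new int[] {peak, after};
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = run();
        System.out.println("peak=" + r[0] + " afterIdle=" + r[1]);
    }
}
```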

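threadFactory

threadFactory creates new threads for the pool: every pool thread is created by threadFactory from within the addWorker method. If the factory fails to create a thread (for example, newThread returns null), addWorker returns false, so every caller of addWorker must handle that failure. In execute, a task that can neither get a new thread nor be queued ends up with the rejection policy.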
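The next sketch shows a thread-creation failure turning into a rejection. It uses a hypothetical ThreadFactory that always returns null, together with a SynchronousQueue, so the task can neither get a thread nor be queued.

```java
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class FailingFactoryDemo {
    // Returns true if execute() rejected the task and no worker was created
    static boolean rejectedWhenFactoryFails() {
        ThreadFactory nullFactory = r -> null; // refuses to create any thread
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS, new SynchronousQueue<>(), nullFactory);
        try {
            // addWorker(command, true) fails, SynchronousQueue.offer fails because
            // no consumer is waiting, addWorker(command, false) fails, so the
            // default AbortPolicy rejects the task
            pool.execute(() -> { });
            return false;
        } catch (RejectedExecutionException e) {
            return pool.getPoolSize() == 0;
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) {
        System.out.println(rejectedWhenFactoryFails()); // true
    }
}
```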

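handler

The policy applied to rejected tasks. A task is rejected either because workQueue is saturated and the pool has reached maximumPoolSize, or because the pool has been shut down.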

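The thread pool's workQueue

Any BlockingQueue<Runnable> can serve as workQueue. This article covers five commonly used implementations: ArrayBlockingQueue, LinkedBlockingQueue, PriorityBlockingQueue, SynchronousQueue and DelayQueue.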

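Queue operation methods

| | Throws exception | Returns special value | Blocks | Times out |
|---|---|---|---|---|
| Insert | add(e) | offer(e) | put(e) | offer(e, time, unit) |
| Remove | remove() | poll() | take() | poll(time, unit) |
| Examine | element() | peek() | not applicable | not applicable |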
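The following sketch (class name hypothetical) exercises each column of the table on an ArrayBlockingQueue of capacity 1 and records what every call returns or throws:

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NoSuchElementException;
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class QueueMethodsDemo {
    static List<String> run() throws InterruptedException {
        List<String> out = new ArrayList<>();
        BlockingQueue<String> q = new ArrayBlockingQueue<>(1); // capacity 1
        q.put("a"); // blocking insert; succeeds immediately because there is room
        // Insert into a full queue, one column of the table at a time
        out.add("offer=" + q.offer("b"));                                 // special value
        out.add("timedOffer=" + q.offer("b", 10, TimeUnit.MILLISECONDS)); // times out
        try {
            q.add("b");                                                   // throws
        } catch (IllegalStateException e) {
            out.add("add threw IllegalStateException");
        }
        out.add("peek=" + q.peek()); // examine without removing
        out.add("take=" + q.take()); // blocking remove; returns "a" immediately
        // Remove from an empty queue
        out.add("poll=" + q.poll());
        out.add("timedPoll=" + q.poll(10, TimeUnit.MILLISECONDS));
        try {
            q.remove();
        } catch (NoSuchElementException e) {
            out.add("remove threw NoSuchElementException");
        }
        try {
            q.element();
        } catch (NoSuchElementException e) {
            out.add("element threw NoSuchElementException");
        }
        return out;
    }

    public static void main(String[] args) throws InterruptedException {
        run().forEach(System.out::println);
    }
}
```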

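ArrayBlockingQueue

ArrayBlockingQueue orders elements FIFO. As a bounded queue, it helps prevent resource exhaustion when the pool has a finite maximumPoolSize. However, it makes the pool harder to tune and control, because queue size and maximum pool size trade off against each other:

- A large queue with a small pool minimizes CPU usage, OS resources and context-switching overhead, but can lead to artificially low throughput. If tasks often block (for example, on I/O), the system could have scheduled more threads than the pool allows.
- A small queue generally requires a larger pool. This keeps the CPUs busier but may incur unacceptable scheduling overhead, which also lowers throughput.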

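The notEmpty and notFull conditions

The notEmpty condition makes a thread wait while the queue is empty, and the notFull condition makes a thread wait while the queue is full. Both Condition objects are created from the queue's single ReentrantLock, and together they implement the blocking behavior of take() and put(e).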

take and put methods

public E take() throws InterruptedException {
    final ReentrantLock lock = this.lock;
    lock.lockInterruptibly();
    try {
        while (count == 0)
            notEmpty.await();
        return dequeue();
    } finally {
        lock.unlock();
    }
}

public void put(E e) throws InterruptedException {
    checkNotNull(e);
    final ReentrantLock lock = this.lock;
    lock.lockInterruptibly();
    try {
        while (count == items.length)
            notFull.await();
        enqueue(e);
    } finally {
        lock.unlock();
    }
}

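When take is called and count == 0, the current thread waits on the notEmpty condition. While waiting, the thread is parked rather than spinning, so it consumes no CPU. The wait sits in a while loop rather than an if, so after being woken the thread rechecks count before proceeding. put works the same way, waiting on notFull while count == items.length.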
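The claim that a waiting thread is parked can be checked by inspecting its state. In this sketch (class name hypothetical), a producer calls put on a full ArrayBlockingQueue. The main thread waits until the producer reports Thread.State.WAITING, then frees a slot with take.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.TimeUnit;

class BlockingPutDemo {
    // Returns the producer's state observed while it is blocked in put()
    static Thread.State run() throws InterruptedException {
        BlockingQueue<Integer> q = new ArrayBlockingQueue<>(1);
        q.put(1); // the queue is now full
        Thread producer = new Thread(() -> {
            try {
                q.put(2); // count == items.length, so this waits on notFull
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        // Poll until the producer parks (bounded by a 5 s deadline)
        long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
        while (producer.getState() != Thread.State.WAITING && System.nanoTime() < deadline) {
            Thread.sleep(10);
        }
        Thread.State observed = producer.getState();
        q.take(); // dequeue() signals notFull, which wakes the producer
        producer.join(5000);
        if (producer.isAlive() || !Integer.valueOf(2).equals(q.peek())) {
            throw new IllegalStateException("producer was not released");
        }
        return observed;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run()); // WAITING
    }
}
```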

dequeue and enqueue methods

private E dequeue() {
    // assert lock.getHoldCount() == 1;
    // assert items[takeIndex] != null;
    final Object[] items = this.items;
    @SuppressWarnings("unchecked")
    E x = (E) items[takeIndex];
    items[takeIndex] = null;
    if (++takeIndex == items.length)
        takeIndex = 0;
    count--;
    if (itrs != null)
        itrs.elementDequeued();
    notFull.signal();
    return x;
}

private void enqueue(E x) {
    // assert lock.getHoldCount() == 1;
    // assert items[putIndex] == null;
    final Object[] items = this.items;
    items[putIndex] = x;
    if (++putIndex == items.length)
        putIndex = 0;
    count++;
    notEmpty.signal();
}

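At the end of dequeue, notFull.signal() wakes one thread that was waiting because the queue was full. enqueue works the same way: it calls notEmpty.signal() to wake one thread waiting for an element. Both methods treat items as a circular array: when takeIndex or putIndex reaches items.length, it wraps back to 0.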
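Putting take, put, dequeue and enqueue together, the pattern fits in a few dozen lines. `RingBuffer` below is a simplified, hypothetical class, not the JDK implementation. It omits iterators, fairness and null checks, but keeps the single lock, the two conditions and the wrap-around indices.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical bounded buffer: one lock, two conditions, circular indices
class RingBuffer<E> {
    private final Object[] items;
    private int takeIndex; // next slot to take from
    private int putIndex;  // next slot to put into
    private int count;
    private final ReentrantLock lock = new ReentrantLock();
    private final Condition notEmpty = lock.newCondition();
    private final Condition notFull = lock.newCondition();

    RingBuffer(int capacity) {
        items = new Object[capacity];
    }

    void put(E e) throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == items.length) notFull.await();
            items[putIndex] = e;
            if (++putIndex == items.length) putIndex = 0; // wrap around
            count++;
            notEmpty.signal(); // an element is now available
        } finally {
            lock.unlock();
        }
    }

    @SuppressWarnings("unchecked")
    E take() throws InterruptedException {
        lock.lockInterruptibly();
        try {
            while (count == 0) notEmpty.await();
            E x = (E) items[takeIndex];
            items[takeIndex] = null; // let GC reclaim the element
            if (++takeIndex == items.length) takeIndex = 0; // wrap around
            count--;
            notFull.signal(); // a slot is now free
            return x;
        } finally {
            lock.unlock();
        }
    }

    // A producer pushes 0..9 through a 3-slot buffer; the indices wrap several times
    static List<Integer> demo() throws InterruptedException {
        RingBuffer<Integer> buf = new RingBuffer<>(3);
        Thread producer = new Thread(() -> {
            try {
                for (int i = 0; i < 10; i++) buf.put(i);
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        List<Integer> got = new ArrayList<>();
        for (int i = 0; i < 10; i++) got.add(buf.take());
        producer.join();
        return got;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo()); // [0, 1, 2, 3, 4, 5, 6, 7, 8, 9]
    }
}
```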

offer and poll methods

public boolean offer(E e) {
    checkNotNull(e);
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        if (count == items.length)
            return false;
        else {
            enqueue(e);
            return true;
        }
    } finally {
        lock.unlock();
    }
}

public E poll() {
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        return (count == 0) ? null : dequeue();
    } finally {
        lock.unlock();
    }
}

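In ArrayBlockingQueue, offer and poll use the same lock, so enqueuing and dequeuing contend with each other. The same holds for put and take, and for add and remove. None of these pairs can insert and remove concurrently.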

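LinkedBlockingQueue

LinkedBlockingQueue also orders elements FIFO. Unlike ArrayBlockingQueue, its capacity is optional and defaults to Integer.MAX_VALUE. It uses a two-lock design so that insertion and removal can proceed fully in parallel. Note that head is a dummy node whose item is always null, and that the next field of the tail node is always null:

/**
 * Head of linked list.
 * Invariant: head.item == null
 */
transient Node<E> head;

/**
 * Tail of linked list.
 * Invariant: last.next == null
 */
private transient Node<E> last;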

take and put methods

public E take() throws InterruptedException {
    E x;
    int c = -1;
    final AtomicInteger count = this.count;
    final ReentrantLock takeLock = this.takeLock;
    takeLock.lockInterruptibly();
    try {
        while (count.get() == 0) {
            notEmpty.await();
        }
        x = dequeue();
        c = count.getAndDecrement();
        if (c > 1)
            notEmpty.signal();
    } finally {
        takeLock.unlock();
    }
    if (c == capacity)
        signalNotFull();
    return x;
}

public void put(E e) throws InterruptedException {
    if (e == null) throw new NullPointerException();
    int c = -1;
    Node<E> node = new Node<E>(e);
    final ReentrantLock putLock = this.putLock;
    final AtomicInteger count = this.count;
    putLock.lockInterruptibly();
    try {
        while (count.get() == capacity) {
            notFull.await();
        }
        enqueue(node);
        c = count.getAndIncrement();
        if (c + 1 < capacity)
            notFull.signal();
    } finally {
        putLock.unlock();
    }
    if (c == 0)
        signalNotEmpty();
}

private void signalNotFull() {
    final ReentrantLock putLock = this.putLock;
    putLock.lock();
    try {
        notFull.signal();
    } finally {
        putLock.unlock();
    }
}

private void signalNotEmpty() {
    final ReentrantLock takeLock = this.takeLock;
    takeLock.lock();
    try {
        notEmpty.signal();
    } finally {
        takeLock.unlock();
    }
}

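Because LinkedBlockingQueue is a linked list, take and put operate on different Node objects. takeLock can therefore guard the head of the queue while putLock guards the tail. Two conditions park and wake threads: notEmpty, created from takeLock, and notFull, created from putLock.

Since the head and tail use different locks, enqueue and dequeue can run concurrently. That is why count, the variable recording the queue size, must be an AtomicInteger.

The signaling is also cascaded to reduce lock traffic. A take that leaves elements behind (c > 1) wakes the next taker itself. Only the take that moves the queue from full to not full (c == capacity) calls signalNotFull, which has to acquire putLock. put mirrors this with the c + 1 < capacity and c == 0 checks.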
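The two-lock design can also be sketched in isolation. `TwoLockQueue` below is a hypothetical, deliberately simplified version of the same idea. It is unbounded, so it has no notFull. It keeps a dummy head node, a takeLock guarding the head, a putLock guarding the tail, and an AtomicInteger count shared between them.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.atomic.AtomicInteger;
import java.util.concurrent.locks.Condition;
import java.util.concurrent.locks.ReentrantLock;

// Hypothetical, simplified two-lock queue (unbounded, so there is no notFull)
class TwoLockQueue<E> {
    private static final class Node<E> {
        E item;
        Node<E> next;
        Node(E item) { this.item = item; }
    }

    private final AtomicInteger count = new AtomicInteger();
    private Node<E> head = new Node<>(null); // dummy node: head.item == null
    private Node<E> last = head;             // invariant: last.next == null
    private final ReentrantLock takeLock = new ReentrantLock();
    private final Condition notEmpty = takeLock.newCondition();
    private final ReentrantLock putLock = new ReentrantLock();

    void put(E e) {
        if (e == null) throw new NullPointerException();
        Node<E> node = new Node<>(e);
        int c;
        putLock.lock();
        try {
            last = last.next = node;     // link at the tail under putLock only
            c = count.getAndIncrement();
        } finally {
            putLock.unlock();
        }
        if (c == 0) {                    // empty -> non-empty: wake a taker
            takeLock.lock();
            try {
                notEmpty.signal();
            } finally {
                takeLock.unlock();
            }
        }
    }

    E take() throws InterruptedException {
        E x;
        takeLock.lockInterruptibly();
        try {
            while (count.get() == 0) {
                notEmpty.await();
            }
            Node<E> h = head;
            Node<E> first = h.next;
            h.next = h;                  // help GC, as in the JDK
            head = first;                // first becomes the new dummy
            x = first.item;
            first.item = null;
            if (count.getAndDecrement() > 1) {
                notEmpty.signal();       // cascade: more elements remain
            }
        } finally {
            takeLock.unlock();
        }
        return x;
    }

    // A consumer blocks in take() while the main thread puts 1, 2, 3
    static List<Integer> demo() throws InterruptedException {
        TwoLockQueue<Integer> q = new TwoLockQueue<>();
        List<Integer> got = new ArrayList<>();
        Thread consumer = new Thread(() -> {
            try {
                for (int i = 0; i < 3; i++) got.add(q.take());
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        consumer.start();
        for (int i = 1; i <= 3; i++) q.put(i);
        consumer.join(); // join makes the consumer's writes to got visible
        return got;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo()); // [1, 2, 3]
    }
}
```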

dequeue and enqueue methods

private void enqueue(Node<E> node) {
    // assert putLock.isHeldByCurrentThread();
    // assert last.next == null;
    last = last.next = node;
}

private E dequeue() {
    // assert takeLock.isHeldByCurrentThread();
    // assert head.item == null;
    Node<E> h = head;
    Node<E> first = h.next;
    h.next = h; // help GC
    head = first;
    E x = first.item;
    first.item = null;
    return x;
}

offer and poll methods

public boolean offer(E e) {
    if (e == null) throw new NullPointerException();
    final AtomicInteger count = this.count;
    if (count.get() == capacity)
        return false;
    int c = -1;
    Node<E> node = new Node<E>(e);
    final ReentrantLock putLock = this.putLock;
    putLock.lock();
    try {
        if (count.get() < capacity) {
            enqueue(node);
            c = count.getAndIncrement();
            if (c + 1 < capacity)
                notFull.signal();
        }
    } finally {
        putLock.unlock();
    }
    if (c == 0)
        signalNotEmpty();
    return c >= 0;
}

public E poll() {
    final AtomicInteger count = this.count;
    if (count.get() == 0)
        return null;
    E x = null;
    int c = -1;
    final ReentrantLock takeLock = this.takeLock;
    takeLock.lock();
    try {
        if (count.get() > 0) {
            x = dequeue();
            c = count.getAndDecrement();
            if (c > 1)
                notEmpty.signal();
        }
    } finally {
        takeLock.unlock();
    }
    if (c == capacity)
        signalNotFull();
    return x;
}

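PriorityBlockingQueue

PriorityBlockingQueue is an unbounded priority queue backed by an array that holds a binary heap. Its initial capacity defaults to 11 when not specified. Insertions trigger the tryGrow expansion mechanism as needed, up to a maximum array size of Integer.MAX_VALUE - 8.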

private void tryGrow(Object[] array, int oldCap) {
    lock.unlock(); // must release and then re-acquire main lock
    Object[] newArray = null;
    if (allocationSpinLock == 0 &&
        UNSAFE.compareAndSwapInt(this, allocationSpinLockOffset,
                                 0, 1)) {
        try {
            int newCap = oldCap + ((oldCap < 64) ?
                                   (oldCap + 2) : // grow faster if small
                                   (oldCap >> 1));
            if (newCap - MAX_ARRAY_SIZE > 0) {    // possible overflow
                int minCap = oldCap + 1;
                if (minCap < 0 || minCap > MAX_ARRAY_SIZE)
                    throw new OutOfMemoryError();
                newCap = MAX_ARRAY_SIZE;
            }
            if (newCap > oldCap && queue == array)
                newArray = new Object[newCap];
        } finally {
            allocationSpinLock = 0;
        }
    }
    if (newArray == null) // back off if another thread is allocating
        Thread.yield();
    lock.lock();
    if (newArray != null && queue == array) {
        queue = newArray;
        System.arraycopy(array, 0, newArray, 0, oldCap);
    }
}
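The growth rule in tryGrow is easy to miss. Arrays smaller than 64 roughly double (oldCap + oldCap + 2), while larger ones grow by 50%. tryGrow also releases the main lock while allocating, so consumers can keep taking elements. A CAS on allocationSpinLock ensures that only one thread allocates at a time; the others yield. The sketch below reproduces only the capacity arithmetic (class and method names are hypothetical):

```java
import java.util.ArrayList;
import java.util.List;

// Capacity arithmetic of PriorityBlockingQueue.tryGrow in JDK 8
// (no locking, no array copy); class and method names are hypothetical
class GrowthPolicy {
    static final int MAX_ARRAY_SIZE = Integer.MAX_VALUE - 8;

    static int nextCapacity(int oldCap) {
        int newCap = oldCap + ((oldCap < 64)
                ? (oldCap + 2)     // small arrays: roughly double
                : (oldCap >> 1));  // larger arrays: grow by 50%
        if (newCap - MAX_ARRAY_SIZE > 0) { // overflow-conscious check
            int minCap = oldCap + 1;
            if (minCap < 0 || minCap > MAX_ARRAY_SIZE)
                throw new OutOfMemoryError();
            newCap = MAX_ARRAY_SIZE;
        }
        return newCap;
    }

    // Capacities reached by growing from the default initial capacity of 11
    static List<Integer> sequence(int steps) {
        List<Integer> caps = new ArrayList<>();
        int cap = 11;
        caps.add(cap);
        for (int i = 0; i < steps; i++) {
            cap = nextCapacity(cap);
            caps.add(cap);
        }
        return caps;
    }

    public static void main(String[] args) {
        System.out.println(sequence(5)); // [11, 24, 50, 102, 153, 229]
    }
}
```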

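An element inserted into the priority queue must satisfy one of two conditions:

- its type implements Comparable, or
- the queue's Comparator is not null.

If the queue has a Comparator, the Comparator takes precedence. Internally the queue has only one lock. Because it is unbounded, it has only a notEmpty condition.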
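The sketch below (class name hypothetical) shows three cases:

- natural ordering;
- a Comparator taking precedence over natural ordering;
- an element that satisfies neither condition. Here siftUpComparable casts the element to Comparable, so offer throws ClassCastException.

```java
import java.util.ArrayList;
import java.util.Comparator;
import java.util.List;
import java.util.concurrent.PriorityBlockingQueue;

class PriorityOrderDemo {
    // Drains the queue with poll(), which returns elements in priority order
    static <E> List<E> drain(PriorityBlockingQueue<E> q) {
        List<E> out = new ArrayList<>();
        E e;
        while ((e = q.poll()) != null) out.add(e);
        return out;
    }

    static List<Integer> natural() {
        PriorityBlockingQueue<Integer> q = new PriorityBlockingQueue<>(); // Integer is Comparable
        q.add(5); q.add(1); q.add(3);
        return drain(q);
    }

    static List<Integer> reversed() {
        // A supplied Comparator takes precedence over natural ordering
        PriorityBlockingQueue<Integer> q =
                new PriorityBlockingQueue<Integer>(11, Comparator.<Integer>reverseOrder());
        q.add(5); q.add(1); q.add(3);
        return drain(q);
    }

    static boolean rejectsNonComparable() {
        PriorityBlockingQueue<Object> q = new PriorityBlockingQueue<>();
        try {
            q.add(new Object()); // neither Comparable nor a Comparator: the cast fails
            return false;
        } catch (ClassCastException expected) {
            return true;
        }
    }

    public static void main(String[] args) {
        System.out.println(natural());              // [1, 3, 5]
        System.out.println(reversed());             // [5, 3, 1]
        System.out.println(rejectsNonComparable()); // true
    }
}
```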

offer and poll methods

public boolean offer(E e) {
    if (e == null)
        throw new NullPointerException();
    final ReentrantLock lock = this.lock;
    lock.lock();
    int n, cap;
    Object[] array;
    while ((n = size) >= (cap = (array = queue).length))
        tryGrow(array, cap);
    try {
        Comparator<? super E> cmp = comparator;
        if (cmp == null)
            siftUpComparable(n, e, array);
        else
            siftUpUsingComparator(n, e, array, cmp);
        size = n + 1;
        notEmpty.signal();
    } finally {
        lock.unlock();
    }
    return true;
}

public boolean offer(E e, long timeout, TimeUnit unit) {
    return offer(e); // never need to block
}

public E poll() {
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        return dequeue();
    } finally {
        lock.unlock();
    }
}

private E dequeue() {
    int n = size - 1;
    if (n < 0)
        return null;
    else {
        Object[] array = queue;
        E result = (E) array[0];
        E x = (E) array[n];
        array[n] = null;
        Comparator<? super E> cmp = comparator;
        if (cmp == null)
            siftDownComparable(0, x, array, n);
        else
            siftDownUsingComparator(0, x, array, n, cmp);
        size = n;
        return result;
    }
}

Because PriorityBlockingQueue is unbounded, offer never needs to block. The timed offer(e, timeout, unit) therefore simply ignores its timeout.

take and put methods

public E take() throws InterruptedException {
    final ReentrantLock lock = this.lock;
    lock.lockInterruptibly();
    E result;
    try {
        while ( (result = dequeue()) == null)
            notEmpty.await();
    } finally {
        lock.unlock();
    }
    return result;
}

public void put(E e) {
    offer(e); // never need to block
}

Since PriorityBlockingQueue is unbounded, its put method never blocks. Only take can block, waiting on notEmpty while the queue is empty.

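SynchronousQueue

In a SynchronousQueue, put and take must be paired by two different threads: a put waits for another thread's take, and vice versa. The queue has no internal capacity, so peek is not supported; it always returns null. The queue uses no locks at all. Instead, it relies heavily on CAS operations (optimistic concurrency control), and a waiting thread spins briefly before it is parked, to reduce scheduling overhead.

Having no capacity does not mean that SynchronousQueue has no internal storage. It must record which threads are waiting and for which operation; otherwise fair and non-fair policies would be meaningless. The two policies are implemented by different internal data structures:

- Fair policy: TransferQueue
- Non-fair policy: TransferStack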

public SynchronousQueue(boolean fair) {
    transferer = fair ? new TransferQueue<E>() : new TransferStack<E>();
}

take and put methods

public E take() throws InterruptedException {
    E e = transferer.transfer(null, false, 0);
    if (e != null)
        return e;
    Thread.interrupted();
    throw new InterruptedException();
}

public void put(E e) throws InterruptedException {
    if (e == null) throw new NullPointerException();
    if (transferer.transfer(e, false, 0) == null) {
        Thread.interrupted();
        throw new InterruptedException();
    }
}

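Both take and put delegate to transferer.transfer. The only difference is the value passed: null for take (a consumer) or e for put (a producer). Understanding take and put therefore comes down to understanding transfer. transfer is implemented differently for each policy, so the two are analyzed separately. Reference: https://www.jianshu.com/p/d5e2e3513ba3.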
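The pairing behavior can be seen through the public API alone. In this hypothetical sketch:

- a non-blocking offer fails because no consumer is waiting;
- size and peek reveal the zero capacity;
- a put in another thread completes only when the main thread takes the element.

The no-argument constructor used here creates a non-fair queue.

```java
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.TimeUnit;

class HandoffDemo {
    static String run() throws InterruptedException {
        SynchronousQueue<String> q = new SynchronousQueue<>(); // non-fair by default
        StringBuilder sb = new StringBuilder();
        // No consumer is waiting, so a non-blocking offer fails immediately
        sb.append("offer=").append(q.offer("x"));
        // No capacity: size is always 0 and peek always returns null
        sb.append(" size=").append(q.size()).append(" peek=").append(q.peek());
        Thread producer = new Thread(() -> {
            try {
                q.put("hello"); // blocks until a consumer takes it
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        });
        producer.start();
        // Pairs with the producer's put; the timeout avoids hanging forever
        sb.append(" got=").append(q.poll(5, TimeUnit.SECONDS));
        producer.join();
        return sb.toString();
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run()); // offer=false size=0 peek=null got=hello
    }
}
```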

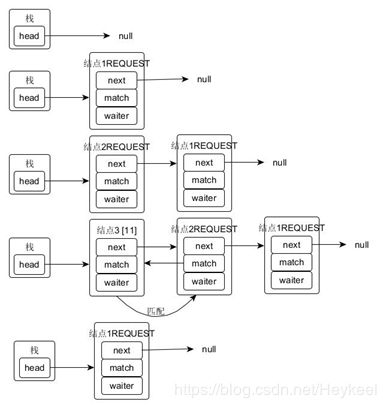

Non-fair policy: TransferStack

- When the queue is empty: put first, then take
- When the queue is empty: take first, then put

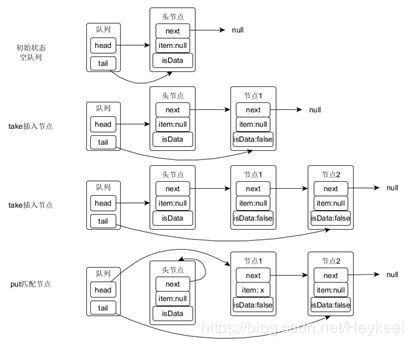

Fair policy: TransferQueue

- When the queue is empty: put first, then take
- When the queue is empty: take first, then put
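The practical difference between the two structures is the order in which waiting producers are matched. The sketch below (class name hypothetical) parks three producers in put, one after another, and then takes three values. With fair=true, the JDK documents FIFO order. The LIFO order seen in non-fair mode is an implementation detail of TransferStack in JDK 8, not a documented guarantee.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.SynchronousQueue;
import java.util.concurrent.TimeUnit;

class FairnessDemo {
    // Parks producers 1, 2, 3 in put() (in that order), then takes three values
    static List<Integer> takeOrder(boolean fair) throws InterruptedException {
        SynchronousQueue<Integer> q = new SynchronousQueue<>(fair);
        List<Thread> producers = new ArrayList<>();
        for (int i = 1; i <= 3; i++) {
            final int value = i;
            Thread t = new Thread(() -> {
                try {
                    q.put(value);
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
            t.start();
            // Wait until this producer has parked, so the arrival order is known
            long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(5);
            while (t.getState() != Thread.State.WAITING && System.nanoTime() < deadline) {
                Thread.sleep(5);
            }
            producers.add(t);
        }
        List<Integer> order = new ArrayList<>();
        for (int i = 0; i < 3; i++) {
            order.add(q.take()); // each take is matched with one waiting put
        }
        for (Thread t : producers) {
            t.join();
        }
        return order;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println("fair:     " + takeOrder(true));  // FIFO: [1, 2, 3]
        System.out.println("non-fair: " + takeOrder(false)); // LIFO in JDK 8
    }
}
```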

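DelayQueue

DelayQueue is an unbounded blocking queue that stores its elements in a PriorityQueue. PriorityQueue, like PriorityBlockingQueue, is backed by an array that grows automatically, but it is neither thread-safe nor blocking. DelayQueue adds a ReentrantLock and an available condition on top of it. When retrieving an element, DelayQueue first calls PriorityQueue.peek to check whether the queue is empty. peek also exposes the head, which is the element with the shortest remaining delay. DelayQueue then acts accordingly.

Every element of a DelayQueue must implement the Delayed interface, which extends Comparable<Delayed>. Each element can therefore both report its remaining delay and be compared with other elements. Note that the elements must track their own expiration time, for example: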

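import java.util.Date;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

public class TestDelay implements Delayed
{
    /**
     * Expiration time, as an absolute epoch timestamp in milliseconds
     */
    private final long expire;

    /**
     * Payload
     */
    private final String data;

    /**
     * @param expire delay in milliseconds from now
     */
    public TestDelay(long expire)
    {
        long now = System.currentTimeMillis();
        this.expire = expire + now;
        this.data = new Date(now).toString() + " thread: " + Thread.currentThread().getName();
    }

    public String getData()
    {
        return data;
    }

    @Override
    public long getDelay(TimeUnit unit)
    {
        // Remaining delay; zero or negative means the element has expired
        return unit.convert(this.expire - System.currentTimeMillis(), TimeUnit.MILLISECONDS);
    }

    @Override
    public int compareTo(Delayed o)
    {
        // Long.compare avoids the overflow that casting a long difference to int can cause
        return Long.compare(this.getDelay(TimeUnit.MILLISECONDS), o.getDelay(TimeUnit.MILLISECONDS));
    }
}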

take and put methods

public void put(E e) {
    offer(e);
}

public boolean offer(E e) {
    final ReentrantLock lock = this.lock;
    lock.lock();
    try {
        q.offer(e);
        if (q.peek() == e) {
            leader = null;
            available.signal();
        }
        return true;
    } finally {
        lock.unlock();
    }
}

public E take() throws InterruptedException {
    final ReentrantLock lock = this.lock;
    lock.lockInterruptibly();
    try {
        for (;;) {
            E first = q.peek();
            if (first == null)
                available.await();
            else {
                long delay = first.getDelay(NANOSECONDS);
                if (delay <= 0)
                    return q.poll();
                first = null; // don't retain ref while waiting
                if (leader != null)
                    available.await();
                else {
                    Thread thisThread = Thread.currentThread();
                    leader = thisThread;
                    try {
                        available.awaitNanos(delay);
                    } finally {
                        if (leader == thisThread)
                            leader = null;
                    }
                }
            }
        }
    } finally {
        if (leader == null && q.peek() != null)
            available.signal();
        lock.unlock();
    }
}
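In take, the leader field implements a leader-follower pattern. Only one thread, the leader, waits with a timeout equal to the head element's remaining delay. Other takers wait indefinitely until signaled, which avoids needless timed wakeups.

The sketch below shows the resulting behavior. The class and its element type are hypothetical, and expiry is based on System.nanoTime. poll returns null while nothing has expired, and take hands out elements in expiry order.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.DelayQueue;
import java.util.concurrent.Delayed;
import java.util.concurrent.TimeUnit;

class DelayQueueDemo {
    // A minimal Delayed element (hypothetical): expires a fixed time after creation
    static final class Item implements Delayed {
        final String name;
        final long expireAtNanos;

        Item(String name, long delayMillis) {
            this.name = name;
            this.expireAtNanos = System.nanoTime() + TimeUnit.MILLISECONDS.toNanos(delayMillis);
        }

        @Override
        public long getDelay(TimeUnit unit) {
            return unit.convert(expireAtNanos - System.nanoTime(), TimeUnit.NANOSECONDS);
        }

        @Override
        public int compareTo(Delayed o) {
            return Long.compare(getDelay(TimeUnit.NANOSECONDS), o.getDelay(TimeUnit.NANOSECONDS));
        }
    }

    static List<String> run() throws InterruptedException {
        DelayQueue<Item> q = new DelayQueue<>();
        q.put(new Item("slow", 600));
        q.put(new Item("fast", 200));
        q.put(new Item("medium", 400));
        List<String> out = new ArrayList<>();
        // Nothing has expired yet, so the non-blocking poll returns null
        out.add(String.valueOf(q.poll()));
        // take() blocks until the head's delay reaches zero
        for (int i = 0; i < 3; i++) {
            out.add(q.take().name);
        }
        return out;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(run()); // [null, fast, medium, slow]
    }
}
```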

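Thread pool rejection policies

ThreadPoolExecutor provides four built-in rejection policies:

- AbortPolicy: throws a RejectedExecutionException. This is the default.
- DiscardPolicy: silently discards the task.
- DiscardOldestPolicy: discards the oldest task in the queue (the one at its head), then resubmits the current task to the pool.
- CallerRunsPolicy: runs the task in the thread that called execute.

As the source below shows, DiscardOldestPolicy and CallerRunsPolicy simply discard the task if the pool has been shut down.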

/**
 * A handler for rejected tasks that throws a
 * {@code RejectedExecutionException}.
 */
public static class AbortPolicy implements RejectedExecutionHandler {
    /**
     * Creates an {@code AbortPolicy}.
     */
    public AbortPolicy() { }

    /**
     * Always throws RejectedExecutionException.
     *
     * @param r the runnable task requested to be executed
     * @param e the executor attempting to execute this task
     * @throws RejectedExecutionException always
     */
    public void rejectedExecution(Runnable r, ThreadPoolExecutor e) {
        throw new RejectedExecutionException("Task " + r.toString() +
                                             " rejected from " +
                                             e.toString());
    }
}

/**
 * A handler for rejected tasks that silently discards the
 * rejected task.
 */
public static class DiscardPolicy implements RejectedExecutionHandler {
    /**
     * Creates a {@code DiscardPolicy}.
     */
    public DiscardPolicy() { }

    /**
     * Does nothing, which has the effect of discarding task r.
     *
     * @param r the runnable task requested to be executed
     * @param e the executor attempting to execute this task
     */
    public void rejectedExecution(Runnable r, ThreadPoolExecutor e) {
    }
}

/**
 * A handler for rejected tasks that discards the oldest unhandled
 * request and then retries {@code execute}, unless the executor
 * is shut down, in which case the task is discarded.
 */
public static class DiscardOldestPolicy implements RejectedExecutionHandler {
    /**
     * Creates a {@code DiscardOldestPolicy} for the given executor.
     */
    public DiscardOldestPolicy() { }

    /**
     * Obtains and ignores the next task that the executor
     * would otherwise execute, if one is immediately available,
     * and then retries execution of task r, unless the executor
     * is shut down, in which case task r is instead discarded.
     *
     * @param r the runnable task requested to be executed
     * @param e the executor attempting to execute this task
     */
    public void rejectedExecution(Runnable r, ThreadPoolExecutor e) {
        if (!e.isShutdown()) {
            e.getQueue().poll();
            e.execute(r);
        }
    }
}

/**
 * A handler for rejected tasks that runs the rejected task
 * directly in the calling thread of the {@code execute} method,
 * unless the executor has been shut down, in which case the task
 * is discarded.
 */
public static class CallerRunsPolicy implements RejectedExecutionHandler {
    /**
     * Creates a {@code CallerRunsPolicy}.
     */
    public CallerRunsPolicy() { }

    /**
     * Executes task r in the caller's thread, unless the executor
     * has been shut down, in which case the task is discarded.
     *
     * @param r the runnable task requested to be executed
     * @param e the executor attempting to execute this task
     */
    public void rejectedExecution(Runnable r, ThreadPoolExecutor e) {
        if (!e.isShutdown()) {
            r.run();
        }
    }
}
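The four handlers can be compared side by side. This hypothetical sketch saturates a pool that has one thread and a one-slot queue. It then submits one extra task under each policy and reports what happened to that task.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.RejectedExecutionException;
import java.util.concurrent.RejectedExecutionHandler;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class RejectionDemo {
    // Saturates the pool, submits one more task under the given handler,
    // and reports what happened to that extra task
    static String outcome(RejectedExecutionHandler handler) throws InterruptedException {
        CountDownLatch release = new CountDownLatch(1);
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS, new ArrayBlockingQueue<>(1), handler);
        Runnable blocker = () -> {
            try {
                release.await();
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt();
            }
        };
        Thread caller = Thread.currentThread();
        Thread[] ranIn = new Thread[1];
        Runnable extra = () -> ranIn[0] = Thread.currentThread();
        pool.execute(blocker);   // occupies the only worker
        pool.execute(() -> { }); // fills the single queue slot
        String result;
        try {
            pool.execute(extra); // saturated: the handler decides
            if (ranIn[0] == caller) {
                result = "ran in caller";
            } else if (pool.getQueue().contains(extra)) {
                result = "replaced oldest";
            } else {
                result = "discarded";
            }
        } catch (RejectedExecutionException e) {
            result = "exception";
        }
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return result;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(outcome(new ThreadPoolExecutor.AbortPolicy()));         // exception
        System.out.println(outcome(new ThreadPoolExecutor.DiscardPolicy()));       // discarded
        System.out.println(outcome(new ThreadPoolExecutor.DiscardOldestPolicy())); // replaced oldest
        System.out.println(outcome(new ThreadPoolExecutor.CallerRunsPolicy()));    // ran in caller
    }
}
```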

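Creation and reclamation of threads in the pool

Submitting tasks

There are two ways to submit a task to a thread pool: execute and submit. execute accepts only Runnable tasks, while submit accepts both Runnable and Callable tasks. They also differ as follows:

- With execute, an exception thrown by the task propagates out of the worker thread, to its UncaughtExceptionHandler. With submit, the exception is captured in the returned Future and is rethrown, wrapped in an ExecutionException, only when Future.get is called.
- execute is declared by the top-level interface Executor, and submit by ExecutorService. ThreadPoolExecutor implements execute, while the abstract class AbstractExecutorService implements submit by wrapping the task in a FutureTask and calling execute.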
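The difference in exception handling can be demonstrated with a ThreadFactory that installs an UncaughtExceptionHandler. In this sketch (class name hypothetical), the exception from the submitted task is visible only through Future.get. The exception from the executed task escapes the worker thread.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.ExecutionException;
import java.util.concurrent.Future;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadFactory;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicReference;

class ExceptionPropagationDemo {
    // Returns {message seen via Future.get, message seen by the uncaught handler}
    static String[] run() throws Exception {
        AtomicReference<Throwable> uncaught = new AtomicReference<>();
        CountDownLatch handlerCalled = new CountDownLatch(1);
        // Record exceptions that escape a worker thread
        ThreadFactory factory = r -> {
            Thread t = new Thread(r);
            t.setUncaughtExceptionHandler((thread, ex) -> {
                uncaught.set(ex);
                handlerCalled.countDown();
            });
            return t;
        };
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                1, 1, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>(), factory);
        try {
            // submit: the exception is stored in the Future and rethrown by get()
            Future<?> f = pool.submit(() -> { throw new IllegalStateException("from submit"); });
            String fromSubmit;
            try {
                f.get();
                fromSubmit = "no exception";
            } catch (ExecutionException e) {
                fromSubmit = e.getCause().getMessage();
            }
            // execute: the exception escapes the worker thread
            pool.execute(() -> { throw new IllegalStateException("from execute"); });
            handlerCalled.await(5, TimeUnit.SECONDS);
            Throwable t = uncaught.get();
            return new String[] {fromSubmit, t == null ? "none" : t.getMessage()};
        } finally {
            pool.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        String[] r = run();
        System.out.println(r[0] + " / " + r[1]); // from submit / from execute
    }
}
```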

Workers are created by the addWorker method. Each Worker holds the thread that runs tasks and its first task (firstTask).

public <T> Future<T> submit(Runnable task, T result) {
    if (task == null) throw new NullPointerException();
    RunnableFuture<T> ftask = newTaskFor(task, result);
    execute(ftask);
    return ftask;
}

/**
 * Executes the given task sometime in the future.  The task
 * may execute in a new thread or in an existing pooled thread.
 *
 * If the task cannot be submitted for execution, either because this
 * executor has been shutdown or because its capacity has been reached,
 * the task is handled by the current {@code RejectedExecutionHandler}.
 *
 * @param command the task to execute
 * @throws RejectedExecutionException at discretion of
 *         {@code RejectedExecutionHandler}, if the task
 *         cannot be accepted for execution
 * @throws NullPointerException if {@code command} is null
 */
public void execute(Runnable command) {
    if (command == null)
        throw new NullPointerException();
    /*
     * Proceed in 3 steps:
     *
     * 1. If fewer than corePoolSize threads are running, try to
     * start a new thread with the given command as its first
     * task.  The call to addWorker atomically checks runState and
     * workerCount, and so prevents false alarms that would add
     * threads when it shouldn't, by returning false.
     *
     * 2. If a task can be successfully queued, then we still need
     * to double-check whether we should have added a thread
     * (because existing ones died since last checking) or that
     * the pool shut down since entry into this method. So we
     * recheck state and if necessary roll back the enqueuing if
     * stopped, or start a new thread if there are none.
     *
     * 3. If we cannot queue task, then we try to add a new
     * thread.  If it fails, we know we are shut down or saturated
     * and so reject the task.
     */
    int c = ctl.get();
    if (workerCountOf(c) < corePoolSize) {
        if (addWorker(command, true))
            return;
        c = ctl.get();
    }
    if (isRunning(c) && workQueue.offer(command)) {
        int recheck = ctl.get();
        if (! isRunning(recheck) && remove(command))
            reject(command);
        else if (workerCountOf(recheck) == 0)
            addWorker(null, false);
    }
    else if (!addWorker(command, false))
        reject(command);
}

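Executing tasks

Pool threads are created by threadFactory. addWorker binds a thread and its firstTask into a Worker, and the Worker's run method, which delegates to runWorker, is what actually runs the tasks.

runWorker contains a while loop that keeps running as long as task (initially firstTask) is non-null or getTask returns a non-null task. Once getTask returns null, the loop ends and the worker exits through processWorkerExit. At its core, getTask fetches tasks from workQueue.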

/** Delegates main run loop to outer runWorker */
public void run() {
    runWorker(this);
}

/**
 * Main worker run loop.  Repeatedly gets tasks from queue and
 * executes them, while coping with a number of issues:
 *
 * 1. We may start out with an initial task, in which case we
 * don't need to get the first one. Otherwise, as long as pool is
 * running, we get tasks from getTask. If it returns null then the
 * worker exits due to changed pool state or configuration
 * parameters.  Other exits result from exception throws in
 * external code, in which case completedAbruptly holds, which
 * usually leads processWorkerExit to replace this thread.
 *
 * 2. Before running any task, the lock is acquired to prevent
 * other pool interrupts while the task is executing, and then we
 * ensure that unless pool is stopping, this thread does not have
 * its interrupt set.
 *
 * 3. Each task run is preceded by a call to beforeExecute, which
 * might throw an exception, in which case we cause thread to die
 * (breaking loop with completedAbruptly true) without processing
 * the task.
 *
 * 4. Assuming beforeExecute completes normally, we run the task,
 * gathering any of its thrown exceptions to send to afterExecute.
 * We separately handle RuntimeException, Error (both of which the
 * specs guarantee that we trap) and arbitrary Throwables.
 * Because we cannot rethrow Throwables within Runnable.run, we
 * wrap them within Errors on the way out (to the thread's
 * UncaughtExceptionHandler).  Any thrown exception also
 * conservatively causes thread to die.
 *
 * 5. After task.run completes, we call afterExecute, which may
 * also throw an exception, which will also cause thread to
 * die. According to JLS Sec 14.20, this exception is the one that
 * will be in effect even if task.run throws.
 *
 * The net effect of the exception mechanics is that afterExecute
 * and the thread's UncaughtExceptionHandler have as accurate
 * information as we can provide about any problems encountered by
 * user code.
 *
 * @param w the worker
 */
final void runWorker(Worker w) {
    Thread wt = Thread.currentThread();
    Runnable task = w.firstTask;
    w.firstTask = null;
    w.unlock(); // allow interrupts
    boolean completedAbruptly = true;
    try {
        while (task != null || (task = getTask()) != null) {
            w.lock();
            // If pool is stopping, ensure thread is interrupted;
            // if not, ensure thread is not interrupted.  This
            // requires a recheck in second case to deal with
            // shutdownNow race while clearing interrupt
            if ((runStateAtLeast(ctl.get(), STOP) ||
                 (Thread.interrupted() &&
                  runStateAtLeast(ctl.get(), STOP))) &&
                !wt.isInterrupted())
                wt.interrupt();
            try {
                beforeExecute(wt, task);
                Throwable thrown = null;
                try {
                    task.run();
                } catch (RuntimeException x) {
                    thrown = x; throw x;
                } catch (Error x) {
                    thrown = x; throw x;
                } catch (Throwable x) {
                    thrown = x; throw new Error(x);
                } finally {
                    afterExecute(task, thrown);
                }
            } finally {
                task = null;
                w.completedTasks++;
                w.unlock();
            }
        }
        completedAbruptly = false;
    } finally {
        processWorkerExit(w, completedAbruptly);
    }
}
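runWorker calls the protected hooks beforeExecute and afterExecute around every task, and passes any exception thrown by task.run() to afterExecute. The hypothetical subclass below records those calls.

For tasks submitted with submit, afterExecute receives null, because FutureTask catches the exception itself. The second task here is therefore passed to execute. Its worker thread dies after afterExecute runs, and the default uncaught-exception handler prints its stack trace.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical subclass that records the hook calls made by runWorker
class HookedPool extends ThreadPoolExecutor {
    final List<String> events = Collections.synchronizedList(new ArrayList<>());

    HookedPool() {
        super(1, 1, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
    }

    @Override
    protected void beforeExecute(Thread t, Runnable r) {
        events.add("before");
    }

    @Override
    protected void afterExecute(Runnable r, Throwable t) {
        // t is the exception thrown by task.run(), or null if it completed normally
        events.add("after:" + (t == null ? "ok" : t.getMessage()));
    }

    static List<String> demo() throws InterruptedException {
        HookedPool pool = new HookedPool();
        pool.execute(() -> { });
        pool.execute(() -> { throw new RuntimeException("boom"); });
        pool.shutdown(); // queued tasks still run in SHUTDOWN
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return new ArrayList<>(pool.events);
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(demo()); // [before, after:ok, before, after:boom]
    }
}
```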

/**
 * Performs blocking or timed wait for a task, depending on
 * current configuration settings, or returns null if this worker
 * must exit because of any of:
 * 1. There are more than maximumPoolSize workers (due to
 *    a call to setMaximumPoolSize).
 * 2. The pool is stopped.
 * 3. The pool is shutdown and the queue is empty.
 * 4. This worker timed out waiting for a task, and timed-out
 *    workers are subject to termination (that is,
 *    {@code allowCoreThreadTimeOut || workerCount > corePoolSize})
 *    both before and after the timed wait, and if the queue is
 *    non-empty, this worker is not the last thread in the pool.
 *
 * @return task, or null if the worker must exit, in which case
 *         workerCount is decremented
 */
private Runnable getTask() {
    boolean timedOut = false; // Did the last poll() time out?

    for (;;) {
        int c = ctl.get();
        int rs = runStateOf(c);

        // Check if queue empty only if necessary.
        if (rs >= SHUTDOWN && (rs >= STOP || workQueue.isEmpty())) {
            decrementWorkerCount();
            return null;
        }

        int wc = workerCountOf(c);

        // Are workers subject to culling?
        boolean timed = allowCoreThreadTimeOut || wc > corePoolSize;

        if ((wc > maximumPoolSize || (timed && timedOut))
            && (wc > 1 || workQueue.isEmpty())) {
            if (compareAndDecrementWorkerCount(c))
                return null;
            continue;
        }

        try {
            Runnable r = timed ?
                workQueue.poll(keepAliveTime, TimeUnit.NANOSECONDS) :
                workQueue.take();
            if (r != null)
                return r;
            timedOut = true;
        } catch (InterruptedException retry) {
            timedOut = false;
        }
    }
}

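Reclaiming threads

The secret of thread reclamation lies in getTask, which is called in the condition of runWorker's while loop. getTask computes timed = allowCoreThreadTimeOut || wc > corePoolSize.

When timed is false, the worker fetches tasks with workQueue.take(). By default this is the case when the pool has no more than corePoolSize threads. As the workQueue analysis above showed, take blocks. If the queue is empty the thread waits, and it is woken when a new task arrives; this is how core threads are reused.

When timed is true, the worker instead calls workQueue.poll(keepAliveTime, TimeUnit.NANOSECONDS). If no task arrives within keepAliveTime, poll returns null and timedOut is set. On the next iteration, getTask decrements the worker count and returns null. runWorker then leaves its loop, and processWorkerExit removes the worker so that its thread terminates.

Note that workers are never labeled core or non-core. Whichever workers time out while the count exceeds corePoolSize are the ones reclaimed.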
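allowCoreThreadTimeOut(true) makes timed true for every worker, so even core threads are reclaimed. The sketch below compares an idle two-thread pool with and without it; the class name and values are hypothetical. prestartAllCoreThreads starts the core threads without submitting any tasks.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class CoreTimeoutDemo {
    // Returns the pool size after idling, with or without core thread timeout
    static int idleSize(boolean allowCoreTimeout) throws InterruptedException {
        ThreadPoolExecutor pool = new ThreadPoolExecutor(
                2, 2, 100L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
        pool.allowCoreThreadTimeOut(allowCoreTimeout); // true: getTask uses poll() for every worker
        pool.prestartAllCoreThreads(); // start both core workers without submitting tasks
        // Idle for well over keepAliveTime (bounded wait of 2 s)
        long deadline = System.nanoTime() + TimeUnit.SECONDS.toNanos(2);
        while (pool.getPoolSize() > 0 && System.nanoTime() < deadline) {
            Thread.sleep(20);
        }
        int size = pool.getPoolSize();
        pool.shutdown();
        return size;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(idleSize(true));  // 0
        System.out.println(idleSize(false)); // 2
    }
}
```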

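Thread pool states

RUNNING

- Description: in the RUNNING state, the pool accepts new tasks and processes queued tasks.
- Transition: RUNNING is the initial state. As soon as a pool is created it is RUNNING, with a worker count of 0.

SHUTDOWN

- Description: in the SHUTDOWN state, the pool rejects new tasks but still processes queued tasks.
- Transition: calling shutdown() moves the pool from RUNNING -> SHUTDOWN.

STOP

- Description: in the STOP state, the pool rejects new tasks, does not process queued tasks, and interrupts the threads that are running tasks.
- Transition: calling shutdownNow() moves the pool from (RUNNING or SHUTDOWN) -> STOP.

TIDYING

- Description: when all tasks have terminated and the worker count recorded in ctl is 0, the pool moves to TIDYING and runs the hook method terminated(). terminated() is empty in ThreadPoolExecutor. To do custom processing when the pool reaches TIDYING, override terminated() in a subclass.
- Transition: in the SHUTDOWN state, once the queue is empty and no worker threads remain, the pool moves from SHUTDOWN -> TIDYING. In the STOP state, once no worker threads remain, it moves from STOP -> TIDYING.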
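The TIDYING window can be observed by overriding terminated(). In this hypothetical subclass, the hook records isTerminating() and isTerminated() while it runs. After awaitTermination returns, the pool is TERMINATED.

```java
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

// Hypothetical subclass that records the pool state seen inside terminated()
class TidyingPool extends ThreadPoolExecutor {
    volatile boolean terminatingInHook;
    volatile boolean terminatedInHook = true; // overwritten by the hook

    TidyingPool() {
        super(1, 1, 0L, TimeUnit.MILLISECONDS, new LinkedBlockingQueue<>());
    }

    @Override
    protected void terminated() {
        // Runs while the pool is TIDYING: shut down, but not yet TERMINATED
        terminatingInHook = isTerminating();
        terminatedInHook = isTerminated();
    }

    static boolean[] demo() throws InterruptedException {
        TidyingPool pool = new TidyingPool();
        pool.execute(() -> { });
        pool.shutdown();                            // RUNNING -> SHUTDOWN
        pool.awaitTermination(5, TimeUnit.SECONDS); // returns once TERMINATED
        return new boolean[] {pool.terminatingInHook, pool.terminatedInHook, pool.isTerminated()};
    }

    public static void main(String[] args) throws InterruptedException {
        boolean[] r = demo();
        System.out.println(r[0] + " " + r[1] + " " + r[2]); // true false true
    }
}
```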

TERMINATED

- Description: the pool has fully terminated.
- Transition: after terminated() completes in the TIDYING state, the pool moves from TIDYING -> TERMINATED.
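All five states, together with the worker count, are packed into the single AtomicInteger ctl. The sketch below reproduces JDK 8's encoding. The constant and helper names follow the JDK 8 source, and the class itself is hypothetical. The high 3 bits hold the run state and the low 29 bits hold the worker count, so states compare numerically in lifecycle order.

```java
// JDK 8 ThreadPoolExecutor ctl encoding: high 3 bits = run state,
// low 29 bits = worker count
class CtlDemo {
    static final int COUNT_BITS = Integer.SIZE - 3;      // 29
    static final int CAPACITY   = (1 << COUNT_BITS) - 1; // max worker count

    static final int RUNNING    = -1 << COUNT_BITS;      // high bits 111
    static final int SHUTDOWN   =  0 << COUNT_BITS;      // high bits 000
    static final int STOP       =  1 << COUNT_BITS;      // high bits 001
    static final int TIDYING    =  2 << COUNT_BITS;      // high bits 010
    static final int TERMINATED =  3 << COUNT_BITS;      // high bits 011

    static int runStateOf(int c)     { return c & ~CAPACITY; }
    static int workerCountOf(int c)  { return c & CAPACITY; }
    static int ctlOf(int rs, int wc) { return rs | wc; }

    public static void main(String[] args) {
        int c = ctlOf(RUNNING, 5);
        System.out.println(runStateOf(c) == RUNNING); // true
        System.out.println(workerCountOf(c));         // 5
        // Numeric order matches the lifecycle, which runStateAtLeast relies on
        System.out.println(RUNNING < SHUTDOWN && SHUTDOWN < STOP
                && STOP < TIDYING && TIDYING < TERMINATED); // true
    }
}
```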

Common thread pools

Fixed-size thread pool (FixedThreadPool)

/**
 * Creates a thread pool that reuses a fixed number of threads
 * operating off a shared unbounded queue.  At any point, at most
 * {@code nThreads} threads will be active processing tasks.
 * If additional tasks are submitted when all threads are active,
 * they will wait in the queue until a thread is available.
 * If any thread terminates due to a failure during execution
 * prior to shutdown, a new one will take its place if needed to
 * execute subsequent tasks.  The threads in the pool will exist
 * until it is explicitly {@link ExecutorService#shutdown shutdown}.
 *
 * @param nThreads the number of threads in the pool
 * @return the newly created thread pool
 * @throws IllegalArgumentException if {@code nThreads <= 0}
 */
public static ExecutorService newFixedThreadPool(int nThreads) {
    return new ThreadPoolExecutor(nThreads, nThreads,
                                  0L, TimeUnit.MILLISECONDS,
                                  new LinkedBlockingQueue<Runnable>());
}

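Characteristics

- The core and maximum thread counts are equal
- keepAliveTime is 0, since there are no non-core threads for it to apply to
- The work queue is an unbounded LinkedBlockingQueue whose capacity is Integer.MAX_VALUE

Use cases

FixedThreadPool suits CPU-bound tasks. When the CPUs are kept busy by worker threads for long periods, it allocates as few threads as possible, which makes it suitable for long-running tasks. Because the queue is effectively unbounded, tasks submitted faster than they are processed accumulate in the queue and can eventually exhaust memory.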
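The fixed-size behavior is easy to verify. In this sketch (class name hypothetical), five blocking tasks are submitted to a two-thread pool. The pool never grows past two threads, and the other three tasks wait in the queue. The cast to ThreadPoolExecutor relies on newFixedThreadPool returning one, as the JDK 8 source above does.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class FixedPoolDemo {
    // Returns {pool size, queued tasks} after submitting 5 blocking tasks to a 2-thread pool
    static int[] run() throws InterruptedException {
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newFixedThreadPool(2);
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < 5; i++) {
            pool.execute(() -> {
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        // Never more than nThreads threads; the surplus waits in the unbounded queue
        int[] snapshot = {pool.getPoolSize(), pool.getQueue().size()};
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return snapshot;
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = run();
        System.out.println("threads=" + r[0] + " queued=" + r[1]); // threads=2 queued=3
    }
}
```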

Cached thread pool (CachedThreadPool)

/**
 * Creates a thread pool that creates new threads as needed, but
 * will reuse previously constructed threads when they are
 * available.  These pools will typically improve the performance
 * of programs that execute many short-lived asynchronous tasks.
 * Calls to {@code execute} will reuse previously constructed
 * threads if available. If no existing thread is available, a new
 * thread will be created and added to the pool. Threads that have
 * not been used for sixty seconds are terminated and removed from
 * the cache. Thus, a pool that remains idle for long enough will
 * not consume any resources. Note that pools with similar
 * properties but different details (for example, timeout parameters)
 * may be created using {@link ThreadPoolExecutor} constructors.
 *
 * @return the newly created thread pool
 */
public static ExecutorService newCachedThreadPool() {
    return new ThreadPoolExecutor(0, Integer.MAX_VALUE,
                                  60L, TimeUnit.SECONDS,
                                  new SynchronousQueue<Runnable>());
}

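Characteristics

- The core thread count is 0
- The maximum thread count is Integer.MAX_VALUE
- The work queue is a SynchronousQueue
- Idle (non-core) threads are kept alive for 60 seconds

Use cases

Suited to running large numbers of short-lived tasks concurrently. When tasks are submitted faster than they are processed, every submission that finds no idle thread waiting creates a new thread. In the extreme, so many threads are created that CPU and memory are exhausted. Because threads that have been idle for 60 seconds are terminated, a CachedThreadPool that stays idle long enough consumes no resources.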
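By contrast, a cached pool grows with demand. This hypothetical sketch submits three blocking tasks. No idle thread is waiting on the SynchronousQueue, so each task gets a new thread and the queue itself stays empty.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class CachedPoolDemo {
    // Returns {corePoolSize, pool size, queue size} while 3 tasks block concurrently
    static int[] run() throws InterruptedException {
        // newCachedThreadPool returns a ThreadPoolExecutor in the JDK 8 source above
        ThreadPoolExecutor pool = (ThreadPoolExecutor) Executors.newCachedThreadPool();
        CountDownLatch release = new CountDownLatch(1);
        for (int i = 0; i < 3; i++) {
            // No idle thread is polling the SynchronousQueue, so offer fails
            // and execute falls through to addWorker for every task
            pool.execute(() -> {
                try {
                    release.await();
                } catch (InterruptedException e) {
                    Thread.currentThread().interrupt();
                }
            });
        }
        int[] snapshot = {pool.getCorePoolSize(), pool.getPoolSize(), pool.getQueue().size()};
        release.countDown();
        pool.shutdown();
        pool.awaitTermination(5, TimeUnit.SECONDS);
        return snapshot;
    }

    public static void main(String[] args) throws InterruptedException {
        int[] r = run();
        System.out.println("core=" + r[0] + " threads=" + r[1] + " queued=" + r[2]); // core=0 threads=3 queued=0
    }
}
```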

Single-thread executor (SingleThreadExecutor)

/**
 * Creates an Executor that uses a single worker thread operating
 * off an unbounded queue. (Note however that if this single
 * thread terminates due to a failure during execution prior to
 * shutdown, a new one will take its place if needed to execute
 * subsequent tasks.)  Tasks are guaranteed to execute
 * sequentially, and no more than one task will be active at any
 * given time. Unlike the otherwise equivalent
 * {@code newFixedThreadPool(1)} the returned executor is
 * guaranteed not to be reconfigurable to use additional threads.
 *
 * @return the newly created single-threaded Executor
 */
public static ExecutorService newSingleThreadExecutor() {
    return new FinalizableDelegatedExecutorService
        (new ThreadPoolExecutor(1, 1,
                                0L, TimeUnit.MILLISECONDS,
                                new LinkedBlockingQueue<Runnable>()));
}

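Characteristics

- The core thread count is 1
- The maximum thread count is also 1
- The work queue is an unbounded LinkedBlockingQueue
- keepAliveTime is 0

Use cases

Suited to scenarios in which tasks must be executed serially, one after another.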
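Two properties from the Javadoc can be checked:

- tasks run sequentially, in submission order;
- unlike newFixedThreadPool(1), the returned executor is a wrapper rather than a ThreadPoolExecutor, so it cannot be cast and reconfigured.

A sketch (class name hypothetical):

```java
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.ThreadPoolExecutor;
import java.util.concurrent.TimeUnit;

class SingleThreadDemo {
    // Tasks run one at a time, in submission order
    static List<Integer> order() throws InterruptedException {
        ExecutorService single = Executors.newSingleThreadExecutor();
        List<Integer> seen = new ArrayList<>(); // only touched by the single worker
        for (int i = 0; i < 5; i++) {
            final int n = i;
            single.execute(() -> seen.add(n));
        }
        single.shutdown();
        single.awaitTermination(5, TimeUnit.SECONDS);
        return seen;
    }

    // {single instanceof ThreadPoolExecutor, fixed(1) instanceof ThreadPoolExecutor}
    static boolean[] castable() {
        ExecutorService single = Executors.newSingleThreadExecutor();
        ExecutorService fixed1 = Executors.newFixedThreadPool(1);
        boolean[] r = {single instanceof ThreadPoolExecutor, fixed1 instanceof ThreadPoolExecutor};
        single.shutdown();
        fixed1.shutdown();
        return r;
    }

    public static void main(String[] args) throws InterruptedException {
        System.out.println(order()); // [0, 1, 2, 3, 4]
        boolean[] r = castable();
        System.out.println(r[0] + " " + r[1]); // false true
    }
}
```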

Scheduled and periodic thread pool (ScheduledThreadPool)

/**
 * Creates a thread pool that can schedule commands to run after a
 * given delay, or to execute periodically.
 * @param corePoolSize the number of threads to keep in the pool,
 * even if they are idle
 * @return a newly created scheduled thread pool
 * @throws IllegalArgumentException if {@code corePoolSize < 0}
 */
public static ScheduledExecutorService newScheduledThreadPool(int corePoolSize) {
    return new ScheduledThreadPoolExecutor(corePoolSize);
}

/**
 * Creates a new {@code ScheduledThreadPoolExecutor} with the
 * given core pool size.
 *
 * @param corePoolSize the number of threads to keep in the pool, even
 *        if they are idle, unless {@code allowCoreThreadTimeOut} is set
 * @throws IllegalArgumentException if {@code corePoolSize < 0}
 */
public ScheduledThreadPoolExecutor(int corePoolSize) {
    super(corePoolSize, Integer.MAX_VALUE, 0, NANOSECONDS,
          new DelayedWorkQueue());
}

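Characteristics

- The core thread count is the corePoolSize argument
- The maximum thread count is Integer.MAX_VALUE
- The work queue is a DelayedWorkQueue
- keepAliveTime is 0

Use cases

Scenarios that run tasks after a delay or periodically and need to limit the number of threads. Although maximumPoolSize is Integer.MAX_VALUE, DelayedWorkQueue is unbounded, so in practice the pool never grows beyond corePoolSize threads.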
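A brief usage sketch of the two kinds of scheduling follows; the class name and timings are hypothetical. It runs a one-shot Callable after a delay, and a fixed-rate task that repeats until it is cancelled.

```java
import java.util.concurrent.CountDownLatch;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.ScheduledFuture;
import java.util.concurrent.TimeUnit;

class ScheduledDemo {
    // Returns {result of the delayed task, whether the periodic task ran 3 times}
    static Object[] run() throws Exception {
        ScheduledExecutorService ses = Executors.newScheduledThreadPool(1);
        try {
            // One-shot Callable, run once after a 50 ms delay
            ScheduledFuture<String> delayed = ses.schedule(() -> "done", 50, TimeUnit.MILLISECONDS);
            // Periodic task: first run immediately, then every 20 ms until cancelled
            CountDownLatch threeRuns = new CountDownLatch(3);
            ScheduledFuture<?> periodic = ses.scheduleAtFixedRate(
                    threeRuns::countDown, 0, 20, TimeUnit.MILLISECONDS);
            boolean ranThreeTimes = threeRuns.await(5, TimeUnit.SECONDS);
            periodic.cancel(false); // periodic tasks repeat until cancelled or shutdown
            return new Object[] {delayed.get(5, TimeUnit.SECONDS), ranThreeTimes};
        } finally {
            ses.shutdown();
        }
    }

    public static void main(String[] args) throws Exception {
        Object[] r = run();
        System.out.println(r[0] + " " + r[1]); // done true
    }
}
```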