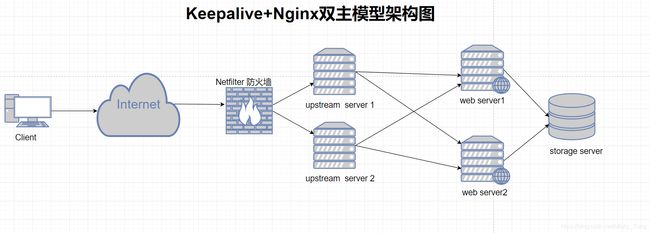

Keepalived + Nginx Dual-Master Failover Lab

II. Lab Environment

| Role | IP address |
|---|---|
| Director 1 | 192.168.126.141 |
| Director 2 | 192.168.126.139 |
| Web server1 | 192.168.126.128 |
| Web server2 | 192.168.126.138 |
| Virtual IP address 1 | 192.168.126.140 |
| Virtual IP address 2 | 192.168.126.150 |

III. Lab Configuration

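Notes: first, the two directors must be able to resolve each other's hostnames (the names must match the entries in the local hosts file), and the iptables firewall and SELinux must be disabled. Then install the keepalived and nginx packages on both directors.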
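The hostname prerequisite can be checked before going further. The following is a minimal sketch, assuming the directors are named `director1` and `director2` (as the shell prompts later in this post suggest) and a CentOS 7 style system; the helper name `hosts_has_entry` is hypothetical and not part of any package.

```shell
# hosts_has_entry FILE IP NAME
# Succeeds if the hosts-format FILE maps IP to NAME (comments are ignored).
hosts_has_entry() {
    awk -v ip="$2" -v name="$3" '
        { sub(/#.*/, "") }                 # strip trailing comments
        $1 == ip { for (i = 2; i <= NF; i++) if ($i == name) found = 1 }
        END { exit found ? 0 : 1 }
    ' "$1"
}

# Example usage on director1 (assumed hostnames; run as root):
#   hosts_has_entry /etc/hosts 192.168.126.139 director2 || echo "director2 missing from /etc/hosts"
#   systemctl stop firewalld    # assumption: firewalld manages the iptables rules on this host
#   setenforce 0                # switches SELinux to permissive mode until the next reboot
```

The function only inspects the file it is given, so it can be tried against a copy of `/etc/hosts` without touching the system.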

1) Step one: create the site content on the backend web servers

On web server1:

[root@web1 ~]# vim /var/www/html/index.html

Page from web1

[root@master ~]# systemctl restart httpd

[root@web1 ~]# ss -tunlp | grep 80    # the web service is running

tcp LISTEN 0 128 :::80 :::* users:(("httpd",6753,4),("httpd",6752,4),("httpd",6751,4),("httpd",6750,4),("httpd",6749,4),("httpd",6740,4))

[root@web1 ~]#

On web server2:

[root@db ~]# vim /var/www/html/index.html

Page from web2

[root@db ~]# service httpd restart

Stopping httpd: [ OK ]

Starting httpd: httpd: apr_sockaddr_info_get() failed for db

httpd: Could not reliably determine the server's fully qualified domain name, using 127.0.0.1 for ServerName

[ OK ]

[root@db ~]# ss -tunlp | grep 80    # the web service started normally

tcp LISTEN 0 128 :::80 :::* users:(("httpd",3213,4))

[root@db ~]#

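2) Configure nginx as the load-balancing director

Install nginx on each director and first confirm that nginx can distribute web requests to the backend servers correctly.

For compiling and installing nginx from source, see: https://blog.csdn.net/Micky_Yang/article/details/88647953

[root@director1 ~]# vim /usr/local/nginx/conf/nginx.conf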

http {

include mime.types;

default_type application/octet-stream;

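upstream webserv {    # define an upstream server group named webserv inside the http block

server 192.168.126.128:80 weight=1;    # backend server address, weight 1

server 192.168.126.138:80 weight=1;    # backend server address, also weight 1, so requests are distributed round-robin

}

location / {

proxy_pass http://webserv;    # in the server block, a location that forwards every request for local resources to the webserv upstream group

}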
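The excerpt above shows only the lines that were changed and omits the enclosing `server` block. As a rough sketch of how the pieces fit together, the function below writes a complete minimal configuration to a file of your choice. The `worker_processes`, `events`, `listen 80` and `server_name localhost` values are assumptions borrowed from nginx's stock sample configuration, not settings taken from this lab, and the function name `write_lb_conf` is hypothetical.

```shell
# write_lb_conf FILE
# Writes a minimal load-balancing nginx.conf to FILE.
write_lb_conf() {
    cat > "$1" <<'EOF'
worker_processes  1;            # assumed value
events {
    worker_connections  1024;   # assumed value
}
http {
    include       mime.types;
    default_type  application/octet-stream;

    # Backend pool; equal weights give plain round-robin.
    upstream webserv {
        server 192.168.126.128:80 weight=1;
        server 192.168.126.138:80 weight=1;
    }

    server {
        listen       80;            # assumed listen port
        server_name  localhost;     # assumed server name
        location / {
            proxy_pass http://webserv;
        }
    }
}
EOF
}

# Example usage: write next to the real config so that "include mime.types" resolves,
# then let nginx validate the file before putting it into service:
#   write_lb_conf /usr/local/nginx/conf/nginx.conf.new
#   /usr/local/nginx/sbin/nginx -t -c /usr/local/nginx/conf/nginx.conf.new
```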

# Copy this configuration file to director2, then start nginx on both directors

[root@director1 ~]# scp /usr/local/nginx/conf/nginx.conf director2:/usr/local/nginx/conf/nginx.conf

nginx.conf 100% 2829 1.2MB/s 00:00

[root@director1 ~]#

[root@director1 ~]# /usr/local/nginx/sbin/nginx

[root@director1 ~]# ss -tunlp | grep 80

tcp LISTEN 0 128 *:80 *:* users:(("nginx",pid=79475,fd=6),("nginx",pid=79474,fd=6))

[root@director1 ~]#

[root@director1 ~]# curl 192.168.126.141

Page from web1

[root@director1 ~]# curl 192.168.126.141

Page from web2

[root@director1 ~]# curl 192.168.126.141

Page from web1

[root@director1 ~]# curl 192.168.126.141

Page from web2

# Director 1 is now load-balancing across both backends

[root@director1 ~]#

Director 2 configuration:

[root@director2 ~]# /usr/local/nginx/sbin/nginx

[root@director2 ~]# ss -tunlp | grep 80

tcp LISTEN 0 128 *:80 *:* users:(("nginx",pid=77953,fd=6),("nginx",pid=77952,fd=6))

[root@director2 ~]#

[root@director2 ~]# curl 192.168.126.139

Page from web1

[root@director2 ~]# curl 192.168.126.139

Page from web2

[root@director2 ~]# curl 192.168.126.139

Page from web1

[root@director2 ~]# curl 192.168.126.139

Page from web2

# Director 2 is now load-balancing across both backends as well

[root@director2 ~]#
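Reading alternating `curl` output by eye works for four requests, but a tally is easier to check for larger samples. The helper below is a small sketch (the name `tally_backends` is made up for this example) that counts how many times each distinct response body appears on standard input.

```shell
# tally_backends
# Reads one response body per line on stdin and prints "<count> <body>" per distinct body.
tally_backends() {
    sort | uniq -c | awk '{ n = $1; $1 = ""; sub(/^ /, ""); print n, $0 }'
}

# Example usage against director 1:
#   for i in 1 2 3 4 5 6 7 8; do curl -s http://192.168.126.141/; done | tally_backends
```

With equal weights the two counts should be the same, or differ by one when the number of requests is odd.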

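3) Configure keepalived and add the virtual IPs so that the two directors act as master and backup for each other

Director 1 configuration: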

! Configuration File for keepalived

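global_defs {    # global configuration section

notification_email {

root@localhost    # recipient address for notification mail

}

notification_email_from [email protected]    # sender address; can be set to anything

smtp_server 127.0.0.1    # mail server address; the local host is fine

smtp_connect_timeout 30    # timeout for connecting to the mail server

router_id LVS_DEVEL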

}

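vrrp_script chk_mt {    # manual switchover check: fails while /etc/keepalived/down exists (defined here, but not referenced by any track_script in this configuration)

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1

weight -2

}

vrrp_script chk_nginx {    # checks whether nginx is running; if it is not, this node's priority is reduced by 10

script "killall -0 nginx &> /dev/null"

interval 1

weight -10

}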

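vrrp_instance VI_1 {    # first virtual router instance

state MASTER    # initial state: master

interface ens33    # name of the network interface that carries the virtual address

virtual_router_id 51    # virtual router ID; both ends of the same instance must use the same ID

priority 100    # priority; the higher the number, the higher the priority

advert_int 1    # VRRP advertisement interval, in seconds

authentication {    # authentication settings

auth_type PASS    # simple password authentication

auth_pass 1111    # authentication password

}

virtual_ipaddress {

192.168.126.140    # virtual IP address

}

track_script {

chk_nginx    # use the nginx status check defined above

}

}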

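vrrp_instance VI_2 {    # second virtual router instance

state BACKUP    # the directors back each other up, so the second instance starts as backup on this node

interface ens33    # name of the network interface that carries the virtual address

virtual_router_id 61    # virtual router ID; both ends of the same instance must use the same ID

priority 99    # priority

advert_int 1    # VRRP advertisement interval, in seconds

authentication {    # authentication settings

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.126.150    # virtual IP address of the second instance

}

track_script {

chk_nginx    # use the same nginx health check

}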

}
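The `chk_nginx` check above relies on `killall -0 nginx`. As an alternative sketch, not what this lab uses, the check could read the nginx PID file instead. The path `/usr/local/nginx/logs/nginx.pid` is an assumption based on the default layout of a source build under `/usr/local/nginx`, and both the function name `pid_alive` and the script path mentioned afterwards are hypothetical.

```shell
# pid_alive PIDFILE
# Succeeds if PIDFILE contains the PID of a process that currently exists.
# Note: kill -0 needs permission to signal the process, so run this as root.
pid_alive() {
    local pidfile=$1 pid
    [ -r "$pidfile" ] || return 1
    # read returns non-zero on a file without a trailing newline, so also accept a non-empty value
    read -r pid < "$pidfile" || [ -n "$pid" ] || return 1
    case $pid in
        ''|*[!0-9]*) return 1 ;;    # empty or non-numeric content
    esac
    kill -0 "$pid" 2>/dev/null      # signal 0 only tests for existence
}

# Example usage (assumed PID file location):
#   pid_alive /usr/local/nginx/logs/nginx.pid && echo "nginx is running"
```

Saved as a script, for example `/etc/keepalived/chk_nginx.sh`, that ends with the `pid_alive` call, it could be referenced from the `script` line of a `vrrp_script` block in place of the `killall` command.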

Director 2 configuration:

! Configuration File for keepalived

global_defs {

notification_email {

root@localhost

}

notification_email_from [email protected]

smtp_server 127.0.0.1

smtp_connect_timeout 30

router_id LVS_DEVEL

}

vrrp_script chk_mt {

script "[[ -f /etc/keepalived/down ]] && exit 1 || exit 0"

interval 1

weight -2

}

vrrp_script chk_nginx {

script "killall -0 nginx &> /dev/null"

interval 1

weight -10

}

vrrp_instance VI_1 {

state BACKUP    # initial state: backup for virtual router 1

interface eno16777736

virtual_router_id 51

priority 99    # must be lower than the priority of virtual router 1 on director 1

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

192.168.126.140    # virtual IP address associated with virtual router 1

}

track_script {

chk_nginx

}

}

vrrp_instance VI_2 {

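state MASTER    # in a dual-master setup this node is master for virtual router 2 and serves its virtual IP for load balancing

interface eno16777736    # name of the network interface that carries the virtual address

virtual_router_id 61

priority 100    # must be higher than the priority of virtual router 2 on director 1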

advert_int 1

authentication {

auth_type PASS

auth_pass 2222

}

virtual_ipaddress {

192.168.126.150    # virtual IP address of virtual router instance 2

}

track_script {

chk_nginx    # both instances use the same nginx health check

}

}
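Failover depends on how `weight` changes a node's priority. In keepalived, a negative weight is added to the priority while the tracked script fails, and a positive weight is added while it succeeds. The sketch below reproduces that arithmetic for the values used here; the function name `effective_priority` is made up for illustration and is not a keepalived command.

```shell
# effective_priority BASE WEIGHT CHECK_OK
# Prints the priority a VRRP instance advertises, given its base priority,
# the tracked script's weight, and whether the check passed (1) or failed (0).
effective_priority() {
    local base=$1 weight=$2 ok=$3
    if [ "$weight" -lt 0 ] && [ "$ok" -eq 0 ]; then
        echo $(( base + weight ))    # negative weight applies on failure
    elif [ "$weight" -gt 0 ] && [ "$ok" -eq 1 ]; then
        echo $(( base + weight ))    # positive weight applies on success
    else
        echo "$base"
    fi
}

# VI_1 when nginx dies on director 1:
#   director 1: effective_priority 100 -10 0   -> 90
#   director 2: effective_priority 99 -10 1    -> 99
```

Because 90 is below 99, director 2 wins the election for `VI_1` and takes over 192.168.126.140. The gap between the two base priorities (1) has to stay smaller than the weight (10) for the switchover to happen.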

4) Start the services and access them through the virtual IP addresses

Director 1:

[root@director1 ~]# systemctl restart keepalived

[root@director1 ~]# ip addr list

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:8f:12:c4 brd ff:ff:ff:ff:ff:ff

inet 192.168.126.141/24 brd 192.168.126.255 scope global noprefixroute dynamic ens33

valid_lft 1014sec preferred_lft 1014sec

inet 192.168.126.140/32 scope global ens33    # the first virtual IP address is now on director 1

valid_lft forever preferred_lft forever

inet6 fe80::1729:4a5d:513a:e120/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@director1 ~]# curl 192.168.126.141

Page from web1

[root@director1 ~]# curl 192.168.126.141

Page from web2

[root@director1 ~]# curl 192.168.126.141

Page from web1

[root@director1 ~]# curl 192.168.126.141

Page from web2

# load balancing works without any problems

[root@director1 ~]#

Director 2:

[root@director2 ~]# systemctl restart keepalived

[root@director2 ~]# ip addr list

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:60:18:fd brd ff:ff:ff:ff:ff:ff

inet 192.168.126.139/24 brd 192.168.126.255 scope global noprefixroute dynamic eno16777736

valid_lft 1490sec preferred_lft 1490sec

inet 192.168.126.150/32 scope global eno16777736    # the second virtual IP address is now on director 2

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe60:18fd/64 scope link noprefixroute

valid_lft forever preferred_lft forever

[root@director2 ~]# curl 192.168.126.150

Page from web1

[root@director2 ~]# curl 192.168.126.150

Page from web2

[root@director2 ~]# curl 192.168.126.150

Page from web1

[root@director2 ~]# curl 192.168.126.150

Page from web2

# director 2 can now also provide load balancing through its virtual IP address

[root@director2 ~]#
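Instead of scanning the `ip addr` listing by eye, the presence of a virtual IP can be tested directly. The helper below is a small sketch (the name `has_vip` is hypothetical) that reads `ip addr` style output on standard input and reports whether a given address is assigned.

```shell
# has_vip VIP
# Reads "ip addr" output on stdin; succeeds if VIP appears as an assigned IPv4 address.
has_vip() {
    # -F treats the dots literally; the trailing "/" prevents prefix matches
    # such as 192.168.126.14 matching 192.168.126.140.
    grep -qF "inet $1/"
}

# Example usage:
#   ip -4 addr show | has_vip 192.168.126.140 && echo "this node holds VIP 1"
#   ip -4 addr show | has_vip 192.168.126.150 && echo "this node holds VIP 2"
```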

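5) Simulate a failure and check whether the virtual IP address moves

First stop the nginx load-balancing service on director 1, then check whether virtual IP address 1 moves to director 2.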

[root@director1 ~]# /usr/local/nginx/sbin/nginx -s stop

[root@director1 ~]#

[root@director1 ~]# ip addr list

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: ens33: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:8f:12:c4 brd ff:ff:ff:ff:ff:ff

inet 192.168.126.141/24 brd 192.168.126.255 scope global noprefixroute dynamic ens33

valid_lft 1396sec preferred_lft 1396sec    # virtual IP address 1 is no longer present

inet6 fe80::1729:4a5d:513a:e120/64 scope link noprefixroute

valid_lft forever preferred_lft forever

3: ens36: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:8f:12:ce brd ff:ff:ff:ff:ff:ff

[root@director1 ~]#

On director 2, check whether virtual IP address 1 has moved over from director 1:

[root@director2 ~]# ip addr list

1: lo: mtu 65536 qdisc noqueue state UNKNOWN group default qlen 1000

link/loopback 00:00:00:00:00:00 brd 00:00:00:00:00:00

inet 127.0.0.1/8 scope host lo

valid_lft forever preferred_lft forever

inet6 ::1/128 scope host

valid_lft forever preferred_lft forever

2: eno16777736: mtu 1500 qdisc pfifo_fast state UP group default qlen 1000

link/ether 00:0c:29:60:18:fd brd ff:ff:ff:ff:ff:ff

inet 192.168.126.139/24 brd 192.168.126.255 scope global noprefixroute dynamic eno16777736

valid_lft 1122sec preferred_lft 1122sec

inet 192.168.126.150/32 scope global eno16777736

valid_lft forever preferred_lft forever

inet 192.168.126.140/32 scope global eno16777736    # the first virtual IP address is now here

valid_lft forever preferred_lft forever

inet6 fe80::20c:29ff:fe60:18fd/64 scope link noprefixroute

valid_lft forever preferred_lft forever

Next, check that web requests through both virtual IP addresses still work:

[root@director2 ~]# curl 192.168.126.140

Page from web1

[root@director2 ~]# curl 192.168.126.140

Page from web2

[root@director2 ~]#

[root@director2 ~]#

[root@director2 ~]#

[root@director2 ~]# curl 192.168.126.150

Page from web1

[root@director2 ~]# curl 192.168.126.150

Page from web2

[root@director2 ~]# curl 192.168.126.150

Page from web1

[root@director2 ~]# curl 192.168.126.150

Page from web2

# no problems: director 2 has taken over both virtual IP addresses and provides the load-balancing service

[root@director2 ~]#
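The switchover is not instantaneous, since the health check and the VRRP advertisements both run on a one-second interval. When scripting this test it helps to retry for a few seconds rather than probe once. The following is a generic retry helper, offered as a sketch; the name `wait_until` and the retry counts in the usage lines are arbitrary choices.

```shell
# wait_until RETRIES DELAY CMD [ARGS...]
# Runs CMD until it succeeds, at most RETRIES times, sleeping DELAY seconds between attempts.
wait_until() {
    local retries=$1 delay=$2 i=0
    shift 2
    while [ "$i" -lt "$retries" ]; do
        "$@" && return 0
        sleep "$delay"
        i=$(( i + 1 ))
    done
    return 1
}

# Example usage after stopping nginx on director 1:
#   wait_until 10 1 curl -fsS -o /dev/null http://192.168.126.140/ \
#       && echo "VIP 1 is being served again"
```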

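Result: with the setup above, the two directors achieve automatic dual-master failover and provide a highly available load-balancing service. Whichever director fails, the virtual IP address it was serving moves to the other director, so the service stays available.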