Hive job tuning: Current usage: 2.0 GB of 2 GB physical memory used; 4.0 GB of 16.2 GB virtual memory used.

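Contents

Error background

Locating the error message

Client-side log

Application log

Per-task map and reduce error log

Error analysis

Solutions

1. Disable the virtual memory check (not recommended)

2. Increase mapreduce.map.memory.mb or mapreduce.reduce.memory.mb (recommended)

3. Moderately increase yarn.nodemanager.vmem-pmem-ratio

4. Switch to a Spark SQL job (pretty slick, strongly recommended)

Summary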

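Error background

The job most likely exceeded the memory configured for its map and reduce tasks, which caused it to fail. I had written an HQL statement, ran it on the big data platform, and got an error.

Locating the error message

Client-side log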

INFO : converting to local hdfs://hacluster/tenant/yxs/product/resources/resources/jar/f3c06465-4af1-4756-894e-ce74ec11b9c3.jar

INFO : Added [/opt/huawei/Bigdata/tmp/hivelocaltmp/session_resources/2d0a2efc-776c-4ccc-957d-927079862ab2_resources/f3c06465-4af1-4756-894e-ce74ec11b9c3.jar] to class path

INFO : Added resources: [hdfs://hacluster/tenant/yxs/product/resources/resources/jar/f3c06465-4af1-4756-894e-ce74ec11b9c3.jar]

INFO : Number of reduce tasks not specified. Estimated from input data size: 2

INFO : In order to change the average load for a reducer (in bytes):

INFO : set hive.exec.reducers.bytes.per.reducer=

INFO : In order to limit the maximum number of reducers:

INFO : set hive.exec.reducers.max=

INFO : In order to set a constant number of reducers:

INFO : set mapreduce.job.reduces=

INFO : number of splits:10

INFO : Submitting tokens for job: job_1567609664100_85580

INFO : Kind: HDFS_DELEGATION_TOKEN, Service: ha-hdfs:hacluster

INFO : Kind: HIVE_DELEGATION_TOKEN, Service: HiveServer2ImpersonationToken

INFO : The url to track the job: https://yiclouddata03-szzb:26001/proxy/application_1567609664100_85580/

INFO : Starting Job = job_1567609664100_85580, Tracking URL = https://yiclouddata03-szzb:26001/proxy/application_1567609664100_85580/

INFO : Kill Command = /opt/huawei/Bigdata/FusionInsight_HD_V100R002C80SPC203/install/FusionInsight-Hive-1.3.0/hive-1.3.0/bin/..//../hadoop/bin/hadoop job -kill job_1567609664100_85580

INFO : Hadoop job information for Stage-6: number of mappers: 10; number of reducers: 2

INFO : 2019-09-24 16:16:17,686 Stage-6 map = 0%, reduce = 0%

INFO : 2019-09-24 16:16:27,299 Stage-6 map = 20%, reduce = 0%, Cumulative CPU 10.12 sec

INFO : 2019-09-24 16:16:28,474 Stage-6 map = 30%, reduce = 0%, Cumulative CPU 30.4 sec

INFO : 2019-09-24 16:16:29,664 Stage-6 map = 70%, reduce = 0%, Cumulative CPU 83.44 sec

INFO : 2019-09-24 16:16:30,841 Stage-6 map = 90%, reduce = 0%, Cumulative CPU 115.79 sec

INFO : 2019-09-24 16:16:32,004 Stage-6 map = 91%, reduce = 0%, Cumulative CPU 134.73 sec

INFO : 2019-09-24 16:16:44,928 Stage-6 map = 92%, reduce = 0%, Cumulative CPU 223.25 sec

INFO : 2019-09-24 16:16:55,613 Stage-6 map = 93%, reduce = 0%, Cumulative CPU 284.27 sec

INFO : 2019-09-24 16:17:03,797 Stage-6 map = 94%, reduce = 0%, Cumulative CPU 313.69 sec

INFO : 2019-09-24 16:17:11,881 Stage-6 map = 90%, reduce = 0%, Cumulative CPU 115.79 sec

INFO : 2019-09-24 16:18:12,546 Stage-6 map = 90%, reduce = 0%, Cumulative CPU 115.79 sec

INFO : 2019-09-24 16:19:04,473 Stage-6 map = 91%, reduce = 0%, Cumulative CPU 185.47 sec

INFO : 2019-09-24 16:19:13,683 Stage-6 map = 92%, reduce = 0%, Cumulative CPU 223.35 sec

INFO : 2019-09-24 16:19:22,825 Stage-6 map = 93%, reduce = 0%, Cumulative CPU 281.97 sec

INFO : 2019-09-24 16:19:32,053 Stage-6 map = 94%, reduce = 0%, Cumulative CPU 314.97 sec

INFO : 2019-09-24 16:19:54,143 Stage-6 map = 95%, reduce = 0%, Cumulative CPU 377.36 sec

INFO : 2019-09-24 16:19:56,520 Stage-6 map = 90%, reduce = 0%, Cumulative CPU 115.79 sec

INFO : 2019-09-24 16:20:09,338 Stage-6 map = 91%, reduce = 0%, Cumulative CPU 181.59 sec

INFO : 2019-09-24 16:20:18,574 Stage-6 map = 92%, reduce = 0%, Cumulative CPU 217.27 sec

INFO : 2019-09-24 16:20:27,772 Stage-6 map = 93%, reduce = 0%, Cumulative CPU 266.25 sec

INFO : 2019-09-24 16:20:40,439 Stage-6 map = 94%, reduce = 0%, Cumulative CPU 305.32 sec

INFO : 2019-09-24 16:20:57,751 Stage-6 map = 90%, reduce = 0%, Cumulative CPU 115.79 sec

INFO : 2019-09-24 16:21:11,624 Stage-6 map = 91%, reduce = 0%, Cumulative CPU 183.87 sec

INFO : 2019-09-24 16:21:20,948 Stage-6 map = 92%, reduce = 0%, Cumulative CPU 219.12 sec

INFO : 2019-09-24 16:21:31,427 Stage-6 map = 93%, reduce = 0%, Cumulative CPU 282.71 sec

INFO : 2019-09-24 16:21:39,754 Stage-6 map = 94%, reduce = 0%, Cumulative CPU 317.99 sec

INFO : 2019-09-24 16:21:45,519 Stage-6 map = 100%, reduce = 100%, Cumulative CPU 115.79 sec

INFO : MapReduce Total cumulative CPU time: 1 minutes 55 seconds 790 msec

ERROR : Ended Job = job_1567609664100_85580 with errors

Task-T_6260893799950704_20190924161555945_1_1 failed. Failure reason: java.sql.SQLException: Error while processing statement: FAILED: Execution Error, return code 2 from org.apache.hadoop.hive.ql.exec.mr.MapRedTask

at org.apache.hive.jdbc.HiveStatement.execute(HiveStatement.java:283)

at org.apache.hive.jdbc.HiveStatement.executeQuery(HiveStatement.java:379)

at com.dtwave.dipper.dubhe.node.executor.runner.impl.Hive2TaskRunner.doRun(Hive2TaskRunner.java:244)

at com.dtwave.dipper.dubhe.node.executor.runner.BasicTaskRunner.execute(BasicTaskRunner.java:100)

at com.dtwave.dipper.dubhe.node.executor.TaskExecutor.run(TaskExecutor.java:32)

at java.util.concurrent.Executors$RunnableAdapter.call(Executors.java:511)

at java.util.concurrent.FutureTask.run(FutureTask.java:266)

at java.util.concurrent.ThreadPoolExecutor.runWorker(ThreadPoolExecutor.java:1149)

at java.util.concurrent.ThreadPoolExecutor$Worker.run(ThreadPoolExecutor.java:624)

at java.lang.Thread.run(Thread.java:748)

Task run failed (Failed)

Baffled after reading that error? Staring blankly and questioning your life choices? Ha ha...
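The client log does carry one clue: the map progress climbs to 94% or 95% and then falls back to 90% several times. The following is a minimal sketch that scans progress lines for such drops. The sample lines are a selection copied from the log above; the function name `find_map_regressions` and the regular expression are illustrative assumptions, not part of Hive or Hadoop.

```python
import re

# A selection of progress lines copied from the client log above.
client_log = """\
INFO : 2019-09-24 16:17:03,797 Stage-6 map = 94%, reduce = 0%, Cumulative CPU 313.69 sec
INFO : 2019-09-24 16:17:11,881 Stage-6 map = 90%, reduce = 0%, Cumulative CPU 115.79 sec
INFO : 2019-09-24 16:19:54,143 Stage-6 map = 95%, reduce = 0%, Cumulative CPU 377.36 sec
INFO : 2019-09-24 16:19:56,520 Stage-6 map = 90%, reduce = 0%, Cumulative CPU 115.79 sec
INFO : 2019-09-24 16:20:40,439 Stage-6 map = 94%, reduce = 0%, Cumulative CPU 305.32 sec
INFO : 2019-09-24 16:20:57,751 Stage-6 map = 90%, reduce = 0%, Cumulative CPU 115.79 sec
"""

# Captures the timestamp (without milliseconds) and the map percentage.
PROGRESS = re.compile(r"(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}),\d+ \S+ map = (\d+)%")


def find_map_regressions(log_text):
    """Return (timestamp, previous_pct, new_pct) for every drop in map progress."""
    regressions = []
    previous = None
    for line in log_text.splitlines():
        match = PROGRESS.search(line)
        if not match:
            continue
        timestamp, pct = match.group(1), int(match.group(2))
        if previous is not None and pct < previous:
            regressions.append((timestamp, previous, pct))
        previous = pct
    return regressions


drops = find_map_regressions(client_log)
for timestamp, before, after in drops:
    print(f"{timestamp}: map progress fell from {before}% to {after}%")
```

On the full client log this finds three drops, followed by the final failure at 16:21:45. That pattern is consistent with one map task attempt failing and being retried, and it would match Hadoop's default of 4 attempts (`mapreduce.map.maxattempts`), although the cluster's actual setting is not shown here. The first drop, at 16:17:11, lines up with the container kill recorded at 16:17:11 in the application log further down.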

Application log

What error can you actually make out from that? You need to go into YARN and look at the application's run log, shown below:

2019-09-24 16:16:27,712 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Num completed Tasks: 3

2019-09-24 16:16:27,712 INFO [ContainerLauncher #2] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_CLEANUP for container container_e29_1567609664100_85580_01_000011 taskAttempt attempt_1567609664100_85580_m_000009_0

2019-09-24 16:16:27,713 INFO [ContainerLauncher #2] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: KILLING attempt_1567609664100_85580_m_000009_0

2019-09-24 16:16:27,713 INFO [ContainerLauncher #2] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : yiclouddata04-SZZB:26009

2019-09-24 16:16:27,997 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Before Scheduling: PendingReds:2 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:10 AssignedReds:0 CompletedMaps:3 CompletedReds:0 ContAlloc:10 ContRel:0 HostLocal:8 RackLocal:1

2019-09-24 16:16:28,005 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e29_1567609664100_85580_01_000009

2019-09-24 16:16:28,006 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e29_1567609664100_85580_01_000011

2019-09-24 16:16:28,006 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e29_1567609664100_85580_01_000003

2019-09-24 16:16:28,006 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:16:28,006 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:16:28,006 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:2 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:7 AssignedReds:0 CompletedMaps:3 CompletedReds:0 ContAlloc:10 ContRel:0 HostLocal:8 RackLocal:1

2019-09-24 16:16:28,006 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1567609664100_85580_m_000008_0: Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

2019-09-24 16:16:28,006 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1567609664100_85580_m_000009_0: Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

2019-09-24 16:16:28,006 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1567609664100_85580_m_000007_0: Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

2019-09-24 16:16:28,557 INFO [IPC Server handler 7 on 27102] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Done acknowledgement from attempt_1567609664100_85580_m_000006_0

2019-09-24 16:16:28,558 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Task Attempt attempt_1567609664100_85580_m_000006_0 finished. Firing CONTAINER_AVAILABLE_FOR_REUSE event to ContainerAllocator

2019-09-24 16:16:28,558 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1567609664100_85580_m_000006_0 TaskAttempt Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:28,558 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: Task succeeded with attempt attempt_1567609664100_85580_m_000006_0

2019-09-24 16:16:28,558 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1567609664100_85580_m_000006 Task Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:28,559 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Num completed Tasks: 4

2019-09-24 16:16:28,560 INFO [ContainerLauncher #5] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_CLEANUP for container container_e29_1567609664100_85580_01_000007 taskAttempt attempt_1567609664100_85580_m_000006_0

2019-09-24 16:16:28,560 INFO [ContainerLauncher #5] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: KILLING attempt_1567609664100_85580_m_000006_0

2019-09-24 16:16:28,560 INFO [ContainerLauncher #5] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : yiclouddata05-SZZB:26009

2019-09-24 16:16:28,851 INFO [IPC Server handler 10 on 27102] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Done acknowledgement from attempt_1567609664100_85580_m_000005_0

2019-09-24 16:16:28,852 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Task Attempt attempt_1567609664100_85580_m_000005_0 finished. Firing CONTAINER_AVAILABLE_FOR_REUSE event to ContainerAllocator

2019-09-24 16:16:28,852 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1567609664100_85580_m_000005_0 TaskAttempt Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:28,852 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: Task succeeded with attempt attempt_1567609664100_85580_m_000005_0

2019-09-24 16:16:28,852 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1567609664100_85580_m_000005 Task Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:28,853 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Num completed Tasks: 5

2019-09-24 16:16:28,856 INFO [ContainerLauncher #8] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_CLEANUP for container container_e29_1567609664100_85580_01_000008 taskAttempt attempt_1567609664100_85580_m_000005_0

2019-09-24 16:16:28,856 INFO [ContainerLauncher #8] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: KILLING attempt_1567609664100_85580_m_000005_0

2019-09-24 16:16:28,856 INFO [ContainerLauncher #8] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : yiclouddata16-SZZB:26009

2019-09-24 16:16:28,986 INFO [IPC Server handler 16 on 27102] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Done acknowledgement from attempt_1567609664100_85580_m_000004_0

2019-09-24 16:16:28,987 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Task Attempt attempt_1567609664100_85580_m_000004_0 finished. Firing CONTAINER_AVAILABLE_FOR_REUSE event to ContainerAllocator

2019-09-24 16:16:28,987 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1567609664100_85580_m_000004_0 TaskAttempt Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:28,987 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: Task succeeded with attempt attempt_1567609664100_85580_m_000004_0

2019-09-24 16:16:28,988 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1567609664100_85580_m_000004 Task Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:28,989 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Num completed Tasks: 6

2019-09-24 16:16:28,989 INFO [ContainerLauncher #6] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_CLEANUP for container container_e29_1567609664100_85580_01_000005 taskAttempt attempt_1567609664100_85580_m_000004_0

2019-09-24 16:16:28,990 INFO [ContainerLauncher #6] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: KILLING attempt_1567609664100_85580_m_000004_0

2019-09-24 16:16:28,990 INFO [ContainerLauncher #6] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : yiclouddata10-SZZB:26009

2019-09-24 16:16:29,006 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Before Scheduling: PendingReds:2 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:7 AssignedReds:0 CompletedMaps:6 CompletedReds:0 ContAlloc:10 ContRel:0 HostLocal:8 RackLocal:1

2019-09-24 16:16:29,008 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e29_1567609664100_85580_01_000008

2019-09-24 16:16:29,009 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e29_1567609664100_85580_01_000007

2019-09-24 16:16:29,009 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1567609664100_85580_m_000005_0: Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

2019-09-24 16:16:29,009 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:16:29,009 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:16:29,009 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1567609664100_85580_m_000006_0: Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

2019-09-24 16:16:29,009 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:2 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:5 AssignedReds:0 CompletedMaps:6 CompletedReds:0 ContAlloc:10 ContRel:0 HostLocal:8 RackLocal:1

2019-09-24 16:16:29,582 INFO [IPC Server handler 12 on 27102] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Done acknowledgement from attempt_1567609664100_85580_m_000002_0

2019-09-24 16:16:29,584 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Task Attempt attempt_1567609664100_85580_m_000002_0 finished. Firing CONTAINER_AVAILABLE_FOR_REUSE event to ContainerAllocator

2019-09-24 16:16:29,584 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1567609664100_85580_m_000002_0 TaskAttempt Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:29,584 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: Task succeeded with attempt attempt_1567609664100_85580_m_000002_0

2019-09-24 16:16:29,584 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1567609664100_85580_m_000002 Task Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:29,584 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Num completed Tasks: 7

2019-09-24 16:16:29,585 INFO [ContainerLauncher #4] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_CLEANUP for container container_e29_1567609664100_85580_01_000010 taskAttempt attempt_1567609664100_85580_m_000002_0

2019-09-24 16:16:29,586 INFO [ContainerLauncher #4] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: KILLING attempt_1567609664100_85580_m_000002_0

2019-09-24 16:16:29,586 INFO [ContainerLauncher #4] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : yiclouddata14-SZZB:26009

2019-09-24 16:16:30,009 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Before Scheduling: PendingReds:2 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:5 AssignedReds:0 CompletedMaps:7 CompletedReds:0 ContAlloc:10 ContRel:0 HostLocal:8 RackLocal:1

2019-09-24 16:16:30,013 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e29_1567609664100_85580_01_000010

2019-09-24 16:16:30,013 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e29_1567609664100_85580_01_000005

2019-09-24 16:16:30,013 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1567609664100_85580_m_000002_0: Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

2019-09-24 16:16:30,013 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:16:30,013 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:16:30,013 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:2 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:3 AssignedReds:0 CompletedMaps:7 CompletedReds:0 ContAlloc:10 ContRel:0 HostLocal:8 RackLocal:1

2019-09-24 16:16:30,013 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1567609664100_85580_m_000004_0: Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

2019-09-24 16:16:30,416 INFO [IPC Server handler 6 on 27102] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Done acknowledgement from attempt_1567609664100_85580_m_000001_0

2019-09-24 16:16:30,417 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Task Attempt attempt_1567609664100_85580_m_000001_0 finished. Firing CONTAINER_AVAILABLE_FOR_REUSE event to ContainerAllocator

2019-09-24 16:16:30,417 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1567609664100_85580_m_000001_0 TaskAttempt Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:30,417 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: Task succeeded with attempt attempt_1567609664100_85580_m_000001_0

2019-09-24 16:16:30,418 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1567609664100_85580_m_000001 Task Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:30,418 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Num completed Tasks: 8

2019-09-24 16:16:30,419 INFO [ContainerLauncher #3] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_CLEANUP for container container_e29_1567609664100_85580_01_000004 taskAttempt attempt_1567609664100_85580_m_000001_0

2019-09-24 16:16:30,419 INFO [ContainerLauncher #3] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: KILLING attempt_1567609664100_85580_m_000001_0

2019-09-24 16:16:30,419 INFO [ContainerLauncher #3] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : yiclouddata12-SZZB:26009

2019-09-24 16:16:30,440 INFO [IPC Server handler 7 on 27102] org.apache.hadoop.mapred.TaskAttemptListenerImpl: Done acknowledgement from attempt_1567609664100_85580_m_000003_0

2019-09-24 16:16:30,442 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Task Attempt attempt_1567609664100_85580_m_000003_0 finished. Firing CONTAINER_AVAILABLE_FOR_REUSE event to ContainerAllocator

2019-09-24 16:16:30,442 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: attempt_1567609664100_85580_m_000003_0 TaskAttempt Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:30,442 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: Task succeeded with attempt attempt_1567609664100_85580_m_000003_0

2019-09-24 16:16:30,442 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskImpl: task_1567609664100_85580_m_000003 Task Transitioned from RUNNING to SUCCEEDED

2019-09-24 16:16:30,442 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.JobImpl: Num completed Tasks: 9

2019-09-24 16:16:30,443 INFO [ContainerLauncher #7] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: Processing the event EventType: CONTAINER_REMOTE_CLEANUP for container container_e29_1567609664100_85580_01_000002 taskAttempt attempt_1567609664100_85580_m_000003_0

2019-09-24 16:16:30,446 INFO [ContainerLauncher #7] org.apache.hadoop.mapreduce.v2.app.launcher.ContainerLauncherImpl: KILLING attempt_1567609664100_85580_m_000003_0

2019-09-24 16:16:30,447 INFO [ContainerLauncher #7] org.apache.hadoop.yarn.client.api.impl.ContainerManagementProtocolProxy: Opening proxy : yiclouddata11-SZZB:26009

2019-09-24 16:16:30,556 INFO [IPC Server handler 8 on 27102] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID : jvm_1567609664100_85580_m_31885837205506 asked for a task

2019-09-24 16:16:30,556 INFO [IPC Server handler 8 on 27102] org.apache.hadoop.mapred.TaskAttemptListenerImpl: JVM with ID: jvm_1567609664100_85580_m_31885837205506 is invalid and will be killed.

2019-09-24 16:16:31,013 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Before Scheduling: PendingReds:2 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:3 AssignedReds:0 CompletedMaps:9 CompletedReds:0 ContAlloc:10 ContRel:0 HostLocal:8 RackLocal:1

2019-09-24 16:16:31,017 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e29_1567609664100_85580_01_000004

2019-09-24 16:16:31,017 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e29_1567609664100_85580_01_000002

2019-09-24 16:16:31,017 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:16:31,017 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1567609664100_85580_m_000001_0: Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

2019-09-24 16:16:31,017 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:16:31,017 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:2 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:1 AssignedReds:0 CompletedMaps:9 CompletedReds:0 ContAlloc:10 ContRel:0 HostLocal:8 RackLocal:1

2019-09-24 16:16:31,017 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1567609664100_85580_m_000003_0: Container killed by the ApplicationMaster.

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

2019-09-24 16:16:34,026 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:16:34,026 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:16:36,032 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:16:36,032 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:16:47,061 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:16:47,061 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:16:58,089 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:16:58,090 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:16:59,092 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:16:59,092 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:17:06,109 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:17:06,109 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:17:08,113 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:17:08,113 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:17:09,115 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:17:09,115 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:17:10,117 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:17:10,117 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:17:11,121 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Received completed container container_e29_1567609664100_85580_01_000006

2019-09-24 16:17:11,122 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Recalculating schedule, headroom=

2019-09-24 16:17:11,122 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: Reduce slow start threshold not met. completedMapsForReduceSlowstart 10

2019-09-24 16:17:11,122 INFO [RMCommunicator Allocator] org.apache.hadoop.mapreduce.v2.app.rm.RMContainerAllocator: After Scheduling: PendingReds:2 ScheduledMaps:0 ScheduledReds:0 AssignedMaps:0 AssignedReds:0 CompletedMaps:9 CompletedReds:0 ContAlloc:10 ContRel:0 HostLocal:8 RackLocal:1

2019-09-24 16:17:11,122 INFO [AsyncDispatcher event handler] org.apache.hadoop.mapreduce.v2.app.job.impl.TaskAttemptImpl: Diagnostics report from attempt_1567609664100_85580_m_000000_0: Container [pid=44860,containerID=container_e29_1567609664100_85580_01_000006] is running beyond physical memory limits. Current usage: 2.0 GB of 2 GB physical memory used; 4.0 GB of 16.2 GB virtual memory used. Killing container.

Dump of the process-tree for container_e29_1567609664100_85580_01_000006 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 44881 44860 44860 44860 (java) 21865 1198 4183670784 526521 /opt/huawei/Bigdata/common/runtime0/jdk1.8.0_162//bin/java -Djava.security.auth.login.config=/opt/huawei/Bigdata/FusionInsight_Current/1_11_NodeManager/etc/jaas-zk.conf -Dzookeeper.server.principal=zookeeper/hadoop.hadoop.com -Dzookeeper.request.timeout=120000 -server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true -Xmx2048M -Djava.net.preferIPv4Stack=true -Djava.security.krb5.conf=/opt/huawei/Bigdata/common/runtime/krb5.conf -Djava.io.tmpdir=/srv/BigData/hadoop/data6/nm/localdir/usercache/yxs_product/appcache/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/srv/BigData/hadoop/data10/nm/containerlogs/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 10.240.250.133 27102 attempt_1567609664100_85580_m_000000_0 31885837205510

|- 44860 44857 44860 44860 (bash) 2 1 116031488 374 /bin/bash -c /opt/huawei/Bigdata/common/runtime0/jdk1.8.0_162//bin/java -Djava.security.auth.login.config=/opt/huawei/Bigdata/FusionInsight_Current/1_11_NodeManager/etc/jaas-zk.conf -Dzookeeper.server.principal=zookeeper/hadoop.hadoop.com -Dzookeeper.request.timeout=120000 -server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true -Xmx2048M -Djava.net.preferIPv4Stack=true -Djava.security.krb5.conf=/opt/huawei/Bigdata/common/runtime/krb5.conf -Djava.io.tmpdir=/srv/BigData/hadoop/data6/nm/localdir/usercache/yxs_product/appcache/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/srv/BigData/hadoop/data10/nm/containerlogs/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 10.240.250.133 27102 attempt_1567609664100_85580_m_000000_0 31885837205510 1>/srv/BigData/hadoop/data10/nm/containerlogs/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006/stdout 2>/srv/BigData/hadoop/data10/nm/containerlogs/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006/stderr

Container killed on request. Exit code is 143

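Container exited with a non-zero exit code 143

Individual map and reduce error logs

Even at this point I still could not tell what had actually gone wrong, so I dug further into the error details of the individual map and reduce tasks:

The error log is as follows: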

Container [pid=44860,containerID=container_e29_1567609664100_85580_01_000006] is running beyond physical memory limits. Current usage: 2.0 GB of 2 GB physical memory used; 4.0 GB of 16.2 GB virtual memory used. Killing container.

Dump of the process-tree for container_e29_1567609664100_85580_01_000006 :

|- PID PPID PGRPID SESSID CMD_NAME USER_MODE_TIME(MILLIS) SYSTEM_TIME(MILLIS) VMEM_USAGE(BYTES) RSSMEM_USAGE(PAGES) FULL_CMD_LINE

|- 44881 44860 44860 44860 (java) 21865 1198 4183670784 526521 /opt/huawei/Bigdata/common/runtime0/jdk1.8.0_162//bin/java -Djava.security.auth.login.config=/opt/huawei/Bigdata/FusionInsight_Current/1_11_NodeManager/etc/jaas-zk.conf -Dzookeeper.server.principal=zookeeper/hadoop.hadoop.com -Dzookeeper.request.timeout=120000 -server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true -Xmx2048M -Djava.net.preferIPv4Stack=true -Djava.security.krb5.conf=/opt/huawei/Bigdata/common/runtime/krb5.conf -Djava.io.tmpdir=/srv/BigData/hadoop/data6/nm/localdir/usercache/yxs_product/appcache/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/srv/BigData/hadoop/data10/nm/containerlogs/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 10.240.250.133 27102 attempt_1567609664100_85580_m_000000_0 31885837205510

|- 44860 44857 44860 44860 (bash) 2 1 116031488 374 /bin/bash -c /opt/huawei/Bigdata/common/runtime0/jdk1.8.0_162//bin/java -Djava.security.auth.login.config=/opt/huawei/Bigdata/FusionInsight_Current/1_11_NodeManager/etc/jaas-zk.conf -Dzookeeper.server.principal=zookeeper/hadoop.hadoop.com -Dzookeeper.request.timeout=120000 -server -XX:NewRatio=8 -Djava.net.preferIPv4Stack=true -Xmx2048M -Djava.net.preferIPv4Stack=true -Djava.security.krb5.conf=/opt/huawei/Bigdata/common/runtime/krb5.conf -Djava.io.tmpdir=/srv/BigData/hadoop/data6/nm/localdir/usercache/yxs_product/appcache/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006/tmp -Dlog4j.configuration=container-log4j.properties -Dyarn.app.container.log.dir=/srv/BigData/hadoop/data10/nm/containerlogs/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006 -Dyarn.app.container.log.filesize=0 -Dhadoop.root.logger=INFO,CLA -Dhadoop.root.logfile=syslog org.apache.hadoop.mapred.YarnChild 10.240.250.133 27102 attempt_1567609664100_85580_m_000000_0 31885837205510 1>/srv/BigData/hadoop/data10/nm/containerlogs/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006/stdout 2>/srv/BigData/hadoop/data10/nm/containerlogs/application_1567609664100_85580/container_e29_1567609664100_85580_01_000006/stderr

Container killed on request. Exit code is 143

Container exited with a non-zero exit code 143

Container [pid=44860,containerID=container_e29_1567609664100_85580_01_000006] is running beyond physical memory limits. Current usage: 2.0 GB of 2 GB physical memory used; 4.0 GB of 16.2 GB virtual memory used. Killing container.

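OK, at this point the cause of the error was finally clear.

Error analysis

First, check the configuration on YARN against the numbers in the error message: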

ERROR:Container [pid=44860,containerID=container_e29_1567609664100_85580_01_000006] is running beyond physical memory limits. Current usage: 2.0 GB of 2 GB physical memory used; 4.0 GB of 16.2 GB virtual memory used. Killing container.

2.0 GB: the physical memory the task is actually using

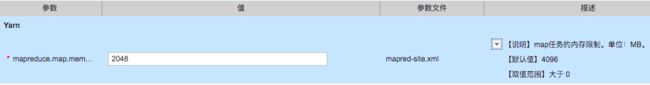

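2 GB: the container's physical memory limit, set by mapreduce.map.memory.mb (the default configured on this cluster)

4.0 GB: the virtual memory the program is using

16.2 GB: the virtual memory limit, obtained by multiplying mapreduce.map.memory.mb by yarn.nodemanager.vmem-pmem-ratio

yarn.nodemanager.vmem-pmem-ratio is the ratio of virtual to physical memory allowed for a container. It is set in yarn-site.xml and defaults to 2.1. With a 2 GB container, a 16.2 GB virtual limit implies a ratio of 8.1, so the default has evidently been overridden on this cluster.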
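The four numbers can be pulled out of the diagnostic line mechanically, which also makes the implied ratio explicit. This is a minimal sketch, not part of the original troubleshooting: `parse_usage` and `USAGE_RE` are hypothetical helper names, and the pattern assumes all four values are reported in GB as they are in this log.

```python
import re

# Diagnostic text copied from the failed container's log above.
MESSAGE = (
    "Container [pid=44860,containerID=container_e29_1567609664100_85580_01_000006] "
    "is running beyond physical memory limits. Current usage: 2.0 GB of 2 GB "
    "physical memory used; 4.0 GB of 16.2 GB virtual memory used. Killing container."
)

# Assumption: every figure is printed in GB, as in this particular message.
USAGE_RE = re.compile(
    r"Current usage: (?P<pmem_used>[\d.]+) GB of (?P<pmem_limit>[\d.]+) GB "
    r"physical memory used; (?P<vmem_used>[\d.]+) GB of (?P<vmem_limit>[\d.]+) GB "
    r"virtual memory used"
)


def parse_usage(message):
    """Return the used/limit figures (in GB) from a YARN memory diagnostic."""
    match = USAGE_RE.search(message)
    if match is None:
        raise ValueError("no memory usage summary found in message")
    return {name: float(value) for name, value in match.groupdict().items()}


usage = parse_usage(MESSAGE)
# The virtual limit is the physical limit times vmem-pmem-ratio,
# so dividing the two limits recovers the ratio in effect.
implied_ratio = usage["vmem_limit"] / usage["pmem_limit"]
print(usage)
print(f"implied vmem-pmem ratio: {implied_ratio:.1f}")
```

Dividing the two limits is only a sanity check on the configuration; the authoritative value is whatever yarn-site.xml on the NodeManager says.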

Clearly, the container needed more memory than the task's physical memory limit allows (running beyond physical memory limits), so the container was killed.
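The process-tree dump in the log carries the raw figures behind the rounded "2.0 GB of 2 GB". The sketch below adds them up; the 4096-byte page size is an assumption (typical for Linux on x86-64, but not stated in the log), and `tree_totals` is a hypothetical helper name.

```python
PAGE_SIZE = 4096      # assumption: 4 KiB memory pages on the NodeManager host
GIB = 1024 ** 3

# (command, VMEM_USAGE in bytes, RSSMEM_USAGE in pages), copied from the dump.
PROCESS_TREE = [
    ("java", 4183670784, 526521),
    ("bash", 116031488, 374),
]


def tree_totals(tree, page_size=PAGE_SIZE):
    """Sum resident and virtual memory (both in bytes) over a process tree."""
    rss_bytes = sum(pages for _, _, pages in tree) * page_size
    vmem_bytes = sum(vmem for _, vmem, _ in tree)
    return rss_bytes, vmem_bytes


rss_bytes, vmem_bytes = tree_totals(PROCESS_TREE)
pmem_limit_bytes = 2 * GIB  # the 2 GB container limit from the error message

print(f"resident: {rss_bytes / GIB:.3f} GiB")
print(f"virtual:  {vmem_bytes / GIB:.3f} GiB")
print("over physical limit:", rss_bytes > pmem_limit_bytes)
```

Under that page-size assumption the resident total comes out slightly above 2 GiB, which is consistent with the kill. The JVM was also started with -Xmx2048M, so the heap alone was allowed to grow to the full container size, leaving no headroom for non-heap memory.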

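The error above came from a map task, but the same failure can of course occur in a reduce task. In that case the physical limit is mapreduce.reduce.memory.mb, and the virtual limit is mapreduce.reduce.memory.mb * yarn.nodemanager.vmem-pmem-ratio.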

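Physical memory: the real hardware (the RAM modules).

Virtual memory: logical memory backed by disk space. The disk space used for this purpose is called swap space. (It is a strategy introduced to compensate for a shortage of physical memory.)

When physical memory runs short, Linux falls back on the virtual memory in the swap partition. The kernel writes memory blocks that are not currently in use out to swap space, which frees that physical memory for other purposes. When the original contents are needed again, they are read back from swap space into physical memory. Note that the virtual figure YARN reports for a container is the size of the processes' virtual address space, which is not the same thing as the amount of swap actually in use.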

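Solutions

1. Disable the virtual memory check (not recommended):

In yarn-site.xml, set yarn.nodemanager.vmem-check-enabled to false. This is a NodeManager-side setting, so it normally has to be changed in the cluster configuration rather than in the job itself.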

<property>
<name>yarn.nodemanager.vmem-check-enabled</name>
<value>false</value>
<description>Whether virtual memory limits will be enforced for containers.</description>
</property>

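The limit that is exceeded can be the virtual one rather than the physical one, which is the case the setting above addresses. The physical memory check can be disabled in the same way:

yarn.nodemanager.pmem-check-enabled: false

Personally, I don't think this is a good approach: if the program has a memory leak or a similar problem, disabling these checks could bring down the cluster.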

2. Increase mapreduce.map.memory.mb or mapreduce.reduce.memory.mb (recommended)
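In a Hive session these properties can be raised per job with `set` statements. The sketch below only generates the statements; `memory_settings` is a hypothetical helper, and keeping the JVM heap at about 80% of the container is a common rule of thumb that I am assuming here, not something the original log or cluster documentation prescribes.

```python
def memory_settings(task, container_mb, heap_fraction=0.8):
    """Build Hive `set` statements for one task type ("map" or "reduce").

    heap_fraction is an assumed rule of thumb: the JVM heap (-Xmx) is kept
    below the container size so non-heap memory still fits in the container.
    """
    if task not in ("map", "reduce"):
        raise ValueError("task must be 'map' or 'reduce'")
    heap_mb = int(container_mb * heap_fraction)
    return [
        f"set mapreduce.{task}.memory.mb={container_mb};",
        f"set mapreduce.{task}.java.opts=-Xmx{heap_mb}m;",
    ]


# Example: double the failing 2 GB map container to 4 GB.
for statement in memory_settings("map", 4096):
    print(statement)
```

Two caveats before using the output as-is: the requested container size cannot exceed what the cluster allows (yarn.scheduler.maximum-allocation-mb), and overriding mapreduce.map.java.opts replaces whatever JVM flags were already configured in that property, so check the existing value first.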

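3. Moderately increase yarn.nodemanager.vmem-pmem-ratio

This gives each unit of physical memory a larger virtual memory allowance, but the ratio should not be set unreasonably high.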
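Raising the ratio only moves the virtual limit, so it is worth checking which limit a container actually hit before reaching for it. The function below is a deliberately simplified model of the two checks (the real NodeManager monitor applies further conditions that are not reproduced here); `exceeded_limits` is a hypothetical name, and the MB figures are rounded from the process-tree dump assuming 4 KiB pages.

```python
def exceeded_limits(rss_mb, vmem_mb, pmem_limit_mb, vmem_pmem_ratio):
    """Return which limits ("physical", "virtual") a container is over.

    Simplified model: physical limit = pmem_limit_mb,
    virtual limit = pmem_limit_mb * vmem_pmem_ratio.
    """
    exceeded = []
    if rss_mb > pmem_limit_mb:
        exceeded.append("physical")
    if vmem_mb > pmem_limit_mb * vmem_pmem_ratio:
        exceeded.append("virtual")
    return exceeded


# Approximate figures for the failed map container in this post.
RSS_MB, VMEM_MB, PMEM_LIMIT_MB = 2058, 4100, 2048

print(exceeded_limits(RSS_MB, VMEM_MB, PMEM_LIMIT_MB, 8.1))
print(exceeded_limits(RSS_MB, VMEM_MB, PMEM_LIMIT_MB, 20.0))
```

For this particular job the physical limit is the one that was exceeded at any ratio, so option 3 on its own would not have prevented the kill; it is the right lever when the message reads "running beyond virtual memory limits".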

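4. Switch to a Spark SQL job (a cheeky move, but strongly recommended)

Summary

Task memory problems come in two flavors, physical memory and virtual memory, and exceeding either limit makes the task fail. Adjusting the corresponding parameters appropriately is usually enough to get the task running again. If the task's memory usage is wildly out of proportion, the first things to consider are whether the program has a memory leak and whether the data is skewed; fix problems like these in the program first. The ultimate fix: split the data evenly across several tasks and process it that way~

Or just go with Spark~

It absolutely flies!!!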