图像描述show and tell代码im2txt阅读(TensorFlow官方实现)杂七杂八记录(草稿二:模型构建及训练部分)

模型构建及训练部分代码

0 概述

核心代码文件:

show_and_tell_model.py为整体模型的构建,包括读取TFRecord构建输入,导入GoogLe Net构建Encoder部分,及LSTM构建Decoder部分等。ops/*.py定义了模型构建时相关函数的详细定义。train.py为模型的训练具体操作。

预训练模型下载地址:https://github.com/tensorflow/models/tree/master/research/slim

1 模型构建

模型构建参考show_and_tell_model.py,定义了一个ShowAndTellModel类,调用其build()函数及构建整体模型,包括以下各子部分:

- 构建输入,即Tensorflow的数据读取部分(TFRecord)

- 构建图像嵌入,即导入GoogLe Net(Inception V3),用于提取图像特征(即Encoder部分)

- 构建输入序列嵌入,前面提到我们把图像描述的单词转换为其id序列号,在此处使用一个词嵌入矩阵(初始化,待学习),把单词转换为嵌入表示

- 构建语言模型部分,即LSTM,用于生成图像描述的单词(即Decoder部分)

- 剩下俩函数是导入已保存模型继续训练所需的相关参数设置

def build(self):

"""Creates all ops for training and evaluation."""

self.build_inputs()

self.build_image_embeddings()

self.build_seq_embeddings()

self.build_model()

self.setup_inception_initializer()

self.setup_global_step()

1.1 构建输入

构建输入由build_inputs()定义。

TensorFlow使用"文件名队列+内存队列"双队列的形式读入文件:

- 对于文件名队列,使用

tf.train.string_input_producer函数,传入一个文件名list,自动将其转为一个文件名队列 - 内存队列不需要自己建立,只需要使用reader对象从文件名队列中读取数据即可

这部分代码流程如下:

- 使用

tf.train.string_input_producer函数创建一个TFRecord文件队列 - 然后使用

reader.read()从TFRecord文件中获取一条记录,添加到数据队列input_queue中(由tf.RandomShuffleQueue()定义) - 然后从

input_queue中dequeue一条记录,使用tf.parse_single_sequence_example()函数将其解码为(图像数据,描述数据),并将图像数据还原为RGB数据 - 并根据描述数据构建输入序列、输出序列及描述掩码数据,使用

tf.train.batch_join()构造一个batch的数据,送入模型进行训练

参考下一篇博客,这部分数据读取的部分完全可以替换为使用tf.data模块读取。

构建输入部分获取了如下信息:

- 图像数据:

self.images - 描述数据(输入序列):

self.input_seqs - 描述数据(输出序列):

self.target_seqs - 描述数据掩码:

self.input_mask

1.2 构建图像嵌入(GoogLe Net,Inception V3)

构建图像嵌入由build_image_embedding()定义。

def build_image_embeddings(self):

"""Builds the image model subgraph and generates image embeddings.

Inputs:

self.images

Outputs:

self.image_embeddings

"""

# 构造Inception V3网络结构

inception_output = image_embedding.inception_v3(self.images, trainable=self.train_inception,

is_training=self.is_training())

# 获取Inception V3网络参数

self.inception_variables = tf.get_collection(tf.GraphKeys.GLOBAL_VARIABLES, scope="InceptionV3")

# Map inception output into embedding space.

# 把Inception V3输出投影到embedding_size(全连接层)

with tf.variable_scope("image_embedding") as scope:

image_embeddings = tf.contrib.layers.fully_connected(

inputs=inception_output,

num_outputs=self.config.embedding_size,

activation_fn=None,

weights_initializer=self.initializer,

biases_initializer=None,

scope=scope)

# Save the embedding size in the graph.

tf.constant(self.config.embedding_size, name="embedding_size")

self.image_embeddings = image_embeddings

Inception V3的定义位于ops/image_embedding.py中,由函数inception_v3()定义:

def inception_v3(images, trainable=True, is_training=True, weight_decay=0.00004,

stddev=0.1, dropout_keep_prob=0.8, use_batch_norm=True,

batch_norm_params=None, add_summaries=True, scope="InceptionV3"):

"""Builds an Inception V3 subgraph for image embeddings.

Args:

...

weight_decay: Coefficient for weight regularization.

stddev: The standard deviation of the trunctated normal weight initializer.

...

Returns:

end_points: A dictionary of activations from inception_v3 layers.

"""

# Only consider the inception model to be in training mode if it's trainable.

is_inception_model_training = trainable and is_training

# 参数设置

# batch_norm_params

if use_batch_norm:

# Default parameters for batch normalization.

if not batch_norm_params:

batch_norm_params = {...} # (太长了)

else:

batch_norm_params = None

# weights_regularizer 参数正则化函数

if trainable:

weights_regularizer = tf.contrib.layers.l2_regularizer(weight_decay)

else:

weights_regularizer = None

with tf.variable_scope(scope, "InceptionV3", [images]) as scope:

# 控制slim.conv2d和slim.fully_connected超参数默认值;

with slim.arg_scope(

[slim.conv2d, slim.fully_connected],

weights_regularizer=weights_regularizer, # 参数正则化

trainable=trainable):

with slim.arg_scope(

[slim.conv2d],

weights_initializer=tf.truncated_normal_initializer(stddev=stddev), # 参数初始化

activation_fn=tf.nn.relu, # 接relu激活函数

normalizer_fn=slim.batch_norm, # 进行批正则化

normalizer_params=batch_norm_params): # 批正则化参数

# 调用tf.contrib.slim.python.slim.nets.inception_v3.inception_v3_base

# 构造基本inception v3网络结构

net, end_points = inception_v3_base(images, scope=scope)

# 在特征映射后面加上平均池化和dropout,并将数据扁平化处理

with tf.variable_scope("logits"):

shape = net.get_shape() # 单张图片特征映射维度大小

net = slim.avg_pool2d(net, shape[1:3], padding="VALID", scope="pool")

net = slim.dropout(

net,

keep_prob=dropout_keep_prob,

is_training=is_inception_model_training,

scope="dropout")

net = slim.flatten(net, scope="flatten")

# Add summaries.

if add_summaries:

for v in end_points.values():

tf.contrib.layers.summaries.summarize_activation(v)

return net

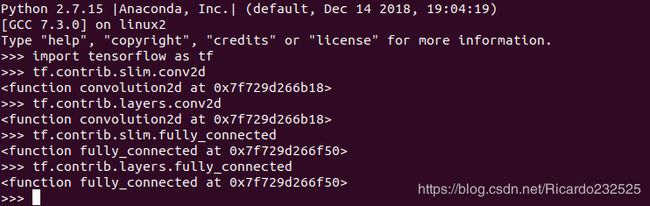

从上面代码看出,在利用inception_v3_base构造网络之前使用了slim.arg_scope设置了slim.conv2d和slim.fully_connected的默认超参数;但是在inception_v3_base源码中,网络的构造使用的是layers.conv2d。

因为slim.**和layers.**相关同名函数应该就是一样的,查看源码可以发现,slim.conv2d和slim.fully_connected应该是导入的tf.contrib.layers中的相关函数,并且在tensorflow中调用两个函数返回的地址是相同的。

问题:inception_v3_base()构造的结构不是完整的结构

1.3 构建输入序列嵌入

此处我们获取的训练数据中,图像描述是以单词在词汇表中的id号序列表示,在训练时则需要构造一个词嵌入矩阵,将对应的单词id转换为对应的词嵌入向量。

def build_seq_embeddings(self):

"""Builds the input sequence embeddings.

Inputs:

self.input_seqs 图像描述单词id序列

Outputs:

self.seq_embeddings 图像描述单词词嵌入向量序列

"""

with tf.variable_scope("seq_embedding"), tf.device("/cpu:0"): # 将词嵌入矩阵置于CPU上

embedding_map = tf.get_variable(

name="map",

# 词嵌入矩阵大小,单词个数×嵌入向量维度

shape=[self.config.vocab_size, self.config.embedding_size],

initializer=self.initializer)

# 将单词id序列,转换为词嵌入向量序列

seq_embeddings = tf.nn.embedding_lookup(embedding_map, self.input_seqs)

self.seq_embeddings = seq_embeddings

1.4 构建语言模型部分

只考虑与训练过程有关的代码,

def build_model(self):

"""Builds the model.

Inputs:

self.image_embeddings

self.seq_embeddings

self.target_seqs (training and eval only)

self.input_mask (training and eval only)

Outputs:

self.total_loss (training and eval only)

self.target_cross_entropy_losses (training and eval only)

self.target_cross_entropy_loss_weights (training and eval only)

"""

# This LSTM cell has biases and outputs tanh(new_c) * sigmoid(o), but the

# modified LSTM in the "Show and Tell" paper has no biases and outputs

# new_c * sigmoid(o).

lstm_cell = tf.contrib.rnn.BasicLSTMCell(

num_units=self.config.num_lstm_units, state_is_tuple=True)

if self.mode == "train":

lstm_cell = tf.contrib.rnn.DropoutWrapper(

lstm_cell,

input_keep_prob=self.config.lstm_dropout_keep_prob,

output_keep_prob=self.config.lstm_dropout_keep_prob)

with tf.variable_scope("lstm", initializer=self.initializer) as lstm_scope:

# Feed the image embeddings to set the initial LSTM state.

# zero_state第一个参数为batch_size,得到一个全0的零状态

zero_state = lstm_cell.zero_state(

batch_size=self.image_embeddings.get_shape()[0], dtype=tf.float32)

# 调用call函数,调用形式为(output, state) = call(input, state),输入input维度为(batch_size, input_size)

# 其中output为h,state为c和h的组合(state_is_tuple==True的情况)

# 因此此处得到的initial_state包含两部分:一部分是c,一部分是h,维度应该都是self.config.num_lstm_units

_, initial_state = lstm_cell(self.image_embeddings, zero_state)

# Allow the LSTM variables to be reused.

lstm_scope.reuse_variables()

if self.mode == "inference":

... # 非训练使用

else:

# Run the batch of sequence embeddings through the LSTM.

# sequence_length为一个batch每条描述序列的长度

sequence_length = tf.reduce_sum(self.input_mask, 1)

# 如果加入注意力机制,dynamic_rnn是否可以动态更改输入

# 使用dynamic_rnn的话,输入inputs的维度为(batch_size, time_steps, input_size)

# 得到的output是time_steps步里所有的输出,维度为(batch_size, time_steps, output_size)

lstm_outputs, _ = tf.nn.dynamic_rnn(cell=lstm_cell,

inputs=self.seq_embeddings,

sequence_length=sequence_length,

initial_state=initial_state,

dtype=tf.float32,

scope=lstm_scope)

# Stack batches vertically.

lstm_outputs = tf.reshape(lstm_outputs, [-1, lstm_cell.output_size])

with tf.variable_scope("logits") as logits_scope:

logits = tf.contrib.layers.fully_connected(

inputs=lstm_outputs,

num_outputs=self.config.vocab_size,

activation_fn=None,

weights_initializer=self.initializer,

scope=logits_scope)

if self.mode == "inference":

tf.nn.softmax(logits, name="softmax")

else:

targets = tf.reshape(self.target_seqs, [-1])

# weights用于匹配计算真实损失

weights = tf.to_float(tf.reshape(self.input_mask, [-1]))

# Compute losses.

losses = tf.nn.sparse_softmax_cross_entropy_with_logits(labels=targets, logits=logits)

batch_loss = tf.div(tf.reduce_sum(tf.multiply(losses, weights)),

tf.reduce_sum(weights),

name="batch_loss")

tf.losses.add_loss(batch_loss)

total_loss = tf.losses.get_total_loss()

# Add summaries.

tf.summary.scalar("losses/batch_loss", batch_loss)

tf.summary.scalar("losses/total_loss", total_loss)

for var in tf.trainable_variables():

tf.summary.histogram("parameters/" + var.op.name, var)

self.total_loss = total_loss

self.target_cross_entropy_losses = losses # Used in evaluation.

self.target_cross_entropy_loss_weights = weights # Used in evaluation.