Memo: Training Mask R-CNN on Your Own Data, and How to Configure It

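After three or four days of work I can finally train Mask R-CNN on my own data, which is a decent start. I am writing the basic workflow down in detail here as a memo. Most of it follows posts by other bloggers, so if you need more background, see these links: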

https://blog.csdn.net/l297969586/article/details/79140840/

https://blog.csdn.net/qq_29462849/article/details/81037343

https://blog.csdn.net/qq_36810544/article/details/83582397

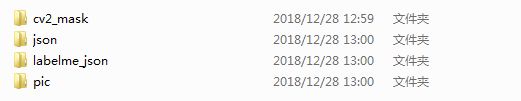

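The most important part is preparing the dataset and getting every path right. The dataset root contains these folders:

cv2_mask  # mask PNGs, one per image (read through mask_path in the code)

json

labelme_json  # one NAME_json folder per image, containing info.yaml and img.png

pic  # original images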
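To make the layout concrete, here is a minimal sketch that builds the file paths the loader later reads for one image. The helper name `dataset_paths` is my own and not part of Mask_RCNN; the folder names and the "stem + _json" rule are taken from the `load_shapes` code further down, and the root `datasets/my_dataset` is the one used in `train_model`.

```python
from pathlib import PurePosixPath


def dataset_paths(dataset_root, image_name):
    """Return the files read for one image (hypothetical helper, not a Mask_RCNN API)."""
    root = PurePosixPath(dataset_root)
    stem = image_name.split(".")[0]  # same stem rule as load_shapes
    json_dir = root / "labelme_json" / (stem + "_json")
    return {
        "image": str(root / "pic" / image_name),           # original image
        "mask": str(root / "cv2_mask" / (stem + ".png")),  # label mask PNG
        "yaml": str(json_dir / "info.yaml"),               # label names from labelme
        "labelme_image": str(json_dir / "img.png"),        # used only for width/height
    }


paths = dataset_paths("datasets/my_dataset", "000174.jpg")
for key, value in paths.items():
    print(key, "->", value)
```

If any of these four files is missing for an image, loading that image will fail, so it is worth checking them before starting a long training run.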

1. Download the code

https://github.com/matterport/Mask_RCNN

Download it and unpack it. I won't go into running the demo here.

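2. Modify the train_shapes.ipynb code

(1) Comment out the notebook magic: #%matplotlib inline

(2) In the ShapesConfig class, set GPU_COUNT = 1 and IMAGES_PER_GPU = 1 to suit your own machine. The project uses a ResNet-101 backbone, so training needs a lot of GPU memory. My images are 500*400, and with IMAGES_PER_GPU = 2 I ran out of memory even on two GeForce GTX TITAN X cards, so I use IMAGES_PER_GPU = 1. If you have more memory, ignore this.

NUM_CLASSES = 1 + 1 is the number of classes in your dataset plus one, because the first class is the background (bg). I have one class, so it is 1 + 1.

Set IMAGE_MIN_DIM and IMAGE_MAX_DIM to match your image size: IMAGE_MIN_DIM limits the short side and IMAGE_MAX_DIM the long side. It seems that IMAGE_MAX_DIM has to be a multiple of 64, so for my 500*400 images the full code below uses IMAGE_MIN_DIM = 448 and IMAGE_MAX_DIM = 512.

RPN_ANCHOR_SCALES = (8 * 6, 16 * 6, 32 * 6, 64 * 6, 128 * 6): set the anchor sizes to suit your own objects.

(3) Define a global variable iter_num = 0.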
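The numbers in step (2) can be checked with a few lines of plain Python. This is only a sketch under my own assumptions: `round_up_to_multiple` is a helper I made up for illustration, and the multiple-of-64 rule is the one observed above, not something verified against every Mask_RCNN version.

```python
def round_up_to_multiple(value, base=64):
    """Return the smallest multiple of `base` that is >= value."""
    return ((value + base - 1) // base) * base


# Image size from the post: 500*400, so the long side is 500.
long_side = max(500, 400)
image_max_dim = round_up_to_multiple(long_side)

# The anchor scales from the post, written out as plain numbers.
anchor_scales = tuple(scale * 6 for scale in (8, 16, 32, 64, 128))

# Effective batch size is GPU_COUNT * IMAGES_PER_GPU.
gpu_count, images_per_gpu = 1, 1
batch_size = gpu_count * images_per_gpu

print("IMAGE_MAX_DIM candidate:", image_max_dim)
print("RPN_ANCHOR_SCALES:", anchor_scales)
print("batch size:", batch_size)
```

Rounding the long side up gives 512, which matches the IMAGE_MAX_DIM used in the full code.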

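(4) Define your own dataset class:

    class DrugDataset(utils.Dataset):

Add this method:

        # Parse the yaml file produced by labelme to get the instance label of each mask layer
        def from_yaml_get_class(self, image_id):
            info = self.image_info[image_id]
            with open(info['yaml_path']) as f:
                # safe_load avoids the Loader argument that newer PyYAML versions require for yaml.load
                temp = yaml.safe_load(f)
            labels = temp['label_names']
            del labels[0]  # drop the first entry (the background label)
            return labels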
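The list returned by this method is later turned into class IDs inside `load_mask`. A minimal standalone sketch of that mapping, without Mask_RCNN or a yaml file, is shown here. The function name `labels_to_class_ids` and the sample label names are made up for illustration; only the keyword "liefeng" and the `['BG', 'liefeng']` class list come from the post.

```python
def labels_to_class_ids(label_names, class_names, keyword="liefeng"):
    """Map labelme label names to class IDs (hypothetical helper)."""
    labels = label_names[1:]  # copy without the first (background) entry
    # Instance labels may carry a suffix, so match by substring as load_mask does.
    matched = [keyword for name in labels if keyword in name]
    return [class_names.index(name) for name in matched]


label_names = ["_background_", "liefeng1", "liefeng2"]  # made-up example values
class_names = ["BG", "liefeng"]
print(labels_to_class_ids(label_names, class_names))
```

Unlike `del labels[0]`, the slice leaves the input list untouched, which matters if the same list is reused elsewhere.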

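Override this method (image_id is added as a parameter so that the image size can be looked up):

        def draw_mask(self, num_obj, mask, image, image_id):
            info = self.image_info[image_id]
            for index in range(num_obj):
                for i in range(info['width']):
                    for j in range(info['height']):
                        at_pixel = image.getpixel((i, j))
                        # Pixel value k in the mask image belongs to object k (1-based)
                        if at_pixel == index + 1:
                            mask[j, i, index] = 1
            return mask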
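The triple loop above visits every pixel once per object, which gets slow for large images. As a sketch of an alternative (not the code I trained with), the same mask can be built with NumPy comparisons. It assumes the mask PNG is a single-channel label image, so that converting it with `np.asarray` yields a 2-D array of object indices; `draw_mask_vectorized` is a name I made up.

```python
import numpy as np


def draw_mask_vectorized(label_image, num_obj):
    """Build a (height, width, num_obj) uint8 mask from a 2-D label image."""
    labels = np.asarray(label_image)
    mask = np.zeros(labels.shape + (num_obj,), dtype=np.uint8)
    for index in range(num_obj):
        # Pixels whose value is index + 1 belong to object number index
        mask[:, :, index] = (labels == index + 1)
    return mask


# Tiny made-up label image: 0 is background, 1 and 2 are two objects.
label_image = np.array([[0, 1, 1],
                        [2, 2, 0]], dtype=np.uint8)
mask = draw_mask_vectorized(label_image, num_obj=int(label_image.max()))
print(mask.shape)
print(mask[:, :, 0])
print(mask[:, :, 1])
```

The array is indexed as [row, column], which corresponds to `mask[j, i, index]` in the loop version.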

重写函数

def load_shapes(self, count, img_floder, mask_floder, imglist, dataset_root_path):

"""Generate the requested number of synthetic images.

count: number of images to generate.

height, width: the size of the generated images.

"""

# Add classes,可通过这种方式扩展多个物体

self.add_class("shapes", 1, "liefeng") # 黑色素瘤

for i in range(count):

# 获取图片宽和高

filestr = imglist[i].split(".")[0]

# print(imglist[i],"-->",cv_img.shape[1],"--->",cv_img.shape[0])

# print("id-->", i, " imglist[", i, "]-->", imglist[i],"filestr-->",filestr)

# filestr = filestr.split("_")[1]

mask_path = mask_floder + "/" + filestr + ".png"

#print("filestr=",filestr)

#print("mask_path",mask_path)

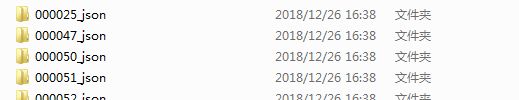

yaml_path = dataset_root_path + "labelme_json/" + filestr + "_json/info.yaml"

#print("yaml_path",yaml_path)

#print(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")

cv_img = cv2.imread(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")

self.add_image("shapes", image_id=i, path=img_floder + "/" + imglist[i],

width=cv_img.shape[1], height=cv_img.shape[0], mask_path=mask_path, yaml_path=yaml_path) def load_mask(self, image_id):

"""Generate instance masks for shapes of the given image ID.

"""

global iter_num

#print("image_id", image_id)

info = self.image_info[image_id]

count = 1 # number of object

img = Image.open(info['mask_path'])

num_obj = self.get_obj_index(img)

mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)

mask = self.draw_mask(num_obj, mask, img, image_id)

occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)

for i in range(count - 2, -1, -1):

mask[:, :, i] = mask[:, :, i] * occlusion

occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))

labels = []

labels = self.from_yaml_get_class(image_id)

labels_form = []

for i in range(len(labels)):

if labels[i].find("liefeng") != -1:

# print "box"

labels_form.append("liefeng")

class_ids = np.array([self.class_names.index(s) for s in labels_form])

return mask, class_ids.astype(np.int32)整体代码,基本上都没变

    # coding: utf-8

    # # Mask R-CNN - Train on Shapes Dataset
    #
    # This notebook shows how to train Mask R-CNN on your own dataset. To keep things simple we use a synthetic dataset of shapes (squares, triangles, and circles) which enables fast training. You'd still need a GPU, though, because the network backbone is a Resnet101, which would be too slow to train on a CPU. On a GPU, you can start to get okay-ish results in a few minutes, and good results in less than an hour.
    #
    # The code of the *Shapes* dataset is included below. It generates images on the fly, so it doesn't require downloading any data. And it can generate images of any size, so we pick a small image size to train faster.

    # In[1]:

    import os
    import sys
    import random
    import math
    import re
    import time
    import numpy as np
    import cv2
    import matplotlib
    import matplotlib.pyplot as plt
    import yaml  # newly added

    """
    Shows how to train Mask R-CNN on your own dataset. This notebook introduces a toy
    dataset (Shapes) to demonstrate training on a new dataset.
    """

    # Root directory of the project
    ROOT_DIR = os.path.abspath("../")

    # Import Mask RCNN
    sys.path.append(ROOT_DIR)  # To find local version of the library
    from mrcnn.config import Config
    from mrcnn import utils
    import mrcnn.model as modellib
    from mrcnn import visualize
    from mrcnn.model import log
    from PIL import Image  # newly added

    # get_ipython().magic('matplotlib inline')
    #os.environ["CUDA_VISIBLE_DEVICES"] = "0"

    # Root directory of the project
    ROOT_DIR = os.getcwd()  # current working directory; replaces the value set above
    print(ROOT_DIR)
    #ROOT_DIR = os.path.abspath("../")

    # Directory to save logs and trained model
    MODEL_DIR = os.path.join(ROOT_DIR, "logs")

    iter_num = 0

    # Local path to trained weights file
    COCO_MODEL_PATH = os.path.join(ROOT_DIR, "mask_rcnn_coco.h5")
    print("COCO_MODEL_PATH", COCO_MODEL_PATH)

    # Download COCO trained weights from Releases if needed
    if not os.path.exists(COCO_MODEL_PATH):
        utils.download_trained_weights(COCO_MODEL_PATH)

    # ## Configurations

    # In[2]:

    class ShapesConfig(Config):
        """Configuration for training on my own dataset.
        Derives from the base Config class and overrides values specific
        to this dataset.
        """
        # Give the configuration a recognizable name
        NAME = "shapes"

        # Train on 1 GPU with 1 image per GPU, so the batch size is 1
        # (GPUs * images/GPU). Larger values ran out of GPU memory for me.
        GPU_COUNT = 1
        IMAGES_PER_GPU = 1

        # Number of classes (including background)
        NUM_CLASSES = 1 + 1  # background + 1 class ("liefeng")

        # Set the limits of the small side and the large side of the
        # resized image; together they determine the image shape.
        IMAGE_MIN_DIM = 448
        IMAGE_MAX_DIM = 512

        # Anchor scales, set to suit the object sizes in the dataset
        RPN_ANCHOR_SCALES = (8 * 6, 16 * 6, 32 * 6, 64 * 6, 128 * 6)  # anchor side in pixels

        # Reduce training ROIs per image because the images are small and have
        # few objects. Aim to allow ROI sampling to pick 33% positive ROIs.
        TRAIN_ROIS_PER_IMAGE = 100

        # Use a small epoch since the data is simple
        STEPS_PER_EPOCH = 100

        # use small validation steps since the epoch is small
        VALIDATION_STEPS = 50

    config = ShapesConfig()
    config.display()

    # ## Notebook Preferences

    # In[3]:

    def get_ax(rows=1, cols=1, size=8):
        """Return a Matplotlib Axes array to be used in
        all visualizations in the notebook. Provide a
        central point to control graph sizes.

        Change the default size attribute to control the size
        of rendered images
        """
        _, ax = plt.subplots(rows, cols, figsize=(size * cols, size * rows))
        return ax

    # ## Dataset
    #
    # Extend the Dataset class, add a method to load the dataset, `load_shapes()`, and override the following methods:
    #
    # * load_mask()
    # * image_reference()
    #
    # load_image() is left to the base class (the notebook's version is kept below, disabled).

    # In[4]:

    class DrugDataset(utils.Dataset):
        """Loads a labelme-annotated dataset from disk: original images,
        mask PNGs and the info.yaml files exported by labelme.
        """

        # OK
        def get_obj_index(self, image):
            # The largest pixel value in the mask image is the number of objects
            n = np.max(image)
            return n

        # OK
        def from_yaml_get_class(self, image_id):
            info = self.image_info[image_id]
            with open(info['yaml_path']) as f:
                # safe_load avoids the Loader argument that newer PyYAML versions require for yaml.load
                temp = yaml.safe_load(f)
            labels = temp['label_names']
            del labels[0]  # drop the first entry (the background label)
            return labels

        # Rewritten draw_mask
        # OK
        def draw_mask(self, num_obj, mask, image, image_id):
            #print("draw_mask-->",image_id)
            #print("self.image_info",self.image_info)
            info = self.image_info[image_id]
            #print("info-->",info)
            #print("info[width]----->",info['width'],"-info[height]--->",info['height'])
            for index in range(num_obj):
                for i in range(info['width']):
                    for j in range(info['height']):
                        # print("image_id-->",image_id,"-i--->",i,"-j--->",j)
                        # print("info[width]----->",info['width'],"-info[height]--->",info['height'])
                        at_pixel = image.getpixel((i, j))
                        if at_pixel == index + 1:
                            mask[j, i, index] = 1
            return mask

        # Rewritten load_shapes; it registers your own classes, and you can add as many as you need.
        # It also adds path, mask_path and yaml_path to self.image_info.
        # Example values from an earlier dataset:
        # dataset_root_path = "/tongue_dateset/"
        # img_floder = dataset_root_path + "rgb"
        # mask_floder = dataset_root_path + "mask"
        def load_shapes(self, count, img_floder, mask_floder, imglist, dataset_root_path):
            """Register the dataset images together with their mask and yaml paths.
            count: number of images to register.
            img_floder, mask_floder: folders with the original images and the mask PNGs.
            imglist: file names inside img_floder.
            dataset_root_path: dataset root, ending with "/".
            """
            # Add classes; more object classes can be added the same way
            self.add_class("shapes", 1, "liefeng")  # original comment: "melanoma"
            for i in range(count):
                # File name without its extension
                filestr = imglist[i].split(".")[0]
                # print(imglist[i],"-->",cv_img.shape[1],"--->",cv_img.shape[0])
                # print("id-->", i, " imglist[", i, "]-->", imglist[i],"filestr-->",filestr)
                # filestr = filestr.split("_")[1]
                mask_path = mask_floder + "/" + filestr + ".png"
                #print("filestr=",filestr)
                #print("mask_path",mask_path)
                yaml_path = dataset_root_path + "labelme_json/" + filestr + "_json/info.yaml"
                #print("yaml_path",yaml_path)
                #print(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
                # img.png is read only to get the image width and height
                cv_img = cv2.imread(dataset_root_path + "labelme_json/" + filestr + "_json/img.png")
                self.add_image("shapes", image_id=i, path=img_floder + "/" + imglist[i],
                               width=cv_img.shape[1], height=cv_img.shape[0],
                               mask_path=mask_path, yaml_path=yaml_path)

        '''
        # OK
        def load_image(self, image_id):
            """Generate an image from the specs of the given image ID.
            Typically this function loads the image from a file, but
            in this case it generates the image on the fly from the
            specs in image_info.
            """
            info = self.image_info[image_id]
            print(info)
            print("info['bg_color']",info['bg_color'])
            print("np.shape(info['bg_color']",np.shape(info['bg_color']))
            bg_color = np.array(info['bg_color']).reshape([1, 1, 3])
            image = np.ones([info['height'], info['width'], 3], dtype=np.uint8)
            image = image * bg_color.astype(np.uint8)
            for shape, color, dims in info['shapes']:
                image = self.draw_shape(image, shape, dims, color)
            return image
        '''

        # OK
        def image_reference(self, image_id):
            """Return the path of the image."""
            info = self.image_info[image_id]
            if info["source"] == "shapes":
                # add_image() stores no "shapes" key for this dataset, so return the file path
                return info["path"]
            else:
                return super().image_reference(image_id)

        # Rewritten load_mask
        # OK
        def load_mask(self, image_id):
            """Generate instance masks for the given image ID."""
            global iter_num
            #print("image_id", image_id)
            info = self.image_info[image_id]
            count = 1  # number of objects
            img = Image.open(info['mask_path'])
            num_obj = self.get_obj_index(img)
            mask = np.zeros([info['height'], info['width'], num_obj], dtype=np.uint8)
            mask = self.draw_mask(num_obj, mask, img, image_id)
            occlusion = np.logical_not(mask[:, :, -1]).astype(np.uint8)
            # With count fixed at 1 this range is empty, so the occlusion step is skipped.
            # The masks come from a single label image, so they cannot overlap anyway.
            for i in range(count - 2, -1, -1):
                mask[:, :, i] = mask[:, :, i] * occlusion
                occlusion = np.logical_and(occlusion, np.logical_not(mask[:, :, i]))
            labels = self.from_yaml_get_class(image_id)
            labels_form = []
            for i in range(len(labels)):
                if labels[i].find("liefeng") != -1:
                    # print "box"
                    labels_form.append("liefeng")
            class_ids = np.array([self.class_names.index(s) for s in labels_form])
            return mask, class_ids.astype(np.int32)

        '''
        def draw_shape(self, image, shape, dims, color):
            """Draws a shape from the given specs."""
            # Get the center x, y and the size s
            x, y, s = dims
            if shape == 'square':
                cv2.rectangle(image, (x - s, y - s), (x + s, y + s), color, -1)
            elif shape == "circle":
                cv2.circle(image, (x, y), s, color, -1)
            elif shape == "triangle":
                points = np.array([[(x, y - s),
                                    (x - s / math.sin(math.radians(60)), y + s),
                                    (x + s / math.sin(math.radians(60)), y + s),
                                    ]], dtype=np.int32)
                cv2.fillPoly(image, points, color)
            return image
        '''

        '''
        def random_shape(self, height, width):
            """Generates specifications of a random shape that lies within
            the given height and width boundaries.
            Returns a tuple of three values:
            * The shape name (square, circle, ...)
            * Shape color: a tuple of 3 values, RGB.
            * Shape dimensions: A tuple of values that define the shape size
                                and location. Differs per shape type.
            """
            # Shape
            shape = random.choice(["square", "circle", "triangle"])
            # Color
            color = tuple([random.randint(0, 255) for _ in range(3)])
            # Center x, y
            buffer = 20
            y = random.randint(buffer, height - buffer - 1)
            x = random.randint(buffer, width - buffer - 1)
            # Size
            s = random.randint(buffer, height // 4)
            return shape, color, (x, y, s)
        '''

        '''
        def random_image(self, height, width):
            """Creates random specifications of an image with multiple shapes.
            Returns the background color of the image and a list of shape
            specifications that can be used to draw the image.
            """
            # Pick random background color
            bg_color = np.array([random.randint(0, 255) for _ in range(3)])
            # Generate a few random shapes and record their
            # bounding boxes
            shapes = []
            boxes = []
            N = random.randint(1, 4)
            for _ in range(N):
                shape, color, dims = self.random_shape(height, width)
                shapes.append((shape, color, dims))
                x, y, s = dims
                boxes.append([y - s, x - s, y + s, x + s])
            # Apply non-max suppression with 0.3 threshold to avoid
            # shapes covering each other
            keep_ixs = utils.non_max_suppression(np.array(boxes), np.arange(N), 0.3)
            shapes = [s for i, s in enumerate(shapes) if i in keep_ixs]
            return bg_color, shapes
        '''

    # In[5]:

    def train_model():
        dataset_root_path = "datasets/my_dataset/"
        img_floder = dataset_root_path + "pic"
        mask_floder = dataset_root_path + "cv2_mask"
        #yaml_floder = dataset_root_path
        imglist = os.listdir(img_floder)
        count = len(imglist)
        #width = 576
        #height = 448

        # Training dataset
        dataset_train = DrugDataset()
        dataset_train.load_shapes(count, img_floder, mask_floder, imglist, dataset_root_path)
        dataset_train.prepare()

        # Validation dataset. Note that it loads the same images as the training set,
        # so the validation loss is not measured on held-out data.
        dataset_val = DrugDataset()
        dataset_val.load_shapes(count, img_floder, mask_floder, imglist, dataset_root_path)
        dataset_val.prepare()

        # In[6]:

        # Load and display random samples
        #image_ids = np.random.choice(dataset_train.image_ids, 4)
        #for image_id in image_ids:
        #    image = dataset_train.load_image(image_id)
        #    mask, class_ids = dataset_train.load_mask(image_id)
        #    visualize.display_top_masks(image, mask, class_ids, dataset_train.class_names)

        # ## Create Model

        # In[ ]:

        # Create model in training mode
        model = modellib.MaskRCNN(mode="training", config=config,
                                  model_dir=MODEL_DIR)

        # In[7]:

        # Which weights to start with?
        init_with = "coco"  # imagenet, coco, or last

        if init_with == "imagenet":
            model.load_weights(model.get_imagenet_weights(), by_name=True)
        elif init_with == "coco":
            # Load weights trained on MS COCO, but skip layers that
            # are different due to the different number of classes
            # See README for instructions to download the COCO weights
            model.load_weights(COCO_MODEL_PATH, by_name=True,
                               exclude=["mrcnn_class_logits", "mrcnn_bbox_fc",
                                        "mrcnn_bbox", "mrcnn_mask"])
        elif init_with == "last":
            # Load the last model you trained and continue training
            model.load_weights(model.find_last(), by_name=True)

        # ## Training
        #
        # Train in two stages:
        # 1. Only the heads. Here we're freezing all the backbone layers and training only the randomly initialized layers (i.e. the ones that we didn't use pre-trained weights from MS COCO). To train only the head layers, pass `layers='heads'` to the `train()` function.
        #
        # 2. Fine-tune all layers. For this simple example it's not necessary, but we're including it to show the process. Simply pass `layers="all"` to train all layers.

        # In[8]:

        # Train the head branches
        # Passing layers="heads" freezes all layers except the head
        # layers. You can also pass a regular expression to select
        # which layers to train by name pattern.
        model.train(dataset_train, dataset_val,
                    learning_rate=config.LEARNING_RATE,
                    epochs=20,
                    layers='heads')

        # In[9]:

        # Fine tune all layers
        # Passing layers="all" trains all layers. You can also
        # pass a regular expression to select which layers to
        # train by name pattern.
        model.train(dataset_train, dataset_val,
                    learning_rate=config.LEARNING_RATE / 10,
                    epochs=40,
                    layers="all")

    def predict():
        import skimage.io
        from mrcnn import visualize

        # Create the model in inference mode
        #config = TongueConfig()
        config.display()
        model = modellib.MaskRCNN(mode="inference", config=config, model_dir=MODEL_DIR)
        model_path = model.find_last()

        # Load trained weights (fill in path to trained weights here)
        assert model_path != "", "Provide path to trained weights"
        print("Loading weights from ", model_path)
        model.load_weights(model_path, by_name=True)

        class_names = ['BG', 'liefeng']

        # Load a single test image
        file_names = r'C:\Users\Administrator\Desktop\test\000174.jpg'  # next(os.walk(IMAGE_DIR))[2]
        # image = skimage.io.imread(os.path.join(IMAGE_DIR, random.choice(file_names)))
        image = skimage.io.imread(file_names)

        # Run detection
        results = model.detect([image], verbose=1)

        # Visualize results
        r = results[0]
        visualize.display_instances(image, r['rois'], r['masks'], r['class_ids'], class_names, r['scores'])

    # Only prediction is run here; call train_model() first if there are
    # no trained weights under MODEL_DIR yet.
    predict()

    """
    # In[10]:

    # Save weights
    # Typically not needed because callbacks save after every epoch
    # Uncomment to save manually
    # model_path = os.path.join(MODEL_DIR, "mask_rcnn_shapes.h5")
    # model.keras_model.save_weights(model_path)

    # ## Detection

    # In[11]:

    class InferenceConfig(ShapesConfig):
        GPU_COUNT = 1
        IMAGES_PER_GPU = 1

    inference_config = InferenceConfig()

    # Recreate the model in inference mode
    model = modellib.MaskRCNN(mode="inference",
                              config=inference_config,
                              model_dir=MODEL_DIR)

    # Get path to saved weights
    # Either set a specific path or find last trained weights
    # model_path = os.path.join(ROOT_DIR, ".h5 file name here")
    model_path = model.find_last()

    # Load trained weights
    print("Loading weights from ", model_path)
    model.load_weights(model_path, by_name=True)

    # In[12]:

    # Test on a random image
    image_id = random.choice(dataset_val.image_ids)
    original_image, image_meta, gt_class_id, gt_bbox, gt_mask = modellib.load_image_gt(dataset_val, inference_config,
                                                                                       image_id, use_mini_mask=False)

    log("original_image", original_image)
    log("image_meta", image_meta)
    log("gt_class_id", gt_class_id)
    log("gt_bbox", gt_bbox)
    log("gt_mask", gt_mask)

    visualize.display_instances(original_image, gt_bbox, gt_mask, gt_class_id,
                                dataset_train.class_names, figsize=(8, 8))

    # In[13]:

    results = model.detect([original_image], verbose=1)

    r = results[0]
    visualize.display_instances(original_image, r['rois'], r['masks'], r['class_ids'],
                                dataset_val.class_names, r['scores'], ax=get_ax())

    # ## Evaluation

    # In[14]:

    # Compute VOC-Style mAP @ IoU=0.5
    # Running on 10 images. Increase for better accuracy.
    image_ids = np.random.choice(dataset_val.image_ids, 10)
    APs = []
    for image_id in image_ids:
        # Load image and ground truth data
        image, image_meta, gt_class_id, gt_bbox, gt_mask = modellib.load_image_gt(dataset_val, inference_config,
                                                                                  image_id, use_mini_mask=False)
        molded_images = np.expand_dims(modellib.mold_image(image, inference_config), 0)
        # Run object detection
        results = model.detect([image], verbose=0)
        r = results[0]
        # Compute AP
        AP, precisions, recalls, overlaps = utils.compute_ap(gt_bbox, gt_class_id, gt_mask,
                                                             r["rois"], r["class_ids"], r["scores"], r['masks'])
        APs.append(AP)

    print("mAP: ", np.mean(APs))

    # In[ ]:
    """