TensorFlow 2.0 Officially Released: A Minimal Guide to Installing TF 2.0 (CPU & GPU)


Author | 小宋是呢

Reprinted from CSDN Blog

[Introduction] TensorFlow 2.0 was officially released in the early hours of yesterday.

Many users say TensorFlow 2.0 is easier to use than PyTorch, and they are getting ready to move over to the upgraded deep learning framework entirely.

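This article shows the simplest way to install the official TF 2.0 release (CPU and GPU). I've already stepped on the landmines so that you can try out TF 2.0 with minimal hassle.

Without further ado, let's get started.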

1 Environment Setup

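I'm working on Windows 10 and use conda to manage Python environments: CUDA and cuDNN (for GPU support) are installed through conda, and TensorFlow 2.0 through pip. In my testing this is the simplest installation method, and it requires no complicated environment configuration.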

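(Ubuntu and macOS users can follow the same approach. Because conda is cross-platform, it should work without major problems. If many readers run into issues, let me know in the comments and I'll write an Ubuntu installation guide later.)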

1.0 Setting Up conda

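conda is a very handy Python management tool that makes it easy to create and manage multiple Python environments. I'll also introduce some common conda commands in the steps below.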

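For conda, I recommend installing Miniconda, which you can think of as a slimmed-down Anaconda: it keeps only the essential components, so it installs much faster than Anaconda while still covering everything we need to manage Python environments. (Even on an SSD, Anaconda usually takes several GB of disk space and 1-2 hours to install, whereas Miniconda is typically a few hundred MB and installs in about 10 minutes.)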

I recommend downloading Miniconda from the Tsinghua mirror: https://mirrors.tuna.tsinghua.edu.cn/anaconda/miniconda/

Pick the version that matches your system:

Windows: https://mirrors.tuna.tsinghua.edu.cn/anaconda/miniconda/Miniconda3-4.7.10-Windows-x86_64.exe

Ubuntu: https://mirrors.tuna.tsinghua.edu.cn/anaconda/miniconda/Miniconda3-4.7.10-Linux-x86_64.sh

macOS: https://mirrors.tuna.tsinghua.edu.cn/anaconda/miniconda/Miniconda3-4.7.10-MacOSX-x86_64.pkg

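The following demonstrates installing Miniconda on Windows. Download the appropriate installer from the links above, then run it with administrator privileges and click through the installation.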

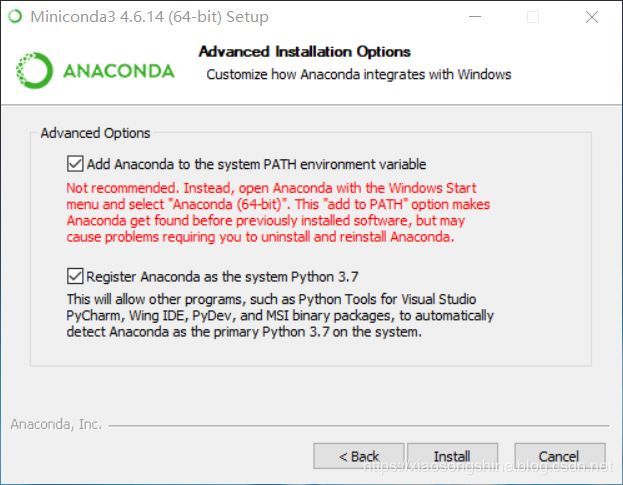

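Make sure both of these boxes are checked: the first lets you run conda commands directly from cmd, and the second registers Miniconda's bundled Python 3.7 as the system Python.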

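Once Miniconda is installed, you can use conda commands in cmd. To open cmd, press Windows+R, type cmd in the dialog that appears, and press Enter. You can also search for cmd in the Windows search box and run it from there.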

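Here are some common conda commands for cmd:

List conda environments: conda env list

Create a new conda environment (env_name is the environment's name; choose any name you like): conda create -n env_name

Activate a conda environment (on Ubuntu and macOS, older versions of conda use source activate env_name instead): conda activate env_name

Leave the current environment: conda deactivate

Install / uninstall a Python package: conda install numpy / conda uninstall numpy

List the packages installed in an environment: conda list -n env_name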

With these commands you're ready to create a conda environment and install TF 2.0.

1.1 Installing the TF 2.0 CPU Version

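The CPU version is relatively simple to install because no GPU configuration is needed, so the process is similar on Windows, Ubuntu, and macOS. The downside is slower execution, but it is fine for everyday learning.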

The demonstration below uses Windows; all of the following steps are run at the command line.

1.1.0 Create a TF 2.0 CPU environment (python=3.6 tells conda to install Python 3.6 in the new environment)

conda create -n TF_2C python=3.6

When prompted with Proceed ([y]/n)?, type y and press Enter.

Once it finishes, you can enter the environment.

1.1.1 Activate the TF_2C environment

conda activate TF_2C

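After activation, (TF_2C) appears in front of the prompt path, showing that you are inside this environment. Run conda deactivate to leave it.

Activate it again with conda activate TF_2C so that you can run the commands below.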
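If you are ever unsure whether a script is running under the TF_2C interpreter, you can check from inside Python. A minimal sketch, assuming conda sets the CONDA_DEFAULT_ENV environment variable to the active environment's name on activation, and that a named environment's interpreter lives in a folder called after it (e.g. .../envs/TF_2C); the helper names are hypothetical:

```python
import os
import sys
from typing import Mapping, Optional


def current_conda_env(environ: Mapping[str, str] = os.environ) -> Optional[str]:
    """Return the name of the active conda environment, or None if none is active."""
    # `conda activate` sets CONDA_DEFAULT_ENV to the environment name, e.g. "TF_2C".
    return environ.get("CONDA_DEFAULT_ENV")


def interpreter_env_dir(prefix: str = sys.prefix) -> str:
    """Return the last path component of the running interpreter's prefix."""
    # For a named conda environment, sys.prefix is typically <miniconda>/envs/<env_name>.
    return os.path.basename(os.path.normpath(prefix))


if __name__ == "__main__":
    print("active conda env:", current_conda_env())
    print("interpreter prefix:", sys.prefix)
    print("interpreter folder:", interpreter_env_dir())
```

If the two names disagree, the script was most likely started with a python from a different environment.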

1.1.2 Install the TF 2.0 CPU version (the -i option downloads from the Tsinghua PyPI mirror in China, which is much faster than the default index)

pip install tensorflow==2.0.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

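If your network connection is unreliable, run the command a few more times. After a while the installation finishes. Let's run a quick test.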
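The advice to re-run the command on a flaky network can be automated. A minimal sketch, assuming you start it with the environment's own interpreter so that `python -m pip` installs into TF_2C; `pip_install_with_retries` and its `attempts` parameter are hypothetical names, and the `run` argument only exists so the retry logic can be exercised without a network:

```python
import subprocess
import sys
from typing import Callable, List, Sequence

TUNA_INDEX = "https://pypi.tuna.tsinghua.edu.cn/simple"


def build_pip_command(packages: Sequence[str], index_url: str = TUNA_INDEX) -> List[str]:
    """Build `python -m pip install <packages> -i <index_url>` for the current interpreter."""
    return [sys.executable, "-m", "pip", "install", *packages, "-i", index_url]


def pip_install_with_retries(
    packages: Sequence[str],
    attempts: int = 3,
    index_url: str = TUNA_INDEX,
    run: Callable[..., subprocess.CompletedProcess] = subprocess.run,
) -> bool:
    """Run pip install up to `attempts` times; return True on the first success."""
    cmd = build_pip_command(packages, index_url)
    for attempt in range(1, attempts + 1):
        result = run(cmd)
        if result.returncode == 0:
            return True
        print(f"pip failed (attempt {attempt}/{attempts}), retrying...")
    return False
```

For example, calling `pip_install_with_retries(["tensorflow==2.0.0"])` from the TF_2C interpreter retries the CPU install; in the GPU environment the package would be `tensorflow-gpu==2.0.0`. pip's own `--retries` and `--timeout` options may already be enough for short network hiccups.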

1.1.3 Test the TF 2.0 CPU version (save the code below as demo.py and run it with the TF_2C environment's python)

import tensorflow as tf

version = tf.__version__             # installed TensorFlow version string
gpu_ok = tf.test.is_gpu_available()  # True if TensorFlow can use a GPU
print("tf version:", version, "\nuse GPU", gpu_ok)

If everything is working, the output looks like this: the TF version is 2.0.0, and because this is the CPU version, use GPU is False.

tf version: 2.0.0
use GPU False
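The demo script stops with an ImportError if the installation failed. A slightly more defensive sketch (helper names are hypothetical) first checks whether the `tensorflow` package can be found at all, then confirms that the installed release is 2.x:

```python
import importlib
import importlib.util
from typing import Tuple


def parse_version(version: str) -> Tuple[int, ...]:
    """Turn '2.0.0' into (2, 0, 0); trailing non-digits such as 'rc1' are ignored."""
    parts = []
    for piece in version.split("."):
        digits = ""
        for ch in piece:
            if not ch.isdigit():
                break
            digits += ch
        if not digits:
            break
        parts.append(int(digits))
    return tuple(parts)


def tensorflow_status() -> str:
    """Report whether TensorFlow is importable and whether it is a 2.x release."""
    if importlib.util.find_spec("tensorflow") is None:
        return "tensorflow is not installed in this environment"
    tf = importlib.import_module("tensorflow")
    if parse_version(tf.__version__)[:1] == (2,):
        return "tf version: " + tf.__version__ + " (TF 2.x)"
    return "tf version: " + tf.__version__ + " (not TF 2.x)"


if __name__ == "__main__":
    print(tensorflow_status())
```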

1.2 Installing the TF 2.0 GPU Version

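The GPU version is installed much like the CPU version, with one extra step to add GPU support. Let's go through it step by step. Before you start, make sure your computer has an NVIDIA GPU.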
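One quick way to confirm that an NVIDIA GPU and driver are present is the `nvidia-smi` tool that ships with the NVIDIA driver; `nvidia-smi -L` prints one line per GPU. A minimal sketch, assuming `nvidia-smi` is on PATH (on some Windows setups it may not be, in which case this returns an empty list even though a GPU exists); the helper names are hypothetical:

```python
import shutil
import subprocess
from typing import List


def parse_nvidia_smi_list(output: str) -> List[str]:
    """Keep only the 'GPU N: ...' lines from `nvidia-smi -L` output."""
    return [line.strip() for line in output.splitlines() if line.strip().startswith("GPU ")]


def list_nvidia_gpus() -> List[str]:
    """Return GPU description lines, or an empty list if nvidia-smi is unavailable or fails."""
    exe = shutil.which("nvidia-smi")
    if exe is None:
        return []
    try:
        # stdout=PIPE and universal_newlines keep this compatible with Python 3.6.
        result = subprocess.run([exe, "-L"], stdout=subprocess.PIPE,
                                universal_newlines=True, timeout=10)
    except (OSError, subprocess.TimeoutExpired):
        return []
    if result.returncode != 0:
        return []
    return parse_nvidia_smi_list(result.stdout)


if __name__ == "__main__":
    gpus = list_nvidia_gpus()
    print("\n".join(gpus) if gpus else "no NVIDIA GPU detected")
```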

1.2.0 Create a TF 2.0 GPU environment (python=3.6 tells conda to install Python 3.6 in the new environment)

conda create -n TF_2G python=3.6

When prompted with Proceed ([y]/n)?, type y and press Enter.

Once it finishes, you can enter the environment.

1.2.1 Activate the TF_2G environment

conda activate TF_2G

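1.2.2 Install GPU support. Windows machines with an NVIDIA GPU usually already have a driver installed, so you only need the cudatoolkit and cudnn packages. Note that you must install cudatoolkit version 10.0, the CUDA version TF 2.0 was built against. The cudatoolkit installed by conda lives inside the environment, but your NVIDIA driver must still be recent enough to support CUDA 10.0, so update the driver if it is older.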

conda install cudatoolkit=10.0 cudnn
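To confirm that conda really installed cudatoolkit 10.0 into TF_2G, you can read the output of `conda list --json`, which is a JSON array with one object per package including `name` and `version` fields. A minimal sketch, assuming `conda` is on PATH and TF_2G is the active environment; the helper names are hypothetical:

```python
import json
import shutil
import subprocess
from typing import Optional


def package_version(conda_list_json: str, name: str) -> Optional[str]:
    """Find `name` in `conda list --json` output and return its version string."""
    for pkg in json.loads(conda_list_json):
        if pkg.get("name") == name:
            return pkg.get("version")
    return None


def has_cuda_10_0(version: Optional[str]) -> bool:
    """TF 2.0 GPU builds expect CUDA 10.0, so accept '10.0' and '10.0.x' only."""
    return version is not None and (version == "10.0" or version.startswith("10.0."))


def check_active_env() -> None:
    conda = shutil.which("conda")
    if conda is None:
        print("conda not found on PATH")
        return
    result = subprocess.run([conda, "list", "--json"], stdout=subprocess.PIPE,
                            universal_newlines=True)
    if result.returncode != 0:
        print("conda list failed")
        return
    version = package_version(result.stdout, "cudatoolkit")
    print("cudatoolkit:", version, "OK" if has_cuda_10_0(version) else "(expected 10.0)")


if __name__ == "__main__":
    check_active_env()
```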

1.2.3 Install the TF 2.0 GPU version (the -i option downloads from the Tsinghua PyPI mirror in China, which is much faster than the default index)

pip install tensorflow-gpu==2.0.0 -i https://pypi.tuna.tsinghua.edu.cn/simple

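If your network connection is unreliable, run the command a few more times. After a while the installation finishes. Let's run a quick test.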

1.2.4 Test the TF 2.0 GPU version (save the code below as demo.py and run it with the TF_2G environment's python)

import tensorflow as tf

version = tf.__version__             # installed TensorFlow version string
gpu_ok = tf.test.is_gpu_available()  # True if TensorFlow can use a GPU
print("tf version:", version, "\nuse GPU", gpu_ok)

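If everything is working, the output looks like this: the TF version is 2.0.0, and because this is the GPU version, use GPU is True, which means the GPU version is installed correctly.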

tf version: 2.0.0
use GPU True
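`tf.test.is_gpu_available()` only returns a boolean, and later 2.x releases mark it as deprecated. To see which GPUs TensorFlow actually detects, TF 2.0 provides `tf.config.experimental.list_physical_devices("GPU")`. The sketch below wraps that call so it also runs where TensorFlow is not installed; the helper name is hypothetical:

```python
import importlib.util
from typing import List, Optional


def tensorflow_gpu_names() -> Optional[List[str]]:
    """Return the names of GPUs TensorFlow can see, or None if TensorFlow is not installed."""
    if importlib.util.find_spec("tensorflow") is None:
        return None
    import tensorflow as tf
    # Available as tf.config.experimental.list_physical_devices in TF 2.0;
    # later releases also expose tf.config.list_physical_devices.
    gpus = tf.config.experimental.list_physical_devices("GPU")
    return [gpu.name for gpu in gpus]


if __name__ == "__main__":
    names = tensorflow_gpu_names()
    if names is None:
        print("TensorFlow is not installed")
    else:
        print("GPUs visible to TensorFlow:", names or "none")
```

In the TF_2G environment this should list at least one device name such as a `/physical_device:GPU:0` entry; an empty list in that environment usually points back to the cudatoolkit/cudnn or driver step.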

1.3 Finally, let's test a linear regression example written in TF 2.0 style

Save the following code as main.py:

import tensorflow as tf

# Toy data: 2 samples with 3 features each, and their targets
X = tf.constant([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
y = tf.constant([[10.0], [20.0]])


class Linear(tf.keras.Model):
    def __init__(self):
        super().__init__()
        # One fully connected layer with no activation: y_pred = X @ kernel + bias
        self.dense = tf.keras.layers.Dense(
            units=1,
            activation=None,
            kernel_initializer=tf.zeros_initializer(),
            bias_initializer=tf.zeros_initializer()
        )

    def call(self, inputs):
        output = self.dense(inputs)
        return output


# Training loop: forward pass, MSE loss, gradients, SGD update
model = Linear()
optimizer = tf.keras.optimizers.SGD(learning_rate=0.01)
for i in range(100):
    with tf.GradientTape() as tape:
        y_pred = model(X)  # call the model instead of writing y_pred = a * X + b explicitly
        loss = tf.reduce_mean(tf.square(y_pred - y))
    grads = tape.gradient(loss, model.variables)  # model.variables gives all of the model's variables directly
    optimizer.apply_gradients(grads_and_vars=zip(grads, model.variables))
    if i % 10 == 0:
        print(i, loss.numpy())
print(model.variables)

The output is as follows:

0 250.0
10 0.73648137
20 0.6172349
30 0.5172956
40 0.4335389
50 0.36334264
60 0.3045124
70 0.25520816
80 0.2138865
90 0.17925593
[<tf.Variable ...>, <tf.Variable ...>]
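To see what the GradientTape loop computes, here is the same fit written out by hand in plain Python, with no TensorFlow: a linear model y_hat = Xw + b with zero-initialized w and b, mean-squared-error loss, and plain gradient descent with learning rate 0.01, mirroring `SGD(learning_rate=0.01)`. This is a sketch for understanding, not how TensorFlow implements it; it uses Python floats (float64) instead of TF's float32, so the printed losses need not match the output above digit for digit.

```python
from typing import List, Tuple

X = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]]
y = [10.0, 20.0]


def predict(w: List[float], b: float, rows: List[List[float]]) -> List[float]:
    """Linear model y_hat = X w + b, like Dense(units=1, activation=None)."""
    return [sum(wj * xj for wj, xj in zip(w, row)) + b for row in rows]


def mse(pred: List[float], target: List[float]) -> float:
    """Mean squared error, matching tf.reduce_mean(tf.square(y_pred - y))."""
    return sum((p - t) ** 2 for p, t in zip(pred, target)) / len(target)


def train(steps: int = 100, lr: float = 0.01) -> Tuple[List[float], float, List[float]]:
    """Plain gradient descent from zero init; returns (w, b, loss history)."""
    w = [0.0, 0.0, 0.0]  # like kernel_initializer=tf.zeros_initializer()
    b = 0.0              # like bias_initializer=tf.zeros_initializer()
    n = len(y)
    history = []
    for _ in range(steps):
        pred = predict(w, b, X)
        history.append(mse(pred, y))  # loss before this step's update, as printed in main.py
        err = [p - t for p, t in zip(pred, y)]
        # dL/dw_j = (2/n) * sum_i err_i * x_ij ;  dL/db = (2/n) * sum_i err_i
        grad_w = [2.0 / n * sum(e * row[j] for e, row in zip(err, X)) for j in range(len(w))]
        grad_b = 2.0 / n * sum(err)
        w = [wj - lr * gj for wj, gj in zip(w, grad_w)]
        b -= lr * grad_b
    return w, b, history


if __name__ == "__main__":
    w, b, history = train()
    for i in range(0, len(history), 10):
        print(i, history[i])
    print("w =", w, "b =", b)
```

Because both w and b start at zero, the first prediction is 0 for both samples, so the step-0 loss is (10² + 20²) / 2 = 250; after one update w = [0.9, 1.2, 1.5] and b = 0.3, and the loss drops to about 2.41.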

Original article: https://blog.csdn.net/xiaosongshine/article/details/101844926

(*This article was reprinted by AI科技大本营. Please contact the author before reposting.)
