Tensorflow Optimizer介绍

Tensorflow Optimizer介绍

- 1. 定义损失函数loss

- 2. 使用optimizer进行minimize损失函数loss

- (1) compute_gradients

- (2) apply_gradients

训练常规做法:

1. 定义损失函数loss

2. 使用optimizer进行minimize损失函数loss

minimize loss的由两部分组成:compute_gradients和apply_gradients

(1) compute_gradients

compute_gradients(

loss,

var_list=None,

gate_gradients=GATE_OP,

aggregation_method=None,

colocate_gradients_with_ops=False,

grad_loss=None

)

loss: 损失函数

var_list: 用于求梯度的变量

gate_gradients: 有三个可选项GATE_NONE,GATE_OP,GATE_GRAPH。GATE_NONE最高级别并发,代价是可能不可复现;GATE_OP在每个节点内部,计算完本节点全部梯度才更新参数,节点内部不并发,避免race condition;GATE_GRAPH最低级别并发,在计算好所有的梯度之后才更新参数

aggregation_method: 指定组合梯度的方法AggregationMethod

colocate_gradients_with_ops: 是否在多台gpu上并行计算梯度

grad_loss: Optional. A `Tensor` holding the gradient computed for `loss`.

(2) apply_gradients

apply_gradients(

grads_and_vars,

global_step=None,

name=None

)

grads_and_vars: List of (gradient, variable) pairs as returned by

`compute_gradients()`.

global_step: Optional `Variable` to increment by one after the

variables have been updated.

name: Optional name for the returned operation. Default to the

name passed to the `Optimizer` constructor.

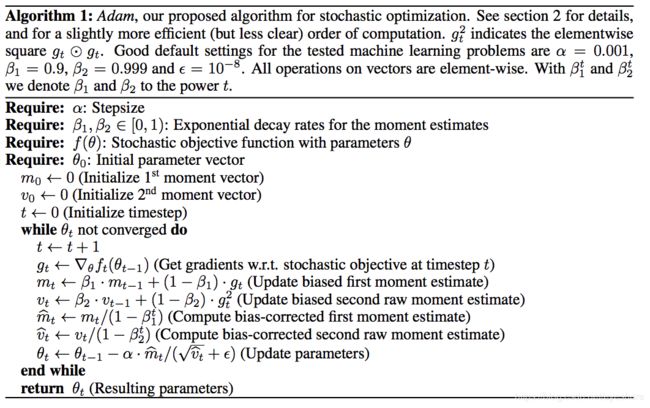

以AdamOptimizer为例:

原论文的伪代码:

Tensorflow实现:

l r t : = learning_rate ∗ ( 1 − β 2 t ) / ( 1 − β 1 t ) lr_t := \text{learning\_rate} * \sqrt{(1 - \beta_2^t) / (1 - \beta_1^t)} lrt:=learning_rate∗(1−β2t)/(1−β1t)

m t : = β 1 ∗ m t − 1 + ( 1 − β 1 ) ∗ g m_t := \beta_1 * m_{t-1} + (1 - \beta_1) * g mt:=β1∗mt−1+(1−β1)∗g

v t : = β 2 ∗ v t − 1 + ( 1 − β 2 ) ∗ g ∗ g v_t := \beta_2 * v_{t-1} + (1 - \beta_2) * g * g vt:=β2∗vt−1+(1−β2)∗g∗g

v a r i a b l e : = v a r i a b l e − l r t ∗ m t / ( v t + ϵ ) variable := variable - lr_t * m_t / (\sqrt{v_t} + \epsilon) variable:=variable−lrt∗mt/(vt+ϵ)

代码见:https://github.com/tensorflow/tensorflow/blob/master/tensorflow/python/training/adam.py

稠密向量的操作:

def _apply_dense(self, grad, var):

m = self.get_slot(var, "m")# optiminzer额外参数加入_non_slot_dict

v = self.get_slot(var, "v")

beta1_power, beta2_power = self._get_beta_accumulators() # 获取t次方

return training_ops.apply_adam(

var,

m,

v,

math_ops.cast(beta1_power, var.dtype.base_dtype),

math_ops.cast(beta2_power, var.dtype.base_dtype),

math_ops.cast(self._lr_t, var.dtype.base_dtype),

math_ops.cast(self._beta1_t, var.dtype.base_dtype),

math_ops.cast(self._beta2_t, var.dtype.base_dtype),

math_ops.cast(self._epsilon_t, var.dtype.base_dtype),

grad,

use_locking=self._use_locking).op

def _finish(self, update_ops, name_scope):

# Update the power accumulators.

with ops.control_dependencies(update_ops):

beta1_power, beta2_power = self._get_beta_accumulators()

with ops.colocate_with(beta1_power):

update_beta1 = beta1_power.assign(

beta1_power * self._beta1_t, use_locking=self._use_locking)

update_beta2 = beta2_power.assign(

beta2_power * self._beta2_t, use_locking=self._use_locking)

return control_flow_ops.group(

*update_ops + [update_beta1, update_beta2], name=name_scope)

其中beta1_power表示 β 1 t \beta_1^t β1t,beta2_power表示 β 2 t \beta_2^t β2t,t次方的实现在_finish函数中。

apply_adam具体实现代码见:https://github.com/tensorflow/tensorflow/blob/master/tensorflow/core/kernels/training_ops.cc

template <typename T>

struct ApplyAdam<CPUDevice, T> : ApplyAdamNonCuda<CPUDevice, T> {};

template <typename T>

struct ApplyAdamWithAmsgrad<CPUDevice, T> {

void operator()(const CPUDevice& d, typename TTypes<T>::Flat var,

typename TTypes<T>::Flat m, typename TTypes<T>::Flat v,

typename TTypes<T>::Flat vhat,

typename TTypes<T>::ConstScalar beta1_power,

typename TTypes<T>::ConstScalar beta2_power,

typename TTypes<T>::ConstScalar lr,

typename TTypes<T>::ConstScalar beta1,

typename TTypes<T>::ConstScalar beta2,

typename TTypes<T>::ConstScalar epsilon,

typename TTypes<T>::ConstFlat grad) {

const T alpha = lr() * Eigen::numext::sqrt(T(1) - beta2_power()) /

(T(1) - beta1_power());

m.device(d) += (grad - m) * (T(1) - beta1()); # 更新m

v.device(d) += (grad.square() - v) * (T(1) - beta2()); # 更新v

vhat.device(d) = vhat.cwiseMax(v);

var.device(d) -= (m * alpha) / (vhat.sqrt() + epsilon()); # 更新参数

}

};

稀疏向量的操作:

def _apply_sparse_shared(self, grad, var, indices, scatter_add):

beta1_power, beta2_power = self._get_beta_accumulators() # 获取t次方

beta1_power = math_ops.cast(beta1_power, var.dtype.base_dtype)

beta2_power = math_ops.cast(beta2_power, var.dtype.base_dtype)

lr_t = math_ops.cast(self._lr_t, var.dtype.base_dtype)

beta1_t = math_ops.cast(self._beta1_t, var.dtype.base_dtype)

beta2_t = math_ops.cast(self._beta2_t, var.dtype.base_dtype)

epsilon_t = math_ops.cast(self._epsilon_t, var.dtype.base_dtype)

lr = (lr_t * math_ops.sqrt(1 - beta2_power) / (1 - beta1_power))

# m_t = beta1 * m + (1 - beta1) * g_t

m = self.get_slot(var, "m")

m_scaled_g_values = grad * (1 - beta1_t)

m_t = state_ops.assign(m, m * beta1_t, use_locking=self._use_locking)

with ops.control_dependencies([m_t]):

m_t = scatter_add(m, indices, m_scaled_g_values)

# v_t = beta2 * v + (1 - beta2) * (g_t * g_t)

v = self.get_slot(var, "v")

v_scaled_g_values = (grad * grad) * (1 - beta2_t)

v_t = state_ops.assign(v, v * beta2_t, use_locking=self._use_locking)

with ops.control_dependencies([v_t]):

v_t = scatter_add(v, indices, v_scaled_g_values)

v_sqrt = math_ops.sqrt(v_t)

var_update = state_ops.assign_sub(

var, lr * m_t / (v_sqrt + epsilon_t), use_locking=self._use_locking)

return control_flow_ops.group(*[var_update, m_t, v_t])

def _finish(self, update_ops, name_scope):

# Update the power accumulators.

with ops.control_dependencies(update_ops):

beta1_power, beta2_power = self._get_beta_accumulators()

with ops.colocate_with(beta1_power):

update_beta1 = beta1_power.assign(

beta1_power * self._beta1_t, use_locking=self._use_locking)

update_beta2 = beta2_power.assign(

beta2_power * self._beta2_t, use_locking=self._use_locking)

return control_flow_ops.group(

*update_ops + [update_beta1, update_beta2], name=name_scope)

返回更新参数操作op的group(包括slot和non_slot)。

Bert实现AdamWeightDecayOptimizer的时候少了偏差纠正项,加入正则项。

def apply_gradients(self, grads_and_vars, global_step=None, name=None):

"""See base class."""

assignments = []

for (grad, param) in grads_and_vars:

if grad is None or param is None:

continue

param_name = self._get_variable_name(param.name)

m = tf.get_variable(

name=param_name + "/adam_m",

shape=param.shape.as_list(),

dtype=tf.float32,

trainable=False,

initializer=tf.zeros_initializer())

v = tf.get_variable(

name=param_name + "/adam_v",

shape=param.shape.as_list(),

dtype=tf.float32,

trainable=False,

initializer=tf.zeros_initializer())

# Standard Adam update.

next_m = (

tf.multiply(self.beta_1, m) + tf.multiply(1.0 - self.beta_1, grad))

next_v = (

tf.multiply(self.beta_2, v) + tf.multiply(1.0 - self.beta_2,

tf.square(grad)))

update = next_m / (tf.sqrt(next_v) + self.epsilon)

# Just adding the square of the weights to the loss function is *not*

# the correct way of using L2 regularization/weight decay with Adam,

# since that will interact with the m and v parameters in strange ways.

#

# Instead we want ot decay the weights in a manner that doesn't interact

# with the m/v parameters. This is equivalent to adding the square

# of the weights to the loss with plain (non-momentum) SGD.

if self._do_use_weight_decay(param_name):

update += self.weight_decay_rate * param

update_with_lr = self.learning_rate * update

next_param = param - update_with_lr

assignments.extend(

[param.assign(next_param),

m.assign(next_m),

v.assign(next_v)])

return tf.group(*assignments, name=name)