A Brief Introduction to the Support Vector Machine (SVM) Implementation in OpenCV 3.3 and How to Use It

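OpenCV 3.3 provides an implementation of Support Vector Machines (SVM) in the cv::ml::SVM class. The class is declared in include/opencv2/ml.hpp and implemented in modules/ml/src/svm.cpp. It supports two-class classification, multi-class classification, and regression. The OpenCV implementation is derived from the libsvm library. In particular: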

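(1) cv::ml::SVM class: inherits from cv::ml::StatModel, which in turn inherits from cv::Algorithm;

(2) create: a static function that constructs an SVMImpl to create an SVM object;

(3) setType/getType: set/get the SVM formulation type, one of C_SVC, NU_SVC, ONE_CLASS, EPS_SVR, NU_SVR, which selects classification, regression, and so on; the default is C_SVC;

(4) setGamma/getGamma: set/get the γ parameter of the kernel function; the default is 1;

(5) setCoef0/getCoef0: set/get the coef0 parameter of the kernel function; the default is 0;

(6) setDegree/getDegree: set/get the degree parameter of the kernel function; the default is 0;

(7) setC/getC: set/get the C parameter of the SVM optimization problem; the default is 0;

(8) setNu/getNu: set/get the ν parameter of the SVM optimization problem; the default is 0;

(9) setP/getP: set/get the ε parameter of the SVM optimization problem; the default is 0;

(10) setClassWeights/getClassWeights: used with SVM::C_SVC, set/get the per-class weights; the default is an empty cv::Mat;

(11) setTermCriteria/getTermCriteria: set/get the termination criteria of the iterative SVM training; the default is cv::TermCriteria(cv::TermCriteria::MAX_ITER + cv::TermCriteria::EPS, 1000, FLT_EPSILON);

(12) setKernel/getKernelType: set/get the SVM kernel type, one of CUSTOM, LINEAR, POLY, RBF, SIGMOID, CHI2, INTER; the default is RBF;

(13) setCustomKernel: initializes the CUSTOM kernel;

(14) trainAuto: trains the SVM with optimal parameters, which it selects by k-fold cross-validation over parameter grids;

(15) getSupportVectors/getUncompressedSupportVectors: retrieve all support vectors; for a linear SVM, the second one returns the original support vectors from which the compressed vector used for prediction was derived;

(16) getDecisionFunction: retrieves the decision function;

(17) getDefaultGrid/getDefaultGridPtr: generate a grid for an SVM parameter;

(18) save/load: save/load a trained model; the xml, yaml, and json formats are supported;

(19) train/predict: used for training/prediction; both come from the base class StatModel.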
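To make the roles of γ, coef0, and degree in items (4) to (6) and the kernel types in item (12) more concrete, here is a minimal sketch of the kernel formulas as they are given in the OpenCV documentation. It is written in plain standard C++ and does not call OpenCV; the names `Vec`, `dot`, and `kernel_*` are hypothetical helpers introduced only for illustration, and OpenCV's internal implementation may differ in numerical details.

```cpp
#include <algorithm>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper type and functions for illustration; not part of OpenCV.
using Vec = std::vector<double>;

// Dot product of two vectors (assumed to have the same length).
double dot(const Vec& x, const Vec& y) {
    double s = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) s += x[i] * y[i];
    return s;
}

// LINEAR: K(x, y) = x . y
double kernel_linear(const Vec& x, const Vec& y) {
    return dot(x, y);
}

// POLY: K(x, y) = (gamma * x . y + coef0)^degree
double kernel_poly(const Vec& x, const Vec& y, double gamma, double coef0, double degree) {
    return std::pow(gamma * dot(x, y) + coef0, degree);
}

// RBF: K(x, y) = exp(-gamma * ||x - y||^2)
double kernel_rbf(const Vec& x, const Vec& y, double gamma) {
    double d2 = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) d2 += (x[i] - y[i]) * (x[i] - y[i]);
    return std::exp(-gamma * d2);
}

// SIGMOID: K(x, y) = tanh(gamma * x . y + coef0)
double kernel_sigmoid(const Vec& x, const Vec& y, double gamma, double coef0) {
    return std::tanh(gamma * dot(x, y) + coef0);
}

// CHI2: K(x, y) = exp(-gamma * sum_i (x_i - y_i)^2 / (x_i + y_i))
// Terms with a zero denominator are skipped in this sketch.
double kernel_chi2(const Vec& x, const Vec& y, double gamma) {
    double chi2 = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) {
        const double denom = x[i] + y[i];
        if (denom != 0.0) chi2 += (x[i] - y[i]) * (x[i] - y[i]) / denom;
    }
    return std::exp(-gamma * chi2);
}

// INTER (histogram intersection): K(x, y) = sum_i min(x_i, y_i)
double kernel_inter(const Vec& x, const Vec& y) {
    double s = 0.0;
    for (std::size_t i = 0; i < x.size(); ++i) s += std::min(x[i], y[i]);
    return s;
}
```

One consequence of these formulas: with degree left at 0, the POLY expression evaluates to 1 for any input, so degree presumably needs to be set explicitly before a polynomial kernel is useful.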

For a basic introduction to support vector machines, see: http://blog.csdn.net/fengbingchun/article/details/78326704

The test code below covers both train and predict:

#include "opencv.hpp"
#include <cstdio>
#include <iostream>
#include <string>
#include <vector>
#include <opencv2/opencv.hpp>
#include "common.hpp"

/////////////////////////////////// Support Vector Machines ///////////////////////////

int test_opencv_svm_train()
{
    // two-class classification
    const std::vector<int> labels{ 1, -1, -1, -1 };
    const std::vector<std::vector<float>> trainingData{ { 501, 10 }, { 255, 10 }, { 501, 255 }, { 10, 501 } };
    const int feature_length{ 2 };
    const int samples_count{ (int)trainingData.size() };
    CHECK(labels.size() == trainingData.size());

    // flatten the samples into one row-major buffer, one sample per row
    std::vector<float> data(samples_count * feature_length, 0.f);
    for (int i = 0; i < samples_count; ++i) {
        for (int j = 0; j < feature_length; ++j) {
            data[i*feature_length + j] = trainingData[i][j];
        }
    }

    // both matrices wrap the existing buffers without copying them
    cv::Mat trainingDataMat(samples_count, feature_length, CV_32FC1, data.data());
    cv::Mat labelsMat(samples_count, 1, CV_32SC1, const_cast<int*>(labels.data()));

    cv::Ptr<cv::ml::SVM> svm = cv::ml::SVM::create();
    svm->setType(cv::ml::SVM::C_SVC);
    svm->setKernel(cv::ml::SVM::LINEAR);
    svm->setTermCriteria(cv::TermCriteria(cv::TermCriteria::MAX_ITER, 100, 1e-6));

    CHECK(svm->train(trainingDataMat, cv::ml::ROW_SAMPLE, labelsMat));

    const std::string save_file{ "E:/GitCode/NN_Test/data/svm_model.xml" }; // .xml, .yaml, .json
    svm->save(save_file);

    return 0;
}
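The flattening step in the training function (copying nested sample vectors into one contiguous row-major buffer that a cv::Mat can wrap) can also be written as a small reusable helper. The following is a sketch in plain standard C++; `flatten_rows` is a hypothetical name that does not appear in the original code, and the length check is an added assumption about how malformed input should be handled.

```cpp
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical helper for illustration; not part of OpenCV or the original code.
// Copies nested samples into one contiguous row-major buffer (one sample per row),
// rejecting any sample whose length differs from feature_length.
std::vector<float> flatten_rows(const std::vector<std::vector<float>>& samples,
                                std::size_t feature_length) {
    std::vector<float> data;
    data.reserve(samples.size() * feature_length);
    for (const auto& row : samples) {
        if (row.size() != feature_length)
            throw std::invalid_argument("sample has an unexpected feature length");
        data.insert(data.end(), row.begin(), row.end());
    }
    return data;
}
```

The returned buffer has the same layout as `data` in test_opencv_svm_train, so it could be passed to the cv::Mat constructor in the same way, provided the buffer outlives the matrix that wraps it.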

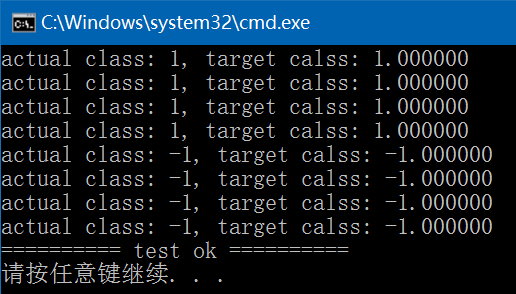

int test_opencv_svm_predict()
{
    const std::string model_file{ "E:/GitCode/NN_Test/data/svm_model.xml" };
    const std::vector<int> labels{ 1, 1, 1, 1, -1, -1, -1, -1 };
    const std::vector<std::vector<float>> predictData{ { 490.f, 15.f }, { 480.f, 30.f }, { 511.f, 40.f }, { 473.f, 50.f },
        { 2.f, 490.f }, { 100.f, 200.f }, { 247.f, 223.f }, { 510.f, 400.f } };
    const int feature_length{ 2 };
    const int predict_count{ (int)predictData.size() };
    CHECK(labels.size() == predictData.size());

    cv::Ptr<cv::ml::SVM> svm = cv::ml::SVM::load(model_file);

    for (int i = 0; i < predict_count; ++i) {
        // one sample per call: a 1 x 2 row of floats
        cv::Mat predictMat = (cv::Mat_<float>(1, 2) << predictData[i][0], predictData[i][1]);
        float response = svm->predict(predictMat);

        fprintf(stdout, "expected class: %d, predicted class: %f\n", labels[i], response);
    }

    return 0;
}
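The prediction loop prints each expected label next to the predicted response. To summarize such a run as a single number, the two can be compared and counted. The sketch below uses plain standard C++; `accuracy` is a hypothetical helper, and rounding the float response to the nearest integer before comparing is an assumption that fits integer class labels such as 1 and -1.

```cpp
#include <cmath>
#include <cstddef>
#include <stdexcept>
#include <vector>

// Hypothetical helper for illustration; not part of OpenCV or the original code.
// Returns the fraction of responses that match the expected integer labels.
double accuracy(const std::vector<int>& expected, const std::vector<float>& responses) {
    if (expected.empty() || expected.size() != responses.size())
        throw std::invalid_argument("inputs must be non-empty and of equal size");
    std::size_t correct = 0;
    for (std::size_t i = 0; i < expected.size(); ++i) {
        // Round the float response to the nearest integer class label.
        if (static_cast<int>(std::lround(responses[i])) == expected[i]) ++correct;
    }
    return static_cast<double>(correct) / static_cast<double>(expected.size());
}
```

In test_opencv_svm_predict, the responses could be collected into a std::vector<float> inside the loop and passed to this helper together with `labels` after the loop finishes.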

int test_opencv_svm_simple()
{
    // two-class classification
    // reference: opencv-3.3.0/samples/cpp/tutorial_code/ml/introduction_to_svm/introduction_to_svm.cpp
    const int width{ 512 }, height{ 512 };
    cv::Mat image = cv::Mat::zeros(height, width, CV_8UC3);

    const int labels[]{ 1, -1, -1, -1 };
    const float trainingData[][2]{ { 501, 10 }, { 255, 10 }, { 501, 255 }, { 10, 501 } };

    cv::Mat trainingDataMat(4, 2, CV_32FC1, (float*)trainingData);
    cv::Mat labelsMat(4, 1, CV_32SC1, (int*)labels);

    cv::Ptr<cv::ml::SVM> svm = cv::ml::SVM::create();
    svm->setType(cv::ml::SVM::C_SVC);
    svm->setKernel(cv::ml::SVM::LINEAR);
    svm->setTermCriteria(cv::TermCriteria(cv::TermCriteria::MAX_ITER, 100, 1e-6));

    svm->train(trainingDataMat, cv::ml::ROW_SAMPLE, labelsMat);

    // Show the decision regions given by the SVM: classify every pixel (x = j, y = i)
    cv::Vec3b green(0, 255, 0), blue(255, 0, 0);
    for (int i = 0; i < image.rows; ++i) {
        for (int j = 0; j < image.cols; ++j) {
            cv::Mat sampleMat = (cv::Mat_<float>(1, 2) << j, i);
            float response = svm->predict(sampleMat);

            if (response == 1)
                image.at<cv::Vec3b>(i, j) = green;
            else if (response == -1)
                image.at<cv::Vec3b>(i, j) = blue;
        }
    }

    // Show the training data: class 1 in black, class -1 in white
    int thickness{ -1 };
    int lineType{ 8 };
    cv::circle(image, cv::Point(501, 10), 5, cv::Scalar(0, 0, 0), thickness, lineType);
    cv::circle(image, cv::Point(255, 10), 5, cv::Scalar(255, 255, 255), thickness, lineType);
    cv::circle(image, cv::Point(501, 255), 5, cv::Scalar(255, 255, 255), thickness, lineType);
    cv::circle(image, cv::Point(10, 501), 5, cv::Scalar(255, 255, 255), thickness, lineType);

    // Show support vectors
    thickness = 2;
    lineType = 8;
    cv::Mat sv = svm->getUncompressedSupportVectors();

    for (int i = 0; i < sv.rows; ++i) {
        const float* v = sv.ptr<float>(i);
        cv::circle(image, cv::Point((int)v[0], (int)v[1]), 6, cv::Scalar(128, 128, 128), thickness, lineType);
    }

    cv::imwrite("E:/GitCode/NN_Test/data/result_svm_simple.png", image);
    cv::imshow("SVM Simple Example", image);
    cv::waitKey(0);

    return 0;
}

int test_opencv_svm_non_linear()
{
    // two-class classification
    // reference: opencv-3.3.0/samples/cpp/tutorial_code/ml/non_linear_svms/non_linear_svms.cpp
    const int NTRAINING_SAMPLES{ 100 }; // Number of training samples per class
    const float FRAC_LINEAR_SEP{ 0.9f }; // Fraction of samples which compose the linearly separable part

    // Data for visual representation
    const int WIDTH{ 512 }, HEIGHT{ 512 };
    cv::Mat I = cv::Mat::zeros(HEIGHT, WIDTH, CV_8UC3);

    // Set up training data randomly
    cv::Mat trainData(2 * NTRAINING_SAMPLES, 2, CV_32FC1);
    cv::Mat labels(2 * NTRAINING_SAMPLES, 1, CV_32SC1);

    cv::RNG rng(100); // Random value generation class

    // Set up the linearly separable part of the training data
    int nLinearSamples = (int)(FRAC_LINEAR_SEP * NTRAINING_SAMPLES);

    // Generate random points for the class 1
    cv::Mat trainClass = trainData.rowRange(0, nLinearSamples);
    // The x coordinate of the points is in [0, 0.4)
    cv::Mat c = trainClass.colRange(0, 1);
    rng.fill(c, cv::RNG::UNIFORM, cv::Scalar(1), cv::Scalar(0.4 * WIDTH));
    // The y coordinate of the points is in [0, 1)
    c = trainClass.colRange(1, 2);
    rng.fill(c, cv::RNG::UNIFORM, cv::Scalar(1), cv::Scalar(HEIGHT));

    // Generate random points for the class 2
    trainClass = trainData.rowRange(2 * NTRAINING_SAMPLES - nLinearSamples, 2 * NTRAINING_SAMPLES);
    // The x coordinate of the points is in [0.6, 1]
    c = trainClass.colRange(0, 1);
    rng.fill(c, cv::RNG::UNIFORM, cv::Scalar(0.6*WIDTH), cv::Scalar(WIDTH));
    // The y coordinate of the points is in [0, 1)
    c = trainClass.colRange(1, 2);
    rng.fill(c, cv::RNG::UNIFORM, cv::Scalar(1), cv::Scalar(HEIGHT));

    // Set up the non-linearly separable part of the training data, as in the
    // referenced OpenCV sample; without it these rows would stay uninitialized
    // Generate random points for the classes 1 and 2
    trainClass = trainData.rowRange(nLinearSamples, 2 * NTRAINING_SAMPLES - nLinearSamples);
    // The x coordinate of the points is in [0.4, 0.6)
    c = trainClass.colRange(0, 1);
    rng.fill(c, cv::RNG::UNIFORM, cv::Scalar(0.4*WIDTH), cv::Scalar(0.6*WIDTH));
    // The y coordinate of the points is in [0, 1)
    c = trainClass.colRange(1, 2);
    rng.fill(c, cv::RNG::UNIFORM, cv::Scalar(1), cv::Scalar(HEIGHT));

    // Set up the labels for the classes
    labels.rowRange(0, NTRAINING_SAMPLES).setTo(1); // Class 1
    labels.rowRange(NTRAINING_SAMPLES, 2 * NTRAINING_SAMPLES).setTo(2); // Class 2

    // Train the svm
    std::cout << "Starting training process" << std::endl;

    // init, Set up the support vector machines parameters
    cv::Ptr<cv::ml::SVM> svm = cv::ml::SVM::create();
    svm->setType(cv::ml::SVM::C_SVC);
    svm->setC(0.1);
    svm->setKernel(cv::ml::SVM::LINEAR);
    svm->setTermCriteria(cv::TermCriteria(cv::TermCriteria::MAX_ITER, (int)1e7, 1e-6));

    svm->train(trainData, cv::ml::ROW_SAMPLE, labels);
    std::cout << "Finished training process" << std::endl;

    // Show the decision regions; here i is the x coordinate and j is the y coordinate
    cv::Vec3b green(0, 100, 0), blue(100, 0, 0);
    for (int i = 0; i < I.rows; ++i) {
        for (int j = 0; j < I.cols; ++j) {
            cv::Mat sampleMat = (cv::Mat_<float>(1, 2) << i, j);
            float response = svm->predict(sampleMat);

            if (response == 1) I.at<cv::Vec3b>(j, i) = green;
            else if (response == 2) I.at<cv::Vec3b>(j, i) = blue;
        }
    }

    // Show the training data
    int thick = -1;
    int lineType = 8;
    float px, py;
    // Class 1
    for (int i = 0; i < NTRAINING_SAMPLES; ++i) {
        px = trainData.at<float>(i, 0);
        py = trainData.at<float>(i, 1);
        cv::circle(I, cv::Point((int)px, (int)py), 3, cv::Scalar(0, 255, 0), thick, lineType);
    }
    // Class 2
    for (int i = NTRAINING_SAMPLES; i < 2 * NTRAINING_SAMPLES; ++i) {
        px = trainData.at<float>(i, 0);
        py = trainData.at<float>(i, 1);
        cv::circle(I, cv::Point((int)px, (int)py), 3, cv::Scalar(255, 0, 0), thick, lineType);
    }

    // Show support vectors
    thick = 2;
    lineType = 8;
    cv::Mat sv = svm->getUncompressedSupportVectors();

    for (int i = 0; i < sv.rows; ++i) {
        const float* v = sv.ptr<float>(i);
        cv::circle(I, cv::Point((int)v[0], (int)v[1]), 6, cv::Scalar(128, 128, 128), thick, lineType);
    }

    cv::imwrite("E:/GitCode/NN_Test/data/result_svm_non_linear.png", I);
    cv::imshow("SVM for Non-Linear Training Data", I);
    cv::waitKey(0);

    return 0;
}

GitHub: https://github.com/fengbingchun/NN_Test