A simple HDFS HA deployment using JournalNode

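Building on the earlier Hadoop cluster, this post experiments with NameNode high availability using JournalNode shared edits. Only HDFS is configured here; YARN, ZooKeeper, and the other components are not involved.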

The nodes are hadoop, hadoop1, and hadoop2. In the previous cluster the NameNode ran on hadoop; here hadoop1 and hadoop2 become the Active and Standby NameNodes, sharing edits through JournalNodes.

On top of the existing cluster, change the following:

hadoop@hadoop:~$ cd /opt/ha/hadoop-2.7.2/

hadoop@hadoop:hadoop-2.7.2$ gedit etc/hadoop/core-site.xml

fs.defaultFS = hdfs://hadoop1:9000

hadoop.tmp.dir = /opt/ha/hadoop-2.7.2/data/tmp
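The listing above is the flattened content of Hadoop's usual `<configuration>`/`<property>` XML. As a minimal sketch, the hypothetical shell function `write_core_site` (not part of Hadoop) writes those two properties in XML form into a directory you pass it. It overwrites any existing core-site.xml there, so back that file up first. It keeps the author's fs.defaultFS value; the Apache HDFS HA guide instead points clients at the logical nameservice (hdfs://cluster here) and sets dfs.client.failover.proxy.provider.cluster so that clients can follow a failover.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not part of Hadoop): writes core-site.xml containing
# the two properties listed above.
# Usage: write_core_site <conf-dir>
write_core_site() {
  local dir="$1"
  mkdir -p "$dir"
  # Quoted 'EOF' keeps the XML literal (no shell expansion inside).
  cat > "$dir/core-site.xml" <<'EOF'
<?xml version="1.0" encoding="UTF-8"?>
<configuration>
  <property>
    <name>fs.defaultFS</name>
    <value>hdfs://hadoop1:9000</value>
  </property>
  <property>
    <name>hadoop.tmp.dir</name>
    <value>/opt/ha/hadoop-2.7.2/data/tmp</value>
  </property>
</configuration>
EOF
}
```

Usage, assuming the install path from this post: `write_core_site /opt/ha/hadoop-2.7.2/etc/hadoop`.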

hadoop@hadoop:hadoop-2.7.2$ gedit etc/hadoop/hdfs-site.xml

dfs.namenode.name.dir = file:///opt/ha/hadoop-2.7.2/data/dfs/name

dfs.datanode.data.dir = file:///opt/ha/hadoop-2.7.2/data/dfs/data

dfs.webhdfs.enabled = true

dfs.nameservices = cluster

dfs.ha.namenodes.cluster = nn1,nn2

dfs.namenode.rpc-address.cluster.nn1 = hadoop1:9000

dfs.namenode.rpc-address.cluster.nn2 = hadoop2:9000

dfs.namenode.http-address.cluster.nn1 = hadoop1:50070

dfs.namenode.http-address.cluster.nn2 = hadoop2:50070

dfs.namenode.shared.edits.dir = qjournal://hadoop:8485;hadoop1:8485;hadoop2:8485/cluster

dfs.ha.automatic-failover.enabled = false

dfs.journalnode.edits.dir = /opt/ha/hadoop-2.7.2/data/journal
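Likewise, a hypothetical `write_hdfs_site` function can emit the hdfs-site.xml properties listed above as XML. The small `prop` helper prints one `<property>` element; the qjournal URI is single-quoted because an unquoted `;` would end the shell command. Run it against a copy of the config directory, or back up the original file first.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of Hadoop) that write hdfs-site.xml
# containing exactly the properties listed above.

# Print one <property> element: prop <name> <value>
prop() {
  printf '  <property>\n    <name>%s</name>\n    <value>%s</value>\n  </property>\n' "$1" "$2"
}

# Usage: write_hdfs_site <conf-dir>
write_hdfs_site() {
  local dir="$1"
  mkdir -p "$dir"
  {
    echo '<?xml version="1.0" encoding="UTF-8"?>'
    echo '<configuration>'
    prop dfs.namenode.name.dir file:///opt/ha/hadoop-2.7.2/data/dfs/name
    prop dfs.datanode.data.dir file:///opt/ha/hadoop-2.7.2/data/dfs/data
    prop dfs.webhdfs.enabled true
    # Logical name of the HA pair and the IDs of its two NameNodes.
    prop dfs.nameservices cluster
    prop dfs.ha.namenodes.cluster nn1,nn2
    prop dfs.namenode.rpc-address.cluster.nn1 hadoop1:9000
    prop dfs.namenode.rpc-address.cluster.nn2 hadoop2:9000
    prop dfs.namenode.http-address.cluster.nn1 hadoop1:50070
    prop dfs.namenode.http-address.cluster.nn2 hadoop2:50070
    # JournalNode quorum on all three hosts; the trailing /cluster is the journal ID.
    prop dfs.namenode.shared.edits.dir 'qjournal://hadoop:8485;hadoop1:8485;hadoop2:8485/cluster'
    # No ZKFC: switching between NameNodes is done by hand with haadmin.
    prop dfs.ha.automatic-failover.enabled false
    prop dfs.journalnode.edits.dir /opt/ha/hadoop-2.7.2/data/journal
    echo '</configuration>'
  } > "$dir/hdfs-site.xml"
}
```

Usage: `write_hdfs_site /opt/ha/hadoop-2.7.2/etc/hadoop`, then copy the same file to hadoop1 and hadoop2 so all nodes share one configuration.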

hadoop@hadoop:hadoop-2.7.2$ gedit etc/hadoop/hadoop-env.sh

export HADOOP_PREFIX=/opt/ha/hadoop-2.7.2

hadoop@hadoop:hadoop-2.7.2$ gedit etc/hadoop/slaves

hadoop

hadoop1

hadoop2
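Every host in slaves is also a JournalNode host in the qjournal URI, so the JournalNodes for the next step can be launched from one machine. Hadoop's own `sbin/hadoop-daemons.sh start journalnode` does this over SSH; the hypothetical loop below spells out the same idea, assuming passwordless SSH and the same install path on all hosts. The `SSH` variable is an illustrative override hook for dry runs, not a Hadoop setting.

```shell
#!/usr/bin/env bash
# Hypothetical helper: start a JournalNode on every host listed in a slaves file.
# Assumes passwordless SSH and the same HADOOP_PREFIX on every host.
HADOOP_PREFIX="${HADOOP_PREFIX:-/opt/ha/hadoop-2.7.2}"

# Usage: start_journalnodes <slaves-file>
start_journalnodes() {
  local slaves_file="$1" host
  while read -r host; do
    # Skip blank lines and comments.
    case "$host" in ''|'#'*) continue ;; esac
    # </dev/null stops ssh from reading (and swallowing) the rest of the file.
    ${SSH:-ssh} "$host" "cd $HADOOP_PREFIX && sbin/hadoop-daemon.sh start journalnode" </dev/null
  done < "$slaves_file"
}
```

Usage: `start_journalnodes etc/hadoop/slaves`. Each JournalNode's log should still be checked afterwards, as described below.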

Startup and verification

1 Start a JournalNode on every node

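Check the logs: sometimes a daemon appears to start, but its log contains errors, which means it did not really come up. Using hadoop2 as an example: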

[hadoop@hadoop2 hadoop-2.7.2]$ sbin/hadoop-daemon.sh start journalnode

starting journalnode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-journalnode-hadoop2.out

[hadoop@hadoop2 hadoop-2.7.2]$ jps

2018 JournalNode

2071 Jps
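The advice above (a JournalNode listed by jps may still have failed) can be turned into a quick check. This hypothetical `check_journalnode` function treats the daemon as healthy only if jps shows it and its log has no ERROR or FATAL lines. It assumes Hadoop's default log4j line layout (timestamp, level, class) and the log path used in this post.

```shell
#!/usr/bin/env bash
# Hypothetical check: a JournalNode counts as "up" only if jps lists it
# AND its log has no ERROR/FATAL lines.
# Usage: check_journalnode <log-file>
check_journalnode() {
  local log="$1"
  if ! jps | grep -qw JournalNode; then
    echo "JournalNode process not running" >&2
    return 1
  fi
  if [ ! -r "$log" ]; then
    echo "log not found: $log" >&2
    return 1
  fi
  # Default log4j lines look like "<date> <time>,<ms> LEVEL class: message".
  if grep -E ' (ERROR|FATAL) ' "$log" >&2; then
    echo "JournalNode is running but its log contains errors" >&2
    return 1
  fi
  echo "JournalNode OK"
}
```

Usage on hadoop2: `check_journalnode logs/hadoop-hadoop-journalnode-hadoop2.log`.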

[hadoop@hadoop2 hadoop-2.7.2]$ gedit logs/hadoop-hadoop-journalnode-hadoop2.log

Once the JournalNodes are running cleanly, format the NameNode on hadoop1 (nn1):

[hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs namenode -format

(output omitted)

Re-format filesystem in Storage Directory /opt/ha/hadoop-2.7.2/data/dfs/name ? (Y or N) Y

16/05/18 15:36:16 INFO namenode.FSImage: Allocated new BlockPoolId: BP-999551788-192.168.2.10-1463556976410

16/05/18 15:36:16 INFO common.Storage: Storage directory /opt/ha/hadoop-2.7.2/data/dfs/name has been successfully formatted.

16/05/18 15:36:16 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

16/05/18 15:36:16 INFO util.ExitUtil: Exiting with status 0

16/05/18 15:36:16 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop1/192.168.2.10

************************************************************/

[hadoop@hadoop1 hadoop-2.7.2]$ sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-namenode-hadoop1.out

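On hadoop2, initialize nn2 from nn1's metadata with -bootstrapStandby rather than formatting it separately (nn1 must already be running):

[hadoop@hadoop2 hadoop-2.7.2]$ bin/hdfs namenode -bootstrapStandby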

(output omitted)

Re-format filesystem in Storage Directory /opt/ha/hadoop-2.7.2/data/dfs/name ? (Y or N) Y

[hadoop@hadoop2 hadoop-2.7.2]$ sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-namenode-hadoop2.out

[hadoop@hadoop2 hadoop-2.7.2]$ jps

2018 JournalNode

2236 Jps

2159 NameNode

[hadoop@hadoop2 hadoop-2.7.2]$ gedit logs/hadoop-hadoop-journalnode-hadoop2.log
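The order of the NameNode steps above matters: the JournalNodes must be up before nn1 is formatted, and nn1 must be running before nn2 runs -bootstrapStandby, which copies nn1's metadata instead of formatting nn2 independently. The hypothetical script below records that order. It assumes passwordless SSH from the machine running it and the same install path on every host; `DRY_RUN=1` prints the commands instead of executing them.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of the NameNode initialization order used above.
# Assumes JournalNodes already run on all three hosts and passwordless SSH.
HADOOP_PREFIX="${HADOOP_PREFIX:-/opt/ha/hadoop-2.7.2}"

# on <host> <command...>: run a command inside HADOOP_PREFIX on a host.
on() {
  local host="$1"; shift
  if [ -n "${DRY_RUN:-}" ]; then
    echo "[$host] $*"
  else
    # -t keeps a terminal so the "(Y or N)" prompts can be answered.
    ssh -t "$host" "cd $HADOOP_PREFIX && $*"
  fi
}

init_ha_namenodes() {
  # 1. Format nn1 once (with qjournal configured this also formats the journals).
  on hadoop1 bin/hdfs namenode -format
  # 2. Start nn1 so that the standby can fetch its metadata.
  on hadoop1 sbin/hadoop-daemon.sh start namenode
  # 3. Copy nn1's namespace to nn2 instead of formatting nn2.
  on hadoop2 bin/hdfs namenode -bootstrapStandby
  # 4. Start nn2; with automatic failover off, both come up as standby.
  on hadoop2 sbin/hadoop-daemon.sh start namenode
}
```

Running `DRY_RUN=1 init_ha_namenodes` first is a cheap way to review the plan before touching a cluster.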

[hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs dfsadmin -report

16/05/18 15:59:43 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

report: Operation category READ is not supported in state standby

[hadoop@hadoop1 hadoop-2.7.2]$

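Because automatic failover is disabled, both NameNodes start in standby state, so one of them has to be switched to active by hand before hdfs commands work: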

[hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs haadmin -transitionToActive nn1

16/05/18 16:04:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs dfsadmin -report

16/05/18 16:05:43 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Configured Capacity: 0 (0 B)

Present Capacity: 0 (0 B)

DFS Remaining: 0 (0 B)

DFS Used: 0 (0 B)

DFS Used%: NaN%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

[hadoop@hadoop1 hadoop-2.7.2]$

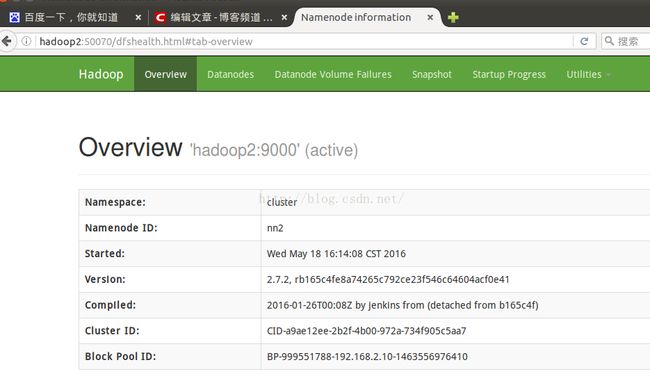

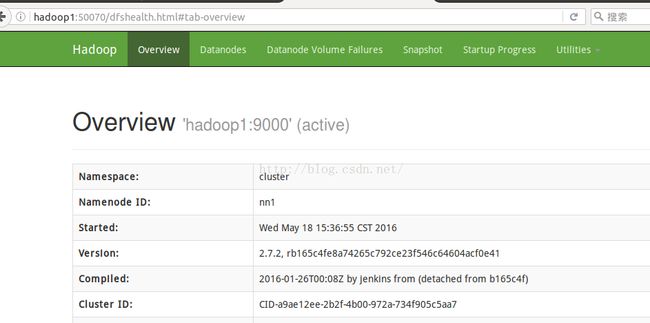

Opening the web UI of both NameNodes (hadoop1:50070 and hadoop2:50070) likewise shows that nn1 is now active.
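The same check can be done from the command line with `hdfs haadmin -getServiceState`, which prints active or standby for a NameNode ID. A hypothetical wrapper that reports which of nn1/nn2 is active, assuming `$HADOOP_PREFIX/bin` is on PATH:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the ID of the active NameNode (nn1 or nn2).
# Relies on `hdfs haadmin -getServiceState <id>` printing "active"/"standby";
# warnings such as the NativeCodeLoader message go to stderr and are dropped.
active_namenode() {
  local nn state
  for nn in nn1 nn2; do
    state=$(hdfs haadmin -getServiceState "$nn" 2>/dev/null)
    if [ "$state" = "active" ]; then
      echo "$nn"
      return 0
    fi
  done
  echo "no active NameNode" >&2
  return 1
}
```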

Start the DataNodes from hadoop1 (hadoop-daemons.sh starts one on every host in slaves), and start YARN on hadoop:

[hadoop@hadoop1 hadoop-2.7.2]$ sbin/hadoop-daemons.sh start datanode

hadoop1: starting datanode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-datanode-hadoop1.out

hadoop2: starting datanode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-datanode-hadoop2.out

hadoop: starting datanode, logging to /opt/ha/hadoop-2.7.2/logs/hadoop-hadoop-datanode-hadoop.out

[hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs dfsadmin -report

16/05/18 16:08:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Configured Capacity: 130101776384 (121.17 GB)

Present Capacity: 96697389056 (90.06 GB)

DFS Remaining: 96697315328 (90.06 GB)

DFS Used: 73728 (72 KB)

DFS Used%: 0.00%

Under replicated blocks: 0

Blocks with corrupt replicas: 0

Missing blocks: 0

Missing blocks (with replication factor 1): 0

-------------------------------------------------

Live datanodes (3):

Name: 192.168.2.3:50010 (hadoop)

Hostname: hadoop

Decommission Status : Normal

Configured Capacity: 97124175872 (90.45 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 24880119808 (23.17 GB)

DFS Remaining: 72244031488 (67.28 GB)

DFS Used%: 0.00%

DFS Remaining%: 74.38%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Wed May 18 16:08:57 CST 2016

Name: 192.168.2.11:50010 (hadoop2)

Hostname: hadoop2

Decommission Status : Normal

Configured Capacity: 16488800256 (15.36 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 4261191680 (3.97 GB)

DFS Remaining: 12227584000 (11.39 GB)

DFS Used%: 0.00%

DFS Remaining%: 74.16%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Wed May 18 16:08:57 CST 2016

Name: 192.168.2.10:50010 (hadoop1)

Hostname: hadoop1

Decommission Status : Normal

Configured Capacity: 16488800256 (15.36 GB)

DFS Used: 24576 (24 KB)

Non DFS Used: 4263075840 (3.97 GB)

DFS Remaining: 12225699840 (11.39 GB)

DFS Used%: 0.00%

DFS Remaining%: 74.15%

Configured Cache Capacity: 0 (0 B)

Cache Used: 0 (0 B)

Cache Remaining: 0 (0 B)

Cache Used%: 100.00%

Cache Remaining%: 0.00%

Xceivers: 1

Last contact: Wed May 18 16:08:57 CST 2016

[hadoop@hadoop1 hadoop-2.7.2]$

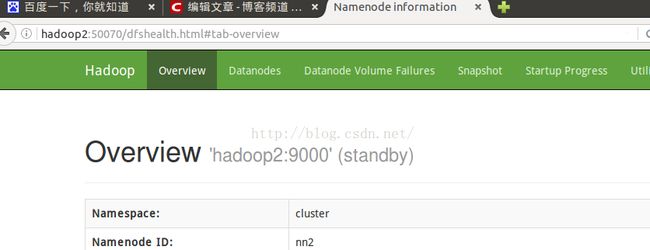

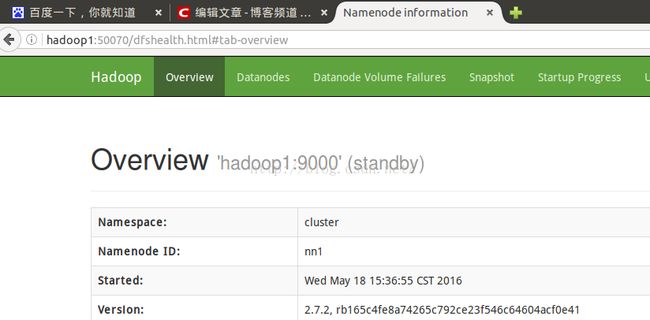
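To script this verification instead of reading the whole report, the `Live datanodes (N):` line can be extracted. This is a hypothetical sketch that relies on the report format shown above (Hadoop 2.7.2) and assumes `hdfs` is on PATH. The first report, taken before any DataNode had started, has no such line, so the count comes back empty and the check fails.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print N from the "Live datanodes (N):" line of
# `hdfs dfsadmin -report` (prints nothing if the line is absent).
live_datanodes() {
  hdfs dfsadmin -report 2>/dev/null | sed -n 's/^Live datanodes (\([0-9][0-9]*\)):$/\1/p'
}

# Succeed only if at least <want> DataNodes have registered.
expect_datanodes() {
  local want="$1" got
  got=$(live_datanodes)
  [ -n "$got" ] && [ "$got" -ge "$want" ]
}
```

Usage after starting the three DataNodes: `expect_datanodes 3 && echo "all DataNodes registered"`.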

The two NameNodes can be switched manually with haadmin:

Usage: haadmin

`[-transitionToActive [--forceactive] <serviceId>]`

`[-transitionToStandby <serviceId>]`

`[-failover [--forcefence] [--forceactive] <serviceId> <serviceId>]`

`[-getServiceState <serviceId>]`

`[-checkHealth <serviceId>]`

`[-help <command>]`

[hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs haadmin -transitionToStandby nn1

16/05/18 16:20:59 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[hadoop@hadoop1 hadoop-2.7.2]$ bin/hdfs haadmin -transitionToActive nn2

16/05/18 16:24:32 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[hadoop@hadoop1 hadoop-2.7.2]$

Checking again shows that nn1 is now standby; after activating nn2 with the second command, the active role has moved to nn2.
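The two haadmin calls above can be wrapped so that a script works out which NameNode is currently active and moves the role to the other one. A hypothetical sketch, assuming `hdfs` is on PATH and automatic failover stays disabled as in this configuration; it demotes the active NameNode before promoting the other, matching the order used above. The `-failover` subcommand from the usage list does a similar switch in one call, but it may depend on fencing configuration (dfs.ha.fencing.methods), which this post does not set.

```shell
#!/usr/bin/env bash
# Hypothetical helper: move the active role from the current active
# NameNode to the other one, using only transitionToStandby/transitionToActive.
swap_active() {
  local from to
  if [ "$(hdfs haadmin -getServiceState nn1 2>/dev/null)" = "active" ]; then
    from=nn1; to=nn2
  elif [ "$(hdfs haadmin -getServiceState nn2 2>/dev/null)" = "active" ]; then
    from=nn2; to=nn1
  else
    echo "no active NameNode found" >&2
    return 1
  fi
  # Demote first so this script never leaves two NameNodes active at once.
  hdfs haadmin -transitionToStandby "$from" || return 1
  hdfs haadmin -transitionToActive "$to" || return 1
  echo "active: $from -> $to"
}
```

Calling `swap_active` twice returns the active role to the NameNode that held it originally.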