Sqoop installation

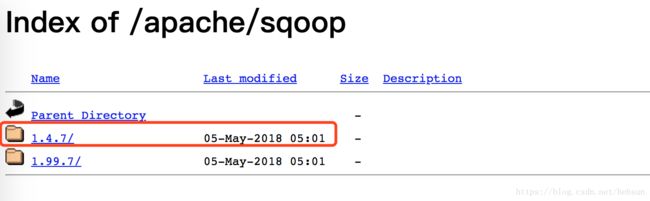

Download location:

http://mirror.bit.edu.cn/apache/sqoop/

The project does not recommend Sqoop2 (1.99.7) for production use, so I'm using Sqoop1, version 1.4.7.

I grabbed a prebuilt binary because I didn't feel like compiling it myself.

Extract sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz and rename the resulting directory to sqoop-1.4.7 (I like to keep the version number).
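If you'd rather script the unpack-and-rename step, here's a minimal sketch. `unpack_sqoop` is a hypothetical helper name. It assumes the tarball's top-level directory has the same name as the file minus `.tar.gz`. If you're not sure, check with `tar -tzf` first.

```shell
# Hypothetical helper: unpack a Sqoop binary tarball, then rename the
# extracted directory to sqoop-<version> (dropping the ".bin__hadoop-x.y.z" part).
# Assumes the tarball's top-level directory matches the file name minus ".tar.gz".
unpack_sqoop() {
  tarball="$1"                          # e.g. sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz
  dest="${2:-.}"                        # where to unpack; defaults to the current directory
  name=$(basename "$tarball" .tar.gz)   # -> sqoop-1.4.7.bin__hadoop-2.6.0
  short="${name%%.bin__*}"              # -> sqoop-1.4.7
  if [ -e "$dest/$short" ]; then
    echo "$dest/$short already exists, not overwriting" >&2
    return 1
  fi
  tar -xzf "$tarball" -C "$dest" || return 1
  mv "$dest/$name" "$dest/$short"
}
```

Usage: `unpack_sqoop sqoop-1.4.7.bin__hadoop-2.6.0.tar.gz "$HOME"`. This leaves you with `$HOME/sqoop-1.4.7`.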

Set up the environment variables. How you do this depends on your system. I'm on a Mac, so:

vi ~/.bash_profile

Add the following lines:

export SQOOP_HOME=/Users/your-username/sqoop-1.4.7

export PATH=$PATH:$SQOOP_HOME/bin

Don't forget to run source ~/.bash_profile afterwards.
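To double-check that the variables took effect, here's a small sketch. The `check_sqoop_home` name is hypothetical. It verifies that `SQOOP_HOME` points at a directory containing an executable `bin/sqoop` and that `$SQOOP_HOME/bin` is on `PATH`.

```shell
# Hypothetical helper: confirm SQOOP_HOME points at an unpacked Sqoop
# and that its bin directory is on PATH.
check_sqoop_home() {
  if [ -z "${SQOOP_HOME:-}" ]; then
    echo "SQOOP_HOME is not set" >&2
    return 1
  fi
  if [ ! -x "$SQOOP_HOME/bin/sqoop" ]; then
    echo "no executable bin/sqoop under $SQOOP_HOME" >&2
    return 1
  fi
  # Wrap PATH in colons so the first and last entries match too.
  case ":$PATH:" in
    *":$SQOOP_HOME/bin:"*) return 0 ;;
    *) echo "$SQOOP_HOME/bin is not on PATH" >&2; return 1 ;;
  esac
}
```

After `source ~/.bash_profile`, run `check_sqoop_home && sqoop version`.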

Next, edit the configuration files in $SQOOP_HOME/conf.

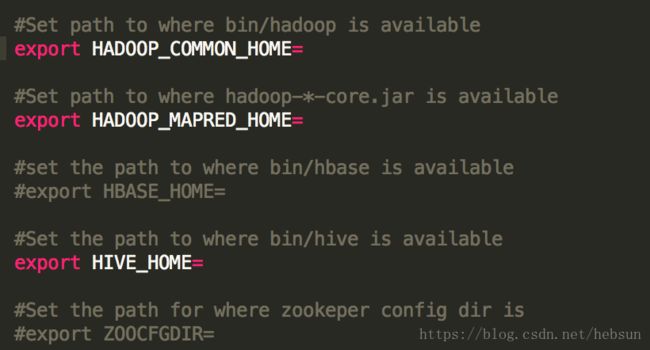

Rename sqoop-env-template.sh to sqoop-env.sh.

Edit it and set the installation paths of your Hadoop and Hive.
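For reference, the relevant lines in sqoop-env.sh look roughly like this. The variable names are the ones in sqoop-env-template.sh. The paths are placeholders, so swap in your own Hadoop and Hive install locations.

```shell
# sqoop-env.sh (fragment). The paths below are placeholders;
# replace them with your own install locations.

# Directory that contains bin/hadoop
export HADOOP_COMMON_HOME=/usr/local/hadoop

# Directory that contains the Hadoop MapReduce jars (usually the same Hadoop install)
export HADOOP_MAPRED_HOME=/usr/local/hadoop

# Directory that contains bin/hive
export HIVE_HOME=/usr/local/hive
```

If you don't use HBase or ZooKeeper, you can leave HBASE_HOME and ZOOCFGDIR unset. Sqoop just prints a warning about them at startup.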

Get a MySQL JDBC connector jar and put it in Sqoop's lib directory.
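Here's a quick way to confirm the driver is where Sqoop will look for it. `find_mysql_connector` is a hypothetical helper. It assumes the jar uses the usual `mysql-connector-java-<version>.jar` naming.

```shell
# Hypothetical helper: print the MySQL JDBC driver jar(s) in $SQOOP_HOME/lib.
# Returns non-zero if none is found.
find_mysql_connector() {
  found=1
  for jar in "$SQOOP_HOME"/lib/mysql-connector-java-*.jar; do
    [ -e "$jar" ] || continue   # the glob stays literal when nothing matches
    echo "$jar"
    found=0
  done
  return "$found"
}
```

For example: `cp mysql-connector-java-5.1.47.jar "$SQOOP_HOME/lib/"` (the version number is only an example), then `find_mysql_connector`.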

Once that's configured, test an import.

Importing a table from MySQL into the Hive data warehouse

Create a database and a table in MySQL. Any database and table you already have will do. This is just a test.

I already had some tables set up, so I'm using one of them as test data.

CREATE TABLE `user_account` (

`ac_no` int(11) NOT NULL DEFAULT '0',

`user_id` varchar(30) DEFAULT NULL,

`user_pswd` varchar(30) DEFAULT NULL,

`date` datetime DEFAULT NULL,

`no_of_visit` int(11) DEFAULT NULL,

`no_of_trnsc` int(11) DEFAULT NULL,

`trnsc_amt` int(11) DEFAULT NULL,

PRIMARY KEY (`ac_no`)

) ENGINE=InnoDB DEFAULT CHARSET=latin1;

Insert some test data:

insert into user_account values(125,'a123','pp284','2011-04-14',5,6,100);

insert into user_account values(126,'a124','rr999','2012-06-13',2,3,1000);

insert into user_account values(127,'a125','ab888','2010-07-15',3,9,5000);

insert into user_account values(128,'a126','bb900','2016-05-15',1,8,7000);

insert into user_account values(129,'a127','rt007','2012-07-14',4,5,4000);

insert into user_account values(130,'a128','ss008','2013-03-15',6,2,8000);

Importing into HDFS and Hive with Sqoop

sqoop import --connect jdbc:mysql://localhost:3306/ecom --username 'root' --password '123456' --table 'user_account' -m 1 --hive-import --fields-terminated-by '#'
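Here's the same command split to one option per line, with notes on what each flag does. This is a POSIX-sh sketch with one deliberate swap: it replaces `--password '123456'` with `-P`, which makes Sqoop prompt for the password so it doesn't end up in your shell history. It only runs if `sqoop` is on PATH.

```shell
# Store the import arguments as positional parameters so they can be inspected or reused.
set -- import \
  --connect jdbc:mysql://localhost:3306/ecom \
  --username root \
  -P \
  --table user_account \
  -m 1 \
  --hive-import \
  --fields-terminated-by '#'
# -P                          prompt for the password on the console
# -m 1                        a single map task, so Sqoop does not need to split the table by a column
# --hive-import               after writing to HDFS, load the data into a Hive table
#                             (named after the source table by default)
# --fields-terminated-by '#'  field delimiter for the HDFS files and the generated Hive table
if command -v sqoop >/dev/null 2>&1; then
  sqoop "$@"
else
  echo "sqoop not found on PATH; would run: sqoop $*" >&2
fi
```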

Run it.

2018-10-17 10:57:06,373 INFO hive.HiveImport: Loading uploaded data into Hive

2018-10-17 10:57:06,377 ERROR hive.HiveConfig: Could not load org.apache.hadoop.hive.conf.HiveConf. Make sure HIVE_CONF_DIR is set correctly.

2018-10-17 10:57:06,378 ERROR tool.ImportTool: Import failed: java.io.IOException: java.lang.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf

at org.apache.sqoop.hive.HiveConfig.getHiveConf(HiveConfig.java:50)

at org.apache.sqoop.hive.HiveImport.getHiveArgs(HiveImport.java:392)

at org.apache.sqoop.hive.HiveImport.executeExternalHiveScript(HiveImport.java:379)

at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:337)

at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:241)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:537)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.hive.conf.HiveConf

at java.net.URLClassLoader.findClass(URLClassLoader.java:381)

at java.lang.ClassLoader.loadClass(ClassLoader.java:424)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:331)

at java.lang.ClassLoader.loadClass(ClassLoader.java:357)

at java.lang.Class.forName0(Native Method)

at java.lang.Class.forName(Class.java:264)

at org.apache.sqoop.hive.HiveConfig.getHiveConf(HiveConfig.java:44)

... 12 more

It fails because Sqoop can't find Hive's org.apache.hadoop.hive.conf.HiveConf class on the classpath.

The fix is to put Hive's jars on Hadoop's classpath:

export HADOOP_CLASSPATH="$HADOOP_CLASSPATH:$HIVE_HOME/lib/*"
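To make the fix stick, put it in ~/.bash_profile next to SQOOP_HOME. Below is a sketch of an idempotent version (the function name is hypothetical). It quotes the path so the shell leaves the `*` alone for Java's classpath wildcard. It also avoids a leading `:` when HADOOP_CLASSPATH starts out empty, and it assumes HIVE_HOME is already set.

```shell
# Hypothetical helper: append Hive's jars to HADOOP_CLASSPATH exactly once.
add_hive_to_hadoop_classpath() {
  if [ -z "${HIVE_HOME:-}" ]; then
    echo "HIVE_HOME is not set" >&2
    return 1
  fi
  hive_jars="$HIVE_HOME/lib/*"   # literal '*': Java expands it as a classpath wildcard
  case ":${HADOOP_CLASSPATH:-}:" in
    *":$hive_jars:"*) ;;         # already present, nothing to do
    *) HADOOP_CLASSPATH="${HADOOP_CLASSPATH:+$HADOOP_CLASSPATH:}$hive_jars" ;;
  esac
  export HADOOP_CLASSPATH
}
```

Call `add_hive_to_hadoop_classpath` from ~/.bash_profile after HIVE_HOME is exported.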

Running it again produces another error:

main ERROR Could not register mbeans java.security.AccessControlException: access denied ("javax.management.MBeanTrustPermission" "register")

at java.security.AccessControlContext.checkPermission(AccessControlContext.java:472)

at java.lang.SecurityManager.checkPermission(SecurityManager.java:585)

at com.sun.jmx.interceptor.DefaultMBeanServerInterceptor.checkMBeanTrustPermission(DefaultMBeanServerInterceptor.java:1848)

at com.sun.jmx.interceptor.DefaultMBeanServerInterceptor.registerMBean(DefaultMBeanServerInterceptor.java:322)

at com.sun.jmx.mbeanserver.JmxMBeanServer.registerMBean(JmxMBeanServer.java:522)

at org.apache.logging.log4j.core.jmx.Server.register(Server.java:389)

at org.apache.logging.log4j.core.jmx.Server.reregisterMBeansAfterReconfigure(Server.java:167)

at org.apache.logging.log4j.core.jmx.Server.reregisterMBeansAfterReconfigure(Server.java:140)

at org.apache.logging.log4j.core.LoggerContext.setConfiguration(LoggerContext.java:556)

at org.apache.logging.log4j.core.LoggerContext.start(LoggerContext.java:261)

at org.apache.logging.log4j.core.async.AsyncLoggerContext.start(AsyncLoggerContext.java:87)

at org.apache.logging.log4j.core.impl.Log4jContextFactory.getContext(Log4jContextFactory.java:240)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:158)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:131)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:101)

at org.apache.logging.log4j.core.config.Configurator.initialize(Configurator.java:188)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jDefault(LogUtils.java:173)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:106)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4jCommon(LogUtils.java:98)

at org.apache.hadoop.hive.common.LogUtils.initHiveLog4j(LogUtils.java:81)

at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:699)

at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:683)

at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)

at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:62)

at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)

at java.lang.reflect.Method.invoke(Method.java:498)

at org.apache.sqoop.hive.HiveImport.executeScript(HiveImport.java:331)

at org.apache.sqoop.hive.HiveImport.importTable(HiveImport.java:241)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:537)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:628)

at org.apache.sqoop.Sqoop.run(Sqoop.java:147)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:76)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:183)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:234)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:243)

at org.apache.sqoop.Sqoop.main(Sqoop.java:252)

Despite the error, a quick check shows the data was imported.
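This second error comes from log4j2 inside the Hive CLI. It fails to register its JMX MBeans because a security manager denies the permission, as the SecurityManager.checkPermission frame in the trace shows. It doesn't stop the load. A commonly cited workaround is to grant `javax.management.MBeanTrustPermission "register"` in the JDK's `java.policy`. Here's a sketch. `grant_mbean_register` is a hypothetical name, and the policy path assumes a JDK 8 layout (`$JAVA_HOME/jre/lib/security/java.policy`).

```shell
# Hypothetical helper: add an MBeanTrustPermission grant to a java.policy file once.
grant_mbean_register() {
  policy_file="$1"   # e.g. $JAVA_HOME/jre/lib/security/java.policy (JDK 8 layout, assumed)
  if [ ! -f "$policy_file" ]; then
    echo "policy file not found: $policy_file" >&2
    return 1
  fi
  if grep -q 'javax.management.MBeanTrustPermission "register"' "$policy_file"; then
    return 0   # grant already present
  fi
  cp "$policy_file" "$policy_file.bak" || return 1   # keep a backup before editing a JDK file
  cat >> "$policy_file" <<'EOF'

grant {
    permission javax.management.MBeanTrustPermission "register";
};
EOF
}
```

Usage: `grant_mbean_register "$JAVA_HOME/jre/lib/security/java.policy"`. You need write access to the JDK directory for this.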