k8s-dns-gateway: gateway network extension in practice

DNS and network extension in practice

*k8s-dns-gateway: DNS gateway network extension in practice*

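Kubernetes services can be exposed in several ways:

1. NodePort service: the drawback is that a listening port is opened on every node, and you have to remember the port number.

2. Ingress (HTTP, port 80): traffic has to come in through a domain name.

3. TCP/UDP ingress: exposing a TCP or UDP port means configuring an ingress LB, one rule at a time, which is tedious. You have to plan the LB nodes in advance, and clients still have to connect to the LB port.

With an ordinary virtual machine, by contrast, an address plus a port is all we need to connect.

So can we reach services in a k8s cluster the same way we reach a VM? Yes. The architecture below connects the k8s network directly to the physical network, and lets hosts on the physical network resolve names through the k8s DNS service, so both sides can reach each other directly.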

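The environment is as follows:

k8s cluster (pod) network: 172.1.0.0/16

k8s service network: 172.1.0.0/16

Physical network: 192.168.0.0/16. From it we want to reach a service inside k8s; mysql-read is the svc we will access: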

[root@master3 etc]# kubectl get svc -o wide|egrep 'NAME|mysql'

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

mysql None 3306/TCP 8h app=mysql

mysql-read 172.1.86.83 3306/TCP 8h app=mysql

[root@master3 etc]#

k8s cluster nodes:

[root@master3 etc]# kubectl get cs -o wide

NAME STATUS MESSAGE ERROR

controller-manager Healthy ok

scheduler Healthy ok

etcd-2 Healthy {"health": "true"}

etcd-0 Healthy {"health": "true"}

etcd-1 Healthy {"health": "true"}

[root@master3 etc]# kubectl get node -o wide

NAME STATUS AGE VERSION EXTERNAL-IP OS-IMAGE KERNEL-VERSION

jenkins-2 Ready 14d v1.6.4 CentOS Linux 7 (Core) 4.4.71-1.el7.elrepo.x86_64

node1.txg.com Ready 14d v1.6.4 CentOS Linux 7 (Core) 4.4.71-1.el7.elrepo.x86_64

node2.txg.com Ready 14d v1.6.4 CentOS Linux 7 (Core) 4.4.71-1.el7.elrepo.x86_64

node3.txg.com Ready 14d v1.6.4 CentOS Linux 7 (Core) 4.4.71-1.el7.elrepo.x86_64

node4.txg.com Ready 14d v1.6.4 CentOS Linux 7 (Core) 3.10.0-514.6.2.el7.x86_64

[root@master3 etc]#

[root@master3 etc]# kubectl get pod --all-namespaces -o wide |egrep 'NAME|node|mysql|udp'

NAMESPACE NAME READY STATUS RESTARTS AGE IP NODE

default cnetos6-7-rc-xv12x 1/1 Running 0 7d 172.1.201.225 node3.txg.com

default kibana-logging-3543001115-v52kn 1/1 Running 0 12d 172.1.26.70 node1.txg.com

default mysql-0 2/2 Running 0 10h 172.1.201.236 node3.txg.com

default mysql-1 2/2 Running 0 10h 172.1.24.230 jenkins-2

default mysql-2 2/2 Running 1 10h 172.1.160.213 node4.txg.com

default nfs-client-provisioner-278618947-wr97r 1/1 Running 0 12d 172.1.201.201 node3.txg.com

kube-system calico-node-5r37q 2/2 Running 3 14d 192.168.2.72 jenkins-2

kube-system calico-node-hldk2 2/2 Running 2 14d 192.168.2.68 node3.txg.com

kube-system calico-node-pjdj8 2/2 Running 4 14d 192.168.2.69 node4.txg.com

kube-system calico-node-rqkm9 2/2 Running 2 14d 192.168.1.68 node1.txg.com

kube-system calico-node-zqkxd 2/2 Running 0 14d 192.168.1.69 node2.txg.com

kube-system heapster-v1.3.0-1076354760-6kn4m 4/4 Running 4 13d 172.1.160.199 node4.txg.com

kube-system kube-dns-474739028-26gds 3/3 Running 3 14d 172.1.160.198 node4.txg.com

kube-system nginx-udp-ingress-controller-c0m04 1/1 Running 0 1d 192.168.2.72 jenkins-2

[root@master3 etc]#

The k8s certificates and kubeconfig files are as follows:

[root@node3 kubernetes]# ls

bootstrap.kubeconfig kubelet.kubeconfig kube-proxy.kubeconfig ssl token.csv

[root@node3 kubernetes]#

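Role: border gateway router, 192.168.2.71, hostname jenkins-1 (the hostname is up to you)

Role: border DNS proxy server, 192.168.2.72, hostname jenkins-2 (the hostname is up to you)

How the architecture works:

192.168.0.0/16 # the physical network initiates access to a k8s service and port, by domain name or directly over TCP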

'

'

mysql-read.default.svc.cluster.local # the full domain name being requested: the svc name plus the namespace the service lives in

'

'

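192.168.2.72 # the DNS proxy runs on this node as an ingress-udp pod, listening on UDP port 53

' # it is the bridge that lets the physical network resolve names through k8s-dns

' # pin this deployment to one fixed node; every external host sets its DNS server to 192.168.2.72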

'

172.1.86.83 # the svc's actual ClusterIP is returned

'

'

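192.168.2.71 # border gateway linking the physical network to the k8s cluster; kernel forwarding is enabled with net.ipv4.ip_forward=1

' # every external physical host adds a static route, so traffic to the k8s 172.1.0.0/16 network must pass through gateway 192.168.2.71

' # route add -net 172.1.0.0 netmask 255.255.0.0 gw 192.168.2.71

' # the border gateway runs kube-proxy, which syncs the firewall rules that distribute svc traffic; it does not run kubelet and is not managed by k8s

calico or flannel interface # this is a physical node that runs only the calico or flannel service

'

k8s cluster network # traffic finally reaches the k8s cluster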
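To make the flow above concrete, here is a minimal Python sketch of the two decisions an external host makes: which name to ask for, and which next hop to use for the answer. It is only an illustration and not part of the deployment; the function names `service_fqdn` and `next_hop` are hypothetical, and returning the string "direct" for non-cluster addresses is a simplification of whatever default route the host already has.

```python
import ipaddress

# Values taken from the environment described in this article.
CLUSTER_CIDR = ipaddress.ip_network("172.1.0.0/16")
GATEWAY = "192.168.2.71"


def service_fqdn(name: str, namespace: str = "default",
                 cluster_domain: str = "cluster.local") -> str:
    """Build the full in-cluster DNS name of a Service."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


def next_hop(dest_ip: str) -> str:
    """Return the border gateway for cluster addresses, 'direct' otherwise.

    Mirrors the static route: 172.1.0.0/16 via 192.168.2.71.
    """
    if ipaddress.ip_address(dest_ip) in CLUSTER_CIDR:
        return GATEWAY
    return "direct"  # simplification: the host's existing routes apply
```

For example, `service_fqdn("mysql-read")` gives the name that is sent to the DNS proxy, and `next_hop("172.1.86.83")` shows that the returned ClusterIP is routed through the border gateway.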

# Deploy the DNS proxy node to serve external clients. With hostNetwork: true it offers DNS on port 53 to physical hosts that are outside the k8s cluster network

[root@master3 udp]# ls

nginx-udp-ingress-configmap.yaml nginx-udp-ingress-controller.yaml

[root@master3 udp]# cd ../ ; kubectl create -f udp/

[root@master3 udp]# cat nginx-udp-ingress-configmap.yaml

apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-udp-ingress-configmap
  namespace: kube-system
data:
  53: "kube-system/kube-dns:53"
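Each entry in this ConfigMap maps a listening port on the proxy node to a backend written as `<namespace>/<service>:<port>`. The Python sketch below splits such an entry into its parts, which can be handy for sanity-checking a ConfigMap before applying it. It is an illustrative helper only: `UdpBackend` and `parse_udp_entry` are hypothetical names, and this is not how the ingress controller itself parses the data.

```python
from typing import NamedTuple


class UdpBackend(NamedTuple):
    listen_port: int   # port opened on the proxy node
    namespace: str     # namespace of the backend Service
    service: str       # name of the backend Service
    service_port: str  # kept as text, since it could be a number or a port name


def parse_udp_entry(listen_port: str, target: str) -> UdpBackend:
    """Parse one data entry such as  53: "kube-system/kube-dns:53"."""
    ns_and_svc, sep, svc_port = target.rpartition(":")
    if not sep or not svc_port:
        raise ValueError(f"missing ':<port>' in {target!r}")
    namespace, slash, service = ns_and_svc.partition("/")
    if not slash or not namespace or not service:
        raise ValueError(
            f"expected '<namespace>/<service>:<port>', got {target!r}")
    return UdpBackend(int(listen_port), namespace, service, svc_port)
```

Calling `parse_udp_entry("53", "kube-system/kube-dns:53")` yields the listening port 53 and the kube-dns Service in kube-system as the backend.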

[root@master3 udp]# cat nginx-udp-ingress-controller.yaml

apiVersion: v1
kind: ReplicationController
metadata:
  name: nginx-udp-ingress-controller
  labels:
    k8s-app: nginx-udp-ingress-lb
  namespace: kube-system
spec:
  replicas: 1
  selector:
    k8s-app: nginx-udp-ingress-lb
  template:
    metadata:
      labels:
        k8s-app: nginx-udp-ingress-lb
        name: nginx-udp-ingress-lb
    spec:
      hostNetwork: true
      terminationGracePeriodSeconds: 60
      containers:
      #- image: gcr.io/google_containers/nginx-ingress-controller:0.9.0-beta.8
      - image: 192.168.1.103/k8s_public/nginx-ingress-controller:0.9.0-beta.5
        name: nginx-udp-ingress-lb
        readinessProbe:
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
        livenessProbe:
          httpGet:
            path: /healthz
            port: 10254
            scheme: HTTP
          initialDelaySeconds: 10
          timeoutSeconds: 1
        env:
        - name: POD_NAME
          valueFrom:
            fieldRef:
              fieldPath: metadata.name
        - name: POD_NAMESPACE
          valueFrom:
            fieldRef:
              fieldPath: metadata.namespace
        ports:
        - containerPort: 81
          hostPort: 81
        - containerPort: 443
          hostPort: 443
        - containerPort: 53
          hostPort: 53
        args:
        - /nginx-ingress-controller
        - --default-backend-service=$(POD_NAMESPACE)/default-http-backend
        - --udp-services-configmap=$(POD_NAMESPACE)/nginx-udp-ingress-configmap

[root@master3 udp]#

Deploy the gateway (border gateway) node. This node runs only calico or flannel, plus kube-proxy.

echo 'net.ipv4.ip_forward=1' >>/etc/sysctl.conf ;sysctl -p
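One caveat with the `echo ... >>` form is that it appends another copy of the line every time it is run. As a hedged alternative, the Python sketch below rewrites the text of a sysctl file so that the key appears exactly once with the wanted value. `ensure_sysctl` is a hypothetical helper, not something the original setup uses, and it works on a string only; reading and writing /etc/sysctl.conf and running `sysctl -p` afterwards are left to the caller.

```python
def ensure_sysctl(conf_text: str, key: str, value: str) -> str:
    """Return conf_text with `key=value` present exactly once.

    Existing assignments of the same key are replaced by a single line
    at the position of the first one. Comment lines are left untouched.
    """
    wanted = f"{key}={value}"
    out = []
    seen = False
    for line in conf_text.splitlines():
        stripped = line.strip()
        is_comment = stripped.startswith("#") or stripped.startswith(";")
        if not is_comment and stripped.split("=", 1)[0].strip() == key:
            if not seen:
                out.append(wanted)
                seen = True
            continue  # drop duplicates and stale values of the same key
        out.append(line)
    if not seen:
        out.append(wanted)
    return "\n".join(out) + "\n"
```

Applying the function twice gives the same text as applying it once, so it is safe to call from a provisioning script that may run repeatedly.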

1. If k8s runs on a calico network: for how to install the calico cluster, see my other article http://blog.csdn.net/idea77/article/details/73090403

# On this node calico runs as a docker container

[root@jenkins-1 ~]# cat calico-docker.sh

systemctl start docker.service
/usr/bin/docker rm -f calico-node
/usr/bin/docker run --net=host --privileged --name=calico-node -d --restart=always \
  -v /etc/kubernetes/ssl:/etc/kubernetes/ssl \
  -e ETCD_ENDPOINTS=https://192.168.1.65:2379,https://192.168.1.66:2379,https://192.168.1.67:2379 \
  -e ETCD_KEY_FILE=/etc/kubernetes/ssl/kubernetes-key.pem \
  -e ETCD_CERT_FILE=/etc/kubernetes/ssl/kubernetes.pem \
  -e ETCD_CA_CERT_FILE=/etc/kubernetes/ssl/ca.pem \
  -e NODENAME=${HOSTNAME} \
  -e IP= \
  -e CALICO_IPV4POOL_CIDR=172.1.0.0/16 \
  -e NO_DEFAULT_POOLS= \
  -e AS= \
  -e CALICO_LIBNETWORK_ENABLED=true \
  -e IP6= \
  -e CALICO_NETWORKING_BACKEND=bird \
  -e FELIX_DEFAULTENDPOINTTOHOSTACTION=ACCEPT \
  -v /var/run/calico:/var/run/calico \
  -v /lib/modules:/lib/modules \
  -v /run/docker/plugins:/run/docker/plugins \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -v /var/log/calico:/var/log/calico \
  192.168.1.103/k8s_public/calico-node:v1.1.3
#192.168.1.103/k8s_public/calico-node:v1.3.0

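[root@jenkins-1 ~]# Note: -e CALICO_IPV4POOL_CIDR=172.1.0.0/16 here must match the CIDR of the k8s cluster network

Note: I installed calicoctl on the k8s master server, so the border router is created from the master node.

Here, on master3, run the calicoctl command that manages BGP to enable the border gateway machine as a peer. This feature is mainly aimed at users who run in a private cloud and want to peer with their underlying infrastructure.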

[root@master3 calico]# cat bgpPeer.yaml

apiVersion: v1
kind: bgpPeer
metadata:
  peerIP: 192.168.2.71
  scope: global
spec:
  asNumber: 64512

[root@master3 calico]# calicoctl create -f bgpPeer.yaml

# Check the node status

[root@master3 kubernetes]# calicoctl node status

Calico process is running.

IPv4 BGP status

+--------------+-------------------+-------+----------+-------------+

| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |

+--------------+-------------------+-------+----------+-------------+

| 192.168.2.71 | node-to-node mesh | up | 04:08:47 | Established |

| 192.168.2.71 | global | start | 04:08:43 | Idle |

| 192.168.2.72 | node-to-node mesh | up | 04:08:47 | Established |

| 192.168.1.61 | node-to-node mesh | up | 04:08:47 | Established |

| 192.168.1.62 | node-to-node mesh | up | 04:08:47 | Established |

| 192.168.1.68 | node-to-node mesh | up | 04:08:48 | Established |

| 192.168.1.69 | node-to-node mesh | up | 04:08:47 | Established |

| 192.168.2.68 | node-to-node mesh | up | 04:08:47 | Established |

| 192.168.2.69 | node-to-node mesh | up | 04:08:47 | Established |

+--------------+-------------------+-------+----------+-------------+

# List the global BGP peers

[root@master3 calico]# calicoctl get bgpPeer --scope=global

SCOPE PEERIP NODE ASN

global 192.168.2.71 64512

[root@master3 calico]# OK, the calico configuration is done; 192.168.2.71 is the route-forwarding node
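When there are many peers, it can help to scan the `calicoctl node status` table programmatically rather than by eye. The Python sketch below parses the table text into rows and lists the sessions whose INFO column is not `Established`. It assumes the five-column layout shown above; `parse_bgp_status` and `not_established` are hypothetical helper names, and `SAMPLE` is a shortened copy of the output from this article.

```python
from typing import Dict, List

COLUMNS = ("peer", "type", "state", "since", "info")

# Shortened copy of the `calicoctl node status` output shown above.
SAMPLE = """\
+--------------+-------------------+-------+----------+-------------+
| PEER ADDRESS | PEER TYPE | STATE | SINCE | INFO |
+--------------+-------------------+-------+----------+-------------+
| 192.168.2.71 | node-to-node mesh | up | 04:08:47 | Established |
| 192.168.2.71 | global | start | 04:08:43 | Idle |
| 192.168.2.72 | node-to-node mesh | up | 04:08:47 | Established |
+--------------+-------------------+-------+----------+-------------+
"""


def parse_bgp_status(text: str) -> List[Dict[str, str]]:
    """Turn the ASCII table into a list of row dictionaries."""
    rows = []
    for line in text.splitlines():
        line = line.strip()
        if not line.startswith("|"):
            continue  # skip border lines and surrounding text
        cells = [cell.strip() for cell in line.strip("|").split("|")]
        if len(cells) != len(COLUMNS) or cells[0] == "PEER ADDRESS":
            continue  # skip the header row and anything malformed
        rows.append(dict(zip(COLUMNS, cells)))
    return rows


def not_established(rows: List[Dict[str, str]]) -> List[Dict[str, str]]:
    """Return the sessions whose INFO column is not 'Established'."""
    return [row for row in rows if row["info"] != "Established"]
```

On `SAMPLE`, `not_established(parse_bgp_status(SAMPLE))` returns only the `global` session to 192.168.2.71, which the output above reports as `start` / `Idle`.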

2. Flannel: if the k8s cluster runs on a flannel network, install flannel on this node.

Then simply start flannel: systemctl start flanneld.service

echo 'net.ipv4.ip_forward=1' >>/etc/sysctl.conf ;sysctl -p

3. Deploy kube-proxy.service

kube-proxy.service needs the kube-proxy.kubeconfig credentials; copy /etc/kubernetes/kube-proxy.kubeconfig from a k8s node to this node.

# Copy the kube-proxy binary to this node as well

[root@jenkins-1 ~]# mkdir -p /var/lib/kube-proxy

[root@jenkins-1 ~]# rsync -avz node3:/bin/kube-proxy /bin/kube-proxy

# The kube-proxy service unit

[root@jenkins-1 ~]# cat /lib/systemd/system/kube-proxy.service

[Unit]
Description=Kubernetes Kube-Proxy Server
Documentation=https://github.com/GoogleCloudPlatform/kubernetes
After=network.target

[Service]
WorkingDirectory=/var/lib/kube-proxy
ExecStart=/bin/kube-proxy \
  --bind-address=192.168.2.71 \
  --hostname-override=jenkins-1 \
  --cluster-cidr=172.1.0.0/16 \
  --kubeconfig=/etc/kubernetes/kube-proxy.kubeconfig \
  --logtostderr=true \
  --proxy-mode=iptables \
  --v=2
Restart=on-failure
RestartSec=5
LimitNOFILE=65536

[Install]
WantedBy=multi-user.target

[root@jenkins-1 ~]#

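# Start it: systemctl start kube-proxy.service

4. Test the gateway, DNS resolution, and service access

# As mentioned above, every machine outside the k8s cluster needs a static route before it can get in

On Linux: pick a machine outside the cluster to verify with. This machine has a single NIC and has neither calico nor flannel installed.

[root@247 ~]# route add -net 172.1.0.0 netmask 255.255.0.0 gw 192.168.2.71

Check the route with route -n: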

Kernel IP routing table

Destination Gateway Genmask Flags Metric Ref Use Iface

0.0.0.0 192.168.5.1 0.0.0.0 UG 0 0 0 eth0

169.254.0.0 0.0.0.0 255.255.0.0 U 1002 0 0 eth0

172.1.0.0 192.168.2.71 255.255.0.0 UG 0 0 0 eth0 # note: the route for the cluster network is now in effect

192.168.0.0 0.0.0.0 255.255.0.0 U 0 0 0 eth0

192.168.1.0 0.0.0.0 255.255.255.0 U 0 0 0 eth0
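If the cluster CIDR or the gateway ever changes, the netmask in the route command has to change with it. The Python sketch below derives the Linux `route add` command, and the equivalent Windows `route ADD -p` form, from a CIDR and a gateway address using the standard `ipaddress` module. The helper names are hypothetical; the sketch only builds the command strings and does not execute anything.

```python
import ipaddress


def linux_route_cmd(cidr: str, gateway: str) -> str:
    """Build the Linux static-route command for a CIDR via a gateway."""
    net = ipaddress.ip_network(cidr)       # raises ValueError if host bits are set
    gw = ipaddress.ip_address(gateway)     # raises ValueError if not an IP address
    return f"route add -net {net.network_address} netmask {net.netmask} gw {gw}"


def windows_route_cmd(cidr: str, gateway: str) -> str:
    """Build the persistent (-p) Windows static-route command."""
    net = ipaddress.ip_network(cidr)
    gw = ipaddress.ip_address(gateway)
    return f"route ADD -p {net.network_address} MASK {net.netmask} {gw}"
```

With the values from this article, `linux_route_cmd("172.1.0.0/16", "192.168.2.71")` reproduces the command used above.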

Set the Linux machine's DNS server to 192.168.2.72 so that it can resolve k8s DNS service names.

[root@247 ~]# egrep 'DNS' /etc/sysconfig/network-scripts/ifcfg-eth0

IPV6_PEERDNS="yes"

DNS1="192.168.2.72"

[root@247 ~]# nslookup kubernetes-dashboard.kube-system.svc.cluster.local

Server: 192.168.2.72

Address: 192.168.2.72#53

Non-authoritative answer:

Name: kubernetes-dashboard.kube-system.svc.cluster.local

Address: 172.1.8.71

# DNS resolved successfully

Now access it with curl; the request succeeds:
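The same lookup can be scripted without changing the machine's resolver settings, by sending a single UDP query straight to the proxy. The Python sketch below builds a plain A-record query by hand and decodes the addresses from the reply, using only the standard library. It is a minimal illustration under simplifying assumptions: one question, no retries, no TCP fallback, and replies of at most 512 bytes. All function names are hypothetical.

```python
import socket
import struct


def build_a_query(name: str, query_id: int = 0x1234) -> bytes:
    """Encode a recursive DNS query for the A record of `name`."""
    # Header: id, flags (RD=1), QDCOUNT=1, ANCOUNT=0, NSCOUNT=0, ARCOUNT=0
    header = struct.pack("!HHHHHH", query_id, 0x0100, 1, 0, 0, 0)
    labels = [part.encode("ascii") for part in name.rstrip(".").split(".")]
    qname = b"".join(bytes([len(label)]) + label for label in labels) + b"\x00"
    return header + qname + struct.pack("!HH", 1, 1)  # QTYPE=A, QCLASS=IN


def _skip_name(msg: bytes, pos: int) -> int:
    """Return the offset just past the (possibly compressed) name at pos."""
    while True:
        length = msg[pos]
        if length == 0:
            return pos + 1
        if length & 0xC0 == 0xC0:  # compression pointer: 2 bytes, ends the name
            return pos + 2
        pos += 1 + length


def parse_a_records(msg: bytes) -> list:
    """Extract the IPv4 addresses from the answer section of a reply."""
    _id, _flags, qdcount, ancount, _ns, _ar = struct.unpack("!HHHHHH", msg[:12])
    pos = 12
    for _ in range(qdcount):
        pos = _skip_name(msg, pos) + 4  # skip QTYPE and QCLASS
    addresses = []
    for _ in range(ancount):
        pos = _skip_name(msg, pos)
        rtype, _rclass, _ttl, rdlength = struct.unpack("!HHIH", msg[pos:pos + 10])
        pos += 10
        if rtype == 1 and rdlength == 4:  # A record
            addresses.append(socket.inet_ntoa(msg[pos:pos + 4]))
        pos += rdlength
    return addresses


def resolve_via(server: str, name: str, timeout: float = 2.0) -> list:
    """Send one UDP query to server:53 and return the A records in the reply."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.settimeout(timeout)
        sock.sendto(build_a_query(name), (server, 53))
        reply, _ = sock.recvfrom(512)
    return parse_a_records(reply)
```

From a machine on the physical network, `resolve_via("192.168.2.72", "kubernetes-dashboard.kube-system.svc.cluster.local")` would query the proxy directly; the result depends on the live cluster, so no output is shown here.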

[root@247 ~]# curl -v kubernetes-dashboard.kube-system.svc.cluster.local

* About to connect() to kubernetes-dashboard.kube-system.svc.cluster.local port 80 (#0)

* Trying 172.1.8.71...

* Connected to kubernetes-dashboard.kube-system.svc.cluster.local (172.1.8.71) port 80 (#0)

> GET / HTTP/1.1

> User-Agent: curl/7.29.0

> Host: kubernetes-dashboard.kube-system.svc.cluster.local

> Accept: */*

>

< HTTP/1.1 200 OK

< Accept-Ranges: bytes

< Cache-Control: no-store

< Content-Length: 848

< Content-Type: text/html; charset=utf-8

< Last-Modified: Thu, 16 Mar 2017 13:30:10 GMT

< Date: Thu, 29 Jun 2017 06:45:47 GMT

<

(response body: the HTML of the kubernetes-dashboard page)

On Windows, open cmd as administrator and run: route ADD -p 172.1.0.0 MASK 255.255.0.0 192.168.2.71

Role: border DNS proxy server, 192.168.2.72, hostname jenkins-2 (the hostname is up to you)

In the Windows IP settings, set the DNS server to 192.168.2.72.

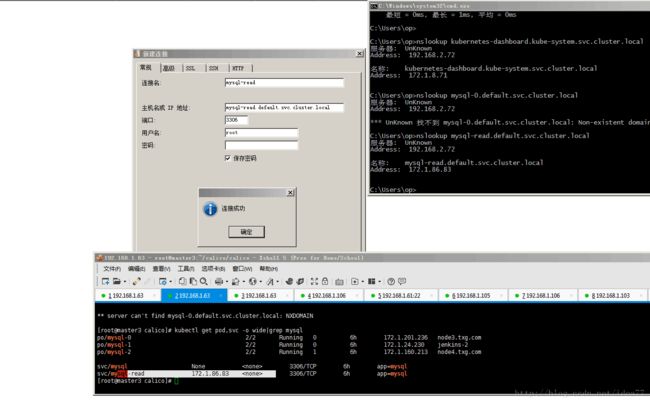

Now let's test access to the mysql service in k8s.

mysql-read is the svc we want to reach; check the mysql svc on the k8s master:

[root@master3 etc]# kubectl get svc -o wide|egrep 'NAME|mysql'

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

mysql None 3306/TCP 8h app=mysql

mysql-read 172.1.86.83 3306/TCP 8h app=mysql

Run in cmd:

C:\Users\op>nslookup mysql-read.default.svc.cluster.local

Server: UnKnown

Address: 192.168.2.72

Non-authoritative answer:

Name: mysql-read.default.svc.cluster.local

Address: 172.1.86.83

# DNS was reached and the name resolved

If you know a container's IP and port, you can also connect to it directly, for example for ssh or web services.

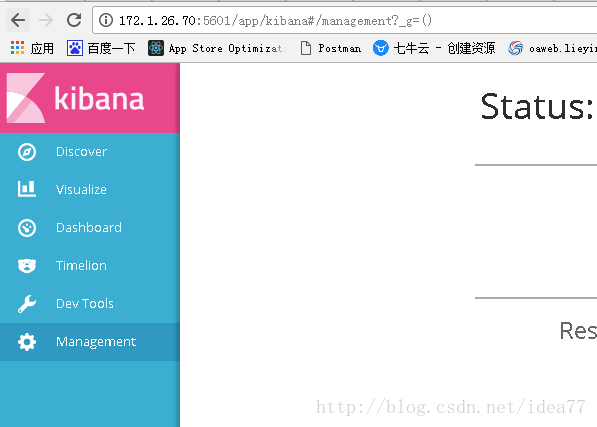

[root@master3 udp]# kubectl get pod,svc -o wide|egrep 'NAME|kibana'

NAME READY STATUS RESTARTS AGE IP NODE

po/kibana-logging-3543001115-v52kn 1/1 Running 0 12d 172.1.26.70 node1.txg.com

NAME CLUSTER-IP EXTERNAL-IP PORT(S) AGE SELECTOR

svc/kibana-logging 172.1.166.83 5601:8978/TCP 12d k8s-app=kibana-logging

[root@master3 udp]#

[root@master3 udp]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE

cnetos6-7-rc-xv12x 1/1 Running 0 8d 172.1.201.225 node3.txg.com

kibana-logging-3543001115-v52kn 1/1 Running 0 12d 172.1.26.70 node1.txg.com

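**If you don't want to add the route and DNS settings machine by machine, you can add the route on the physical router instead. Then individual machines no longer need their own route and DNS entries, and all links are connected at once.**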

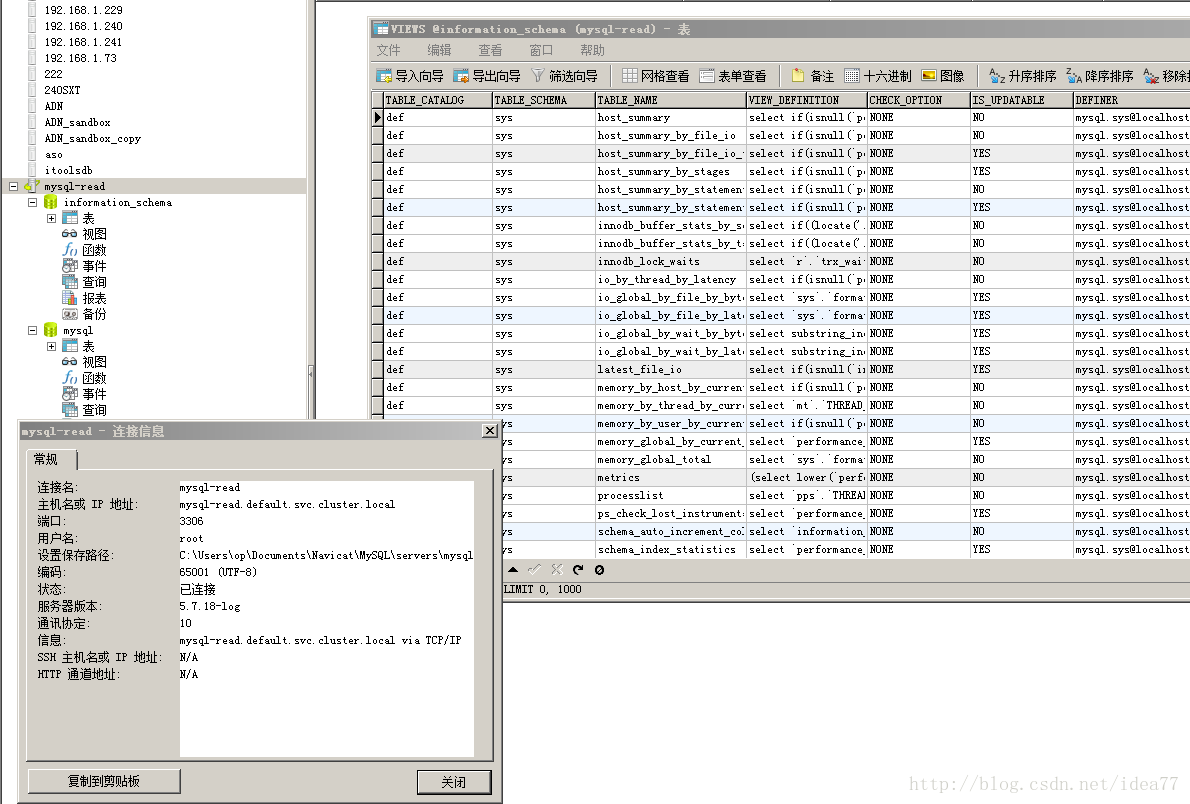

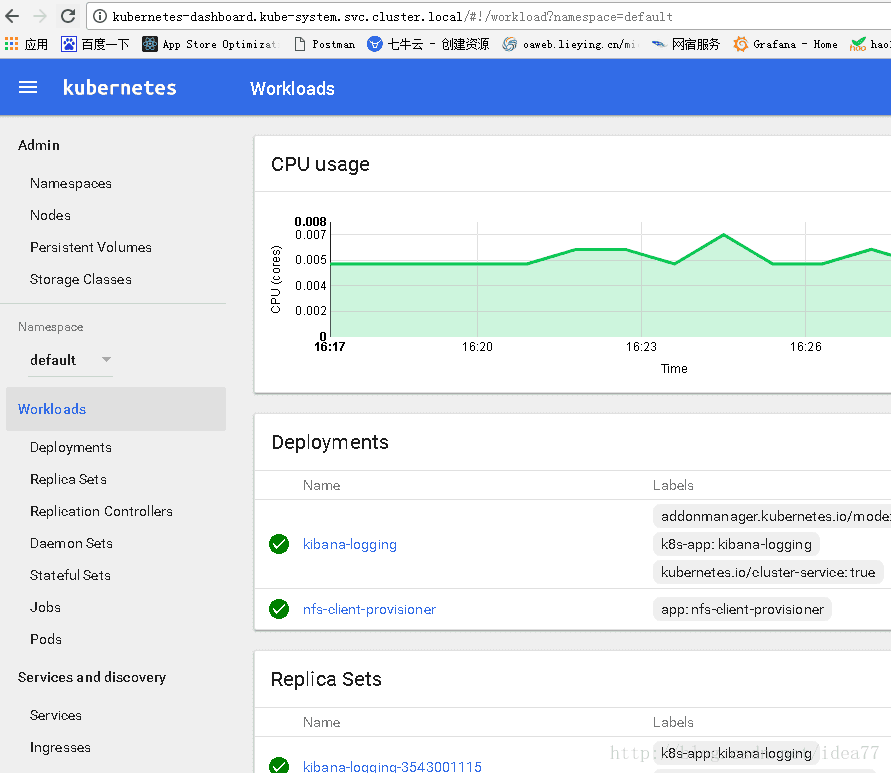

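Next, from Windows, open the dashboard directly and connect to k8s services with Navicat; both work.

This gives developers on Windows a shortcut for debugging against k8s services with their usual tools.

Open kubernetes-dashboard.kube-system.svc.cluster.local directly in a browser.

Connect to the mysql service with a MySQL client at mysql-read.default.svc.cluster.local.

Open kibana directly in a browser at http://172.1.26.70:5601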
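A quick way to confirm from any external machine that the route, DNS and kube-proxy pieces all work together is to try opening a TCP connection to each endpoint. The Python sketch below does only that: it checks that the port accepts a connection, not that the service behind it is healthy. `can_connect` is a hypothetical helper, and `ENDPOINTS` simply lists the three addresses used in this article.

```python
import socket

# The three endpoints accessed in this article.
ENDPOINTS = [
    ("kubernetes-dashboard.kube-system.svc.cluster.local", 80),
    ("mysql-read.default.svc.cluster.local", 3306),
    ("172.1.26.70", 5601),
]


def can_connect(host: str, port: int, timeout: float = 3.0) -> bool:
    """Return True if a TCP connection to host:port can be opened.

    Name-resolution failures, refused connections and timeouts are all
    reported as False, since each of them is a subclass of OSError.
    """
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False
```

Looping over `ENDPOINTS` and printing `can_connect(host, port)` for each gives a one-glance check; a False for a service name but True for a pod IP would point at DNS rather than routing.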