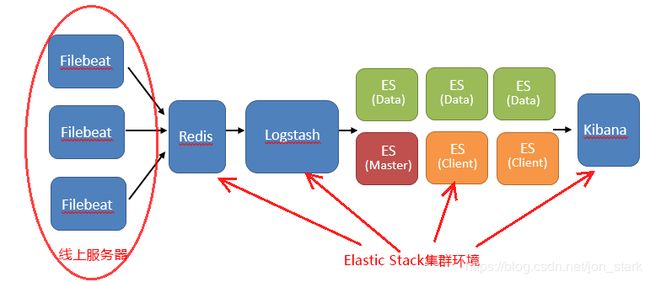

Filebeat + Redis + ELK Cluster Environment

ELK Setup Manual

2018-12-30

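Background and requirements:

- The business keeps growing, and so does the number of servers.

- Access logs, application logs, and error logs keep growing in volume, so operations staff can no longer manage them effectively.

- When developers troubleshoot a problem, they need operations staff to log in to the servers and look up the logs, which is inconvenient.

- When business staff need data, operations staff have to log in to the servers and analyze the logs.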

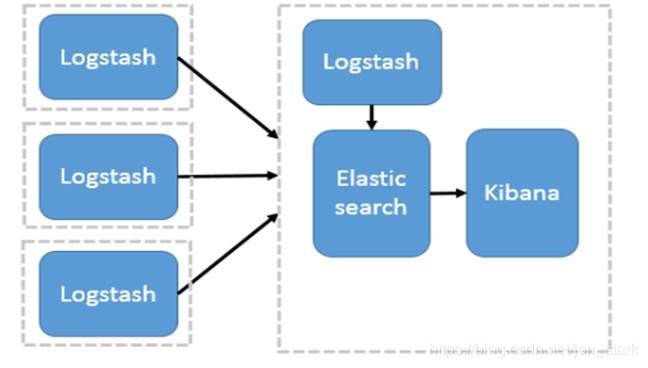

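ELK is an acronym for three open-source projects: Elasticsearch, Logstash, and Kibana. A fourth component, Beats, has since been added. Beats are lightweight log shippers (agents) that use few resources, which makes them well suited to collecting logs on each server and forwarding them to Logstash; they are also the officially recommended tool. With Beats added, the former ELK Stack was renamed the Elastic Stack.

Early ELK architectures used Logstash to both collect and parse logs, but Logstash consumes a lot of memory, CPU, and I/O. By comparison, the CPU and memory used by Beats are almost negligible, so the lightweight Filebeat replaces Logstash on the nodes that produce logs.

In cluster mode the flow is as follows: Filebeat collects logs on each node and pushes them to Redis, which acts as a buffer; Logstash reads from Redis, parses and filters the events, and sends them to the Elasticsearch cluster for distributed storage; Kibana then displays the logs in a web interface. The X-Pack plugin is installed as well, providing login authentication for the Kibana web UI plus monitoring and reporting features.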

Server requirements:

Count: 3

Specification: 8 cores / 8 GB RAM

Server layout (3 hosts):

10.88.120.100 node1 runs redis, nginx, elasticsearch_master, kibana

10.88.120.110 node2 runs logstash, elasticsearch_node

10.88.120.120 node3 runs logstash, elasticsearch_node

Software environment

| Item | Version |
| --- | --- |
| Linux Server | CentOS 7.5 |
| Elasticsearch | 6.0.0 |
| Logstash | 6.0.0 |
| Kibana | 6.0.0 |
| Redis | 5.0.3 |
| JDK | 1.8 |

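- System environment preparation

- Open firewall ports through whitelists

Open port 6379 (used for log shipping) to the designated Filebeat clients, and port 80 (access to the ELK server from the company intranet only).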

[root@node1 elk]# firewall-cmd --permanent --new-ipset=elk_whitelist --type=hash:ip

[root@node1 elk]# firewall-cmd --permanent --new-ipset=web_whitelist --type=hash:ip

[root@node1 elk]# firewall-cmd --permanent --ipset=web_whitelist --add-entry=113.61.35.0/24 ## company intranet

[root@node1 elk]# firewall-cmd --permanent --ipset=elk_whitelist --add-entry=FILEBEAT_CLIENT_IP

[root@node1 elk]# firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source ipset="elk_whitelist" port port="6379" protocol="tcp" accept'

[root@node1 elk]# firewall-cmd --permanent --add-rich-rule='rule family="ipv4" source ipset="web_whitelist" port port="80" protocol="tcp" accept'

[root@node1 elk]# systemctl restart firewalld

Note:

To whitelist another Filebeat client for port 6379 later, just add its IP to elk_whitelist.
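Adding a client to the whitelist later can be wrapped in a small helper. The following is only a sketch: it assumes the `elk_whitelist` ipset created above, the function names `is_ipv4` and `whitelist_cmds` are made up for illustration, and the helper merely prints the `firewall-cmd` calls so they can be reviewed before being run as root.

```shell
#!/bin/sh
# Sketch: print the firewall-cmd calls that whitelist one more Filebeat client.
# is_ipv4 and whitelist_cmds are illustrative names, not part of firewalld.

# Succeeds when $1 is a dotted-quad IPv4 address with every octet in 0-255.
is_ipv4() {
    echo "$1" | awk -F. '
        NF != 4 { exit 1 }
        {
            for (i = 1; i <= 4; i++)
                if ($i !~ /^[0-9]+$/ || $i > 255) exit 1
        }'
}

# Prints the commands for one client IP; fails on anything that is not an IPv4 address.
whitelist_cmds() {
    ip=$1
    if ! is_ipv4 "$ip"; then
        echo "invalid IPv4 address: $ip" >&2
        return 1
    fi
    echo "firewall-cmd --permanent --ipset=elk_whitelist --add-entry=$ip"
    echo "firewall-cmd --reload"
}
```

For example, `whitelist_cmds 10.88.120.130 | sh` would apply the change when run as root; the IP here is a placeholder.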

- Set the JDK environment variables

[root@node1 elk]# vim /etc/profile

####### JDK environment variables ########################

export JAVA_HOME=/usr/local/jdk

export JRE_HOME=$JAVA_HOME/jre

export CLASSPATH=$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar

export PATH=$PATH:$JAVA_HOME/bin:$JAVA_HOME/jre/bin

######### ELK environment variables ##########

export PATH=$PATH:/usr/local/elk/elasticsearch/bin/

export PATH=$PATH:/usr/local/elk/logstash/bin/

export PATH=$PATH:/usr/local/elk/kibana/bin/

[root@localhost ~]# source /etc/profile

- System tuning (required when running a cluster)

[root@node1 elk]# vim /etc/sysctl.conf

fs.file-max = 262144

vm.max_map_count= 262144

[root@node1 elk]# sysctl -p

[root@node1 elk]# vim /etc/security/limits.conf

* soft nproc 262144

* hard nproc 262144

* soft nofile 262144

* hard nofile 262144

[root@localhost ~]# ulimit -n 262144
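To confirm that the tuning took effect, the live values can be compared with the required minimum. This is a minimal sketch that assumes the 262144 threshold used in this section; `meets_minimum` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: compare a current kernel or ulimit value against the minimum set above.
REQUIRED=262144

# meets_minimum NAME ACTUAL [REQUIRED]
# Prints OK or LOW and succeeds only when ACTUAL is at least REQUIRED (or unlimited).
meets_minimum() {
    name=$1
    actual=$2
    required=${3:-$REQUIRED}
    if [ "$actual" = "unlimited" ] || [ "$actual" -ge "$required" ]; then
        echo "OK $name=$actual"
        return 0
    fi
    echo "LOW $name=$actual (need >= $required)"
    return 1
}

# Typical use on a node, after logging in again as the elk user:
#   meets_minimum vm.max_map_count "$(sysctl -n vm.max_map_count)"
#   meets_minimum nofile "$(ulimit -n)"
```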

- Set up the ELK server

Create the elk user and the required directories

[root@node1 ~]# useradd elk

[root@node1 ~]# passwd elk

[root@node1 ~]# mkdir /usr/local/elk

[root@node1 ~]# mkdir /elk/es/data/ -p

[root@node1 ~]# mkdir /elk/es/logs/ -p

[root@node1 ~]# ls -d /elk/es/*

/elk/es/data

/elk/es/logs

[root@node1 ~]# chown -R elk:elk /elk/

[root@node1 ~]# chown -R elk:elk /usr/local/elk/

- Install the JDK (JDK 8 is required)

[root@node1 ~]# tar -xf jdk-8u181-linux-x64.tar.gz

[root@node1 ~]# mv jdk1.8.0_181/ /usr/local/jdk

- Download the ELK packages to /usr/local/elk and unpack them

[root@node1 ~]# su elk

[elk@node1 elk]$ cd /usr/local/elk

[elk@node1 elk]$ wget https://artifacts.elastic.co/downloads/elasticsearch/elasticsearch-6.0.0.zip

[elk@node1 elk]$ wget https://artifacts.elastic.co/downloads/kibana/kibana-6.0.0-linux-x86_64.tar.gz

[elk@node1 elk]$ wget https://artifacts.elastic.co/downloads/logstash/logstash-6.0.0.zip

[elk@node1 elk]$ unzip elasticsearch-6.0.0.zip && unzip logstash-6.0.0.zip

[elk@node1 elk]$ tar -xf kibana-6.0.0-linux-x86_64.tar.gz

[elk@node1 elk]$ mv elasticsearch-6.0.0 elasticsearch

[elk@node1 elk]$ mv logstash-6.0.0 logstash

[elk@node1 elk]$ mv kibana-6.0.0-linux-x86_64 kibana

[elk@node1 elk]$ cat elasticsearch/config/elasticsearch.yml | egrep -v "^$|^#"

…

cluster.name: es

node.name: node1 ## set on each node to that node's own hostname

path.data: /elk/es/data

path.logs: /elk/es/logs

network.host: 0.0.0.0

http.port: 9200

transport.tcp.port: 9300

node.master: true

node.data: true

discovery.zen.ping.unicast.hosts: ["node1:9300","node2:9300","node3:9300"]

discovery.zen.minimum_master_nodes: 2

action.destructive_requires_name: true

xpack.security.enabled: true

…

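- Configuration options

| Option | Description |
| --- | --- |
| cluster.name | Cluster name |
| node.name | Node name |
| path.data | Data directory |
| path.logs | Log directory |
| network.host | Host/IP the node binds to |
| http.port | HTTP port |
| transport.tcp.port | TCP transport port |
| node.master | Whether the node may act as master |
| node.data | Whether the node stores data |
| discovery.zen.ping.unicast.hosts | Initial list of master-eligible nodes in the cluster; a starting node (master or data) probes this list for discovery |
| discovery.zen.minimum_master_nodes | Minimum number of master-eligible nodes that must be visible to elect a master (2 here, a majority of the 3 master-eligible nodes) |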
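The value of `discovery.zen.minimum_master_nodes` follows the usual majority rule, (master-eligible nodes / 2) + 1 with integer division. A minimal sketch of that arithmetic, where `quorum` is an illustrative function name:

```shell
#!/bin/sh
# Sketch: majority quorum for discovery.zen.minimum_master_nodes.
# quorum N prints (N / 2) + 1 using integer division.
quorum() {
    echo $(( $1 / 2 + 1 ))
}
```

For the three master-eligible nodes in this cluster, `quorum 3` prints 2, which matches the value in elasticsearch.yml.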

- Install the geoip and x-pack plugins for Elasticsearch

[elk@node1 elk]$ source /etc/profile

[elk@node1 elk]$ elasticsearch-plugin install ingest-geoip ## geoip plugin

[elk@node1 elk]$ kibana-plugin install x-pack

[elk@node1 elk]$ elasticsearch-plugin install x-pack

[elk@node1 elk]$ logstash-plugin install x-pack

[elk@node1 elk]$ /usr/local/elk/elasticsearch/bin/x-pack/setup-passwords interactive

Set the passwords for the Elasticsearch, Kibana, and Logstash built-in users in turn

[elk@node1 elk]$ vim /usr/local/elk/kibana/config/kibana.yml

…

server.port: 5601

server.host: "0.0.0.0"

elasticsearch.url: "http://10.88.120.100:9200"

elasticsearch.username: "elastic"

elasticsearch.password: "qwe7410"

…

Notes:

- /elk is the URL path proxied by nginx, e.g. http://103.68.110.223/elk

- The username must be elastic; do not use any other

- The password is the X-Pack password set for Elasticsearch earlier

- Start Elasticsearch and Kibana

[elk@node1 elk]$ nohup elasticsearch &>/dev/null &

[elk@node1 elk]$ nohup kibana &>/dev/null &

Check that Elasticsearch (port 9200) and Kibana (port 5601) are running

[elk@node1 elk]$ netstat -antpu |egrep "5601|9200"

tcp 0 0 0.0.0.0:5601 0.0.0.0:* LISTEN 27715/node

tcp6 0 0 :::9200 :::* LISTEN 26482/java

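Notes:

After Kibana's address settings are changed, a restart can take several minutes to complete; this is normal.

Logstash is configured and started after the clients have been set up.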
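Because Kibana can take a while to come up, the port check above is easier to repeat with a small filter. The sketch below reads `netstat -antpu` style output on stdin; `has_listener` is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: check netstat-style output (read from stdin) for a listening TCP port.
# Column 4 is the local address and column 6 the socket state, as in the listing above.
has_listener() {
    awk -v port="$1" '
        $6 == "LISTEN" && $4 ~ (":" port "$") { found = 1 }
        END { exit !found }'
}
```

A possible use is `netstat -antpu | has_listener 5601 && echo "kibana is listening"`.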

- Install the Kibana Chinese localization pack

[elk@node1 elk]$ wget https://github.com/anbai-inc/Kibana_Hanization/archive/master.zip

[elk@node1 elk]$ unzip master.zip && mv Kibana_Hanization-master/ KIBANA-CHINA

[elk@node1 elk]$ cd KIBANA-CHINA

[elk@node1 elk]$ python main.py "/usr/local/elk/kibana"

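Wait patiently for the installation to finish, then restart Kibana.

- Install and start Redis: listen on port 6379, set a password, and change bind (steps omitted)

- List the keys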

127.0.0.1:6379> KEYS *

1) "john-test"

Main changes in redis.conf

bind 0.0.0.0

requirepass qwe7410

- Configure the nginx reverse proxy (optional)

- Installation (omitted)

- Configuration

[elk@node1 elk]$ vim /etc/nginx/conf.d/nginx.conf

server {

listen 80;

server_name ELK_SERVER_IP_OR_DOMAIN;

location ^~ /elk {

rewrite /elk/(.*)$ /$1 break;

proxy_pass http://127.0.0.1:5601/ ;

proxy_http_version 1.1;

proxy_set_header Upgrade $http_upgrade;

proxy_set_header Connection 'upgrade';

proxy_set_header Host $host;

proxy_cache_bypass $http_upgrade;

}

}

Notes:

- The /elk path in the location block must match the URL path configured for Kibana earlier

- Start nginx (omitted)
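To see what the `rewrite /elk/(.*)$ /$1 break;` line does to a request path before it is proxied to Kibana, the same substitution can be imitated with `sed`. This is an illustration only; nginx performs the real rewrite, and `strip_elk_prefix` is a made-up name.

```shell
#!/bin/sh
# Sketch: imitate the nginx rewrite rule on a request path.
# The first /elk/ segment is dropped and the rest of the path is kept.
strip_elk_prefix() {
    echo "$1" | sed -E 's#/elk/(.*)$#/\1#'
}
```

For instance, `strip_elk_prefix /elk/app/kibana` prints `/app/kibana`, which is the path Kibana receives on port 5601.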

- Set up the client log collector

- Set up the Filebeat client

Background reading: http://blog.csdn.net/weixin_39077573/article/details/73467712

- Install Filebeat

[root@agent ~]# wget https://artifacts.elastic.co/downloads/beats/filebeat/filebeat-6.0.0-linux-x86_64.tar.gz

[root@agent ~]# tar -xf filebeat-6.0.0-linux-x86_64.tar.gz

[root@agent ~]# mv filebeat-6.0.0-linux-x86_64 /usr/local/filebeat

- Edit the configuration file

[root@agent ~]# cd /usr/local/filebeat

[root@agent filebeat]# cp filebeat.yml filebeat.yml.bak

[root@agent filebeat]# vim filebeat.yml

filebeat.prospectors:
- type: log
  enabled: true
  paths:
    - /var/log/nginx/access.log
    - /var/log/nginx/error.log
  tail_files: true
  fields:
    input_type: log
    tag: nginx-log
- type: log
  enabled: true
  paths:
    - "/home/website/logs/manager.log"
  tail_files: true
  fields:
    input_type: log
    tag: domainsystem-log
filebeat.config.modules:
  path: ${path.config}/modules.d/*.yml
  reload.enabled: false
setup.template.settings:
  index.number_of_shards: 3
output.redis:
  hosts: ["10.88.120.100:6379"]
  data_type: "list"
  password: "qwe7410"
  key: "john-test"
  db: 0

Another example configuration:

filebeat.prospectors:
#### nginx ####
- type: log
  enabled: true
  paths:
    - /opt/bet/logs/nginx/web_access*.log
    - /opt/ylgj2/logs/nginx/web_access*.log
  fields:
    log_type: hbet_cyl_nginx_log
  ignore_older: 24h   # only collect data from the last 24 hours
#================================ Outputs =====================================
output.redis:
  hosts: ["103.68.110.223:17693"]
  data_type: "list"
  password: "9tN6GFGK60Jk8BNkBJM611GwA66uDFeG"   # see note on password
  key: "hbet_cyl_nginx"   # see note on key
  db: 0   # Redis database number

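Notes:

- password: the Redis password of the ELK server

- key: the Redis key to write to; the key in the Logstash input must match it

- Start Filebeat

[root@agent filebeat]# nohup ./filebeat -e -c filebeat.yml &>/dev/null &

Check that it started successfully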

[root@agent filebeat]# ps aux |grep filebeat

root 2808 0.0 0.3 296664 13252 pts/0 Sl 22:27 0:00 ./filebeat -e -c filebeat.yml

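- Elasticsearch settings

- settings

Index settings control the number of replicas and shards. The replica count can be changed on an existing index; the number of primary shards is static and has to be set when the index is created.

http://blog.csdn.net/tanga842428/article/details/60953579

- View index, replica, and shard information with curl or a browser

[swadmin@MyCloudServer ~]$ curl -XGET "http://127.0.0.1:9200/nginx-2018.03.13/_settings?pretty" -u elastic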

{ "nginx-2018.03.13" : {

"settings" : {

"index" : {

"creation_date" : "1520899209420",

"number_of_shards" : "5",

"number_of_replicas" : "1",

"uuid" : "tGs5EcupT3W-UX-w38GYFg",

"version" : {

"created" : "6000099"

},

"provided_name" : "nginx-2018.03.13"

}

}

}

}

Note: number_of_shards is the number of primary shards, number_of_replicas is the number of replica copies, and provided_name is the index name.
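Changing the replica count of an existing index goes through the same `_settings` endpoint with a PUT request. The sketch below only builds the JSON body; `replica_settings_body` is a hypothetical helper, and the index name in the commented call is taken from the output above.

```shell
#!/bin/sh
# Sketch: build the JSON body for an index _settings update that changes the replica count.
replica_settings_body() {
    case $1 in
        ''|*[!0-9]*)
            echo "replica count must be a non-negative integer" >&2
            return 1
            ;;
    esac
    printf '{"index":{"number_of_replicas":%s}}\n' "$1"
}

# Example call (curl prompts for the elastic password because of -u elastic):
#   curl -XPUT -u elastic -H 'Content-Type: application/json' \
#        "http://127.0.0.1:9200/nginx-2018.03.13/_settings" \
#        -d "$(replica_settings_body 2)"
```

Elasticsearch 6.x rejects request bodies without a `Content-Type` header, which is why the header appears in the example call.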

- View mappings

https://www.cnblogs.com/zlslch/p/6474424.html

[swadmin@vhost-elk]$ curl -XGET "http://192.168.175.223:9200/java_log-2018.03.23/_mappings?pretty" -u elastic

- Configure Logstash to manage the logs sent by the Filebeat clients

- Create the Logstash configuration and data directories

[swadmin@MyCloudServer ~]$ mkdir /opt/apps/elk/logstash/conf.d/

[swadmin@MyCloudServer ~]$ mkdir /opt/apps/elk/logstash/data/hbet_cyl_nginx/

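Notes:

The conf.d directory holds the Logstash configuration files.

Each new directory under data is the data directory for one site, which allows several Logstash instances to run side by side.

- Write a Logstash configuration that reads the Filebeat clients' logs and pushes them to Elasticsearch

- Filter for a single log file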

[swadmin@MyCloudServer ~]$ vim /opt/apps/elk/logstash/conf.d/hbet_cyl_nginx.conf

input {

redis {

data_type => "list"

password => "9tN6GFGK60Jk8BNkBJM611GwA66uDFeG"

key => "hbet_cyl_nginx" # see note on key

host => "127.0.0.1"

port => 17693

threads => 5

}

}

filter {

grok {

match => [ "message" , "%{COMBINEDAPACHELOG}+%{GREEDYDATA:extra_fields}"]

overwrite => [ "message" ]

}

mutate {

convert => ["response", "integer"]

convert => ["bytes", "integer"]

convert => ["responsetime", "float"]

}

geoip {

source => "clientip"

target => "geoip"

add_tag => [ "nginx-geoip" ]

}

date {

match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]

remove_field => [ "timestamp" ]

}

useragent {

source => "agent"

}

}

output {

elasticsearch {

hosts => ["127.0.0.1:9200"]

index => "hbet_cyl_test_nginx" # see note on index

user => "elastic"

password => "Passw0rd" # see note on password

}

stdout { codec => "rubydebug" }

}

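Notes:

- key: the Redis key shared with the Filebeat client; it must match the one configured earlier

- index: the index used later in Kibana to view the logs; name it after the site token in a uniform format so that each site's logs can be told apart

- password: the X-Pack password set for elastic earlier; it must match

- Filters for multiple log files

Reference: https://discuss.elastic.co/t/filter-multiple-different-file-beat-logs-in-logstash/76847/4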

input {

file {

path => "/opt/src/log_source/8hcp/gameplat_work.2018-03-23-13.log"

start_position => "beginning"

type => "8hcp-gameplat_work-log"

ignore_older => 0

}

file {

path => "/opt/src/log_source/8hcp/tomcat_18001/catalina.out"

start_position => "beginning"

type => "8hcp-tomcat8001-log"

ignore_older => 0

}

file {

path => "/opt/src/log_source/8hcp/nginx/web_access.log"

start_position => "beginning"

type => "8hcp-nginx-log"

ignore_older => 0

}

}

filter {

if ([type] =~ "gameplat" or [type] =~ "tomcat") {

mutate {

"remove_field" => ["beat", "host", "offset", "@version"]

}

grok {

match => { "message" => "%{COMBINEDAPACHELOG}" }

tag_on_failure => []

}

date {

match => [ "timestamp" , "dd/MMM/yyyy:HH:mm:ss Z" ]

}

}

else if ([type] =~ "nginx") {

grok {

match => [ "message" , "%{COMBINEDAPACHELOG}+%{GREEDYDATA:extra_fields}"]

overwrite => [ "message" ]

}

mutate {

convert => ["response", "integer"]

convert => ["bytes", "integer"]

convert => ["responsetime", "float"]

"remove_field" => ["beat", "host", "offset", "@version"]

}

geoip {

source => "clientip"

target => "geoip"

database => "/opt/apps/elk/logstash/geoData/GeoLite2-City_20180306/GeoLite2-City.mmdb"

add_field => [ "[geoip][coordinates]", "%{[geoip][longitude]}" ]

add_field => [ "[geoip][coordinates]", "%{[geoip][latitude]}" ]

}

date {

match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]

remove_field => [ "timestamp" ]

}

useragent {

source => "agent"

}

}

}

output {

if ([type] =~ "gameplat") {

elasticsearch {

hosts => ["192.168.175.241:9200"]

index => "gameplat-%{+YYYY.MM.dd}"

user => "elastic"

password => "Passw0rd!**yibo"

}

}

else if ([type] =~ "tomcat") {

elasticsearch {

hosts => ["192.168.175.241:9200"]

index => "tomcat-%{+YYYY.MM.dd}"

user => "elastic"

password => "Passw0rd!**yibo"

}

}

else if ([type] =~ "nginx") {

elasticsearch {

hosts => ["192.168.175.241:9200"]

index => "logstash-nginx-%{+YYYY.MM.dd}"

user => "elastic"

password => "Passw0rd!**yibo"

}

}

stdout {codec => rubydebug}

}

Note:

To add a date stamp to the index name: index => "%{type}-%{+YYYY.MM.dd}"
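Logstash formats the `%{+YYYY.MM.dd}` part from each event's `@timestamp` (in UTC), so every day gets its own index. A date suffix also makes age checks a simple comparison. The sketch below shows both ideas; `daily_index` and `index_expired` are illustrative helper names, not Logstash or Elasticsearch commands.

```shell
#!/bin/sh
# Sketch: form a date-stamped index name and test whether an index is older than a cutoff.

# daily_index PREFIX YYYY-MM-DD  ->  PREFIX-YYYY.MM.dd
daily_index() {
    echo "$1-$(echo "$2" | tr '-' '.')"
}

# index_expired INDEX CUTOFF   (CUTOFF in YYYY.MM.dd form)
# Succeeds when the date suffix of INDEX is earlier than CUTOFF.
index_expired() {
    suffix=${1##*-}                          # text after the last dash
    idx=$(echo "$suffix" | tr -d '.')        # 2018.03.13 -> 20180313
    cut=$(echo "$2" | tr -d '.')
    case $idx in
        ''|*[!0-9]*) return 1 ;;             # no date suffix: never treated as expired
    esac
    [ "$idx" -lt "$cut" ]
}
```

For example, `daily_index nginx 2018-03-13` prints `nginx-2018.03.13`, and `index_expired nginx-2018.03.13 2018.03.20` succeeds because the index date is before the cutoff.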

input {

redis {

data_type => "list"

password => "qwe7410"

key => "john-test"

host => "10.88.120.100"

port => 6379

threads => 5

db => 0

}

}

filter {

if [fields][tag] == "nginx-log" {

grok {

match => [ "message" , "%{COMBINEDAPACHELOG}+%{GREEDYDATA:extra_fields}"]

overwrite => [ "message" ]

}

}

mutate {

convert => ["response", "integer"]

convert => ["bytes", "integer"]

convert => ["responsetime", "float"]

}

geoip {

source => "clientip"

target => "geoip"

add_tag => [ "nginx-geoip" ]

}

date {

match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]

remove_field => [ "timestamp" ]

}

useragent {

source => "agent"

}

if [fields][tag] == "domainsystem-log" {

grok {

match => [ "message" , "%{COMBINEDAPACHELOG}+%{GREEDYDATA:extra_fields}"]

overwrite => [ "message" ]

}

}

mutate {

convert => ["response", "integer"]

convert => ["bytes", "integer"]

convert => ["responsetime", "float"]

}

geoip {

source => "clientip"

target => "geoip"

add_tag => [ "nginx-geoip" ]

}

date {

match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]

remove_field => [ "timestamp" ]

}

useragent {

source => "agent"

}

}

output {

if [fields][tag] == "nginx-log" {

elasticsearch {

hosts => ["10.88.120.100:9200", "10.88.120.110:9200", "10.88.120.120:9200"]

index => "nginx"

document_type => "%{type}"

user => elastic

password => qwe7410

}

stdout { codec => rubydebug }

}

if [fields][tag] == "domainsystem-log" {

elasticsearch {

hosts => ["10.88.120.100:9200", "10.88.120.110:9200", "10.88.120.120:9200"]

index => "domainsystem"

document_type => "%{type}"

user => elastic

password => qwe7410

}

}

stdout { codec => rubydebug }

}

input {

redis {

data_type => "list"

password => "pass"

key => "nysa-elk"

host => "1.1.1.1"

port => 6379

threads => 5

db => 0

}

}

filter {

if "aoya-crawler-log" in [tags] {

grok {

match => [ "message" , "%{COMBINEDAPACHELOG}+%{GREEDYDATA:extra_fields}"]

overwrite => [ "message" ]

}

}

mutate {

convert => ["response", "integer"]

convert => ["bytes", "integer"]

convert => ["responsetime", "float"]

}

geoip {

source => "clientip"

target => "geoip"

add_tag => [ "aoyacrawler-geoip" ]

}

date {

match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]

remove_field => [ "timestamp" ]

}

useragent {

source => "agent"

}

if "lock_return" in [tags] {

grok {

match => [ "message" , "%{COMBINEDAPACHELOG}+%{GREEDYDATA:extra_fields}"]

overwrite => [ "message" ]

}

}

mutate {

convert => ["response", "integer"]

convert => ["bytes", "integer"]

convert => ["responsetime", "float"]

}

date {

match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]

remove_field => [ "timestamp" ]

}

if "new-logs" in [tags] {

grok {

match => [ "message" , "%{COMBINEDAPACHELOG}+%{GREEDYDATA:extra_fields}"]

overwrite => [ "message" ]

}

}

mutate {

convert => ["response", "integer"]

convert => ["bytes", "integer"]

convert => ["responsetime", "float"]

}

date {

match => [ "timestamp" , "dd/MMM/YYYY:HH:mm:ss Z" ]

remove_field => [ "timestamp" ]

}

}

output {

if [fields][tag] == "aoya-crawler-log" {

elasticsearch {

hosts => ["172.16.3.93:9200", "172.16.3.88:9200", "172.16.3.91:9200"]

index => "aoya-crawler-logs-%{+YYYY.MM.dd}"

document_type => "%{type}"

user => elastic

password => qwe7410

}

}

if [fields][tag] == "failed-http-log" {

elasticsearch {

hosts => ["172.16.3.93:9200", "172.16.3.88:9200", "172.16.3.91:9200"]

index => "failed-http-log-%{+YYYY.MM.dd}"

document_type => "%{type}"

user => elastic

password => qwe7410

}

}

if [fields][tag] == "queue-log" {

elasticsearch {

hosts => ["172.16.3.93:9200", "172.16.3.88:9200", "172.16.3.91:9200"]

index => "queue-log-%{+YYYY.MM.dd}"

document_type => "%{type}"

user => elastic

password => qwe7410

}

}

if [fields][tag] == "lock_return" {

elasticsearch {

hosts => ["172.16.3.93:9200", "172.16.3.88:9200", "172.16.3.91:9200"]

index => "lock_return-%{+YYYY.MM.dd}"

document_type => "%{type}"

user => elastic

password => qwe7410

}

}

if [fields][tag] == "new-logs" {

elasticsearch {

hosts => ["172.16.3.93:9200", "172.16.3.88:9200", "172.16.3.91:9200"]

index => "new-logs-%{+YYYY.MM.dd}"

document_type => "%{type}"

user => elastic

password => qwe7410

}

}

}

- Using filter plugins

Reference: https://www.jianshu.com/p/d469d9271f19

Built-in patterns: LOGSTASH_HOME/vendor/bundle/jruby/2.3.0/gems/logstash-patterns-core-4.1.2/patterns

mutate

Remove unneeded fields

mutate {

remove_field => "message"

remove_field => "@version"

}

or

mutate {

"remove_field" => ["beat", "host", "offset", "@version"]

}

Add a field

mutate {

add_field => {

"web_log" => "%{[fields][web_log]}"

}

}

- Start Logstash on the ELK servers

[elk@node2 logstash]$ nohup logstash -f config/input-output.conf &>/dev/null &

Wait a moment, then check that it started successfully

[elk@node2 logstash]$ ps aux |grep logstash

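- Log in to Kibana to view the logs

- Open http://10.88.120.100:5601 in a browser

User: elastic. Password: the one set earlier

- Create an index pattern

Management -> Kibana: Index Patterns -> Create Index Pattern (as shown in the figure below)

- Click Discover to view the log contents

List all indices in this ES cluster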

curl -XGET -u username:password http://172.16.3.93:9200/_cat/indices

Periodically delete ES cluster indices older than 10 days

In the Elasticsearch configuration, action.destructive_requires_name must be changed to false so that indices can be deleted by wildcard or _all

action.destructive_requires_name: false

#!/bin/bash

###################################

# Delete ES cluster indices older than ten days

###################################

function delete_indices() {

comp_date=$(date -d "10 day ago" +"%Y-%m-%d")

date1="$1 00:00:00"

date2="$comp_date 00:00:00"

t1=$(date -d "$date1" +%s)

t2=$(date -d "$date2" +%s)

if [ "$t1" -le "$t2" ]; then

echo "$1 is not later than $comp_date; deleting matching indices"

# Convert the format, e.g. 2017-10-01 becomes 2017.10.01

format_date=$(echo "$1" | sed 's/-/\./g')

# Quote the URL so the shell does not expand the * wildcard

curl -XDELETE -u username:password "http://172.16.3.93:9200/*$format_date"

fi

}

curl -XGET -u username:password http://172.16.3.93:9200/_cat/indices | awk -F" " '{print $3}' | awk -F"-" '{print $NF}' | egrep "[0-9]*\.[0-9]*\.[0-9]*" | sort | uniq | sed 's/\./-/g' | while read LINE

do

# Call the index deletion function

delete_indices "$LINE"

done

Periodic cleanup of log files

#!/bin/bash

path=/elk/es/logs

find $path -name "*.log.gz" -type f -mtime +10 -exec rm {} \; > /dev/null 2>&1

Starting Kibana with systemctl

Start script

[elk@node1 ~]$ cat /home/elk/kibana.sh

#!/bin/bash

sudo setfacl -m u:elk:rwx /run

/usr/local/elk/kibana/bin/kibana >> /elk/es/logs/kibana.log &

echo $! > /run/kibana.pid

Stop script

[elk@node1 ~]$ cat kibanastop.sh

#!/bin/sh

PID=$(cat /run/kibana.pid)

kill -9 $PID

systemd unit file: start Kibana as the unprivileged elk user

[root@node1 ~]# cat /usr/lib/systemd/system/kibana.service

[Unit]

Description=kibana

After=network.target

[Service]

ExecStart=/home/elk/kibana.sh

ExecStop=/home/elk/kibanastop.sh

Type=forking

User=elk

Group=elk

PrivateTmp=true

[Install]

WantedBy=multi-user.target

Commands to enable at boot, stop, and start

[root@node1 ~]# systemctl enable kibana.service

Created symlink from /etc/systemd/system/multi-user.target.wants/kibana.service to /usr/lib/systemd/system/kibana.service.

[root@node1 ~]# systemctl stop kibana.service

[root@node1 ~]# systemctl start kibana.service
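The stop scripts in this manual use `kill -9`, which gives the process no chance to shut down cleanly. A gentler variant, shown here only as a sketch, sends SIGTERM first and falls back to SIGKILL after a timeout. It assumes the same PID-file convention as the scripts in this section and that the service exits on SIGTERM; `stop_gracefully` is a hypothetical name.

```shell
#!/bin/sh
# Sketch: stop a service by PID file, trying SIGTERM before SIGKILL.
# stop_gracefully PIDFILE [TIMEOUT_SECONDS]
stop_gracefully() {
    pidfile=$1
    timeout=${2:-10}
    [ -r "$pidfile" ] || return 1            # nothing to stop without a PID file
    pid=$(cat "$pidfile")
    if ! kill -TERM "$pid" 2>/dev/null; then
        rm -f "$pidfile"                     # process already gone: clean up only
        return 0
    fi
    i=0
    while kill -0 "$pid" 2>/dev/null; do     # still alive?
        if [ "$i" -ge "$timeout" ]; then
            kill -KILL "$pid" 2>/dev/null    # last resort after the timeout
            break
        fi
        sleep 1
        i=$((i + 1))
    done
    rm -f "$pidfile"
}
```

A stop script could then consist of a single call such as `stop_gracefully /run/kibana.pid 30`.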

Starting Logstash with systemctl

Start script

[elk@node3 ~]$ cat logstash.sh

#!/bin/bash

sudo setfacl -m u:elk:rwx /run

source /etc/profile

/usr/local/elk/logstash/bin/logstash -f /usr/local/elk/logstash/config/input-output.conf >> /elk/es/logs/logstash.log &

echo $! > /run/logstash.pid

Stop script

[elk@node2 ~]$ cat logstashstop.sh

#!/bin/sh

PID=$(cat /run/logstash.pid)

kill -9 $PID

systemd unit file: start Logstash as the unprivileged elk user

[root@node2 ~]# cat /usr/lib/systemd/system/logstash.service

[Unit]

Description=logstash

After=network.target

[Service]

ExecStart=/home/elk/logstash.sh

ExecStop=/home/elk/logstashstop.sh

Type=forking

User=elk

Group=elk

PrivateTmp=true

[Install]

WantedBy=multi-user.target

Commands to enable at boot, stop, and start

[root@node2 ~]# systemctl enable logstash.service

Created symlink from /etc/systemd/system/multi-user.target.wants/logstash.service to /etc/systemd/system/logstash.service.

[root@node2 ~]# systemctl stop logstash.service

[root@node2 ~]# systemctl start logstash.service

Starting Elasticsearch with systemctl

Start script

[elk@node3 ~]$ cat elasticsearch.sh

#!/bin/bash

sudo setfacl -m u:elk:rwx /run

source /etc/profile

/usr/local/elk/elasticsearch/bin/elasticsearch >> /dev/null 2>&1 &

echo $! > /run/elasticsearch.pid

Stop script

[elk@node3 ~]$ cat elasticsearchstop.sh

#!/bin/bash

PID=$(cat /run/elasticsearch.pid)

kill -9 $PID

systemd unit file: start Elasticsearch as the unprivileged elk user

[root@node3 ~]# cat /usr/lib/systemd/system/elasticsearch.service

[Unit]

Description=elasticsearch

After=network.target

[Service]

LimitCORE=infinity

LimitNOFILE=65536

LimitNPROC=65536

ExecStart=/home/elk/elasticsearch.sh

ExecStop=/home/elk/elasticsearchstop.sh

Type=forking

User=elk

Group=elk

PrivateTmp=true

[Install]

WantedBy=multi-user.target

Commands to enable at boot, stop, and start

[root@node3 ~]# systemctl enable elasticsearch

Created symlink from /etc/systemd/system/multi-user.target.wants/elasticsearch.service to /usr/lib/systemd/system/elasticsearch.service.

[root@node3 ~]# systemctl stop elasticsearch

[root@node3 ~]# systemctl start elasticsearch

Starting Filebeat with systemctl

Start script

[elk@chat_app ~]$ vim /home/elk/filebeat.sh

#!/bin/bash

sudo setfacl -m u:elk:rwx /run

/usr/local/filebeat/filebeat -e -c /usr/local/filebeat/filebeat.yml >> /home/elk/log/filebeat.log 2>&1 &

echo $! > /run/filebeat.pid

[elk@chat_app ~]$ mkdir /home/elk/log

Stop script

[elk@chat_app ~]$ vim /home/elk/filebeatstop.sh

#!/bin/bash

PID=$(cat /run/filebeat.pid)

kill -9 $PID

systemd unit file: start Filebeat as the unprivileged elk user

[root@chat_app ~]# vim /usr/lib/systemd/system/filebeat.service

[Unit]

Description=filebeat

After=network.target

[Service]

ExecStart=/home/elk/filebeat.sh

ExecStop=/home/elk/filebeatstop.sh

Type=forking

User=elk

Group=elk

PrivateTmp=true

[Install]

WantedBy=multi-user.target

Commands to enable at boot, stop, and start

[root@chat_app ~]# systemctl enable filebeat.service

Created symlink from /etc/systemd/system/multi-user.target.wants/filebeat.service to /usr/lib/systemd/system/filebeat.service.

[root@chat_app ~]# systemctl stop filebeat.service

[root@chat_app ~]# systemctl start filebeat.service