Installing Kubernetes 1.13 with kubeadm on CentOS 7

Overview of the kubeadm procedure:

1. master and nodes: install kubelet, kubeadm, docker

2. master: kubeadm init

3. nodes: kubeadm join

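1. There are two ways to install Kubernetes with kubeadm:

One is to download the offline installation packages from Google's site.

The other is to install from the Alibaba Cloud (Aliyun) mirror, which is the approach used in this article.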

2. Set up the Kubernetes repository

vi /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes Repo

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

gpgcheck=0

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg

enabled=1
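The same repository file can also be written without an interactive editor. A minimal sketch, assuming Bash; the function name write_k8s_repo and its optional path argument are hypothetical, and the file contents are exactly the lines above:

```shell
#!/usr/bin/env bash
# Hypothetical helper: writes the Aliyun Kubernetes yum repo definition shown above.
# The target path defaults to /etc/yum.repos.d/kubernetes.repo (writing there needs root).
write_k8s_repo() {
  local repo_file="${1:-/etc/yum.repos.d/kubernetes.repo}"
  cat > "$repo_file" <<'EOF'
[kubernetes]
name=Kubernetes Repo
baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/
gpgcheck=0
gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg
enabled=1
EOF
}
```

Run it as root with no argument to write /etc/yum.repos.d/kubernetes.repo; yum repolist should then list the kubernetes repository.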

Set up the docker-ce repository (skip this if Docker is already installed):

wget -P /etc/yum.repos.d/ https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

3. Disable swap (by default, kubelet refuses to start while swap is enabled)

swapoff -a

To keep swap off after a reboot, also edit

vi /etc/fstab

and comment out the swap line:

#UUID=7dac6afd-57ad-432c-8736-5a3ba67340ad swap swap defaults 0 0

Check swap usage with free -m; the Swap line should show 0.
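Commenting out the swap entry can also be scripted. A minimal sketch, assuming the GNU sed shipped with CentOS 7; the function name comment_out_swap is hypothetical. It prefixes every uncommented fstab line that has a separate swap field with # and keeps a backup next to the file (/etc/fstab.bak by default):

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab file.
# Defaults to /etc/fstab (needs root); the original is saved as <file>.bak.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  # Lines not starting with '#' that contain a whitespace-delimited "swap" field get a '#' prefix.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```

Run it as root, then swapoff -a; free -m should report 0 total swap.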

4. Install packages on the master and worker nodes

yum install docker-ce kubelet kubeadm kubectl

If Docker is already installed, only run yum install kubelet kubeadm kubectl
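Without version numbers, yum installs the newest packages in the repository, which may be newer than the v1.13.0 control plane initialized below. A sketch that pins the versions and also enables the services; the function name k8s_install_cmds and the default version 1.13.0 are assumptions. It only prints the commands, so they can be reviewed before running (drop docker-ce from the first line if Docker is already installed):

```shell
#!/usr/bin/env bash
# Sketch: print the install and service commands for one node.
# The default version 1.13.0 is an assumption chosen to match --kubernetes-version=v1.13.0.
k8s_install_cmds() {
  local version="${1:-1.13.0}"
  # Pin all three Kubernetes packages to the same version as the control plane.
  echo "yum install -y docker-ce kubelet-${version} kubeadm-${version} kubectl-${version}"
  # Docker must be running before kubeadm init/join.
  echo "systemctl enable docker"
  echo "systemctl start docker"
  # kubeadm starts kubelet itself; enabling it brings it back after a reboot.
  echo "systemctl enable kubelet"
}
```

As root: k8s_install_cmds | sh -e (sh -e stops at the first failing command).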

5. Initialize the master (master node only)

kubeadm init --kubernetes-version=v1.13.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12

If you get the following error:

[root@yanfa2 bridge]# kubeadm init --kubernetes-version=v1.13.0 --pod-network-cidr=10.244.0.0/16 --service-cidr=10.96.0.0/12

[init] Using Kubernetes version: v1.13.0

[preflight] Running pre-flight checks

[preflight] Pulling images required for setting up a Kubernetes cluster

[preflight] This might take a minute or two, depending on the speed of your internet connection

[preflight] You can also perform this action in beforehand using 'kubeadm config images pull'

error execution phase preflight: [preflight] Some fatal errors occurred:

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-apiserver:v1.13.0: output: Trying to pull repository k8s.gcr.io/kube-apiserver ...

Get https://k8s.gcr.io/v1/_ping: dial tcp 108.177.125.82:443: i/o timeout

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-controller-manager:v1.13.0: output: Trying to pull repository k8s.gcr.io/kube-controller-manager ...

Get https://k8s.gcr.io/v1/_ping: dial tcp 108.177.125.82:443: i/o timeout

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-scheduler:v1.13.0: output: Trying to pull repository k8s.gcr.io/kube-scheduler ...

Get https://k8s.gcr.io/v1/_ping: dial tcp 108.177.125.82:443: i/o timeout

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/kube-proxy:v1.13.0: output: Trying to pull repository k8s.gcr.io/kube-proxy ...

Get https://k8s.gcr.io/v1/_ping: dial tcp 108.177.97.82:443: i/o timeout

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/pause:3.1: output: Trying to pull repository k8s.gcr.io/pause ...

Get https://k8s.gcr.io/v1/_ping: dial tcp 108.177.97.82:443: i/o timeout

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/etcd:3.2.24: output: Trying to pull repository k8s.gcr.io/etcd ...

Get https://k8s.gcr.io/v1/_ping: dial tcp 108.177.97.82:443: i/o timeout

, error: exit status 1

[ERROR ImagePull]: failed to pull image k8s.gcr.io/coredns:1.2.6: output: Trying to pull repository k8s.gcr.io/coredns ...

Get https://k8s.gcr.io/v1/_ping: dial tcp 108.177.97.82:443: i/o timeout

, error: exit status 1

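[preflight] If you know what you are doing, you can make a check non-fatal with `--ignore-preflight-errors=...`

This happens because the images cannot be downloaded from k8s.gcr.io. Pull them from mirrors instead with the commands below (check the version numbers and adjust them to match your own error messages):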

docker pull mirrorgooglecontainers/kube-apiserver-amd64:v1.13.0

docker pull mirrorgooglecontainers/kube-controller-manager-amd64:v1.13.0

docker pull mirrorgooglecontainers/kube-scheduler-amd64:v1.13.0

docker pull mirrorgooglecontainers/kube-proxy-amd64:v1.13.0

docker pull mirrorgooglecontainers/pause:3.1

docker pull mirrorgooglecontainers/etcd-amd64:3.2.24

docker pull coredns/coredns:1.2.6

Then use docker tag to retag the images with the names kubeadm expects:

docker tag docker.io/mirrorgooglecontainers/kube-apiserver-amd64:v1.13.0 k8s.gcr.io/kube-apiserver:v1.13.0

docker tag docker.io/mirrorgooglecontainers/kube-controller-manager-amd64:v1.13.0 k8s.gcr.io/kube-controller-manager:v1.13.0

docker tag docker.io/mirrorgooglecontainers/kube-scheduler-amd64:v1.13.0 k8s.gcr.io/kube-scheduler:v1.13.0

docker tag docker.io/mirrorgooglecontainers/kube-proxy-amd64:v1.13.0 k8s.gcr.io/kube-proxy:v1.13.0

docker tag docker.io/mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1

docker tag docker.io/mirrorgooglecontainers/etcd-amd64:3.2.24 k8s.gcr.io/etcd:3.2.24

docker tag docker.io/coredns/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6
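The fourteen commands above follow one pattern (pull from the Docker Hub mirror, then retag as k8s.gcr.io), so they can be generated from a single table. A sketch, assuming the same image list and versions as above; the function name k8s_image_cmds is hypothetical. Short Docker Hub names such as coredns/coredns:1.2.6 refer to the same local images as the docker.io/... names used above:

```shell
#!/usr/bin/env bash
# Sketch: print the docker pull / docker tag commands for the kubeadm v1.13.0 images.
k8s_image_cmds() {
  local src dst
  # Each line: <image on the Docker Hub mirror> <name kubeadm looks for>
  while read -r src dst; do
    echo "docker pull ${src}"
    echo "docker tag ${src} ${dst}"
  done <<'EOF'
mirrorgooglecontainers/kube-apiserver-amd64:v1.13.0 k8s.gcr.io/kube-apiserver:v1.13.0
mirrorgooglecontainers/kube-controller-manager-amd64:v1.13.0 k8s.gcr.io/kube-controller-manager:v1.13.0
mirrorgooglecontainers/kube-scheduler-amd64:v1.13.0 k8s.gcr.io/kube-scheduler:v1.13.0
mirrorgooglecontainers/kube-proxy-amd64:v1.13.0 k8s.gcr.io/kube-proxy:v1.13.0
mirrorgooglecontainers/pause:3.1 k8s.gcr.io/pause:3.1
mirrorgooglecontainers/etcd-amd64:3.2.24 k8s.gcr.io/etcd:3.2.24
coredns/coredns:1.2.6 k8s.gcr.io/coredns:1.2.6
EOF
}
```

Review the output, then run it as root with k8s_image_cmds | sh -e. kubeadm config images list --kubernetes-version v1.13.0 prints the exact image names init expects, which is a quick way to check the table against your version.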

6. Re-run the kubeadm init command; the master now initializes successfully.

Then run:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

export KUBECONFIG=/etc/kubernetes/admin.conf

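Install the pod network add-on (flannel; its default network, 10.244.0.0/16, matches the --pod-network-cidr passed to kubeadm init):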

kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/v0.10.0/Documentation/kube-flannel.yml

A successful kubeadm init ends with output like the following:

Your Kubernetes master has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of machines by running the following on each node

as root:

kubeadm join 192.168.7.216:6443 --token 16lrz8.amk86wpd1yd3mqqg --discovery-token-ca-cert-hash sha256:241adaf533f030e95ae606ddeaa71b4f7f93b443bb12e2470ae918a62e9cf214

7. Worker node setup

On each worker node, perform steps 2, 3 and 4 (repository setup, disabling swap, package installation).

7.1 Join the worker node to the cluster

Run:

systemctl enable kubelet.service

echo 1 > /proc/sys/net/bridge/bridge-nf-call-iptables

echo 1 > /proc/sys/net/ipv4/ip_forward

kubeadm join 192.168.7.216:6443 --token 16lrz8.amk86wpd1yd3mqqg --discovery-token-ca-cert-hash sha256:241adaf533f030e95ae606ddeaa71b4f7f93b443bb12e2470ae918a62e9cf214
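The two echo commands above only last until the next reboot. A sketch that persists them in a sysctl drop-in file; the function name write_k8s_sysctl and the file name /etc/sysctl.d/k8s.conf are assumptions:

```shell
#!/usr/bin/env bash
# Hypothetical helper: persist the kernel settings set with echo above.
# Defaults to /etc/sysctl.d/k8s.conf (needs root).
write_k8s_sysctl() {
  local conf="${1:-/etc/sysctl.d/k8s.conf}"
  cat > "$conf" <<'EOF'
net.bridge.bridge-nf-call-iptables = 1
net.ipv4.ip_forward = 1
EOF
}
```

Run it as root, then apply the settings with sysctl --system. On many systems the net.bridge.* key only exists after the br_netfilter module is loaded (modprobe br_netfilter).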

Error message:

[join] Reading configuration from the cluster...

[join] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -oyaml'

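unable to fetch the kubeadm-config ConfigMap: failed to get config map: Unauthorized

This happened because I did not join the worker node on the same day I installed the master. The token expired after 24 hours, so a new one had to be generated: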

kubeadm token create (create a new token)

kubeadm token list (list existing tokens)

Then run the join command again with the new token:

kubeadm join 192.168.7.216:6443 --token 37dday.nnlp7wwq7ac2enjy --discovery-token-ca-cert-hash sha256:241adaf533f030e95ae606ddeaa71b4f7f93b443bb12e2470ae918a62e9cf214
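Instead of copying the token and hash by hand, running kubeadm token create --print-join-command on the master prints a complete join command with a new token. If only the --discovery-token-ca-cert-hash value is needed, it can be recomputed from the cluster CA certificate with the openssl pipeline given in the kubeadm documentation. A sketch wrapping it, assuming the default CA path /etc/kubernetes/pki/ca.crt and an RSA CA key (kubeadm's default); the function name ca_cert_hash is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print "sha256:<hex>" for a CA certificate's public key,
# the format expected by --discovery-token-ca-cert-hash. Assumes an RSA key.
# The body runs in a subshell so pipefail does not leak into the caller.
ca_cert_hash() (
  set -o pipefail
  ca_crt="${1:-/etc/kubernetes/pki/ca.crt}"
  # Extract the public key, convert it to DER, hash it, keep only the hex digest.
  hex=$(openssl x509 -pubkey -noout -in "$ca_crt" \
    | openssl rsa -pubin -outform der 2>/dev/null \
    | openssl dgst -sha256 -hex | sed 's/^.* //') || exit 1
  echo "sha256:${hex}"
)
```

On the master, ca_cert_hash should print the same sha256:<hex> value that kubeadm init printed.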

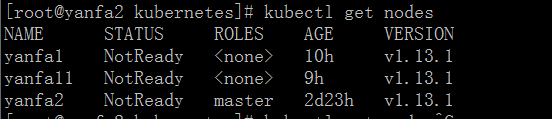

If a node is stuck in the NotReady state, fix it as follows:

Apply the flannel manifest: kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

If the problem above occurs on a worker node, that node also needs the images: run the same docker pull and docker tag commands listed in step 5 on the worker node.

To troubleshoot, inspect the node and follow the kubelet logs:

kubectl describe nodes yanfa2

journalctl -f -u kubelet.service

Confirm that the restart succeeded (nodes Ready, pods Running):

kubectl get node

kubectl get pod --all-namespaces
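When there are many nodes, the NotReady ones can be filtered out of kubectl get node. A sketch, assuming the default column layout (NAME first, STATUS second); the function name not_ready_nodes is hypothetical. It lists every node whose STATUS is not exactly Ready, so a cordoned node (Ready,SchedulingDisabled) is listed too:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `kubectl get node` output on stdin and print the
# names of nodes whose STATUS column is anything other than "Ready".
not_ready_nodes() {
  # Skip the header row; column 1 is NAME, column 2 is STATUS.
  awk 'NR > 1 && $2 != "Ready" { print $1 }'
}
```

kubectl get node | not_ready_nodes prints nothing once every node is Ready. kubectl 1.11 and later can also block until that happens: kubectl wait --for=condition=Ready node --all --timeout=300s.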