Summary of Oracle 12c New Features

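- 1.1 Invisible columns

- 1.2 Multiple indexes on the same set of columns

- 1.3 DDL logging

- 1.4 New backup privilege (SYSBACKUP)

- 1.5 Data Pump enhancements

- 1.6 Full transportable export/import for database migration

- 1.7 Extended data types: 32K strings

- 1.8 Defining functions in the WITH clause of a SQL statement (12.1.0.2)

- 1.9 Moving and renaming data files online

- 1.10 Advanced index compression

- 1.11 PGA size limit

- 1.12 RMAN table-level recovery

- 1.13 In-Memory option

- 1.14 Configuring a second network and its SCAN

- 1.15 Full database caching

- 1.16 Sharding Database (12.2.0.1)

- 1.17 Converting a non-partitioned table to a partitioned table online (12.2.0.1)

- 1.18 Moving tables online (12.2.0.1)

- 1.19 Data Guard: restoring data files over the network

- 1.20 In-Database Archiving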

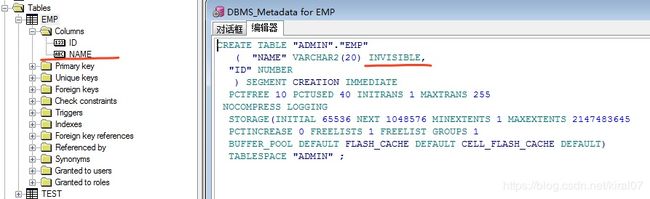

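1.1 Invisible columns

In 12c R1 you can create invisible columns in a table. An invisible column does not appear in generic access such as SELECT * or DESCRIBE; it is returned only when it is named explicitly in the SQL statement or in a predicate. In SQL*Plus, SET COLINVISIBLE ON makes DESCRIBE list invisible columns as well.

Scenario:

Create a table EMP with one column defined as INVISIBLE, then query all columns and observe the result.

Steps:

- Create the table emp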

create table emp (id number,name varchar2(20) invisible);

insert into emp(id,name) values(1,'tom');

insert into emp(id,name) values(2,'mike');

commit;

- Describe the table

SQL> desc emp;

Name Null? Type

-------- -------- ----------

ID NUMBER

SQL> set colinvisible on

SQL> desc emp;

Name Null? Type

----------------------------------------- -------- ----------------------------

ID NUMBER

NAME (INVISIBLE) VARCHAR2(20)

- Query all columns with SELECT *

SQL> select * from emp;

ID

----------

1

2

- Query with the column names specified explicitly

SQL> select id,name from emp;

ID NAME

---------- --------------------

1 tom

2 mike

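1.2 Multiple indexes on the same set of columns

In 12c you can create several indexes of different types on the same column or columns of a table, provided that only one of them is visible at a time. The additional indexes are created with the INVISIBLE attribute.

Example: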

create table dup(name varchar2(10),sex varchar2(10),adr varchar2(200),id number(10),birth date);

create index idx_dup_adr on dup(adr);

create bitmap index bitidx_dup_adr on dup(adr) invisible;

To have the optimizer use the bitmap index, make the B-tree index invisible and then make the bitmap index visible.

alter index idx_dup_adr invisible;

alter index bitidx_dup_adr visible;

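1.3 DDL logging

Once DDL logging is enabled, each DDL statement is recorded in an XML log file together with its timestamp and the host IP address.

- Enable DDL logging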

SQL> ALTER SYSTEM|SESSION SET ENABLE_DDL_LOGGING=TRUE;

- The following DDL statements may be recorded in the XML or log file:

CREATE|ALTER|DROP|TRUNCATE TABLE

DROP USER

CREATE|ALTER|DROP PACKAGE|FUNCTION|VIEW|SYNONYM|SEQUENCE

- Drop the table dup

drop table dup;

- View the log

vi /u01/app/oracle/diag/rdbms/orcl/orcl/log/ddl/log.xml

[oracle@sdw2 ddl]$ vi log.xml

<msg time='2018-09-12T14:07:46.443+08:00' org_id='oracle' comp_id='rdbms'

msg_id='kpdbLogDDL:21810:2946163730' type='UNKNOWN' group='diag_adl'

level='16' host_id='sdw2' host_addr='192.168.212.112'

pid='9271' version='1' con_uid='1726817995'

con_id='3' con_name='PDBORCL'>

<txt>drop table dup

</txt>

</msg>
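The entry above can also be read programmatically. The following is a minimal sketch, assuming the log consists of a sequence of `<msg>` elements like the one shown; the function name `parse_ddl_log` and the synthetic `<log>` wrapper are illustrative choices, not part of any Oracle tool.

```python
import xml.etree.ElementTree as ET

# Sample entry copied from the log.xml excerpt above.
SAMPLE_LOG = """<msg time='2018-09-12T14:07:46.443+08:00' org_id='oracle' comp_id='rdbms'
 msg_id='kpdbLogDDL:21810:2946163730' type='UNKNOWN' group='diag_adl'
 level='16' host_id='sdw2' host_addr='192.168.212.112'
 pid='9271' version='1' con_uid='1726817995'
 con_id='3' con_name='PDBORCL'>
 <txt>drop table dup
 </txt>
</msg>
"""


def parse_ddl_log(text):
    """Return one dict per <msg> entry: timestamp, host address, container, DDL text."""
    # Assumption: the file is a sequence of <msg> elements without a single
    # root element, so it is wrapped in a synthetic root before parsing.
    root = ET.fromstring("<log>" + text + "</log>")
    entries = []
    for msg in root.iter("msg"):
        entries.append({
            "time": msg.get("time"),
            "host_addr": msg.get("host_addr"),
            "con_name": msg.get("con_name"),
            "ddl": (msg.findtext("txt") or "").strip(),
        })
    return entries


if __name__ == "__main__":
    for entry in parse_ddl_log(SAMPLE_LOG):
        print(entry["time"], entry["host_addr"], entry["con_name"], entry["ddl"])
```

To process a real file, read its contents and pass them to `parse_ddl_log`; statements containing characters that are not valid XML text would need extra handling that this sketch does not attempt.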

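1.4 New backup privilege (SYSBACKUP)

12c adds the SYSBACKUP administrative privilege, which lets a user run RMAN backup and recovery operations without being granted SYSDBA.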

grant sysbackup to admin

rman

connect target "admin/oracle@pdb as sysbackup"

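1.5 Data Pump enhancements

- Disabling redo log generation

When importing large tables, add the TRANSFORM option to disable redo generation for the imported objects and speed up the import.

impdp admin/oracle@pdb directory=dump dumpfile=admin.dmp logfile=admin_nolog.log schemas=admin TRANSFORM=DISABLE_ARCHIVE_LOGGING:Y

- Exporting a view as a table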

expdp admin/oracle@pdb directory=dump dumpfile=admin.dmp logfile=admin.log views_as_tables=view_test

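1.6 Full transportable export/import for database migration

The Full Transportable Export/Import feature of Oracle Database 12c upgrades or migrates a database to Oracle Database 12c (12.1.0.2 or later) with reduced downtime. Migrating from a single instance to a RAC cluster is supported.

Full database export and migration

Prerequisites:

- The target must be an Oracle Database 12c database. It can be a non-CDB or a PDB (pluggable database).

- Grant the DATAPUMP_IMP_FULL_DATABASE role to the user who performs the migration.

- Set the tablespaces to read-only before the upgrade or migration.

- The source database must be 11.2.0.3 or later.

- A single instance can be migrated to a RAC cluster by tablespace transport.

Steps:

- Prepare the 12c database

- Check the endian format of the source and target databases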

SELECT d.PLATFORM_NAME, ENDIAN_FORMAT

FROM V$TRANSPORTABLE_PLATFORM tp, V$DATABASE d

WHERE tp.PLATFORM_ID = d.PLATFORM_ID;

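If the endian formats are the same, the data files can be copied directly to the target platform. Otherwise, the data files must be converted with RMAN or DBMS_FILE_TRANSFER.

- Verify that the tablespaces to be migrated are self-contained

EXECUTE DBMS_TTS.TRANSPORT_SET_CHECK('CS,ADMIN,USERS', TRUE);

Check the result with:

SQL> SELECT * FROM TRANSPORT_SET_VIOLATIONS;

If the query returns rows, resolve the dependencies before continuing with the migration: the dependent objects must be moved into the tablespaces being migrated. If this step is skipped, the migrated objects will have problems.

- Create directory objects

Create a directory object on both the source and the target server for writing and reading the dump file.

- Set the tablespaces to be migrated to read-only on the source server

- Run a full transportable export with Data Pump on the source server

If the source is 11g (11.2.0.3 or later), VERSION=12 must be specified; if the source is 12c, the VERSION parameter is 12.0.0.

- Copy the source data files to the target

Copy the files physically into the data file directory on the target. Across platforms, transfer them with DBMS_FILE_TRANSFER.PUT_FILE over a database link, or use the RMAN CONVERT command.

- Run the import on the target

- Set the tablespaces back to read-write on the source database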

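Example scenario:

Migration across database versions on Linux.

Note: the same procedure also applies when migrating from Windows to Linux.

| Database | Operating system | Database version |
|---|---|---|
| orcl (source database) | CentOS7 | 11.2.0.4 |
| pdborcl (target PDB) | CentOS7 | 12.2.0.1 |

Steps

s1. Check whether the source and target databases have the same endian format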

SQL> SELECT d.PLATFORM_NAME, ENDIAN_FORMAT FROM V$TRANSPORTABLE_PLATFORM tp, V$DATABASE d WHERE tp.PLATFORM_ID = d.PLATFORM_ID;

PLATFORM_NAME

--------------------------------------------------------------------------------

ENDIAN_FORMAT

--------------

Linux x86 64-bit

Little
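The endian check feeds a simple decision: copy the data files as they are, or convert them first. A minimal sketch of that decision follows; the function name `datafile_transfer_plan` and its return values are hypothetical and only illustrate the rule stated earlier in this section.

```python
def datafile_transfer_plan(source_endian, target_endian):
    """Return "copy" or "convert" given ENDIAN_FORMAT on both sides."""
    src = source_endian.strip().lower()
    tgt = target_endian.strip().lower()
    for value in (src, tgt):
        # V$TRANSPORTABLE_PLATFORM reports the format as "Little" or "Big".
        if value not in ("little", "big"):
            raise ValueError(f"unexpected ENDIAN_FORMAT: {value!r}")
    if src == tgt:
        return "copy"      # same endianness: copy the data files directly
    return "convert"       # different endianness: RMAN CONVERT or DBMS_FILE_TRANSFER


if __name__ == "__main__":
    # Both databases in this scenario report "Little".
    print(datafile_transfer_plan("Little", "Little"))
```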

s2. Check whether the tablespaces are self-contained

The three tablespaces to be migrated from the source database:

SQL> EXECUTE DBMS_TTS.TRANSPORT_SET_CHECK('CS,ADMIN,USERS', TRUE);

SQL> SELECT * FROM TRANSPORT_SET_VIOLATIONS;

no rows selected

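No rows are returned, so all three tablespaces are self-contained.

s3. On the source database, create the dump directory used to export the metadata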

SQL> create or replace directory dump as '/u01/dump';

SQL> grant read,write on directory dump to public;

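s4. On the source database, create a "data file" directory object used to send the data files to the target

SQL> create or replace directory source as '/u01/app/oracle/oradata/orcl';

Note: this directory object lets a stored procedure send the source data files to the target database. The procedure transfers files between the directory objects defined on the two sides; this one points to the data file directory of the source database.

s5. On the source database, create a database link to the target, used to transfer the data files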

SQL> create public database link con_12c connect to system identified by oracle using '192.168.212.112/pdborcl';

s6. On the target database, create the dump directory used to read the dump file from the source

SQL> create or replace directory dump as '/u01/dump';

SQL> grant read,write on directory dump to public;

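s7. On the target database, create a "data file" directory object that points to the data file directory of the 12c PDB

SQL> create or replace directory tardir as '/u01/app/oracle/oradata/orcl/pdborcl';

Notes:

1. This directory object is where the source data files are delivered, that is, the data file directory of the target database's tablespaces.

2. Data files can be transferred from a file system to ASM, for example create or replace directory tardir as '+DATA/ORCL/774F1D03EEBA6B88E0530B0410ACEFB0/DATAFILE/';

s8. Set the tablespaces to read-only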

SQL> alter tablespace admin read only;

SQL> alter tablespace cs read only;

SQL> alter tablespace users read only;

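s9. Export the metadata from the source database

Full database export

expdp system/oracle dumpfile=full_db.dmp directory=dump logfile=full_db.log full=y transportable=always version=12

Exporting selected tablespaces

For example, to export the admin tablespace: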

expdp system/oracle dumpfile=admin.dmp directory=dump logfile=admin.log transport_tablespaces=admin

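s10. On the source database, call the stored procedure to transfer the data files to the target

Note: on Linux the data files can also be copied to the target directly.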

exec dbms_file_transfer.put_file(source_directory_object =>'source',source_file_name => 'admin.dbf',destination_directory_object => 'tardir',destination_file_name => 'admin.dbf',destination_database => 'con_12c');

exec dbms_file_transfer.put_file(source_directory_object =>'source',source_file_name => 'cs.dbf',destination_directory_object => 'tardir',destination_file_name => 'cs.dbf',destination_database => 'con_12c');

exec dbms_file_transfer.put_file(source_directory_object =>'source',source_file_name => 'users01.dbf',destination_directory_object => 'tardir',destination_file_name => 'users01.dbf',destination_database => 'con_12c');

Note:

The stored procedure supports transfers from a file system to ASM.

s11. Transfer the dump file and the data files to the target

[oracle@sdw1 dump]$ scp full_db.dmp oracle@192.168.212.112:/u01/dump

On the target database, confirm that the data files have arrived in the PDB data file directory

[root@sdw2 pdborcl]# ll -hrt /u01/app/oracle/oradata/orcl/pdborcl

total 1.7G

-rw-r----- 1 oracle oinstall 132M Sep 14 08:29 temp012018-08-14_01-06-20-262-AM.dbf

-rw-r----- 1 oracle oinstall 791M Sep 14 14:54 sysaux01.dbf

-rw-r----- 1 oracle oinstall 101M Sep 14 15:01 admin.dbf

-rw-r----- 1 oracle oinstall 61M Sep 14 15:01 cs.dbf

-rw-r----- 1 oracle oinstall 5.1M Sep 14 15:01 users01.dbf

-rw-r----- 1 oracle oinstall 211M Sep 14 15:01 undotbs01.dbf

-rw-r----- 1 oracle oinstall 431M Sep 14 15:01 system01.dbf

Note: for ASM, check in asmcmd whether the data files exist in the directory.

s12. Run the metadata import on the target

Full database import

impdp system/oracle@pdb full=Y dumpfile=full_db.dmp directory=dump transport_datafiles='/u01/app/oracle/oradata/orcl/pdborcl/users01.dbf','/u01/app/oracle/oradata/orcl/pdborcl/admin.dbf','/u01/app/oracle/oradata/orcl/pdborcl/cs.dbf' logfile=import2.log

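Notes:

(1) transport_datafiles lists the data files at their location in the target PDB.

(2) If the import fails with the following errors (12c R2), change the parameter below on the 12c database: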

ORA-12801: error signaled in parallel query server P057

ORA-00018: maximum number of sessions exceeded

alter system set parallel_max_servers=0 scope=spfile;

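Importing selected tablespaces

impdp system/oracle@pdborcl dumpfile=admin.dmp directory=dump transport_datafiles='+DATA/ORCL/774F1D03EEBA6B88E0530B0410ACEFB0/DATAFILE/ADMIN.DBF' logfile=admin_imp.log

The path given to transport_datafiles is the tablespace data file on the target database, that is, the data file the tablespace uses after the import.

Notes:

1. Before importing in tablespace mode, create the users on the target database in advance. If the target schema differs from the source schema, map it with remap_schema.

2. The tablespaces do not need to be created on the target database. After the import, check that each target schema has the expected default tablespace, or change it with alter user admin default tablespace admin;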
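Long transport_datafiles lists are easy to mistype. The sketch below assembles the parameter from the target directory and the file names; the helper name `transport_datafiles_param` is hypothetical, and the paths are the ones used in this scenario.

```python
import posixpath


def transport_datafiles_param(target_dir, file_names):
    """Build the transport_datafiles=... argument for impdp.

    Each data file path on the target is single-quoted and the paths are
    comma-separated, matching the impdp command lines shown in this section.
    """
    paths = [posixpath.join(target_dir, name) for name in file_names]
    return "transport_datafiles=" + ",".join(f"'{path}'" for path in paths)


if __name__ == "__main__":
    print(transport_datafiles_param(
        "/u01/app/oracle/oradata/orcl/pdborcl",
        ["users01.dbf", "admin.dbf", "cs.dbf"],
    ))
```

The same helper works for an ASM directory such as '+DATA/ORCL/774F1D03EEBA6B88E0530B0410ACEFB0/DATAFILE/', because the paths are joined with forward slashes only.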

s13. Check that the tablespaces and users were all imported

SQL> select name from v$tablespace;

NAME

------------------------------

SYSTEM

SYSAUX

UNDOTBS1

TEMP

ADMIN

CS

USERS

7 rows selected.

s14. Set the source tablespaces back to read-write

SQL> alter tablespace admin read write;

SQL> alter tablespace cs read write;

SQL> alter tablespace users read write;

Oracle DOC

https://docs.oracle.com/database/121/ADMIN/transport.htm#ADMIN13722

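1.7 Extended data types: 32K strings

The maximum size of the VARCHAR2, NVARCHAR2, and RAW data types can be extended from 4K bytes (VARCHAR2, NVARCHAR2) and 2K bytes (RAW) to 32K bytes.

How to enable:

1. Shut down the CDB

2. Start the database in upgrade mode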

startup upgrade;

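3. Set the initialization parameter MAX_STRING_SIZE in the CDB root

ALTER SESSION SET CONTAINER=CDB$ROOT;

ALTER SYSTEM SET max_string_size=extended SCOPE=SPFILE;

4. Run the extension script as SYS

@?/rdbms/admin/utl32k.sql

5. Restart the database in normal mode

shutdown immediate;

startup;

6. Run the script that recompiles invalid objects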

@?/rdbms/admin/utlrp.sql

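1.8 Defining functions in the WITH clause of a SQL statement (12.1.0.2)

Oracle 12c lets you define a PL/SQL function inline in a SQL statement. This is useful when the database is read-only or when you do not want to create a stored function.

The following defines a function that checks whether its input is a number: it returns Y if it is and N if it is not.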

with function Is_Number

(x in varchar2) return varchar2 is

Plsql_Num_Error exception;

pragma exception_init(Plsql_Num_Error, -06502);

begin

if (To_Number(x) is NOT null) then

return 'Y';

else

return '';

end if;

exception

when Plsql_Num_Error then

return 'N';

end Is_Number;

The SELECT statement that follows the declaration calls the Is_Number function:

select is_number('1') from dual;

/

IS_NUMBER('1')

--------------------

Y

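1.9 Moving and renaming data files online

While a data file is being moved, end users can continue to run queries, DML, and DDL.

Move and rename a data file

SQL> alter database move datafile '/u01/app/oracle/oradata/orcl/pdborcl/admin.dbf' to '/home/oracle/admin1.dbf';

Note: a container can move only the data files that belong to it.

Move a data file from the file system to ASM

SQL> alter database move datafile '/home/oracle/admin.dbf' to '+DATA/pdborcl_admin.dbf';

Note: in a RAC environment, if the data file was created on one node, the CDB must be restarted on the other nodes after the data file is moved.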

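1.10 Advanced index compression

COMPRESS ADVANCED LOW

Test scenario: table a has a regular index and table b has a compressed index; DML and query statistics are compared for each.

1. Create an index on each of tables a and b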

SQL> create index idx_obname_a on a(object_name);

SQL> select segment_name,bytes/1024/1024 from dba_segments where segment_name =upper('idx_obname_a');

SEGMENT_NAME BYTES/1024/1024

------------------------------------

IDX_OBNAME_A 208

The uncompressed index occupies 208 MB.

create index idx_obname_b on b(object_name) COMPRESS ADVANCED LOW;

SQL> select segment_name,bytes/1024/1024 from dba_segments where segment_name =upper('idx_obname_b');

SEGMENT_NAME BYTES/1024/1024

------------------------------------

IDX_OBNAME_B 72

The compressed index occupies 72 MB.

2. Statistics for the query scenario

SQL> alter system flush shared_pool;

SQL> alter system flush buffer_cache;

SQL> select object_name from a where object_name='CS';

4s

Statistics

----------------------------------------------------------

79 recursive calls

0 db block gets

165 consistent gets

29 physical reads

0 redo size

347 bytes sent via SQL*Net to client

596 bytes received via SQL*Net from client

1 SQL*Net roundtrips to/from client

10 sorts (memory)

0 sorts (disk)

0 rows processed

SQL> alter system flush shared_pool;

SQL> alter system flush buffer_cache;

SQL> select object_name from b where object_name='CS';

Elapsed: 00:00:00.01

Statistics

----------------------------------------------------------

45 recursive calls

0 db block gets

64 consistent gets

5 physical reads

0 redo size

347 bytes sent via SQL*Net to client

596 bytes received via SQL*Net from client

1 SQL*Net roundtrips to/from client

6 sorts (memory)

0 sorts (disk)

0 rows processed

3. Statistics for the insert scenario

SQL> alter system flush shared_pool;

SQL> alter system flush buffer_cache;

SQL> insert into a select * from admin;

Elapsed: 00:00:54.84

Statistics

----------------------------------------------------------

2053 recursive calls

14688908 db block gets

322933 consistent gets

124007 physical reads

2647322152 redo size

865 bytes sent via SQL*Net to client

953 bytes received via SQL*Net from client

3 SQL*Net roundtrips to/from client

79 sorts (memory)

0 sorts (disk)

5156288 rows processed

SQL> alter system flush shared_pool;

SQL> alter system flush buffer_cache;

SQL> insert into b select * from admin;

Elapsed: 00:00:36.67

Statistics

----------------------------------------------------------

1229 recursive calls

10458105 db block gets

222670 consistent gets

81895 physical reads

1887079004 redo size

869 bytes sent via SQL*Net to client

953 bytes received via SQL*Net from client

3 SQL*Net roundtrips to/from client

43 sorts (memory)

0 sorts (disk)

5156288 rows processed

4. Statistics for the delete scenario

SQL> alter system flush shared_pool;

SQL> alter system flush buffer_cache;

SQL> delete from a where object_name='CS';

Elapsed: 00:00:00.13

Statistics

----------------------------------------------------------

81 recursive calls

0 db block gets

171 consistent gets

40 physical reads

0 redo size

870 bytes sent via SQL*Net to client

956 bytes received via SQL*Net from client

3 SQL*Net roundtrips to/from client

13 sorts (memory)

0 sorts (disk)

0 rows processed

SQL> alter system flush shared_pool;

SQL> alter system flush buffer_cache;

SQL> delete from b where object_name='CS';

Elapsed: 00:00:00.03

Statistics

----------------------------------------------------------

81 recursive calls

0 db block gets

153 consistent gets

33 physical reads

0 redo size

870 bytes sent via SQL*Net to client

956 bytes received via SQL*Net from client

3 SQL*Net roundtrips to/from client

13 sorts (memory)

0 sorts (disk)

0 rows processed

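Summary: in this test, advanced index compression reduced the index size from 208 MB to 72 MB and lowered logical reads, physical reads, and redo generation, so it can reduce database I/O to some extent.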
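To put the figures from this test side by side, the sketch below computes the relative reduction for a few of the statistics reported above. The numbers are copied from the output in this section; the helper `reduction` is only an illustration, and a single run on one system is not a benchmark.

```python
def reduction(before, after):
    """Fractional reduction when going from `before` to `after`."""
    return (before - after) / before


# (uncompressed index on table a, compressed index on table b)
MEASUREMENTS = {
    "index size (MB)": (208, 72),
    "query consistent gets": (165, 64),
    "query physical reads": (29, 5),
    "insert db block gets": (14688908, 10458105),
    "insert redo size (bytes)": (2647322152, 1887079004),
}

if __name__ == "__main__":
    for name, (plain, compressed) in MEASUREMENTS.items():
        print(f"{name}: {reduction(plain, compressed):.1%} lower with compression")
```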

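1.11 PGA size limit

Before 12c, PGA memory was managed with the PGA_AGGREGATE_TARGET parameter. That parameter is only a target: actual usage can exceed it, so there was no way to put a hard limit on the PGA size.

In 12c, the PGA_AGGREGATE_LIMIT parameter sets an upper bound on the PGA memory used by an Oracle instance. When the limit is exceeded, Oracle reduces PGA usage by terminating sessions.

Note: with ASMM, PGA_AGGREGATE_LIMIT defaults to 2 GB when twice PGA_AGGREGATE_TARGET does not exceed 2 GB, and to twice PGA_AGGREGATE_TARGET otherwise.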
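The default described in the note can be written as a one-line rule. The sketch below implements only what the note states; the function name is hypothetical, and the Oracle reference describes further terms in the default (for example one based on the PROCESSES parameter) that are deliberately left out here.

```python
GB = 1024 ** 3


def default_pga_aggregate_limit(pga_aggregate_target):
    """Default limit per the note above: 2 GB or twice the target, whichever is larger.

    Assumption: other factors that influence the real default are ignored.
    """
    return max(2 * GB, 2 * pga_aggregate_target)


if __name__ == "__main__":
    for target_gb in (0.5, 1, 4):
        limit = default_pga_aggregate_limit(int(target_gb * GB))
        print(f"PGA_AGGREGATE_TARGET={target_gb}G -> default limit {limit / GB:g}G")
```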

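1.12 RMAN table-level recovery

Before Oracle 12c, RMAN could perform complete or incomplete recovery only at the database (data file) or tablespace level. If a single table was truncated or dropped, it could be recovered with flashback table or flashback database, or from a dump file. In 12c, RMAN can recover a table from a backup to a point in time before the failure without affecting other tables. During the recovery RMAN creates an auxiliary instance, restores the system data files (SYSTEM, SYSAUX, UNDOTBS), applies redo or archived logs, and finally imports the table into the target schema with Data Pump. The automatically created auxiliary instance is removed once the recovery finishes.

Scenario 1:

At time T0, table T1 exists and contains no rows; a CDB-level full RMAN backup is taken at this point. At time T1, three test rows are inserted and committed. At time T2, table T1 is dropped (simulating an accidental drop).

Solution:

Use table-level recovery to restore table T1 to a point just before time T2.

1. Check table T1 first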

12:47:45 SQL> select * from t1;

no rows selected

12:47:55 SQL> select * from t2;

ID

----------

1

2

The output above shows the current contents of tables T1 and T2.

2. At time T0, take a full RMAN backup at the CDB level

RMAN> backup as compressed backupset database format '/u01/rman/%d_%T_%U.bak';

Starting backup at 2018-08-13 12:48:14

Finished backup at 2018-08-13 12:49:25

3. At time T1, insert three rows

12:48:04 SQL> insert into t1 values(3);

1 row created.

12:50:54 SQL> insert into t1 values(4);

1 row created.

12:51:12 SQL> insert into t1 values(5);

1 row created.

12:51:25 SQL> commit;

Commit complete.

12:51:28 SQL> select * from t1;

ID

----------

3

4

5

Table T1 now contains the rows inserted at time T1.

4. At time T2, drop table T1

12:51:50 SQL> drop table t1 purge;

Table dropped.

Record the time of the drop: 12:52:03

12:52:03 SQL> select * from t1;

select * from t1

*

ERROR at line 1:

ORA-00942: table or view does not exist

Table T1 has been dropped.

5. Run the table-level recovery

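RMAN> RECOVER TABLE admin.t1 OF PLUGGABLE DATABASE pdborcl2

UNTIL TIME "to_date('2018-08-13 12:52:03','yyyy-mm-dd hh24:mi:ss')"

# point in time just before the drop (12:52:03)

AUXILIARY DESTINATION '/u01/inst'

# directory in which Oracle automatically creates the auxiliary instance

DATAPUMP DESTINATION '/u01/dump';

# directory for the Data Pump dump file that is imported back into the original database

# (optional) DUMP FILE 'tablename.dmp' names the dump file; it goes before the semicolon

# (optional) NOTABLEIMPORT skips the automatic import of the table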
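When the same recovery is scripted for different tables or timestamps, the command text can be generated rather than typed. A minimal sketch, assuming the clause order used in this scenario; the function `recover_table_command` is hypothetical and only assembles the text, it does not run RMAN.

```python
from datetime import datetime


def recover_table_command(owner, table, pdb, until, aux_dest, dump_dest):
    """Assemble the RMAN RECOVER TABLE command used in this scenario."""
    stamp = until.strftime("%Y-%m-%d %H:%M:%S")
    return (
        f"RECOVER TABLE {owner}.{table} OF PLUGGABLE DATABASE {pdb}\n"
        f"UNTIL TIME \"to_date('{stamp}','yyyy-mm-dd hh24:mi:ss')\"\n"
        f"AUXILIARY DESTINATION '{aux_dest}'\n"
        f"DATAPUMP DESTINATION '{dump_dest}';"
    )


if __name__ == "__main__":
    print(recover_table_command(
        "admin", "t1", "pdborcl2",
        datetime(2018, 8, 13, 12, 52, 3),   # time recorded at the drop
        "/u01/inst", "/u01/dump",
    ))
```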

Recovery log:

Starting recover at 2018-08-13 12:52:54

current log archived

using channel ORA_DISK_1

RMAN-05026: warning: presuming following set of tablespaces applies to specified point-in-time

List of tablespaces expected to have UNDO segments

Tablespace SYSTEM

Tablespace UNDOTBS1

Creating automatic instance, with SID='Fina'

initialization parameters used for automatic instance:

db_name=ORCL

db_unique_name=Fina_pitr_pdborcl2_ORCL

compatible=12.2.0

db_block_size=8192

db_files=200

diagnostic_dest=/u01/app/oracle

_system_trig_enabled=FALSE

sga_target=796M

processes=200

db_create_file_dest=/u01/inst

log_archive_dest_1='location=/u01/inst'

enable_pluggable_database=true

_clone_one_pdb_recovery=true

#No auxiliary parameter file used

starting up automatic instance ORCL

Oracle instance started

Total System Global Area 834666496 bytes

Fixed Size 8798264 bytes

Variable Size 226496456 bytes

Database Buffers 595591168 bytes

Redo Buffers 3780608 bytes

Automatic instance created

contents of Memory Script:

{

# set requested point in time

set until time "to_date('2018-08-13 12:52:03','yyyy-mm-dd hh24:mi:ss')";

# restore the controlfile

restore clone controlfile;

# mount the controlfile

sql clone 'alter database mount clone database';

# archive current online log

sql 'alter system archive log current';

}

executing Memory Script

executing command: SET until clause

Starting restore at 2018-08-13 12:53:03

allocated channel: ORA_AUX_DISK_1

channel ORA_AUX_DISK_1: SID=34 device type=DISK

channel ORA_AUX_DISK_1: starting datafile backup set restore

channel ORA_AUX_DISK_1: restoring control file

channel ORA_AUX_DISK_1: reading from backup piece /u01/app/oracle/product/12.2.0/db_1/dbs/c-1498869325-20180813-04

channel ORA_AUX_DISK_1: piece handle=/u01/app/oracle/product/12.2.0/db_1/dbs/c-1498869325-20180813-04 tag=TAG20180813T124925

channel ORA_AUX_DISK_1: restored backup piece 1

channel ORA_AUX_DISK_1: restore complete, elapsed time: 00:00:01

output file name=/u01/inst/ORCL/controlfile/o1_mf_fq23oj3h_.ctl

Finished restore at 2018-08-13 12:53:05

sql statement: alter database mount clone database

sql statement: alter system archive log current

contents of Memory Script:

{

# set requested point in time

set until time "to_date('2018-08-13 12:52:03','yyyy-mm-dd hh24:mi:ss')";

# set destinations for recovery set and auxiliary set datafiles

set newname for clone datafile 1 to new;

set newname for clone datafile 5 to new;

set newname for clone datafile 3 to new;

set newname for clone datafile 19 to new;

set newname for clone datafile 20 to new;

set newname for clone tempfile 1 to new;

set newname for clone tempfile 4 to new;

# switch all tempfiles

switch clone tempfile all;

# restore the tablespaces in the recovery set and the auxiliary set

restore clone datafile 1, 5, 3, 19, 20;

switch clone datafile all;

}

executing Memory Script

executing command: SET until clause

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

executing command: SET NEWNAME

renamed tempfile 1 to /u01/inst/ORCL/datafile/o1_mf_temp_%u_.tmp in control file

renamed tempfile 4 to /u01/inst/ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_temp_%u_.tmp in control file

Starting restore at 2018-08-13 12:53:09

using channel ORA_AUX_DISK_1

channel ORA_AUX_DISK_1: starting datafile backup set restore

channel ORA_AUX_DISK_1: specifying datafile(s) to restore from backup set

channel ORA_AUX_DISK_1: restoring datafile 00001 to /u01/inst/ORCL/datafile/o1_mf_system_%u_.dbf

channel ORA_AUX_DISK_1: restoring datafile 00005 to /u01/inst/ORCL/datafile/o1_mf_undotbs1_%u_.dbf

channel ORA_AUX_DISK_1: restoring datafile 00003 to /u01/inst/ORCL/datafile/o1_mf_sysaux_%u_.dbf

channel ORA_AUX_DISK_1: reading from backup piece /u01/rman/ORCL_20180813_1otaevse_1_1.bak

channel ORA_AUX_DISK_1: piece handle=/u01/rman/ORCL_20180813_1otaevse_1_1.bak tag=TAG20180813T124814

channel ORA_AUX_DISK_1: restored backup piece 1

channel ORA_AUX_DISK_1: restore complete, elapsed time: 00:00:25

channel ORA_AUX_DISK_1: starting datafile backup set restore

channel ORA_AUX_DISK_1: specifying datafile(s) to restore from backup set

channel ORA_AUX_DISK_1: restoring datafile 00019 to /u01/inst/ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_system_%u_.dbf

channel ORA_AUX_DISK_1: restoring datafile 00020 to /u01/inst/ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_sysaux_%u_.dbf

channel ORA_AUX_DISK_1: reading from backup piece /u01/rman/ORCL_20180813_1ptaevt8_1_1.bak

channel ORA_AUX_DISK_1: piece handle=/u01/rman/ORCL_20180813_1ptaevt8_1_1.bak tag=TAG20180813T124814

channel ORA_AUX_DISK_1: restored backup piece 1

channel ORA_AUX_DISK_1: restore complete, elapsed time: 00:00:15

Finished restore at 2018-08-13 12:53:49

datafile 1 switched to datafile copy

input datafile copy RECID=12 STAMP=984056029 file name=/u01/inst/ORCL/datafile/o1_mf_system_fq23oov3_.dbf

datafile 5 switched to datafile copy

input datafile copy RECID=13 STAMP=984056029 file name=/u01/inst/ORCL/datafile/o1_mf_undotbs1_fq23ooth_.dbf

datafile 3 switched to datafile copy

input datafile copy RECID=14 STAMP=984056029 file name=/u01/inst/ORCL/datafile/o1_mf_sysaux_fq23oovg_.dbf

datafile 19 switched to datafile copy

input datafile copy RECID=15 STAMP=984056029 file name=/u01/inst/ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_system_fq23pgw4_.dbf

datafile 20 switched to datafile copy

input datafile copy RECID=16 STAMP=984056029 file name=/u01/inst/ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_sysaux_fq23pgx3_.dbf

contents of Memory Script:

{

# set requested point in time

set until time "to_date('2018-08-13 12:52:03','yyyy-mm-dd hh24:mi:ss')";

# online the datafiles restored or switched

sql clone "alter database datafile 1 online";

sql clone "alter database datafile 5 online";

sql clone "alter database datafile 3 online";

sql clone 'PDBORCL2' "alter database datafile

19 online";

sql clone 'PDBORCL2' "alter database datafile

20 online";

# recover and open database read only

recover clone database tablespace "SYSTEM", "UNDOTBS1", "SYSAUX", "PDBORCL2":"SYSTEM", "PDBORCL2":"SYSAUX";

sql clone 'alter database open read only';

}

executing Memory Script

executing command: SET until clause

sql statement: alter database datafile 1 online

sql statement: alter database datafile 5 online

sql statement: alter database datafile 3 online

sql statement: alter database datafile 19 online

sql statement: alter database datafile 20 online

Starting recover at 2018-08-13 12:53:50

using channel ORA_AUX_DISK_1

starting media recovery

archived log for thread 1 with sequence 35 is already on disk as file /u01/arch/1_35_971088589.dbf

archived log file name=/u01/arch/1_35_971088589.dbf thread=1 sequence=35

media recovery complete, elapsed time: 00:00:00

Finished recover at 2018-08-13 12:53:51

sql statement: alter database open read only

contents of Memory Script:

{

sql clone 'alter pluggable database PDBORCL2 open read only';

}

executing Memory Script

sql statement: alter pluggable database PDBORCL2 open read only

contents of Memory Script:

{

sql clone "create spfile from memory";

shutdown clone immediate;

startup clone nomount;

sql clone "alter system set control_files =

''/u01/inst/ORCL/controlfile/o1_mf_fq23oj3h_.ctl'' comment=

''RMAN set'' scope=spfile";

shutdown clone immediate;

startup clone nomount;

# mount database

sql clone 'alter database mount clone database';

}

executing Memory Script

sql statement: create spfile from memory

database closed

database dismounted

Oracle instance shut down

connected to auxiliary database (not started)

Oracle instance started

Total System Global Area 834666496 bytes

Fixed Size 8798264 bytes

Variable Size 226496456 bytes

Database Buffers 595591168 bytes

Redo Buffers 3780608 bytes

sql statement: alter system set control_files = ''/u01/inst/ORCL/controlfile/o1_mf_fq23oj3h_.ctl'' comment= ''RMAN set'' scope=spfile

Oracle instance shut down

connected to auxiliary database (not started)

Oracle instance started

Total System Global Area 834666496 bytes

Fixed Size 8798264 bytes

Variable Size 226496456 bytes

Database Buffers 595591168 bytes

Redo Buffers 3780608 bytes

sql statement: alter database mount clone database

contents of Memory Script:

{

# set requested point in time

set until time "to_date('2018-08-13 12:52:03','yyyy-mm-dd hh24:mi:ss')";

# set destinations for recovery set and auxiliary set datafiles

set newname for datafile 27 to new;

# restore the tablespaces in the recovery set and the auxiliary set

restore clone datafile 27;

switch clone datafile all;

}

executing Memory Script

executing command: SET until clause

executing command: SET NEWNAME

Starting restore at 2018-08-13 12:54:35

allocated channel: ORA_AUX_DISK_1

channel ORA_AUX_DISK_1: SID=34 device type=DISK

channel ORA_AUX_DISK_1: starting datafile backup set restore

channel ORA_AUX_DISK_1: specifying datafile(s) to restore from backup set

channel ORA_AUX_DISK_1: restoring datafile 00027 to /u01/inst/FINA_PITR_PDBORCL2_ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_admin_%u_.dbf

channel ORA_AUX_DISK_1: reading from backup piece /u01/rman/ORCL_20180813_1ptaevt8_1_1.bak

channel ORA_AUX_DISK_1: piece handle=/u01/rman/ORCL_20180813_1ptaevt8_1_1.bak tag=TAG20180813T124814

channel ORA_AUX_DISK_1: restored backup piece 1

channel ORA_AUX_DISK_1: restore complete, elapsed time: 00:00:01

Finished restore at 2018-08-13 12:54:36

datafile 27 switched to datafile copy

input datafile copy RECID=18 STAMP=984056077 file name=/u01/inst/FINA_PITR_PDBORCL2_ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_admin_fq23rcy4_.dbf

contents of Memory Script:

{

# set requested point in time

set until time "to_date('2018-08-13 12:52:03','yyyy-mm-dd hh24:mi:ss')";

# online the datafiles restored or switched

sql clone 'PDBORCL2' "alter database datafile

27 online";

# recover and open resetlogs

recover clone database tablespace "PDBORCL2":"ADMIN", "SYSTEM", "UNDOTBS1", "SYSAUX", "PDBORCL2":"SYSTEM", "PDBORCL2":"SYSAUX" delete archivelog;

alter clone database open resetlogs;

}

executing Memory Script

executing command: SET until clause

sql statement: alter database datafile 27 online

Starting recover at 2018-08-13 12:54:37

using channel ORA_AUX_DISK_1

starting media recovery

archived log for thread 1 with sequence 35 is already on disk as file /u01/arch/1_35_971088589.dbf

archived log file name=/u01/arch/1_35_971088589.dbf thread=1 sequence=35

media recovery complete, elapsed time: 00:00:00

Finished recover at 2018-08-13 12:54:37

database opened

contents of Memory Script:

{

sql clone 'alter pluggable database PDBORCL2 open';

}

executing Memory Script

sql statement: alter pluggable database PDBORCL2 open

contents of Memory Script:

{

# create directory for datapump import

sql 'PDBORCL2' "create or replace directory

TSPITR_DIROBJ_DPDIR as ''

/u01/dump''";

# create directory for datapump export

sql clone 'PDBORCL2' "create or replace directory

TSPITR_DIROBJ_DPDIR as ''

/u01/dump''";

}

executing Memory Script

sql statement: create or replace directory TSPITR_DIROBJ_DPDIR as ''/u01/dump''

sql statement: create or replace directory TSPITR_DIROBJ_DPDIR as ''/u01/dump''

Performing export of tables...

EXPDP> Starting "SYS"."TSPITR_EXP_Fina_zezj":

EXPDP> Processing object type TABLE_EXPORT/TABLE/TABLE_DATA

EXPDP> Processing object type TABLE_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS

EXPDP> Processing object type TABLE_EXPORT/TABLE/STATISTICS/MARKER

EXPDP> Processing object type TABLE_EXPORT/TABLE/TABLE

EXPDP> . . exported "ADMIN"."T1" 5.062 KB 3 rows

EXPDP> Master table "SYS"."TSPITR_EXP_Fina_zezj" successfully loaded/unloaded

EXPDP> ******************************************************************************

EXPDP> Dump file set for SYS.TSPITR_EXP_Fina_zezj is:

EXPDP> /u01/dump/tspitr_Fina_70883.dmp

EXPDP> Job "SYS"."TSPITR_EXP_Fina_zezj" successfully completed at Mon Aug 13 12:55:09 2018 elapsed 0 00:00:19

Export completed

contents of Memory Script:

{

# shutdown clone before import

shutdown clone abort

}

executing Memory Script

Oracle instance shut down

Performing import of tables...

IMPDP> Master table "SYS"."TSPITR_IMP_Fina_Cpli" successfully loaded/unloaded

IMPDP> Starting "SYS"."TSPITR_IMP_Fina_Cpli":

IMPDP> Processing object type TABLE_EXPORT/TABLE/TABLE

IMPDP> Processing object type TABLE_EXPORT/TABLE/TABLE_DATA

IMPDP> . . imported "ADMIN"."T1" 5.062 KB 3 rows

IMPDP> Processing object type TABLE_EXPORT/TABLE/STATISTICS/TABLE_STATISTICS

IMPDP> Processing object type TABLE_EXPORT/TABLE/STATISTICS/MARKER

IMPDP> Job "SYS"."TSPITR_IMP_Fina_Cpli" successfully completed at Mon Aug 13 12:55:27 2018 elapsed 0 00:00:13

Import completed

Removing automatic instance

Automatic instance removed

auxiliary instance file /u01/inst/ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_temp_fq23q0fj_.tmp deleted

auxiliary instance file /u01/inst/ORCL/datafile/o1_mf_temp_fq23pzdk_.tmp deleted

auxiliary instance file /u01/inst/FINA_PITR_PDBORCL2_ORCL/onlinelog/o1_mf_3_fq23rgso_.log deleted

auxiliary instance file /u01/inst/FINA_PITR_PDBORCL2_ORCL/onlinelog/o1_mf_2_fq23rfsh_.log deleted

auxiliary instance file /u01/inst/FINA_PITR_PDBORCL2_ORCL/onlinelog/o1_mf_1_fq23rfr4_.log deleted

auxiliary instance file /u01/inst/FINA_PITR_PDBORCL2_ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_admin_fq23rcy4_.dbf deleted

auxiliary instance file /u01/inst/ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_sysaux_fq23pgx3_.dbf deleted

auxiliary instance file /u01/inst/ORCL/67AFB4BA944126D2E0536E0410ACB92F/datafile/o1_mf_system_fq23pgw4_.dbf deleted

auxiliary instance file /u01/inst/ORCL/datafile/o1_mf_sysaux_fq23oovg_.dbf deleted

auxiliary instance file /u01/inst/ORCL/datafile/o1_mf_undotbs1_fq23ooth_.dbf deleted

auxiliary instance file /u01/inst/ORCL/datafile/o1_mf_system_fq23oov3_.dbf deleted

auxiliary instance file /u01/inst/ORCL/controlfile/o1_mf_fq23oj3h_.ctl deleted

auxiliary instance file tspitr_Fina_70883.dmp deleted

Finished recover at 2018-08-13 12:55:30

The table recovery is complete.

6. Verify the data

12:52:12 SQL> select * from t1;

ID

----------

3

4

5

12:56:03 SQL> select * from t2;

ID

----------

1

2

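Result: RMAN has recovered table T1 to its state before the drop.

Scenario 2: truncate the table, then drop it, and recover it with RMAN

1. Insert one test row first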

12:56:10 SQL> insert into t1 values(6);

1 row created.

13:26:17 SQL> commit;

Commit complete.

13:26:17 SQL> select * from t1;

ID

----------

3

4

5

6

2. Truncate table T1

13:26:21 SQL> truncate table t1;

Table truncated.

Record the time: the truncate finished at 13:26:45.

3. Drop table T1

13:26:45 SQL> drop table t1 purge;

Table dropped.

4. Recover with RMAN

RMAN> RECOVER TABLE admin.t1 of pluggable database pdborcl2

UNTIL time "to_date('2018-08-13 13:26:45','yyyy-mm-dd hh24:mi:ss')"

# point in time before the truncate

AUXILIARY DESTINATION '/u01/inst'

datapump destination '/u01/dump';

5. Check the table data

13:26:59 SQL> select * from t1;

ID

----------

3

4

5

6

13:34:30 SQL> select * from t2;

ID

----------

1

2

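Result: the recovery succeeded.

Summary of RMAN table-level recovery:

1. In 12c, RMAN table-level recovery can recover a table to any point in time before it was dropped, as long as the backups and archived logs cover that point. For example, if table T1 was truncated and then dropped, it can be recovered to a point before the truncate, and the data in other tables is not affected.

2. Table-level recovery can only use a CDB-level RMAN backup.

3. During recovery an auxiliary instance is created automatically and the SYSTEM, SYSAUX, and undo tablespaces are restored, so the auxiliary destination needs enough free space. The data is finally imported back into the original database with Data Pump.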

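1.13 In-Memory option

1. Enabling In-Memory

Enabling the In-Memory option (IMO) is straightforward: in 12.1.0.2 and later, setting INMEMORY_SIZE to a non-zero value enables the IM column store.

INMEMORY_SIZE is an instance-level parameter that defaults to 0. When it is set to a non-zero value, the minimum is 100M.

Normally, objects owned by SYS and objects in the SYSTEM and SYSAUX tablespaces cannot use the In-Memory option, although setting the hidden parameter "_enable_imc_sys" makes it possible.

The procedure for enabling Database In-Memory is as follows:

1. Set the INMEMORY_SIZE parameter: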

SQL> ALTER SYSTEM SET INMEMORY_SIZE=1G SCOPE=SPFILE;

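2. Check the SGA settings to make sure the instance can still start after INMEMORY_SIZE is set. If the database uses ASMM, check SGA_TARGET. If it uses AMM, check MEMORY_TARGET, and also check SGA_MAX_SIZE (or MEMORY_MAX_TARGET).

Note: starting with 12.2, the In-Memory area can be enlarged dynamically by raising the INMEMORY_SIZE parameter with ALTER SYSTEM.

3. Restart the database instance

4. Check whether the IM feature is enabled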

SQL> SHOW PARAMETER inmemory;

NAME TYPE VALUE

------------------------------------ ----------- ------------------------------

inmemory_adg_enabled boolean TRUE

inmemory_clause_default string

inmemory_expressions_usage string ENABLE

inmemory_force string DEFAULT

inmemory_max_populate_servers integer 2

inmemory_query string ENABLE

inmemory_size big integer 1G

inmemory_trickle_repopulate_servers_ integer 1

percent

inmemory_virtual_columns string MANUAL

optimizer_inmemory_aware boolean TRUE
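The sizing check in step 2 can be expressed as a small pre-flight test. This is a sketch under stated assumptions: the In-Memory area is taken to be carved out of the SGA, so INMEMORY_SIZE is compared against SGA_TARGET; the function name and messages are hypothetical, and real sizing also has to leave room for the buffer cache and shared pool.

```python
MB = 1024 ** 2
GB = 1024 ** 3


def check_inmemory_size(inmemory_size, sga_target):
    """Return a list of problems with the planned INMEMORY_SIZE (empty if none)."""
    problems = []
    if inmemory_size == 0:
        return problems  # IM column store disabled, nothing to check
    if inmemory_size < 100 * MB:
        problems.append("INMEMORY_SIZE is below the 100M minimum")
    if inmemory_size >= sga_target:
        # Assumption: the In-Memory area is part of the SGA.
        problems.append("INMEMORY_SIZE leaves no room for the rest of the SGA")
    return problems


if __name__ == "__main__":
    print(check_inmemory_size(1 * GB, 4 * GB))    # planned 1G inside a 4G SGA
    print(check_inmemory_size(50 * MB, 4 * GB))   # too small
```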

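2. Enabling and disabling the IM column store

1. Enabling at the table level:

Enable it either when the table is created or later by altering the table's INMEMORY attribute: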

create table test (id number) inmemory;

alter table test inmemory;

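1.14 Configuring a second network and its SCAN

In 11g R2 you could create a second network and VIP listeners on it, but SCAN listeners could serve only the first network. 12c adds SCAN listeners on a second subnet: with the listener_networks parameter set, a SCAN listener redirects database connections to the VIP listeners on the same subnet.

Procedure:

Plan the VIPs and the SCAN IP for the second network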

172.16.4.11 rac1

172.16.4.12 rac2

172.16.4.13 rac1-vip

10.100.1.13 rac1-test-vip

172.16.4.14 rac2-vip

10.100.1.14 rac2-test-vip

10.10.10.11 rac1-prv

10.10.10.12 rac2-prv

172.16.4.15 rac-scan

10.100.1.15 ractest-scan
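Before adding the second network it can help to confirm that every planned address really falls inside the intended subnet. The sketch below does this with Python's standard `ipaddress` module; the function name `hosts_in_subnet` is hypothetical, and the host list simply repeats part of the plan above.

```python
import ipaddress

# Part of the address plan from the text above.
PLANNED_HOSTS = """
172.16.4.13 rac1-vip
10.100.1.13 rac1-test-vip
172.16.4.14 rac2-vip
10.100.1.14 rac2-test-vip
172.16.4.15 rac-scan
10.100.1.15 ractest-scan
"""


def hosts_in_subnet(hosts_text, subnet):
    """Return the host names whose address belongs to the given subnet."""
    network = ipaddress.ip_network(subnet)
    names = []
    for line in hosts_text.splitlines():
        fields = line.split()
        if len(fields) < 2 or fields[0].startswith("#"):
            continue  # skip blank lines and comments
        if ipaddress.ip_address(fields[0]) in network:
            names.append(fields[1])
    return names


# Names that belong to the second network (10.100.1.0/255.255.255.0).
print(hosts_in_subnet(PLANNED_HOSTS, "10.100.1.0/255.255.255.0"))
```

The same subnet and netmask are later passed to `srvctl add network -netnum 2`, so a mismatch caught here avoids a VIP that cannot start.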

1. Add the new public network

[root@rac1 ~]# oifcfg getif

ens34 172.16.4.0 global public

ens35 10.10.10.0 global cluster_interconnect

At this point only ens34 is on a public network.

ens40 is the NIC on the second subnet; add the new subnet as public:

[root@rac1 ~]# oifcfg setif -global ens40/10.100.1.0:public

After adding it, check the cluster's public network information again:

[root@rac1 ~]# oifcfg getif

ens34 172.16.4.0 global public

ens35 10.10.10.0 global cluster_interconnect

ens40 10.100.1.0 global public

2. Add the new network as a cluster resource

Add a network with netnum 2 to the cluster resources:

[root@rac1 ~]# srvctl add network -netnum 2 -subnet 10.100.1.0/255.255.255.0/ens40

After adding it, verify the cluster network configuration:

[root@rac1 ~]# srvctl config network

Network 1 exists

Subnet IPv4: 172.16.4.0/255.255.255.0/ens34, static

Subnet IPv6:

Ping Targets:

Network is enabled

Network is individually enabled on nodes:

Network is individually disabled on nodes:

Network 2 exists

Subnet IPv4: 10.100.1.0/255.255.255.0/ens40, static

Subnet IPv6:

Ping Targets:

Network is enabled

Network is individually enabled on nodes:

Network is individually disabled on nodes:

Check the cluster network resources:

[root@rac1 ~]# crsctl status res -t |grep -A 2 network

ora.net1.network

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.net2.network

OFFLINE OFFLINE rac1 STABLE

OFFLINE OFFLINE rac2 STABLE

3. Add the VIPs to the cluster

[root@rac1 ~]# srvctl add vip -node rac1 -netnum 2 -address rac1-test-vip/255.255.255.0/ens40

[root@rac1 ~]# srvctl add vip -node rac2 -netnum 2 -address rac2-test-vip/255.255.255.0/ens40

[root@rac1 ~]# srvctl config vip -n rac1

VIP exists: network number 1, hosting node rac1

VIP Name: rac1-vip

VIP IPv4 Address: 172.16.4.13

VIP IPv6 Address:

VIP is enabled.

VIP is individually enabled on nodes:

VIP is individually disabled on nodes:

VIP exists: network number 2, hosting node rac1

VIP Name: rac1-test-vip

VIP IPv4 Address: 10.100.1.13

VIP IPv6 Address:

VIP is enabled.

VIP is individually enabled on nodes:

VIP is individually disabled on nodes:

[root@rac1 ~]# srvctl config vip -n rac2

VIP exists: network number 1, hosting node rac2

VIP Name: rac2-vip

VIP IPv4 Address: 172.16.4.14

VIP IPv6 Address:

VIP is enabled.

VIP is individually enabled on nodes:

VIP is individually disabled on nodes:

VIP exists: network number 2, hosting node rac2

VIP Name: rac2-test-vip

VIP IPv4 Address: 10.100.1.14

VIP IPv6 Address:

VIP is enabled.

VIP is individually enabled on nodes:

VIP is individually disabled on nodes:

4. Start the VIPs and check the VIP resources

[root@rac1 ~]# srvctl start vip -vip rac1-test-vip

[root@rac1 ~]# srvctl start vip -vip rac2-test-vip

[root@rac1 ~]# srvctl status vip -n rac1

VIP rac1-vip is enabled

VIP rac1-vip is running on node: rac1

VIP rac1-test-vip is enabled

VIP rac1-test-vip is running on node: rac1

[root@rac1 ~]# srvctl status vip -n rac2

VIP rac2-vip is enabled

VIP rac2-vip is running on node: rac2

VIP rac2-test-vip is enabled

VIP rac2-test-vip is running on node: rac2

Check that the newly created VIP is up:

[root@rac1 ~]# ifconfig ens40:1

ens40:1: flags=4163<UP,BROADCAST,RUNNING,MULTICAST> mtu 1500

inet 10.100.1.13 netmask 255.255.255.0 broadcast 10.100.1.255

ether 00:0c:29:32:02:1d txqueuelen 1000 (Ethernet)

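5. Add a listener on network 2

Add a listener named LISTENER_TEST on the second network. Note that its port must differ from the one used on network 1.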

[root@rac1 ~]# srvctl add listener -listener LISTENER_TEST -netnum 2 -endpoints "TCP:1522"

[root@rac1 ~]# crsctl status res -t |grep -A 2 LISTENER

ora.LISTENER.lsnr

ONLINE ONLINE rac1 STABLE

ONLINE ONLINE rac2 STABLE

ora.LISTENER_TEST.lsnr

OFFLINE OFFLINE rac1 STABLE

OFFLINE OFFLINE rac2 STABLE

--

ora.LISTENER_SCAN1.lsnr

1 ONLINE ONLINE rac1 STABLE

ora.MGMTLSNR

6. Add a SCAN on the second network

[root@rac1 ~]# srvctl add scan -scanname ractest-scan -netnum 2

[root@rac1 ~]# srvctl config scan -netnum 2

SCAN name: ractest-scan, Network: 2

Subnet IPv4: 10.100.1.0/255.255.255.0/ens40, static

Subnet IPv6:

SCAN 0 IPv4 VIP: 10.100.1.15

SCAN VIP is enabled.

SCAN VIP is individually enabled on nodes:

SCAN VIP is individually disabled on nodes:

7. Start the listener on the second network

[root@rac1 ~]# srvctl start listener -listener LISTENER_TEST

[root@rac1 ~]# srvctl status listener -listener LISTENER_TEST

Listener LISTENER_TEST is enabled

Listener LISTENER_TEST is running on node(s): rac1,rac2

8. Start the SCAN on the second network

[root@rac1 ~]# srvctl start scan -netnum 2

9. Add a SCAN listener on network 2

Add a SCAN listener named LISTENER_TEST_SCAN on port 1522 to the second network:

[root@rac1 ~]# srvctl add scan_listener -netnum 2 -listener LISTENER_TEST_SCAN -endpoints "TCP:1522"

Start the SCAN listener on the second network:

[root@rac1 ~]# srvctl start scan_listener -netnum 2

[root@rac1 ~]# srvctl status scan_listener -netnum 2

SCAN Listener LISTENER_TEST_SCAN_SCAN1_NET2 is enabled

SCAN listener LISTENER_TEST_SCAN_SCAN1_NET2 is running on node rac2

[root@rac1 ~]# srvctl status scan_listener -netnum 1

SCAN Listener LISTENER_SCAN1 is enabled

SCAN listener LISTENER_SCAN1 is running on node rac1

10. Check the listener status

netstat -an |grep 1521|grep LISTEN

netstat -an |grep 1522|grep LISTEN

lsnrctl status LISTENER

lsnrctl status LISTENER_TEST

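11. Configure the database instances to support multiple networks

Set the initialization parameter listener_networks so that the PMON or LREG process registers services with the listeners on both networks.

The first element describes the first network, named network1, and the second element describes the second network, named network2. In the entries below, RAC1_VIP_NET1 and RAC_SCAN_NET1 are the TNS aliases for the first network; they point to the local VIP listener and the SCAN listener respectively.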

tnsnames.ora

RAC1_VIP_NET1 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac1-vip)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = orcl)

)

)

RAC1_VIP_NET2 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac1-test-vip)(PORT = 1522))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = orcl)

)

)

RAC2_VIP_NET1 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac2-vip)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = orcl)

)

)

RAC2_VIP_NET2 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac2-test-vip)(PORT = 1522))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = orcl)

)

)

RAC_SCAN_NET1 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = rac-scan)(PORT = 1521))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = orcl)

)

)

RAC_SCAN_NET2 =

(DESCRIPTION =

(ADDRESS = (PROTOCOL = TCP)(HOST = ractest-scan)(PORT = 1522))

(CONNECT_DATA =

(SERVER = DEDICATED)

(SERVICE_NAME = orcl)

)

)

alter system set listener_networks='((NAME=network1)(LOCAL_LISTENER=RAC1_VIP_NET1)(REMOTE_LISTENER=RAC_SCAN_NET1))','((NAME=network2)(LOCAL_LISTENER=RAC1_VIP_NET2)(REMOTE_LISTENER=RAC_SCAN_NET2))' sid='orcl1';

alter system set listener_networks='((NAME=network1)(LOCAL_LISTENER=RAC2_VIP_NET1)(REMOTE_LISTENER=RAC_SCAN_NET1))','((NAME=network2)(LOCAL_LISTENER=RAC2_VIP_NET2)(REMOTE_LISTENER=RAC_SCAN_NET2))' sid='orcl2';

--alter system set local_listener='(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=TCP)(HOST=rac1-vip)(PORT=1521))))' sid='orcl1';

--alter system set local_listener='(DESCRIPTION=(ADDRESS_LIST=(ADDRESS=(PROTOCOL=TCP)(HOST=rac2-vip)(PORT=1521))))' sid='orcl2';
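Because the two statements above differ only in the node prefix and the SID, they can be generated instead of typed. The following Python sketch assumes the alias naming used in the tnsnames.ora above (`<NODE>_VIP_NET<n>` and `RAC_SCAN_NET<n>`); the function name `listener_networks_sql` is hypothetical.

```python
def listener_networks_sql(sid, node, networks=(1, 2)):
    """Build the ALTER SYSTEM statement for one RAC instance.

    Each network gets one quoted element that names the local VIP
    listener alias and the SCAN listener alias for that network.
    """
    entries = []
    for net in networks:
        entries.append(
            f"'((NAME=network{net})"
            f"(LOCAL_LISTENER={node.upper()}_VIP_NET{net})"
            f"(REMOTE_LISTENER=RAC_SCAN_NET{net}))'"
        )
    joined = ",".join(entries)
    return f"alter system set listener_networks={joined} sid='{sid}';"


for sid, node in (("orcl1", "rac1"), ("orcl2", "rac2")):
    print(listener_networks_sql(sid, node))
```

Generating the statements keeps the alias names consistent with tnsnames.ora, which matters because a misspelled alias in listener_networks only shows up later as a missing service registration.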

Check whether remote_listener is empty. If it is, set it to the SCAN of the first network:

alter system set remote_listener='rac-scan:1521';

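Notes:

If remote_listener is empty, clients cannot connect through the SCAN IP directly; the corresponding host IPs must be added to the client's hosts file.

If remote_listener is set to the SCAN address, clients can connect through the SCAN IP without any hosts entries.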

12. Client connection test

Add the second-network hosts to the client's hosts file:

10.100.1.13 rac1-test-vip

10.100.1.14 rac2-test-vip

10.100.1.15 ractest-scan

kaysMacBookPro:~ oracle$ sqlplus system/oracle@10.100.1.15:1522/orcl

SQL> show parameter instance_name

NAME TYPE

------------------------------------ ---------------------------------

VALUE

------------------------------

instance_name string

orcl2

kaysMacBookPro:~ oracle$ sqlplus system/oracle@172.16.4.15:1521/orcl

SQL*Plus: Release 12.1.0.2.0 Production on Fri Sep 28 21:57:42 2018

Copyright © 1982, 2016, Oracle. All rights reserved.

Last Successful login time: Fri Sep 28 2018 21:57:26 +08:00

13. Check the listener on the second network

[root@rac1 ~]# su - grid -c "lsnrctl status LISTENER_TEST"

LSNRCTL for Linux: Version 12.1.0.2.0 - Production on 27-SEP-2018 22:07:49

Copyright © 1991, 2014, Oracle. All rights reserved.

Connecting to (DESCRIPTION=(ADDRESS=(PROTOCOL=IPC)(KEY=LISTENER_TEST)))

STATUS of the LISTENER

------------------------

Alias LISTENER_TEST

Version TNSLSNR for Linux: Version 12.1.0.2.0 - Production

Start Date 27-SEP-2018 21:51:27

Uptime 0 days 0 hr. 16 min. 21 sec

Trace Level off

Security ON: Local OS Authentication

SNMP OFF

Listener Parameter File /u01/app/12.1.0/grid/network/admin/listener.ora

Listener Log File /u01/app/grid/diag/tnslsnr/rac1/listener_test/alert/log.xml

Listening Endpoints Summary...

(DESCRIPTION=(ADDRESS=(PROTOCOL=ipc)(KEY=LISTENER_TEST)))

(DESCRIPTION=(ADDRESS=(PROTOCOL=tcp)(HOST=10.100.1.13)(PORT=1522)))

Services Summary...

Service "orcl" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "orclXDB" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

Service "pdborcl" has 1 instance(s).

Instance "orcl1", status READY, has 1 handler(s) for this service...

The command completed successfully

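1.15 Full database caching

Force Full Database Caching Mode

A) Check whether full database caching is enabled

SELECT FORCE_FULL_DB_CACHING FROM V$DATABASE;

B) Enable full database caching (the database must be mounted but not open)

ALTER DATABASE FORCE FULL DATABASE CACHING;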

Data Redaction

https://blog.csdn.net/lqx0405/article/details/52185852

begin dbms_redact.add_policy(

object_schema=>'admin',

object_name=>'employee',

policy_name=>'p1',

column_name=>'mobile',

function_type=>dbms_redact.partial,

enable=>true,

expression=>'1=1',

function_parameters=>'VVVFVVVVFVVVV,VVV-VVVV-VVVV,*,1,8');

end;

/

begin dbms_redact.alter_policy(

object_schema=>'admin',

object_name=>'employee',

policy_name=>'p1',

action=>dbms_redact.modify_expression,

expression=>'SYS_CONTEXT(''USERENV'',''SESSION_USER'') != ''CS''');

end;

/
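To make the function_parameters string easier to read, the sketch below simulates fixed-width partial redaction in Python. It is not Oracle code: `partial_redact` is a hypothetical helper, the sample mobile number is invented, and it assumes the documented meaning of the five fields (input format with V for data characters and F for formatting characters, output format, mask character, first and last data position to mask).

```python
def partial_redact(value, function_parameters):
    """Simulate DBMS_REDACT.PARTIAL for a character value.

    Positions are counted over the 'V' characters only, starting at 1.
    """
    in_fmt, out_fmt, mask, start, end = function_parameters.split(",")
    start, end = int(start), int(end)
    if len(value) != len(in_fmt):
        raise ValueError("value does not match the input format")
    # Keep only the characters that sit on a 'V' in the input format.
    data = [ch for ch, fmt in zip(value, in_fmt) if fmt == "V"]
    # Mask data characters start..end (1-based, inclusive).
    masked = [mask if start <= pos <= end else ch
              for pos, ch in enumerate(data, 1)]
    # Lay the characters out again according to the output format.
    remaining = iter(masked)
    return "".join(next(remaining) if fmt == "V" else fmt for fmt in out_fmt)


# Hypothetical stored value shaped like the input format above.
print(partial_redact("138-1234-5678", "VVVFVVVVFVVVV,VVV-VVVV-VVVV,*,1,8"))
```

With the policy parameters used above, the first eight digits are masked and the last three stay readable, which is why users other than CS (after the alter_policy call) would see only the tail of the number.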

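1.16 Sharding Database (12.2.0.1)

Oracle Sharding, introduced in Oracle 12.2, is a data-sharding feature aimed at online transaction processing (OLTP). It builds on table partitioning to partition data horizontally, at the data tier, across separate databases. The partitions of one partitioned table are stored in different databases, each on its own server. Each database is called a shard, and together the shards form one logical database, the sharded database (SDB). The table is called a sharded table: each shard holds a different subset of its rows (partitioned by the sharding key), but every shard has the same columns.

Sharding is a shared-nothing architecture: each shard database runs on its own server hardware (CPU, memory, and so on). A shard can run as a single-instance database or as a Data Guard/ADG database.

Environment

Operating system: CentOS 7.5

Hosts: sdb1, sdb2, sdb3

Software: Oracle Database 12c Release 2 + Oracle Database 12c Release 2 Global Service Manager (GSM/GDS)

sdb1 hosts the catalog database, which stores the metadata of the sharded database; the GDS software automates the deployment of the shard databases.

sdb2 and sdb3 store the data of the individual shards.

Deployment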

I. Preparation

1. Edit the hosts file on all nodes for name resolution

vi /etc/hosts

172.16.4.21 sdb1

172.16.4.22 sdb2

172.16.4.23 sdb3

2. Install the 12c database software on all nodes (without creating a database)

Note: make sure the root scripts are run on every node

/u01/app/oraInventory/orainstRoot.sh

/u01/app/oracle/product/12.2.0/db_1/root.sh

II. Install Oracle Database 12c Release 2 Global Service Manager (GSM/GDS)

Perform this on the primary node sdb1

2.1 Unzip the GSM software

unzip linuxx64_12201_gsm.zip

2.2 Run the installer as the oracle user

./runInstaller

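2.3 The GDS installation steps are similar to those for the Oracle database software

III. Create the shard catalog database

Use dbca on the primary node to create the shard catalog database. Choose the non-CDB option, use a UTF8 character set (ZHS16GBK is not supported), and enable OMF, archiving, and the fast recovery area.

Note: do not select the CDB option here.

Create the database.

Finally, create the listener with netca.

IV. Set up Oracle Sharding management and the routing tier

Steps 4.1 through 4.4 are performed on the primary node.

4.1 Unlock the GSMCATUSER user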

SQL> alter user gsmcatuser identified by oracle account unlock;

Note: the gsmcatuser account lets the shard director connect to the shard catalog database.

4.2 Create the administrative user gsmadmin

SQL> create user gsmadmin identified by oracle;

SQL> grant connect, create session, gsmadmin_role to gsmadmin;

SQL> grant inherit privileges on user SYS to GSMADMIN_INTERNAL;

SQL> exec DBMS_SCHEDULER.SET_AGENT_REGISTRATION_PASS('oracle');

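---> Sets the password that the scheduler agents use to connect to the primary (catalog) database

Note:

1. The gsmadmin user created in the catalog database stores the management information for the sharding nodes; the GDSCTL interface also connects to the catalog database as gsmadmin.

4.3 Start the GDSCTL command line and create the shard catalog

Add the GDS bin directory to the PATH environment variable: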

PATH=$PATH:$HOME/.local/bin:/u01/app/oracle/product/12.2.0/gsmhome_1/bin:$HOME/bin

[oracle@sdb1 admin]$ gdsctl

GDSCTL>create shardcatalog -database 172.16.4.21:1521:shard -chunks 12 -user gsmadmin/oracle -sdb shard -region region1,region2

Catalog is created

Note: check that the listener is running before creating the catalog.

4.4 Create and start the shard director, and set up the OS credential

GDSCTL>add gsm -gsm sharddirector1 -listener 1522 -pwd oracle -catalog 172.16.4.21:1521:shard -region region1

GSM successfully added

Parameters:

-gsm: name of the shard director

-listener: listener port of the shard director; it must not conflict with the database listener port

-catalog: the catalog database, given as host name:listener port:db_name of the catalog database

Start the director:

GDSCTL>start gsm -gsm sharddirector1

GSM is started successfully

Add the OS credential:

GDSCTL>add credential -credential region1_cred -osaccount oracle -ospassword oracle

The operation completed successfully

GDSCTL>exit

4.5 On the remaining nodes, register the scheduler agents and create the oradata and fast_recovery_area directories.

sdb2:

schagent -start

schagent -status

echo oracle | schagent -registerdatabase 172.16.4.21 8080

mkdir /u01/app/oracle/oradata

mkdir /u01/app/oracle/fast_recovery_area

sdb3:

schagent -start

schagent -status

echo oracle | schagent -registerdatabase 172.16.4.21 8080

mkdir /u01/app/oracle/oradata

mkdir /u01/app/oracle/fast_recovery_area

V. Deploy the sharded database. This example deploys a system-managed SDB.

Perform this on the primary node

5.1 Add a shardgroup

[oracle@sdb1 ~]$ gdsctl

GDSCTL>set gsm -gsm sharddirector1

GDSCTL>connect gsmadmin/oracle

GDSCTL>add shardgroup -shardgroup primary_shardgroup -deploy_as primary -region region1

Note:

A shardgroup is a set of shards. The shardgroup here is named primary_shardgroup, and -deploy_as primary means that all shards in the group are primary databases.

5.2 Create the shards

sdb2:

GDSCTL>create shard -shardgroup primary_shardgroup -destination sdb2 -credential region1_cred -sys_password oracle

The operation completed successfully

DB Unique Name: sh1

sdb3:

GDSCTL>create shard -shardgroup primary_shardgroup -destination sdb3 -credential region1_cred -sys_password oracle

The operation completed successfully

DB Unique Name: sh21

Appendix: removing a shard

-REMOVE SHARD {-SHARD {shard_name_list | ALL} | -SHARDSPACE shardspace_list | -SHARDGROUP shardgroup_list} [-FORCE]

-remove shard -shard sh21 -force

-remove shard -shard sh1 -force

--primary_shardgroup

--shardspaceora

5.3 Check the shard configuration

GDSCTL>config shard

Name Shard Group Status State Region Availability

---- ----------- ------ ----- ------ ------------

sh1 primary_shardgroup U none region1 -

sh21 primary_shardgroup U none region1 -

The shards are not deployed yet, so State is none.

5.4 Check the GSM status

GDSCTL>status gsm

Alias SHARDDIRECTOR1

Version 12.2.0.1.0

Start Date 16-OCT-2018 17:34:06

Trace Level off

Listener Log File /u01/app/oracle/diag/gsm/sdb1/sharddirector1/alert/log.xml

Listener Trace File /u01/app/oracle/diag/gsm/sdb1/sharddirector1/trace/ora_13073_140473160778112.trc

Endpoint summary (ADDRESS=(HOST=sdb1)(PORT=1522)(PROTOCOL=tcp))

GSMOCI Version 2.2.1

Mastership Y

Connected to GDS catalog Y

Process Id 13076

Number of reconnections 0

Pending tasks. Total 0

Tasks in process. Total 0

Regional Mastership TRUE

Total messages published 0

Time Zone +08:00

Orphaned Buddy Regions:

None

GDS region region1

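5.5 Deploy the shard databases automatically through GDS

GDSCTL>deploy

Notes:

1. The deploy command invokes dbca on each remote node to create the shard databases silently.

2. The installation progress on each node can be followed in the dbca log.

3. Deployment takes a while and prints no intermediate output.

dbca log: $ORACLE_BASE/cfgtoollogs/dbca/<instance name>/trace.log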

[oracle@sdb2 sh1]$ tail -100f /u01/app/oracle/cfgtoollogs/dbca/sh1/trace.log_2018-10-16_03-00-25-PM

deploy: examining configuration...

deploy: deploying primary shard 'sh1' ...

deploy: network listener configuration successful at destination 'sdb2'

deploy: starting DBCA at destination 'sdb2' to create primary shard 'sh1' ...

deploy: deploying primary shard 'sh21' ...

deploy: network listener configuration successful at destination 'sdb3'

deploy: starting DBCA at destination 'sdb3' to create primary shard 'sh21' ...

deploy: waiting for 2 DBCA primary creation job(s) to complete...

deploy: waiting for 2 DBCA primary creation job(s) to complete...

deploy: waiting for 2 DBCA primary creation job(s) to complete...

deploy: waiting for 2 DBCA primary creation job(s) to complete...

deploy: waiting for 2 DBCA primary creation job(s) to complete...

deploy: waiting for 2 DBCA primary creation job(s) to complete...

deploy: DBCA primary creation job succeeded at destination 'sdb3' for shard 'sh21'

deploy: waiting for 1 DBCA primary creation job(s) to complete...

deploy: DBCA primary creation job succeeded at destination 'sdb2' for shard 'sh1'

deploy: requesting Data Guard configuration on shards via GSM

deploy: shards configured successfully

The operation completed successfully

Deployment complete.

5.6 Check the shard status again

GDSCTL>config shard

Name Shard Group Status State Region Availability

---- ----------- ------ ----- ------ ------------

sh1 primary_shardgroup Ok Deployed region1 ONLINE

sh21 primary_shardgroup Ok Deployed region1 ONLINE

Status is Ok and State is Deployed, so the deployment is complete.

VI. Create the service

6.1 Add the service

GDSCTL>add service -service oltp_rw_srvc -role primary

The operation completed successfully

6.2 Start the service

GDSCTL>start service -service oltp_rw_srvc

The operation completed successfully

6.3 Check the service status

GDSCTL>status service

Service "oltp_rw_srvc.shard.oradbcloud" has 2 instance(s). Affinity: ANYWHERE

Instance "shard%1", name: "sh1", db: "sh1", region: "region1", status: ready.

Instance "shard%11", name: "sh21", db: "sh21", region: "region1", status: ready.

The service is up.

VII. Modify the sqlnet file

Modify sqlnet.ora on all nodes

VII. Create the user and grant privileges

SQL> alter session enable shard ddl;

Session altered.

-- Create the application user app_schema

SQL> create user app_schema identified by oracle;

User created.

-- Grant privileges to the user

SQL> grant all privileges to app_schema;

Grant succeeded.

SQL> grant gsmadmin_role to app_schema;

Grant succeeded.

SQL> grant select_catalog_role to app_schema;

Grant succeeded.

SQL> grant connect, resource to app_schema;

Grant succeeded.

SQL> grant dba to app_schema;

Grant succeeded.

SQL> grant execute on dbms_crypto to app_schema;

Grant succeeded.

VIII. Log in as the application user and create the sharded tables and the duplicated table

Create the tablespace set

conn app_schema/oracle

alter session enable shard ddl;

CREATE TABLESPACE SET TSP_SET_1 using template (datafile size 100m extent management local segment space management auto );

CREATE TABLESPACE products_tsp datafile size 100m extent management local uniform size 1m;

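Notes:

1. The tablespace set TSP_SET_1 is used by the three sharded tables created below: customers, orders, and lineitems. The products_tsp tablespace is used by the duplicated table products.

2. A tablespace set can be created only in a sharding environment, and it must be created in the catalog database by an SDB user.

3. The tablespaces in a tablespace set are bigfile tablespaces. They are created automatically, and their total number equals the number of chunks (summed over all shards).

Create the table family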

-- Create sharded table family

CREATE SHARDED TABLE Customers

(

CustId VARCHAR2(60) NOT NULL,

FirstName VARCHAR2(60),

LastName VARCHAR2(60),

Class VARCHAR2(10),

Geo VARCHAR2(8),

CustProfile VARCHAR2(4000),

Passwd RAW(60),

CONSTRAINT pk_customers PRIMARY KEY (CustId),

CONSTRAINT json_customers CHECK (CustProfile IS JSON)

) TABLESPACE SET TSP_SET_1

PARTITION BY CONSISTENT HASH (CustId) PARTITIONS AUTO;

CREATE SHARDED TABLE Orders

(

OrderId INTEGER NOT NULL,

CustId VARCHAR2(60) NOT NULL,

OrderDate TIMESTAMP NOT NULL,

SumTotal NUMBER(19,4),

Status CHAR(4),

constraint pk_orders primary key (CustId, OrderId),

constraint fk_orders_parent foreign key (CustId)

references Customers on delete cascade

) partition by reference (fk_orders_parent);

CREATE SEQUENCE Orders_Seq;

CREATE SHARDED TABLE LineItems

(

OrderId INTEGER NOT NULL,

CustId VARCHAR2(60) NOT NULL,

ProductId INTEGER NOT NULL,

Price NUMBER(19,4),

Qty NUMBER,

constraint pk_items primary key (CustId, OrderId, ProductId),

constraint fk_items_parent foreign key (CustId, OrderId)

references Orders on delete cascade

) partition by reference (fk_items_parent);

-- duplicated table

CREATE DUPLICATED TABLE Products

(

ProductId INTEGER GENERATED BY DEFAULT AS IDENTITY PRIMARY KEY,

Name VARCHAR2(128),

DescrUri VARCHAR2(128),

LastPrice NUMBER(19,4)

) TABLESPACE products_tsp;

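Notes:

1. The statements above create three sharded tables, customers, orders, and lineitems, which form a table family. customers is the root table; orders and lineitems are child tables. All three are partitioned by the sharding key (custid), the primary key of the root table.

2. The customers table is partitioned by consistent hash, which spreads the rows across the shards.

Create the functions used by the demo later: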

CREATE OR REPLACE FUNCTION PasswCreate(PASSW IN RAW)

RETURN RAW

IS

Salt RAW(8);

BEGIN

Salt := DBMS_CRYPTO.RANDOMBYTES(8);

RETURN UTL_RAW.CONCAT(Salt, DBMS_CRYPTO.HASH(UTL_RAW.CONCAT(Salt,

PASSW), DBMS_CRYPTO.HASH_SH256));

END;

/

CREATE OR REPLACE FUNCTION PasswCheck(PASSW IN RAW, PHASH IN RAW)

RETURN INTEGER IS

BEGIN

RETURN UTL_RAW.COMPARE(

DBMS_CRYPTO.HASH(UTL_RAW.CONCAT(UTL_RAW.SUBSTR(PHASH, 1, 8),

PASSW), DBMS_CRYPTO.HASH_SH256),

UTL_RAW.SUBSTR(PHASH, 9));

END;

/
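For readers less familiar with DBMS_CRYPTO, the same salted-hash scheme can be sketched in Python with the standard library. This is an equivalent illustration, not part of the deployment; the function names `passw_create` and `passw_check` are hypothetical. One difference is labeled in the code: the PL/SQL PasswCheck returns the result of UTL_RAW.COMPARE, which is 0 on a match, while this sketch returns a boolean.

```python
import hashlib
import hmac
import os

SALT_LEN = 8  # same salt length as RANDOMBYTES(8) in PasswCreate


def passw_create(passw):
    """Return salt || SHA-256(salt || password), 40 bytes in total."""
    salt = os.urandom(SALT_LEN)
    return salt + hashlib.sha256(salt + passw).digest()


def passw_check(passw, phash):
    """Return True when the password matches the stored salted hash.

    Note: the PL/SQL version signals a match with 0 instead of True.
    """
    salt, stored = phash[:SALT_LEN], phash[SALT_LEN:]
    candidate = hashlib.sha256(salt + passw).digest()
    return hmac.compare_digest(candidate, stored)


stored_hash = passw_create(b"secret")
print(len(stored_hash), passw_check(b"secret", stored_hash))
```

The 40-byte result fits the RAW(60) Passwd column defined in the Customers table.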

Connect to the catalog database and check whether the DDL executed above reported any errors:

GDSCTL>connect gsmadmin/oracle

Catalog connection is established

GDSCTL>show ddl

id DDL Text Failed shards

-- -------- -------------

7 grant execute on dbms_crypto to app_s...

8 CREATE TABLESPACE SET TSP_SET_1 using...

9 CREATE TABLESPACE products_tsp datafi...

10 CREATE SHARDED TABLE Customers ( ...

11 CREATE SHARDED TABLE Orders ( ...

12 CREATE SEQUENCE Orders_Seq

13 CREATE SHARDED TABLE LineItems ...

14 CREATE MATERIALIZED VIEW "APP_SCHEMA"...

15 CREATE OR REPLACE FUNCTION PasswCreat...

16 CREATE OR REPLACE FUNCTION PasswCheck...

Check each shard for DDL errors

Shard node1:

GDSCTL>config shard -shard sh1

Name: sh1

Shard Group: primary_shardgroup

Status: Ok

State: Deployed

Region: region1

Connection string: sdb2:1521/sh1:dedicated

SCAN address:

ONS remote port: 0

Disk Threshold, ms: 20

CPU Threshold, %: 75

Version: 12.2.0.0

Failed DDL:

DDL Error: --- <<<<<<<<<<<< no DDL errors

Failed DDL id:

Availability: ONLINE

Rack:

Supported services

------------------------

Name Preferred Status

---- --------- ------

oltp_rw_srvc Yes Enabled

Shard node2:

GDSCTL>config shard -shard sh21

Name: sh21

Shard Group: primary_shardgroup

Status: Ok

State: Deployed

Region: region1

Connection string: sdb3:1521/sh21:dedicated

SCAN address:

ONS remote port: 0

Disk Threshold, ms: 20

CPU Threshold, %: 75

Version: 12.2.0.0

Failed DDL:

DDL Error: --- no errors

Failed DDL id:

Availability: ONLINE

Rack:

Supported services

------------------------

Name Preferred Status

---- --------- ------

oltp_rw_srvc Yes Enabled

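IX. Verify the environment: tablespaces and chunks

9.1 On the GSM node, check the chunk information

The shard catalog was created with chunks set to 12, so 12 chunks are allocated for the sharded tables created afterwards.

Each shard holds 6 chunks.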

GDSCTL>config chunks

Chunks

------------------------

Database From To

-------- ---- --

sh1 1 6

sh21 7 12
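The split shown by config chunks is plain arithmetic: 12 chunks over 2 shards gives 6 contiguous chunks each. A minimal Python sketch of that calculation follows; the function name `chunk_ranges` is hypothetical, and the handling of a remainder (extra chunks going to the first shards) is an assumption, since the example above only covers the even case.

```python
def chunk_ranges(total_chunks, shards):
    """Assign contiguous, near-equal chunk ranges to the shards in order."""
    per_shard, remainder = divmod(total_chunks, len(shards))
    ranges = {}
    first = 1
    for index, shard in enumerate(shards):
        # Assumption: any leftover chunks go to the earliest shards.
        count = per_shard + (1 if index < remainder else 0)
        ranges[shard] = (first, first + count - 1)
        first += count
    return ranges


print(chunk_ranges(12, ["sh1", "sh21"]))
```

For the two shards deployed here this reproduces the From/To columns above: sh1 owns chunks 1 to 6 and sh21 owns chunks 7 to 12.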

9.2 On every shard, check the tablespaces and chunks

Shard node1:

SQL> select TABLESPACE_NAME, BYTES/1024/1024 MB from sys.dba_data_files order by tablespace_name;

TABLESPACE_NAME MB

------------------------------ ----------

C001TSP_SET_1 100

C002TSP_SET_1 100

C003TSP_SET_1 100

C004TSP_SET_1 100

C005TSP_SET_1 100

C006TSP_SET_1 100

PRODUCTS_TSP 100

SYSAUX 470

SYSTEM 800

TSP_SET_1 100

UNDOTBS1 70

USERS 5

Notes:

1. Six tablespaces were created, C001TSP_SET_1 through C006TSP_SET_1, because chunks is 12 and each shard holds 6 chunks.

2. Each tablespace has one 100M datafile, the size specified when the tablespace set was created.

Shard node2:

SQL> select TABLESPACE_NAME, BYTES/1024/1024 MB from sys.dba_data_files order by tablespace_name;

TABLESPACE_NAME MB

------------------------------ ----------

C007TSP_SET_1 100

C008TSP_SET_1 100

C009TSP_SET_1 100

C00ATSP_SET_1 100

C00BTSP_SET_1 100

C00CTSP_SET_1 100

PRODUCTS_TSP 100

SYSAUX 480

SYSTEM 800

TSP_SET_1 100

UNDOTBS1 70

USERS 5
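The tablespace names in the two listings follow a visible pattern: the letter C, the chunk number as three hexadecimal digits, then the tablespace set name, which is why chunk 10 shows up as C00ATSP_SET_1. The Python sketch below reproduces that pattern as inferred from the output above; the function name `chunk_tablespace` is hypothetical and the rule is an observation from this listing, not a documented guarantee.

```python
def chunk_tablespace(chunk_number, tablespace_set="TSP_SET_1"):
    """Name of the per-chunk tablespace, as observed in dba_data_files."""
    # Three uppercase hexadecimal digits: 10 -> 00A, 12 -> 00C.
    return f"C{chunk_number:03X}{tablespace_set}"


# Chunks 7 to 12 live on shard node2 in this walkthrough.
print([chunk_tablespace(n) for n in range(7, 13)])
```

This makes it easy to map a chunk reported by config chunks to the tablespace, and therefore the partitions, that hold it on a shard.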

Check the chunks

Shard node1:

set linesize 140

set pagesize 200

column table_name format a20

column tablespace_name format a20

column partition_name format a20

show parameter db_unique_name

select table_name, partition_name, tablespace_name from dba_tab_partitions where tablespace_name like 'C%TSP_SET_1' order by tablespace_name;

TABLE_NAME PARTITION_NAME TABLESPACE_NAME

-------------------- -------------------- --------------------

LINEITEMS CUSTOMERS_P1 C001TSP_SET_1

CUSTOMERS CUSTOMERS_P1 C001TSP_SET_1

ORDERS CUSTOMERS_P1 C001TSP_SET_1

CUSTOMERS CUSTOMERS_P2 C002TSP_SET_1

ORDERS CUSTOMERS_P2 C002TSP_SET_1

LINEITEMS CUSTOMERS_P2 C002TSP_SET_1

CUSTOMERS CUSTOMERS_P3 C003TSP_SET_1

LINEITEMS CUSTOMERS_P3 C003TSP_SET_1

ORDERS CUSTOMERS_P3 C003TSP_SET_1

LINEITEMS CUSTOMERS_P4 C004TSP_SET_1

CUSTOMERS CUSTOMERS_P4 C004TSP_SET_1

ORDERS CUSTOMERS_P4 C004TSP_SET_1

CUSTOMERS CUSTOMERS_P5 C005TSP_SET_1

ORDERS CUSTOMERS_P5 C005TSP_SET_1

LINEITEMS CUSTOMERS_P5 C005TSP_SET_1

CUSTOMERS CUSTOMERS_P6 C006TSP_SET_1

ORDERS CUSTOMERS_P6 C006TSP_SET_1

LINEITEMS CUSTOMERS_P6 C006TSP_SET_1

Shard node2:

TABLE_NAME PARTITION_NAME TABLESPACE_NAME

-------------------- -------------------- --------------------

ORDERS CUSTOMERS_P7 C007TSP_SET_1

CUSTOMERS CUSTOMERS_P7 C007TSP_SET_1

LINEITEMS CUSTOMERS_P7 C007TSP_SET_1

CUSTOMERS CUSTOMERS_P8 C008TSP_SET_1

LINEITEMS CUSTOMERS_P8 C008TSP_SET_1

ORDERS CUSTOMERS_P8 C008TSP_SET_1

CUSTOMERS CUSTOMERS_P9 C009TSP_SET_1

ORDERS CUSTOMERS_P9 C009TSP_SET_1

LINEITEMS CUSTOMERS_P9 C009TSP_SET_1

ORDERS CUSTOMERS_P10 C00ATSP_SET_1

CUSTOMERS CUSTOMERS_P10 C00ATSP_SET_1

LINEITEMS CUSTOMERS_P10 C00ATSP_SET_1

CUSTOMERS CUSTOMERS_P11 C00BTSP_SET_1

LINEITEMS CUSTOMERS_P11 C00BTSP_SET_1

ORDERS CUSTOMERS_P11 C00BTSP_SET_1

CUSTOMERS CUSTOMERS_P12 C00CTSP_SET_1

LINEITEMS CUSTOMERS_P12 C00CTSP_SET_1

ORDERS CUSTOMERS_P12 C00CTSP_SET_1

9.3 Check the chunk information in the catalog database

select a.name Shard, count( b.chunk_number) Number_of_Chunks from gsmadmin_internal.database a, gsmadmin_internal.chunk_loc b where a.database_num=b.database_num group by a.name;

SHARD NUMBER_OF_CHUNKS

------------------------------ ----------------

sh1 6

sh21 6

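The counts queried in the catalog database match the output of the config chunks command in gdsctl.

9.4 Check that the tables are correct in the catalog database and on the shards

-- Catalog database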

SQL> select table_name from user_tables;

TABLE_NAME

-----------------------

CUSTOMERS

ORDERS

LINEITEMS

PRODUCTS

MLOG$_PRODUCTS

RUPD$_PRODUCTS

-- Shard nodes node1 and node2

SQL> conn app_schema/oracle

Connected.

SQL> select table_name from user_tables;

TABLE_NAME

--------------------

CUSTOMERS

ORDERS

LINEITEMS

PRODUCTS

X. Client connection test against a shard

Connect to a shard by specifying the sharding key in the connect string:

sqlplus app_schema/oracle@'(description=(address=(protocol=tcp)(host=sdb1)(port=1522))(connect_data=(service_name=oltp_rw_srvc.shard.oradbcloud)(region=region1)(SHARDING_KEY=james.parker@x.bogus)))'

SQL> select db_unique_name from v$database;

DB_UNIQUE_NAME

------------------------------

sh1

The session is connected to the shard node1 database.
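The connect descriptor used for key-based routing has a fixed shape, so it can be assembled programmatically. The Python sketch below builds the string only; it does not open a connection. The function name `shard_connect_descriptor` is hypothetical, and the host, port, service, and region values are the ones used in this walkthrough.

```python
def shard_connect_descriptor(host, port, service, region, sharding_key=None):
    """Build an Oracle Net connect descriptor for the shard director.

    When sharding_key is given, SHARDING_KEY is added to CONNECT_DATA so
    the director can route the session to the shard that owns the key.
    """
    connect_data = f"(service_name={service})(region={region})"
    if sharding_key is not None:
        connect_data += f"(SHARDING_KEY={sharding_key})"
    return (
        f"(description=(address=(protocol=tcp)(host={host})(port={port}))"
        f"(connect_data={connect_data}))"
    )


print(shard_connect_descriptor(
    "sdb1", 1522, "oltp_rw_srvc.shard.oradbcloud", "region1",
    sharding_key="james.parker@x.bogus"))
```

Leaving out the key yields a descriptor that connects through the same service without routing to a specific shard.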

- Business scenario tests

I. Sharded table test

Insert and query on each of the two shards, node1 and node2.

Goal: verify that a shard database sees only its own local data.

Insert one row on shard node1:

[oracle@sdb2 ~]$ sqlplus app_schema/oracle

SQL> INSERT INTO Customers (CustId, FirstName, LastName, CustProfile,Class, Geo, Passwd) VALUES ('james.parker@x.bogus', 'James', 'Parker',NULL, 'Gold', 'east', hextoraw('8d1c00e'));

SQL> commit;

column custid format a20

column firstname format a15

column lastname format a15

select custid, FirstName, LastName, class, geo from customers;

CUSTID FIRSTNAME LASTNAME CLASS GEO

--------- ------- ---- ---- ----

james.parker@x.bogus James Parker Gold east

The row was inserted.

Insert one row on shard node2:

[oracle@sdb3 ~]$ sqlplus app_schema/oracle

SQL> INSERT INTO Customers (CustId, FirstName, LastName, CustProfile,Class, Geo, Passwd) VALUES ('tom.crus@qq.com', 'tom', 'crus',NULL, 'silver', 'west', hextoraw('9d1c00e'));

SQL> commit;

Query the data:

SQL> select custid, FirstName, LastName, class, geo from customers;

CUSTID FIRSTNAME LASTNAME CLASS GEO

-------------------- --------------- --------------- ---------- --------

tom.crus@qq.com tom crus silver west

Result: only one row is returned; the row on shard node1 (James) is not visible.

Connect from the client to the catalog database on sdb1:

set pagesize 200 linesize 200

column custid format a20

column firstname format a15

column lastname format a15

col class for a10

col geo for a10

select custid, FirstName, LastName, class, geo from customers;

SQL> select custid, FirstName, LastName, class, geo from customers;

CUSTID FIRSTNAME LASTNAME CLASS GEO

-------------------- --------------- --------------- ---------- ----------

james.parker@x.bogus James Parker Gold east

tom.crus@qq.com tom crus silver west

All rows are visible in the catalog database.

Connect from the client to a shard by specifying the key:

sqlplus app_schema/oracle@'(description=(address=(protocol=tcp)(host=sdb1)(port=1522))(connect_data=(service_name=oltp_rw_srvc.shard.oradbcloud)(region=region1)(SHARDING_KEY=tom.crus@qq.com)))'

set pagesize 200 linesize 200

column custid format a20

column firstname format a15

column lastname format a15

col class for a10

col geo for a10

select custid, FirstName, LastName, class, geo from customers;

CUSTID FIRSTNAME LASTNAME CLASS GEO

-------------------- --------------- --------------- ---------- ----------

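tom.crus@qq.com tom crus silver west

Result: with the sharding key specified, only tom is returned.

Summary:

1. Each shard can query only its local portion of a sharded table.

2. The catalog database can query the data aggregated from all shards.

3. A client can specify a sharding key to be routed to the shard that holds the data.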
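The routing behavior summarized above can be pictured as two lookups: the sharding key hashes to a chunk, and the chunk belongs to one shard. The Python sketch below is a toy model of that idea only. It uses SHA-256 as a stand-in hash, which is an assumption and not the function Oracle uses, so the shard it picks for a given CustId will generally differ from the real deployment; the chunk ownership table mirrors the config chunks output from section 9.1.

```python
import hashlib

HASH_SPACE = 2**32   # size of the illustrative hash range
CHUNKS = 12          # value passed to create shardcatalog -chunks
SHARD_CHUNKS = {"sh1": range(1, 7), "sh21": range(7, 13)}


def chunk_for_key(key, chunks=CHUNKS):
    """Map a sharding key to a chunk number in 1..chunks."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    hash_value = int.from_bytes(digest[:4], "big")  # 0 .. 2**32 - 1
    # Each chunk owns one equal, contiguous slice of the hash range.
    return hash_value * chunks // HASH_SPACE + 1


def shard_for_key(key):
    """Return the shard that owns the chunk of the given key."""
    chunk = chunk_for_key(key)
    for shard, owned in SHARD_CHUNKS.items():
        if chunk in owned:
            return shard
    raise LookupError(f"no shard owns chunk {chunk}")


for cust_id in ("james.parker@x.bogus", "tom.crus@qq.com"):
    print(cust_id, chunk_for_key(cust_id), shard_for_key(cust_id))
```

Because a key always hashes to the same chunk, a session that supplies the key can be sent straight to one shard, while a query through the catalog has to gather rows from all of them.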

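II. Duplicated table test

A duplicated table exposes the same table data to every member of the sharded database. The scenario below inserts into the catalog database and then queries the shard nodes.

Goal: verify that the duplicated table has the same content on every shard and in the catalog database.

Catalog database: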

SQL> insert into products(PRODUCTID,name,DESCRURI,LASTPRICE) values(3,'cts','car',1000);

SQL> commit;

col productid for 99999

col name for a10

col DESCRURI for a10

select * from products;

PRODUCTID NAME DESCRURI LASTPRICE

--------- ---------- ---------- ----------

2 ps4 sony 150

3 cts car 1000 --------<<<

1 xbox game 100

The row was inserted.

Shard node 1:

SQL> alter system switch logfile;

System altered.

SQL> select * from products;

PRODUCTID NAME DESCRURI LASTPRICE

--------- ---------- ---------- ----------

2 ps4 sony 150

3 cts car 1000

1 xbox game 100

Result: the row added in the catalog was not immediately visible on node 1; after a manual log switch it could be queried.

Shard node 2:

SQL> alter system switch logfile;

System altered.

SQL> select * from products;

PRODUCTID NAME DESCRURI LASTPRICE

--------- ---------- ---------- ----------

2 ps4 sony 150

3 cts car 1000

1 xbox game 100

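Result: the row added in the catalog was not immediately visible on node 2; after a manual log switch it could be queried.

Summary:

1. Rows can be inserted into the duplicated table products in the catalog database; DML against it is not possible on the shard nodes.

2. Every shard returns the same content for the duplicated table. By default a shard has to wait 60 seconds for the data to be synchronized; the interval is controlled by the initialization parameter SHRD_DUPL_TABLE_REFRESH_RATE.

3. A query against the duplicated table on a shard reads only the local materialized view, not the master table, so queries on the other nodes keep working even if the catalog database that holds the master table is down.

References: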

50.3 Duplicated Tables

https://docs.oracle.com/en/database/oracle/oracle-database/12.2/admin/sharding-schema-design.html#GUID-50D56C0A-5185-4F04-A0CA-EAA442E825D3

58.2 Read-Only Materialized Views

https://docs.oracle.com/en/database/oracle/oracle-database/12.2/admin/read-only-materialized-view-concepts.html#GUID-DF9A3C8C-BD92-4C6A-958F-7D17FADB6276

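Duplicated table process

III. Data sharding test

Scenario: insert rows with different custid values from two terminals.

Goal: verify that the sharded database distributes inserted rows across the shards according to the consistent hash of custid.

1. On the client, edit tnsnames.ora for connecting to the sharded database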

sharddb =

(DESCRIPTION=

(FAILOVER=on)

(ADDRESS_LIST=

(LOAD_BALANCE=ON)

(ADDRESS=(PROTOCOL = TCP)(host=sdb1)(port=1522)))

(CONNECT_DATA=

(SERVICE_NAME=oltp_rw_srvc.shard.oradbcloud)

(REGION=region1)

)

)

2. On the client, connect to the sharded database from two terminals

Terminal 1, connected to shard node1:

sqlplus app_schema/oracle@sharddb

SQL> show parameter db_uni

NAME TYPE VALUE

------------------------------------ ------ ------------------------------

db_unique_name string sh1

The session is connected to shard node1.

Insert data:

INSERT INTO Customers (CustId, FirstName, LastName, CustProfile,Class, Geo, Passwd) VALUES ('tom2.cru@qq.com', 'tom2', 'crus2',NULL, 'silver', 'west', hextoraw('9d1c00e'));

INSERT INTO Customers (CustId, FirstName, LastName, CustProfile,Class, Geo, Passwd) VALUES ('james.bond@qq.com', 'james', 'bond',NULL, 'silver', 'south', hextoraw('9d1d00e'));

INSERT INTO Customers (CustId, FirstName, LastName, CustProfile,Class, Geo, Passwd) VALUES ('[email protected]', 'clark', 'kent',NULL, 'bronze', 'west', hextoraw('9d2c00e'));

INSERT INTO Customers (CustId, FirstName, LastName, CustProfile,Class, Geo, Passwd) VALUES ('brad2.pitt@qq.com', 'brad2', 'pitt2',NULL, 'silver', 'north', hextoraw('9d1c10e'));

commit;

Result: only 1 row is inserted; the other three fail with ORA-14466: Data in a read-only partition or subpartition cannot be modified.

Terminal 2, connected to shard node2:

sqlplus app_schema/oracle@sharddb

Result: only 3 rows are inserted; the remaining one fails with ORA-14466: Data in a read-only partition or subpartition cannot be modified.

3. Connect from the client directly to the catalog database

sqlplus app_schema/oracle@172.16.4.21/shard

INSERT INTO Customers (CustId, FirstName, LastName, CustProfile,Class, Geo, Passwd) VALUES ('tom2.cru@qq.com', 'tom2', 'crus2',NULL, 'silver', 'west', hextoraw('9d1c00e'));

INSERT INTO Customers (CustId, FirstName, LastName, CustProfile,Class, Geo, Passwd) VALUES ('james.bond@qq.com', 'james', 'bond',NULL, 'silver', 'south', hextoraw('9d1d00e'));

INSERT INTO Customers (CustId, FirstName, LastName, CustProfile,Class, Geo, Passwd) VALUES ('[email protected]', 'clark', 'kent',NULL, 'bronze', 'west', hextoraw('9d2c00e'));

INSERT INTO Customers (CustId, FirstName, LastName, CustProfile,Class, Geo, Passwd) VALUES ('brad2.pitt@qq.com', 'brad2', 'pitt2',NULL, 'silver', 'north', hextoraw('9d1c10e'));

commit;

set pagesize 200 linesize 200

column custid format a20

column firstname format a15

column lastname format a15

col class for a10

col geo for a10

select custid, FirstName, LastName, class, geo from customers;

CUSTID FIRSTNAME LASTNAME CLASS GEO

-------------------- --------------- --------------- ---------- ----------

brad2.pitt@qq.com brad2 pitt2 silver north

tom2.cru@qq.com tom2 crus2 silver west

james.bond@qq.com james bond silver south

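Result: all rows insert successfully through the catalog database and are sharded across the two nodes: one row on node 1 and three rows on node 2.

Summary:

1. On the catalog, you cannot remove all rows with TRUNCATE or with a DELETE that has no WHERE clause; rows must be deleted by primary key (custid in this document). Otherwise the statement fails because DML that spans shards is not allowed: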

ORA-02671: DML are not allowed on more than one shard

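2. When connected to the catalog database, inserted rows are sharded across the two nodes.

3. Rows appear to be distributed between the two nodes by an algorithm applied to custid (this test assumes consistent hashing); an INSERT run directly on a single node fails for rows that do not belong to that node: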

ORA-14466: Data in a read-only partition or subpartition cannot be modified.

4. When clients connect through the service oltp_rw_srvc.shard.oradbcloud created with gdsctl, the sharded database can load-balance connections, much like a RAC environment.
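Summary points 1 and 3 can be checked with a few statements. This is a minimal sketch that assumes the Customers table used in this test; the custid literal is a hypothetical placeholder, not a value taken from the test data.

```sql
-- On each shard, connected directly to sh1 or sh2 (not through the catalog):
-- confirm which database the session is on, then count the rows stored locally.
SELECT SYS_CONTEXT('USERENV', 'DB_UNIQUE_NAME') AS db_unique_name FROM dual;

SELECT COUNT(*) AS local_rows FROM customers;

-- On the catalog: a DELETE that names a single sharding key value
-- touches only one shard, so it avoids ORA-02671.
-- 'someone@example.com' is a hypothetical custid used only for illustration.
DELETE FROM customers WHERE custid = 'someone@example.com';

COMMIT;
```

Comparing the local row counts on the two shards with the row count seen from the catalog shows how the rows were split.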

- Sharding database maintenance

1. Shut down the sharded database

On the catalog database host:

Stop the shard director first

[oracle@sdb1 ~]$ gdsctl

Current GSM is set to SHARDDIRECTOR1

GDSCTL>stop gsm -gsm SHARDDIRECTOR1

GSM is stopped successfully

Shard node 1

Stop the scheduler agent and the listener, then shut down the database

[oracle@sdb2 ~]$ schagent -stop

Agent running with PID 2783

Done stopping all running jobs

Terminating agent gracefully

[oracle@sdb2 ~]$ lsnrctl stop

SQL> shutdown immediate

Shard node 2

Stop the scheduler agent and the listener, then shut down the database

[oracle@sdb3 ~]$ schagent -stop

Agent running with PID 2824

Done stopping all running jobs

Terminating agent gracefully

[oracle@sdb3 ~]$ lsnrctl stop

SQL> shutdown immediate

On the catalog database host, stop the listener and shut down the database

[oracle@sdb1 ~]$ lsnrctl stop

SQL> shutdown immediate

2. Start the sharded database

Catalog database

Start the database and the listener

SQL> startup

[oracle@sdb1 ~]$ lsnrctl start

All shard nodes

Start the database

SQL> startup

Start the listener

[oracle@sdb2 ~]$ lsnrctl start

Start the scheduler agent

[oracle@sdb2 ~]$ schagent -start

Catalog database

Start the shard director

GDSCTL>start gsm -gsm SHARDDIRECTOR1

Check the status of the shard nodes

GDSCTL>config shard

Check the status of the sharded database services

GDSCTL>config service

Check the status of the shard node databases

GDSCTL>databases

3. Test from the client

sqlplus app_schema/oracle@sharddb

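- Performance test

1. Check whether performance improves compared with a single-node database

Insert 10 million rows, then query them

2. Monitor performance with the demo's function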

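1.17 Converting a non-partitioned table to a partitioned table online (12.2.0.1)

Before Oracle 12.2, the usual ways to convert a non-partitioned table to a partitioned table were: (1) create the partitioned table, then load it with INSERT INTO ... SELECT; (2) use online redefinition (DBMS_REDEFINITION).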
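For comparison, the first pre-12.2 approach might look like the sketch below. It assumes a non-partitioned EMP table with the columns used later in this section; emp_part and emp_old are hypothetical names chosen for illustration.

```sql
-- 1. Create the partitioned target table (emp_part is a hypothetical name).
CREATE TABLE emp_part (empno NUMBER, job VARCHAR2(20), deptno NUMBER)
PARTITION BY RANGE (deptno) INTERVAL (10)
(PARTITION p1 VALUES LESS THAN (10));

-- 2. Copy the rows from the original table.
INSERT INTO emp_part (empno, job, deptno)
SELECT empno, job, deptno FROM emp;

COMMIT;

-- 3. Swap the names so the partitioned table takes over.
RENAME emp TO emp_old;
RENAME emp_part TO emp;
```

With this approach, indexes, grants and constraints have to be re-created on the new table by hand, and rows changed in EMP during the copy are not carried over. These are the gaps the single-statement online conversion is meant to close.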

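Oracle 12c R2 adds a new feature that converts a non-partitioned table to a partitioned table with a single ALTER TABLE ... MODIFY statement. The steps below walk through it:

1. Create a test table with its indexes, then check their status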

create table emp (empno number,job varchar2(20),deptno number);

create index idx_emp_no on emp(empno);

create index idx_emp_job on emp(job);

col table_name for a30

col index_name for a30

select table_name,partitioned from user_tables where table_name='EMP';

TABLE_NAME PAR

------------------------------ ---

EMP NO

select index_name,partitioned,status from user_indexes where table_name='EMP';

INDEX_NAME PAR STATUS

------------------------------ --- --------

IDX_EMP_NO NO VALID

IDX_EMP_JOB NO VALID

2. Convert the table to a partitioned table with an ALTER TABLE statement

alter table emp modify

partition by range (deptno) interval (10)

(

partition p1 values less than (10),

partition p2 values less than (20)

) online

;

3. Check the current status of the table and its indexes

select table_name,partitioned from user_tables where table_name='EMP';

TABLE_NAME PAR

------------------------------ ---

EMP YES

select index_name,partitioned,status from user_indexes where table_name='EMP';

INDEX_NAME PAR STATUS

------------------------------ --- --------

IDX_EMP_NO NO VALID

IDX_EMP_JOB NO VALID

col PARTITION_NAME for a10

select table_name,partition_name from user_tab_partitions where table_name='EMP';

TABLE_NAME PARTITION_

------------------------------ ----------

EMP P1

EMP P2

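The table EMP is now partitioned. Both indexes remain VALID but are still non-partitioned (PARTITIONED = NO), because the statement had no UPDATE INDEXES clause.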
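Because the table was converted with INTERVAL (10), a row whose deptno lies above the highest defined bound (20) should cause Oracle to create a new partition automatically. The sketch below illustrates this; the inserted values are hypothetical test data.

```sql
-- deptno = 35 is above the last defined bound (20),
-- so interval partitioning adds a system-named partition for it.
INSERT INTO emp (empno, job, deptno) VALUES (7001, 'CLERK', 35);

COMMIT;

-- INTERVAL = 'YES' marks partitions created automatically by interval partitioning.
SELECT p.partition_name, p.high_value, p.interval AS is_interval
FROM user_tab_partitions p
WHERE p.table_name = 'EMP'
ORDER BY p.partition_position;
```

The new partition gets a system-generated name and, with an interval of 10, an upper bound of 40 for this row.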

4. To convert indexes along with the table, add the UPDATE INDEXES clause

alter table emp modify

partition by range (deptno) interval (10)

(

partition p1 values less than (10),

partition p2 values less than (20)

) online update indexes (idx_emp_no local)

;

Check the index status

select index_name,partitioned,status from user_indexes where table_name='EMP';

INDEX_NAME PAR STATUS

------------------------------ --- --------

IDX_EMP_NO YES N/A

IDX_EMP_JOB NO VALID

select index_name,partition_name,status from user_ind_partitions where index_name='IDX_EMP_NO';

INDEX_NAME PARTITION_ STATUS

------------------------------ ---------- --------

IDX_EMP_NO P1 USABLE

IDX_EMP_NO P2 USABLE
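UPDATE INDEXES can also name several indexes and give each one a different target shape. The following sketch follows the pattern shown in Oracle's documentation. It assumes EMP is still non-partitioned at this point (for example, dropped and re-created as in step 1); the index partition name ip1 is made up for illustration.

```sql
ALTER TABLE emp MODIFY
  PARTITION BY RANGE (deptno) INTERVAL (10)
  (
    PARTITION p1 VALUES LESS THAN (10),
    PARTITION p2 VALUES LESS THAN (20)
  ) ONLINE
  UPDATE INDEXES
  (
    -- becomes a local partitioned index, one index partition per table partition
    idx_emp_job LOCAL,
    -- becomes a global partitioned index with its own partitioning scheme
    idx_emp_no GLOBAL PARTITION BY RANGE (empno)
      (PARTITION ip1 VALUES LESS THAN (MAXVALUE))
  );

-- Both indexes should now report PARTITIONED = YES.
SELECT index_name, partitioned, status
FROM user_indexes
WHERE table_name = 'EMP';
```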

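1.18 Online table move (12.2.0.1)

In 11g and 12c R1, using ALTER TABLE ... MOVE to lower the high-water mark leaves the table's indexes unusable after the move. 12c R2 introduces the ONLINE clause, together with UPDATE INDEXES, to move a table online without invalidating its indexes.

1. Create a test table with id as the primary key; the primary key index is t1_pk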

CREATE TABLE test

(id NUMBER,

description VARCHAR2(50),

created_date DATE,

CONSTRAINT t1_pk PRIMARY KEY (id)

) tablespace p1;

2. Insert 1,000 rows

INSERT INTO test

SELECT level,

'Description for ' || level,

CASE

WHEN MOD(level,2) = 0 THEN TO_DATE('01/01/2018', 'DD/MM/YYYY')

ELSE TO_DATE('01/01/2019', 'DD/MM/YYYY')

END

FROM dual

CONNECT BY level <= 1000;

commit;

3. Check the blocks currently used by the table and its empty blocks (high-water mark)

select TABLE_NAME,BLOCKS,EMPTY_BLOCKS from user_tables where table_name in ('TEST');

TABLE_NAME BLOCKS EMPTY_BLOCKS

------------------------------ ---------- ------------

TEST 13 3
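BLOCKS and EMPTY_BLOCKS in USER_TABLES are filled in from statistics, so they generally stay empty until the table has been analyzed. The output above presumably followed a step like the one sketched here. ANALYZE is an assumption on my part, chosen because DBMS_STATS does not populate EMPTY_BLOCKS.

```sql
-- Compute statistics so that BLOCKS and EMPTY_BLOCKS are populated.
ANALYZE TABLE test COMPUTE STATISTICS;

SELECT table_name, blocks, empty_blocks
FROM user_tables
WHERE table_name = 'TEST';
```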

4. Delete 500 rows

delete from test where rownum <=500;

commit;

5. Lower the high-water mark left by the delete with MOVE

alter table test move online;
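One way to confirm the effect of the online move is to check the index status and re-read the block counts. This is a minimal sketch; it assumes statistics are refreshed with ANALYZE so that EMPTY_BLOCKS is populated.

```sql
-- After MOVE ONLINE, the primary key index t1_pk should still be VALID.
-- (A plain ALTER TABLE test MOVE would instead leave it UNUSABLE
--  until it is rebuilt with ALTER INDEX t1_pk REBUILD.)
SELECT index_name, status
FROM user_indexes
WHERE table_name = 'TEST';

-- Refresh statistics, then compare BLOCKS with the value recorded before the delete.
ANALYZE TABLE test COMPUTE STATISTICS;

SELECT table_name, blocks, empty_blocks
FROM user_tables
WHERE table_name = 'TEST';
```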