Big Data Study Notes [12]: Elasticsearch Concepts and Index Operations

1. Documentation

Elasticsearch Python API:

http://elasticsearch-py.readthedocs.io/en/master/api.html

Official Elasticsearch documentation:

https://www.elastic.co/guide/en/elasticsearch/reference/current/getting-started.html

Chinese guide:

https://www.elastic.co/guide/cn/elasticsearch/guide/current/index.html

2. Some concepts

In Elasticsearch, the act of storing data is called indexing.

A document belongs to a type, and types live inside an index. A rough comparison with a traditional relational database:

Relational DB -> Databases -> Tables -> Rows -> Columns

Elasticsearch -> Indices -> Types -> Documents -> Fields

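2.1 The meanings of "index"

Index (noun)

An index is like a database in a traditional relational database: it is the place where related documents are stored. The plural of index is indices or indexes.

Index (verb)

To "index a document" is to store a document in an index (noun) so that it can be retrieved and queried. It is much like the INSERT keyword in SQL, except that if the document already exists, the new document replaces the old one.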

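Inverted index

Relational databases add an index, such as a B-Tree index, to specific columns to speed up retrieval. Elasticsearch and Lucene use a data structure called an inverted index for the same purpose.

By default, every field in a document is indexed (has its own inverted index), and only indexed fields are searchable.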
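To make the idea concrete, here is a minimal sketch of an inverted index in plain Python. It assumes a naive analysis step (lowercase, split on whitespace) and is only an illustration, not how Lucene stores its postings; the function name `build_inverted_index` is made up for this example.

```python
from collections import defaultdict

def build_inverted_index(docs):
    """Map each term to the sorted list of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in docs.items():
        # Naive analysis: lowercase and split on whitespace.
        for term in text.lower().split():
            index[term].add(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}

docs = {
    1: "trying out Elasticsearch",
    2: "Elasticsearch now has versioning support",
}
inverted = build_inverted_index(docs)
print(inverted["elasticsearch"])  # [1, 2]
print(inverted["versioning"])     # [2]
```

Looking up a term is then a single dictionary access instead of a scan over every document.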

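2.2 Auto-generated IDs

If our data has no natural ID, we can let Elasticsearch generate one for us. The request structure changes: the PUT method ("store this document at this URL") becomes the POST method ("store this document under this URL").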
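The PUT/POST distinction can be sketched as a small helper that picks the method and path. The helper name `index_request` is hypothetical; the paths follow the `index/type/id` layout used in the requests later in this post.

```python
def index_request(index, doc_type, doc_id=None):
    """Return the (HTTP method, path) pair for indexing one document."""
    if doc_id is None:
        # No ID supplied: POST to the type and let Elasticsearch generate one.
        return "POST", f"/{index}/{doc_type}"
    # ID supplied: PUT to the exact URL of the document.
    return "PUT", f"/{index}/{doc_type}/{doc_id}"

print(index_request("twitter", "tweet", 1))  # ('PUT', '/twitter/tweet/1')
print(index_request("twitter", "tweet"))     # ('POST', '/twitter/tweet')
```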

2.3 Checking cluster health

GET _cluster/health

Response:

{

"cluster_name": "my-application",

"status": "yellow",

"timed_out": false,

"number_of_nodes": 1,

"number_of_data_nodes": 1,

"active_primary_shards": 51,

"active_shards": 51,

"relocating_shards": 0,

"initializing_shards": 0,

"unassigned_shards": 51,

"delayed_unassigned_shards": 0,

"number_of_pending_tasks": 0,

"number_of_in_flight_fetch": 0,

"task_max_waiting_in_queue_millis": 0,

"active_shards_percent_as_number": 50

}

2.4 Listing all indices (noun)

GET _cat/indices

Response:

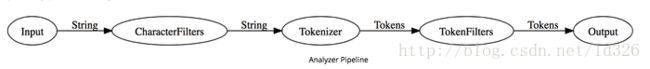

2.5 Anatomy of an analyzer

https://www.elastic.co/guide/en/elasticsearch/reference/current/analyzer-anatomy.html#analyzer-anatomy

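The algorithm that extracts tokens from a document is called a tokenizer. The preprocessing applied before tokenization is called a character filter, and the processing applied to tokens afterwards is called a token filter; the final output is a stream of terms. The whole analysis algorithm is called an analyzer.

An analyzer does three things, in order:

Filter characters with character filters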

A character filter receives the original text as a stream of characters and can transform the stream by adding, removing, or changing characters.

For example, a character filter can strip HTML tags or map characters to other characters.

An analyzer may have zero or more character filters, which are applied in order.

Split the text with a tokenizer

A tokenizer receives a stream of characters, breaks it up into individual tokens (usually individual words), and outputs a stream of tokens.

For example, a tokenizer may split the text on whitespace.

An analyzer must have exactly one tokenizer.

Filter tokens with token filters

A token filter receives the token stream and may add, remove, or change tokens.

For example, a token filter can remove stop words, convert uppercase to lowercase, or add synonyms.

An analyzer may have zero or more token filters, which are applied in order.
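The three stages can be imitated in a few lines of plain Python. This is only a rough sketch under stated assumptions: the regular expressions, the tiny stop list and the sample HTML string are chosen for illustration and do not reproduce the real `html_strip`, `standard`, `asciifolding` or `stop` implementations.

```python
import html
import re
import unicodedata

STOP_WORDS = {"a", "an", "and", "is", "the", "this"}  # illustrative subset

def strip_html(text):
    """Character filter: drop tags, then decode entities such as &apos;."""
    return html.unescape(re.sub(r"<[^>]+>", " ", text))

def tokenize(text):
    """Tokenizer: split into word tokens, keeping inner apostrophes."""
    return re.findall(r"\w+(?:'\w+)*", text)

def ascii_fold(token):
    """Token filter: replace accented letters with ASCII equivalents."""
    decomposed = unicodedata.normalize("NFKD", token)
    return decomposed.encode("ascii", "ignore").decode("ascii")

def analyze(text):
    """Run character filter -> tokenizer -> token filters, in that order."""
    tokens = tokenize(strip_html(text))
    tokens = [t.upper() for t in tokens]               # uppercase filter
    tokens = [ascii_fold(t) for t in tokens]           # asciifolding filter
    return [t for t in tokens if t not in STOP_WORDS]  # stop filter

print(analyze("<p>I&apos;m so <b>happy</b>!</p> Is this déjà vu"))
```

Order matters: because the tokens are uppercased before the stop filter compares them with a lowercase stop list, "IS" and "THIS" are not removed in this sketch.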

Example:

POST _analyze

{

"char_filter": [ "html_strip" ],

"tokenizer": "standard",

"filter": [ "uppercase", "asciifolding" ,"stop"],

"text": "I'm so happy!\nIs this déjà vu"

}

Result:

{

"tokens": [

{

"token": "I'M",

"start_offset": 3,

"end_offset": 11,

"type": "<ALPHANUM>",

"position": 0

},

{

"token": "SO",

"start_offset": 12,

"end_offset": 14,

"type": "<ALPHANUM>",

"position": 1

},

{

"token": "HAPPY",

"start_offset": 18,

"end_offset": 27,

"type": "<ALPHANUM>",

"position": 2

},

{

"token": "IS",

"start_offset": 37,

"end_offset": 39,

"type": "<ALPHANUM>",

"position": 3

},

{

"token": "THIS",

"start_offset": 40,

"end_offset": 44,

"type": "<ALPHANUM>",

"position": 4

},

{

"token": "DEJA",

"start_offset": 45,

"end_offset": 49,

"type": "<ALPHANUM>",

"position": 5

},

{

"token": "VU",

"start_offset": 50,

"end_offset": 52,

"type": "<ALPHANUM>",

"position": 6

}

]

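}

Custom analyzer (the analysis settings must be defined on the index; the analyzer can then be referenced elsewhere, for example in a field mapping):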

PUT my_index

{

"settings": {

"analysis": {

"analyzer": {

"std_folded": {

"type": "custom",

"tokenizer": "standard",

"filter": [

"lowercase",

"asciifolding"

]

}

}

}

},

"mappings": {

"my_type": {

"properties": {

"my_text": {

"type": "text",

"analyzer": "std_folded"

}

}

}

}

}

3. Indexing data in the Kibana Dev Tools

3.1 Indexing a document

PUT twitter/tweet/1

{

"user" : "kimchy",

"post_date" : "2009-11-15T14:12:12",

"message" : "trying out Elasticsearch"

}

Response:

{

"_index": "twitter",

"_type": "tweet",

"_id": "1",

"_version": 1,

"result": "created",

"_shards": {

"total": 2,

"successful": 1,

"failed": 0

},

"created": true

}

The _shards header provides information about the replication process of the index operation.

● total - Indicates to how many shard copies (primary and replica shards) the index operation should be executed on.

● successful - Indicates the number of shard copies the index operation succeeded on.

● failed - An array that contains replication related errors in the case an index operation failed on a replica shard.

The index operation is successful in the case successful is at least 1.
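As a small illustration, the success rule can be checked directly on a parsed response. The helper names below are made up for this sketch, and `failed` is treated as a count because that is how it appears in the response shown above.

```python
def index_succeeded(response):
    """True when at least one shard copy accepted the write."""
    return response["_shards"]["successful"] >= 1

def missing_copies(response):
    """Shard copies that neither succeeded nor reported a failure."""
    shards = response["_shards"]
    return shards["total"] - shards["successful"] - shards["failed"]

response = {"_shards": {"total": 2, "successful": 1, "failed": 0}}
print(index_succeeded(response))  # True
print(missing_copies(response))   # 1
```

With `total` 2 and `successful` 1, the write reached one copy only. On a single-node cluster this is presumably the primary, since a replica cannot be allocated on the same node as its primary.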

If the index does not exist yet, it is created automatically when the document is indexed.

3.2 Updating a document by version number

PUT twitter/tweet/1?version=3

{

"message" : "elasticsearch now has versioning support, double cool!"

}

Response:

{

"_index": "twitter",

"_type": "tweet",

"_id": "1",

"_version": 4,

"result": "updated",

"_shards": {

"total": 2,

"successful": 1,

"failed": 0

},

"created": false

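}

If the supplied version does not match the current version, a version conflict exception is returned. This can be used to implement optimistic concurrency control.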
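The version check can be modelled with a toy in-memory store. This is a sketch of the optimistic-locking idea only; `VersionedStore` and `VersionConflict` are hypothetical names, not part of Elasticsearch or its Python client.

```python
class VersionConflict(Exception):
    """Raised when the caller's expected version is stale."""

class VersionedStore:
    """Toy in-memory stand-in for one index; not the Elasticsearch engine."""

    def __init__(self):
        self._docs = {}  # doc_id -> (version, source)

    def index(self, doc_id, source, version=None):
        current = self._docs.get(doc_id, (0, None))[0]
        if version is not None and version != current:
            raise VersionConflict(
                f"current version [{current}] is different than "
                f"the one provided [{version}]"
            )
        # Indexing overwrites the stored document and bumps the version.
        self._docs[doc_id] = (current + 1, source)
        return current + 1

store = VersionedStore()
for n in range(3):
    store.index("1", {"message": f"edit {n}"})         # versions 1, 2, 3
print(store.index("1", {"message": "ok"}, version=3))  # 4
try:
    store.index("1", {"message": "stale"}, version=2)
except VersionConflict as exc:
    print(exc)
```

A writer that read version 3 succeeds and moves the document to version 4; a writer still holding version 2 is rejected and has to re-read before retrying. For comparison, the error body Elasticsearch returns for such a conflict follows.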

{

"error": {

"root_cause": [

{

"type": "version_conflict_engine_exception",

"reason": "[tweet][1]: version conflict, current version [4] is different than the one provided [2]",

"index_uuid": "McxXlj-GS7iCfFD6EBX6EQ",

"shard": "3",

"index": "twitter"

}

],

"type": "version_conflict_engine_exception",

"reason": "[tweet][1]: version conflict, current version [4] is different than the one provided [2]",

"index_uuid": "McxXlj-GS7iCfFD6EBX6EQ",

"shard": "3",

"index": "twitter"

},

"status": 409

}

3.3 Auto-generating the ID (use POST instead of PUT)

POST twitter/tweet/

{

"user" : "hello world",

"post_date" : "2009-11-15T14:12:12",

"message" : "trying out good"

}

Response:

{

"_index": "twitter",

"_type": "tweet",

"_id": "AWEsKql_h7jwcgPfOd03",

"_version": 1,

"result": "created",

"_shards": {

"total": 2,

"successful": 1,

"failed": 0

},

"created": true

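}

3.4 Routing: supplying the routing parameter

By default, shard placement (routing) is controlled by a hash of the document's ID. For more explicit control, the value fed into the hash function used by the router can be specified directly on a per-operation basis with the routing parameter.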
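The shard is chosen as `hash(routing) % number_of_primary_shards`, with the routing value defaulting to the document ID. The sketch below assumes 5 primary shards and uses `zlib.crc32` purely as a stand-in hash; Elasticsearch uses its own hash function, so the actual shard numbers will differ.

```python
import zlib

def shard_for(doc_id, num_primary_shards, routing=None):
    """Pick a shard from the routing value, falling back to the document ID."""
    key = doc_id if routing is None else routing
    # crc32 is a stand-in hash; Elasticsearch uses its own hash function.
    return zlib.crc32(key.encode("utf-8")) % num_primary_shards

# Documents indexed with the same routing value land on the same shard,
# whatever their IDs are.
a = shard_for("AWEsM2rah7jwcgPfOeM8", 5, routing="happyprince")
b = shard_for("some-other-id", 5, routing="happyprince")
print(a == b)  # True
```

A request that omits the routing value hashes the ID instead, which may point at a different shard. This is why a document indexed with a custom routing value should be fetched with the same value.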

POST twitter/tweet?routing=happyprince

{

"user" : "happyprince",

"post_date" : "2009-11-15T14:12:12",

"message" : "go to beijing"

}

Result:

{

"_index": "twitter",

"_type": "tweet",

"_id": "AWEsM2rah7jwcgPfOeM8",

"_version": 1,

"result": "created",

"_shards": {

"total": 2,

"successful": 1,

"failed": 0

},

"created": true

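}

A GET that omits the routing value cannot be relied on to find the routed document:

GET twitter/tweet/AWEsM2rah7jwcgPfOeM8?pretty

The routing parameter has to be supplied as well:

GET twitter/tweet/AWEsM2rah7jwcgPfOeM8?routing=happyprince

Response: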

{

"_index": "twitter",

"_type": "tweet",

"_id": "AWEsM2rah7jwcgPfOeM8",

"_version": 1,

"_routing": "happyprince",

"found": true,

"_source": {

"user": "happyprince",

"post_date": "2009-11-15T14:12:12",

"message": "go to beijing"

}

}

Note: if the _routing mapping is defined and set to required, the index operation fails when no routing value is provided.

3.5 Indexing parent-child relationships

PUT blogs

{

"mappings": {

"tag_parent": {},

"blog_tag": {

"_parent": {

"type": "tag_parent"

}

}

}

}

PUT blogs/blog_tag/1122?parent=1111

{

"tag" : "something"

}

Search result:

{

"took": 0,

"timed_out": false,

"_shards": {

"total": 5,

"successful": 5,

"skipped": 0,

"failed": 0

},

"hits": {

"total": 1,

"max_score": 1,

"hits": [

{

"_index": "blogs",

"_type": "blog_tag",

"_id": "1122",

"_score": 1,

"_routing": "1111",

"_parent": "1111",

"_source": {

"tag": "something"

}

}

]

}

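}

3.6 Setting a timeout for the index operation

By default, an index operation waits up to 1 minute for the primary shard to become available before failing with an error. Here the timeout is set to 5 minutes, so the operation reports a failure only if it cannot proceed within 5 minutes.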

PUT twitter/tweet/1?timeout=5m

{

"user" : "kimchy",

"post_date" : "2009-11-15T14:12:12",

"message" : "trying out Elasticsearch"

}

happyprince, http://blog.csdn.net/ld326/article/details/79187764
