Python basics (5): common modules

A module is a collection of code that implements a particular piece of functionality.

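This is similar to functional and procedural programming: a function implements one piece of functionality, and other code simply calls it, which improves code reuse and reduces coupling between different parts of the code. A complex feature, however, may need several functions to implement, and those functions may live in different .py files. Strictly speaking, each .py file is one module, and a directory that groups several related modules is a package (covered below).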

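For example, `os` is the module for operating-system functionality, while file operations use the built-in `open()` (Python 2 also had a built-in `file` type; it is a type, not a module).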

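`from module_test import *`:

This form binds every public name of module_test (the names listed in its `__all__`, or otherwise all names not starting with an underscore) into the current namespace. If the current file also defines a function with the same name, whichever binding happens later wins, so a local definition can silently shadow the imported function (or the import can shadow the local one). This form is therefore not recommended.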
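A minimal sketch of the shadowing problem, using the standard-library `math` module as a stand-in for module_test; the local `sqrt` is a deliberately clashing name made up for the demo.

```python
import math
from math import *  # binds math's public names (sqrt, pi, ...) into this namespace

before = sqrt(16)  # resolves to math.sqrt -> 4.0


def sqrt(x):
    """A local function that reuses the name sqrt, rebinding it."""
    return "local sqrt(%r)" % (x,)


after = sqrt(16)           # now resolves to the local function
qualified = math.sqrt(16)  # the prefixed form is unaffected

print(before, after, qualified)  # 4.0 local sqrt(16) 4.0
```

Keeping the prefixed form (`import module_test`, then `module_test.func()`) avoids the clash entirely.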

Other ways to import:

```python
import module_name
import module_name, module2_name
from module_alex import *
from module_alex import m1, m2, m3
from module_alex import logger as logger_alex
```

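What `import` actually does:

`import module_alex`

This runs the code in module_alex.py (once, on the first import) and binds the resulting module object to the name `module_alex`, so its contents are accessed with a prefix: `module_alex.test()`.

`from module_alex import name`

This binds module_alex's `name` directly in the local namespace, so it is used without a prefix: just `name`.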

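Package: a way of organizing modules logically, concretely a directory of modules that (in the classic layout) contains an `__init__.py` file.

So how is a package imported?

Importing a package essentially executes the `__init__.py` file in the package's directory.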

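`sys.path`: the list of directories Python searches when importing a module.

A .py file in another directory cannot be imported unless that directory is on the search path (the directory of the script being run is added automatically, which is why modules next to it can be imported directly). To import it, add its directory to `sys.path`.

`os.path.abspath(__file__)`: the absolute path of the current module file.

Getting the directory name:

`os.path.dirname(os.path.abspath(__file__))`: the directory containing the current file; wrap it in another `os.path.dirname()` to go up one more level.

Adding it to the path:

`sys.path.append(os.path.dirname(os.path.abspath(__file__)))`: appends that directory to the end of `sys.path`.

`sys.path.insert(0, path)`: inserts a path at the front of the list, so it is searched first.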
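The steps above can be wrapped in a small helper. This is a sketch: the function name `add_ancestor_dir_to_path`, its `levels` parameter, and the demo path are hypothetical, and a real script would pass `__file__` instead.

```python
import os
import sys


def add_ancestor_dir_to_path(file_path, levels=1):
    """Put the directory `levels` steps above file_path at the front of sys.path.

    levels=1 gives os.path.dirname(os.path.abspath(file_path)), i.e. the folder
    containing the file; levels=2 goes one folder higher, and so on.
    """
    directory = os.path.abspath(file_path)
    for _ in range(levels):
        directory = os.path.dirname(directory)
    if directory not in sys.path:
        sys.path.insert(0, directory)  # searched before the other entries
    return directory


# In a real script you would pass __file__; a made-up path keeps the demo self-contained
demo = os.path.join(os.sep, "tmp", "proj", "day5", "bin", "start.py")
print(add_ancestor_dir_to_path(demo, levels=2))  # /tmp/proj/day5 on Linux
```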

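After importing a package, its modules are used as follows:

1) First, edit `__init__.py`

In `__init__.py`, add `from . import test1` (import test1 from the package's own directory) or `import test1` (an absolute import, which in Python 3 only works if test1's directory is itself on `sys.path`). This binds the name `test1` inside the package to the module produced by running all of the code in test1.py.

2) In the calling file (test1.py in this example), write `import package_test` or `from day5 import package_test`.

Then call `package_test.test1.find()`.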
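A self-contained sketch of that layout, assuming a throwaway directory created with `tempfile` in place of the author's day5 project; `package_test`, `test1` and `find()` mirror the names above, and the function body is made up.

```python
import importlib
import os
import sys
import tempfile

# Build a package on disk:
#   <root>/package_test/__init__.py  -> from . import test1
#   <root>/package_test/test1.py     -> defines find()
root = tempfile.mkdtemp()
pkg_dir = os.path.join(root, "package_test")
os.makedirs(pkg_dir)

with open(os.path.join(pkg_dir, "__init__.py"), "w") as f:
    f.write("print('running package_test/__init__.py')\n")
    f.write("from . import test1\n")  # relative import of the sibling module

with open(os.path.join(pkg_dir, "test1.py"), "w") as f:
    f.write("def find():\n    return 'in test1.find'\n")

sys.path.insert(0, root)       # make <root> searchable so package_test can be found
importlib.invalidate_caches()  # the files were created after the interpreter started

import package_test  # executes package_test/__init__.py

print(package_test.test1.find())  # in test1.find
```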

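Modules fall into three categories:

· Custom (user-defined) modules

· Built-in standard modules (the standard library)

· Open-source (third-party) modules

For using custom and open-source modules, see http://www.cnblogs.com/wupeiqi/articles/4963027.html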

time & datetime modules

```python
# -*- coding: utf-8 -*-
__author__ = 'Alex Li'

import time

# print(time.clock())  # processor time; deprecated since 3.3 and removed in 3.8. Use time.process_time(), which measures CPU time and excludes sleep (time.clock() was unreliable and could not be measured on macOS)
# print(time.altzone)  # offset of the local DST time zone, in seconds west of UTC
# print(time.asctime())  # returns a string such as "Fri Aug 19 11:14:16 2016"
# print(time.localtime())  # returns the local time as a struct_time
# print(time.gmtime(time.time() - 800000))  # returns a UTC struct_time
# print(time.asctime(time.localtime()))  # returns a string such as "Fri Aug 19 11:14:16 2016"
# print(time.ctime())  # returns e.g. "Fri Aug 19 12:38:29 2016", same format as above

# date string -> timestamp
# string_2_struct = time.strptime("2016/05/22", "%Y/%m/%d")  # date string -> struct_time
# print(string_2_struct)
#
# struct_2_stamp = time.mktime(string_2_struct)  # struct_time (as local time) -> timestamp
# print(struct_2_stamp)

# timestamp -> string
# print(time.gmtime(time.time() - 86640))  # timestamp -> UTC struct_time
# print(time.strftime("%Y-%m-%d %H:%M:%S", time.gmtime()))  # UTC struct_time -> formatted string

# date/time arithmetic
import datetime

# print(datetime.datetime.now())  # e.g. 2016-08-19 12:47:03.941925
# print(datetime.date.fromtimestamp(time.time()))  # timestamp -> date, e.g. 2016-08-19
# print(datetime.datetime.now())
# print(datetime.datetime.now() + datetime.timedelta(3))  # now + 3 days
# print(datetime.datetime.now() + datetime.timedelta(-3))  # now - 3 days
# print(datetime.datetime.now() + datetime.timedelta(hours=3))  # now + 3 hours
# print(datetime.datetime.now() + datetime.timedelta(minutes=30))  # now + 30 minutes

# c_time = datetime.datetime.now()
# print(c_time.replace(minute=3, hour=2))  # replace fields of a datetime
```
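The commented-out calls above depend on the current time. A sketch with a fixed moment (an assumption chosen only so the results are reproducible) shows the same `timedelta` and `replace()` arithmetic with predictable output:

```python
import datetime

base = datetime.datetime(2016, 8, 19, 12, 47, 3)  # fixed instead of datetime.now()

plus_3_days = base + datetime.timedelta(3)         # a positional argument means days
minus_3_days = base + datetime.timedelta(-3)
plus_3_hours = base + datetime.timedelta(hours=3)
plus_30_minutes = base + datetime.timedelta(minutes=30)
replaced = base.replace(minute=3, hour=2)          # returns a new object; base is unchanged

print(plus_3_days)               # 2016-08-22 12:47:03
print(minus_3_days)              # 2016-08-16 12:47:03
print(plus_3_hours)              # 2016-08-19 15:47:03
print(plus_30_minutes)           # 2016-08-19 13:17:03
print(replaced)                  # 2016-08-19 02:03:03
print((plus_3_days - base).days)  # 3  (subtracting datetimes gives a timedelta)
```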

Format directives accepted by `time.strftime()` and `time.strptime()`:

| Directive | Meaning | Notes |
|---|---|---|
| %a | Locale’s abbreviated weekday name. | |
| %A | Locale’s full weekday name. | |
| %b | Locale’s abbreviated month name. | |
| %B | Locale’s full month name. | |
| %c | Locale’s appropriate date and time representation. | |
| %d | Day of the month as a decimal number [01,31]. | |
| %H | Hour (24-hour clock) as a decimal number [00,23]. | |
| %I | Hour (12-hour clock) as a decimal number [01,12]. | |
| %j | Day of the year as a decimal number [001,366]. | |
| %m | Month as a decimal number [01,12]. | |
| %M | Minute as a decimal number [00,59]. | |
| %p | Locale’s equivalent of either AM or PM. | (1) |
| %S | Second as a decimal number [00,61]. | (2) |
| %U | Week number of the year (Sunday as the first day of the week) as a decimal number [00,53]. All days in a new year preceding the first Sunday are considered to be in week 0. | (3) |
| %w | Weekday as a decimal number [0(Sunday),6]. | |
| %W | Week number of the year (Monday as the first day of the week) as a decimal number [00,53]. All days in a new year preceding the first Monday are considered to be in week 0. | (3) |
| %x | Locale’s appropriate date representation. | |
| %X | Locale’s appropriate time representation. | |
| %y | Year without century as a decimal number [00,99]. | |
| %Y | Year with century as a decimal number. | |
| %z | Time zone offset indicating a positive or negative time difference from UTC/GMT of the form +HHMM or -HHMM, where H represents decimal hour digits and M represents decimal minute digits [-23:59, +23:59]. | |
| %Z | Time zone name (no characters if no time zone exists). | |
| %% | A literal '%' character. | |

Notes:

(1) When used with `strptime()`, %p only affects the output hour field if %I is used to parse the hour.

(2) The range really is 0 to 61; 60 is valid in timestamps representing leap seconds, and 61 is supported for historical reasons.

(3) When used with `strptime()`, %U and %W are only used in calculations when the day of the week and the year are specified.
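A short sketch applying a few of these directives to a fixed date; the date is arbitrary, and locale-dependent codes such as %a, %b and %p are left out because their output varies by locale.

```python
import datetime

moment = datetime.datetime(2016, 8, 19, 11, 14, 16)  # Friday, 19 August 2016

full = moment.strftime("%Y-%m-%d %H:%M:%S")
short = moment.strftime("%y/%m/%d %I:%M")
day_of_year = moment.strftime("%j")
weekday = moment.strftime("%w")
week_sun = moment.strftime("%U")  # weeks start on Sunday; days before the first Sunday are week 0

parsed = datetime.datetime.strptime("2016/05/22", "%Y/%m/%d")  # the reverse direction

print(full)         # 2016-08-19 11:14:16
print(short)        # 16/08/19 11:14
print(day_of_year)  # 232
print(weekday)      # 5  (0 is Sunday)
print(week_sun)     # 33
print(parsed)       # 2016-05-22 00:00:00
```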

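`time.gmtime()`: returns a struct_time in UTC;

`time.localtime()`: returns a struct_time in the local time zone (UTC+8 on the author's machine);

`time.struct_time`: a structured, tuple-like object with named fields (tm_year, tm_mon, tm_mday, ...);

Timestamp: the time expressed as the number of seconds since the Unix epoch (1970-01-01 00:00:00 UTC), returned as a float.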

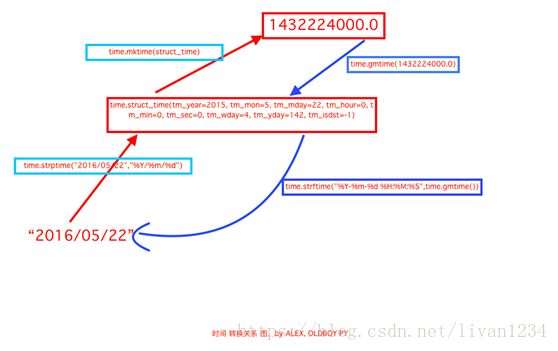

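Time therefore comes in three formats:

struct_time: stored as a tuple-like structure;

Timestamp: stored as a number of seconds;

String: stored as formatted text.

The three can be converted into one another: `gmtime()`/`localtime()` turn a timestamp into a struct_time, `mktime()` turns a local struct_time back into a timestamp, `strftime()` turns a struct_time into a string, and `strptime()` parses a string into a struct_time.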
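The conversions can be chained into a round trip. A sketch assuming the timestamp 1,000,000,000 (chosen arbitrarily) and UTC, so the printed values do not depend on the machine's time zone; `calendar.timegm()` is used as the UTC counterpart of `time.mktime()`.

```python
import calendar
import time

timestamp = 1_000_000_000  # an arbitrary moment, interpreted in UTC below

st = time.gmtime(timestamp)                        # timestamp -> struct_time (UTC)
text = time.strftime("%Y-%m-%d %H:%M:%S", st)      # struct_time -> string
parsed = time.strptime(text, "%Y-%m-%d %H:%M:%S")  # string -> struct_time
back = calendar.timegm(parsed)                     # struct_time (as UTC) -> timestamp

# The local-time pair: localtime() and mktime() both use the machine's time zone
local_back = time.mktime(time.localtime(timestamp))

print(st.tm_year, st.tm_mon, st.tm_mday)  # 2001 9 9
print(text)                               # 2001-09-09 01:46:40
print(back, local_back)                   # 1000000000 1000000000.0
```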

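random module

Random numbers

```python
import random

print(random.random())          # float in [0.0, 1.0)
print(random.randint(1, 2))     # integer in [1, 2], both ends included
print(random.randrange(1, 10))  # integer in [1, 10), the upper bound is excluded
```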

Generating a random check code

```python
import random

checkcode = ''
for i in range(4):
    current = random.randrange(0, 4)
    if current != i:
        temp = chr(random.randint(65, 90))  # uppercase letter A-Z
    else:
        temp = random.randint(0, 9)         # digit 0-9
    checkcode += str(temp)
print(checkcode)
```

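Check-code example:

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
import random

checkcode = ''
# build a 4-character check code
for i in range(4):
    current = random.randrange(0, 4)
    if current == i:
        tmp = chr(random.randint(65, 90))  # uppercase letter A-Z
    else:
        tmp = random.randint(0, 9)         # digit 0-9
    checkcode += str(tmp)                  # append the character just generated
print(checkcode)
```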
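A more compact variant, offered as an alternative rather than the author's method: `random.choices()` (Python 3.6+) draws each character from a fixed alphabet, and the `secrets` module is the usual choice when the code must be hard to guess. The function names are hypothetical.

```python
import random
import secrets
import string

ALPHABET = string.ascii_uppercase + string.digits  # 'A'..'Z' followed by '0'..'9'


def make_checkcode(length=4, rng=None):
    """Return `length` characters drawn uniformly from ALPHABET."""
    if rng is None:
        rng = random.Random()
    return "".join(rng.choices(ALPHABET, k=length))


def make_secure_checkcode(length=4):
    """Same idea, but uses the operating system's CSPRNG via secrets."""
    return "".join(secrets.choice(ALPHABET) for _ in range(length))


print(make_checkcode())          # e.g. Q7K2
print(make_secure_checkcode(6))  # e.g. 3ZP0XA
```

Passing a seeded `random.Random` makes `make_checkcode()` reproducible in tests.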

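os module

Provides an interface for calling operating-system functionality.

```python
os.getcwd()                      # get the current working directory, i.e. the directory the script is working in
os.chdir("dirname")              # change the current working directory; like cd in a shell
os.curdir                        # the string for the current directory: '.'
os.pardir                        # the string for the parent directory: '..'
os.makedirs('dirname1/dirname2')  # create nested directories recursively
os.removedirs('dirname1')        # remove the directory if it is empty, then move up to its parent and remove that too if empty, and so on
os.mkdir('dirname')              # create a single directory; like mkdir dirname in a shell
os.rmdir('dirname')              # remove a single empty directory; raises an error if it is not empty; like rmdir dirname
os.listdir('dirname')            # list all files and subdirectories in the directory, including hidden ones, as a list
os.remove(path)                  # delete a file
os.rename("oldname", "newname")  # rename a file or directory
os.stat('path/filename')         # get file/directory information
os.sep                           # the OS-specific path separator: "\\" on Windows, "/" on Linux
os.linesep                       # the line terminator of the current platform: "\r\n" on Windows, "\n" on Linux
os.pathsep                       # the separator between entries in PATH-style lists: ";" on Windows, ":" on Linux
os.name                          # a string identifying the platform: 'nt' on Windows, 'posix' on Linux
os.system("bash command")        # run a shell command; its output is shown directly
os.environ                       # the system environment variables
os.path.abspath(path)            # the normalized absolute version of path
os.path.split(path)              # split path into a (directory, filename) tuple
os.path.dirname(path)            # the directory part of path, i.e. the first element of os.path.split(path)
os.path.basename(path)           # the final component of path, i.e. the second element of os.path.split(path); empty if path ends with a separator
os.path.exists(path)             # True if path exists, otherwise False
os.path.isabs(path)              # True if path is absolute
os.path.isfile(path)             # True if path is an existing file, otherwise False
os.path.isdir(path)              # True if path is an existing directory, otherwise False
os.path.join(path1, path2, ...)  # join paths; whenever an absolute component appears, everything before it is discarded
os.path.getatime(path)           # last access time of the file or directory path points to
os.path.getmtime(path)           # last modification time of the file or directory path points to
```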
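A sketch exercising several of these calls inside a throwaway directory from `tempfile` (the directory and file names are made up); the `keep.txt` file exists only to stop `os.removedirs()` from climbing past the demo directory.

```python
import os
import tempfile

base = tempfile.mkdtemp()  # scratch directory for the demo
# A file in base keeps os.removedirs() below from deleting base itself
with open(os.path.join(base, "keep.txt"), "w") as f:
    f.write("keep")

nested = os.path.join(base, "dirname1", "dirname2")
os.makedirs(nested)  # creates both levels in one call

file_path = os.path.join(nested, "notes.txt")
with open(file_path, "w") as f:
    f.write("hello")

head, tail = os.path.split(file_path)
print(head == os.path.dirname(file_path), tail)   # True notes.txt
print(os.listdir(nested))                         # ['notes.txt']
print(os.path.isfile(file_path), os.path.isdir(nested))  # True True
print(os.stat(file_path).st_size)                 # 5

renamed = os.path.join(nested, "renamed.txt")
os.rename(file_path, renamed)
os.remove(renamed)     # delete the file ...
os.removedirs(nested)  # ... then remove dirname2 and dirname1; stops at base, which is not empty
print(os.path.exists(os.path.join(base, "dirname1")), os.listdir(base))  # False ['keep.txt']

print(os.name, repr(os.sep), repr(os.linesep))  # e.g. posix '/' '\n' on Linux
```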

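sys module

```python
sys.argv            # list of command-line arguments; the first element is the path of the program itself
sys.exit(n)         # exit the program; exit(0) means a normal exit
sys.version         # version information of the Python interpreter
sys.maxint          # largest plain int value (Python 2 only; Python 3 has sys.maxsize)
sys.path            # module search path, initialized from the PYTHONPATH environment variable plus installation defaults
sys.platform        # name of the operating-system platform
sys.stdout.write('please:')
val = sys.stdin.readline()[:-1]  # read one line from standard input, dropping the trailing newline
```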
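A sketch of how `sys.argv` and `sys.exit()` usually fit together; `main()` and its argument handling are hypothetical. In a real script the last step would be `sys.exit(main(sys.argv))`; here it is called with a fixed argument list and the `SystemExit` is caught so the demo can continue.

```python
import sys


def main(argv):
    """Hypothetical entry point: greet each name passed on the command line."""
    if len(argv) < 2:
        sys.stderr.write("usage: %s NAME [NAME ...]\n" % argv[0])
        return 2  # a non-zero status tells the shell something went wrong
    for name in argv[1:]:
        sys.stdout.write("hello, %s\n" % name)
    return 0


# sys.exit(n) raises SystemExit; the interpreter turns its code into the exit status
try:
    sys.exit(main(["prog.py", "Alex"]))
except SystemExit as exc:
    print("exit status:", exc.code)  # exit status: 0

print(sys.maxsize)   # 9223372036854775807 (2**63 - 1) on 64-bit builds
print(sys.platform)  # e.g. linux, win32 or darwin
```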

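shutil module

For reference, see http://www.cnblogs.com/wupeiqi/articles/4963027.html

A high-level module for handling files, directories, and archives. The function bodies quoted below are from the Python 2.7 standard library, so they use Python 2 syntax (e.g. `except OSError, why:` and `raise Error, "..."`).

shutil.copyfileobj(fsrc, fdst[, length])

Copies the contents of one file object into another. Copying starts at the source's current position, so it can copy just part of a file; `length` sets the size of each chunk read.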

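```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
import shutil

# copy the contents of test1 into test2; the with block closes both files
with open("test1", encoding="utf-8") as f1, open("test2", "w", encoding="utf-8") as f2:
    shutil.copyfileobj(f1, f2)
```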

```python
def copyfileobj(fsrc, fdst, length=16*1024):
    """copy data from file-like object fsrc to file-like object fdst"""
    while 1:
        buf = fsrc.read(length)
        if not buf:
            break
        fdst.write(buf)
```
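A small sketch of the partial-copy behaviour with in-memory files (`io.StringIO` stands in for real files): `copyfileobj()` copies from the source's current position, and `length` only sets the chunk size.

```python
import io
import shutil

src = io.StringIO("header\nbody line 1\nbody line 2\n")
dst = io.StringIO()

src.readline()                          # skip the first line; copying starts from here
shutil.copyfileobj(src, dst, length=4)  # copy the rest in 4-character chunks

print(repr(dst.getvalue()))  # 'body line 1\nbody line 2\n'
```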

shutil.copyfile(src, dst)

Copies a file's contents.

```python
shutil.copyfile("test1", "test2")
```

```python
def copyfile(src, dst):
    """Copy data from src to dst"""
    if _samefile(src, dst):
        raise Error("`%s` and `%s` are the same file" % (src, dst))

    for fn in [src, dst]:
        try:
            st = os.stat(fn)
        except OSError:
            # File most likely does not exist
            pass
        else:
            # XXX What about other special files? (sockets, devices...)
            if stat.S_ISFIFO(st.st_mode):
                raise SpecialFileError("`%s` is a named pipe" % fn)

    with open(src, 'rb') as fsrc:
        with open(dst, 'wb') as fdst:
            copyfileobj(fsrc, fdst)
```

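shutil.copymode(src, dst)

Copies only the permission bits; the content, group, and owner are unchanged.

This is mainly useful on Linux; on Windows, `os.chmod()` can only toggle the read-only flag.

```python
def copymode(src, dst):
    """Copy mode bits from src to dst"""
    if hasattr(os, 'chmod'):
        st = os.stat(src)
        mode = stat.S_IMODE(st.st_mode)
        os.chmod(dst, mode)
```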

shutil.copystat(src, dst)

Copies the status information: mode bits, atime, mtime, and flags.

```python
def copystat(src, dst):
    """Copy all stat info (mode bits, atime, mtime, flags) from src to dst"""
    st = os.stat(src)
    mode = stat.S_IMODE(st.st_mode)
    if hasattr(os, 'utime'):
        os.utime(dst, (st.st_atime, st.st_mtime))
    if hasattr(os, 'chmod'):
        os.chmod(dst, mode)
    if hasattr(os, 'chflags') and hasattr(st, 'st_flags'):
        try:
            os.chflags(dst, st.st_flags)
        except OSError, why:
            for err in 'EOPNOTSUPP', 'ENOTSUP':
                if hasattr(errno, err) and why.errno == getattr(errno, err):
                    break
            else:
                raise
```

shutil.copy(src, dst)

Copies the file's contents and permission bits.

```python
def copy(src, dst):
    """Copy data and mode bits ("cp src dst").

    The destination may be a directory.

    """
    if os.path.isdir(dst):
        dst = os.path.join(dst, os.path.basename(src))
    copyfile(src, dst)
    copymode(src, dst)
```

shutil.copy2(src, dst)

Copies the file's contents and status information.

```python
def copy2(src, dst):
    """Copy data and all stat info ("cp -p src dst").

    The destination may be a directory.

    """
    if os.path.isdir(dst):
        dst = os.path.join(dst, os.path.basename(src))
    copyfile(src, dst)
    copystat(src, dst)
```
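A sketch comparing what the copy functions preserve, using a throwaway directory; the permission bits `0o640` and the timestamp 1,000,000,000 are arbitrary values chosen to make the differences visible. On Windows `os.chmod()` only toggles the read-only flag, so the mode values apply to Linux/macOS.

```python
import os
import shutil
import stat
import tempfile

workdir = tempfile.mkdtemp()
src = os.path.join(workdir, "test1")
with open(src, "w", encoding="utf-8") as f:
    f.write("some content\n")

os.chmod(src, 0o640)                           # distinctive permission bits
os.utime(src, (1_000_000_000, 1_000_000_000))  # distinctive atime/mtime

shutil.copyfile(src, os.path.join(workdir, "a"))  # content only
shutil.copy(src, os.path.join(workdir, "b"))      # content + permission bits
shutil.copy2(src, os.path.join(workdir, "c"))     # content + permission bits + timestamps


def describe(name):
    """Return (permission bits as an octal string, mtime in whole seconds)."""
    st = os.stat(os.path.join(workdir, name))
    return oct(stat.S_IMODE(st.st_mode)), int(st.st_mtime)


for name in ("a", "b", "c"):
    print(name, describe(name))
# a keeps the umask-dependent default mode and a fresh mtime,
# b gets 0o640 with a fresh mtime, c gets 0o640 and mtime 1000000000
```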

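shutil.ignore_patterns(*patterns)

Builds an ignore function for `copytree()` that skips every name matching one of the glob-style patterns.

shutil.copytree(src, dst, symlinks=False, ignore=None)

Recursively copies a whole directory tree (directories and files alike).

For example: `copytree(source, destination, ignore=ignore_patterns('*.pyc', 'tmp*'))`

shutil.rmtree(path[, ignore_errors[, onerror]])

Recursively deletes a directory tree.

```python
shutil.copytree("test", "New_test")
shutil.rmtree("New_test")
```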
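A sketch of `copytree()` with `ignore_patterns()` followed by `rmtree()`, built in a temporary directory; the file names are invented to match the patterns from the example above.

```python
import os
import shutil
import tempfile

workdir = tempfile.mkdtemp()
source = os.path.join(workdir, "test")
os.makedirs(os.path.join(source, "sub"))
for rel in ("a.py", "a.pyc", "tmp_cache.txt", os.path.join("sub", "b.py")):
    with open(os.path.join(source, rel), "w") as f:
        f.write("x")

destination = os.path.join(workdir, "New_test")
shutil.copytree(source, destination, ignore=shutil.ignore_patterns("*.pyc", "tmp*"))

# Collect every copied file path relative to the destination
copied = sorted(
    os.path.relpath(os.path.join(dirpath, name), destination)
    for dirpath, _dirs, files in os.walk(destination)
    for name in files
)
print(copied)  # ['a.py', 'sub/b.py'] on POSIX

shutil.rmtree(destination)          # same spelling as the copytree() target
print(os.path.exists(destination))  # False
```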

```python
def rmtree(path, ignore_errors=False, onerror=None):
    """Recursively delete a directory tree.

    If ignore_errors is set, errors are ignored; otherwise, if onerror
    is set, it is called to handle the error with arguments (func,
    path, exc_info) where func is os.listdir, os.remove, or os.rmdir;
    path is the argument to that function that caused it to fail; and
    exc_info is a tuple returned by sys.exc_info().  If ignore_errors
    is false and onerror is None, an exception is raised.

    """
    if ignore_errors:
        def onerror(*args):
            pass
    elif onerror is None:
        def onerror(*args):
            raise
    try:
        if os.path.islink(path):
            # symlinks to directories are forbidden, see bug #1669
            raise OSError("Cannot call rmtree on a symbolic link")
    except OSError:
        onerror(os.path.islink, path, sys.exc_info())
        # can't continue even if onerror hook returns
        return
    names = []
    try:
        names = os.listdir(path)
    except os.error, err:
        onerror(os.listdir, path, sys.exc_info())
    for name in names:
        fullname = os.path.join(path, name)
        try:
            mode = os.lstat(fullname).st_mode
        except os.error:
            mode = 0
        if stat.S_ISDIR(mode):
            rmtree(fullname, ignore_errors, onerror)
        else:
            try:
                os.remove(fullname)
            except os.error, err:
                onerror(os.remove, fullname, sys.exc_info())
    try:
        os.rmdir(path)
    except os.error:
        onerror(os.rmdir, path, sys.exc_info())
```

shutil.move(src, dst)

Recursively moves a file or directory, similar to the Unix mv command.

```python
def move(src, dst):
    """Recursively move a file or directory to another location. This is
    similar to the Unix "mv" command.

    If the destination is a directory or a symlink to a directory, the source
    is moved inside the directory. The destination path must not already
    exist.

    If the destination already exists but is not a directory, it may be
    overwritten depending on os.rename() semantics.

    If the destination is on our current filesystem, then rename() is used.
    Otherwise, src is copied to the destination and then removed.
    A lot more could be done here...  A look at a mv.c shows a lot of
    the issues this implementation glosses over.

    """
    real_dst = dst
    if os.path.isdir(dst):
        if _samefile(src, dst):
            # We might be on a case insensitive filesystem,
            # perform the rename anyway.
            os.rename(src, dst)
            return

        real_dst = os.path.join(dst, _basename(src))
        if os.path.exists(real_dst):
            raise Error, "Destination path '%s' already exists" % real_dst
    try:
        os.rename(src, real_dst)
    except OSError:
        if os.path.isdir(src):
            if _destinsrc(src, dst):
                raise Error, "Cannot move a directory '%s' into itself '%s'." % (src, dst)
            copytree(src, real_dst, symlinks=True)
            rmtree(src)
        else:
            copy2(src, real_dst)
            os.unlink(src)
```
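A sketch of the common case where the destination is an existing directory, so the file is moved into it; the paths come from `tempfile` and the names are made up. In Python 3, `shutil.move()` returns the final destination path.

```python
import os
import shutil
import tempfile

workdir = tempfile.mkdtemp()
src_file = os.path.join(workdir, "report.txt")
target_dir = os.path.join(workdir, "archive")
os.mkdir(target_dir)
with open(src_file, "w") as f:
    f.write("data")

# The destination is an existing directory, so the file is moved *into* it
result = shutil.move(src_file, target_dir)
print(os.path.relpath(result, workdir))  # archive/report.txt on POSIX
print(os.path.exists(src_file))          # False
```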

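shutil.make_archive(base_name, format, ...)

```python
shutil.make_archive("new_name", "zip", r"E:\pycharmproject\pyday1\day5")
```

Creates an archive file (such as zip or tar) and returns its path.

· base_name: the archive's file name without the extension (which is added automatically), or a path to it. If only a name is given, the archive is saved in the current directory; otherwise it is saved at the given path,

e.g. www => saved in the current directory

e.g. /Users/wupeiqi/www => saved in /Users/wupeiqi/

· format: archive type: "zip", "tar", "bztar", or "gztar"

· root_dir: the directory to archive (defaults to the current directory)

· owner: user, defaults to the current user

· group: group, defaults to the current group

· logger: used for logging, usually a logging.Logger object

```python
# archive the files under /Users/wupeiqi/Downloads/test into the current program directory
import shutil
ret = shutil.make_archive("wwwwwwwwww", 'gztar', root_dir='/Users/wupeiqi/Downloads/test')

# archive the files under /Users/wupeiqi/Downloads/test into /Users/wupeiqi/
import shutil
ret = shutil.make_archive("/Users/wupeiqi/wwwwwwwwww", 'gztar', root_dir='/Users/wupeiqi/Downloads/test')
```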
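A sketch that archives a throwaway directory and then lists the archive with `zipfile`; the directory stands in for the author's day5 folder and all names are hypothetical.

```python
import os
import shutil
import tempfile
import zipfile

workdir = tempfile.mkdtemp()
source = os.path.join(workdir, "day5")
os.makedirs(source)
with open(os.path.join(source, "test1"), "w") as f:
    f.write("hello")

# base_name has no extension; make_archive appends ".zip" and returns the full path
archive_path = shutil.make_archive(os.path.join(workdir, "new_name"), "zip", root_dir=source)
print(os.path.basename(archive_path))  # new_name.zip

with zipfile.ZipFile(archive_path) as zf:
    names = zf.namelist()
print(names)  # entries are stored relative to root_dir, e.g. ['test1']
```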

```python
def make_archive(base_name, format, root_dir=None, base_dir=None, verbose=0,
                 dry_run=0, owner=None, group=None, logger=None):
    """Create an archive file (eg. zip or tar).

    'base_name' is the name of the file to create, minus any format-specific
    extension; 'format' is the archive format: one of "zip", "tar", "bztar"
    or "gztar".

    'root_dir' is a directory that will be the root directory of the
    archive; ie. we typically chdir into 'root_dir' before creating the
    archive.  'base_dir' is the directory where we start archiving from;
    ie. 'base_dir' will be the common prefix of all files and
    directories in the archive.  'root_dir' and 'base_dir' both default
    to the current directory.  Returns the name of the archive file.

    'owner' and 'group' are used when creating a tar archive. By default,
    uses the current owner and group.
    """
    save_cwd = os.getcwd()
    if root_dir is not None:
        if logger is not None:
            logger.debug("changing into '%s'", root_dir)
        base_name = os.path.abspath(base_name)
        if not dry_run:
            os.chdir(root_dir)

    if base_dir is None:
        base_dir = os.curdir

    kwargs = {'dry_run': dry_run, 'logger': logger}

    try:
        format_info = _ARCHIVE_FORMATS[format]
    except KeyError:
        raise ValueError, "unknown archive format '%s'" % format

    func = format_info[0]
    for arg, val in format_info[1]:
        kwargs[arg] = val

    if format != 'zip':
        kwargs['owner'] = owner
        kwargs['group'] = group

    try:
        filename = func(base_name, base_dir, **kwargs)
    finally:
        if root_dir is not None:
            if logger is not None:
                logger.debug("changing back to '%s'", save_cwd)
            os.chdir(save_cwd)

    return filename
```

shutil handles archives by delegating to the zipfile and tarfile modules (the `ZipFile` and `TarFile` classes). In detail:

```python
import zipfile

# compress
z = zipfile.ZipFile('laxi.zip', 'w')
z.write('a.log')
z.write('data.data')
z.close()
```

```python
#!/usr/bin/env python
# -*- coding: utf-8 -*-
import zipfile

z = zipfile.ZipFile("day5.zip", "w")
z.write("test1")
z.close()
```

```python
import zipfile

# extract
z = zipfile.ZipFile('laxi.zip', 'r')
z.extractall()
z.close()
```

```python
import tarfile

# compress
tar = tarfile.open('your.tar', 'w')
tar.add('/Users/wupeiqi/PycharmProjects/bbs2.zip', arcname='bbs2.zip')
tar.add('/Users/wupeiqi/PycharmProjects/cmdb.zip', arcname='cmdb.zip')
tar.close()

# extract
tar = tarfile.open('your.tar', 'r')
tar.extractall()  # a target directory can be passed as an argument
tar.close()
```
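The same operations written with `with` blocks, which close the archives even if an error occurs. A sketch assuming two small files created in a temporary directory; `arcname` keeps the temporary path out of the archive. Newer Python versions may warn that `tarfile.extractall()` should be given a `filter` argument.

```python
import os
import tarfile
import tempfile
import zipfile

workdir = tempfile.mkdtemp()
for name in ("a.log", "data.data"):
    with open(os.path.join(workdir, name), "w") as f:
        f.write(name)

# compress into a zip file, storing only the bare file names
zip_path = os.path.join(workdir, "laxi.zip")
with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as z:
    for name in ("a.log", "data.data"):
        z.write(os.path.join(workdir, name), arcname=name)

# extract the zip file into a separate directory
unzip_dir = os.path.join(workdir, "unzipped")
with zipfile.ZipFile(zip_path, "r") as z:
    zip_names = z.namelist()  # ['a.log', 'data.data']
    z.extractall(unzip_dir)

# wrap the zip in a tar archive, then extract it again
tar_path = os.path.join(workdir, "your.tar")
with tarfile.open(tar_path, "w") as tar:
    tar.add(zip_path, arcname="laxi.zip")

untar_dir = os.path.join(workdir, "untarred")
with tarfile.open(tar_path, "r") as tar:
    tar_names = tar.getnames()  # ['laxi.zip']
    tar.extractall(untar_dir)

print(zip_names, tar_names)
```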

Source of the `zipfile.ZipFile` class (Python 2.7 standard library):

```python
class ZipFile(object):
    """ Class with methods to open, read, write, close, list zip files.

    z = ZipFile(file, mode="r", compression=ZIP_STORED, allowZip64=False)

    file: Either the path to the file, or a file-like object.
          If it is a path, the file will be opened and closed by ZipFile.
    mode: The mode can be either read "r", write "w" or append "a".
    compression: ZIP_STORED (no compression) or ZIP_DEFLATED (requires zlib).
    allowZip64: if True ZipFile will create files with ZIP64 extensions when
                needed, otherwise it will raise an exception when this would
                be necessary.

    """

    fp = None                   # Set here since __del__ checks it

    def __init__(self, file, mode="r", compression=ZIP_STORED, allowZip64=False):
        """Open the ZIP file with mode read "r", write "w" or append "a"."""
        if mode not in ("r", "w", "a"):
            raise RuntimeError('ZipFile() requires mode "r", "w", or "a"')

        if compression == ZIP_STORED:
            pass
        elif compression == ZIP_DEFLATED:
            if not zlib:
                raise RuntimeError,\
                      "Compression requires the (missing) zlib module"
        else:
            raise RuntimeError, "That compression method is not supported"

        self._allowZip64 = allowZip64
        self._didModify = False
        self.debug = 0  # Level of printing: 0 through 3
        self.NameToInfo = {}    # Find file info given name
        self.filelist = []      # List of ZipInfo instances for archive
        self.compression = compression  # Method of compression
        self.mode = key = mode.replace('b', '')[0]
        self.pwd = None
        self._comment = ''

        # Check if we were passed a file-like object
        if isinstance(file, basestring):
            self._filePassed = 0
            self.filename = file
            modeDict = {'r' : 'rb', 'w': 'wb', 'a' : 'r+b'}
            try:
                self.fp = open(file, modeDict[mode])
            except IOError:
                if mode == 'a':
                    mode = key = 'w'
                    self.fp = open(file, modeDict[mode])
                else:
                    raise
        else:
            self._filePassed = 1
            self.fp = file
            self.filename = getattr(file, 'name', None)

        try:
            if key == 'r':
                self._RealGetContents()
            elif key == 'w':
                # set the modified flag so central directory gets written
                # even if no files are added to the archive
                self._didModify = True
            elif key == 'a':
                try:
                    # See if file is a zip file
                    self._RealGetContents()
                    # seek to start of directory and overwrite
                    self.fp.seek(self.start_dir, 0)
                except BadZipfile:
                    # file is not a zip file, just append
                    self.fp.seek(0, 2)

                    # set the modified flag so central directory gets written
                    # even if no files are added to the archive
                    self._didModify = True
            else:
                raise RuntimeError('Mode must be "r", "w" or "a"')
        except:
            fp = self.fp
            self.fp = None
            if not self._filePassed:
                fp.close()
            raise

    def __enter__(self):
        return self

    def __exit__(self, type, value, traceback):
        self.close()
```

```python
    def _RealGetContents(self):
        """Read in the table of contents for the ZIP file."""
        fp = self.fp
        try:
            endrec = _EndRecData(fp)
        except IOError:
            raise BadZipfile("File is not a zip file")
        if not endrec:
            raise BadZipfile, "File is not a zip file"
        if self.debug > 1:
            print endrec
        size_cd = endrec[_ECD_SIZE]             # bytes in central directory
        offset_cd = endrec[_ECD_OFFSET]         # offset of central directory
        self._comment = endrec[_ECD_COMMENT]    # archive comment

        # "concat" is zero, unless zip was concatenated to another file
        concat = endrec[_ECD_LOCATION] - size_cd - offset_cd
        if endrec[_ECD_SIGNATURE] == stringEndArchive64:
            # If Zip64 extension structures are present, account for them
            concat -= (sizeEndCentDir64 + sizeEndCentDir64Locator)

        if self.debug > 2:
            inferred = concat + offset_cd
            print "given, inferred, offset", offset_cd, inferred, concat
        # self.start_dir:  Position of start of central directory
        self.start_dir = offset_cd + concat
        fp.seek(self.start_dir, 0)
        data = fp.read(size_cd)
        fp = cStringIO.StringIO(data)
        total = 0
        while total < size_cd:
            centdir = fp.read(sizeCentralDir)
            if len(centdir) != sizeCentralDir:
                raise BadZipfile("Truncated central directory")
            centdir = struct.unpack(structCentralDir, centdir)
            if centdir[_CD_SIGNATURE] != stringCentralDir:
                raise BadZipfile("Bad magic number for central directory")
            if self.debug > 2:
                print centdir
            filename = fp.read(centdir[_CD_FILENAME_LENGTH])
            # Create ZipInfo instance to store file information
            x = ZipInfo(filename)
            x.extra = fp.read(centdir[_CD_EXTRA_FIELD_LENGTH])
            x.comment = fp.read(centdir[_CD_COMMENT_LENGTH])
            x.header_offset = centdir[_CD_LOCAL_HEADER_OFFSET]
            (x.create_version, x.create_system, x.extract_version, x.reserved,
                x.flag_bits, x.compress_type, t, d,
                x.CRC, x.compress_size, x.file_size) = centdir[1:12]
            x.volume, x.internal_attr, x.external_attr = centdir[15:18]
            # Convert date/time code to (year, month, day, hour, min, sec)
            x._raw_time = t
            x.date_time = ( (d>>9)+1980, (d>>5)&0xF, d&0x1F,
                                     t>>11, (t>>5)&0x3F, (t&0x1F) * 2 )

            x._decodeExtra()
            x.header_offset = x.header_offset + concat
            x.filename = x._decodeFilename()
            self.filelist.append(x)
            self.NameToInfo[x.filename] = x

            # update total bytes read from central directory
            total = (total + sizeCentralDir + centdir[_CD_FILENAME_LENGTH]
                     + centdir[_CD_EXTRA_FIELD_LENGTH]
                     + centdir[_CD_COMMENT_LENGTH])

            if self.debug > 2:
                print "total", total
```

```python
    def namelist(self):
        """Return a list of file names in the archive."""
        l = []
        for data in self.filelist:
            l.append(data.filename)
        return l

    def infolist(self):
        """Return a list of class ZipInfo instances for files in the
        archive."""
        return self.filelist

    def printdir(self):
        """Print a table of contents for the zip file."""
        print "%-46s %19s %12s" % ("File Name", "Modified ", "Size")
        for zinfo in self.filelist:
            date = "%d-%02d-%02d %02d:%02d:%02d" % zinfo.date_time[:6]
            print "%-46s %s %12d" % (zinfo.filename, date, zinfo.file_size)

    def testzip(self):
        """Read all the files and check the CRC."""
        chunk_size = 2 ** 20
        for zinfo in self.filelist:
            try:
                # Read by chunks, to avoid an OverflowError or a
                # MemoryError with very large embedded files.
                with self.open(zinfo.filename, "r") as f:
                    while f.read(chunk_size):     # Check CRC-32
                        pass
            except BadZipfile:
                return zinfo.filename

    def getinfo(self, name):
        """Return the instance of ZipInfo given 'name'."""
        info = self.NameToInfo.get(name)
        if info is None:
            raise KeyError(
                'There is no item named %r in the archive' % name)

        return info

    def setpassword(self, pwd):
        """Set default password for encrypted files."""
        self.pwd = pwd

    @property
    def comment(self):
        """The comment text associated with the ZIP file."""
        return self._comment

    @comment.setter
    def comment(self, comment):
        # check for valid comment length
        if len(comment) > ZIP_MAX_COMMENT:
            import warnings
            warnings.warn('Archive comment is too long; truncating to %d bytes'
                          % ZIP_MAX_COMMENT, stacklevel=2)
            comment = comment[:ZIP_MAX_COMMENT]
        self._comment = comment
        self._didModify = True
```

```python
    def read(self, name, pwd=None):
        """Return file bytes (as a string) for name."""
        return self.open(name, "r", pwd).read()

    def open(self, name, mode="r", pwd=None):
        """Return file-like object for 'name'."""
        if mode not in ("r", "U", "rU"):
            raise RuntimeError, 'open() requires mode "r", "U", or "rU"'
        if not self.fp:
            raise RuntimeError, \
                  "Attempt to read ZIP archive that was already closed"

        # Only open a new file for instances where we were not
        # given a file object in the constructor
        if self._filePassed:
            zef_file = self.fp
            should_close = False
        else:
            zef_file = open(self.filename, 'rb')
            should_close = True

        try:
            # Make sure we have an info object
            if isinstance(name, ZipInfo):
                # 'name' is already an info object
                zinfo = name
            else:
                # Get info object for name
                zinfo = self.getinfo(name)

            zef_file.seek(zinfo.header_offset, 0)

            # Skip the file header:
            fheader = zef_file.read(sizeFileHeader)
            if len(fheader) != sizeFileHeader:
                raise BadZipfile("Truncated file header")
            fheader = struct.unpack(structFileHeader, fheader)
            if fheader[_FH_SIGNATURE] != stringFileHeader:
                raise BadZipfile("Bad magic number for file header")

            fname = zef_file.read(fheader[_FH_FILENAME_LENGTH])
            if fheader[_FH_EXTRA_FIELD_LENGTH]:
                zef_file.read(fheader[_FH_EXTRA_FIELD_LENGTH])

            if fname != zinfo.orig_filename:
                raise BadZipfile, \
                        'File name in directory "%s" and header "%s" differ.' % (
                            zinfo.orig_filename, fname)

            # check for encrypted flag & handle password
            is_encrypted = zinfo.flag_bits & 0x1
            zd = None
            if is_encrypted:
                if not pwd:
                    pwd = self.pwd
                if not pwd:
                    raise RuntimeError, "File %s is encrypted, " \
                        "password required for extraction" % name

                zd = _ZipDecrypter(pwd)
                # The first 12 bytes in the cypher stream is an encryption header
                #  used to strengthen the algorithm. The first 11 bytes are
                #  completely random, while the 12th contains the MSB of the CRC,
                #  or the MSB of the file time depending on the header type
                #  and is used to check the correctness of the password.
                bytes = zef_file.read(12)
                h = map(zd, bytes[0:12])
                if zinfo.flag_bits & 0x8:
                    # compare against the file type from extended local headers
                    check_byte = (zinfo._raw_time >> 8) & 0xff
                else:
                    # compare against the CRC otherwise
                    check_byte = (zinfo.CRC >> 24) & 0xff
                if ord(h[11]) != check_byte:
                    raise RuntimeError("Bad password for file", name)

            return ZipExtFile(zef_file, mode, zinfo, zd,
                    close_fileobj=should_close)
        except:
            if should_close:
                zef_file.close()
            raise
```

```python
    def extract(self, member, path=None, pwd=None):
        """Extract a member from the archive to the current working directory,
           using its full name. Its file information is extracted as accurately
           as possible. `member' may be a filename or a ZipInfo object. You can
           specify a different directory using `path'.
        """
        if not isinstance(member, ZipInfo):
            member = self.getinfo(member)

        if path is None:
            path = os.getcwd()

        return self._extract_member(member, path, pwd)

    def extractall(self, path=None, members=None, pwd=None):
        """Extract all members from the archive to the current working
           directory. `path' specifies a different directory to extract to.
           `members' is optional and must be a subset of the list returned
           by namelist().
        """
        if members is None:
            members = self.namelist()

        for zipinfo in members:
            self.extract(zipinfo, path, pwd)

    def _extract_member(self, member, targetpath, pwd):
        """Extract the ZipInfo object 'member' to a physical
           file on the path targetpath.
        """
        # build the destination pathname, replacing
        # forward slashes to platform specific separators.
        arcname = member.filename.replace('/', os.path.sep)

        if os.path.altsep:
            arcname = arcname.replace(os.path.altsep, os.path.sep)
        # interpret absolute pathname as relative, remove drive letter or
        # UNC path, redundant separators, "." and ".." components.
        arcname = os.path.splitdrive(arcname)[1]
        arcname = os.path.sep.join(x for x in arcname.split(os.path.sep)
                    if x not in ('', os.path.curdir, os.path.pardir))
        if os.path.sep == '\\':
            # filter illegal characters on Windows
            illegal = ':<>|"?*'
            if isinstance(arcname, unicode):
                table = {ord(c): ord('_') for c in illegal}
            else:
                table = string.maketrans(illegal, '_' * len(illegal))
            arcname = arcname.translate(table)
            # remove trailing dots
            arcname = (x.rstrip('.') for x in arcname.split(os.path.sep))
            arcname = os.path.sep.join(x for x in arcname if x)

        targetpath = os.path.join(targetpath, arcname)
        targetpath = os.path.normpath(targetpath)

        # Create all upper directories if necessary.
        upperdirs = os.path.dirname(targetpath)
        if upperdirs and not os.path.exists(upperdirs):
            os.makedirs(upperdirs)

        if member.filename[-1] == '/':
            if not os.path.isdir(targetpath):
                os.mkdir(targetpath)
            return targetpath

        with self.open(member, pwd=pwd) as source, \
             file(targetpath, "wb") as target:
            shutil.copyfileobj(source, target)

        return targetpath
```

```python
    def _writecheck(self, zinfo):
        """Check for errors before writing a file to the archive."""
        if zinfo.filename in self.NameToInfo:
            import warnings
            warnings.warn('Duplicate name: %r' % zinfo.filename, stacklevel=3)
        if self.mode not in ("w", "a"):
            raise RuntimeError, 'write() requires mode "w" or "a"'
        if not self.fp:
            raise RuntimeError, \
                  "Attempt to write ZIP archive that was already closed"
        if zinfo.compress_type == ZIP_DEFLATED and not zlib:
            raise RuntimeError, \
                  "Compression requires the (missing) zlib module"
        if zinfo.compress_type not in (ZIP_STORED, ZIP_DEFLATED):
            raise RuntimeError, \
                  "That compression method is not supported"
        if not self._allowZip64:
            requires_zip64 = None
            if len(self.filelist) >= ZIP_FILECOUNT_LIMIT:
                requires_zip64 = "Files count"
            elif zinfo.file_size > ZIP64_LIMIT:
                requires_zip64 = "Filesize"
            elif zinfo.header_offset > ZIP64_LIMIT:
                requires_zip64 = "Zipfile size"
            if requires_zip64:
                raise LargeZipFile(requires_zip64 +
                                   " would require ZIP64 extensions")
```

```python
    def write(self, filename, arcname=None, compress_type=None):
        """Put the bytes from filename into the archive under the name
        arcname."""
        if not self.fp:
            raise RuntimeError(
                  "Attempt to write to ZIP archive that was already closed")

        st = os.stat(filename)
        isdir = stat.S_ISDIR(st.st_mode)
        mtime = time.localtime(st.st_mtime)
        date_time = mtime[0:6]
        # Create ZipInfo instance to store file information
        if arcname is None:
            arcname = filename
        arcname = os.path.normpath(os.path.splitdrive(arcname)[1])
        while arcname[0] in (os.sep, os.altsep):
            arcname = arcname[1:]
        if isdir:
            arcname += '/'
        zinfo = ZipInfo(arcname, date_time)
        zinfo.external_attr = (st[0] & 0xFFFF) << 16L      # Unix attributes
        if compress_type is None:
            zinfo.compress_type = self.compression
        else:
            zinfo.compress_type = compress_type

        zinfo.file_size = st.st_size
        zinfo.flag_bits = 0x00
        zinfo.header_offset = self.fp.tell()    # Start of header bytes

        self._writecheck(zinfo)
        self._didModify = True

        if isdir:
            zinfo.file_size = 0
            zinfo.compress_size = 0
            zinfo.CRC = 0
            zinfo.external_attr |= 0x10  # MS-DOS directory flag
            self.filelist.append(zinfo)
            self.NameToInfo[zinfo.filename] = zinfo
            self.fp.write(zinfo.FileHeader(False))
            return

        with open(filename, "rb") as fp:
            # Must overwrite CRC and sizes with correct data later
            zinfo.CRC = CRC = 0
            zinfo.compress_size = compress_size = 0
            # Compressed size can be larger than uncompressed size
            zip64 = self._allowZip64 and \
                    zinfo.file_size * 1.05 > ZIP64_LIMIT
            self.fp.write(zinfo.FileHeader(zip64))
            if zinfo.compress_type == ZIP_DEFLATED:
                cmpr = zlib.compressobj(zlib.Z_DEFAULT_COMPRESSION,
                     zlib.DEFLATED, -15)
            else:
                cmpr = None
            file_size = 0
            while 1:
                buf = fp.read(1024 * 8)
                if not buf:
                    break
                file_size = file_size + len(buf)
                CRC = crc32(buf, CRC) & 0xffffffff
                if cmpr:
                    buf = cmpr.compress(buf)
                    compress_size = compress_size + len(buf)
                self.fp.write(buf)
        if cmpr:
            buf = cmpr.flush()
            compress_size = compress_size + len(buf)
            self.fp.write(buf)
            zinfo.compress_size = compress_size
        else:
            zinfo.compress_size = file_size
        zinfo.CRC = CRC
        zinfo.file_size = file_size
        if not zip64 and self._allowZip64:
            if file_size > ZIP64_LIMIT:
                raise RuntimeError('File size has increased during compressing')
            if compress_size > ZIP64_LIMIT:
                raise RuntimeError('Compressed size larger than uncompressed size')
        # Seek backwards and write file header (which will now include
        # correct CRC and file sizes)
        position = self.fp.tell()       # Preserve current position in file
        self.fp.seek(zinfo.header_offset, 0)
        self.fp.write(zinfo.FileHeader(zip64))
        self.fp.seek(position, 0)
        self.filelist.append(zinfo)
        self.NameToInfo[zinfo.filename] = zinfo
```

    def writestr(self, zinfo_or_arcname, bytes, compress_type=None):
        """Write a file into the archive.  The contents is the string
        'bytes'.  'zinfo_or_arcname' is either a ZipInfo instance or
        the name of the file in the archive."""
        if not isinstance(zinfo_or_arcname, ZipInfo):
            zinfo = ZipInfo(filename=zinfo_or_arcname,
                            date_time=time.localtime(time.time())[:6])
            zinfo.compress_type = self.compression
            if zinfo.filename[-1] == '/':
                zinfo.external_attr = 0o40775 << 16   # drwxrwxr-x
                zinfo.external_attr |= 0x10           # MS-DOS directory flag
            else:
                zinfo.external_attr = 0o600 << 16     # ?rw-------
        else:
            zinfo = zinfo_or_arcname

        if not self.fp:
            raise RuntimeError(
                  "Attempt to write to ZIP archive that was already closed")

        if compress_type is not None:
            zinfo.compress_type = compress_type

        zinfo.file_size = len(bytes)            # Uncompressed size
        zinfo.header_offset = self.fp.tell()    # Start of header bytes
        self._writecheck(zinfo)
        self._didModify = True
        zinfo.CRC = crc32(bytes) & 0xffffffff       # CRC-32 checksum
        if zinfo.compress_type == ZIP_DEFLATED:
            co = zlib.compressobj(zlib.Z_DEFAULT_COMPRESSION,
                 zlib.DEFLATED, -15)
            bytes = co.compress(bytes) + co.flush()
            zinfo.compress_size = len(bytes)    # Compressed size
        else:
            zinfo.compress_size = zinfo.file_size
        zip64 = zinfo.file_size > ZIP64_LIMIT or \
                zinfo.compress_size > ZIP64_LIMIT
        if zip64 and not self._allowZip64:
            raise LargeZipFile("Filesize would require ZIP64 extensions")
        self.fp.write(zinfo.FileHeader(zip64))
        self.fp.write(bytes)
        if zinfo.flag_bits & 0x08:
            # Write CRC and file sizes after the file data
            fmt = '<LQQ' if zip64 else '<LLL'
            self.fp.write(struct.pack(fmt, zinfo.CRC, zinfo.compress_size,
                  zinfo.file_size))
        self.fp.flush()
        self.filelist.append(zinfo)
        self.NameToInfo[zinfo.filename] = zinfo

    def __del__(self):
        """Call the "close()" method in case the user forgot."""
        self.close()

    def close(self):
        """Close the file, and for mode "w" and "a" write the ending
        records."""
        if self.fp is None:
            return

        try:
            if self.mode in ("w", "a") and self._didModify: # write ending records
                pos1 = self.fp.tell()
                for zinfo in self.filelist:         # write central directory
                    dt = zinfo.date_time
                    dosdate = (dt[0] - 1980) << 9 | dt[1] << 5 | dt[2]
                    dostime = dt[3] << 11 | dt[4] << 5 | (dt[5] // 2)
                    extra = []
                    if zinfo.file_size > ZIP64_LIMIT \
                            or zinfo.compress_size > ZIP64_LIMIT:
                        extra.append(zinfo.file_size)
                        extra.append(zinfo.compress_size)
                        file_size = 0xffffffff
                        compress_size = 0xffffffff
                    else:
                        file_size = zinfo.file_size
                        compress_size = zinfo.compress_size

                    if zinfo.header_offset > ZIP64_LIMIT:
                        extra.append(zinfo.header_offset)
                        header_offset = 0xffffffffL
                    else:
                        header_offset = zinfo.header_offset

                    extra_data = zinfo.extra
                    if extra:
                        # Append a ZIP64 field to the extra's
                        extra_data = struct.pack(
                                '<HH' + 'Q'*len(extra),
                                1, 8*len(extra), *extra) + extra_data
                        extract_version = max(45, zinfo.extract_version)
                        create_version = max(45, zinfo.create_version)
                    else:
                        extract_version = zinfo.extract_version
                        create_version = zinfo.create_version

                    try:
                        filename, flag_bits = zinfo._encodeFilenameFlags()
                        centdir = struct.pack(structCentralDir,
                         stringCentralDir, create_version,
                         zinfo.create_system, extract_version, zinfo.reserved,
                         flag_bits, zinfo.compress_type, dostime, dosdate,
                         zinfo.CRC, compress_size, file_size,
                         len(filename), len(extra_data), len(zinfo.comment),
                         0, zinfo.internal_attr, zinfo.external_attr,
                         header_offset)
                    except DeprecationWarning:
                        print >>sys.stderr, (structCentralDir,
                         stringCentralDir, create_version,
                         zinfo.create_system, extract_version, zinfo.reserved,
                         zinfo.flag_bits, zinfo.compress_type, dostime, dosdate,
                         zinfo.CRC, compress_size, file_size,
                         len(zinfo.filename), len(extra_data), len(zinfo.comment),
                         0, zinfo.internal_attr, zinfo.external_attr,
                         header_offset)
                        raise
                    self.fp.write(centdir)
                    self.fp.write(filename)
                    self.fp.write(extra_data)
                    self.fp.write(zinfo.comment)

                pos2 = self.fp.tell()
                # Write end-of-zip-archive record
                centDirCount = len(self.filelist)
                centDirSize = pos2 - pos1
                centDirOffset = pos1
                requires_zip64 = None
                if centDirCount > ZIP_FILECOUNT_LIMIT:
                    requires_zip64 = "Files count"
                elif centDirOffset > ZIP64_LIMIT:
                    requires_zip64 = "Central directory offset"
                elif centDirSize > ZIP64_LIMIT:
                    requires_zip64 = "Central directory size"
                if requires_zip64:
                    # Need to write the ZIP64 end-of-archive records
                    if not self._allowZip64:
                        raise LargeZipFile(requires_zip64 +
                                           " would require ZIP64 extensions")
                    zip64endrec = struct.pack(
                            structEndArchive64, stringEndArchive64,
                            44, 45, 45, 0, 0, centDirCount, centDirCount,
                            centDirSize, centDirOffset)
                    self.fp.write(zip64endrec)

                    zip64locrec = struct.pack(
                            structEndArchive64Locator,
                            stringEndArchive64Locator, 0, pos2, 1)
                    self.fp.write(zip64locrec)
                    centDirCount = min(centDirCount, 0xFFFF)
                    centDirSize = min(centDirSize, 0xFFFFFFFF)
                    centDirOffset = min(centDirOffset, 0xFFFFFFFF)

                endrec = struct.pack(structEndArchive, stringEndArchive,
                                    0, 0, centDirCount, centDirCount,
                                    centDirSize, centDirOffset, len(self._comment))
                self.fp.write(endrec)
                self.fp.write(self._comment)
                self.fp.flush()
        finally:
            fp = self.fp
            self.fp = None
            if not self._filePassed:
                fp.close()
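The ZipFile methods above cover the writing side: writestr() adds a member from in-memory data, and close() writes the central directory. A minimal Python 3 sketch of driving them from user code, assuming an in-memory io.BytesIO archive and made-up member names:

```python
import io
import zipfile

def build_zip():
    """Create a small ZIP archive in memory and return its raw bytes."""
    buf = io.BytesIO()
    # mode "w" creates a new archive; ZIP_DEFLATED compresses with zlib
    with zipfile.ZipFile(buf, "w", compression=zipfile.ZIP_DEFLATED) as zf:
        zf.writestr("hello.txt", "hello zipfile\n")   # str data is stored as UTF-8
        zf.writestr("data/numbers.txt", "1 2 3\n")
    # leaving the with-block calls close(), which writes the central directory
    return buf.getvalue()

def read_zip(raw):
    """Return {member name: decoded text} for every member of the archive."""
    with zipfile.ZipFile(io.BytesIO(raw)) as zf:
        return {name: zf.read(name).decode("utf-8") for name in zf.namelist()}

if __name__ == "__main__":
    for name, text in read_zip(build_zip()).items():
        print(name, repr(text))
```

For files on disk, ZipFile.write(filename, arcname) adds an existing file and ZipFile.extractall(path) unpacks the whole archive.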

class TarFile(object):
    """The TarFile Class provides an interface to tar archives.
    """

    debug = 0                   # May be set from 0 (no msgs) to 3 (all msgs)

    dereference = False         # If true, add content of linked file to the
                                # tar file, else the link.

    ignore_zeros = False        # If true, skips empty or invalid blocks and
                                # continues processing.

    errorlevel = 1              # If 0, fatal errors only appear in debug
                                # messages (if debug >= 0). If > 0, errors
                                # are passed to the caller as exceptions.

    format = DEFAULT_FORMAT     # The format to use when creating an archive.

    encoding = ENCODING         # Encoding for 8-bit character strings.

    errors = None               # Error handler for unicode conversion.

    tarinfo = TarInfo           # The default TarInfo class to use.

    fileobject = ExFileObject   # The default ExFileObject class to use.

    def __init__(self, name=None, mode="r", fileobj=None, format=None,
            tarinfo=None, dereference=None, ignore_zeros=None, encoding=None,
            errors=None, pax_headers=None, debug=None, errorlevel=None):
        """Open an (uncompressed) tar archive `name'. `mode' is either 'r' to
           read from an existing archive, 'a' to append data to an existing
           file or 'w' to create a new file overwriting an existing one. `mode'
           defaults to 'r'.
           If `fileobj' is given, it is used for reading or writing data. If it
           can be determined, `mode' is overridden by `fileobj's mode.
           `fileobj' is not closed, when TarFile is closed.
        """
        modes = {"r": "rb", "a": "r+b", "w": "wb"}
        if mode not in modes:
            raise ValueError("mode must be 'r', 'a' or 'w'")
        self.mode = mode
        self._mode = modes[mode]

        if not fileobj:
            if self.mode == "a" and not os.path.exists(name):
                # Create nonexistent files in append mode.
                self.mode = "w"
                self._mode = "wb"
            fileobj = bltn_open(name, self._mode)
            self._extfileobj = False
        else:
            if name is None and hasattr(fileobj, "name"):
                name = fileobj.name
            if hasattr(fileobj, "mode"):
                self._mode = fileobj.mode
            self._extfileobj = True
        self.name = os.path.abspath(name) if name else None
        self.fileobj = fileobj

        # Init attributes.
        if format is not None:
            self.format = format
        if tarinfo is not None:
            self.tarinfo = tarinfo
        if dereference is not None:
            self.dereference = dereference
        if ignore_zeros is not None:
            self.ignore_zeros = ignore_zeros
        if encoding is not None:
            self.encoding = encoding

        if errors is not None:
            self.errors = errors
        elif mode == "r":
            self.errors = "utf-8"
        else:
            self.errors = "strict"

        if pax_headers is not None and self.format == PAX_FORMAT:
            self.pax_headers = pax_headers
        else:
            self.pax_headers = {}

        if debug is not None:
            self.debug = debug
        if errorlevel is not None:
            self.errorlevel = errorlevel

        # Init datastructures.
        self.closed = False
        self.members = []       # list of members as TarInfo objects
        self._loaded = False    # flag if all members have been read
        self.offset = self.fileobj.tell()
                                # current position in the archive file
        self.inodes = {}        # dictionary caching the inodes of
                                # archive members already added

        try:
            if self.mode == "r":
                self.firstmember = None
                self.firstmember = self.next()

            if self.mode == "a":
                # Move to the end of the archive,
                # before the first empty block.
                while True:
                    self.fileobj.seek(self.offset)
                    try:
                        tarinfo = self.tarinfo.fromtarfile(self)
                        self.members.append(tarinfo)
                    except EOFHeaderError:
                        self.fileobj.seek(self.offset)
                        break
                    except HeaderError, e:
                        raise ReadError(str(e))

            if self.mode in "aw":
                self._loaded = True

                if self.pax_headers:
                    buf = self.tarinfo.create_pax_global_header(self.pax_headers.copy())
                    self.fileobj.write(buf)
                    self.offset += len(buf)
        except:
            if not self._extfileobj:
                self.fileobj.close()
            self.closed = True
            raise

    def _getposix(self):
        return self.format == USTAR_FORMAT

    def _setposix(self, value):
        import warnings
        warnings.warn("use the format attribute instead", DeprecationWarning, 2)
        if value:
            self.format = USTAR_FORMAT
        else:
            self.format = GNU_FORMAT

    posix = property(_getposix, _setposix)

    #--------------------------------------------------------------------------
    # Below are the classmethods which act as alternate constructors to the
    # TarFile class. The open() method is the only one that is needed for
    # public use; it is the "super"-constructor and is able to select an
    # adequate "sub"-constructor for a particular compression using the mapping
    # from OPEN_METH.
    #
    # This concept allows one to subclass TarFile without losing the comfort of
    # the super-constructor. A sub-constructor is registered and made available
    # by adding it to the mapping in OPEN_METH.

    @classmethod
    def open(cls, name=None, mode="r", fileobj=None, bufsize=RECORDSIZE, **kwargs):
        """Open a tar archive for reading, writing or appending. Return
           an appropriate TarFile class.

           mode:
           'r' or 'r:*' open for reading with transparent compression
           'r:'         open for reading exclusively uncompressed
           'r:gz'       open for reading with gzip compression
           'r:bz2'      open for reading with bzip2 compression
           'a' or 'a:'  open for appending, creating the file if necessary
           'w' or 'w:'  open for writing without compression
           'w:gz'       open for writing with gzip compression
           'w:bz2'      open for writing with bzip2 compression

           'r|*'        open a stream of tar blocks with transparent compression
           'r|'         open an uncompressed stream of tar blocks for reading
           'r|gz'       open a gzip compressed stream of tar blocks
           'r|bz2'      open a bzip2 compressed stream of tar blocks
           'w|'         open an uncompressed stream for writing
           'w|gz'       open a gzip compressed stream for writing
           'w|bz2'      open a bzip2 compressed stream for writing
        """

        if not name and not fileobj:
            raise ValueError("nothing to open")

        if mode in ("r", "r:*"):
            # Find out which *open() is appropriate for opening the file.
            for comptype in cls.OPEN_METH:
                func = getattr(cls, cls.OPEN_METH[comptype])
                if fileobj is not None:
                    saved_pos = fileobj.tell()
                try:
                    return func(name, "r", fileobj, **kwargs)
                except (ReadError, CompressionError), e:
                    if fileobj is not None:
                        fileobj.seek(saved_pos)
                    continue
            raise ReadError("file could not be opened successfully")

        elif ":" in mode:
            filemode, comptype = mode.split(":", 1)
            filemode = filemode or "r"
            comptype = comptype or "tar"

            # Select the *open() function according to
            # given compression.
            if comptype in cls.OPEN_METH:
                func = getattr(cls, cls.OPEN_METH[comptype])
            else:
                raise CompressionError("unknown compression type %r" % comptype)
            return func(name, filemode, fileobj, **kwargs)

        elif "|" in mode:
            filemode, comptype = mode.split("|", 1)
            filemode = filemode or "r"
            comptype = comptype or "tar"

            if filemode not in ("r", "w"):
                raise ValueError("mode must be 'r' or 'w'")

            stream = _Stream(name, filemode, comptype, fileobj, bufsize)
            try:
                t = cls(name, filemode, stream, **kwargs)
            except:
                stream.close()
                raise
            t._extfileobj = False
            return t

        elif mode in ("a", "w"):
            return cls.taropen(name, mode, fileobj, **kwargs)

        raise ValueError("undiscernible mode")

    @classmethod
    def taropen(cls, name, mode="r", fileobj=None, **kwargs):
        """Open uncompressed tar archive name for reading or writing.
        """
        if mode not in ("r", "a", "w"):
            raise ValueError("mode must be 'r', 'a' or 'w'")
        return cls(name, mode, fileobj, **kwargs)

    @classmethod
    def gzopen(cls, name, mode="r", fileobj=None, compresslevel=9, **kwargs):
        """Open gzip compressed tar archive name for reading or writing.
           Appending is not allowed.
        """
        if mode not in ("r", "w"):
            raise ValueError("mode must be 'r' or 'w'")

        try:
            import gzip
            gzip.GzipFile
        except (ImportError, AttributeError):
            raise CompressionError("gzip module is not available")

        try:
            fileobj = gzip.GzipFile(name, mode, compresslevel, fileobj)
        except OSError:
            if fileobj is not None and mode == 'r':
                raise ReadError("not a gzip file")
            raise

        try:
            t = cls.taropen(name, mode, fileobj, **kwargs)
        except IOError:
            fileobj.close()
            if mode == 'r':
                raise ReadError("not a gzip file")
            raise
        except:
            fileobj.close()
            raise
        t._extfileobj = False
        return t

    @classmethod
    def bz2open(cls, name, mode="r", fileobj=None, compresslevel=9, **kwargs):
        """Open bzip2 compressed tar archive name for reading or writing.
           Appending is not allowed.
        """
        if mode not in ("r", "w"):
            raise ValueError("mode must be 'r' or 'w'.")

        try:
            import bz2
        except ImportError:
            raise CompressionError("bz2 module is not available")

        if fileobj is not None:
            fileobj = _BZ2Proxy(fileobj, mode)
        else:
            fileobj = bz2.BZ2File(name, mode, compresslevel=compresslevel)

        try:
            t = cls.taropen(name, mode, fileobj, **kwargs)
        except (IOError, EOFError):
            fileobj.close()
            if mode == 'r':
                raise ReadError("not a bzip2 file")
            raise
        except:
            fileobj.close()
            raise
        t._extfileobj = False
        return t

    # All *open() methods are registered here.
    OPEN_METH = {
        "tar": "taropen",   # uncompressed tar
        "gz":  "gzopen",    # gzip compressed tar
        "bz2": "bz2open"    # bzip2 compressed tar
    }

    #--------------------------------------------------------------------------
    # The public methods which TarFile provides:

    def close(self):
        """Close the TarFile. In write-mode, two finishing zero blocks are
           appended to the archive.
        """
        if self.closed:
            return

        if self.mode in "aw":
            self.fileobj.write(NUL * (BLOCKSIZE * 2))
            self.offset += (BLOCKSIZE * 2)
            # fill up the end with zero-blocks
            # (like option -b20 for tar does)
            blocks, remainder = divmod(self.offset, RECORDSIZE)
            if remainder > 0:
                self.fileobj.write(NUL * (RECORDSIZE - remainder))

        if not self._extfileobj:
            self.fileobj.close()
        self.closed = True

    def getmember(self, name):
        """Return a TarInfo object for member `name'. If `name' can not be
           found in the archive, KeyError is raised. If a member occurs more
           than once in the archive, its last occurrence is assumed to be the
           most up-to-date version.
        """
        tarinfo = self._getmember(name)
        if tarinfo is None:
            raise KeyError("filename %r not found" % name)
        return tarinfo

    def getmembers(self):
        """Return the members of the archive as a list of TarInfo objects. The
           list has the same order as the members in the archive.
        """
        self._check()
        if not self._loaded:    # if we want to obtain a list of
            self._load()        # all members, we first have to
                                # scan the whole archive.
        return self.members

    def getnames(self):
        """Return the members of the archive as a list of their names. It has
           the same order as the list returned by getmembers().
        """
        return [tarinfo.name for tarinfo in self.getmembers()]

    def gettarinfo(self, name=None, arcname=None, fileobj=None):
        """Create a TarInfo object for either the file `name' or the file
           object `fileobj' (using os.fstat on its file descriptor). You can
           modify some of the TarInfo's attributes before you add it using
           addfile(). If given, `arcname' specifies an alternative name for the
           file in the archive.
        """
        self._check("aw")

        # When fileobj is given, replace name by
        # fileobj's real name.
        if fileobj is not None:
            name = fileobj.name

        # Building the name of the member in the archive.
        # Backward slashes are converted to forward slashes,
        # Absolute paths are turned to relative paths.
        if arcname is None:
            arcname = name
        drv, arcname = os.path.splitdrive(arcname)
        arcname = arcname.replace(os.sep, "/")
        arcname = arcname.lstrip("/")

        # Now, fill the TarInfo object with
        # information specific for the file.
        tarinfo = self.tarinfo()
        tarinfo.tarfile = self

        # Use os.stat or os.lstat, depending on platform
        # and if symlinks shall be resolved.
        if fileobj is None:
            if hasattr(os, "lstat") and not self.dereference:
                statres = os.lstat(name)
            else:
                statres = os.stat(name)
        else:
            statres = os.fstat(fileobj.fileno())
        linkname = ""

        stmd = statres.st_mode
        if stat.S_ISREG(stmd):
            inode = (statres.st_ino, statres.st_dev)
            if not self.dereference and statres.st_nlink > 1 and \
                    inode in self.inodes and arcname != self.inodes[inode]:
                # Is it a hardlink to an already
                # archived file?
                type = LNKTYPE
                linkname = self.inodes[inode]
            else:
                # The inode is added only if its valid.
                # For win32 it is always 0.
                type = REGTYPE
                if inode[0]:
                    self.inodes[inode] = arcname
        elif stat.S_ISDIR(stmd):
            type = DIRTYPE
        elif stat.S_ISFIFO(stmd):
            type = FIFOTYPE
        elif stat.S_ISLNK(stmd):
            type = SYMTYPE
            linkname = os.readlink(name)
        elif stat.S_ISCHR(stmd):
            type = CHRTYPE
        elif stat.S_ISBLK(stmd):
            type = BLKTYPE
        else:
            return None

        # Fill the TarInfo object with all
        # information we can get.
        tarinfo.name = arcname
        tarinfo.mode = stmd
        tarinfo.uid = statres.st_uid
        tarinfo.gid = statres.st_gid
        if type == REGTYPE:
            tarinfo.size = statres.st_size
        else:
            tarinfo.size = 0L
        tarinfo.mtime = statres.st_mtime
        tarinfo.type = type
        tarinfo.linkname = linkname
        if pwd:
            try:
                tarinfo.uname = pwd.getpwuid(tarinfo.uid)[0]
            except KeyError:
                pass
        if grp:
            try:
                tarinfo.gname = grp.getgrgid(tarinfo.gid)[0]
            except KeyError:
                pass

        if type in (CHRTYPE, BLKTYPE):
            if hasattr(os, "major") and hasattr(os, "minor"):
                tarinfo.devmajor = os.major(statres.st_rdev)
                tarinfo.devminor = os.minor(statres.st_rdev)
        return tarinfo

    def list(self, verbose=True):
        """Print a table of contents to sys.stdout. If `verbose' is False, only
           the names of the members are printed. If it is True, an `ls -l'-like
           output is produced.
        """
        self._check()

        for tarinfo in self:
            if verbose:
                print filemode(tarinfo.mode),
                print "%s/%s" % (tarinfo.uname or tarinfo.uid,
                                 tarinfo.gname or tarinfo.gid),
                if tarinfo.ischr() or tarinfo.isblk():
                    print "%10s" % ("%d,%d" \
                                    % (tarinfo.devmajor, tarinfo.devminor)),
                else:
                    print "%10d" % tarinfo.size,
                print "%d-%02d-%02d %02d:%02d:%02d" \
                      % time.localtime(tarinfo.mtime)[:6],

            print tarinfo.name + ("/" if tarinfo.isdir() else ""),

            if verbose:
                if tarinfo.issym():
                    print "->", tarinfo.linkname,
                if tarinfo.islnk():
                    print "link to", tarinfo.linkname,
            print

    def add(self, name, arcname=None, recursive=True, exclude=None, filter=None):
        """Add the file `name' to the archive. `name' may be any type of file
           (directory, fifo, symbolic link, etc.). If given, `arcname'
           specifies an alternative name for the file in the archive.
           Directories are added recursively by default. This can be avoided by
           setting `recursive' to False. `exclude' is a function that should
           return True for each filename to be excluded. `filter' is a function
           that expects a TarInfo object argument and returns the changed
           TarInfo object, if it returns None the TarInfo object will be
           excluded from the archive.
        """
        self._check("aw")

        if arcname is None:
            arcname = name

        # Exclude pathnames.
        if exclude is not None:
            import warnings
            warnings.warn("use the filter argument instead",
                    DeprecationWarning, 2)
            if exclude(name):
                self._dbg(2, "tarfile: Excluded %r" % name)
                return

        # Skip if somebody tries to archive the archive...
        if self.name is not None and os.path.abspath(name) == self.name:
            self._dbg(2, "tarfile: Skipped %r" % name)
            return

        self._dbg(1, name)

        # Create a TarInfo object from the file.
        tarinfo = self.gettarinfo(name, arcname)

        if tarinfo is None:
            self._dbg(1, "tarfile: Unsupported type %r" % name)
            return

        # Change or exclude the TarInfo object.
        if filter is not None:
            tarinfo = filter(tarinfo)
            if tarinfo is None:
                self._dbg(2, "tarfile: Excluded %r" % name)
                return

        # Append the tar header and data to the archive.
        if tarinfo.isreg():
            with bltn_open(name, "rb") as f:
                self.addfile(tarinfo, f)

        elif tarinfo.isdir():
            self.addfile(tarinfo)
            if recursive:
                for f in os.listdir(name):
                    self.add(os.path.join(name, f), os.path.join(arcname, f),
                            recursive, exclude, filter)

        else:
            self.addfile(tarinfo)

    def addfile(self, tarinfo, fileobj=None):
        """Add the TarInfo object `tarinfo' to the archive. If `fileobj' is
           given, tarinfo.size bytes are read from it and added to the archive.
           You can create TarInfo objects using gettarinfo().
           On Windows platforms, `fileobj' should always be opened with mode
           'rb' to avoid irritation about the file size.
        """
        self._check("aw")

        tarinfo = copy.copy(tarinfo)

        buf = tarinfo.tobuf(self.format, self.encoding, self.errors)
        self.fileobj.write(buf)
        self.offset += len(buf)

        # If there's data to follow, append it.
        if fileobj is not None:
            copyfileobj(fileobj, self.fileobj, tarinfo.size)
            blocks, remainder = divmod(tarinfo.size, BLOCKSIZE)
            if remainder > 0:
                self.fileobj.write(NUL * (BLOCKSIZE - remainder))
                blocks += 1
            self.offset += blocks * BLOCKSIZE

        self.members.append(tarinfo)

    def extractall(self, path=".", members=None):
        """Extract all members from the archive to the current working
           directory and set owner, modification time and permissions on
           directories afterwards. `path' specifies a different directory
           to extract to. `members' is optional and must be a subset of the
           list returned by getmembers().
        """
        directories = []

        if members is None:
            members = self

        for tarinfo in members:
            if tarinfo.isdir():
                # Extract directories with a safe mode.
                directories.append(tarinfo)
                tarinfo = copy.copy(tarinfo)
                tarinfo.mode = 0700
            self.extract(tarinfo, path)

        # Reverse sort directories.
        directories.sort(key=operator.attrgetter('name'))
        directories.reverse()

        # Set correct owner, mtime and filemode on directories.
        for tarinfo in directories:
            dirpath = os.path.join(path, tarinfo.name)
            try:
                self.chown(tarinfo, dirpath)
                self.utime(tarinfo, dirpath)
                self.chmod(tarinfo, dirpath)
            except ExtractError, e:
                if self.errorlevel > 1:
                    raise
                else:
                    self._dbg(1, "tarfile: %s" % e)

    def extract(self, member, path=""):
        """Extract a member from the archive to the current working directory,
           using its full name. Its file information is extracted as accurately
           as possible. `member' may be a filename or a TarInfo object. You can
           specify a different directory using `path'.
        """
        self._check("r")

        if isinstance(member, basestring):
            tarinfo = self.getmember(member)
        else:
            tarinfo = member

        # Prepare the link target for makelink().
        if tarinfo.islnk():
            tarinfo._link_target = os.path.join(path, tarinfo.linkname)

        try:
            self._extract_member(tarinfo, os.path.join(path, tarinfo.name))
        except EnvironmentError, e:
            if self.errorlevel > 0:
                raise
            else:
                if e.filename is None:
                    self._dbg(1, "tarfile: %s" % e.strerror)
                else:
                    self._dbg(1, "tarfile: %s %r" % (e.strerror, e.filename))
        except ExtractError, e:
            if self.errorlevel > 1:
                raise
            else:
                self._dbg(1, "tarfile: %s" % e)

    def extractfile(self, member):
        """Extract a member from the archive as a file object. `member' may be
           a filename or a TarInfo object. If `member' is a regular file, a
           file-like object is returned. If `member' is a link, a file-like
           object is constructed from the link's target. If `member' is none of
           the above, None is returned.
           The file-like object is read-only and provides the following
           methods: read(), readline(), readlines(), seek() and tell()
        """
        self._check("r")

        if isinstance(member, basestring):
            tarinfo = self.getmember(member)
        else:
            tarinfo = member

        if tarinfo.isreg():
            return self.fileobject(self, tarinfo)

        elif tarinfo.type not in SUPPORTED_TYPES:
            # If a member's type is unknown, it is treated as a
            # regular file.
            return self.fileobject(self, tarinfo)

        elif tarinfo.islnk() or tarinfo.issym():
            if isinstance(self.fileobj, _Stream):
                # A small but ugly workaround for the case that someone tries
                # to extract a (sym)link as a file-object from a non-seekable
                # stream of tar blocks.
                raise StreamError("cannot extract (sym)link as file object")
            else:
                # A (sym)link's file object is its target's file object.
                return self.extractfile(self._find_link_target(tarinfo))
        else:
            # If there's no data associated with the member (directory, chrdev,
            # blkdev, etc.), return None instead of a file object.
            return None

    def _extract_member(self, tarinfo, targetpath):
        """Extract the TarInfo object tarinfo to a physical
           file called targetpath.
        """
        # Fetch the TarInfo object for the given name
        # and build the destination pathname, replacing
        # forward slashes to platform specific separators.
        targetpath = targetpath.rstrip("/")
        targetpath = targetpath.replace("/", os.sep)

        # Create all upper directories.
        upperdirs = os.path.dirname(targetpath)
        if upperdirs and not os.path.exists(upperdirs):
            # Create directories that are not part of the archive with
            # default permissions.
            os.makedirs(upperdirs)

        if tarinfo.islnk() or tarinfo.issym():
            self._dbg(1, "%s -> %s" % (tarinfo.name, tarinfo.linkname))
        else:
            self._dbg(1, tarinfo.name)

        if tarinfo.isreg():
            self.makefile(tarinfo, targetpath)
        elif tarinfo.isdir():
            self.makedir(tarinfo, targetpath)
        elif tarinfo.isfifo():
            self.makefifo(tarinfo, targetpath)
        elif tarinfo.ischr() or tarinfo.isblk():
            self.makedev(tarinfo, targetpath)
        elif tarinfo.islnk() or tarinfo.issym():
            self.makelink(tarinfo, targetpath)
        elif tarinfo.type not in SUPPORTED_TYPES:
            self.makeunknown(tarinfo, targetpath)
        else:
            self.makefile(tarinfo, targetpath)

        self.chown(tarinfo, targetpath)
        if not tarinfo.issym():
            self.chmod(tarinfo, targetpath)
            self.utime(tarinfo, targetpath)

    #--------------------------------------------------------------------------
    # Below are the different file methods. They are called via
    # _extract_member() when extract() is called. They can be replaced in a
    # subclass to implement other functionality.

    def makedir(self, tarinfo, targetpath):
        """Make a directory called targetpath.
        """
        try:
            # Use a safe mode for the directory, the real mode is set
            # later in _extract_member().
            os.mkdir(targetpath, 0700)
        except EnvironmentError, e:
            if e.errno != errno.EEXIST:
                raise

    def makefile(self, tarinfo, targetpath):
        """Make a file called targetpath.
        """
        source = self.extractfile(tarinfo)
        try:
            with bltn_open(targetpath, "wb") as target:
                copyfileobj(source, target)
        finally:
            source.close()

    def makeunknown(self, tarinfo, targetpath):
        """Make a file from a TarInfo object with an unknown type
           at targetpath.
        """
        self.makefile(tarinfo, targetpath)
        self._dbg(1, "tarfile: Unknown file type %r, " \
                     "extracted as regular file." % tarinfo.type)

    def makefifo(self, tarinfo, targetpath):
        """Make a fifo called targetpath.
        """
        if hasattr(os, "mkfifo"):
            os.mkfifo(targetpath)
        else:
            raise ExtractError("fifo not supported by system")

    def makedev(self, tarinfo, targetpath):
        """Make a character or block device called targetpath.
        """
        if not hasattr(os, "mknod") or not hasattr(os, "makedev"):
            raise ExtractError("special devices not supported by system")

        mode = tarinfo.mode
        if tarinfo.isblk():
            mode |= stat.S_IFBLK
        else:
            mode |= stat.S_IFCHR

        os.mknod(targetpath, mode,
                 os.makedev(tarinfo.devmajor, tarinfo.devminor))

    def makelink(self, tarinfo, targetpath):
        """Make a (symbolic) link called targetpath. If it cannot be created
          (platform limitation), we try to make a copy of the referenced file
          instead of a link.
        """
        if hasattr(os, "symlink") and hasattr(os, "link"):
            # For systems that support symbolic and hard links.
            if tarinfo.issym():
                if os.path.lexists(targetpath):
                    os.unlink(targetpath)
                os.symlink(tarinfo.linkname, targetpath)
            else:
                # See extract().
                if os.path.exists(tarinfo._link_target):
                    if os.path.lexists(targetpath):
                        os.unlink(targetpath)
                    os.link(tarinfo._link_target, targetpath)
                else:
                    self._extract_member(self._find_link_target(tarinfo), targetpath)
        else:
            try:
                self._extract_member(self._find_link_target(tarinfo), targetpath)
            except KeyError:
                raise ExtractError("unable to resolve link inside archive")

    def chown(self, tarinfo, targetpath):
        """Set owner of targetpath according to tarinfo.
        """
        if pwd and hasattr(os, "geteuid") and os.geteuid() == 0:
            # We have to be root to do so.
            try:
                g = grp.getgrnam(tarinfo.gname)[2]
            except KeyError:
                g = tarinfo.gid
            try:
                u = pwd.getpwnam(tarinfo.uname)[2]
            except KeyError:
                u = tarinfo.uid
            try:
                if tarinfo.issym() and hasattr(os, "lchown"):
                    os.lchown(targetpath, u, g)
                else:
                    if sys.platform != "os2emx":
                        os.chown(targetpath, u, g)
            except EnvironmentError, e:
                raise ExtractError("could not change owner")

    def chmod(self, tarinfo, targetpath):
        """Set file permissions of targetpath according to tarinfo.
        """
        if hasattr(os, 'chmod'):
            try:
                os.chmod(targetpath, tarinfo.mode)
            except EnvironmentError, e:
                raise ExtractError("could not change mode")

    def utime(self, tarinfo, targetpath):
        """Set modification time of targetpath according to tarinfo.
        """
        if not hasattr(os, 'utime'):
            return
        try:
            os.utime(targetpath, (tarinfo.mtime, tarinfo.mtime))
        except EnvironmentError, e:
            raise ExtractError("could not change modification time")

    #--------------------------------------------------------------------------
    def next(self):
        """Return the next member of the archive as a TarInfo object, when
           TarFile is opened for reading. Return None if there is no more
           available.
        """
        self._check("ra")
        if self.firstmember is not None:
            m = self.firstmember
            self.firstmember = None
            return m

        # Read the next block.
        self.fileobj.seek(self.offset)
        tarinfo = None
        while True:
            try:
                tarinfo = self.tarinfo.fromtarfile(self)
            except EOFHeaderError, e:
                if self.ignore_zeros:
                    self._dbg(2, "0x%X: %s" % (self.offset, e))
                    self.offset += BLOCKSIZE
                    continue
            except InvalidHeaderError, e:
                if self.ignore_zeros:
                    self._dbg(2, "0x%X: %s" % (self.offset, e))
                    self.offset += BLOCKSIZE
                    continue
                elif self.offset == 0:
                    raise ReadError(str(e))
            except EmptyHeaderError:
                if self.offset == 0:
                    raise ReadError("empty file")
            except TruncatedHeaderError, e:
                if self.offset == 0:
                    raise ReadError(str(e))
            except SubsequentHeaderError, e:
                raise ReadError(str(e))
            break

        if tarinfo is not None:
            self.members.append(tarinfo)
        else:
            self._loaded = True

        return tarinfo

    #--------------------------------------------------------------------------
    # Little helper methods:

    def _getmember(self, name, tarinfo=None, normalize=False):
        """Find an archive member by name from bottom to top.
           If tarinfo is given, it is used as the starting point.
        """
        # Ensure that all members have been loaded.
        members = self.getmembers()

        # Limit the member search list up to tarinfo.
        if tarinfo is not None:
            members = members[:members.index(tarinfo)]

        if normalize:
            name = os.path.normpath(name)

        for member in reversed(members):
            if normalize:
                member_name = os.path.normpath(member.name)
            else:
                member_name = member.name

            if name == member_name:
                return member

    def _load(self):
        """Read through the entire archive file and look for readable
           members.
        """
        while True:
            tarinfo = self.next()
            if tarinfo is None:
                break
        self._loaded = True

    def _check(self, mode=None):
        """Check if TarFile is still open, and if the operation's mode
           corresponds to TarFile's mode.
        """
        if self.closed:
            raise IOError("%s is closed" % self.__class__.__name__)
        if mode is not None and self.mode not in mode:
            raise IOError("bad operation for mode %r" % self.mode)

    def _find_link_target(self, tarinfo):
        """Find the target member of a symlink or hardlink member in the
           archive.
        """
        if tarinfo.issym():
            # Always search the entire archive.
            linkname = "/".join(filter(None, (os.path.dirname(tarinfo.name), tarinfo.linkname)))
            limit = None
        else:
            # Search the archive before the link, because a hard link is
            # just a reference to an already archived file.
            linkname = tarinfo.linkname
            limit = tarinfo

        member = self._getmember(linkname, tarinfo=limit, normalize=True)
        if member is None:
            raise KeyError("linkname %r not found" % linkname)
        return member

    def __iter__(self):
        """Provide an iterator object.
        """
        if self._loaded:
            return iter(self.members)
        else:
            return TarIter(self)

    def _dbg(self, level, msg):
        """Write debugging output to sys.stderr.
        """
        if level <= self.debug:
            print >> sys.stderr, msg

    def __enter__(self):
        self._check()
        return self

    def __exit__(self, type, value, traceback):
        if type is None:
            self.close()
        else:
            # An exception occurred. We must not call close() because
            # it would try to write end-of-archive blocks and padding.
            if not self._extfileobj:
                self.fileobj.close()
            self.closed = True
# class TarFile
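From the caller's side, a TarFile is normally created through the open() classmethod with one of the mode strings listed in its docstring. A minimal Python 3 sketch, assuming a throwaway temporary directory and a made-up file name, that writes a gzip-compressed archive and reads one member back without extracting to disk:

```python
import os
import tarfile
import tempfile

def tar_roundtrip():
    """Pack a temporary directory into a .tar.gz, then read it back."""
    with tempfile.TemporaryDirectory() as tmp:
        src = os.path.join(tmp, "src")
        os.makedirs(src)
        with open(os.path.join(src, "a.txt"), "w") as f:
            f.write("first file\n")

        archive = os.path.join(tmp, "bundle.tar.gz")
        # "w:gz": write with gzip compression (one of the modes listed in open())
        with tarfile.open(archive, "w:gz") as tar:
            tar.add(src, arcname="src")   # directories are added recursively

        # "r:gz": read a gzip-compressed archive ("r" alone auto-detects)
        with tarfile.open(archive, "r:gz") as tar:
            names = sorted(tar.getnames())
            member = tar.extractfile("src/a.txt")   # file-like object, read-only
            text = member.read().decode("utf-8")
    return names, text

if __name__ == "__main__":
    print(tar_roundtrip())
```

extractall(path) unpacks everything to disk instead; with archives from untrusted sources, member names can point outside the target directory, so inspect getmembers() first (newer Python versions also accept a filter argument for this).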

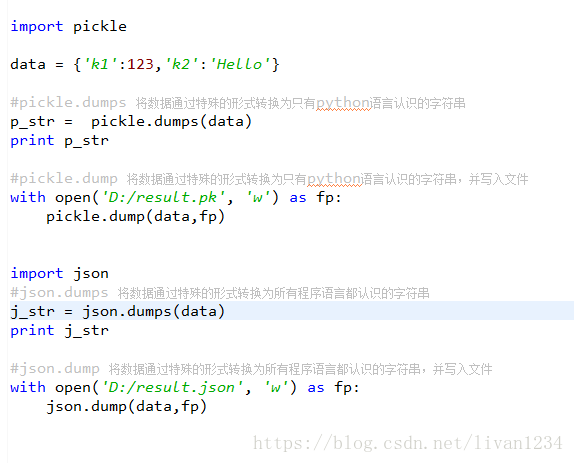

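json & pickle modules

Serialization: converting an in-memory Python object into a format (a string or bytes) that can be saved to disk or sent elsewhere.

Deserialization: converting that stored string or bytes back into an in-memory Python object.

Two modules are used for serialization:

· json, converts between strings and Python data types

· pickle, converts between a byte stream and Python data types, including Python-specific objects

The json module provides four functions:

dumps: serialize, converting a Python object into a string.

dump: serialize a Python object and write it directly to a file object.

loads: deserialize, converting a string back into a Python object.

load: read from a file object and deserialize.

The pickle module provides the same four functions: dumps, dump, loads, load.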
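The four functions come in pairs: dumps/loads work on in-memory data, while dump/load write to and read from a file object directly. A minimal sketch, assuming a temporary directory and made-up file names; pickle produces bytes, so its files must be opened in binary mode:

```python
import json
import os
import pickle
import tempfile

info = {"name": "alex", "age": 22}

def roundtrip_files(directory):
    """Write `info` with json.dump and pickle.dump, then read both back."""
    json_path = os.path.join(directory, "info.json")
    pkl_path = os.path.join(directory, "info.pkl")

    # json uses text files: dump() writes str data
    with open(json_path, "w") as f:
        json.dump(info, f)
    with open(json_path) as f:
        from_json = json.load(f)

    # pickle uses binary files: note the "wb"/"rb" modes
    with open(pkl_path, "wb") as f:
        pickle.dump(info, f)
    with open(pkl_path, "rb") as f:
        from_pickle = pickle.load(f)

    return from_json, from_pickle

if __name__ == "__main__":
    # the in-memory variants: json.dumps gives str, pickle.dumps gives bytes
    print(type(json.dumps(info)), type(pickle.dumps(info)))
    with tempfile.TemporaryDirectory() as tmp:
        print(roundtrip_files(tmp))
```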

import json

info = {
    'name': 'alex',
    'age': 22
}

# Store as a string (serialize):
with open("test.text", "w") as f:
    f.write(json.dumps(info))

# Read the string back (deserialize):
with open("test.text", "r") as f:
    data = json.loads(f.read())

print(data["age"])

json is now the common medium for exchanging data between programs written in different languages.
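Because json text is meant to be read by other languages, json only handles basic types such as dict, list, str, numbers, booleans and None; pickle covers Python-specific objects but can only be read back by Python. A small sketch of that difference, using a made-up record that contains a datetime:

```python
import datetime
import json
import pickle

# Made-up record: datetime is a Python-specific type
record = {"name": "alex", "when": datetime.datetime(2016, 8, 19, 11, 14, 16)}

def try_json(obj):
    """Return JSON text, or None when json cannot serialize the object."""
    try:
        return json.dumps(obj)
    except TypeError:          # raised e.g. for datetime, set, class instances
        return None

def json_with_default(obj):
    """Fall back to str() for values json does not understand."""
    return json.dumps(obj, default=str)

def pickle_roundtrip(obj):
    """pickle restores Python objects, datetime included (bytes in between)."""
    return pickle.loads(pickle.dumps(obj))

if __name__ == "__main__":
    print(try_json(record))
    print(json_with_default(record))
    print(pickle_roundtrip(record))
```

With default=str the datetime survives only as a plain string; reading it back gives a str, not a datetime.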

shelve module

The shelve module is a simple key-value (k, v) store that persists in-memory data to a file. It can persist any Python data type that pickle supports.

import shelve

d = shelve.open('shelve_test')  # open a file

class Test(object):
    def __init__(self, n):
        self.n = n

t = Test(123)
t2 = Test(123334)

name = ["alex", "rain", "test"]
d["test"] = name  # persist a list
d["t1"] = t       # persist a class instance
d["t2"] = t2

d.close()
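Reading the data back works like a dict. A sketch of the full round trip, assuming a temporary directory for the shelf file; because values are stored with pickle, the Test class must be defined (or importable) in the program that reads the shelf:

```python
import os
import shelve
import tempfile

class Test(object):
    def __init__(self, n):
        self.n = n

def store_and_reload(path):
    """Persist a list and a Test instance, reopen the shelf, and read them."""
    with shelve.open(path) as d:       # Shelf objects support "with" in Python 3
        d["test"] = ["alex", "rain", "test"]
        d["t1"] = Test(123)
    with shelve.open(path) as d:
        # unpickling d["t1"] needs the Test class to be available here
        return d["test"], d["t1"].n, sorted(d.keys())

if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        print(store_and_reload(os.path.join(tmp, "shelve_test")))
```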

#!/usr/bin/env python
# -*- coding: utf-8 -*-

import shelve
import datetime

d = shelve.open('test_module')

info = {'age': 22, "job": 'it'}

# Store data:
name = ["alex", "rain", "test"]
d["name"] = name
d["info"] = info
d["date"] = datetime.datetime.now()
d.close()

# Read data (the shelf was closed above, so it must be reopened first):
d = shelve.open('test_module')
print(d.get("name"))
print(d.get("info"))
print(d.get("date"))
d.close()
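One shelve behaviour worth knowing: d[key] returns an unpickled copy, so modifying a mutable value in place is not saved unless the shelf is opened with writeback=True. A minimal sketch (the shelf file name is hypothetical; the with-statement form needs Python 3.4+):

```python
import os
import shelve
import tempfile

path = os.path.join(tempfile.mkdtemp(), "demo_shelf")  # hypothetical file name

with shelve.open(path) as d:
    d["name"] = ["alex", "rain"]
    d["name"].append("test")      # modifies a temporary copy only, not saved

with shelve.open(path) as d:
    without_writeback = d["name"]

# With writeback=True, accessed entries are cached and written back on close().
with shelve.open(path, writeback=True) as d:
    d["name"].append("test")

with shelve.open(path) as d:
    with_writeback = d["name"]

print(without_writeback)
print(with_writeback)
```

The alternative to writeback=True is to reassign explicitly (lst = d["name"]; lst.append(...); d["name"] = lst), which avoids the memory cost of the cache.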

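xml processing module

xml is a protocol for exchanging data between different languages or programs, much like json, though json is simpler to use. Back in ancient times, in the dark ages before json was born, everyone had no choice but xml, and even today many traditional companies (many systems in the financial industry, for example) still mainly expose xml interfaces.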

The xml format distinguishes data structures by means of <> tag nodes.

The xml protocol is supported in every language; in Python it can be handled with modules such as the standard-library xml.etree.ElementTree.
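A minimal sketch of reading xml with xml.etree.ElementTree. The sample document and its tag names are hypothetical, and it parses from a string with ET.fromstring() so it runs without a file; for a file on disk, ET.parse("file.xml").getroot() gives the same kind of root element:

```python
import xml.etree.ElementTree as ET

# A small hypothetical document showing the <> node structure.
xml_text = """<data>
    <country name="Liechtenstein">
        <rank>2</rank>
        <year>2008</year>
    </country>
    <country name="Singapore">
        <rank>5</rank>
        <year>2011</year>
    </country>
</data>"""

root = ET.fromstring(xml_text)
print(root.tag)

# Walk the children: each element has .tag, .attrib and .text.
for country in root:
    print(country.tag, country.attrib)
    for child in country:
        print("   ", child.tag, child.text)

# iter() finds matching elements at any depth.
years = [node.text for node in root.iter("year")]
print(years)
```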

Modifying and deleting xml document content
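A minimal sketch of modifying and deleting nodes with ElementTree; the document is a hypothetical inline string, and writing back to disk is shown only as a comment:

```python
import xml.etree.ElementTree as ET

# Hypothetical document; ET.parse("file.xml").getroot() would give an
# equivalent root for a file on disk.
root = ET.fromstring(
    "<data>"
    "<country name='A'><rank>2</rank><year>2008</year></country>"
    "<country name='B'><rank>60</rank><year>2011</year></country>"
    "</data>"
)

# Modify: add 1 to every year and mark it with an attribute.
for node in root.iter("year"):
    node.text = str(int(node.text) + 1)
    node.set("updated", "yes")

# Delete: remove countries whose rank is greater than 50.
# findall() returns a list, so removing while looping over it is safe.
for country in root.findall("country"):
    if int(country.find("rank").text) > 50:
        root.remove(country)

print(ET.tostring(root, encoding="unicode"))
# To save to disk: ET.ElementTree(root).write("output.xml")
```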

Creating your own xml document
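A minimal sketch of building a document from scratch with ET.Element and ET.SubElement; the element and attribute names are hypothetical:

```python
import xml.etree.ElementTree as ET

# Build a tree from scratch (element names are hypothetical).
new_xml = ET.Element("namelist")

person = ET.SubElement(new_xml, "name", attrib={"enrolled": "yes"})
age = ET.SubElement(person, "age", attrib={"checked": "no"})
age.text = "33"
sex = ET.SubElement(person, "sex")
sex.text = "male"

person2 = ET.SubElement(new_xml, "name", attrib={"enrolled": "no"})
age2 = ET.SubElement(person2, "age")
age2.text = "19"

tree = ET.ElementTree(new_xml)
# tree.write("test.xml", encoding="utf-8", xml_declaration=True) would save it to disk.
print(ET.tostring(new_xml, encoding="unicode"))
```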

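PyYAML module

Python can also handle YAML documents easily, but it requires installing a third-party module. Reference documentation:

http://pyyaml.org/wiki/PyYAMLDocumentation

YAML is a common file format for configuration files.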

ConfigParser module

Used to generate and modify common configuration files. In Python 3.x the module is renamed configparser.

Here is a file format that many pieces of software use:

[DEFAULT]
ServerAliveInterval = 45
Compression = yes
CompressionLevel = 9
ForwardX11 = yes

[bitbucket.org]
User = hg

[topsecret.server.com]
Port = 50022
ForwardX11 = no

What if you want to generate a document like this with Python?

import configparser

config = configparser.ConfigParser()
config["DEFAULT"] = {'ServerAliveInterval': '45',
                     'Compression': 'yes',
                     'CompressionLevel': '9'}

config['bitbucket.org'] = {}
config['bitbucket.org']['User'] = 'hg'
config['topsecret.server.com'] = {}
topsecret = config['topsecret.server.com']
topsecret['Port'] = '50022'     # mutates the parser
topsecret['ForwardX11'] = 'no'  # same here
config['DEFAULT']['ForwardX11'] = 'yes'
with open('example.ini', 'w') as configfile:
    config.write(configfile)

Once written, it can be read back out, too.

>>> import configparser
>>> config = configparser.ConfigParser()
>>> config.sections()
[]
>>> config.read('example.ini')
['example.ini']
>>> config.sections()
['bitbucket.org', 'topsecret.server.com']
>>> 'bitbucket.org' in config
True
>>> 'bytebong.com' in config
False
>>> config['bitbucket.org']['User']
'hg'
>>> config['DEFAULT']['Compression']
'yes'
>>> topsecret = config['topsecret.server.com']
>>> topsecret['ForwardX11']
'no'
>>> topsecret['Port']
'50022'
>>> for key in config['bitbucket.org']: print(key)
...
user
compressionlevel
serveraliveinterval
compression
forwardx11
>>> config['bitbucket.org']['ForwardX11']
'yes'

configparser syntax for adding, deleting, modifying and querying

[section1]
k1 = v1
k2:v2

[section2]
k1 = v1

import configparser

config = configparser.ConfigParser()
config.read('i.cfg')

# ########## read ##########
#secs = config.sections()
#print(secs)
#options = config.options('section2')
#print(options)

#item_list = config.items('section2')
#print(item_list)

#val = config.get('section1','k1')
#val = config.getint('section1','k1')  # only works if the value is an integer string

# ########## rewrite ##########
#sec = config.remove_section('section1')
#config.write(open('i.cfg', "w"))

#sec = config.has_section('wupeiqi')
#sec = config.add_section('wupeiqi')
#config.write(open('i.cfg', "w"))

#config.set('section2','k1','11111')  # values must be strings in Python 3
#config.write(open('i.cfg', "w"))

#config.remove_option('section2','age')
#config.write(open('i.cfg', "w"))

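hashlib module

Used for hashing operations (often loosely called encryption). In 3.x it replaces the md5 and sha modules, and mainly provides the SHA1, SHA224, SHA256, SHA384, SHA512 and MD5 algorithms.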
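A minimal sketch of the hashlib interface: create a hash object, feed it bytes with update(), then read the hex digest. The input strings are arbitrary examples:

```python
import hashlib

# Create a hash object, feed it bytes with update(), then read the digest.
m = hashlib.md5()
m.update(b"hel")
m.update(b"lo")          # update() appends, so this hashes b"hello"
print(m.hexdigest())     # 5d41402abc4b2a76b9719d911017c592

# A one-shot call on the whole input gives the same digest.
print(m.hexdigest() == hashlib.md5(b"hello").hexdigest())  # True

# The other algorithms share the same interface; only the digest length differs.
for name in ("sha1", "sha224", "sha256", "sha384", "sha512"):
    h = hashlib.new(name, b"hello")
    print(name, len(h.hexdigest()))

# A str must be encoded to bytes before hashing.
print(hashlib.sha256("hello".encode("utf-8")).hexdigest())
```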

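Still not good enough? Python also has an hmac module, which internally combines the key we create with the content, processes them, and then hashes the result.

HMAC (keyed-hash message authentication code) is an authentication mechanism built on a MAC (Message Authentication Code). With HMAC, the two communicating parties judge whether a message is genuine by verifying a code computed from the message and a shared authentication key K.

It is generally used to authenticate messages in network communication. The precondition is that both parties agree on a key in advance, like a secret password between agents. The sender uses the key to compute a digest of the message; the receiver computes a digest from the key plus the plaintext message and checks whether it equals the sender's value. This verifies both that the message is authentic and that the sender is legitimate.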

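import hmac
import hashlib

# In Python 3 a bytes literal cannot contain non-ASCII characters, so encode the str first.
# digestmod is required since Python 3.8; MD5 was the old default.
h = hmac.new('天王盖地虎'.encode('utf-8'), '宝塔镇河妖'.encode('utf-8'), hashlib.md5)
print(h.hexdigest())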
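The sender/receiver exchange described above can be sketched as two small functions. The sign/verify names are hypothetical, SHA-256 is chosen here instead of MD5, and hmac.compare_digest is used for the comparison because it runs in constant time, which avoids leaking timing information:

```python
import hmac
import hashlib

# The shared key both sides agreed on in advance (hypothetical value).
key = "天王盖地虎".encode("utf-8")

def sign(message: bytes) -> str:
    """Sender side: compute the HMAC-SHA256 tag for a message."""
    return hmac.new(key, message, hashlib.sha256).hexdigest()

def verify(message: bytes, tag: str) -> bool:
    """Receiver side: recompute the tag and compare in constant time."""
    expected = hmac.new(key, message, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, tag)

msg = "宝塔镇河妖".encode("utf-8")
tag = sign(msg)
print(verify(msg, tag))                         # True
print(verify("tampered".encode("utf-8"), tag))  # False
```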

For more on md5, sha1, sha256 and related algorithms, see https://www.tbs-certificates.co.uk/FAQ/en/sha256.html

subprocess module

Examples of common subprocess methods

>>> import subprocess

# Run a command and return its exit status, 0 or non-zero

>>> retcode = subprocess.call(["ls", "-l"])

# Run a command; return normally if its exit status is 0, otherwise raise an exception (CalledProcessError)

>>> subprocess.check_call(["ls", "-l"])

0

# Take the command as a string and return a tuple: the first element is the exit status, the second is the command output

>>> subprocess.getstatusoutput('ls /bin/ls')

(0, '/bin/ls')

# Take the command as a string and return its output

>>> subprocess.getoutput('ls /bin/ls')

'/bin/ls'

# Run a command and return its output. Note that the output is returned, not printed; in the example below it is returned to res

>>> res=subprocess.check_output(['ls','-l'])

>>> res

b'total 0\ndrwxr-xr-x 12 alex staff 408 Nov 2 11:05 OldBoyCRM\n'

# All of the methods above are wrappers around subprocess.Popen

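poll()

Check if child process has terminated. Returns the returncode attribute (None if it is still running).

wait()

Wait for child process to terminate. Returns returncode attribute.

terminate() terminates the started process (sends SIGTERM on POSIX)

communicate() sends input (if any) to stdin, reads stdout/stderr until EOF, and waits for the process to finish

stdin standard input

stdout standard output

stderr standard error

pid

The process ID of the child process.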

# Example

>>> p = subprocess.Popen("df -h|grep disk", stdin=subprocess.PIPE, stdout=subprocess.PIPE, shell=True)

>>> p.stdout.read()

b'/dev/disk1 465Gi 64Gi 400Gi 14% 16901472 104938142 14% /\n'
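On Python 3.5+ most of the helpers above can be expressed with subprocess.run(), and Popen.communicate() is the safer way to pass data through pipes, because reading p.stdout directly can deadlock when a pipe buffer fills. A minimal sketch; it runs the current interpreter (sys.executable) instead of ls/df so it works on any platform, and capture_output/text need Python 3.7+:

```python
import subprocess
import sys

# subprocess.run() runs a command and waits for it to finish.
result = subprocess.run(
    [sys.executable, "-c", "print('hello from child')"],
    capture_output=True,
    text=True,
)
print(result.returncode)       # 0
print(result.stdout.strip())   # hello from child

# Popen + communicate(): send data to stdin, read stdout/stderr until EOF,
# then wait for the process to exit.
p = subprocess.Popen(
    [sys.executable, "-c", "import sys; print(sys.stdin.read().upper())"],
    stdin=subprocess.PIPE,
    stdout=subprocess.PIPE,
    stderr=subprocess.PIPE,
    text=True,
)
out, err = p.communicate("disk usage\n")
print(p.returncode, out.strip())   # 0 DISK USAGE

# check=True turns a non-zero exit status into CalledProcessError.
failed_code = None
try:
    subprocess.run([sys.executable, "-c", "import sys; sys.exit(3)"], check=True)
except subprocess.CalledProcessError as exc:
    failed_code = exc.returncode
print("failed with", failed_code)  # failed with 3
```

Passing the command as a list avoids shell=True, so no shell parses the arguments; shell=True is only needed for shell features such as the | pipe in the df example above.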
